Nvidia GeForce RTX 2060 Will Cost $349, RTX Mobile is Here

Nvidia head Jensen Huang took the stage at a packed CES keynote briefing dedicated specifically to the company's gaming technology. He introduced the RTX 2060, the company's latest GPU and one capable of ray-tracing. It will debut at $349 on January 15 with 52 trillion tensor FLOPs, 6 GB of G6 memory and 5 gigarays per second. He additionally announced G-Sync Ultimate, a new driver that will make adaptive sync monitors work like those that already have Nvidia's proprietary technology, as well as RTX cards for laptops.

He showed it off with a demo of Battlefield V, with RTX on at 1440p. We saw a mission in the Battle of Rotterdam, and were pointed to how water reflects the sun and boats on the water. A building in the game was reflected on the water on a train car. Huang said that performance in games is "dramatically higher" than the GTX 1060, performing up to twice as well in Wolfenstein II and outpacing it in other popular titles like Call of Duty: Black Ops 4 and Deus Ex: Mankind Divided.

"An RTX 2060 has higher performance than a 1070 Ti," he said, showing off a performance chart of several previous GPUs, including the GTX 960 and 970.

There will also be a bundle with either Anthem or Battlefield V for the RTX 2060 or 2070. On an RTX 2080 or 2080 Ti, you'll get both games.

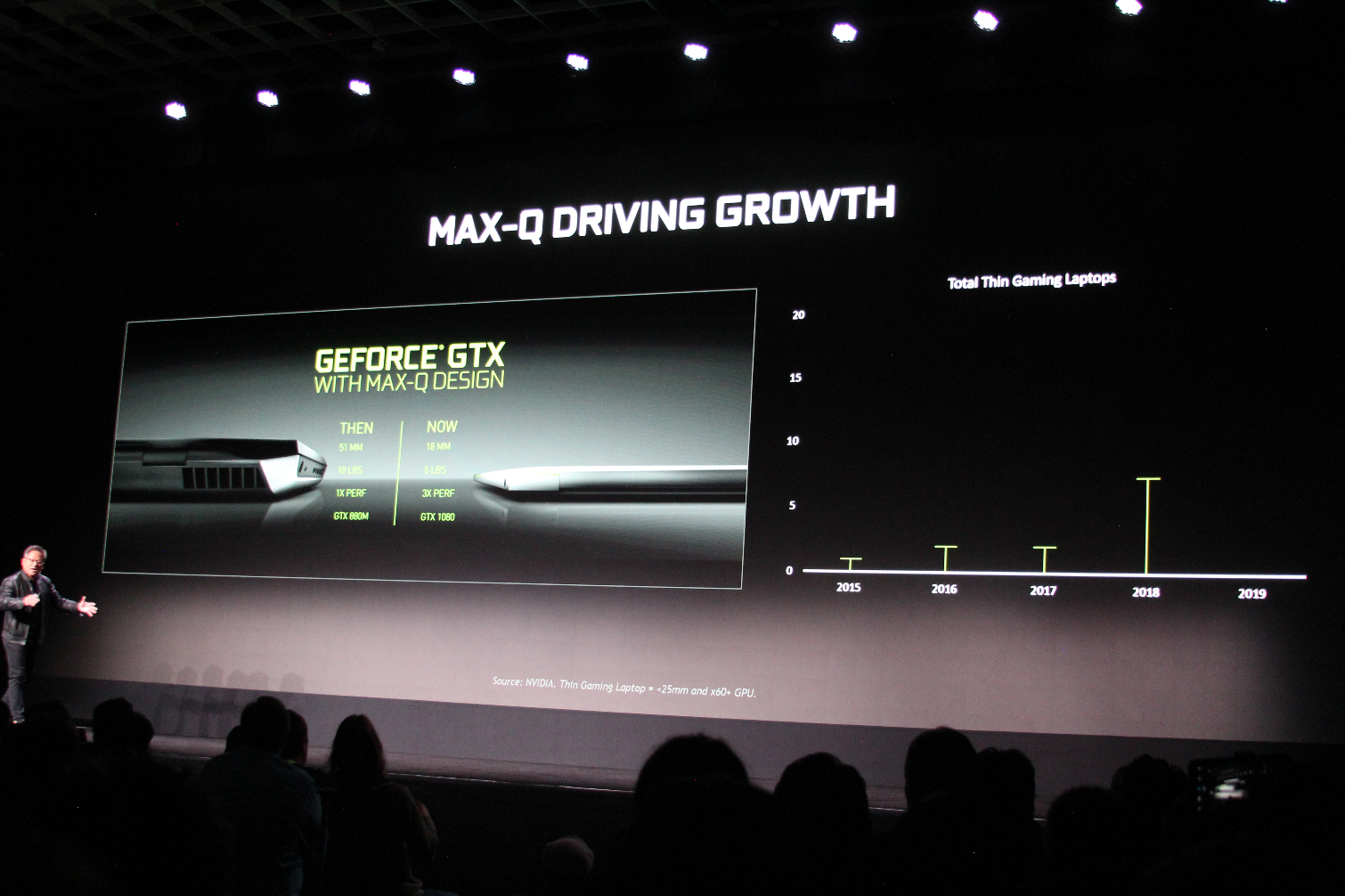

Turing for Mobile

But this wasn't all about desktops. Huang reminisced about Max-Q and how it brought performance to laptops while staying low-power and super-efficient. Now, RTX is coming to laptops: 40 machines will launch beginning January 29, with 17 using Max-Q designs. Those Max-Q cards include the RTX 2070 Max-Q and 2080 Max-Q.

"We optimize every single game for laptop use," Huang said.

The new laptops include the MSI GS65 Stealth, Acer Predator Triton 500 and an unspecified Gigabyte Aero that Huang held on stage, which appeared to be a revamp of the Aero 15.

Tom's Hardware will have hands-ons with several of these laptops at CES this week.

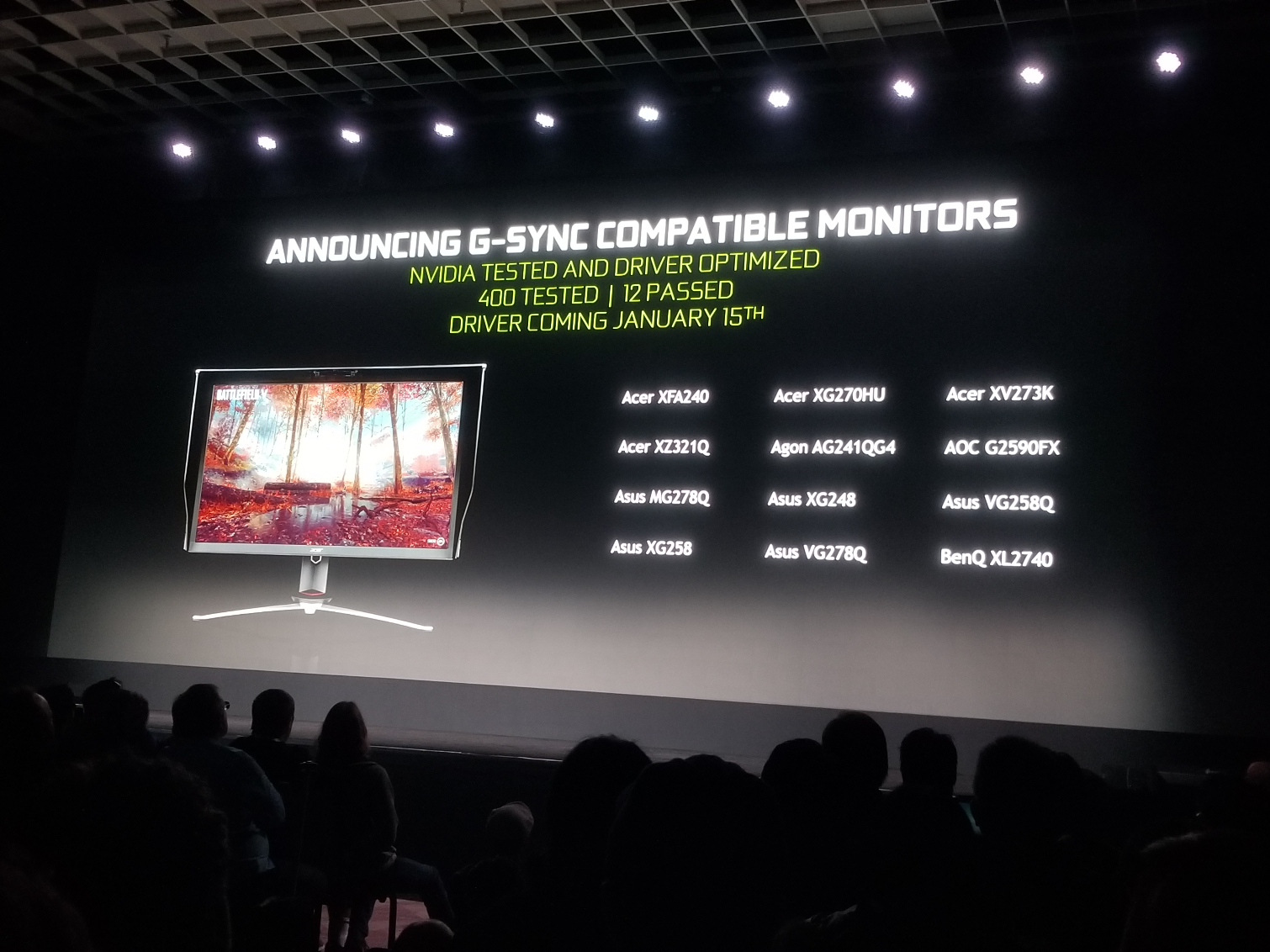

G-Sync Ultimate

Another big announcement was G-Sync Ultimate, with new monitors: the Asus ROG Swift PG27UQ, HP Omen X Emporium 65 and the Acer Predator X27. These are "Nvidia tested and driver optimized." The Omen was last seen as one of Nvidia's Big Format Gaming Displays. Huang mentioned A-Sync, or adaptive sync, and suggested blanking and pulsing can ruin a game. Nvidia will use a driver to support adaptive sync, and if a monitor passes specification, it will be considered G-Sync ready. This is taking on FreeSync in a very big way, and the driver will release on January 15.

"The GPU revolutionized modern gaming," Huang said. He discussed how rasterization and texture mapping, pixel shading, SSAO, depth of field, tessellation and photogrammetry made possible graphically groundbreaking games like Battlefield 1942, Gears of War, Crysis, Battlefield 3 and Battlefield V over the last two decades. But he described lighting and reflections as effects that are too difficult to reproduce with geometry.

"That's where ray-tracing comes in," he said, talking up the technology's ability to produce more life-like reflections, area lights, global illumination, and, when paired with deep learning, facial learning and character animation.

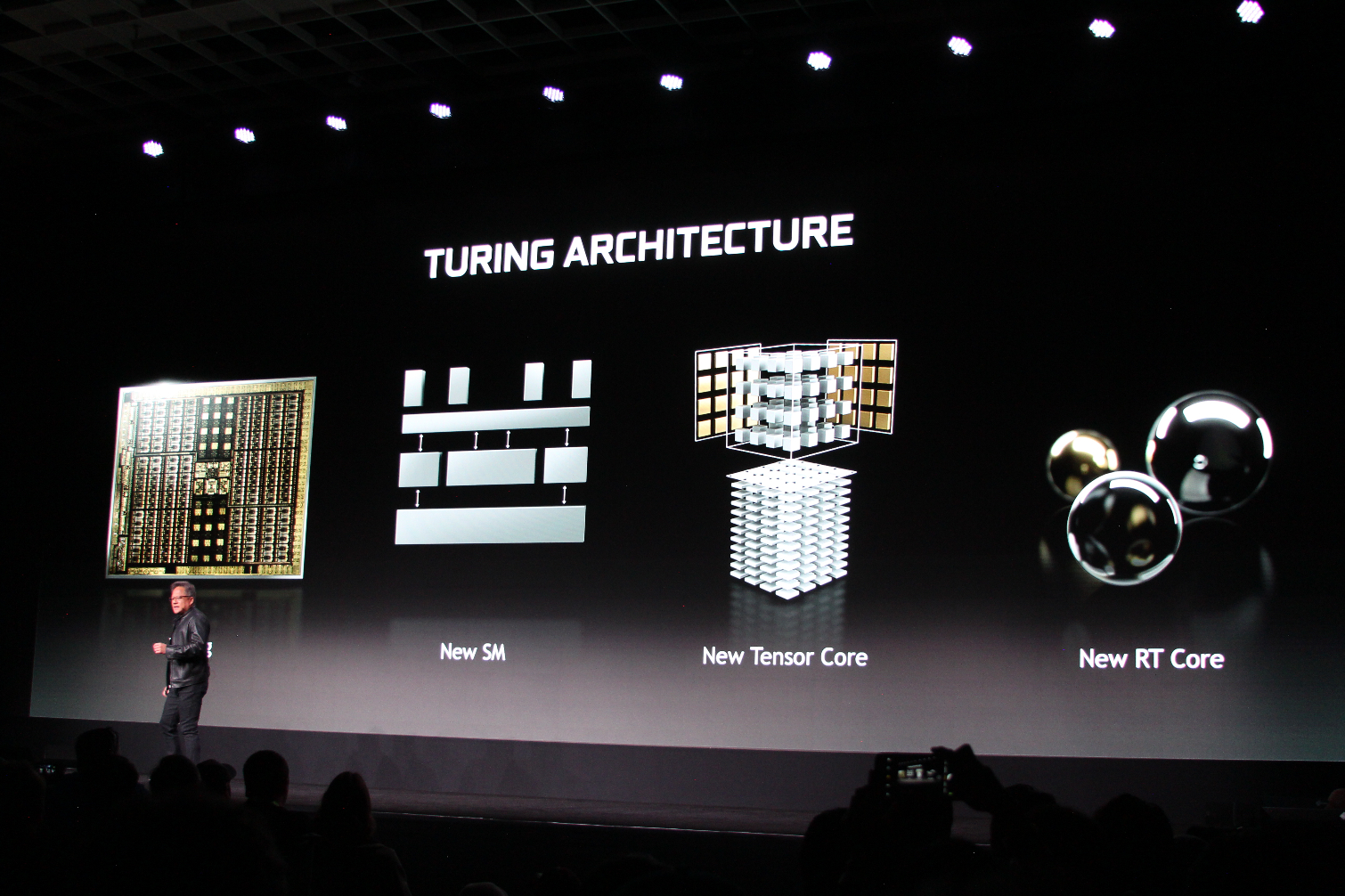

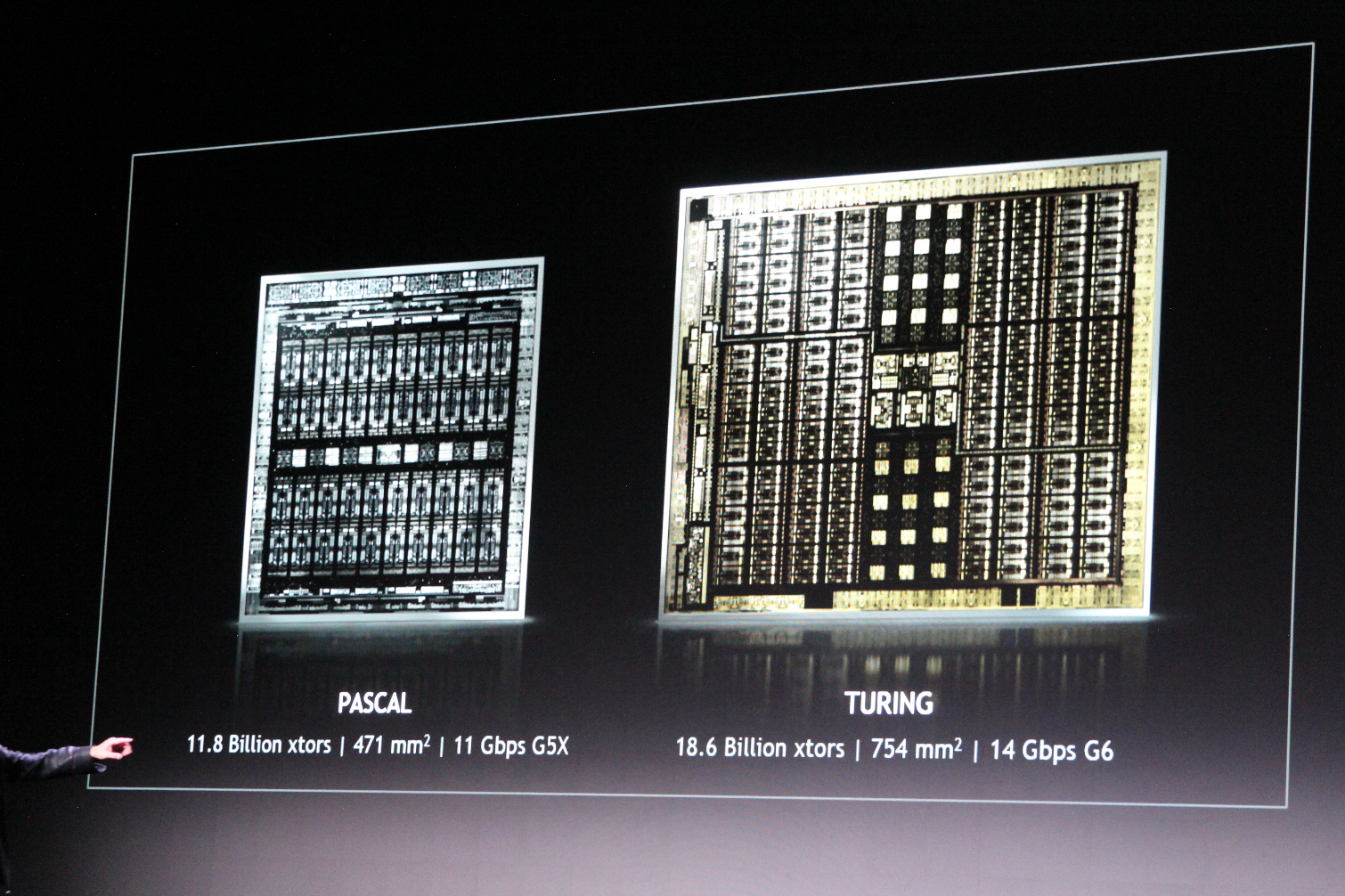

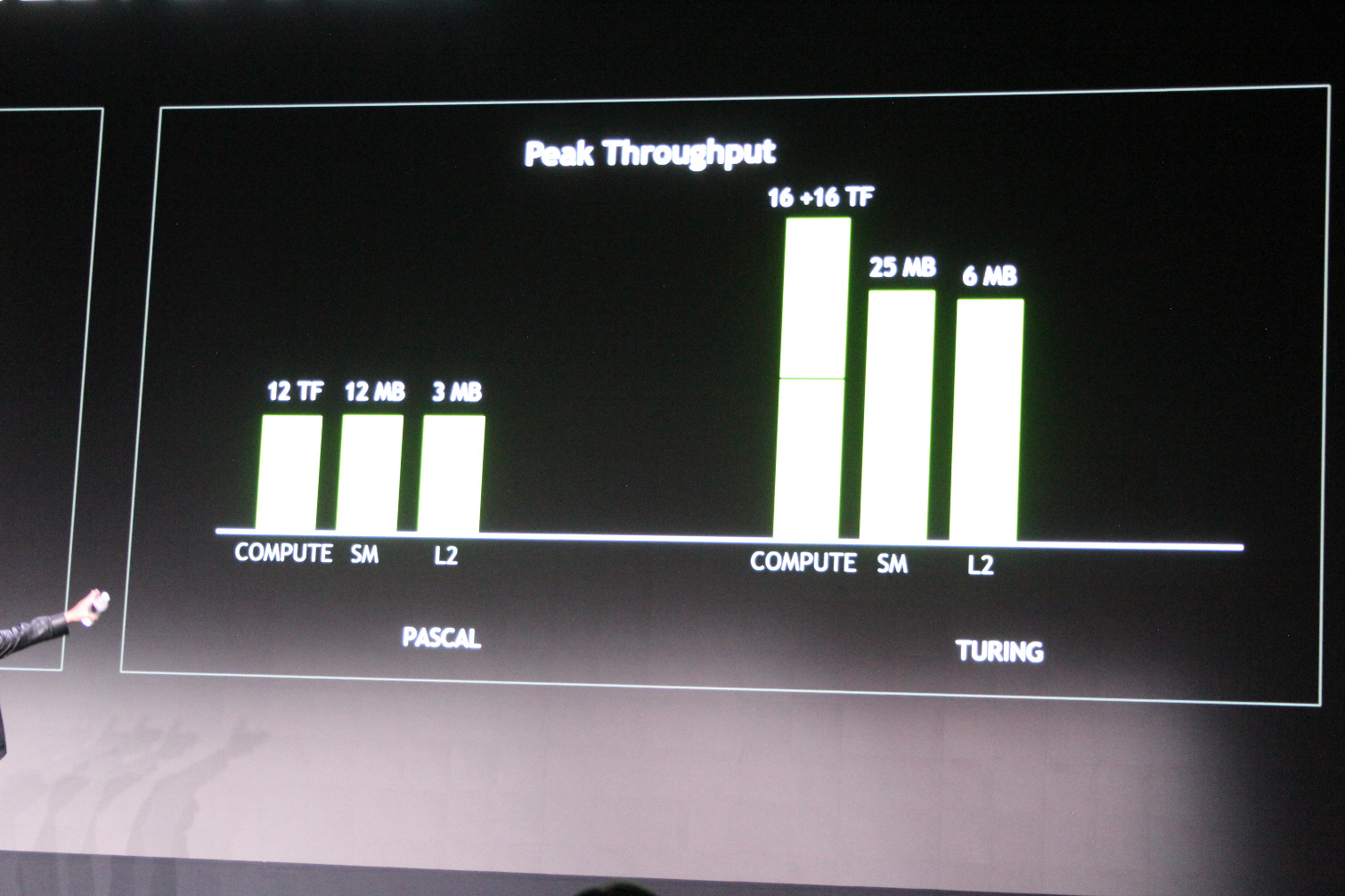

Huang then gave a primer on the Turing architecture, tensor cores, RT cores and the technologies that make its RTX technologies possible.

He showed off the largest Turing chip, which packs 18.6 billion transistors into a 754-square-millimeter die and is paired with 14 Gbps G6 memory, and explained how the rest of the family would come from it.

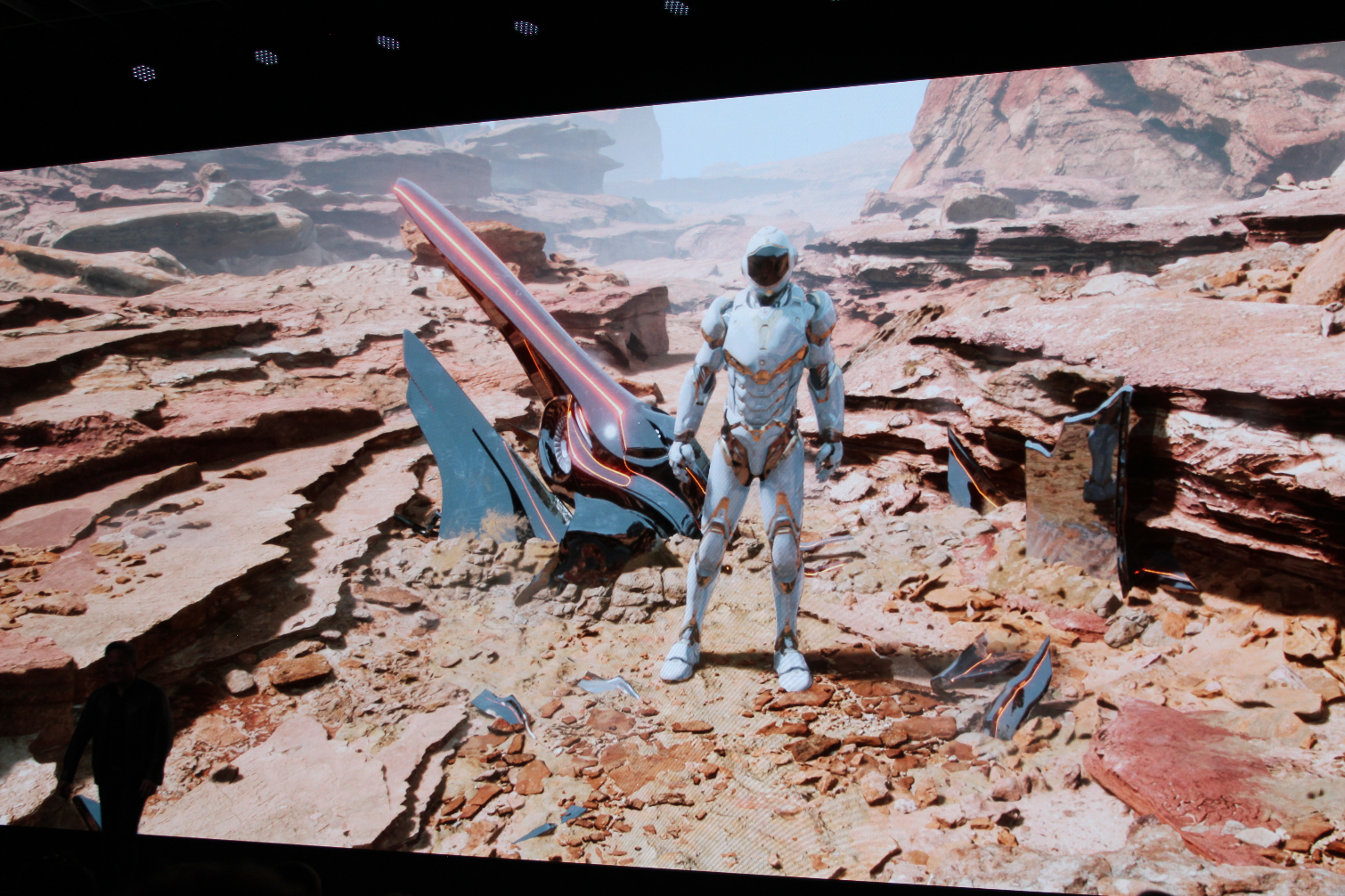

Then came a demo, of a man suiting up in armor and making a space jump, only to find issues standing up and blowing a huge hole in the ground. It was an impressive animation, all produced in real-time.

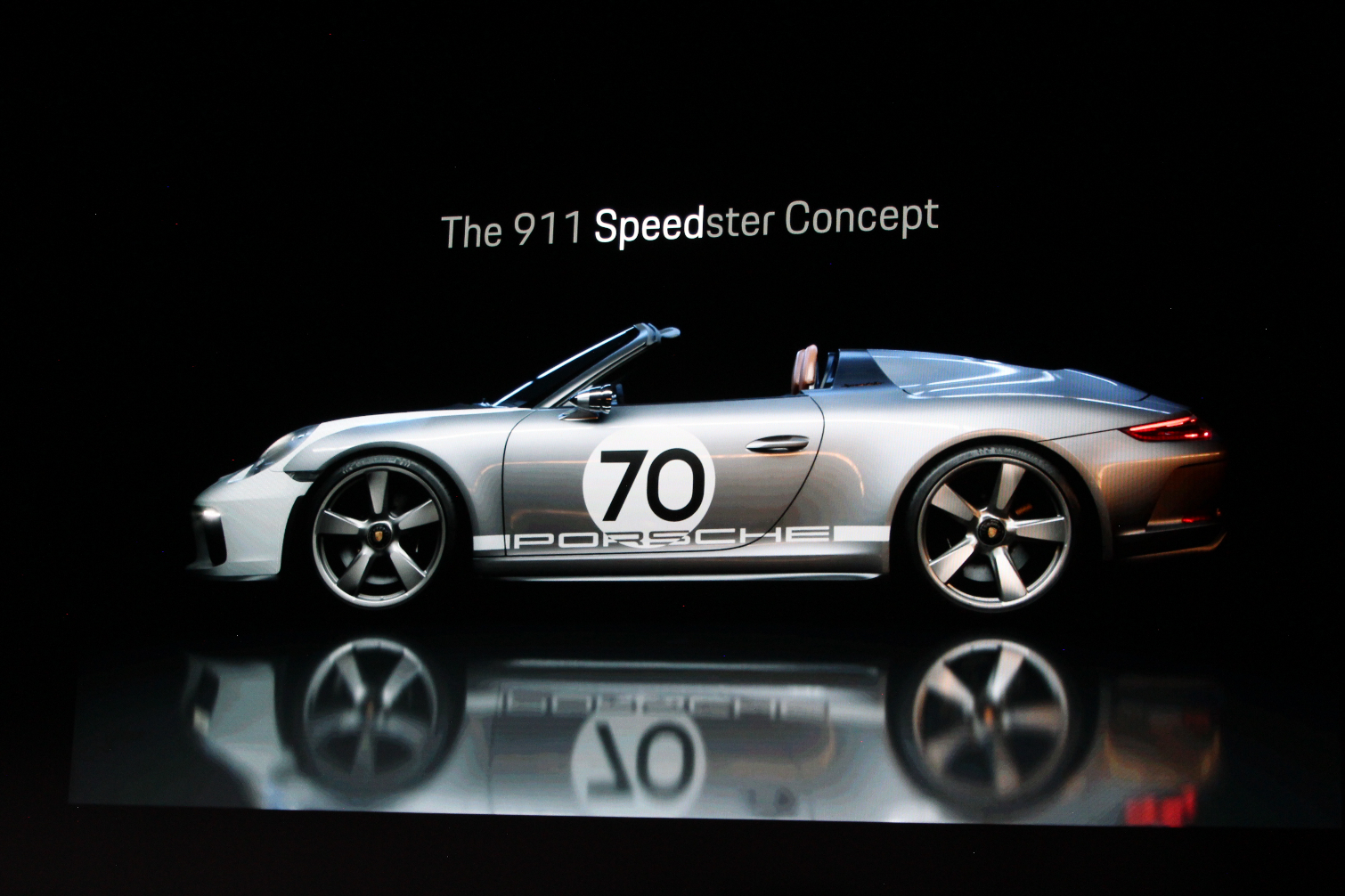

"This is not a movie. This is next-generation graphics," Huang said. He then changed the camera to show reflections on the armor and changed the light to show reflections. He also screened the ever popular Stormtrooper demo that has been played along many RTX reveals, as well as a video for Porsche's seventieth anniversary and other technical demos.

DLSS in Action

But he also described AI's role in ray-tracing. Without it, he suggested, RTX technology might not be possible. DLSS comes from a neural network that studies pictures rendered at 64 samples per pixel to learn what something should look like, with the computer trying over and over until it gets it right, but at an even higher resolution.

Then, he announced that Electronic Arts and BioWare will be using DLSS for their upcoming title, Anthem. A trailer for the game was shown, high on special effects, land masses and textures, reflections and light.

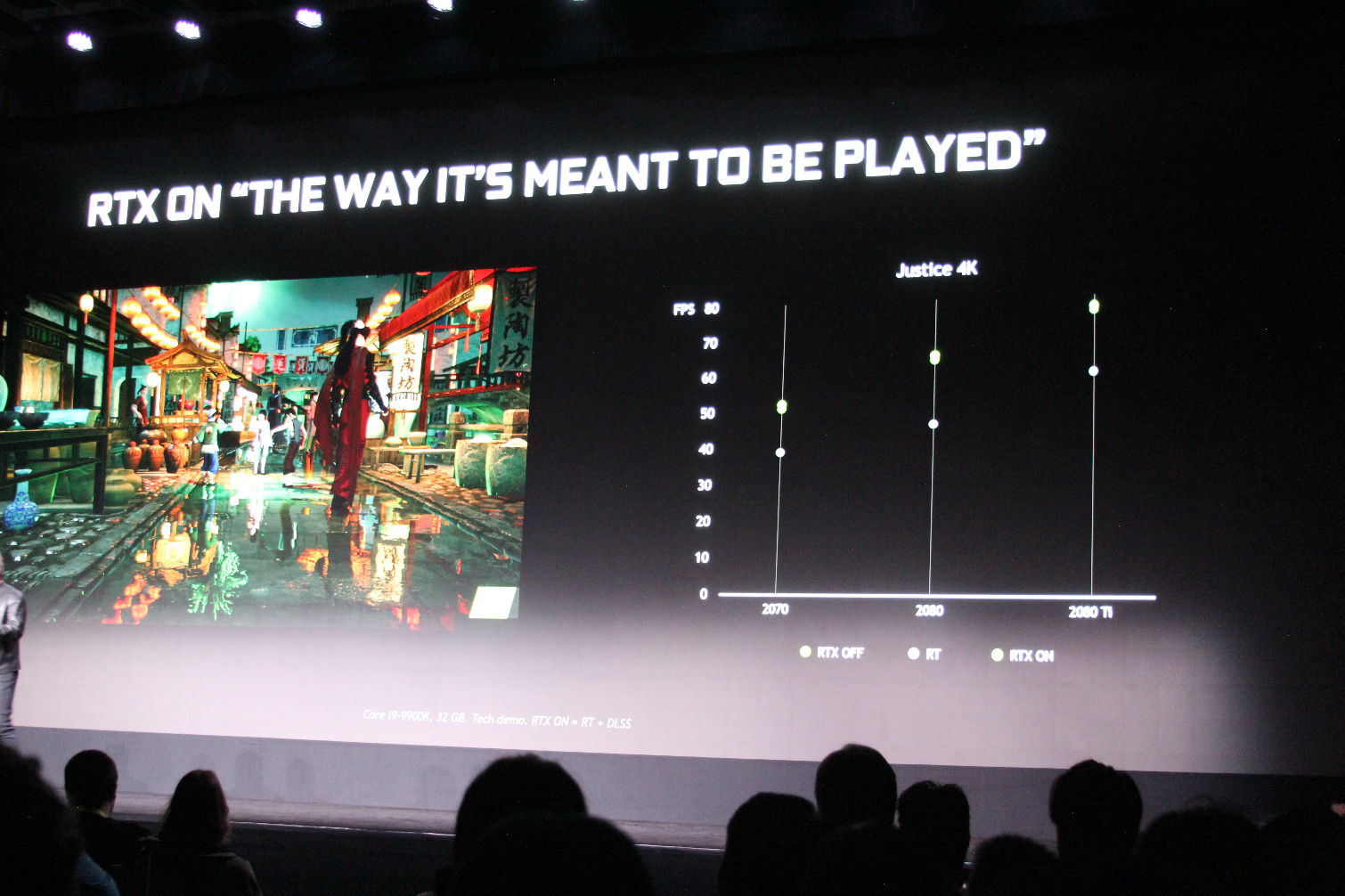

Huang then showed off the new Port Royal benchmark from 3DMark at 1440p with TAA on one side of a screen and DLSS on the other. The DLSS option played at a higher frame rate throughout, usually by 10 to 15 frames per second. DLSS, in effect, learned from the benchmark to increase the quality where TAA created artifacts or had other flaws. Then we saw it in the MMORPG Justice, showing reflections in puddles being hit by rain drops, even when the reflected object wasn't visible on-screen. Another impressive effect included light bouncing off water onto the bottom of a bridge. Huang acknowledged that Justice doesn't run as fast with ray-tracing on, but it used DLSS to get back to a frame rate close to what it would be when RTX was off.

"It looks like glass," Huang said giddily, pointing out the most realistic examples. He pointed out that the game was running with RTX on at 1440p, on a brand new GPU. That was the RTX 2060.

Other Uses for Turing Cards

He went on to describe G-Sync Ultimate (described above) and went over other ways gamers use GPUs: 3D animation, VR, streaming and more.

Huang said that RTX GPUs will be able to accelerate 3D modeling and animation programs like Autodesk Arnold, that RTX 2080 and above cards will be able to edit 8K video and that OBS will enable pro-quality broadcast streaming from a single computer. He also announced Turing VRWorks with HTC Vive.

That last bit will use the VirtualLink port, and headsets with eye-tracking can focus on improving resolution rate and performance.

Ansel

After the RTX for mobile reveal, Huang moved on to Ansel. In Justice, he applied filters. He then shared real captures of Battlefield V to show off more ray tracing, and highlighted a billboard in Times Square in New York City showing off the images.

Andrew E. Freedman is a senior editor at Tom's Hardware focusing on laptops, desktops and gaming. He also keeps up with the latest news. A lover of all things gaming and tech, his previous work has shown up in Tom's Guide, Laptop Mag, Kotaku, PCMag and Complex, among others. Follow him on Threads @FreedmanAE and BlueSky @andrewfreedman.net. You can send him tips on Signal: andrewfreedman.01

-

nexushg40417 Say I already own one of the monitors that will be newly supported on Jan 15th for GSYNC. How do I get GSYNC on that monitor I already own? -

AlistairAB 21652342 said: RTX2060 faster than the 1070 Ti? Nvidia could be onto a winner here...

Not faster, the same speed. Ignore Wolfenstein 2 that is an outlier. Nvidia's own marketing material has it faster in about half the games and slower in the other half. One thing to keep in mind is that the 1070 ti was an incredible OC'er, so I expect the RTX 2060 to be slightly slower than the 1070 ti in general. -

s1mon7 Nvidia announcing adaptive sync support was surely the highlight of the event. That was a great way to stay competitive for the people eyeing the upcoming Navi and Intel GPUs.

The make it or break it will be whether the driver will work with all of the non "G sync certified" Freesync monitors as well as an AMD or Intel GPU would (the remaining 388 models that they tested). Those are the monitors that most people buy to have at their homes these days, and if Nvidia won't support them properly, they are still at a major disadvantage should AMD or Intel come up with a competitive GPU.

I have an LG Freesync monitor and the only reason I'm not running an AMD GPU with is is because there aren't 4K-capable AMD GPUs out there. As soon as there is one, if Nvidia does not support it, I will switch to the GPU that allows me to use Freesync on my monitor that I already have and don't plan to switch from. -

hotaru251 chances of getting free sync monitor to work with g-sync?

also while not a fan of RT, $350 for a faster than 1070ti is a good deal. -

lifeboat "How do I get GSYNC on that monitor I already own?" You'll just have to upgrade to the latest drivers that will be released on the 15th. -

PuperHacker Well... If it is true that it will outperform a gtx1070ti and have ray tracing, why bother spending $150+ on an rtx2070? It makes no sense to have a performance gap this small... it is just like the gtx1070ti/gtx1080. Hope they don't force stock clocks on every rtx2060 so it doesn't match the rtx2070... -

feelinfroggy777 21652380 said: Say I already own one of the monitors that will be newly supported on Jan 15th for GSYNC. How do I get GSYNC on that monitor I already own?

Nvidia will release a driver update on the 15th that will open up support for your monitor. -

King_V 21652527 said: Nvidia announcing adaptive sync support was surely the highlight of the event. That was a great way to stay competitive for the people eyeing the upcoming Navi and Intel GPUs.

The make it or break it will be whether the driver will work with all of the non "G sync certified" Freesync monitors as well as an AMD or Intel GPU would (the remaining 388 models that they tested). Those are the monitors that most people buy to have at their homes these days, and if Nvidia won't support them properly, they are still at a major disadvantage should AMD or Intel come up with a competitive GPU.

I have an LG Freesync monitor and the only reason I'm not running an AMD GPU with is is because there aren't 4K-capable AMD GPUs out there. As soon as there is one, if Nvidia does not support it, I will switch to the GPU that allows me to use Freesync on my monitor that I already have and don't plan to switch from.

Yeah, see, my understanding is probably incomplete, but this is what had me confused.

I thought Adaptive Sync was the general term for the two existing Adaptive Sync technologies - GSync, and FreeSync.

Yet, in the article, it almost made it sound like a separate thing. So, when they say GSync will work with Adaptive Sync monitors that don't have GSync in them, do they mean it'll work with FreeSync monitors, or do they mean something else entirely.

Are they basically saying that they're finally allowing FreeSync support? ie: what some people needed to hack their drivers to do (and Nvidia shut that down real quick), they're finally officially going to support? I'd like to think so, but, I'll admit I'm a bit lost on this.

-

Sam Hain 21652488 said: 21652342 said: RTX2060 faster than the 1070 Ti? Nvidia could be onto a winner here...

Not faster, the same speed. Ignore Wolfenstein 2 that is an outlier. Nvidia's own marketing material has it faster in about half the games and slower in the other half. One thing to keep in mind is that the 1070 ti was an incredible OC'er, so I expect the RTX 2060 to be slightly slower than the 1070 ti in general.

Also consider this, once RT is enabled, cut those frames down on the RTX 2060 considerably, unless staying @ 1080p; fairs okay there and is of course title-dependent. The RTX 2060 is more or less a next-gen GPU equipped with technology it cannot properly exploit; underpowered. For that, one would realistically need the 2080/2080 Ti, keeping monitor(s) resolution in mind.