Intel Announces Optane DIMMs for Workstations, SSD 665p With 96-Layer QLC, Roadmap

Intel made a slew of announcements today, including workstation support for Optane DC Persistent Memory DIMMs, which will eventually lead to support on mainstream client desktops, like gaming PCs. These DIMMs slot into the DDR4 interface, just like a stick of RAM, but use Optane memory instead of DRAM to offer up to 512GB of memory capacity per stick.

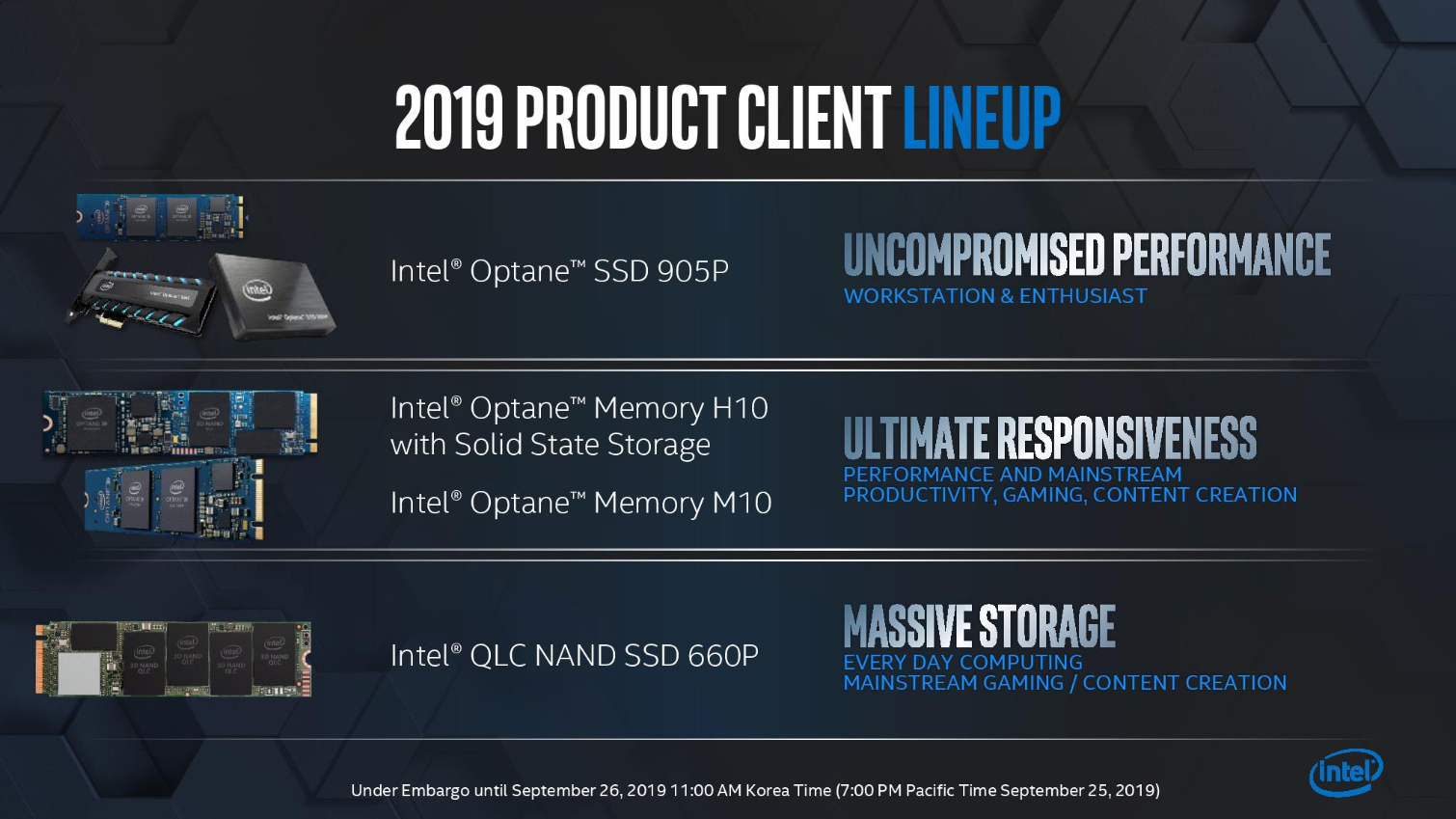

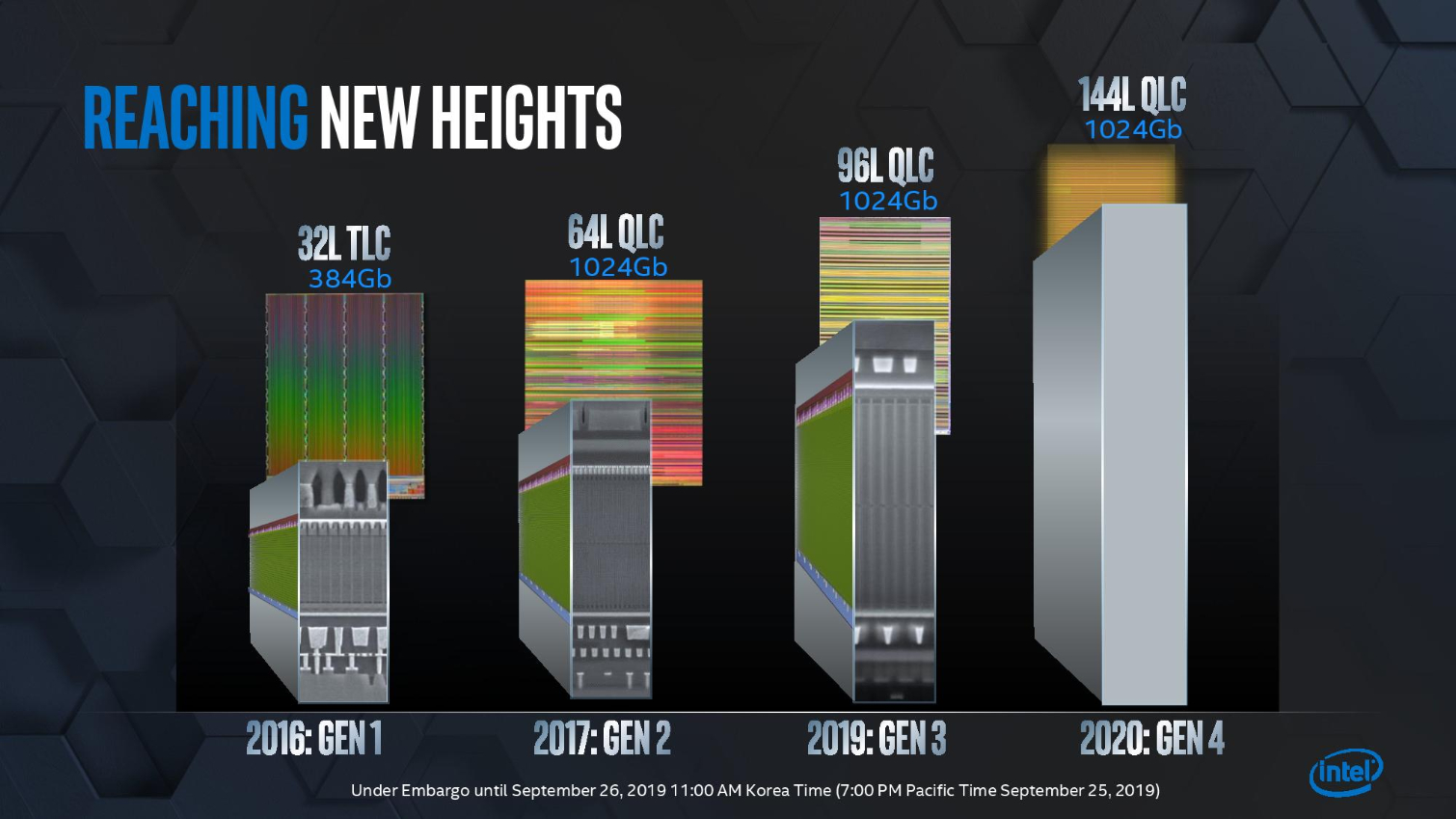

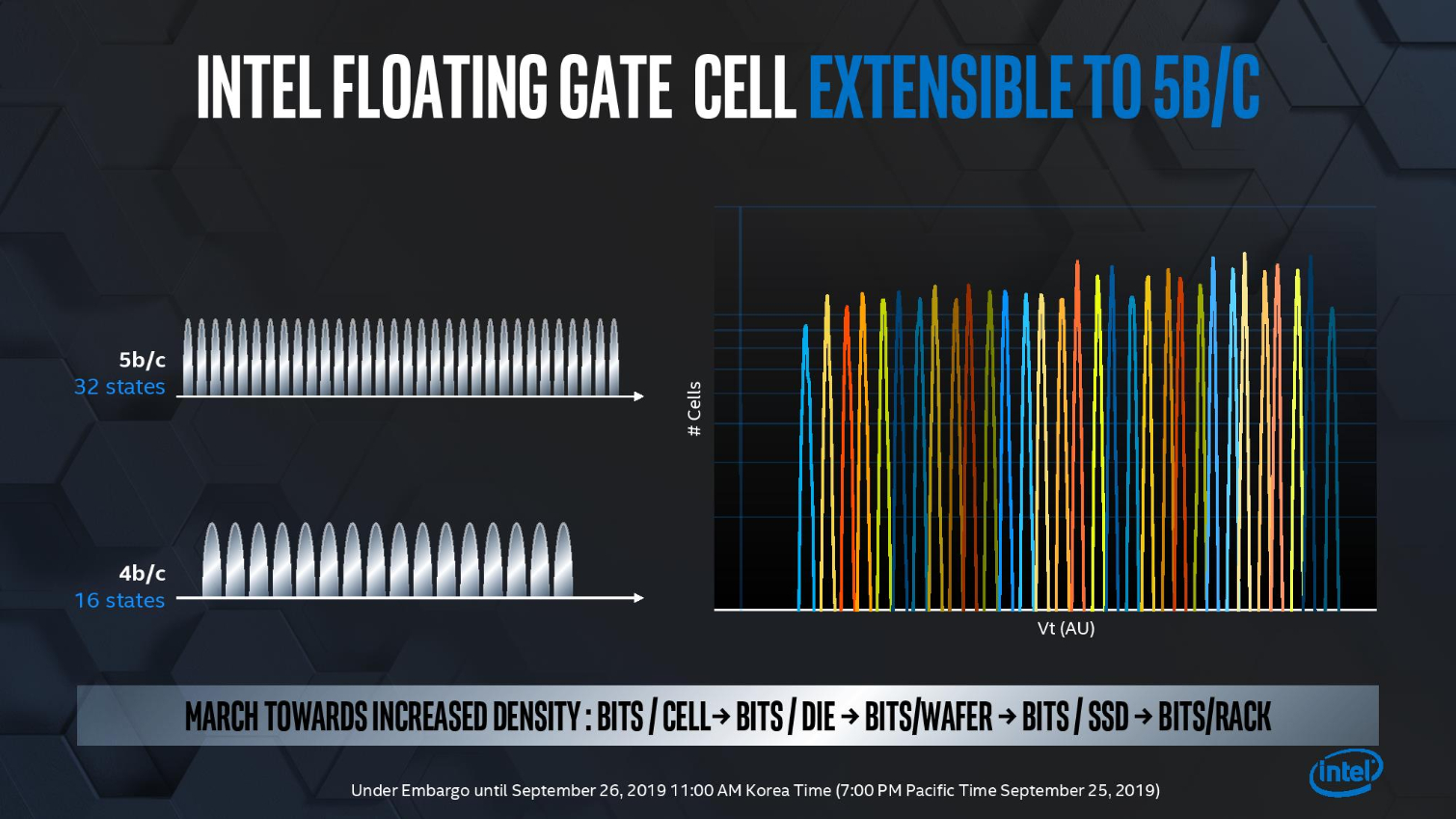

Intel also announced the new SSD 665p, manufactured with 96-Layer QLC flash, and revealed a roadmap outlining its future Optane SSD products and new 144-Layer QLC NAND. The company also announced that it is working on 5-bit-per-cell flash, otherwise known as penta-level cell (PLC), to offer a path to increased storage density and lower-priced storage.

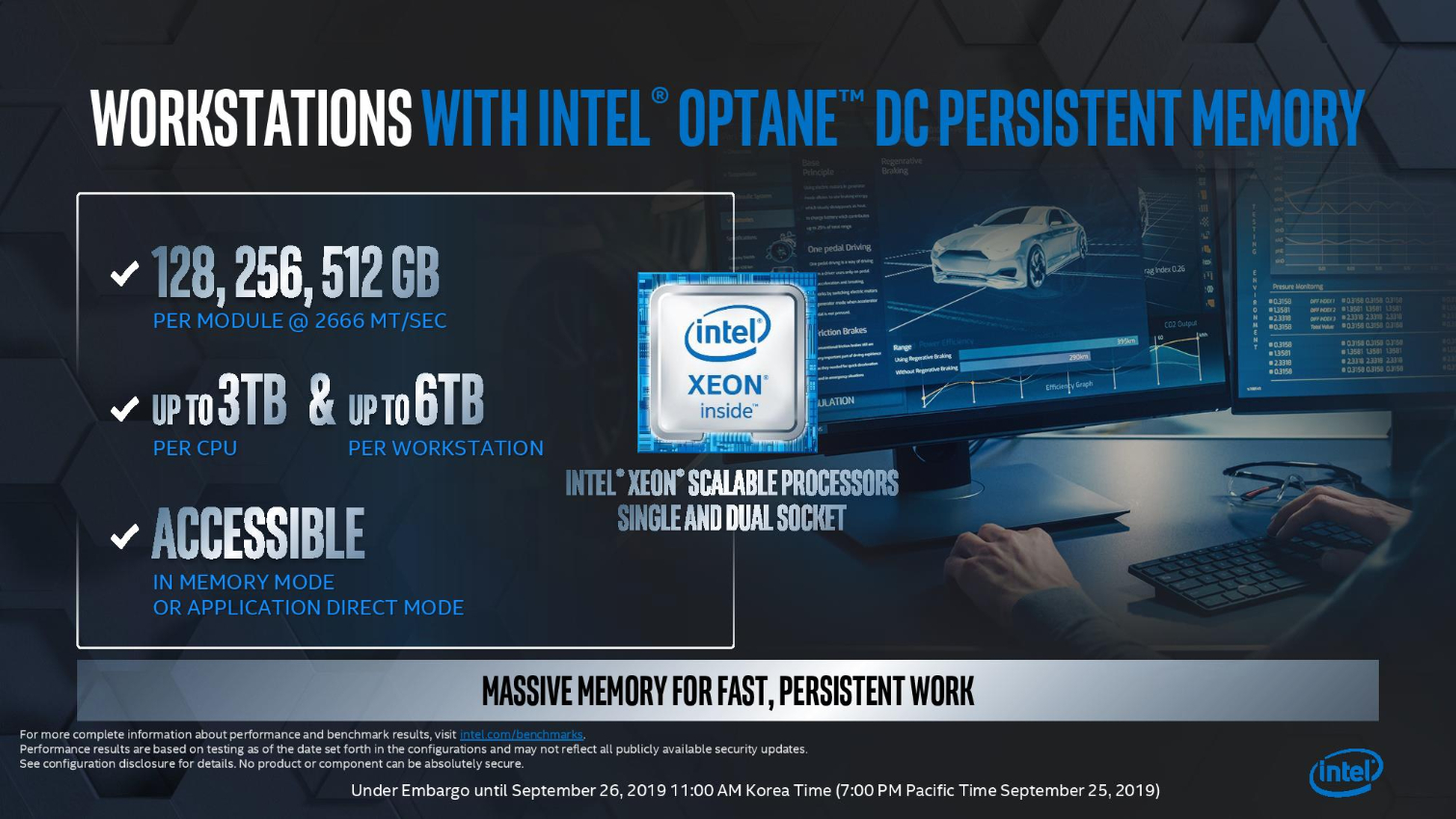

Intel's Optane DC Persistent Memory DIMMs come in three capacities: 128, 256, and 512GB. That's a massive capacity increase over current DDR4 memory sticks, and the DIMMs can be used as either memory or storage, retaining data even when power is removed. Intel designed the DIMMs to bridge both the performance and pricing gap between storage and memory, with the underlying 3D XPoint memory being fast enough to serve as a slower tier of DRAM, though it does require some tuning of the application and driver stacks.

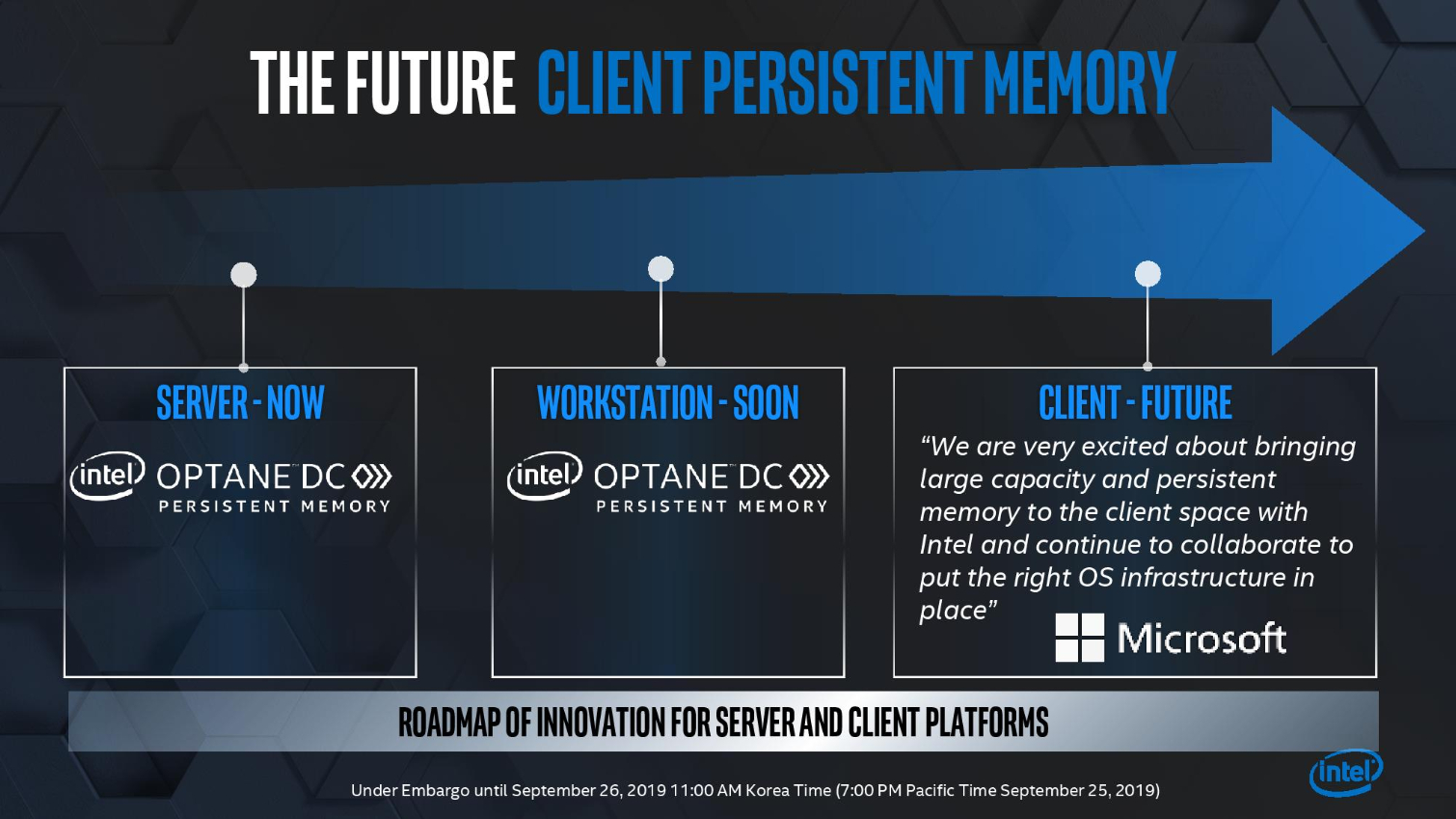

Using Optane memory instead of DRAM promises radical new capabilities and much lower pricing, but the technology has so far been confined to servers, like the Cascade Lake platform. Now Intel is bringing persistent memory DIMMs to the workstation market, and then to the desktop. Intel hasn't announced a firm arrival date for workstations with the new technology, but says it enables up to 3TB of memory for a single-socket workstation and up to 6TB for dual-socket workstations. We have seen numerous server designs that use watercooling for Optane DIMMs, as they require copious amounts of airflow to avoid throttling, so we can expect new workstations to require active cooling for the DIMMs because airflow rates in a workstation chassis are much lower than in servers.

Intel's DIMMs are working their way down from the data center to workstations, and the company plans to bring them down a step further into mainstream desktop platforms. That means you'll eventually be able to buy a 512GB memory stick for your desktop rig. Intel is working with Microsoft to enable the capability through its current ecosystem development initiatives, but there is no firm timeline for Optane DIMMs to land on the desktop.

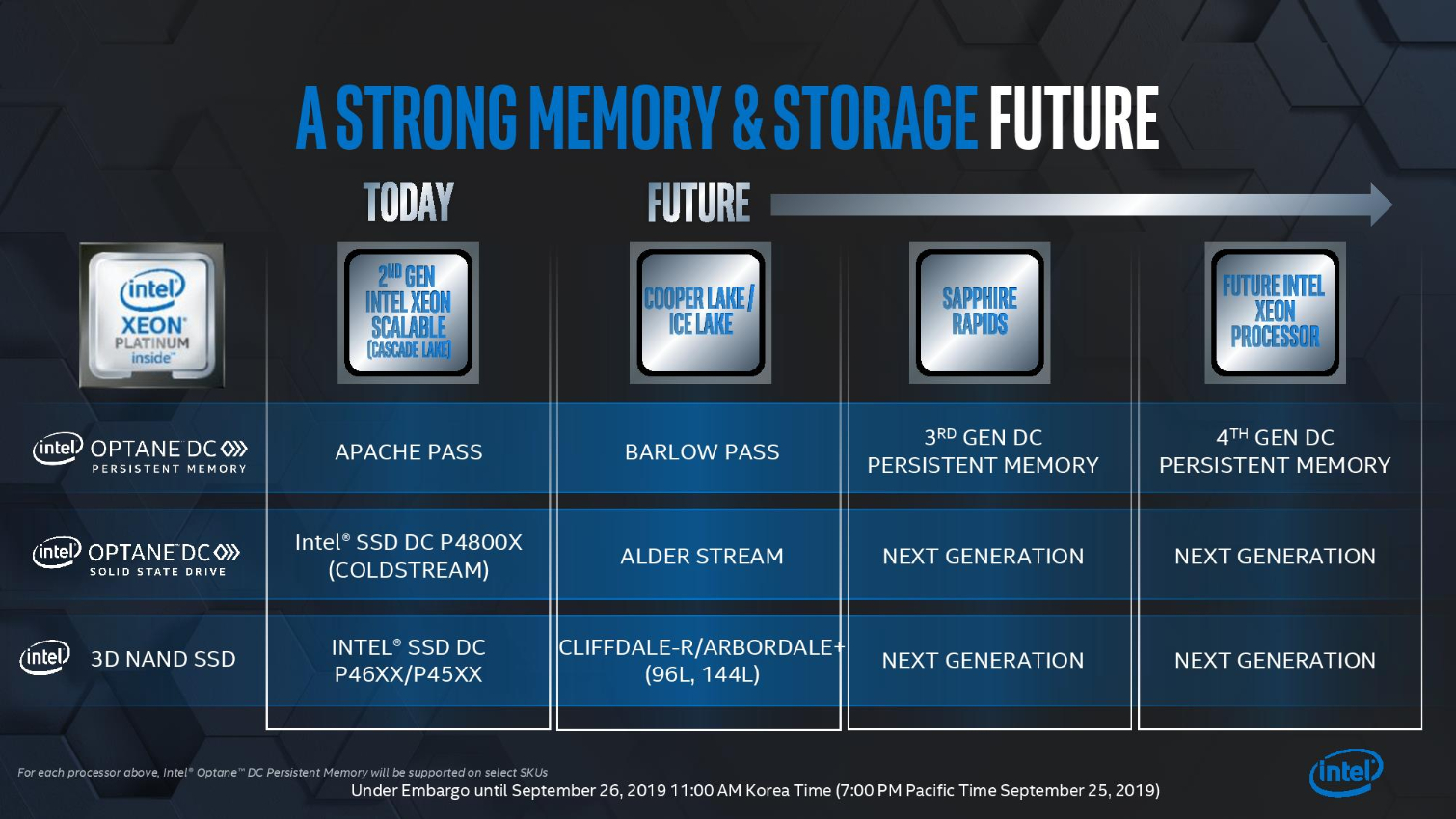

Intel will follow its first-gen Apache Pass DIMMs with second-gen Barlow Pass modules that will arrive with the 14nm Cooper Lake and 10nm Ice Lake data center processors in 2020. We can also expect those same modules to work with future workstations.

Intel's "Cold Stream" Optane DC P4800X SSDs, which use the same Optane media but serve as fast storage devices, have been shipping in volume for some time. However, the company is already moving forward with its next-gen Alder Stream SSDs, which it has running in its labs. Intel hasn't provided firm specifications for the new SSDs, but touts improved throughput, likely through the move to a PCIe Gen 4 interface, as a key advance. As we've seen with the existing data center models, we can expect those SSD controller improvements to filter down to the client SSD products when Intel refreshes its Optane 900p and 905p.


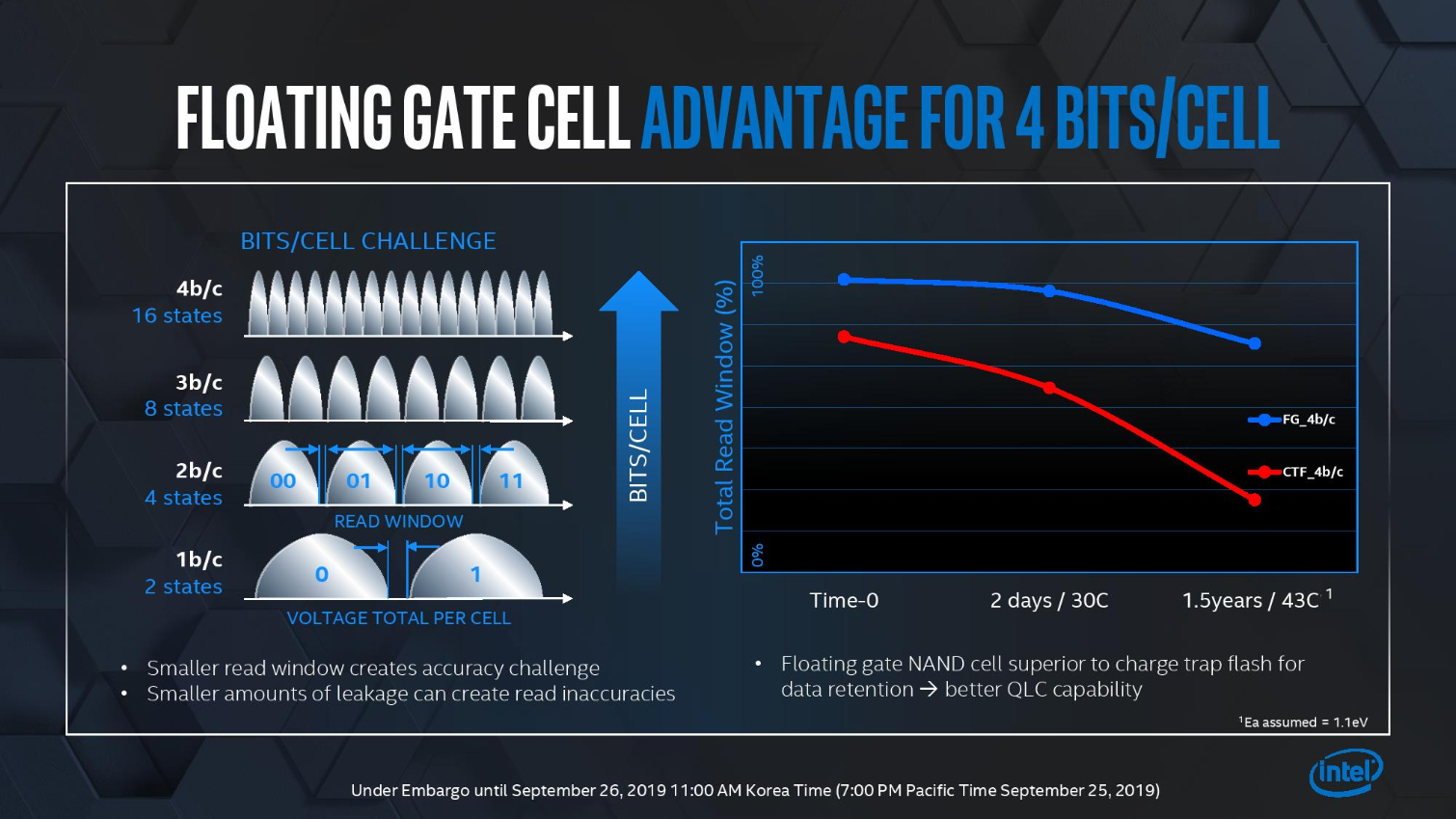

Intel's SSD 660p uses 64-Layer QLC flash, which stores four bits per cell, to provide increased storage density and lower pricing, albeit at the loss of some performance. The firm plans to release a new SSD 665p with 96-Layer QLC flash, which will expand upon the inherent price advantages of denser media. Intel didn't divulge pricing, performance, or a release date, but it's logical to expect to hear more about these SSDs around the CES timeframe.

Intel is also working on 144-layer QLC flash and, like its soon-to-be ex-manufacturing partner Micron, is developing penta-level flash that stores five bits per cell.

Intel is also moving forward on the Optane media front, with a new design center opening in Rio Rancho, New Mexico. Intel didn't mention any specific focus areas for the center, but we can expect its work to include the next-gen 3D XPoint media. Intel has numerous paths forward to improve the media, with higher stacking and/or a smaller lithography among the most obvious areas of development. The company is currently largely bound by the performance of its SSD controllers, meaning they don't allow end devices to extract the full performance of the underlying 3D XPoint memory, so it's rational to expect more advances on the SSD controller front before we see the next-gen Optane media arrive.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

jimmysmitty One step closer. While not as fast as traditional memory, these will be a big game changer for storage as they are vastly faster than even PCIe 4.0 x4. -

DavidC1 PCIe 4.0 doesn't even compare. While the bandwidth is there, the latency is not. The Optane drives are at 10us. These DIMMs are an additional 20-40x faster in terms of latency.

You could get the bandwidth to be at 500GB/s, but it'll still be unusable for system memory if the latencies are in the 100us range as with SSDs. -

jimmysmitty

> DavidC1 said: PCIe 4.0 doesn't even compare. While the bandwidth is there, the latency is not. The Optane drives are at 10us. These DIMMs are an additional 20-40x faster in terms of latency.
>
> You could get the bandwidth to be at 500GB/s, but it'll still be unusable for system memory if the latencies are in the 100us range as with SSDs.

These are not Optane drives, they are Optane DIMMs. They are basically non-volatile memory. They are still in the ns for latency, although it is higher than regular DRAM. However, most systems allow for mixed setups with some DRAM and some Optane DIMMs; at least the servers do. So it might be possible to have half of the DIMMs be faster DRAM and the others be larger Optane DIMMs, allowing for storage that has vastly higher bandwidth than NVMe and vastly lower latency than NVMe (average is 20ms) -

DavidC1

> jimmysmitty said: These are not Optane drives, they are Optane DIMMs.

That's pretty much what I said. -

bit_user

> jimmysmitty said: They are still in the ns for latency, although they are higher than regular DRAM. However most systems allow for mixed setups with some DRAM and some Optane DIMMs, at least the servers have. So it might be possible to have half of the DIMMs be faster DRAM and the others be larger size Optane DIMMs allowing for storage that has vastly higher bandwidth than NVMe and vastly faster latency than NVMe (average is 20ms)

I'm not sure where you got 20 ms for an average NVMe, but that ain't right. Maybe you meant 20 microseconds, but 20 ms is hard disk territory. 20 us is in the ballpark, for SSD reads.

Here's a fairly recent measurement of SATA drive IOPS:

Source: https://www.tomshardware.com/reviews/crucial-mx500-ssd-review-nand,5390-3.html

So, if you just take the reciprocal of the QD1 IOPS, then you get 83 to 183 usec. However, that includes the time to read & transfer the whole 4k of data, as well as potentially traversing the entire OS I/O stack & probably also the filesystem driver. I don't know what the standard is for measuring read latency, but it might be less.
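As a rough sketch of that reciprocal math in Python: at queue depth 1 only one request is in flight, so the time per request is roughly one second divided by the IOPS. The function names are made up for illustration, and the 10,000 IOPS figure is a round placeholder, not a value read from the chart.

```python
def qd1_latency_us(iops: float) -> float:
    """Approximate per-request latency in microseconds at queue depth 1."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    # One second is 1,000,000 microseconds; with a single request in flight,
    # each request takes roughly 1 / IOPS seconds.
    return 1_000_000 / iops


def iops_from_latency_us(latency_us: float) -> float:
    """Inverse of the above: QD1 IOPS implied by a per-request latency."""
    if latency_us <= 0:
        raise ValueError("latency_us must be positive")
    return 1_000_000 / latency_us


# Placeholder input: a drive doing 10,000 IOPS at QD1.
print(qd1_latency_us(10_000))  # 100.0
```

Run the other way, a latency of 83 usec corresponds to roughly 12,000 IOPS at QD1, and 183 usec to roughly 5,500.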

Here's the same measurement of high-end NVMe drives, from the Intel 905P review:

Source: https://www.tomshardware.com/reviews/intel-optane-ssd-905p,5600-2.html

Again, the same math for the 905P indicates 14.8 usec for the fastest Optane NVMe and 58.1 to 98.5 usec for the NAND-based NVMe drives.

As for how latencies and bandwidth compare between Optane DIMMs and DDR4, there's a handy table in this article:

https://www.tomshardware.com/news/intel-optane-dimm-pricing-performance,39007.html

According to that, the bandwidth of a single Optane DIMM is roughly in the same ballpark as some of the high-end PCIe 4.0 NVMe drives - not vastly higher. AFAIK, Intel CPUs only support up to two channels, meaning your max is only double that.

And latency is about 100x lower, for Optane DIMMs vs NVMe. However, I'm certain the measurement methodology for the DIMMs is just like a memory access. They can't be going through the OS, any filesystem, or even the kernel. And it's not going to be 4k, either. If you would measure the 905P in the same way, maybe the difference could be brought to within 1 order of magnitude. -

TerryLaze

> jimmysmitty said: One step closer. While not as fast as traditional memory these will be a big game changer for storage as they are vastly faster than even PCIe 4.0 x4.

That's not even the important part of it.

The CPU will be aware that the memory addresses of the Optane DIMM are storage, so all the OS calls of "gofer this file, gofer that file" will fall away. The CPU will spend less time (as in none at all) senselessly copying files from storage to RAM, only to copy them back after the CPU has worked on them. -

bit_user

> TerryLaze said: That's not even the important part of it.
>
> The CPU will be aware that the memory addresses of the Optane DIMM are storage, so all the OS calls of "gofer this file, gofer that file" will fall away. The CPU will spend less time (as in none at all) senselessly copying files from storage to RAM, only to copy them back after the CPU has worked on them.

That requires OS support, which will take time.

https://www.phoronix.com/scan.php?page=news_item&px=Intel-PMEMFILE

Then, if you want apps to use the data in-place, that will take yet more time (unless they're already written to use mmap()). -
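For reference, here is a minimal sketch of what "using the data in-place" via mmap() looks like, in Python. It runs against an ordinary temporary file, not persistent memory; the assumption is that on a DAX-capable filesystem backed by Optane DIMMs, the same access pattern would reach the media directly instead of going through the page cache. The helper name `update_in_place` is hypothetical.

```python
import mmap
import os
import tempfile


def update_in_place(path: str, offset: int, new_bytes: bytes) -> None:
    """Overwrite bytes inside a file through a memory mapping.

    No read()/write() calls are made per access: the file's contents are
    addressed like a bytearray once the mapping exists.
    """
    with open(path, "r+b") as f:
        # Length 0 maps the whole file; the default access mode is a
        # shared, writable mapping, so stores reach the underlying file.
        with mmap.mmap(f.fileno(), 0) as view:
            view[offset:offset + len(new_bytes)] = new_bytes
            view.flush()  # ask the OS to write the changes back


# Demo on a throwaway file (an assumption for illustration, not real pmem).
fd, demo_path = tempfile.mkstemp()
os.write(fd, b"hello optane")
os.close(fd)

update_in_place(demo_path, 0, b"HELLO")

with open(demo_path, "rb") as f:
    result = f.read()
os.remove(demo_path)

print(result)  # b'HELLO optane'
```

Note that the OS is still involved in opening the file and setting up the mapping; it is only the individual loads and stores afterwards that avoid a system call.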

TerryLaze

> bit_user said: That requires OS support, which will take time.
>
> https://www.phoronix.com/scan.php?page=news_item&px=Intel-PMEMFILE
>
> Then, if you want apps to use the data in-place, that will take yet more time (unless they're already written to use mmap()).

The very article you link to states that Intel tries to keep the kernel, i.e. the OS, out of it to get better performance.

It's all about the CPU having direct access without having to go through OS calls.

Apps don't have to be optimized for it either, at least the apps us common people care about; they will automatically load up, and do all of their I/O, faster.

Very specialized very I/O heavy apps will have to be specially written to take full advantage but that's the worry of server/workstation IT.

"Intel's new user-space file-system for persistent memory is designed for maximum performance and thus they want to get the kernel out of the way." -

jimmysmitty

> TerryLaze said: The very article you link to states that intel tries to keep the kernel i.e OS out of it to get better performance.
>
> It's all about the CPU having direct access without having to go through OS calls.
>
> Apps don't have to be optimized for it either, at least the apps us common people care about; they will automatically load up, and do all of their I/O, faster.
>
> Very specialized very I/O heavy apps will have to be specially written to take full advantage but that's the worry of server/workstation IT.
>
> "Intel's new user-space file-system for persistent memory is designed for maximum performance and thus they want to get the kernel out of the way."

I am all for best performance, but there is a downside to bypassing the kernel. If written wrong, it could cause hard crashes. It's much like the old days of gaming, where a game could crash a system, while now it will typically crash the program and API instead.

However I have some faith that since Intel is heavily developing these for HPCs and enterprise first they would work to help prevent that sort of issue. Can't have a poorly written application taking a server down. -

bit_user

> TerryLaze said: The very article you link to states that intel tries to keep the kernel i.e OS out of it to get better performance.
>
> It's all about the CPU having direct access without having to go through OS calls.

The OS must necessarily be involved at some point, for security, if nothing else. I believe the goal is to remove the OS from the "fast path" (i.e. individual data-accesses).

> TerryLaze said: Apps don't have to be optimized for it either, at least the apps us common people care about; they will automatically load up, and do all of their I/O, faster.

Apps currently make OS calls. So, either the user has to use a shim, like that article cites, or the OS needs to be modified to do effectively the same thing. So, I think we're looking at having some level of OS support, before this stuff is ready for prime time.