AMD-Powered PS5 Demo Puts Your PC to Shame

Unreal Engine 5 demo shows off fully dynamic lighting, extreme polygon counts, and 'cinematic' 8K textures.

Epic Games announced the successor to its ubiquitous Unreal Engine 4 game engine today, showing off a playable real-time demo running on a PlayStation 5 developer platform. That gives us insight not only into UE5's capabilities, but also into just what the PS5 and its RDNA 2-powered hardware can do. Specifically, it sheds even more light on AMD's upcoming Big Navi / RDNA 2 architecture, which will compete with Nvidia's Ampere in the battle to be the best graphics card later this year.

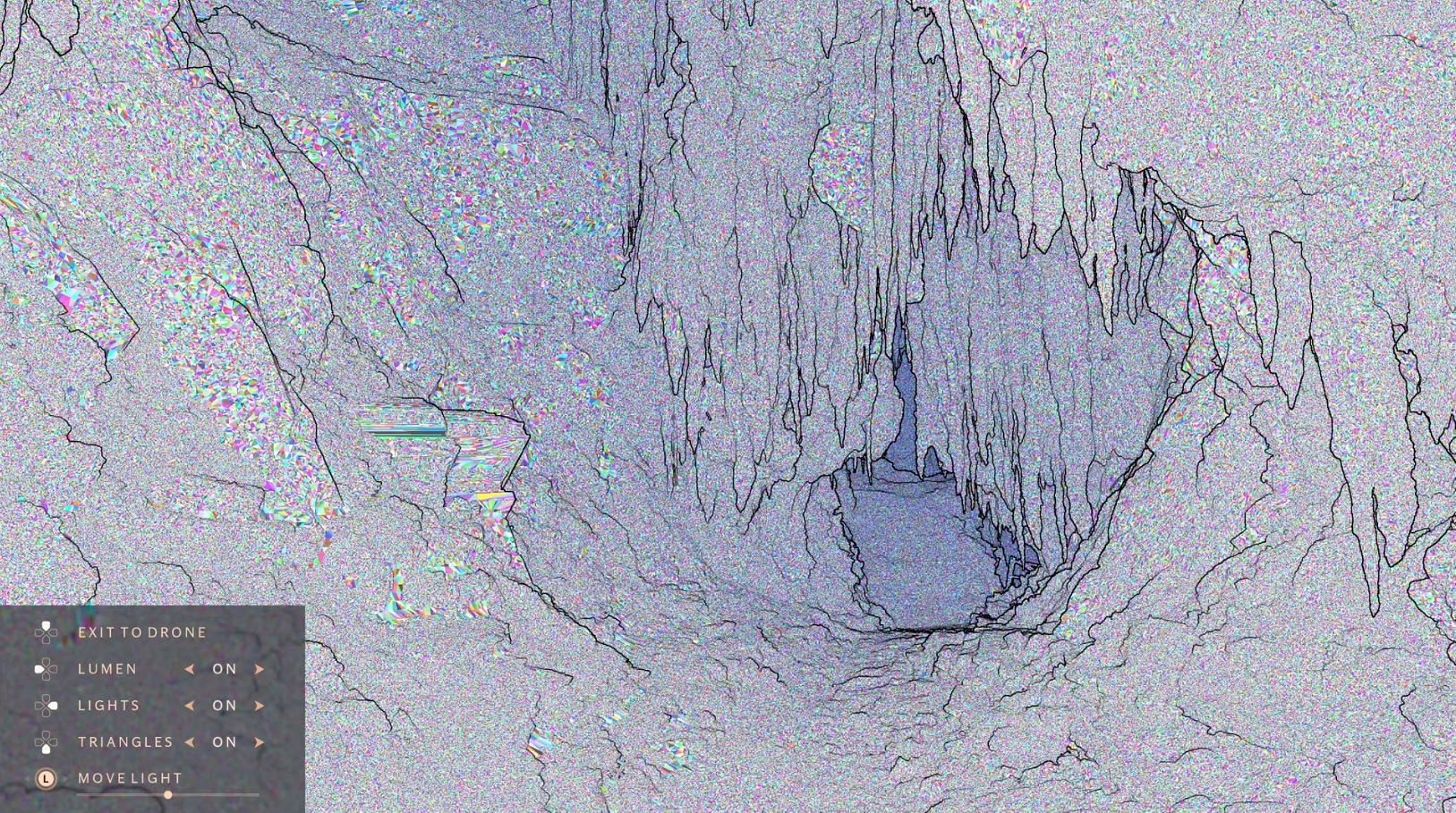

Titled Lumen in the Land of Nanite, the new Unreal Engine 5 (UE5) demo primarily focuses on UE5's 'Nanite' feature, which lets it display millions of polys on screen at once, automatically scaling down polygon counts from potentially billions of polygons. The demo also showed off the new 'Lumen' feature, which allows for fully dynamic lighting with no baking required. We take it that means ray tracing, though the demo never explicitly states whether that's the case.

Before we get into specifics, it's worth remembering that tech demos frequently outperform full games released around the same time. For example, Square Enix's 2012 Agni's Philosophy real-time tech demo showed off graphics more similar to 2016's Final Fantasy XV than the PS4's 2013 launch titles. This is because tech demos have the advantage of being tailor-made to show off game engines and the hardware running them in their best light. They don't have to worry about packing in all the assets and other mechanics a full game requires.

Still, it's hard to ignore a new game engine from the studio behind Fortnite, whose Unreal Engine 4 powers graphical standouts like Dragon Ball FighterZ and Hellblade: Senua's Sacrifice. Especially when it promises real-time environments running on PS5 that would have been pre-rendered just a generation ago.

Lumen in the Land of Nanite is about 10 minutes long, and takes us through a series of live benchmarks for UE5 and the PS5 contextualized as a Tomb Raider-esque game. This means caves full of craggy rocks to show off high poly counts, dramatically sunlit chambers to show off the lighting, crumbling cliff faces to show off spatialized audio, a room with thousands of cockroaches that all react to player input, and a particularly stunning flight sequence at the end with an "if you see it, you can go there" promise of real-time rendering even on distant objects.

One standout moment at the beginning shows off all the triangles behind a Nanite-powered cave system that uses cinematic 8K assets usually reserved for film. Each asset has about a million triangles, leading to over a billion source triangles per frame overall. Counts this high are normal for cinematic assets, which are built to be pre-rendered rather than output in real time.

The video says that "Nanite crunches this down losslessly to around 20 million drawn triangles," with the demo showing off pixel-sized triangles stretching across the entire screen as a result. Keep in mind, even at 4K resolution, there are only 8.3 million pixels on your screen. So "lossless" here means discarding the extra geometric detail that won't actually influence the final image.
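The arithmetic behind those figures can be checked with a short sketch. The numbers come from the video and the text above; the function and constant names are our own, and "over a billion" is treated as exactly one billion, so the reduction factor is a lower bound.

```python
# Rough arithmetic on the figures quoted in the demo.
# All names here are illustrative; nothing is taken from Unreal Engine itself.

UHD_WIDTH, UHD_HEIGHT = 3840, 2160   # 4K UHD resolution
SOURCE_TRIANGLES = 1_000_000_000     # "over a billion" source triangles (lower bound)
DRAWN_TRIANGLES = 20_000_000         # "around 20 million drawn triangles"


def pixel_count(width: int, height: int) -> int:
    """Number of pixels on a screen of the given resolution."""
    return width * height


def triangles_per_pixel(triangles: int, width: int, height: int) -> float:
    """Average number of triangles available for each screen pixel."""
    return triangles / pixel_count(width, height)


def reduction_factor(source: int, drawn: int) -> float:
    """How many source triangles stand behind each drawn triangle."""
    return source / drawn


if __name__ == "__main__":
    print(f"4K pixels: {pixel_count(UHD_WIDTH, UHD_HEIGHT):,}")
    print(f"Drawn triangles per pixel: "
          f"{triangles_per_pixel(DRAWN_TRIANGLES, UHD_WIDTH, UHD_HEIGHT):.2f}")
    print(f"Source-to-drawn reduction: "
          f"{reduction_factor(SOURCE_TRIANGLES, DRAWN_TRIANGLES):.0f}x")
```

At 4K that works out to roughly 2.4 drawn triangles per pixel, which squares with the claim of pixel-sized triangles covering the screen.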

All polygons can be fundamentally broken down into triangles, so more triangles means more detail. And that's a lot of triangles, certainly more than assets built with in-game performance in mind traditionally achieve.


Given RDNA 2 will support the DirectX 12 Ultimate API, we assume the technology behind the high polygon counts uses mesh shaders. Nvidia introduced the feature with its Turing architecture back in 2018, but so far it hasn't seen much use outside of the Asteroids tech demo. With it becoming part of the official DX12 Ultimate spec, plus AMD's support of the feature on the next-gen console hardware, it looks like polygon counts are about to go way up.

Later on comes a breath of fresh air for game designers: the completely dynamic lighting powered by Lumen. Normally, game designers have to make assets for their games called lightmaps, which essentially place pre-rendered lighting throughout a game through a process called baking. Aside from not being as versatile and looking less realistic, it's a lengthy part of designing a level that can extend work for days (speaking from personal experience).

Lumen promises lighting systems that can move and adjust accordingly in real-time, no lightmapping required. In the demo, Epic shows this off through an impressive spotlight that instantly changes the direction light bounces off the environment as the designers move it around in real-time during gameplay. It's a convincing effect, and its ability to work with Nanite to create pixel-accurate shadows is just the icing on the cake.

Interestingly, while the UE5 video says this is "fully dynamic multi-bounce global illumination," it never mentions ray tracing. Ray tracing is one way to get accurate multi-bounce GI, but there are approximations that can be faster if less accurate. Whether UE5 requires ray tracing for the lighting effects isn't clear, but we know UE4 already supports ray tracing and PS5 has ray tracing hardware, so that's not too much of a stretch.

I think it will take a couple of years before we see games that look like this, which is fair. Xbox Series X will look just as good even though this demo definitely relies on the super-fast PS5 I/O. But she still squeezes through a loading corridor, so what is even the point? pic.twitter.com/HJiPRb2Pp3 (May 13, 2020)

3D modelers should be just as excited by a section later in the video that shows off a high-poly asset, containing "more than 33 million triangles," imported seamlessly into the demo straight from sculpting software ZBrush, with no need to bake in normal maps or author LODs.

Similar to lightmapping, normal maps allow 3D modelers to pre-render detail into a model without needing the engine to render more polygons in real-time, while LODs control how much detail games subtract from a model as the player moves further away. Both of these are lengthy processes (also speaking from personal experience, here) that are nonetheless required to achieve acceptable performance. Being able to prioritize fidelity over optimization will be a workflow game changer.
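To make the LOD idea concrete, here is a minimal sketch of traditional distance-based LOD selection. The distance thresholds and triangle counts are hypothetical values chosen for illustration; they don't come from the demo or from any particular engine.

```python
import bisect

# Hypothetical LOD table: a model switches to a coarser version once the
# camera is farther away than each threshold (distances in meters).
LOD_DISTANCES = [10.0, 30.0, 80.0]
# Triangle count of each hand-authored LOD, from most to least detailed.
LOD_TRIANGLES = [1_000_000, 100_000, 10_000, 1_000]


def select_lod(distance: float) -> int:
    """Return the LOD index to draw for a model at the given camera distance.

    Index 0 is the full-detail model; higher indices are coarser versions.
    """
    return bisect.bisect_right(LOD_DISTANCES, distance)


def triangles_drawn(distance: float) -> int:
    """Triangle count of the LOD chosen for this distance."""
    return LOD_TRIANGLES[select_lod(distance)]


if __name__ == "__main__":
    for d in (5.0, 20.0, 50.0, 500.0):
        print(f"{d:6.1f} m -> LOD {select_lod(d)} ({triangles_drawn(d):,} triangles)")
```

Each entry in that table is a separate version of the model that someone has to create and tune, which is the work the demo suggests Nanite can take off an artist's plate.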

What's even more impressive is when the demo then shows off a room with 500 copies of that same 33 million triangle statue in it, with no discernible drop in performance.
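For a sense of scale, the sketch below tallies that room under the assumption that the 500 statues are instances sharing one copy of the mesh data. The demo doesn't say how the copies are stored, so the instancing model and all names here are ours, and "more than 33 million" is treated as exactly 33 million.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Mesh:
    """Geometry stored once in memory (hypothetical structure)."""
    name: str
    triangle_count: int


@dataclass(frozen=True)
class Instance:
    """A placement of a shared mesh somewhere in the scene."""
    mesh: Mesh
    position: Tuple[float, float, float]


def scene_stats(instances: List[Instance]) -> Tuple[int, int]:
    """Return (unique stored triangles, total source triangles in the scene)."""
    unique_meshes = {inst.mesh for inst in instances}
    unique = sum(mesh.triangle_count for mesh in unique_meshes)
    total = sum(inst.mesh.triangle_count for inst in instances)
    return unique, total


# Lower bound: the video says "more than 33 million triangles" per statue.
statue = Mesh("statue", 33_000_000)
room = [Instance(statue, (float(i), 0.0, 0.0)) for i in range(500)]
unique_tris, total_tris = scene_stats(room)

if __name__ == "__main__":
    print(f"Unique triangles stored: {unique_tris:,}")
    print(f"Source triangles in the room: {total_tris:,}")
```

Under that assumption the room holds 16.5 billion source triangles while storing only 33 million unique ones.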

The demo also shows off fluid dynamics, enhanced audio reverb, improved physics, and a room full of Nanite-powered bug NPCs that move in response to a player-operated light source. But the show-stopper comes at the end, and doesn't require as much technical know-how as the rest.

Showing off a complex series of real-time buildings that stretch into the distance, the demo has the player fly through and interact with them all as they head toward a goal on the horizon. It promises a level of interaction with backgrounds that traditional pre-rendered solutions can't achieve, all while maintaining the same level of detail as everything else in the demo.

Throughout all of this, the demo doesn't appear to drop frames, though some social media users are expressing doubt about UE5's capabilities by calling out the presence of old-school tricks such as the 'loading corridor,' which gates off the player's progress while environments load. Also, the uploaded video only runs at 30 FPS, though it does have 4K support.

For our purposes, what's most interesting here is the showcase of what the PS5's RDNA 2 GPU can support. Aside from what Epic details in the video, we've noticed similarities between this demo and Nvidia's Mesh Shader Asteroids demo from 2018.

The Asteroids demo shows off the Turing GPU architecture's ability to simultaneously render complex scenery and output high framerates. Mesh shaders shift performance bottlenecks off the CPU and onto mesh shading GPU programs, allowing for substantially higher triangle counts by using programmable thread groups, rather than processing each vertex in a scene using a fixed-function pipeline.
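One way to picture those thread groups: mesh shader pipelines typically work on small batches of triangles, often called meshlets, with each batch handled by one group of GPU threads. The sketch below is a naive CPU-side illustration of splitting a triangle list into such batches. The limits of 64 vertices and 126 triangles follow Nvidia's published guidance for Turing, but the greedy algorithm and every name here are our own simplification, not how any engine or driver actually does it.

```python
from typing import List, Set, Tuple

Triangle = Tuple[int, int, int]  # three vertex indices


def build_meshlets(
    triangles: List[Triangle],
    max_vertices: int = 64,
    max_triangles: int = 126,
) -> List[List[Triangle]]:
    """Greedily pack triangles, in order, into batches within both limits."""
    meshlets: List[List[Triangle]] = []
    current: List[Triangle] = []
    current_verts: Set[int] = set()

    for tri in triangles:
        new_verts = set(tri) - current_verts
        too_many_verts = len(current_verts) + len(new_verts) > max_vertices
        too_many_tris = len(current) + 1 > max_triangles
        # Close the current batch if this triangle would overflow it.
        if current and (too_many_verts or too_many_tris):
            meshlets.append(current)
            current, current_verts = [], set()
        current.append(tri)
        current_verts.update(tri)

    if current:
        meshlets.append(current)
    return meshlets


def meshlet_vertices(meshlet: List[Triangle]) -> Set[int]:
    """Unique vertex indices referenced by one batch."""
    return {v for tri in meshlet for v in tri}


# Example input: a strip of 200 triangles sharing vertices with neighbors.
strip = [(i, i + 1, i + 2) for i in range(200)]
strip_meshlets = build_meshlets(strip)

if __name__ == "__main__":
    for n, m in enumerate(strip_meshlets):
        print(f"meshlet {n}: {len(m)} triangles, {len(meshlet_vertices(m))} vertices")
```

Because each batch is self-contained, a GPU program can decide per batch whether to draw, simplify, or skip it, which is where the extra control over geometry comes from.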

That's a lot of words to say "developers will have more control over how GPUs will render their geometry." So, like in the UE5 demo, the Asteroids demo displays a scene with 3.5 trillion source triangles and 50 million drawn triangles. This means RDNA 2 is likely either using mesh shading itself, or a similar technology exposed through the Vulkan API. Either way, it means the technique should see use in future games.

Given the showcase of the PlayStation 5 hardware and UE5, it looks like the Big Navi GPUs and Nvidia GPUs are going to get a workout in the coming years. The new features should drastically improve game development workflows, all while delivering more realistic environments to players. Holding a Master's degree in game design, I've noticed how much detail gets lost in the optimization process, and the thought of just not having to worry about it anymore has me reeling. That is, assuming you can do that and still hit 60 or 120 fps, which the PS5 and Xbox Series X are also promising as key features.

Michelle Ehrhardt is an editor at Tom's Hardware. She's been following tech since her family got a Gateway running Windows 95, and is now on her third custom-built system. Her work has appeared in publications like Paste, The Atlantic, and Kill Screen, just to name a few. She also holds a master's degree in game design from NYU.


deesider

If developers are intending to drop sculpts straight from zbrush into the game - expect new games to top 500GB!

TCA_ChinChin

deesider said: If developers are intending to drop sculpts straight from zbrush into the game - expect new games to top 500GB!

A hilarious dystopia. Imagine using 16 tb hdds just to store your most played games.

Deicidium369

Admin said: Unreal Engine 5 demo showing off what PlayStation 5, Zen 2, and RDNA 2 can do on next-gen console and current PC hardware.

Lol, yeah, no.

AMD RDNA2 ray tracing is done in the cloud.

InvalidError

deesider said: If developers are intending to drop sculpts straight from zbrush into the game - expect new games to top 500GB!

I'm sure they'll come up with some forms of geometry compression to greatly reduce size while retaining most details. 100GB per game is already getting ridiculous IMO.

AlistairAB

This is really cool, a kind of mesh shaders approach using screen space (pixel coverage count) to determine real on the fly dynamic LoD changes? That's what it seems like. And then using fast i/o from the SSD to keep original cinematic LoD models available for those mesh shaders. Combine that with full global illumination, and we didn't even need RT for anything.

azcn2503

This looks great! Whenever RDNA 2 cards drop and a Linux driver becomes available, I'll be upgrading from this GTX 970.

rantoc

According to Sweney/Epic this runs good on a 2070S so a proper wording would been "Put alot of PC to shame"... since if a 2070S runs this good... i wounder what a 2080TI would do for instance... put PS5 to shame?

gggplaya

InvalidError said: I'm sure they'll come up with some forms of geometry compression to greatly reduce size while retaining most details. 100GB per game is already getting ridiculous IMO.

It's definitely going to be much more than 100GB per game on nextgen. This is why Microsoft is going to sell those 1TB SSD cards with their next gen console. The cards connect to the internal PCIe bus directly, so it's NVMe.

Nick_C

Deicidium369 said: AMD RDNA2 ray tracing is done in the cloud.

I'd be interested in a link to a source for that information.

jeremyj_83

Deicidium369 said: Lol, yeah, no. AMD RDNA2 ray tracing is done in the cloud.

Pushing the idea that RDNA2 does ray tracing in the cloud again. That slide that you linked a month or so ago disproves your statement. When you look at what it says, under Hardware it states Next Gen RDNA, that is most likely RDNA2 so the ray tracing is done there. Later on it says it will be cloud based which will free up your resources for other things.