Newly Revealed RISC-V Vector Unit Could Be Used for AI, HPC, GPU Applications

Semidynamics announces high-performance RISC-V IP.

Semidynamics has introduced one of the industry's first RISC-V vector units that could be used for highly parallel processors, such as those used for artificial intelligence (AI), high-performance computing (HPC), and even graphics processing if equipped with appropriate special-purpose IP. The announcement marks an important milestone in the development of the RISC-V ecosystem.

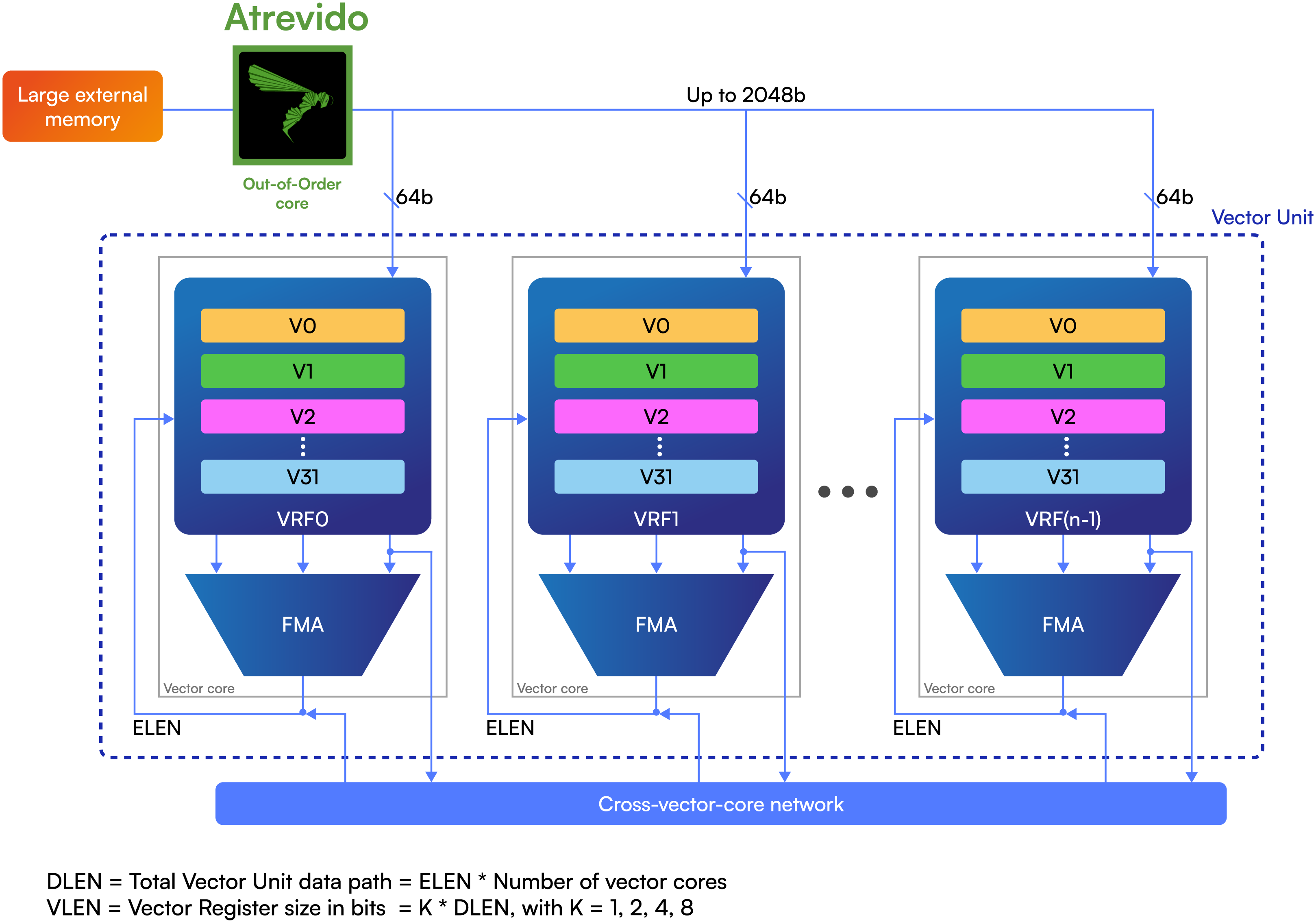

Semidynamics' vector unit is fully compatible with the RISC-V Vector Specification 1.0 and offers a plethora of additional, adaptable features that enhance its data processing capabilities. The VU is made up of several 'vector cores', which are comparable to GPU cores from AMD, Intel, and Nvidia, and are designed to execute multiple computations simultaneously. Each vector core is equipped with arithmetic units that can perform operations such as addition, subtraction, fused multiply-add, division, square root, and logical operations.

The vector core developed by Semidynamics can be customized to support various data types, including FP64, FP32, FP16, BF16, INT64, INT32, INT16, or INT8, depending on the specific needs of the customer's application. Customers can also choose the number of vector cores to be incorporated into the Vector Unit, with options of 4, 8, 16, or 32 cores, providing a broad spectrum of power, performance, and area trade-offs.

Semidynamics' Vector Unit features a high-speed network that connects all vector cores, facilitating data shuffling for specific RISC-V instructions. Uniquely, Semidynamics allows customization of the vector register bit size (VLEN), offering 2X, 4X, and 8X ratios of VLEN to the total data path width (DLEN) in addition to the standard 1X. When VLEN is larger than DLEN, vector operations take multiple cycles, which can help manage memory latencies and reduce power use.
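The relationship between VLEN and DLEN can be illustrated with a rough model. The sketch below is not Semidynamics' implementation: it assumes a hypothetical DLEN of 512 bits (the actual data path width is not stated here), a full-length vector operation, and no pipelining or startup overhead. The function names are made up for illustration.

```python
def cycles_per_vector_op(vlen_bits: int, dlen_bits: int) -> int:
    """Cycles needed to push one full vector register through the data path."""
    if vlen_bits <= 0 or dlen_bits <= 0 or vlen_bits % dlen_bits != 0:
        raise ValueError("VLEN must be a positive multiple of DLEN")
    return vlen_bits // dlen_bits


def elements_per_register(vlen_bits: int, element_bits: int) -> int:
    """Number of elements of a given width that fit in one vector register."""
    return vlen_bits // element_bits


# Hypothetical data path width; the real value depends on the configuration.
DLEN_BITS = 512

for ratio in (1, 2, 4, 8):  # the VLEN:DLEN ratios named in the article
    vlen = ratio * DLEN_BITS
    print(
        f"{ratio}X: VLEN={vlen} bits, "
        f"{elements_per_register(vlen, 32)} FP32 elements, "
        f"{cycles_per_vector_op(vlen, DLEN_BITS)} cycle(s) per full-length op"
    )
```

In this simplified model, a single instruction at the 8X ratio keeps the data path busy for eight cycles, which is the slack that can help cover memory latency.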

"This unleashes the ability for the Vector Unit to process unprecedented amounts of data bits," said Roger Espasa, CEO and founder of Semidynamics. "And to fetch all this data from memory, we have our Gazzillion technology that can handle up to 128 simultaneous requests for data and track them back to the correct place in whatever order they are returned. Together our technologies take RISC-V to a whole new level with the fastest handling of big data currently available that will open up opportunities in many application areas of High-Performance Computing such as video processing, AI and ML."
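A rough way to see why the number of in-flight requests matters is Little's law: sustained bandwidth is at most the bytes in flight divided by the memory latency. The sketch below is a back-of-the-envelope estimate, not a description of how Gazzillion works. Only the 128-request figure comes from the quote above; the 64-byte request size and the latency values are assumptions chosen for illustration.

```python
def max_bandwidth_gb_s(outstanding_requests: int,
                       bytes_per_request: int,
                       latency_ns: float) -> float:
    """Upper bound on sustained bandwidth from Little's law.

    Bytes per nanosecond is numerically equal to GB/s (10^9 bytes per second).
    """
    return outstanding_requests * bytes_per_request / latency_ns


REQUESTS_IN_FLIGHT = 128   # figure quoted by Semidynamics
LINE_BYTES = 64            # assumption: one 64-byte cache line per request

# Assumed latencies: local DRAM-like, far memory, and a very slow link.
for latency_ns in (100, 300, 1000):
    bw = max_bandwidth_gb_s(REQUESTS_IN_FLIGHT, LINE_BYTES, latency_ns)
    print(f"latency {latency_ns:>4} ns -> at most {bw:.2f} GB/s per core")
```

The bound scales linearly with the number of outstanding requests, so a core that can track more misses tolerates proportionally longer latency before its bandwidth drops.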

Semidynamics says that its Vector Unit can be paired with its Atrevido out-of-order core (and upcoming in-order cores) to build CPUs or other applications that need both vector processing and general-purpose processing.

"Our recently announced Atrevido core is unique in that we can do 'Open Core Surgery' on it," said Espasa. "This means that, unlike other vendors’ cores that are just configurable from a set of options, we actually open up the core and change the inner workings to add features or special instructions to create a totally bespoke solution. We have taken the same approach with our new Vector Unit to perfectly complement the ability of our cores to rapidly process massive amounts of data."

Semidynamics is one of only a few companies developing IP for AI and HPC applications. In addition to SiFive, only Tenstorrent is developing high-performance RISC-V IP that can be used to build processors and AI accelerators. Unlike Semidynamics, however, Tenstorrent also offers its own hardware processors, not just licensable IP.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

bit_user

"The VU is made up of several 'vector cores', which are comparable to GPU cores from AMD, Intel, and Nvidia"

Using a general-purpose ISA in a GPU only makes sense for specialized applications. If your goal is to build a highly-scalable accelerator which can effectively compete with purpose-built GPUs, then you will suffer the same fate as Xeon Phi. A general-purpose ISA drags in too much overhead that purebred GPUs don't have to deal with.

Moreover, if they're trying to efficiently tackle AI workloads, then they'll need matrix-multiply hardware. Vector-level acceleration is no longer enough.

"only Tenstorrent is developing high-performance RISC-V IP that can be used to build processors and AI accelerators."

Ah, but they didn't get rid of their Tensix cores. Those are the main workhorse of Tenstorrent's accelerators. From the linked article:

"In addition to a variety of RISC-V general-purpose cores, Tenstorrent has its proprietary Tensix cores tailored for neural network inference and training. Each Tensix core comprises of five RISC cores, an array math unit for tensor operations, a SIMD unit for vector operations, 1MB or 2MB of SRAM, and fixed function hardware for accelerating network packet operations and compression/decompression."

In addition to the matrix/tensor unit, the local SRAM is also key. That's something which doesn't fit in well with general-purpose CPUs. They can have cache, but cache has additional latencies and worse energy efficiency.

Metal Messiah.

So I assume this Gazzillion technology should remove the latency issues that can occur when using CXL technology to enable far away memory to be accessed at the supercharged rates that it was designed to deliver.

According to the company, the Gazzillion technology was specifically designed for Recommendation Systems that are a key part of Data Centre Machine Learning.

So by supporting over a hundred misses per core, a SoC can be designed which can deliver highly sparse data to the compute engines without a large silicon investment. The core can also be configured from 2-way up to 4-way to help accelerate the not-so-parallel portions of Recommendation Systems.

With its complete MMU support, Atrevido should also be Linux-ready, while supporting cache-coherent, multi-processing environments from two up to hundreds of cores.

bit_user

Metal Messiah. said: "So I assume this Gazzillion technology should remove the latency issues that can occur when using CXL technology to enable far away memory to be accessed at the supercharged rates that it was designed to deliver."

They said:

"to fetch all this data from memory, we have our Gazzillion technology that can handle up to 128 simultaneous requests for data and track them back to the correct place in whatever order they are returned."

So, two questions I have:

Is this specific to the core or the memory controller?

How does it compare with the OoO load queues in the latest x86 and ARM CPUs?

I'm guessing they mean the core. I'll just pick one point of comparison, but data on Zen 4 and recent ARM cores probably shouldn't be too hard to find:

"Intel claims the load queue has 240 entries in SPR. I assume the same applies to Golden Cove. We measured 192 entries."

Source: https://chipsandcheese.com/2023/01/15/golden-coves-vector-register-file-checking-with-official-spr-data/

They have more to say about it, if you're interested. However, it tells us that if there's anything special about "Gazzillion technology" it might be that it's large relative to the size & complexity of their core - not in the absolute sense.

And no, I don't think it's nearly enough to hide CXL latency. GPUs use SMT for latency-hiding, which I think is the only approach with a chance of hiding that much latency. For good throughput, most of your data shouldn't be on the remote side of a CXL link. A quick glance at GPU memory bandwidth tells you CXL doesn't have nearly enough to act as a substitute. Gaming GPUs have up to 1 TB/s; datacenter GPUs have more like 3.2 TB/s. A 16-lane CXL link only gives you 64 GB/s - off by more than an order of magnitude.
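The size of that gap can be checked with quick arithmetic. The snippet below uses only the round figures quoted in this comment, taking 1 TB/s as 1000 GB/s; the names are illustrative.

```python
def bandwidth_ratio(gpu_gb_s: float, link_gb_s: float) -> float:
    """How many times more bandwidth local GPU memory has than the link."""
    return gpu_gb_s / link_gb_s


CXL_X16_GB_S = 64.0  # 16-lane CXL link, figure from the comment

# Round figures from the comment, converted from TB/s to GB/s.
gpu_memory_gb_s = {
    "gaming GPU": 1000.0,
    "datacenter GPU": 3200.0,
}

for name, bandwidth in gpu_memory_gb_s.items():
    ratio = bandwidth_ratio(bandwidth, CXL_X16_GB_S)
    print(f"{name}: {ratio:.1f}x the bandwidth of a x16 CXL link")
```

Both ratios come out above 10x, which matches the "more than an order of magnitude" estimate.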

Metal Messiah. said: "According to the company, the Gazzillion technology was specifically designed for Recommendation Systems that are a key part of Data Centre Machine Learning."

That's called marketing. ; )

bit_user

PlaneInTheSky said: "Canonical was smart developing Ubuntu for Risc."

Canonical is a parasite. They mostly just ride the coattails of Debian and don't contribute nearly as many upstream patches or projects as Red Hat or SUSE.

Besides kernel contributions, most of the userspace Linux ecosystem (GNOME, systemd, pipewire, NetworkManager, etc.) is being developed by Red Hat. I don't love everything they're doing, but there are too few others.

IIRC, the only thing Canonical recently did was their own container packaging (Snap) that's incompatible with other efforts (Flatpak, AppImage).

bit_user said: "So, two questions I have: Is this specific to the core or the memory controller?"

I guess the core, or maybe even both! OK, let me go through that other link which you gave as well.

If Gazzillion technology can handle highly sparse data with the long latencies of the high-bandwidth CXL memory systems that are typical of current machine learning applications, then it could add tiny buffers in key places so that cache lines keep the core running at full speed rather than waiting for data.

Btw, Atrevido supports a 64-bit native data path and 48-bit physical address paths.

bit_user said: "That's called marketing. ; )"

Of course! Or more like a promotion. 😃