OEMs Target Miners with RTX 2060 12GB, But Gamers Need It More

At least one AIB said their RTX 2060 12GB cards are mostly for miners.

Officially, it appears the GeForce RTX 2060 12GB cards are going on sale today. And by "on sale" we mean "sold out unless you're a miner." Perhaps that's a bit unfair, but we asked around in advance of the rumored launch (a launch Nvidia basically didn't comment on or provide any details about) and were repeatedly told that the various graphics card companies weren't planning on sampling the RTX 2060 12GB to reviewers. That's fine on one level, as it's not like this is going to suddenly jump into our list of the best graphics cards, but the whole situation is a bit weird. But then things took a turn for the worse.

One OEM (who shall remain nameless) specifically told me, "This will be more of a mining focused card so HQ is not going to do a big media push on it." Wait, what? A mining-focused RTX 2060 with 12GB of VRAM? How in the hell is that a mining-focused card?

Let me explain. We've tested the best GPUs for mining, which mostly means seeing how the various GPUs perform at Ethereum mining. That's still the most profitable option, especially with the latest delay to the proof-of-stake transition. Ethereum hashing ends up being highly dependent on memory bandwidth: a GPU with twice the memory bandwidth can generally do the ethash calculations twice as fast. Doubling the VRAM capacity does nothing for bandwidth, which means the RTX 2060 12GB absolutely shouldn't be a miner-focused card, unless the AIBs know they can get more money for it by selling direct to miners.

The original RTX 2060 comes with 6GB of GDDR6 memory clocked at 14Gbps. Doubling the memory might mean there are a few mining algorithms that would be able to run better... but right now Ethereum only needs a bit more than 4GB of VRAM, and the size of the DAG (directed acyclic graph) at the heart of ethash won't exceed 6GB until around May 2023. By that time, I sincerely hope Ethereum will finally switch to proof of stake, but given the repeated delays maybe that won't happen.

Still, 6GB VRAM should be sufficient for at least two more years of mining. Doubling that to 12GB won't really help matters, and what's more, the RTX 2060 isn't even particularly fast at mining. After tuning, we managed about 33 MH/s. You know what can also do 33 MH/s? The GTX 1660 Super. That's because it has the same 192-bit memory interface.
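The bandwidth argument comes down to simple arithmetic. Here's a rough sketch of it, not a benchmark: the helper name is made up for illustration, the RTX 2060 figures (192-bit, 14Gbps) come from the text above, the 12GB model is assumed to keep that same configuration, and the 14Gbps speed for the GTX 1660 Super comes from Nvidia's published specs rather than from our testing.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# (bus width in bits, per-pin data rate in Gbps)
# Assumption: the 12GB RTX 2060 uses the same 192-bit, 14Gbps setup as the 6GB card.
CARDS = {
    "RTX 2060 (6GB or 12GB)": (192, 14.0),
    "GTX 1660 Super": (192, 14.0),
}

BANDWIDTH = {name: memory_bandwidth_gbs(*spec) for name, spec in CARDS.items()}

for name, gbs in BANDWIDTH.items():
    print(f"{name}: {gbs:.0f} GB/s")
```

Both cards land on the same peak bandwidth, which lines up with them posting roughly the same tuned hashrate. Adding more VRAM changes none of the inputs to that calculation.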

If you're using standard mining software, the hashrate limiter on the RTX 3060 GPUs might mean you only get about 33 MH/s as well (as opposed to the 48 MH/s you could get without LHR). That still makes the RTX 2060 12GB at best a match for the RTX 3060 LHR when it comes to mining. It also uses more power, and it should cost more than the RTX 2060 6GB cards that already exist while providing no tangible benefit.

But you know who might actually want an RTX 2060 12GB? Gamers. Sure, that's partly because the cards that they'd really like to buy, like the RTX 3060 and RX 6700 XT, are all woefully overpriced or sold out. But based on our GPU benchmarks hierarchy, at least in situations where the RTX 2060 doesn't run out of VRAM, the RTX 3060 is only about 20% faster. Doubling the VRAM to 12GB means it would be pretty much universally 15–20% slower (sometimes less), presumably at a lower price. That actually sounds pretty good to me right now, and also makes the whole situation even more troubling.
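A quick note on the percentages, since "X% faster" and "X% slower" aren't interchangeable. The sketch below (the function name is hypothetical, purely for illustration) converts one into the other.

```python
def slower_pct_from_faster_pct(faster_pct: float) -> float:
    """If card A is `faster_pct` percent faster than card B,
    return how many percent slower B is than A."""
    return (1 - 1 / (1 + faster_pct / 100)) * 100


for faster in (15, 20):
    slower = slower_pct_from_faster_pct(faster)
    print(f"{faster}% faster -> {slower:.1f}% slower")
```

A card that's 15–20% faster leaves the other card roughly 13–17% slower, so the "about 20% faster" and "15–20% slower" figures describe about the same gap.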

There are credible rumors that we'll see RTX 3050 desktop cards sometime in January 2022. Unlike the mobile RTX 3050 and RTX 3050 Ti, the desktop cards will purportedly still use GA106 silicon instead of GA107. Except they're rumored to have 8GB of VRAM, which means they might be harvested GA106 with only four of the memory channels active (out of a potential six), giving just a 128-bit memory interface. Alternatively, they might have 1GB chips and a 256-bit interface, but that would also mean a more expensive PCB (printed circuit board), which goes against the idea of making a budget GPU.
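To see why 8GB points to one of those two layouts, here's a minimal sketch of the arithmetic. It assumes one GDDR6 chip per 32-bit channel (no clamshell mode), and the function name is just for illustration. These are the rumored configurations, not confirmed specs.

```python
from typing import Tuple

CHANNEL_WIDTH_BITS = 32  # assumption: one GDDR6 chip per 32-bit channel


def memory_config(active_channels: int, chip_capacity_gb: int) -> Tuple[int, int]:
    """Return (bus width in bits, total VRAM in GB) for a given chip layout."""
    bus_width = active_channels * CHANNEL_WIDTH_BITS
    total_vram = active_channels * chip_capacity_gb
    return bus_width, total_vram


# Option 1: four active channels with 2GB chips
print(memory_config(4, 2))
# Option 2: eight channels with 1GB chips
print(memory_config(8, 1))
```

Both options reach 8GB, but the first gives a 128-bit bus and the second a 256-bit bus, which needs more memory chips and more PCB traces.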

Anyway, based on the rumored specs, an RTX 3050 desktop card would very likely deliver performance that's (wait for it!) about 15–20% slower than the RTX 3060. Actually, if it's a 128-bit interface, it might be more like 20–30% slower, meaning the RTX 2060 12GB would actually be faster than the future RTX 3050.

Ultimately, what we really need right now is more graphics cards in the hands of gamers. But how do you tell the difference between a gamer and a miner, or a gamer and a scalper? Unless someone admits to being one of the latter, about the only thing a company can really do is to try and make it more difficult for miners and scalpers to buy cards, but why should they do that if they can make more money off of such customers?

We're still trying to acquire an RTX 2060 12GB card for review. Performance should land a bit above the RTX 2060 6GB and a bit below the RTX 2060 Super, except in the select situations (e.g., Battlefield 2042) where 8GB of VRAM isn't quite sufficient for certain settings. Now granted, you could just turn down a few settings, but it is possible to find games and settings where performance of the 6GB cards drops off a cliff.

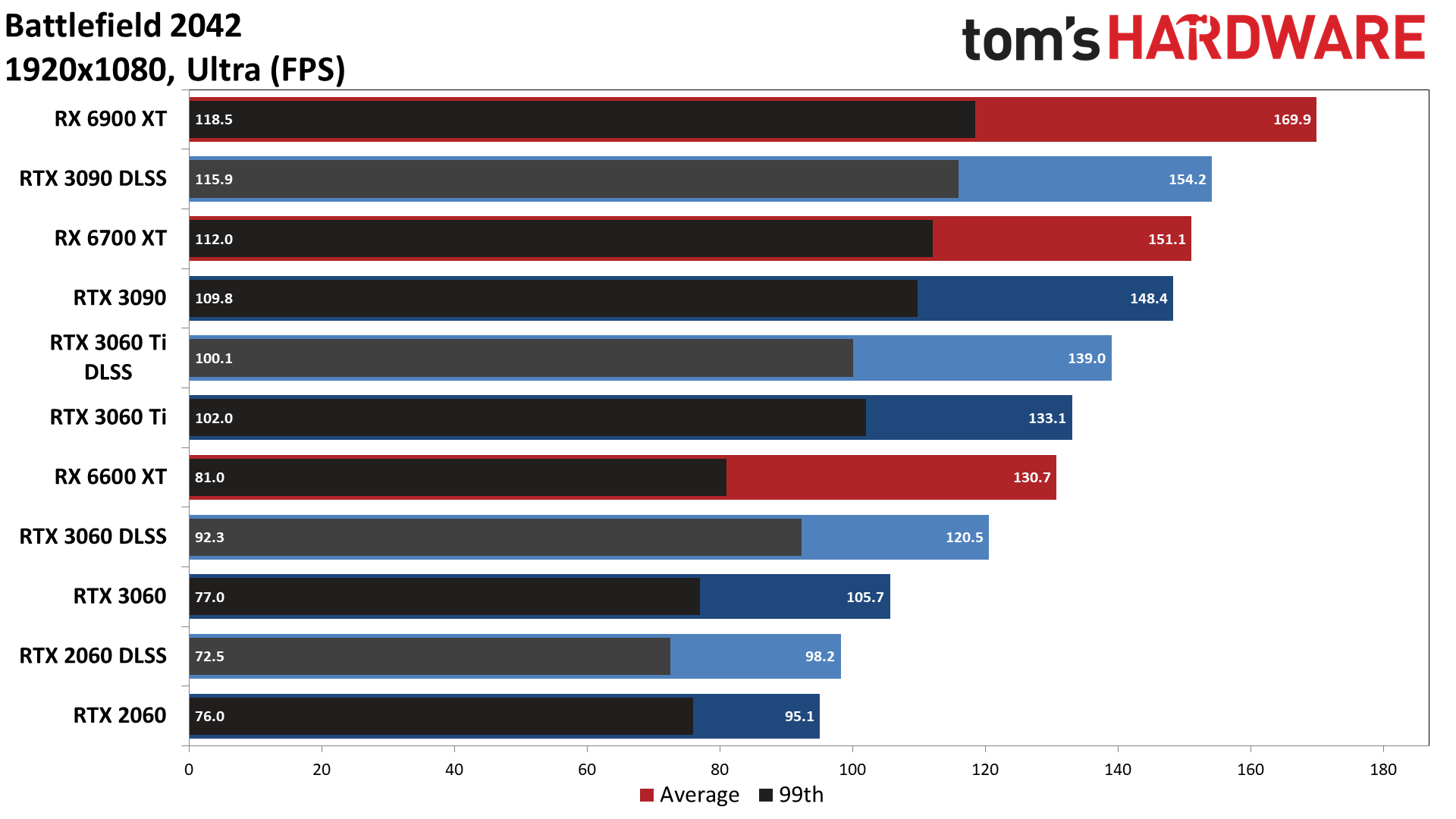

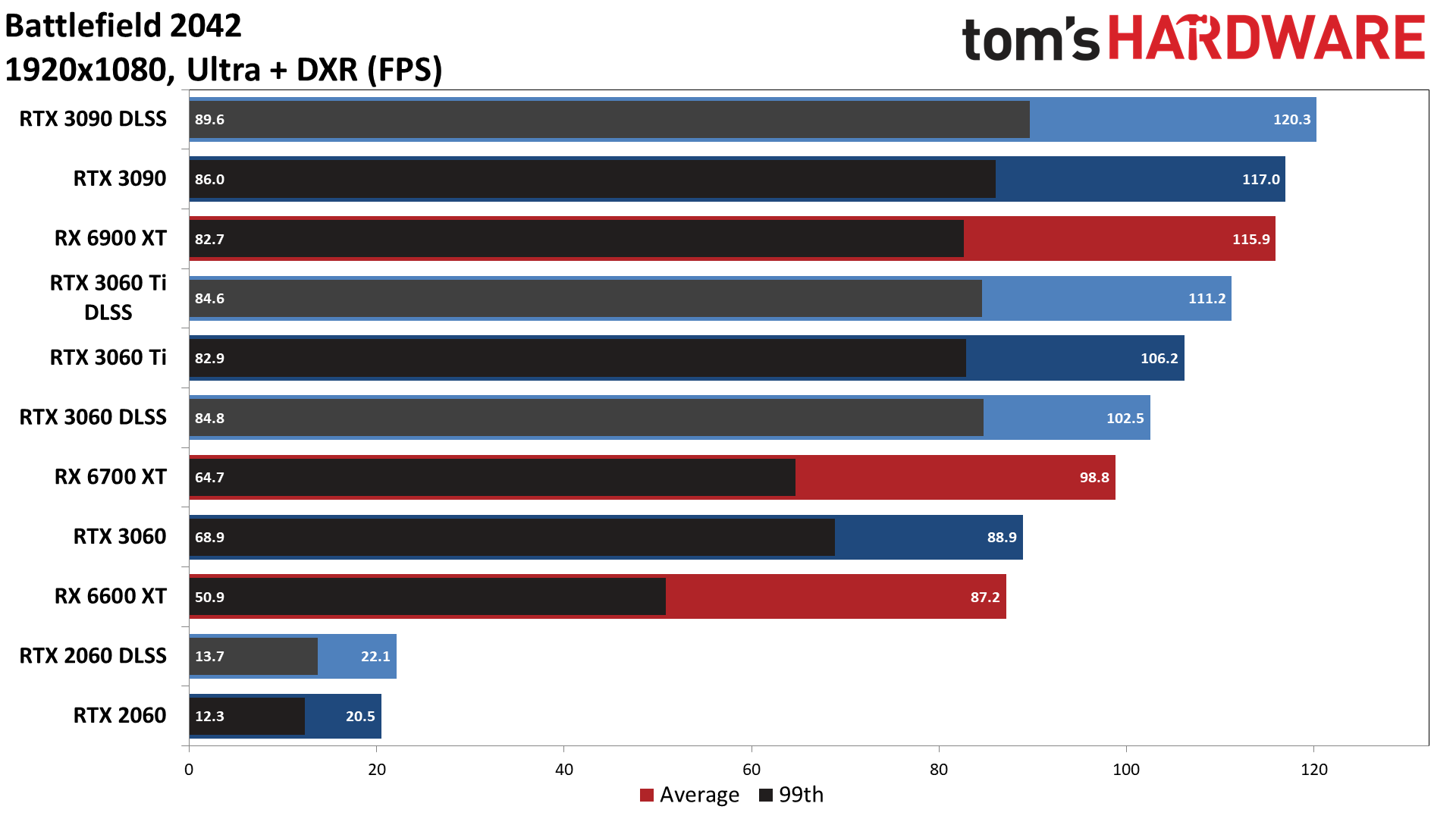

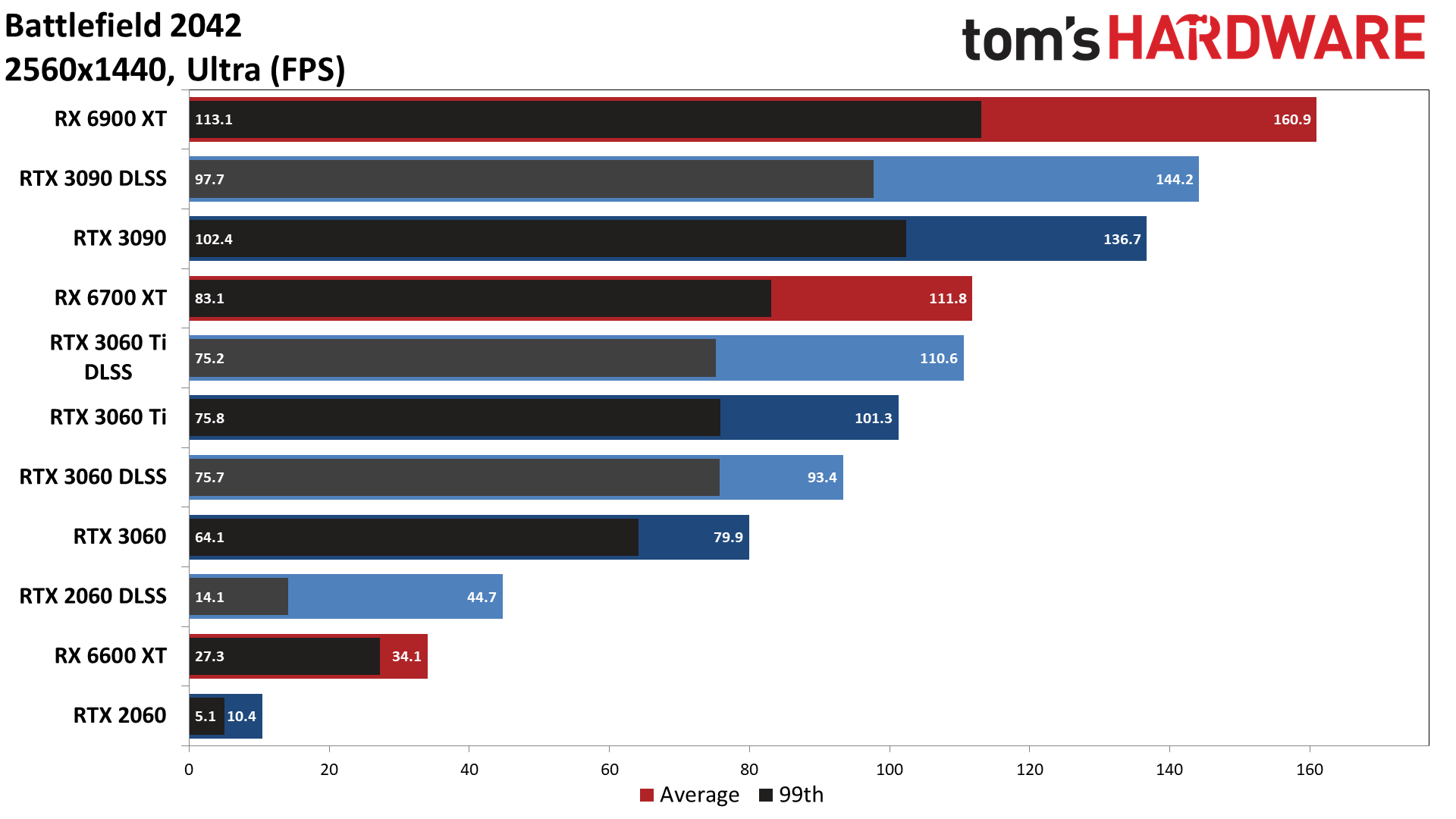

Pay specific attention to the RTX 3060 and RTX 2060 in these charts.


Based on my testing, at 1080p ultra, the RTX 3060 got 106 fps and the RTX 2060 got 95 fps. Turn on DXR and the 3060 performance drops about 16% to 89 fps, but the RTX 2060 drops nearly 80% to just 20.5 fps. If the 2060 had 12GB VRAM, it should still only be about 10–15% slower, meaning around 75–80 fps. Not even DLSS can help, because the problem is that all the RT data consumes memory along with the textures.
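For anyone who wants to check the math, here's a small sketch using the numbers above. The function names are made up for illustration, and the 12GB projection is an estimate based on the 10–15% gap, not a measured result.

```python
from typing import Tuple


def percent_drop(before_fps: float, after_fps: float) -> float:
    """Percentage of performance lost going from before_fps to after_fps."""
    return (before_fps - after_fps) / before_fps * 100


def projected_range(reference_fps: float, slower_low_pct: float,
                    slower_high_pct: float) -> Tuple[float, float]:
    """Estimated fps range for a card that is slower_low..slower_high percent
    slower than a reference card running at reference_fps."""
    low = reference_fps * (1 - slower_high_pct / 100)
    high = reference_fps * (1 - slower_low_pct / 100)
    return low, high


# Measured: RTX 2060 6GB at 1080p ultra, without and with DXR
drop_2060 = percent_drop(95, 20.5)
print(f"RTX 2060 6GB drop with DXR: {drop_2060:.1f}%")

# Estimate: a 2060 12GB that is 10-15% slower than the 3060's 89 fps with DXR
low, high = projected_range(89, 10, 15)
print(f"Projected RTX 2060 12GB with DXR: {low:.1f} to {high:.1f} fps")
```

The measured drop works out to a bit over 78%, and the projection lands at roughly 76 to 80 fps.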

Okay, ray traced ambient occlusion isn't really necessary, but it's not just RTAO. Battlefield 2042 runs at 80 fps at 1440p ultra (without RTAO) on the 3060, but 10 fps on the 2060 6GB with those same settings. Normally, when VRAM capacity isn't the bottleneck, the 3060 is only about 15–20% faster than the RTX 2060, meaning 1440p with DLSS Quality should have allowed the 2060 to run 1440p ultra at 75–80 fps, but instead it dropped to 45 fps.

And again, I'm not just pointing at Battlefield 2042. There are other games that exhibit similar behavior (Godfall immediately springs to mind, along with Microsoft Flight Simulator), and with the latest consoles having more RAM, the number of games that will push well beyond 6GB is only going to grow. A 15% drop in performance, from a 3060 to a 2060 12GB, is not insurmountable. A 50–80% drop, though? Yeah, you'll need to do a lot more than just tweak a couple of settings.

The bottom line is that the RTX 2060 remains a very competent gaming GPU, and with 12GB it eliminates one of its biggest bottlenecks. In a time of GPU shortages, and with GPU prices often inflated by 50–100%, having any decent option for gamers would be better than nothing. The 2060 12GB won't ever be faster than an RTX 3060, but assuming the RTX 3050 ships with a 128-bit memory interface, I will be very surprised if the RTX 2060 12GB doesn't end up being faster. How much will either card cost, at retail? That remains the $500 question.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

-

-Fran- Thanks for writing about this.

They (nVidia and partners) trying to hide the bleeding dagger, but the floor is already splattered and red. I hope more people realizes that Companies are not your darlings nor your friends. Hold them accountable and don't forget their transgressions.

Regards. -

Augusstus If this is true and not just a made up clickbait triggering article, then at least name said company so we can decide who to boycott.

Mod Edit - Language -

Eximo I assumed these cards were mostly going to end up in OEM systems anyway. Not directly on the market. -

cryoburner

Augusstus said: If this is true and not just a made up clickbait triggering article, then at least name said company so we can decide who to boycott.

Probably all of them, even if only one admitted it. I don't see why they would want to hurt relations with a company they are asking to send them review samples, just because they provided them with a bit of additional information.

Though really, it makes perfect sense that they wouldn't sample these to reviewers. The 2060 is a nearly 3 year old card at this point, so there isn't going to be a big marketing push for it. And it will most likely perform very similar to the existing 2060 in nearly all of today's games that don't need the extra VRAM at the resolutions a 2060 can handle. The extra VRAM was most likely just added so that they could justify slapping a higher MSRP on the card, more than anything. -

Endymio --"One OEM (who shall remain nameless) specifically told me, "This will be more of a mining focused card so HQ is not going to do a big media push on it." Wait, what? A mining-focused RTX 2060 with 12GB of VRAM? How in the hell is that a mining focused card? " --

I'll translate for you, Jarred. These cards are, like nearly all other products, going to be sold for what the market will bear, rather than the "suggested" MSRP. The OEM knows the reaction from the gamer market will be to whine, complain, screech, browbeat, and threaten them, whereas miners will simply silently fork over payment. If you doubt this, read the above posts ... or the depressingly similar entitlement-mentality posts of prior threads.

Any gamers who don't like the situation -- you have only yourselves to blame. -

escksu

Augusstus said: If this is true and not just a made up clickbait triggering article, then at least name said company so we can decide who to boycott.

Mod Edit - Language

Boycott? It doesn't work. There are just so few GPU manufacturers.... And then, all are the same. If gamers decide to boycott a particular brand, then miners will be more than happy to snap up the additional cards. -

escksu

Endymio said: --"One OEM (who shall remain nameless) specifically told me, "This will be more of a mining focused card so HQ is not going to do a big media push on it." Wait, what? A mining-focused RTX 2060 with 12GB of VRAM? How in the hell is that a mining focused card? " --

I'll translate for you, Jarred. These cards are, like nearly all other products, going to be sold for what the market will bear, rather than the "suggested" MSRP. The OEM knows the reaction from the gamer market will be to whine, complain, screech, browbeat, and threaten them, whereas miners will simply silently fork over payment. If you doubt this, read the above posts ... or the depressingly similar entitlement-mentality posts of prior threads.

Any gamers who don't like the situation -- you have only yourselves to blame.

Yeah. I have to remind everyone that this is a free market and a gaming card is not considered an essential item. This means prices are determined by supply and demand. Be it a miner or gamer, they are just people who wants these card for their own purpose and you cannot say who should have it. So, yes, you have only yourselves to blame. -

Sleepy_Hollowed Video card manufacturers are never going to feel any hurt until crypto mining stops, but the video game industry for PCs is going to get hurt pretty bad soon if this keeps up.

I mean, good, since I’d rather Indies with low GPU requirements get all the money, but it’s going to be a huge impact, this is just not sustainable.