Gamer Pairs RTX 4070 with Pentium. DLSS 3 Makes it Playable

Frame generation takes a load off the CPU.

When you're building a PC for gaming, you want to put a lot of your budget into buying one of the best graphics cards, but you need to save a few dollars for a CPU that's capable of keeping up. Normally, you wouldn't pair a $599 GPU with an $89 CPU, because the processor would hold performance back.

However, in the interest of science, YouTuber and gamer RandomGaminginHD put a new RTX 4070 into a test system alongside a Pentium Gold G7400, and the results were surprisingly playable, thanks mostly to the card's DLSS 3 frame generation feature. You can see the output in detail in the video below.

The Pentium Gold G7400 CPU features just two physical cores and four threads total with a base frequency of 3.7 GHz. The chip, which currently goes for $89 in the U.S., launched in 2022 and runs in the same LGA 1700 socket as an Intel 12th or 13th Gen Core CPU. So it's not an old product, just a really cheap one.

Launched just last week, the RTX 4070 uses an AD104 GPU with 5,888 GPU cores, 184 Tensor Cores and a boost clock of 2,475 MHz, along with 12GB of VRAM. In our review of the RTX 4070, we noted that the card offers excellent ray tracing and AI for a fairly reasonable (by today's standards) $599.

RandomGaminginHD begins his video by showing some games that run decently with this fast GPU / slow CPU combo and no frame generation. He demos Forza Horizon 5 running at 1440p ultra with an 83 fps average and a 44 fps 1% low. Red Dead Redemption 2 at 1440p Console Quality settings averages 64 fps with a 35 fps 1% low. Many gamers would argue that any frame rate above 30 fps is playable, while 60 fps is the minimum for a smooth experience.

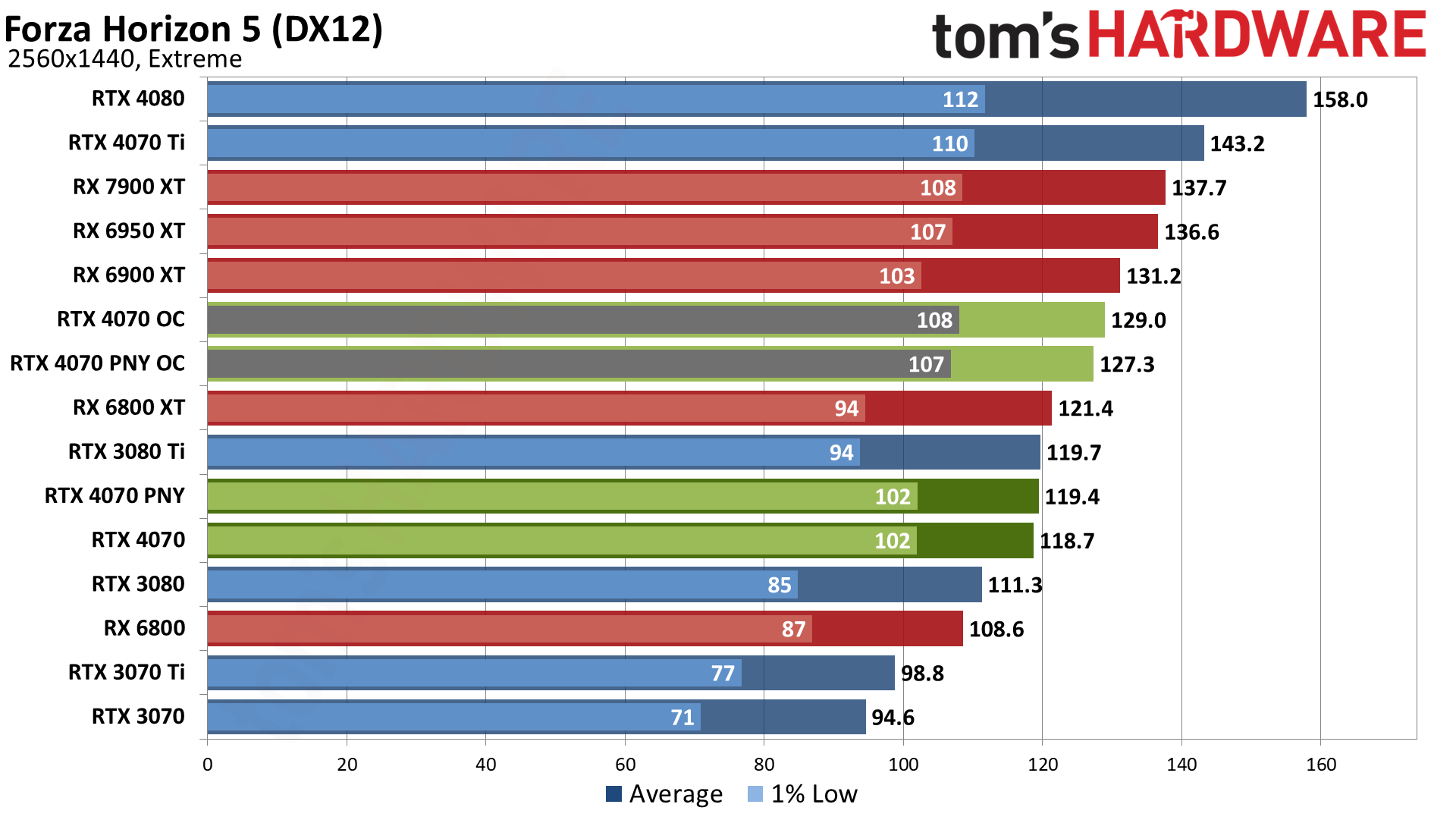

When we tested an RTX 4070 with a Core i9-13900K CPU, which ensures that there is no CPU bottleneck, we got much higher numbers. Forza Horizon 5 at 1440p Extreme (higher than ultra) ran at an average of 118.7 fps with a 102 fps 1% low. Red Dead Redemption 2 at 1440p Max settings played at an average of 85.6 fps with a 1% low of 66 fps.
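As a rough illustration of the gap, the short Python sketch below expresses the Pentium results as a percentage of the Core i9 results, using only the figures quoted above. The names `results` and `retained` are made up for this example, and the two test runs used different quality presets (ultra vs. Extreme, Console Quality vs. Max), so treat the output as a ballpark comparison rather than a controlled measurement.

```python
# Frame rates quoted in the article as (average fps, 1% low fps).
# Assumption: the runs are compared directly even though the quality
# presets differ, so the percentages are only a rough guide.
results = {
    "Forza Horizon 5": {"pentium": (83.0, 44.0), "i9": (118.7, 102.0)},
    "Red Dead Redemption 2": {"pentium": (64.0, 35.0), "i9": (85.6, 66.0)},
}


def retained(pentium_fps, i9_fps):
    """Return the Pentium frame rate as a percentage of the Core i9 frame rate."""
    return 100.0 * pentium_fps / i9_fps


for game, r in results.items():
    avg = retained(r["pentium"][0], r["i9"][0])
    low = retained(r["pentium"][1], r["i9"][1])
    print(f"{game}: {avg:.0f}% of the average fps, {low:.0f}% of the 1% low")
```

In both games the 1% lows fall further than the averages, which is consistent with the CPU being the limiting factor in the most demanding moments.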

However, RandomGaminginHD shows that many games are more CPU-dependent and will give you an unplayable experience with a Pentium CPU. For example, he demos Kingdom Come: Deliverance running at an average of 45 fps with a 1% low of just 10 fps. At that low rate, the game would look like a slideshow.


Nvidia's RTX 4000 series GPUs, including the RTX 4070, are the first to feature the company's DLSS 3 (Deep Learning Super Sampling) technology and its Optical Multi Frame Generation feature, which uses AI to create additional frames using the card's tensor cores. Because all of this processing takes place on the GPU, frame generation should help with CPU-limited games.

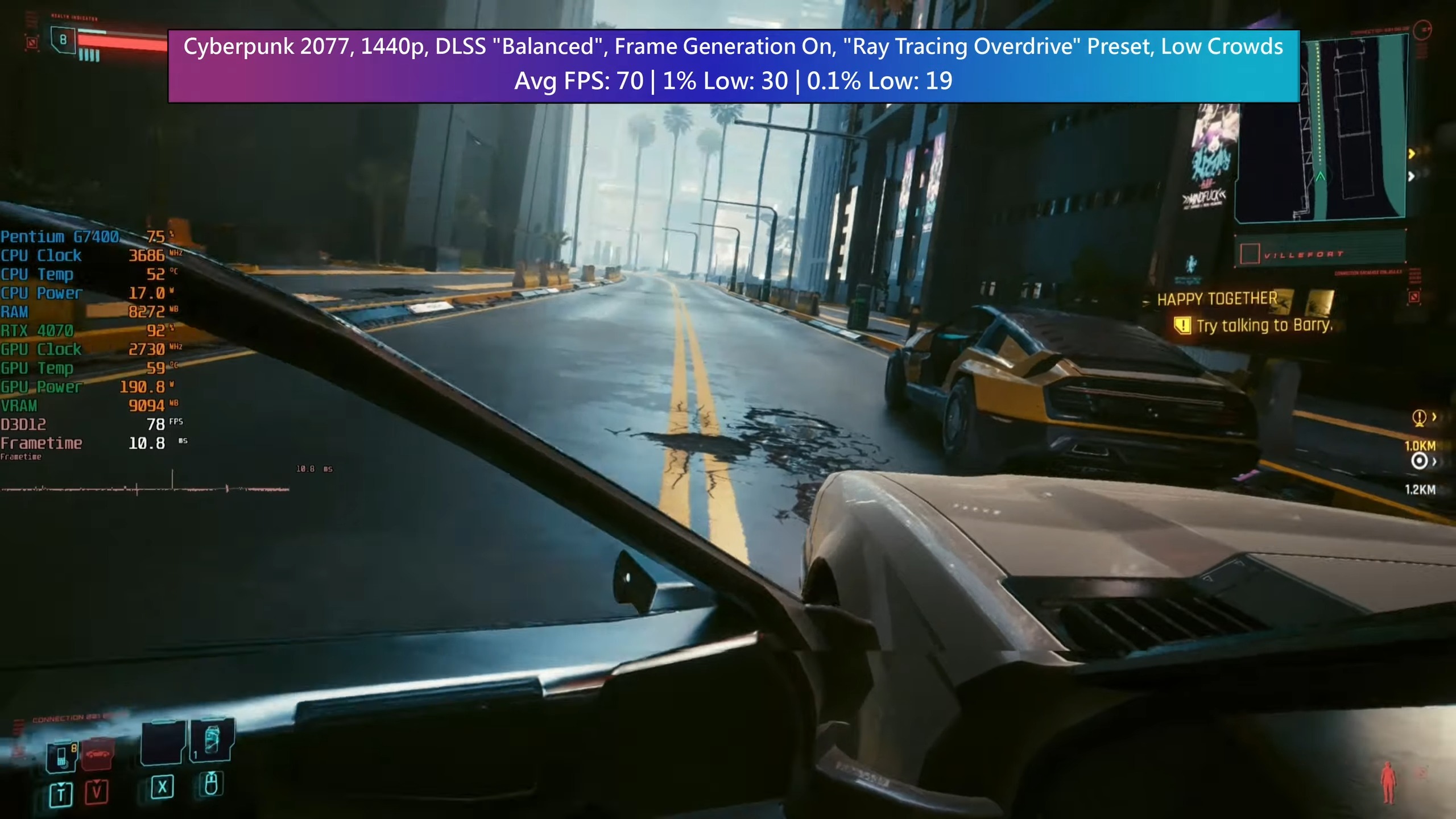

To see how much of a difference frame generation makes for a low-performance CPU, RandomGaminginHD showed Cyberpunk 2077 playing at 1440p with ray tracing overdrive and low crowds. Without frame generation, the game ran at an average frame rate of 43 fps with an unplayable 1% low of 25 fps. However, with frame generation on, those numbers jumped up to 70 fps average with a 30 fps 1% low.
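To put those Cyberpunk 2077 numbers in perspective, here is a minimal Python sketch that converts the quoted frame rates into frame times and percentage uplift. The helper names `frame_time_ms` and `uplift_percent` are hypothetical, chosen only for this example. Note that the frame time derived from a displayed frame rate says nothing about input latency, since generated frames are inserted between frames the game engine actually rendered.

```python
def frame_time_ms(fps):
    """Average time between displayed frames, in milliseconds."""
    return 1000.0 / fps


def uplift_percent(before_fps, after_fps):
    """Percentage increase in frame rate going from before_fps to after_fps."""
    return 100.0 * (after_fps - before_fps) / before_fps


# Figures quoted in the article: (frame generation off, frame generation on).
cyberpunk = {"average": (43.0, 70.0), "1% low": (25.0, 30.0)}

for metric, (off, on) in cyberpunk.items():
    print(
        f"{metric}: {off:.0f} -> {on:.0f} fps "
        f"({uplift_percent(off, on):.1f}% higher), "
        f"{frame_time_ms(off):.1f} ms -> {frame_time_ms(on):.1f} ms per frame"
    )
```

The average improves far more than the 1% low, which suggests that frame generation smooths typical gameplay more than it rescues the worst CPU-bound moments.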

However, not all games become playable when frame generation is enabled. In the video, we see The Witcher 3 running at a 55 fps average with a horrible 1% low of 3 fps, despite having frame generation on. It's also important to note that the game itself must support DLSS 3 and, right now, only a few dozen games do so.

So, what we learned from RandomGaminginHD's video is that you can use a low-end Pentium CPU with a mid-range card like the RTX 4070 and some demanding games will be playable. This is a highly unlikely situation in the real world, unless you had a very low-end PC, had just enough money to buy a GPU and decided to delay your CPU upgrade for a few weeks. However, in general, you should go with a better processor, preferably one of the best CPUs for gaming.

Avram Piltch is Managing Editor: Special Projects. When he's not playing with the latest gadgets at work or putting on VR helmets at trade shows, you'll find him rooting his phone, taking apart his PC, or coding plugins. With his technical knowledge and passion for testing, Avram developed many real-world benchmarks, including our laptop battery test.

-

bit_user It's an interesting experiment, I suppose... but rather pointless, when the G7400 is currently selling for about the same price as an i3-12100F.

I guess if you had a prebuilt with the G7400, it shows what kind of gaming performance you could hope to achieve, but then I doubt such a low-end prebuilt would accommodate a card like the RTX 4070.

Another thing it tells us is how dependent games have now become on > 2C/4T. -

Thunder64 "In our review of the RTX 4070, we noted that the card offers excellent ray tracing and AI for a fairly-reasonable (by today's standards) $599."

The media should not be excusing these prices. People on forums certainly aren't and luckily see through the nonsense. -

atomicWAR

bit_user said: Another thing it tells us is how dependent games have now become on > 2C/4T.

Yeah core usage is jumping. If history is any indication I suspect we'll be mostly stuck with 6/8 cores in gaming until consoles (next gen or gen after?) have more cores onboard themselves. Though with ray and soon path tracing taking over (currently 43% of Nvidia gamers if Nvidia is to be believed) I could be wrong. I know MS claims their next console will be all in on AI so who knows what direction/implementations will be used though I suspect most of it will be via the gpu portion of the soc. -

BFG-9000 Should interpolated frames be considered part of the framerate? nVidia seems to be using it mainly to show a framerate-doubling performance boost from the 40 series over the 30 series.

The reviews I've seen show the extra latency (from delaying the showing of an already rendered frame until the system can generate an interpolated one and insert it every other frame) isn't a problem as letting the card do it is way faster than any TV setting doing the same, but the image quality loss can be severe if it's the entire background/POV moving at a quick pace. Hopefully that can be improved upon, as it really seems to be a fine technology that can accurately insert fake frames in-between the real frames that the game engine actually produced, exactly where they would've been if the engine could've run faster. It's currently best for making very small objects streaking across the screen appear to move smoothly and unblurred.

As for lumping the fake frames in with framerate? C'mon--this is obviously nVidia's solution to their cards and drivers needing way more CPU power than AMD's -

bit_user

atomicWAR said: If history is any indication I suspect we'll be mostly stuck with 6/8 cores in gaming until consoles (next gen or gen after?) have more cores onboard themselves.

I honestly didn't believe the current-gen consoles would feature 8x Zen2 cores. So, I guess I should probably double-down and state right here & now that the next-gen models will hold at 8 cores.

: D

Do we really think games need more than 8x Zen 4 cores? For what? Physics and AI? Add more GPU cores, instead. Keep in mind that PS4 and XBox One got by with lousy, single-threaded Jaguar cores. -

ingtar33

Thunder64 said: The media should not be excusing these prices. People on forums certainly aren't and luckily see through the nonsense.

yeah, that line caught my attention too. makes you wonder if nvidia sponsored this article, sort of reminds me of the "just buy it" argument about the 2xxx series gpus made here. -

Alvar "Miles" Udell Meanwhile AMD and Intel: NO! YOU NEED THE NEW HIGHEST END HARDWARE! YOUR OLD STUFF IS CRAP!!!!

Is this really news to anyone? At higher detail levels and resolutions the CPU is far less important than a GPU, and with quality reduction fakery techniques like DLSS the GPU and CPU are also less dependent.

I'll go back to TH's CPU scaling article with the 3080. Even with a 15 year old quad core processor you can still do 120fps at 2560x1440. You add in things like DLSS and you can "increase" the detail level. Use frame interpolation with DLSS3 and you can "increase" the frame count.

-

jkflipflop98 Yeah that's great if you're only ever going to play games that utilize DLSS. Try streaming said game or rendering a 4K video and you're going back in the hurt locker. -

atomicWAR

bit_user said: I honestly didn't believe the current-gen consoles would feature 8x Zen2 cores. So, I guess I should probably double-down and state right here & now that the next-gen models will hold at 8 cores.

: D

Do we really think games need more than 8x Zen 4 cores? For what? Physics and AI? Add more GPU cores, instead. Keep in mind that PS4 and XBox One got by with lousy, single-threaded Jaguar cores.

I tend to agree with you. Unless there becomes some killer reason to add cores MS and Sony will likely stick to 8 for at least one more gen if not two. I could see the Switch 2 sporting 12 arm cores if it goes with a nvidia orin soc varient as rumored. But even if it does I doubt it have much affect on x86-64 in gaming due to arm's lower ST performance but I could be wrong. -

bit_user

atomicWAR said: I could see the Switch 2 sporting 12 arm cores if it goes with a nvidia orin soc varient as rumored. But even if it does I doubt it have much affect on x86-64 in gaming due to arm's lower ST performance but I could be wrong.

Nintendo does enough volume that I'd imagine Nvidia wouldn't mind doing a custom SoC for them which cuts back on the core count. When you're talking about volumes like 10 M+ chips, the extra die savings will surely add up.

BTW, the Orin Nano has only 6 CPU cores and 1024 CUDA cores. No idea if it's a separate die or just a partially-disabled one, but comparing the prices suggests the former:

https://store.nvidia.com/en-us/jetson/store/?page=1&limit=9&locale=en-us

I'm betting Nintendo is way too cheap to go for the full deal.