Starfield Perf Disparity Between AMD and Nvidia GPUs Analyzed

Chips and Cheese digs deeper into the code execution on several GPUs.

Starfield has received a lot of heat for its supposed lack of optimizations and abnormally poor performance on Nvidia and Intel GPUs. Right now, our initial Starfield benchmarks show the RDNA 3-based RX 7000-series cards often punching well above their weight class or, alternatively, Nvidia GPUs coming up short of expectations. Chips and Cheese ran several performance analyzers on Starfield to try to determine why the engine favors RDNA 3 GPUs. The analysis centers on cache hit rates, shader scheduling, and other factors, though at present it doesn't provide a definitive answer as to why AMD's GPUs do better.

The Chips and Cheese article looks at several of Starfield's pixel and compute shaders and how they're processed inside AMD's RDNA 3 GPU cores (WGPs) versus Nvidia's RTX GPU cores (SMs). The outlet found that Starfield's pixel and compute shaders currently make better use of several aspects of AMD's RDNA 3 GPUs. These include RDNA 3's larger vector register files, superior thread tracking, and its four-level cache hierarchy (L0, L1, L2, and L3). By comparison, Nvidia's RTX 40-series GPUs only have L1 and L2 caches, and things are even worse on Nvidia's RTX 30- and 20-series GPUs, which have significantly smaller L2 caches.
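To make the register-file and thread-tracking point more concrete, here is a minimal Python sketch of a common simplified occupancy model: the number of waves (AMD) or warps (Nvidia) a SIMD can keep in flight is capped both by its hardware tracking slots and by how many waves' worth of registers fit in its register file. The `SimdLimits` class, the `waves_in_flight` helper, and both designs' numbers are hypothetical illustrations, not vendor specifications, and the model ignores register allocation granularity, shared memory/LDS, and other real-world limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SimdLimits:
    """Hypothetical per-SIMD resource limits (illustrative, not vendor specs)."""
    register_file_bytes: int      # total vector register storage on one SIMD
    max_waves: int                # hardware slots for tracking waves/warps
    lanes_per_wave: int = 32      # wave32 on AMD, 32-thread warp on Nvidia
    bytes_per_register: int = 4   # each register holds 32 bits per lane


def waves_in_flight(limits: SimdLimits, regs_per_thread: int) -> int:
    """Waves that can be resident at once under a simplified occupancy model.

    Assumes registers are allocated exactly (no allocation granularity) and
    that registers and tracking slots are the only limiting resources.
    """
    if regs_per_thread <= 0:
        raise ValueError("regs_per_thread must be positive")
    bytes_per_wave = regs_per_thread * limits.lanes_per_wave * limits.bytes_per_register
    fit_by_registers = limits.register_file_bytes // bytes_per_wave
    return min(limits.max_waves, fit_by_registers)


# Two hypothetical designs: one with a large register file and more tracking
# slots, one with a smaller register file and fewer slots.
big_rf = SimdLimits(register_file_bytes=192 * 1024, max_waves=16)
small_rf = SimdLimits(register_file_bytes=64 * 1024, max_waves=12)

for regs in (32, 64, 96, 128):
    print(regs, waves_in_flight(big_rf, regs), waves_in_flight(small_rf, regs))
```

With light shaders both designs hit their tracking-slot limits, but as registers per thread climb, the smaller register file becomes the bottleneck and far fewer waves stay resident. Fewer resident waves means less independent work for the scheduler to switch to while other waves wait on memory, which is the general mechanism behind an occupancy advantage; real figures depend on each GPU's actual limits.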

All these architectural traits appear to help AMD's RDNA 3 graphics cards dominate Nvidia in early Starfield benchmarks. Nvidia's architecture doesn't keep pace with RDNA 3 in many of these cases, so its GPUs lag behind. However, as we've seen in dozens of other examples, there's plenty that can be done to narrow the performance gap between Nvidia and AMD GPUs. Whether the developers will reach the expected levels of performance is another matter, and only time will tell.

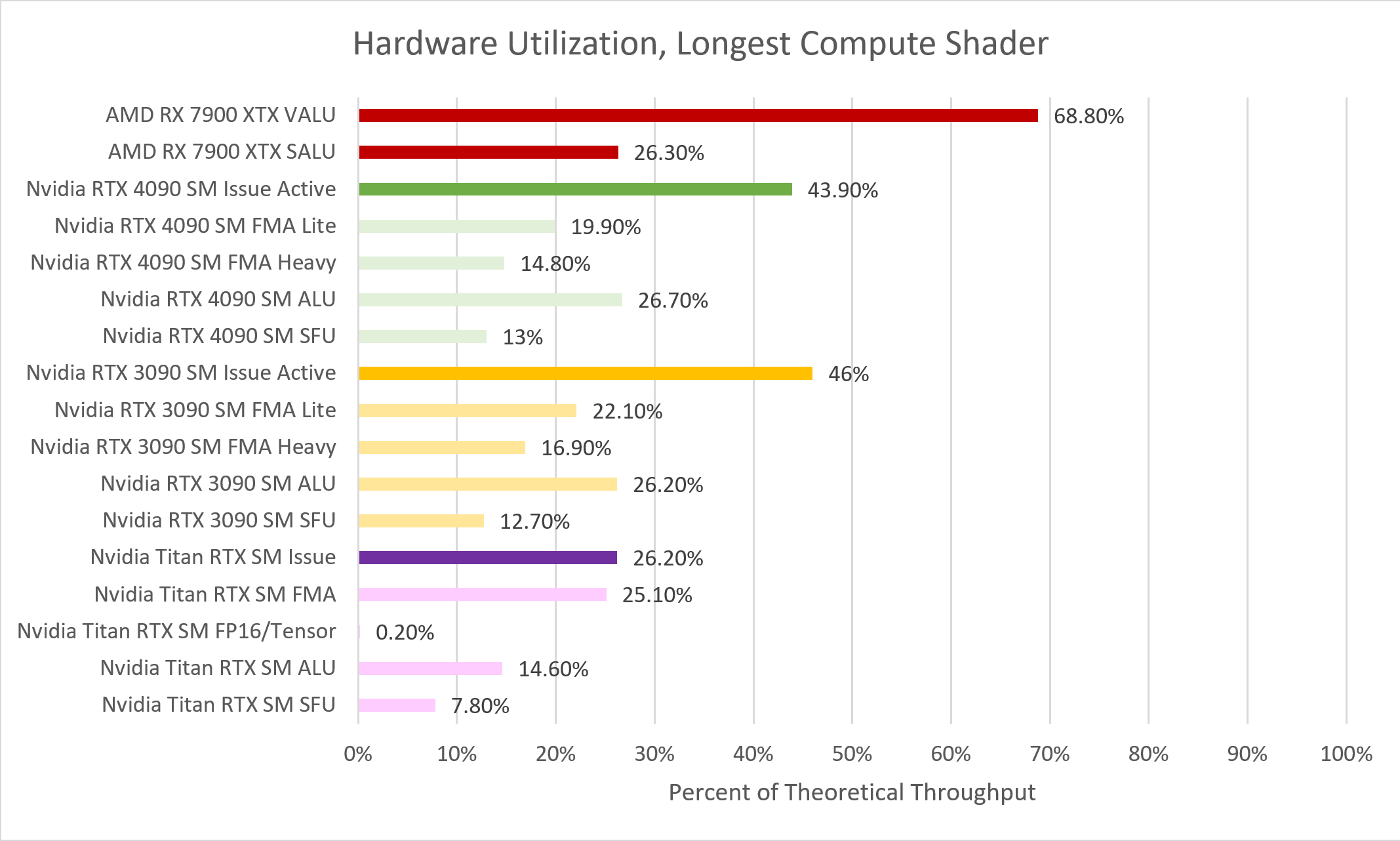

Take the above chart as an example. Chips and Cheese notes, "RDNA 3 enjoys very good vector utilization. Scalar ALUs do a good job of offloading computation, because the vector units would see pretty high load if they had to handle scalar operations too." However, they go on to state, "Ampere and Ada see good utilization, though not as good as AMD’s. Turing’s situation is mediocre. 26.6% issue utilization is significantly lower than what we see on the other tested GPUs."
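For readers unfamiliar with the metric, a simplified way to think about issue utilization is the fraction of available instruction-issue slots that actually issued something during the cycles a unit was active. The Python sketch below computes that ratio from per-unit counter totals. The function name, the counter values, and the one-issue-per-cycle default are hypothetical, and profiling tools such as Nsight or Radeon GPU Profiler may define and normalize the metric differently.

```python
from typing import Iterable, Tuple


def issue_utilization(counters: Iterable[Tuple[int, int]],
                      issue_slots_per_cycle: int = 1) -> float:
    """Fraction of issue slots used, aggregated over several units.

    counters: (instructions_issued, active_cycles) pairs, one per unit
    (for example, one per SM or SIMD). The one-slot-per-cycle default is
    an assumption for illustration only.
    """
    total_issued = 0
    total_slots = 0
    for issued, active_cycles in counters:
        total_issued += issued
        total_slots += active_cycles * issue_slots_per_cycle
    if total_slots == 0:
        raise ValueError("no active cycles recorded")
    return total_issued / total_slots


# Hypothetical counters for two units over the same captured workload.
sample = [(250, 1000), (282, 1000)]
print(f"{issue_utilization(sample):.1%}")
```

Summing the raw counters before dividing, rather than averaging per-unit percentages, keeps units that were active for more cycles from being under-weighted.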

And that's the crux of the issue. Is the lower GPU utilization seen on Nvidia simply inherent to the combination of its architectures and the Starfield engine, or can it be fixed? Or, perhaps more cynically: Will the engine be fixed? Bethesda has stated that it's currently working with the AMD, Intel, and Nvidia driver teams to improve performance, which is as good a place to start as any. Not all code is created equal, and AMD likely already did some specific tuning thanks to its partnership with Bethesda on the game. That's probably why the game runs better on AMD GPUs. Now Intel and Nvidia need to find ways to do the same for their architectures.

As it stands now, we found the RX 7900 XTX matched and often exceeded the performance of Nvidia's RTX 4090, even though the latter has significantly more cores and processing power than AMD's flagship. Nvidia has already helped reduce the performance disparity by enabling Resizable BAR support a few days ago, but that's only a first step toward improving the situation. We also found that AMD's RDNA 2 RX 6000-series GPUs perform abnormally well compared to Nvidia GPUs, with the RX 6800, for example, matching or exceeding the performance of an RTX 4070. Chips and Cheese did not test any RDNA 2 GPUs, so its analysis doesn't explain why that might be.

What we do know is that Nvidia's GPUs currently perform worse than comparable AMD GPUs, not just with the latest RDNA 3 and Ada Lovelace generations but going as far back as Pascal (GTX 1070 Ti) and Polaris (RX 590). Chips and Cheese concludes, "There’s no single explanation for RDNA 3's relative overperformance in Starfield. Higher occupancy and higher L2 bandwidth both play a role, as does RDNA 3's higher frontend clock. However, there's really nothing wrong with Nvidia's performance in this game, as some comments around the internet might suggest."

We would respectfully disagree. There is clearly a problem with Nvidia's performance right now, and with Starfield's performance in general. Requiring an RX 6800 or RTX 4070 just to break 60 fps at 1080p ultra is not typical for a game with this level of graphical fidelity. Some of this may come down to limitations in Nvidia's (and Intel's) architectures, but it's also a safe bet that changes and optimizations can be made to improve Starfield's performance, and we'll likely see those enhancements as the months and years tick by. What we can't say is where things will eventually end up; this is, after all, a Bethesda game.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

NeoMorpheus

"As it stands now, we found the RX 7900 XTX matched and often exceeded the performance of Nvidia's RTX 4090"

And of course, that cannot go unpunished!

I have to say, really interesting how much digital ink and hate has been spilled over this just because AMD sponsored this game.

But when it's the other way around (The Ascent not having FSR or XeSS support, for example, and that's just one of far too many), not much is said.

"The outlet found that Starfield's pixel and compute shaders currently make better utilization of several aspects of AMD's RDNA 3 GPUs. These include RDNA 3's larger vector register files, superior thread tracking, and the use of an L0, L1, L2, and L3 cache design. Nvidia's RTX 40-series GPUs only have L1 and L2 caches, by comparison, and things are even worse on Nvidia's RTX 30- and 20-series GPUs that have significantly smaller L2 cache sizes."

Now that makes me think that all other games are simply not optimized at all for AMD, so let's rage about that!

We are in some really strange times when we are now demanding support for proprietary tech that limits our options.

Oh well. -

GoldyChangus

NeoMorpheus said: "And of course, that cannot go unpunished!

"I have to say, really interesting how much digital ink and hate has been spilled over this just because AMD sponsored this game.

"But when it's the other way around (The Ascent not having FSR or XeSS support, for example, and that's just one of far too many), not much is said.

"Now that makes me think that all other games are simply not optimized at all for AMD, so let's rage about that!

"We are in some really strange times when we are now demanding support for proprietary tech that limits our options.

"Oh well."

Yes, that obviously has nothing to do with nobody caring or knowing about The Ascent, as it's surely as popular as Starfield from the indie developer Bethesda. The media is trying to hide something!!! The media even keeps trying to claim that FSR is a worthless technology, just because it is! The gall! The narrative!

Regards, -

Makaveli

In a sea of tech tubers, that was a great written technical article. I miss these so much now that YouTube has taken over. -

NeoMorpheus said: "I have to say, really interesting how much digital ink and hate has been spilled over this just because AMD sponsored this game."

And what exactly did that sponsorship get us?

A poorly optimised game that has display issues even on AMD GPUs.

When the most powerful card of this generation comes in second to the 7900 XTX, you KNOW there's something fundamentally wrong with the game. -

Dr3ams

GoldyTwatus said: "The media even keeps trying to claim that FSR is a worthless technology, just because it is!"

FSR is not worthless if it works...and it does work. Other upscalers may perform better, but that usually happens when a game has been optimized for a sponsoring hardware manufacturer.

Regards, -

JarredWaltonGPU

Dr3ams said: "FSR is not worthless if it works...and it does work. Other upscalers may perform better, but that usually happens when a game has been optimized for a sponsoring hardware manufacturer."

FSR 1 isn't that great. It's basically a well-known upscaling algorithm (Lanczos), tweaked for edge detection and with a sharpening filter. It's super lightweight, but definitely doesn't provide "near native" quality by any stretch. FSR 2 significantly altered the algorithm and looks better, but with a higher performance impact. It still can't match DLSS 2 upscaling in overall quality, though at times it's at least close, particularly in quality mode when targeting 4K.

I believe Unreal Engine is working on its own internal temporal upscaling solution. It would basically be like FSR 2 in that it would work on any GPU, leveraging the GPU compute shaders to do the computations. I'd be super impressed if Epic took the extra step of having the algorithm leverage tensor / matrix hardware if available, but I seriously doubt that will happen. Whether it will look as good as FSR 2, or better, remains an open question. -

setx

"We would respectfully disagree."

I would disagree disrespectfully. Chips and Cheese did actual research, which Tom's has been incapable of doing for many years now, but you just state that their conclusion is wrong based on nothing. -

evdjj3j

Dr3ams said: "FSR is not worthless if it works...and it does work. Other upscalers may perform better, but that usually happens when a game has been optimized for a sponsoring hardware manufacturer."

I spent about 15 hours playing with FSR before downloading the DLSS mod, and even unoptimized DLSS looks leaps and bounds better than FSR. I just bought a new GPU, and I was strongly considering a 7800 XT until I played the game with a DLSS mod. -

HyperMatrix

NeoMorpheus said: "And of course, that cannot go unpunished!

"I have to say, really interesting how much digital ink and hate has been spilled over this just because AMD sponsored this game."

Is this "hate" any different from the negative coverage Nvidia (rightfully) got for years for having massive levels of tessellation in sponsored games that resulted in unnecessary performance hits on AMD cards?

setx said: "I would disagree disrespectfully. Chips and Cheese did actual research, which Tom's has been incapable of doing for many years now, but you just state that their conclusion is wrong based on nothing."

You're missing the point. Chips and Cheese explained what's causing the disparity, but nothing about why those conditions exist. What Tom's Hardware is saying is that regardless of the reason, the performance is not acceptable. I would go even further and say that the game was intentionally designed around AMD's architecture with little care given to how this would impact the other 80%+ of PC gamers.

This could make sense for Microsoft, since these optimizations likely resulted in better performance on AMD-powered Xbox consoles. But the problem is that when you see them skip something as simple as DLSS, which could have been implemented in less than a day, you should start to wonder whether the game was intentionally left unoptimized for competing cards. Not to mention that even the base game presets turn FSR 2.0 on in order to hit 60 fps on many upper-end AMD cards.

Dr3ams said: "FSR is not worthless if it works...and it does work. Other upscalers may perform better, but that usually happens when a game has been optimized for a sponsoring hardware manufacturer."

I think FSR 2.0 (as well as XeSS) should be available, as it provides an upscaling solution to those who would otherwise have no other option. However, the quality of FSR 2 even in this sponsored game leaves a lot to be desired. There is so much flickering and shimmering around edges and transparencies, and even moiré on cloth. I have the DLSS mod installed, so I can instantly switch between DLSS and FSR 2 with a hotkey, and while you can't capture the shimmering and flickering in a picture, it's very visible in video. It's a less than optimal solution, and I personally wouldn't use it because of how distracting it is. But I'm sure there are some who can benefit from it to reach playable framerates, and I'd be happy for the option to be available for them.