Starfield PC Performance: How Much GPU Do You Need?

FSR 2 upscaling and optimized for AMD.

Starfield — Introduction

Starfield hardly needs an introduction. It's the latest massive RPG from Bethesda, and the expectation is that it will basically deliver "Fallout in space," or alternatively, "Elder Scrolls in space." Even if that's all it provided, people would likely flock to it, but it's supposed to be far more than that. We'll leave the reviewing of the game to others, but we want to see how it runs on some of the best graphics cards, plus some other GPUs for good measure.

We have the Starfield Premium edition, courtesy of AMD, which gives us early access for the next several days — the full release will be on September 6. We're running benchmarks similar to what we did with Diablo IV, and we'll update this article with additional performance results over the coming days. Benchmarking takes time. What sort of hardware will you need, and how does the game look and run on a PC? Let's find out.

Sept 7, 2023: We've added initial Intel Arc results to the 1080p charts. They're... not good. But at least Intel doesn't crash or have as much display corruption as before. A patch and/or new drivers for Intel and Nvidia are definitely needed.

Note also that this is an AMD-promoted game, and while AMD says it doesn't restrict game developers from supporting other tech like DLSS and XeSS, it does partner with developers to "prioritize" AMD technologies. The good news is that FSR 2 works on every GPU; the bad news is that it might not look quite as good as DLSS or XeSS — not XeSS in DP4a mode, mind you, as that's different than XeSS running on Intel Arc's XMX cores.

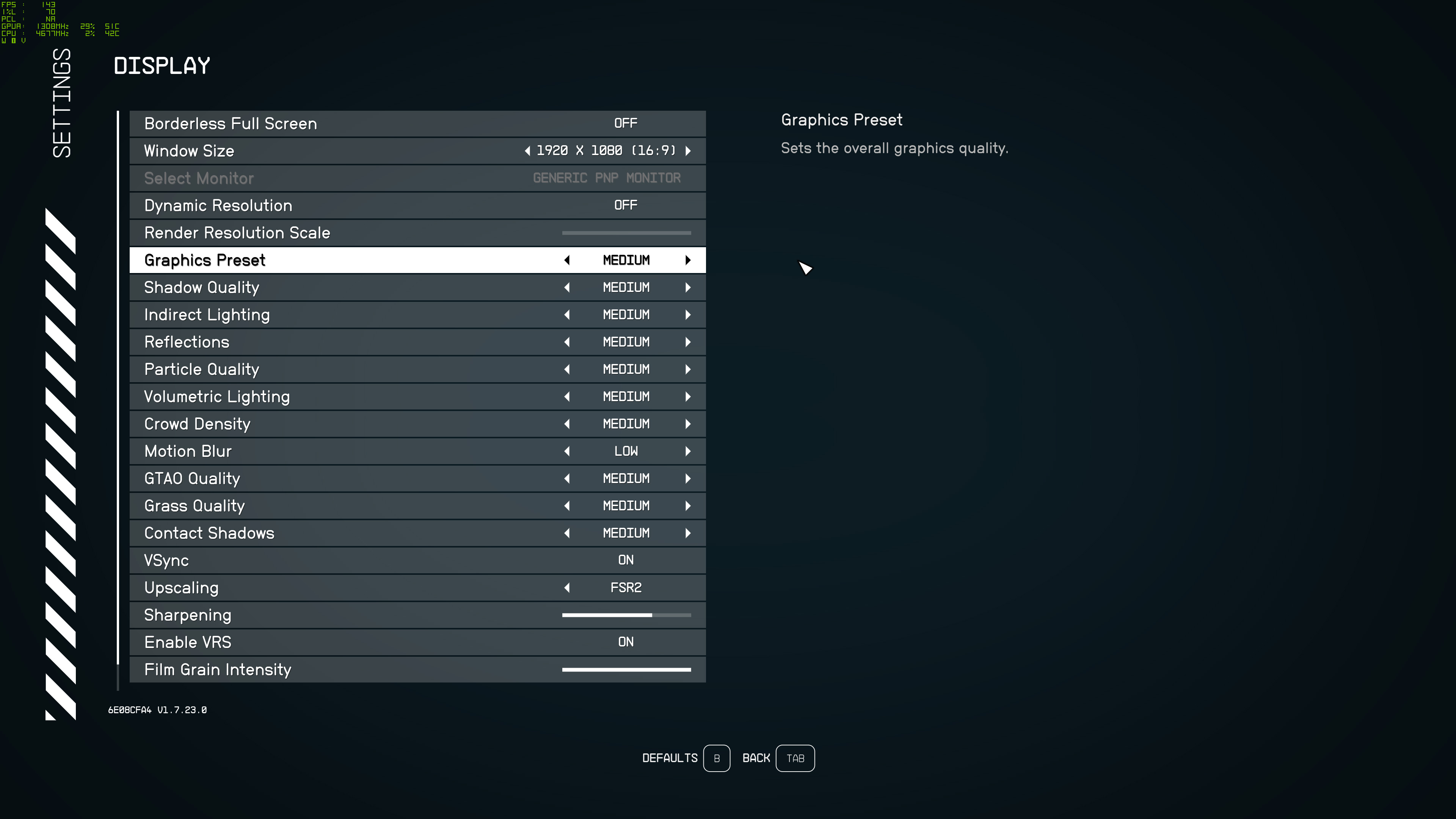

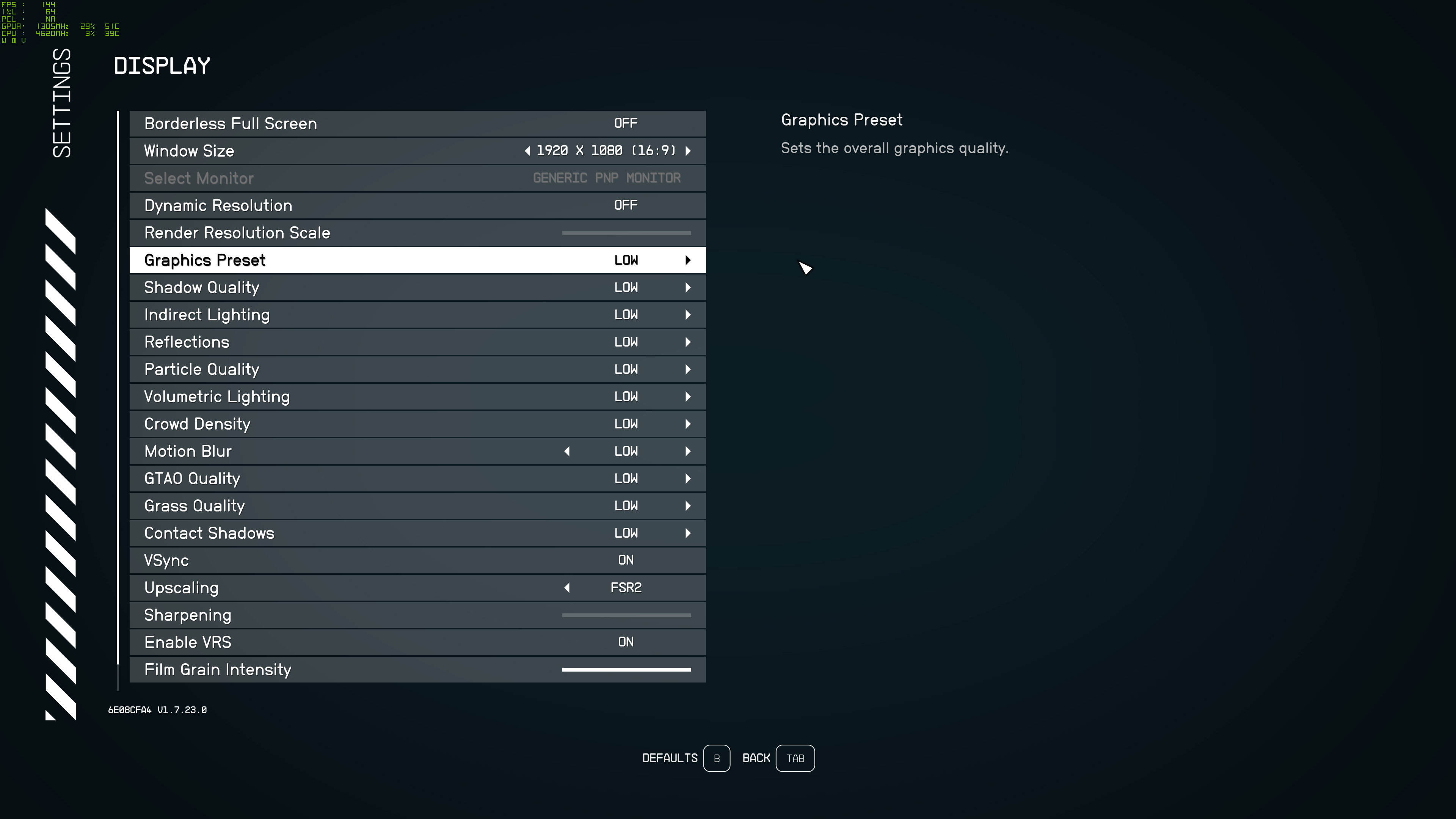

But there's more to it than simply not supporting DLSS or XeSS. By default, Starfield enables some level of upscaling on every GPU and setting. For example, the Low and Medium presets use 50% render resolution, High bumps that to 62%, and Ultra to 75%. So there's relatively tight integration with FSR 2, and that's almost certainly in part because the game often needs it to hit modest framerates, even on high-end hardware.
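To put those percentages in perspective, here's a minimal Python sketch of the internal render resolution each preset would use by default. It assumes the scale applies to each axis (which matches the game treating 50% as 4X upscaling) and that the result is rounded to the nearest pixel. The function names are ours, and the game's actual rounding may differ.

```python
# Default render scale per preset, as listed above.
PRESET_SCALE = {"Low": 0.50, "Medium": 0.50, "High": 0.62, "Ultra": 0.75}


def render_resolution(width, height, scale):
    """Internal (pre-upscale) resolution, assuming a per-axis scale factor."""
    return round(width * scale), round(height * scale)


def default_render_resolutions(width, height):
    """Map each preset name to its assumed internal resolution."""
    return {
        preset: render_resolution(width, height, scale)
        for preset, scale in PRESET_SCALE.items()
    }


if __name__ == "__main__":
    for preset, (w, h) in default_render_resolutions(1920, 1080).items():
        print(f"{preset:>6}: {w}x{h}")
```

Under these assumptions, a 1080p display at the Low or Medium preset would actually be rendering at 960x540 before FSR 2 scales it back up.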

We're expecting a lot of interest in Starfield, so we plan on testing most "supported" graphics cards on our test PC. We're using high-end hardware, basically as fast as you can get with a modern PC, though AMD's Ryzen 9 7950X3D might exceed it in some cases. (Update: Based on other tests from around the web, it looks like 13900K wins by a decent margin.) In order to avoid swapping drivers too many times, we're starting with AMD GPUs, then Nvidia, then Intel (which now has a "Starfield emergency fix" driver).

Also... performance on non-AMD GPUs right now is very questionable. Based on the system requirements, we'd expect the Nvidia GPUs to be much closer to their AMD counterparts than what we're currently seeing. Either a last-minute update to the game messed things up, or Bethesda needs to put a lot of additional effort into tuning the game to make it run better.

Starfield — System Requirements

The official Starfield system requirements consist of the usual minimum and recommended PC hardware. If you're running a PC that hasn't been updated since 2017, you might have some difficulty getting an optimal experience, but more recent hardware should hopefully be fine. Here's what Bethesda suggests:

Minimum PC Requirements

- Intel Core i7-6800K or AMD Ryzen 5 2600X

- Nvidia GeForce GTX 1070 Ti or AMD Radeon RX 5700

- 16GB of system memory

- 125GB of available SSD storage

- Windows 10 21H1 or later

- DirectX 12

The minimum specs don't look too demanding, with some interesting picks. Core i7-6800K was an Intel HEDT 6-core/12-thread part from 2016, not exactly a super common pick. AMD's Ryzen 5 2600X meanwhile also sports a 6-core/12-thread configuration, but it's far more recent, having launched in 2018. The baseline requirement seems to be at least a 6-core CPU, then, though we imagine you can go lower if you're willing to put up with some stuttering.

The graphics cards are a pretty disparate selection. GTX 1070 Ti launched in 2017, while the RX 5700 came out in 2019. According to our GPU benchmarks hierarchy, AMD's RX 5700 runs about 25–30 percent faster than the GTX 1070 Ti, and as you'll see momentarily, those recommendations are bass-ackward: You need a proportionally more powerful Nvidia GPU, not the other way around. In either case, those are 8GB GPUs, though it looks like the game can work fine on 6GB cards at least. (We'll see about testing a 4GB card maybe later.)

You'll also need 125GB of storage, and an SSD is supposedly required. Will the game run off a hard drive? Probably, but load times could be substantially longer, and loading new parts of a map might cause hitching. Given even 2TB SSDs can be had for under $100 these days, it's not an extreme requirement in our opinion. Likewise, the 16GB of system RAM requirement should also be within reach of most gaming PCs built in the past five years, and probably even longer — I've got a Core i7-4770K PC from 2014 that has 16GB (and it's sitting in a corner gathering dust).

Worth pointing out is that Bethesda doesn't make any mention of what you can expect from a minimum-spec PC. Will it handle 1080p at low settings and 30 fps, or is it more for 1080p at medium settings and 60 fps? Others will have to see about testing such hardware to find out, though I'll at least be able to weigh in on the GPU aspect.

Based on early results, the CPU load looks to be relatively high, so lower-end processors may only manage in the 30–60 fps range. On the GPU side, the minimum recommended cards offer very different experiences right now. The GTX 1070 Ti barely clears 30 fps at 1080p medium, but the RX 5700 puts up a comfortable 50+ fps at the same settings, and even an RX 5600 XT 6GB card did fine. Red good, green bad, in other words.

Recommended PC Requirements

- Intel Core i5-10600K or AMD Ryzen 5 3600X

- Nvidia GeForce RTX 2080 or AMD Radeon RX 6800 XT

- 16GB of system memory

- 125GB of SSD storage

- Windows 10/11 with latest updates

- DirectX 12

- Broadband internet

The recommended specs again make no mention of what sort of settings you'll be able to run, or whether they target 60 fps or only 30 fps. We'd like it to be for 60 fps or more at 1080p and high settings, but right now it looks more like 30-ish fps on the Nvidia card and 80-ish fps on the AMD card at 1080p ultra.

The CPU requirements don't really change all that much. Sure, the Ryzen 5 3600X is faster than the 2600X, and the 10600K likewise should easily outpace the old 6800K, but they're all still 6-core/12-thread parts. That could mean that lower spec CPUs will also be fine, and Bethesda may have mostly been picking parts out of a hat, so to speak.

The GPU recommendations get a significant upgrade, this time to the RTX 2080 or RX 6800 XT. Again, the specs seem to suggest having a faster AMD card would be helpful — the 6800 XT outperforms the RTX 2080 by about 55–65 percent in our GPU benchmarks. Do you really need the faster AMD card, or is it just GPU names picked out of a hat? That's what I'm here to determine. (Spoiler: AMD's GPU works well, but the RTX 2080... yeah, it's much slower than the 6800 XT.)

Everything else basically remains the same, though now there's mention of having broadband internet. That's probably just because downloading 125GB of data over anything else would be a terrible experience. For that matter, even if you have a 100 Mbps connection, that's still going to require about three hours, best-case. Plan accordingly and start your preload early if you can.
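As a quick sanity check on that three-hour figure, here's a back-of-the-envelope calculation in Python. It assumes decimal gigabytes and a link that sustains its full rated speed; the `efficiency` parameter is our own addition for modeling real-world overhead.

```python
def download_hours(size_gb, link_mbps, efficiency=1.0):
    """Estimated download time in hours.

    size_gb    -- download size in decimal gigabytes (1 GB = 1e9 bytes)
    link_mbps  -- rated link speed in megabits per second
    efficiency -- fraction of the rated speed actually sustained (assumption)
    """
    bits = size_gb * 1e9 * 8
    seconds = bits / (link_mbps * 1e6 * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    print(f"Ideal 100 Mbps link: {download_hours(125, 100):.2f} hours")
    print(f"At 90% efficiency:   {download_hours(125, 100, 0.9):.2f} hours")
```

The ideal case works out to a little under three hours, and any overhead pushes it past that mark.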

Starfield — Test Setup

TOM'S HARDWARE TEST PC

Intel Core i9-13900K

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

Samsung Neo G8 32

GRAPHICS CARDS

AMD RX 7900 XTX

AMD RX 7900 XT

AMD RX 7600

AMD RX 6000-Series

Intel Arc A770 16GB

Intel Arc A750

Nvidia RTX 4090

Nvidia RTX 4080

Nvidia RTX 4070 Ti

Nvidia RTX 4070

Nvidia RTX 4060 Ti

Nvidia RTX 4060

Nvidia RTX 30-Series

We're using our standard 2023 GPU test PC, with a Core i9-13900K and all the other bells and whistles. We're planning on testing Starfield on a bunch of graphics cards, but the boxout only shows the current generation GPUs. We'll get to the others, time permitting, because nothing else could possibly be happening on September 6, right?

For this initial look, we're running AMD's latest Starfield ready 23.8.2 drivers. Nvidia's 537.13 drivers are also supposed to be game ready for Starfield, even though they arrived August 22. We may need to retest with newer drivers on Nvidia, however, as things right now (meaning performance) don't quite look right. Intel also released 4762 drivers for Starfield, which at least lets you run the game without crashing, but performance is also quite poor.

We'll be testing at 1920x1080, 2560x1440, and 3840x2160 for most GPUs. For lower spec cards, we'll give 720p a shot as well. 1080p testing will use the medium and ultra presets (or equivalent), while 1440p and 4K will be tested at ultra settings. In all cases, we're going to set resolution scaling to 100% and turn off dynamic resolution. We'll also test with FSR 2 at 66% scaling while running at 4K, at least for cards where that might make sense. (Scaling at lower resolutions should be similar.)

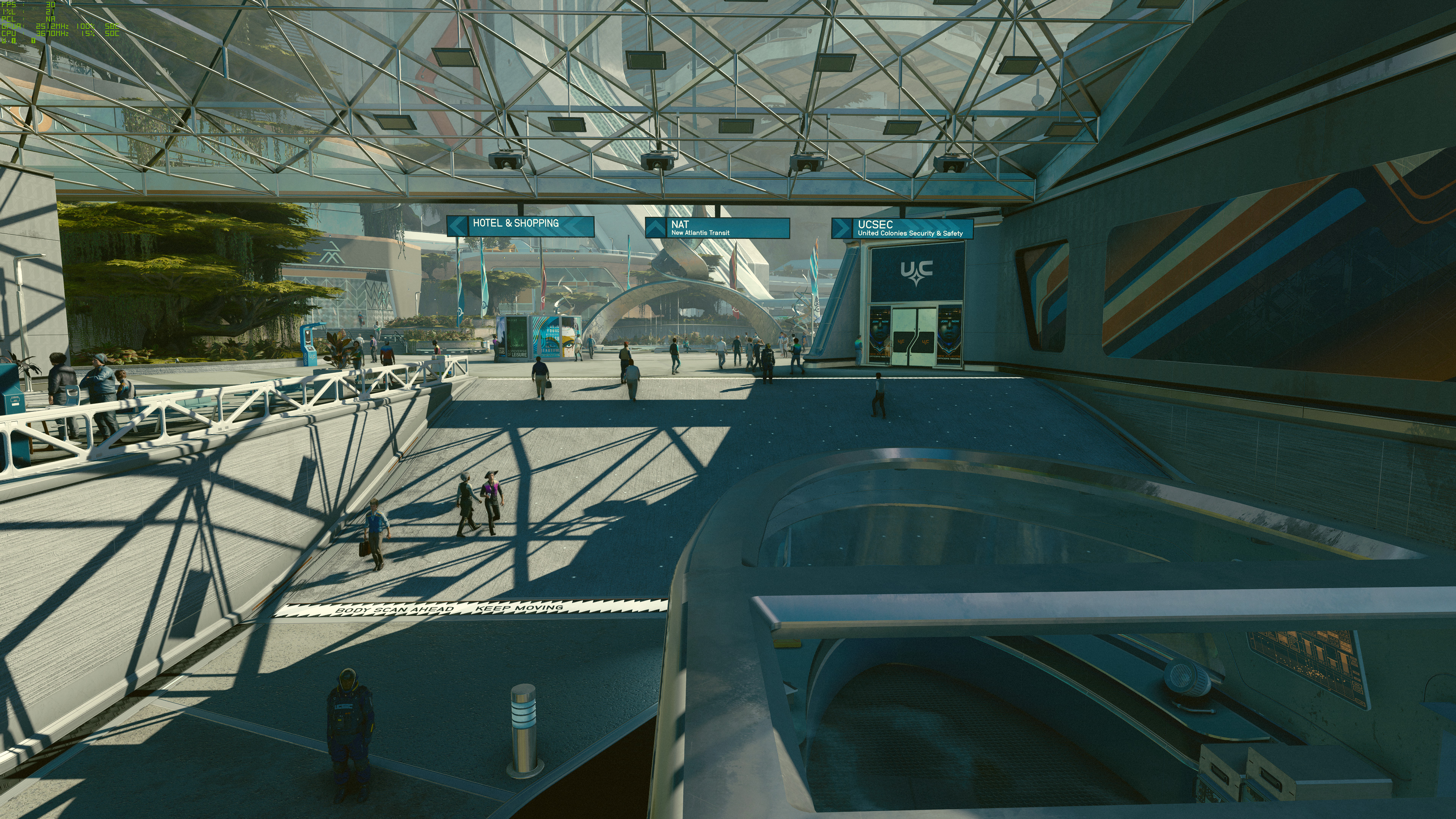

We'll begin with the 1080p results and work our way up, stopping testing on any GPU once it falls below 30 fps. Our test sequence consists of running along the same route for 60 seconds in the New Atlantis spaceport, since Starfield likely doesn't have a built-in benchmark. We tried to find a spot that was a bit more demanding than some other areas, and the large number of NPCs seemed to make this a good fit. Other areas in the game will be more or less demanding.
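For readers who want to run a similar manual test, here's a rough sketch of how average fps and 1% lows can be derived from a log of per-frame render times. It uses one common convention (the average framerate across the slowest 1% of frames) and is meant as an illustration rather than a description of the exact tooling behind the charts. The function name is hypothetical.

```python
def summarize_frametimes(frametimes_ms):
    """Return (average fps, 1% low fps) from per-frame times in milliseconds.

    The 1% low here is the average fps over the slowest 1% of frames,
    which is one common definition and an assumption on our part.
    """
    if not frametimes_ms:
        raise ValueError("need at least one frame time")

    total_seconds = sum(frametimes_ms) / 1000.0
    average_fps = len(frametimes_ms) / total_seconds

    # Slowest frames first; always keep at least one frame in the sample.
    slowest_first = sorted(frametimes_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    slow_seconds = sum(slowest_first[:count]) / 1000.0
    low_fps = count / slow_seconds

    return average_fps, low_fps


if __name__ == "__main__":
    # Synthetic example: 99 frames at 10 ms plus a single 50 ms hitch.
    avg, low = summarize_frametimes([10.0] * 99 + [50.0])
    print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
```

A single long hitch barely moves the average but drags the 1% low way down, which is why both numbers matter.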

Given Starfield isn't really a twitch game, anything above 60 fps should be just fine, and even 30 fps or more is playable. We're testing with a Samsung Odyssey Neo G8 32-inch monitor, which supports 4K 240Hz and both FreeSync and G-Sync, though we disable vsync and just run with a static refresh rate to keep things consistent across the various GPUs.

Starfield Graphics Card Performance

We'll be updating these charts over the coming days. If you still see this message, it means testing is ongoing. It's very possible we'll need to wait for the first patch or two and then retest, however, as the current results aren't very promising. We're not planning to test every possible GPU, but if there's one you want to see, let us know in the comments.

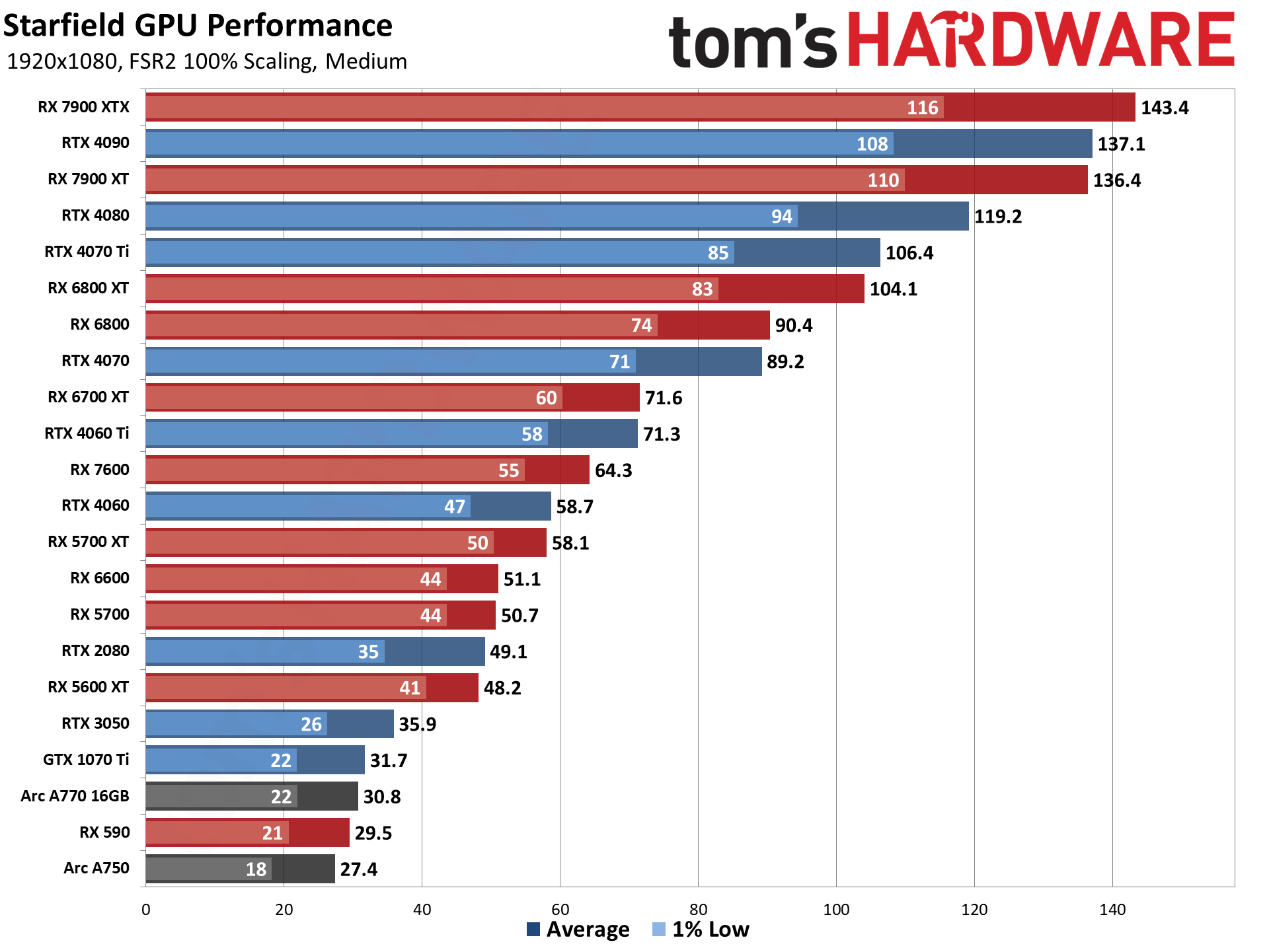

Starfield — 1080p Medium Performance

As our first taste of things to come, anyone familiar with the "typical" rankings of graphics cards should immediately notice some oddities. The RTX 4090 doesn't take the top spot, though 1080p often runs into CPU limitations so that's not necessarily a problem. Except, the RTX 4080, RTX 4070 Ti, and so on down the list for Nvidia all show that we're not hitting CPU limits. The game just runs rather poorly on Nvidia's GPUs right now.

For example, look at the bottom of the chart. GTX 1070 Ti, the minimum recommended Nvidia card, can't even get a steady 30 fps: it averages 32 fps, but with 1% lows of 22 fps. Also, the RTX 3050 lands at just 36 fps. There are definitely games where that card struggles, but it's normally games with ray tracing enabled. Even Minecraft runs at 36 fps at native 1080p with ray tracing. For our standard gaming tests, the RTX 3050 averages 87 fps at 1080p medium.

Look at the RTX 2080 as well. That's the "recommended" Nvidia card, which we'd like to think would mean it can easily handle 1080p ultra, and perhaps even 1440p ultra. AMD's "recommended" RX 6800 XT gets 104 fps at 1080p medium, so it has plenty of gas in the tank, but the RTX 2080 only averages 49 fps. It's actually slower than the "minimum" recommendation AMD RX 5700.
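To show how far off that is, here's a small Python sketch that turns the two averages above into a percentage lead, which can then be set against the roughly 55–65 percent lead the RX 6800 XT normally has over the RTX 2080. The helper names are ours. Note that "percent faster" and "percent slower" aren't symmetric, so the same pair of results gives two different numbers.

```python
def percent_faster(fps_a, fps_b):
    """How much faster A is than B, as a percentage of B's result."""
    return (fps_a / fps_b - 1.0) * 100.0


def percent_slower(fps_a, fps_b):
    """How much slower A is than B, as a percentage of B's result."""
    return (1.0 - fps_a / fps_b) * 100.0


if __name__ == "__main__":
    # Average fps at 1080p medium, from the paragraph above.
    rx_6800_xt, rtx_2080 = 104, 49
    print(f"RX 6800 XT lead:  {percent_faster(rx_6800_xt, rtx_2080):.0f}%")
    print(f"RTX 2080 deficit: {percent_slower(rtx_2080, rx_6800_xt):.0f}%")
```

By this math the AMD card's lead in Starfield is around double what it typically manages.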

Intel's Arc GPUs aren't doing so hot either. The A770 16GB can't quite match the GTX 1070 Ti, never mind the RTX 3060 levels that it normally reaches. And the Arc A750 ends up as the slowest GPU we tested at 1080p medium. But don't worry, because Bethesda's Todd Howard suggests we "just need to upgrade our PC." [Insert eye-roll emoji here.]

Clearly, there are some problems with how the game runs right now, at least on Nvidia's GPUs. In our normal rasterization test suite, the RTX 2080 typically beats the RX 5700 by around 30%, and it's basically level with the RX 7600. Here, it's 24% slower than the RX 7600.

What if you want to go lower than 1080p medium? Well, at least the GTX 1070 Ti can put up a playable result of around 51 fps at 720p low (still with 100% scaling). The RX 5700 already does just fine at 1080p medium, but for reference even an RX 590 delivers a playable 44 fps at 720p low.

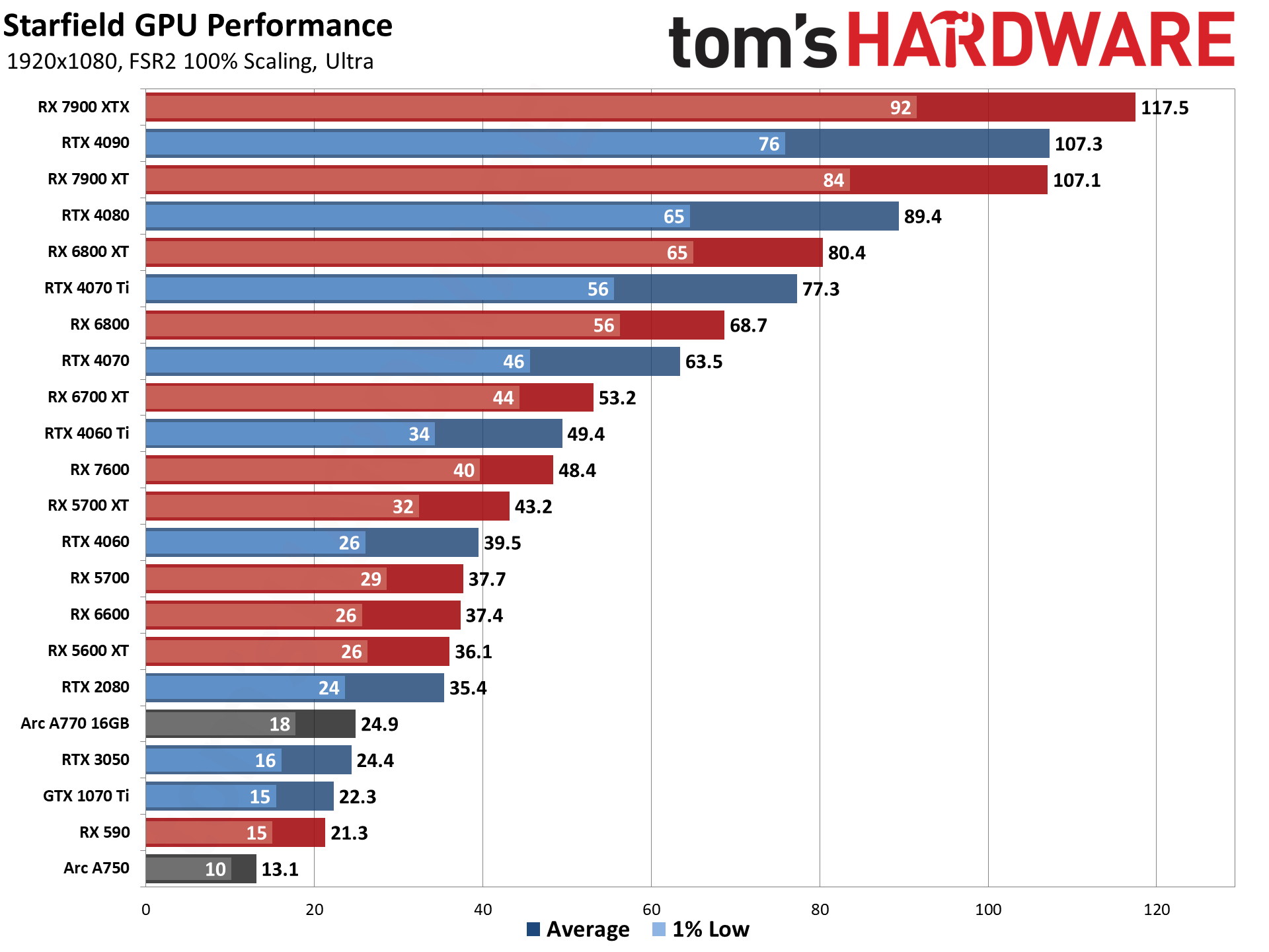

Starfield — 1080p Ultra Performance

Bumping up to the Ultra preset, with resolution scaling at 100% (rather than the default 75%), things go from bad to worse. AMD's RX 6800 clears 60 fps, but the RX 6700 XT comes up short. More critically, the RTX 4070 just barely averages more than 60 fps, but 1% lows are at 46. Normally, at 1080p ultra in a game that only uses rasterization? The RTX 4070 sits at around 130 fps and lands just behind the RX 6800 XT.

The 6800 XT incidentally is the minimum level of GPU we'd recommend for a smooth 1080p ultra sans upscaling experience. Or alternatively, right now, the RTX 4080 is a bit faster than the 6800 XT. Yes, it's only 10% faster in Starfield. In our typical rasterization tests, at 1080p ultra it's normally 30% faster.

If you're only looking to clear 30 fps, the RX 5700 will still suffice, or the RTX 4060. Not to beat a dead horse, but in our GPU benchmarks hierarchy, the 4060 is normally 30% faster in rasterization testing, not 5% faster. So either Bethesda or Nvidia have about 25% worth of optimizations to work on.

It's also interesting to look at Intel Arc. The A770 16GB shows about the appropriate drop in performance compared to medium settings, but then the A750 8GB card appears to run out of VRAM. Maybe that's an Intel driver issue, or maybe the DX12 codebase for Starfield just sucks at memory management on Intel GPUs. Either way, we won't bother with further Arc testing for now and will await further patches and driver updates.

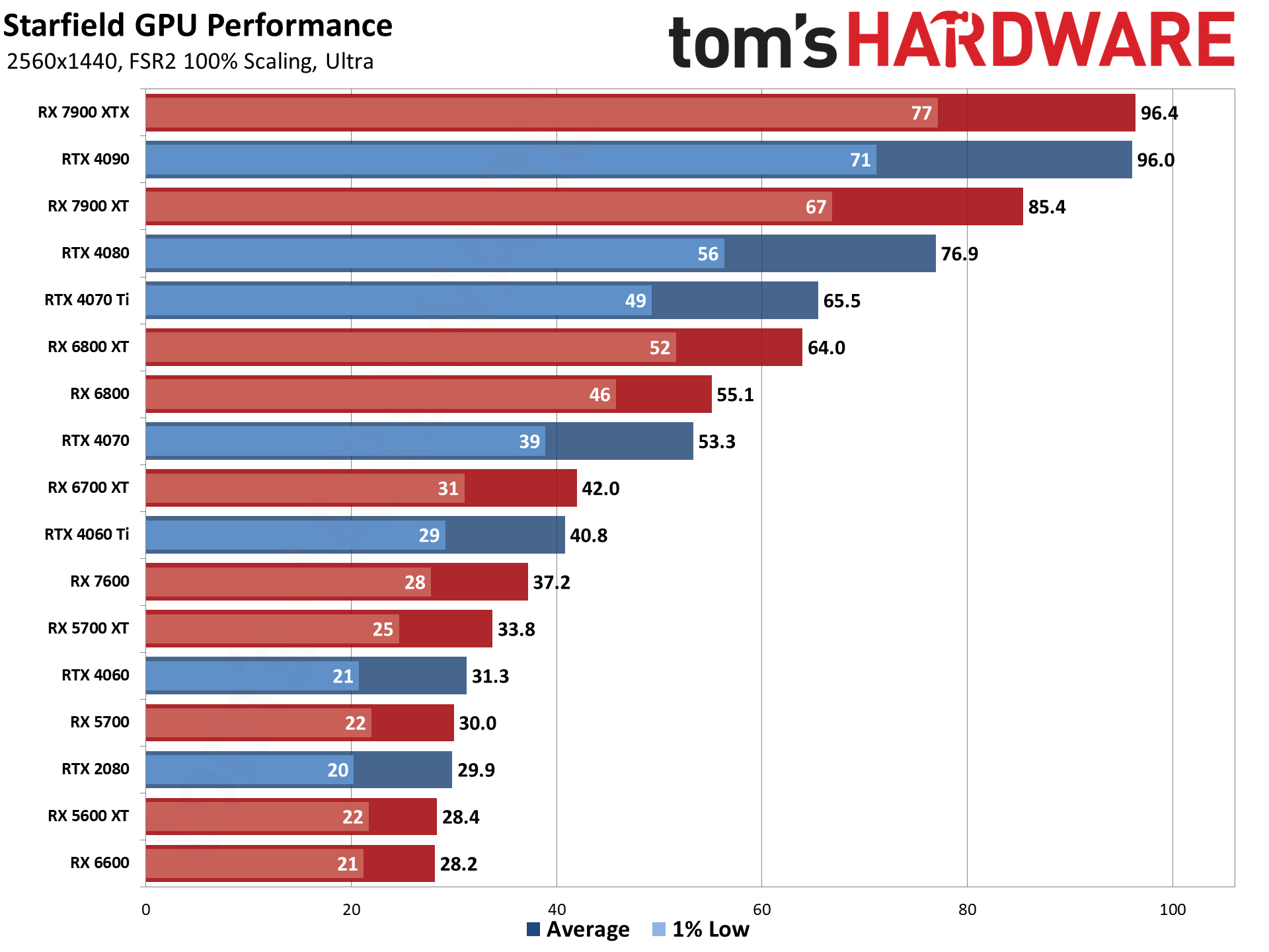

Starfield — 1440p Ultra Performance

At 1440p ultra, the RX 6800 XT still clears 60 fps and basically matches the RTX 4070 Ti. If you want to keep minimums above 60 as well, you'd need at least the RX 7900 XT. Or use upscaling, which appears to be Bethesda's suggestion, but come on: Does Starfield really look that great that we should expect performance more or less in line with what we see from Spider-Man: Miles Morales with all the bells and whistles turned on?

Sure, those are different types of games, and Bethesda isn't known for making highly tuned engines. But this could very well represent a new low for the company.

Elsewhere, the RTX 4090 finally closes the gap with the RX 7900 XTX. Neither one can break into the triple digits, and in our standard rasterization benchmarks the 4090 is normally about 20% faster than AMD's top GPU. It's not even clear what's causing the poor performance, because Nvidia GPUs have plenty of compute, and neither memory bandwidth nor VRAM capacity seem to be primary factors.

If you're merely trying to break into the "playable" 30 fps range, the RX 7600 and RTX 4060 Ti and above will suffice. Don't be surprised if some areas in the game perform quite a bit worse than we're showing, though, and if you don't have a potent CPU you'll probably see much worse 1% lows.

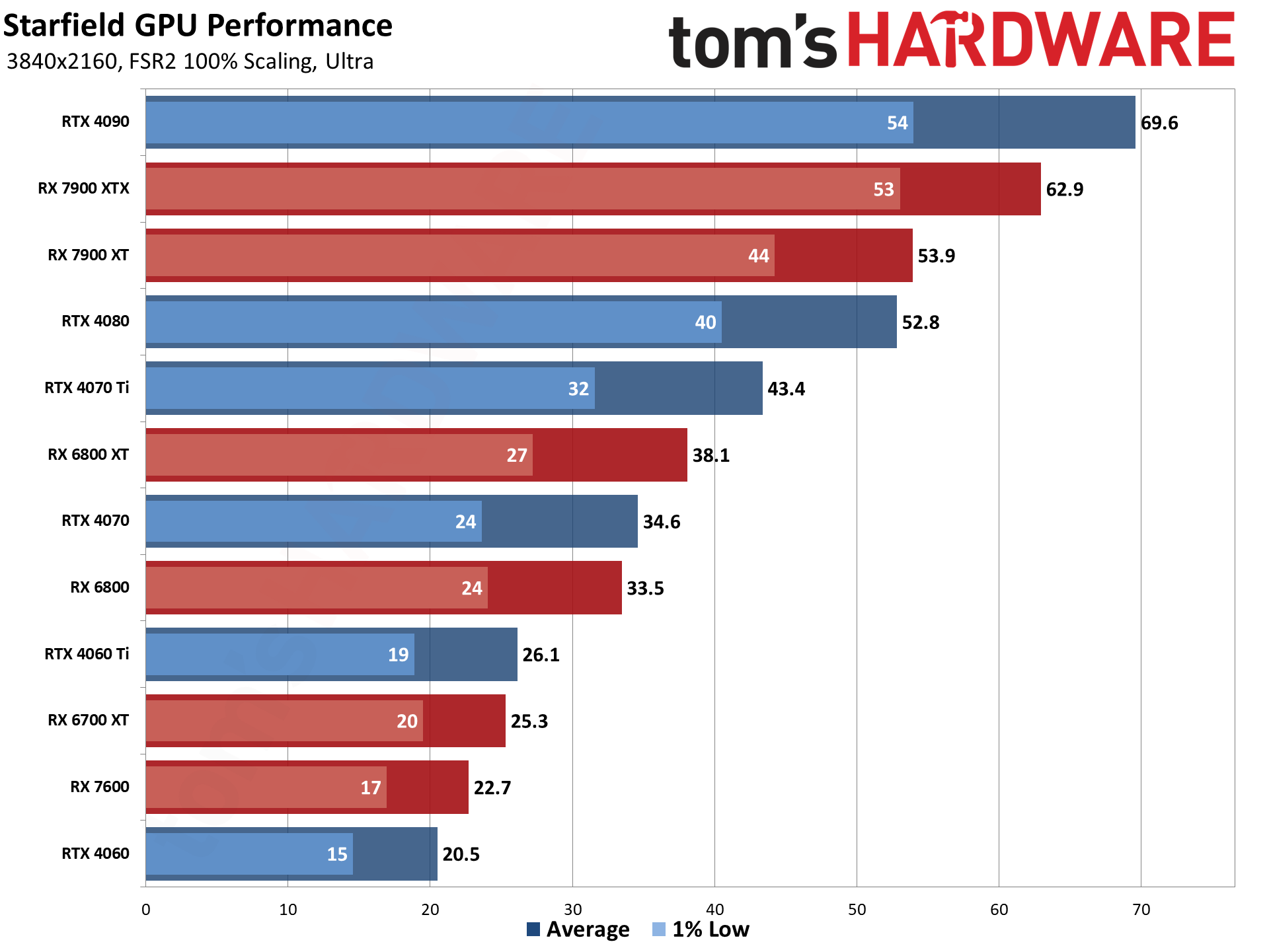

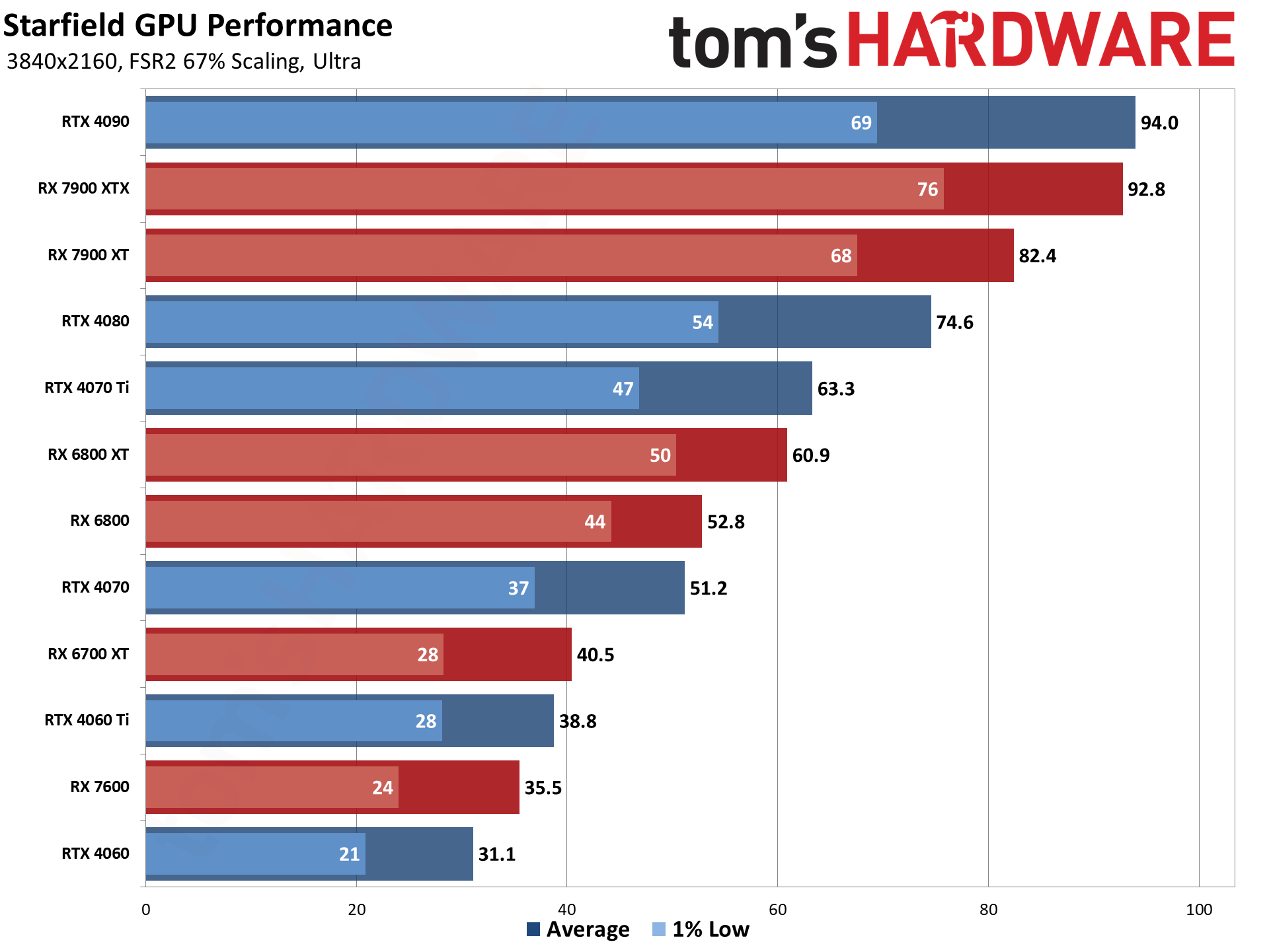

Starfield — 4K Ultra Performance

For native 4K ultra, only the two fastest GPUs from AMD and Nvidia can break 60 fps, and for the first time, Nvidia's top GPU takes the lead. That's not a great showing for Starfield, considering that the 4090 is nearly 30% faster than the 7900 XTX at 4K ultra in our standard benchmarks.

For 30 fps, the RX 6800 gets there on average fps, but minimums are in the mid-20s. You'll need an RTX 4070 Ti to get minimums above 30, or probably the RX 6950 XT will also suffice.

But what if you're okay with some upscaling help?

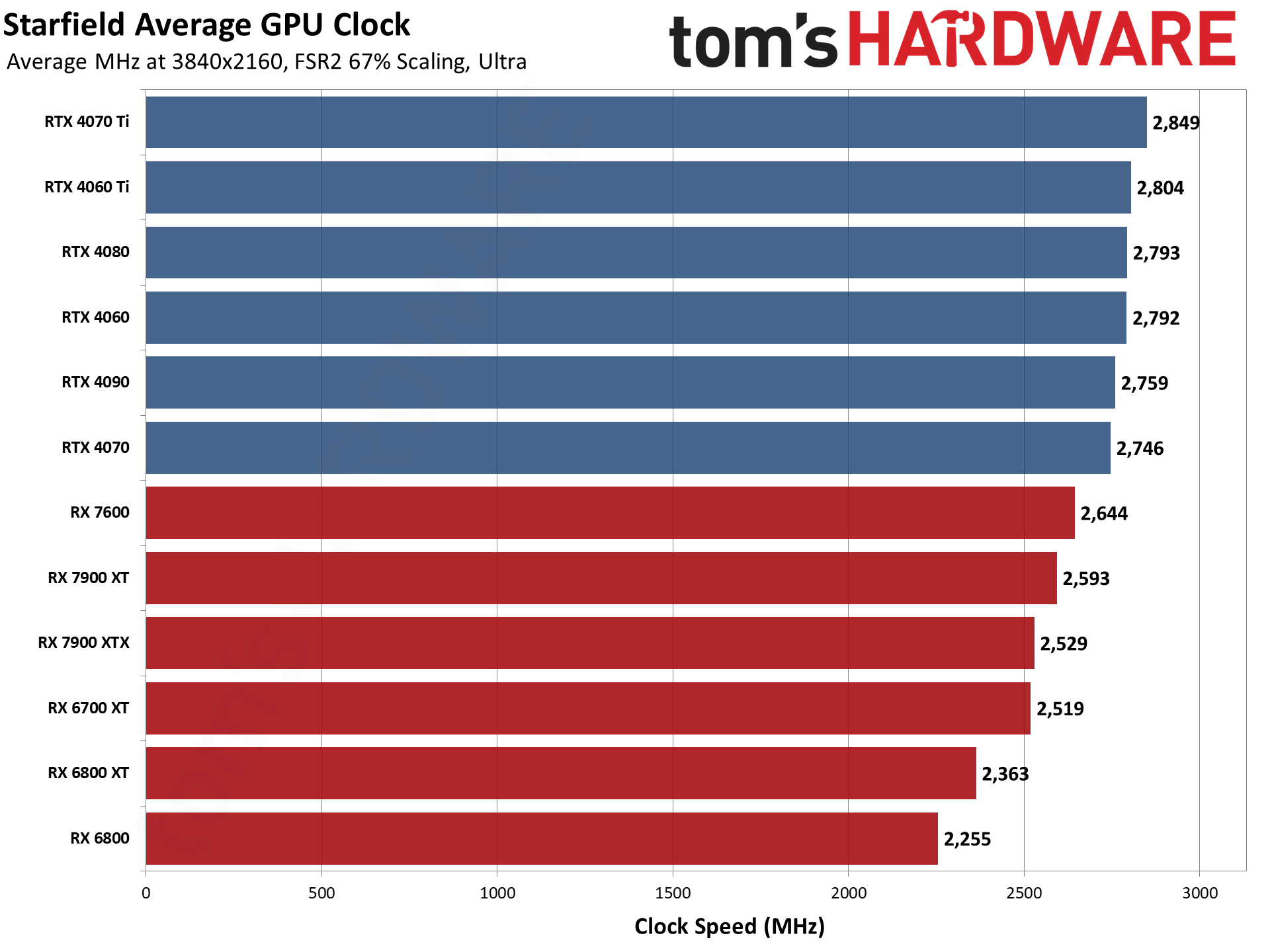

Starfield — 4K Ultra 66% Upscaled Performance

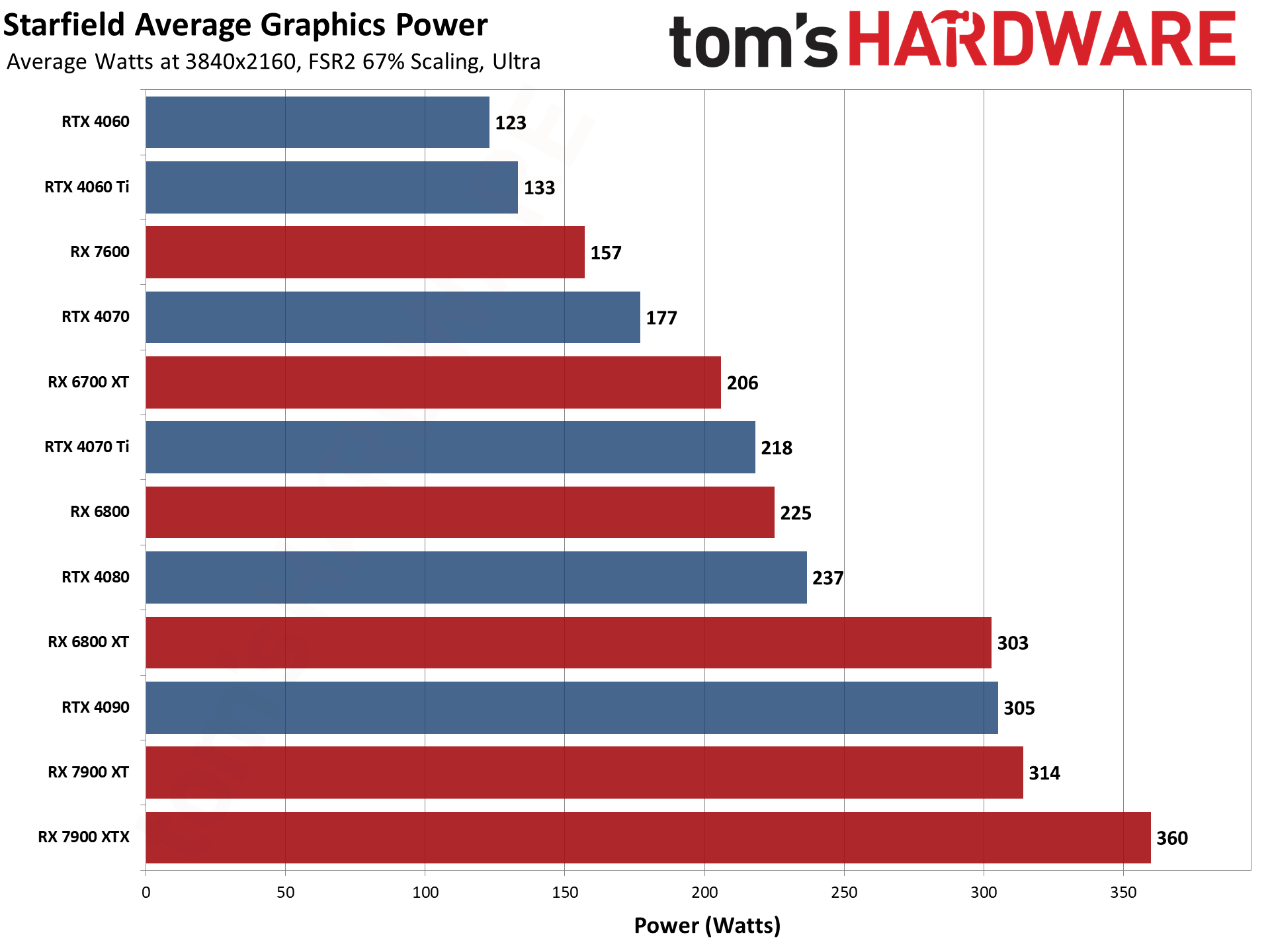

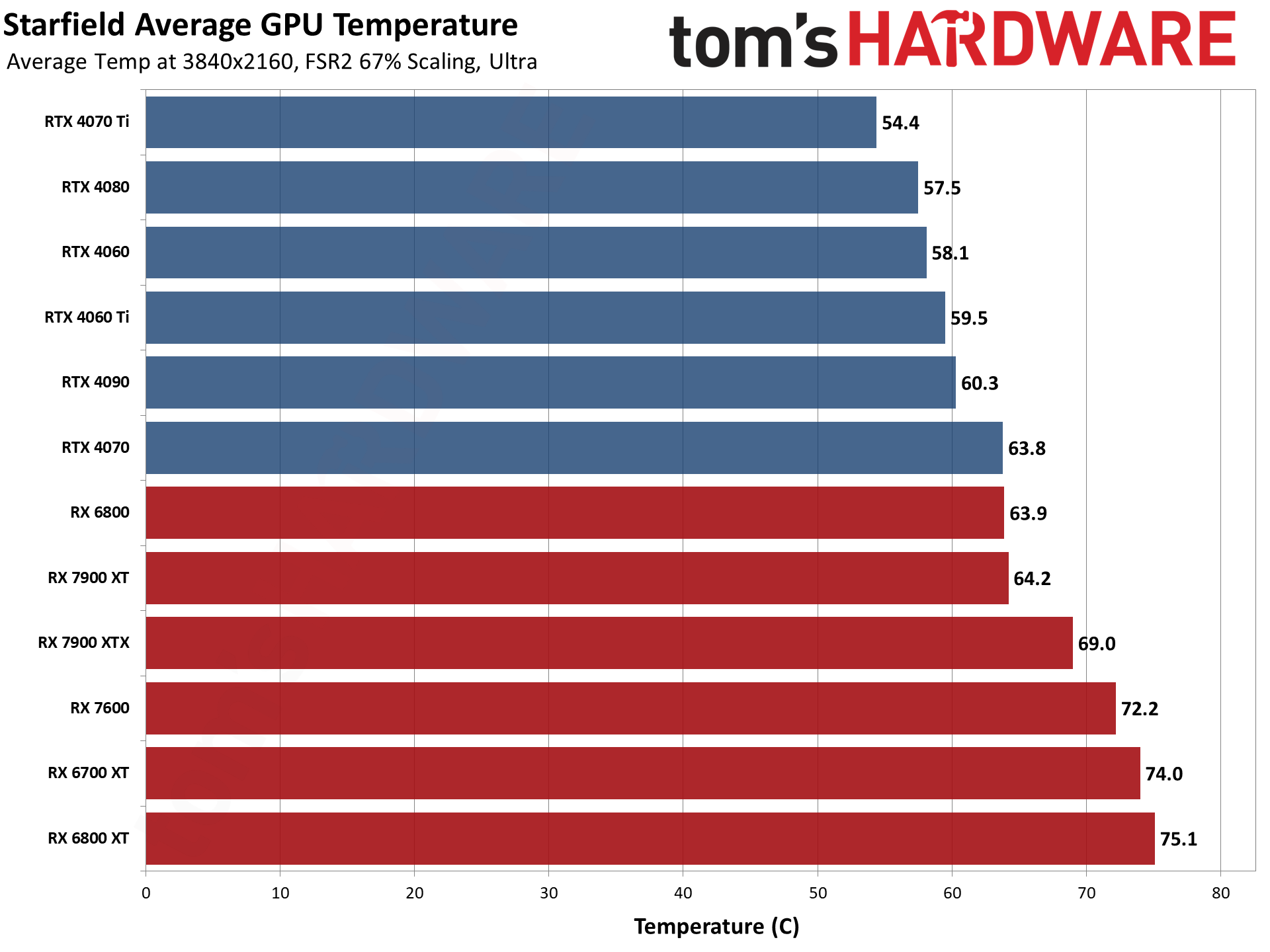

The default resolution scaling factor for the ultra preset is 75%, but we dropped it to 66%. That means we're basically upscaling 1440p to 4K, which is normally what you get from the "Quality" modes from FSR2, DLSS, and XeSS. But Starfield lets you choose any percentage between 50% (4X upscaling) and 100% (no upscaling) — good in some ways, bad in others.
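Here's a short Python sketch of the relationship between the render scale slider and the amount of upscaling involved. It assumes the percentage applies per axis, so the pixel count scales with the square of the setting; the function names are ours.

```python
def pixel_upscale_factor(scale):
    """Ratio of output pixels to rendered pixels for a per-axis scale."""
    if not 0.0 < scale <= 1.0:
        raise ValueError("scale must be greater than 0 and at most 1")
    return 1.0 / (scale * scale)


def render_height(output_height, scale):
    """Internal vertical resolution, rounded to the nearest pixel (assumption)."""
    return round(output_height * scale)


if __name__ == "__main__":
    for scale in (0.50, 0.66, 0.75, 1.00):
        print(
            f"{scale:.0%} at 4K: {render_height(2160, scale)} rendered lines, "
            f"output has {pixel_upscale_factor(scale):.2f}x as many pixels"
        )
```

At 66% the internal height lands at about 1426 lines, just shy of a true 1440p (which would be 66.7%), so "basically upscaling 1440p to 4K" is a fair description.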

If you look up the page at the 1440p native results, you'll see that 1440p upscaled to 4K performs very nearly the same. There's only about a 1% to 5% difference between the two results. That's interesting, as normally FSR2 upscaling is closer to a 15% performance hit in our experience (outside of CPU bottlenecks).

If you don't mind the stuttering, the RTX 4060 technically averages over 30 fps. For just walking around and talking to people, it's actually playable, though it can be problematic in combat. More realistically, you'd want at least the RTX 4070 or RX 6800 to get a steady 30+ fps, but then that pushes the average framerate all the way into the 50s.

But consider this an early look at performance, as we can't help but feel things are going to improve quite a bit in the coming months.

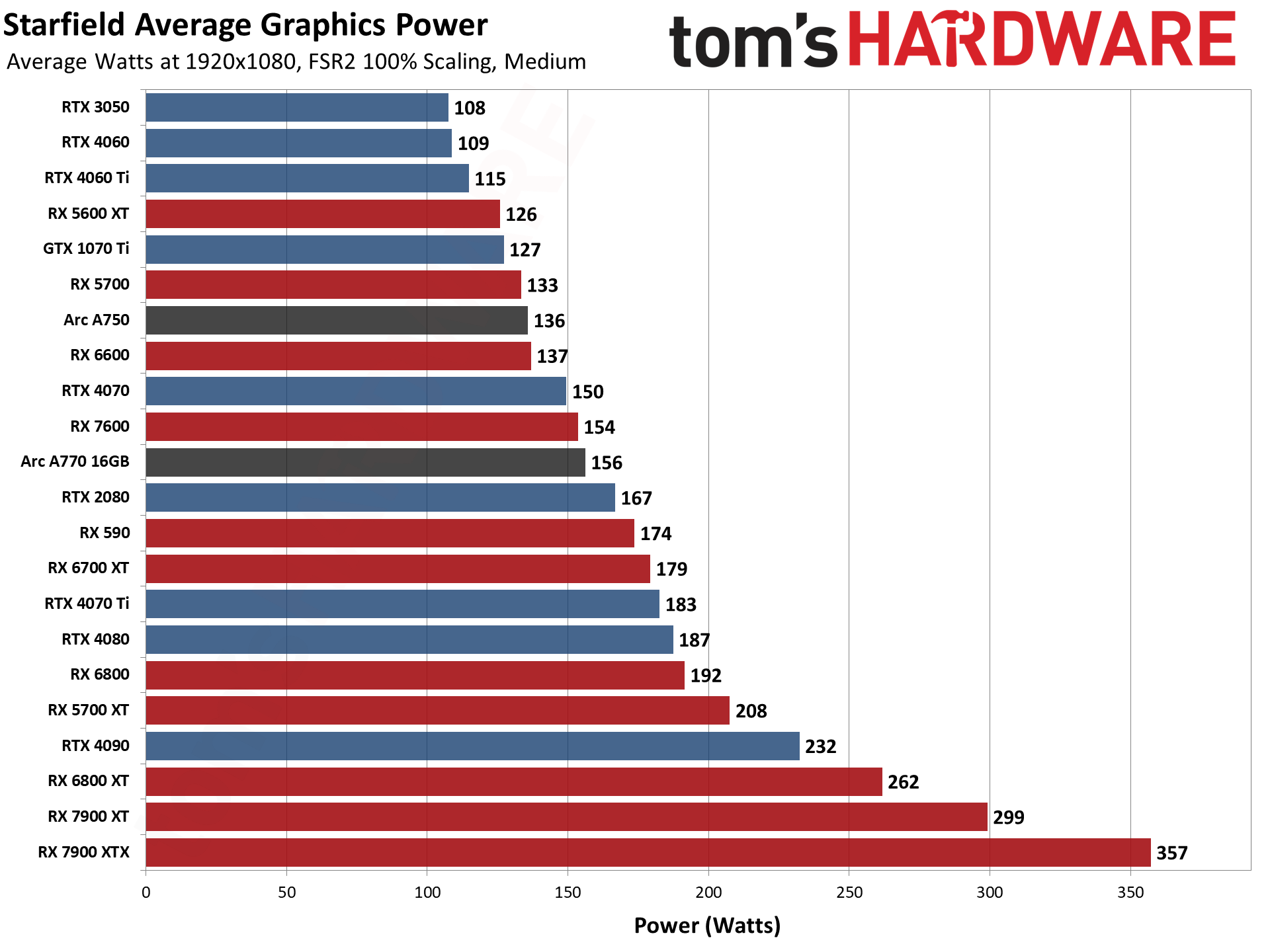

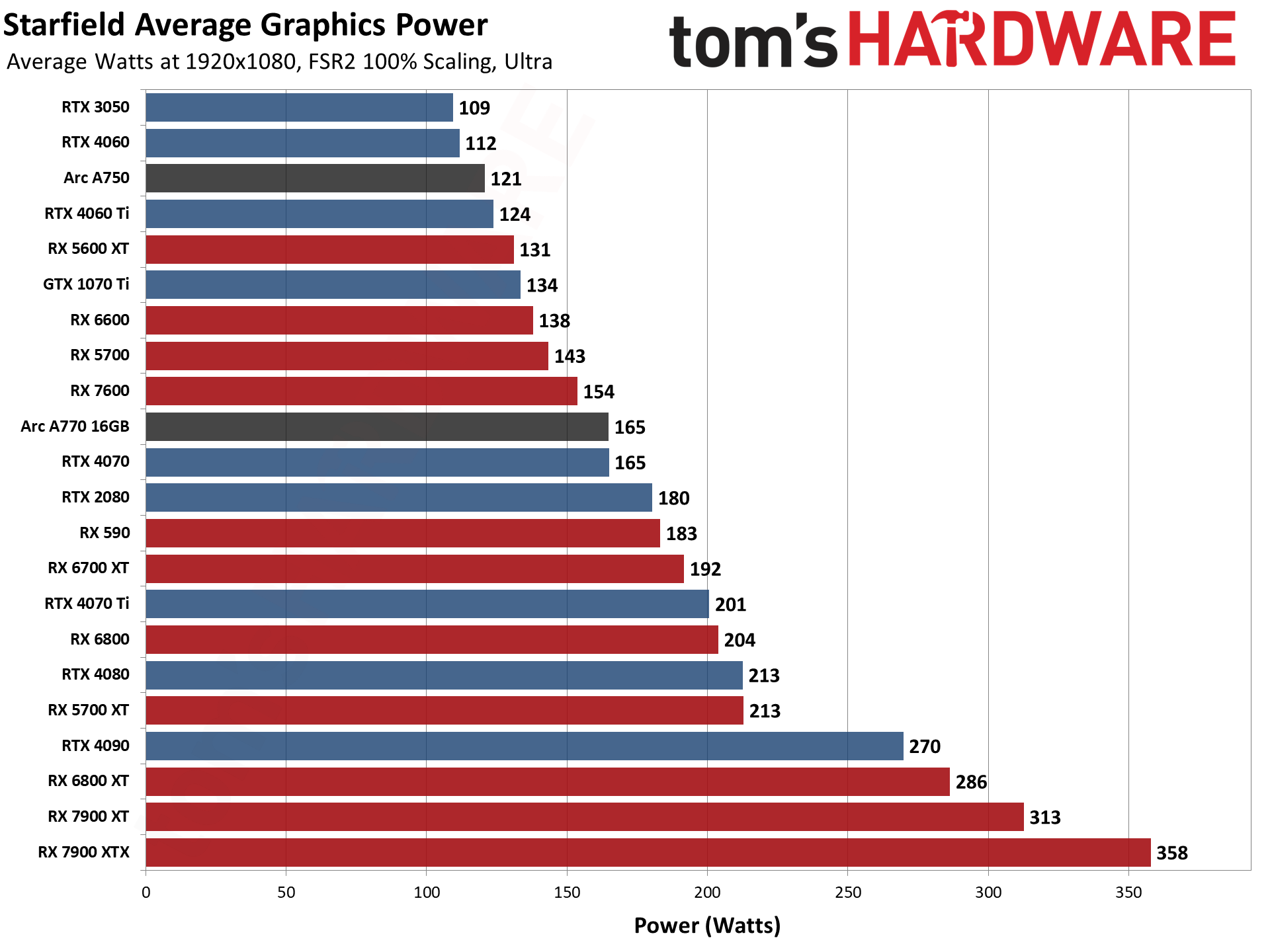

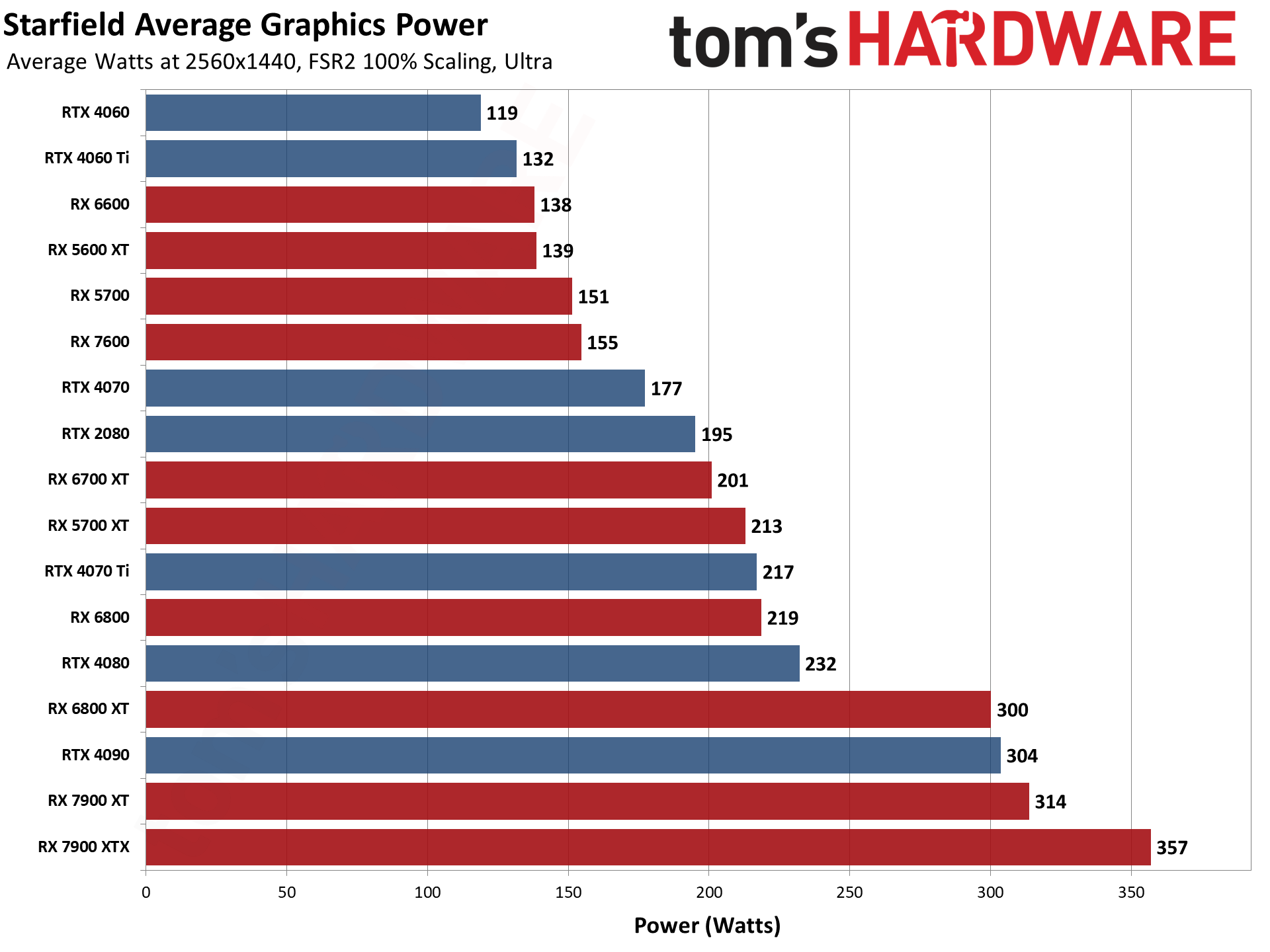

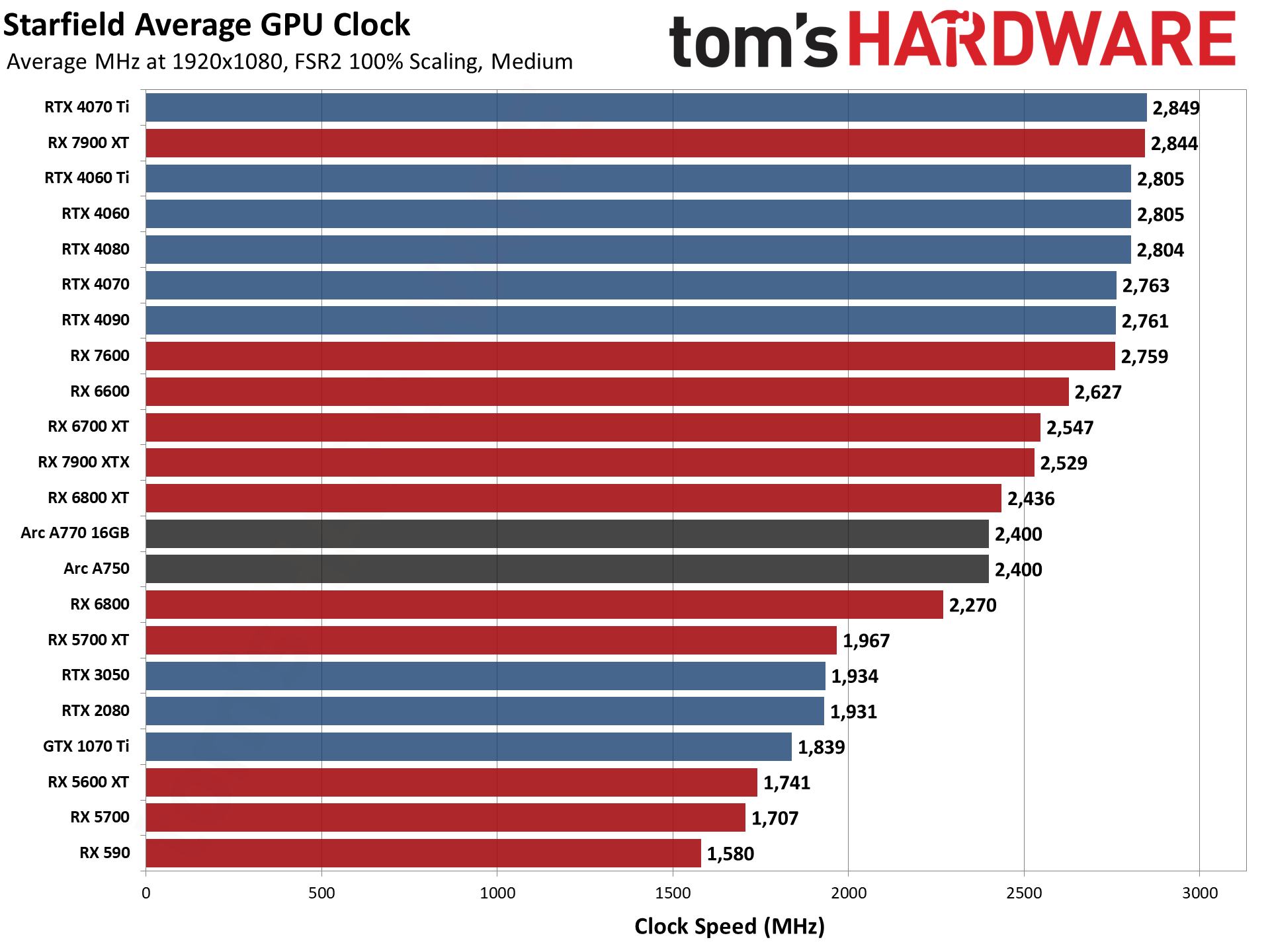

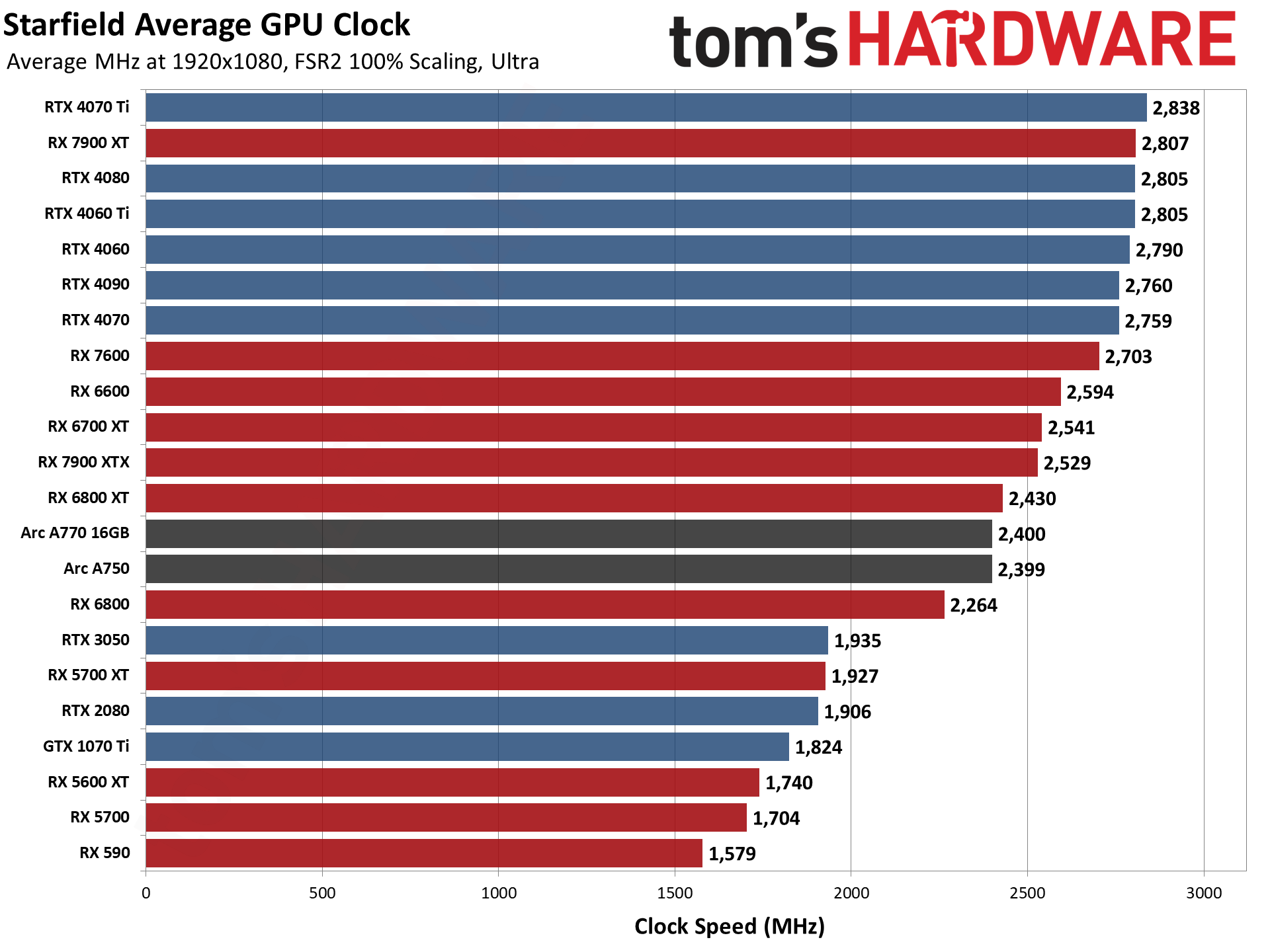

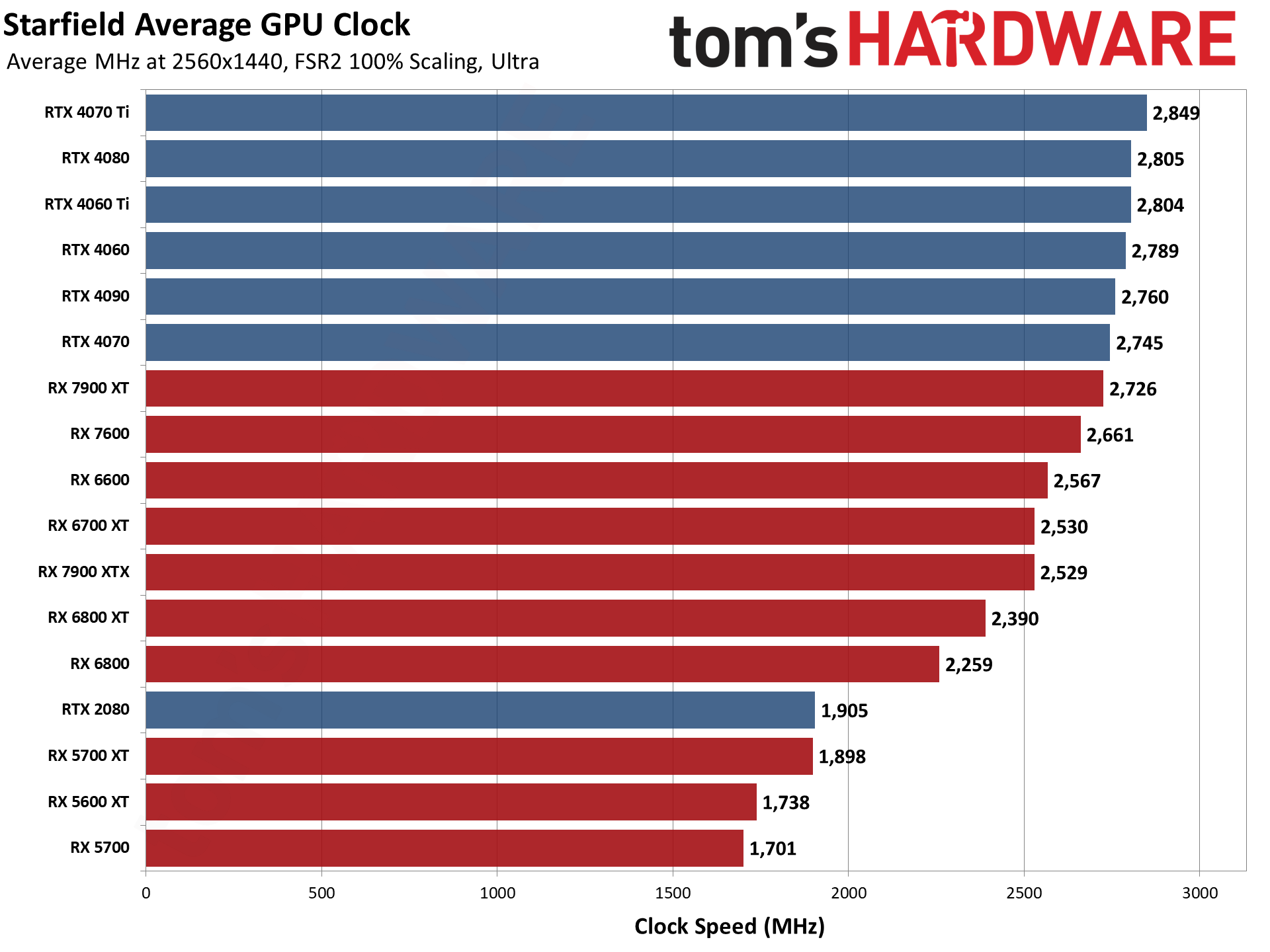

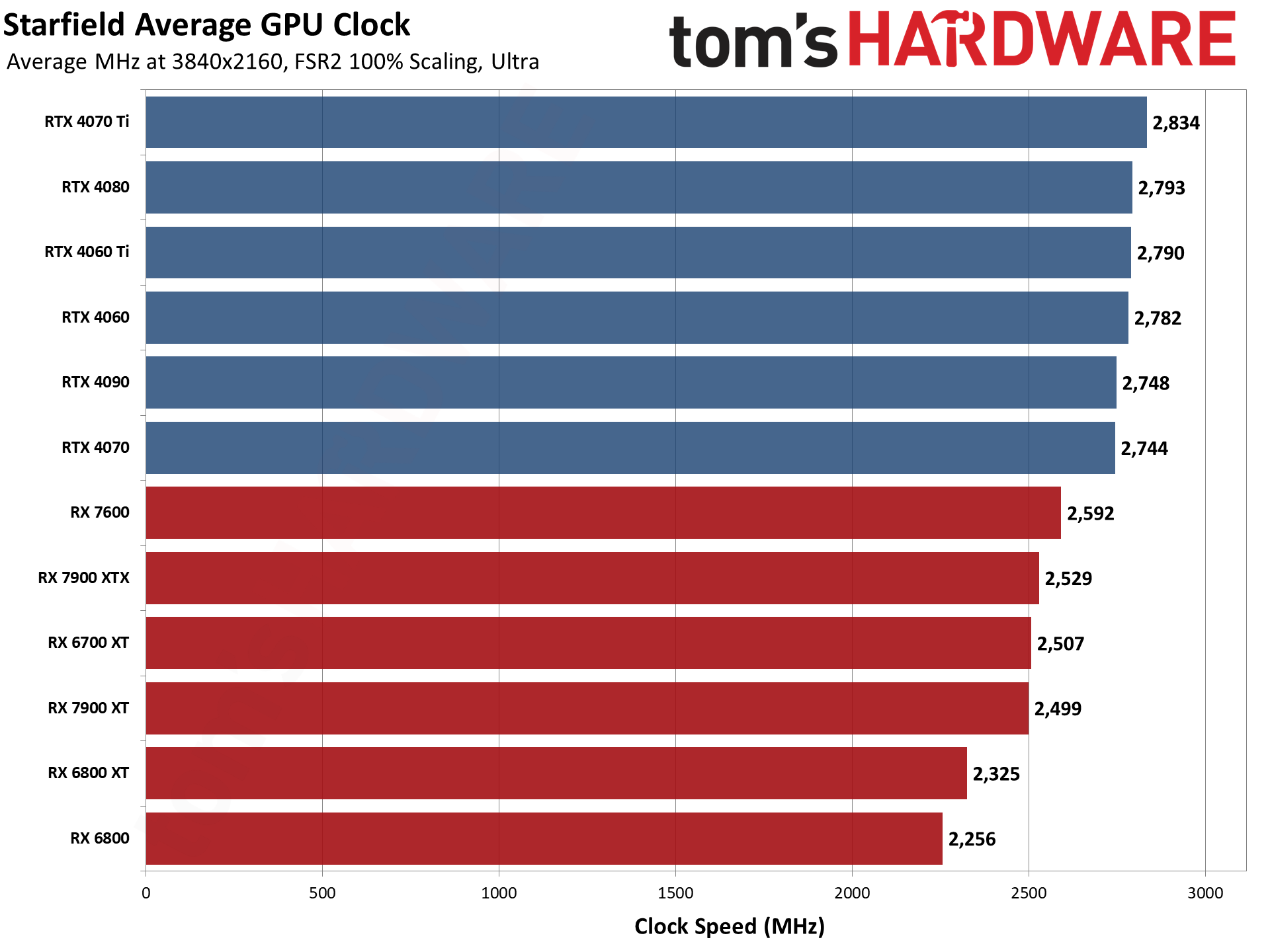

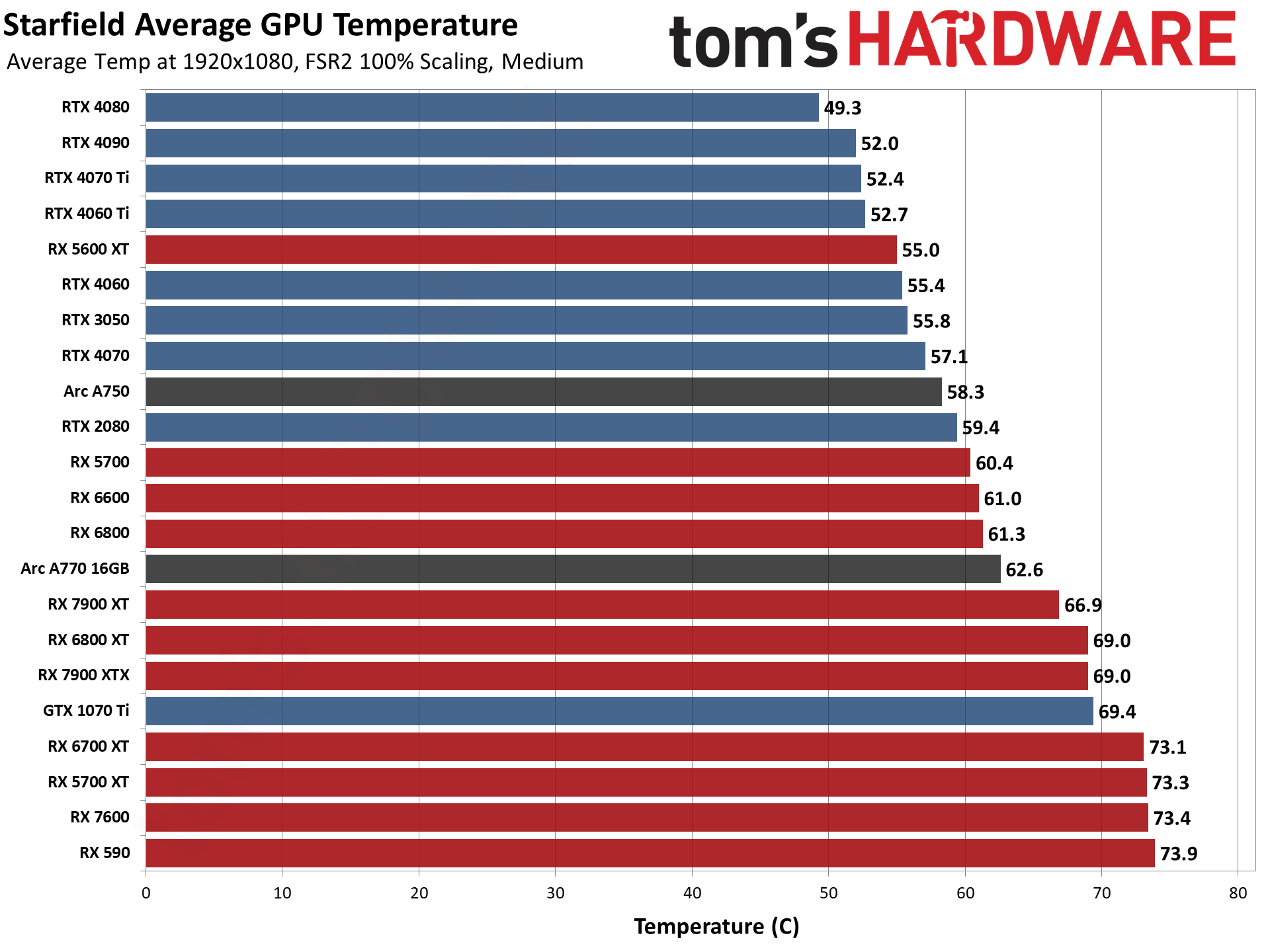

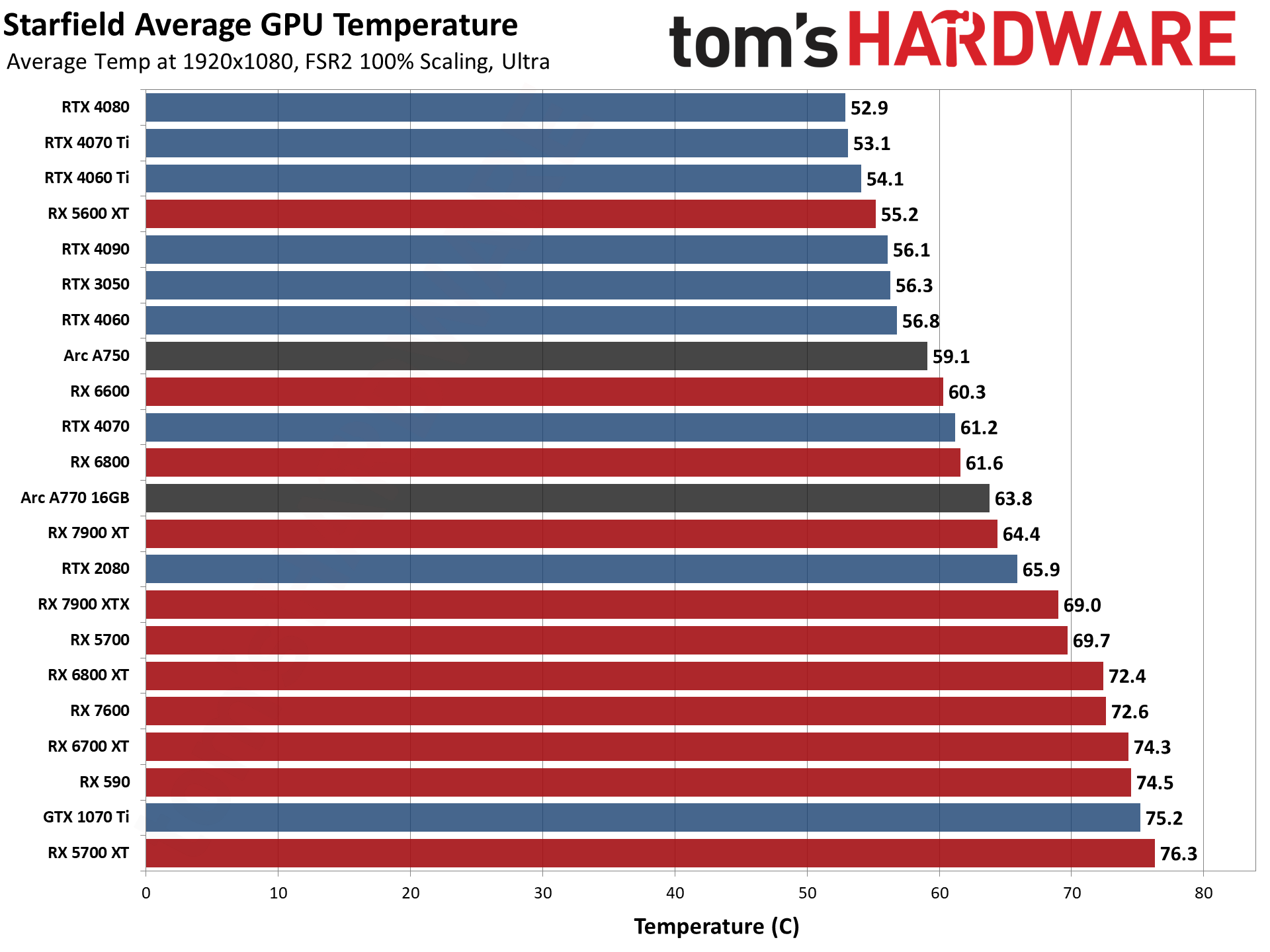

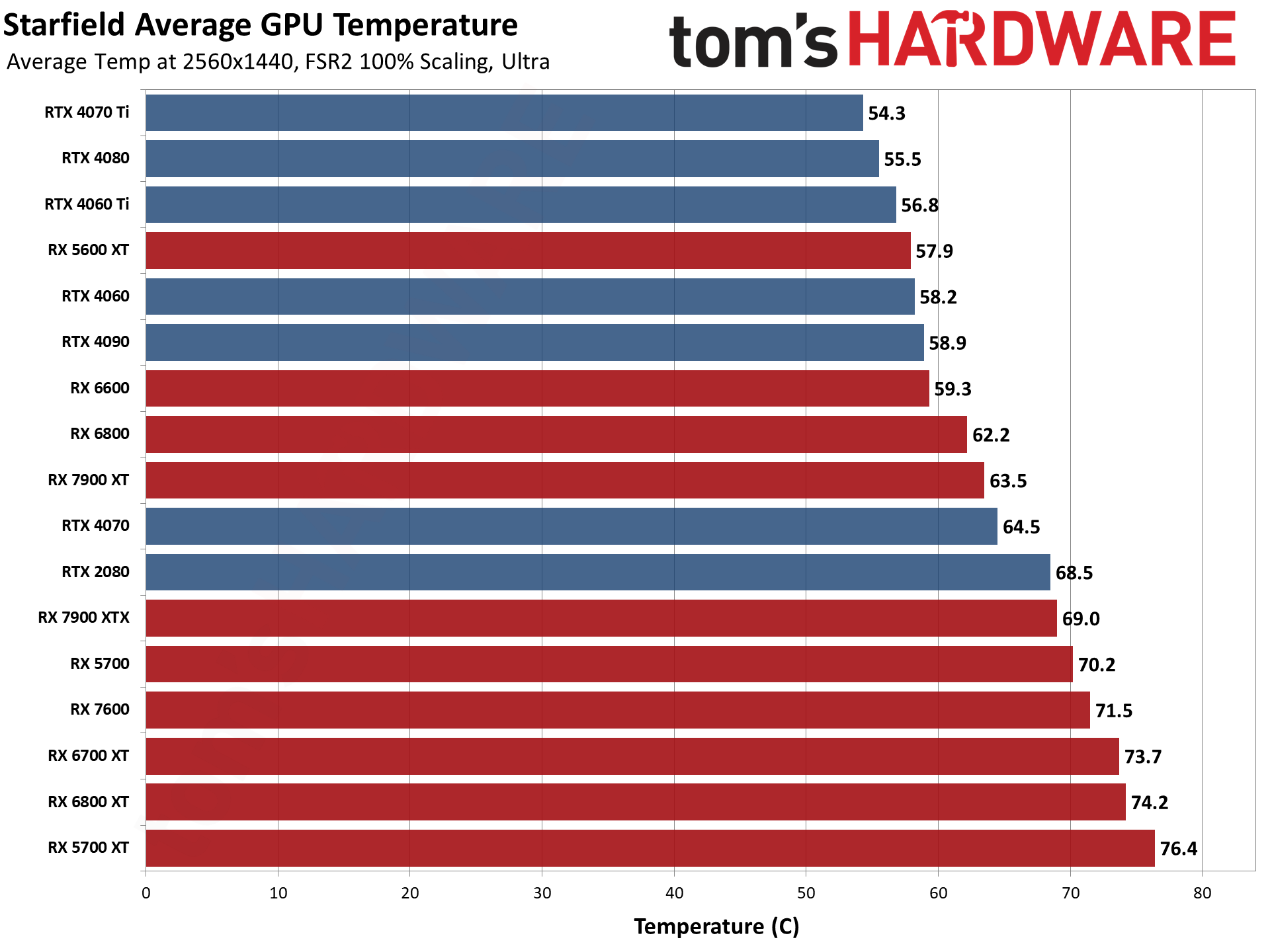

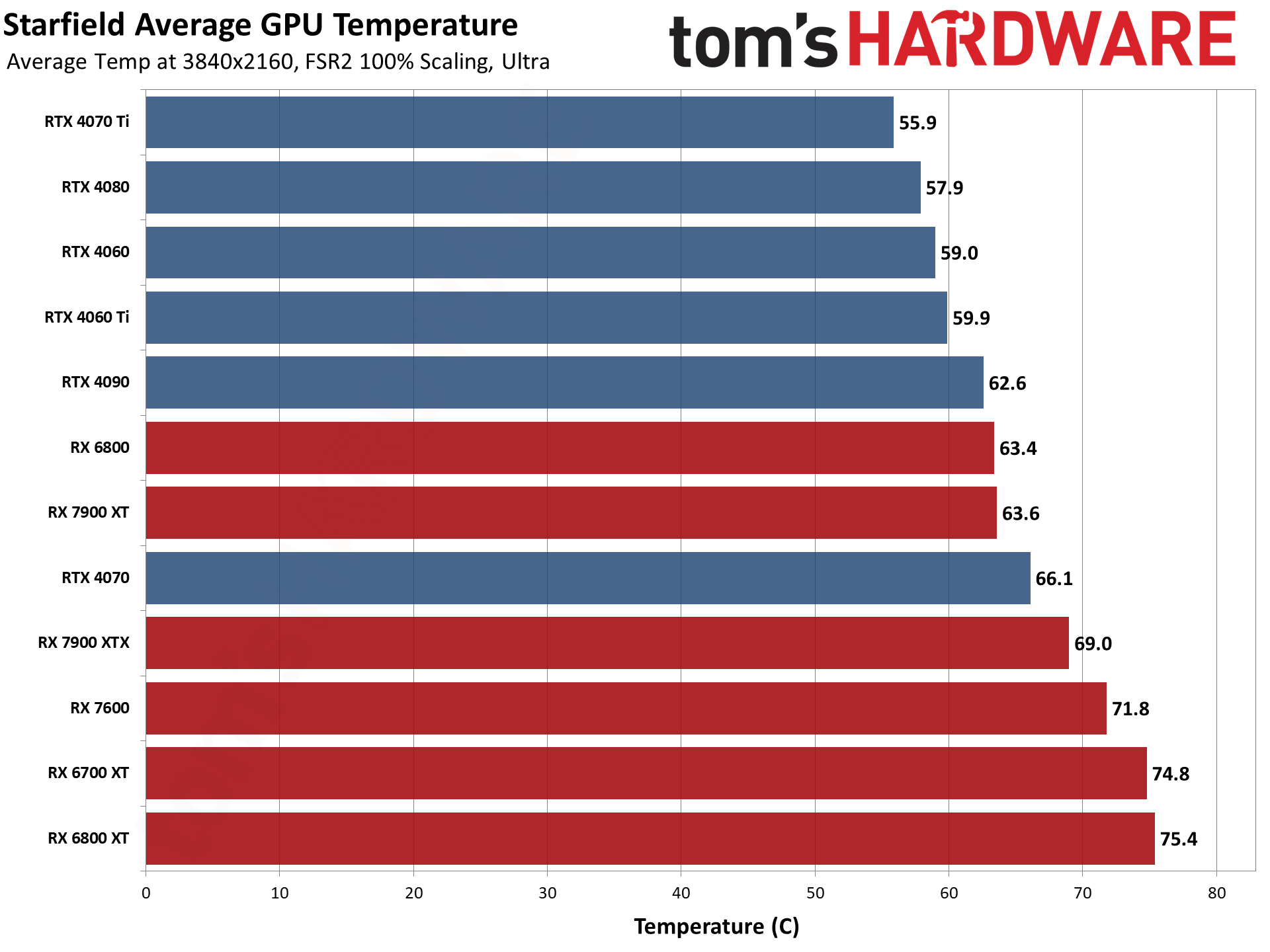

Starfield — Power, Clocks, and Temps

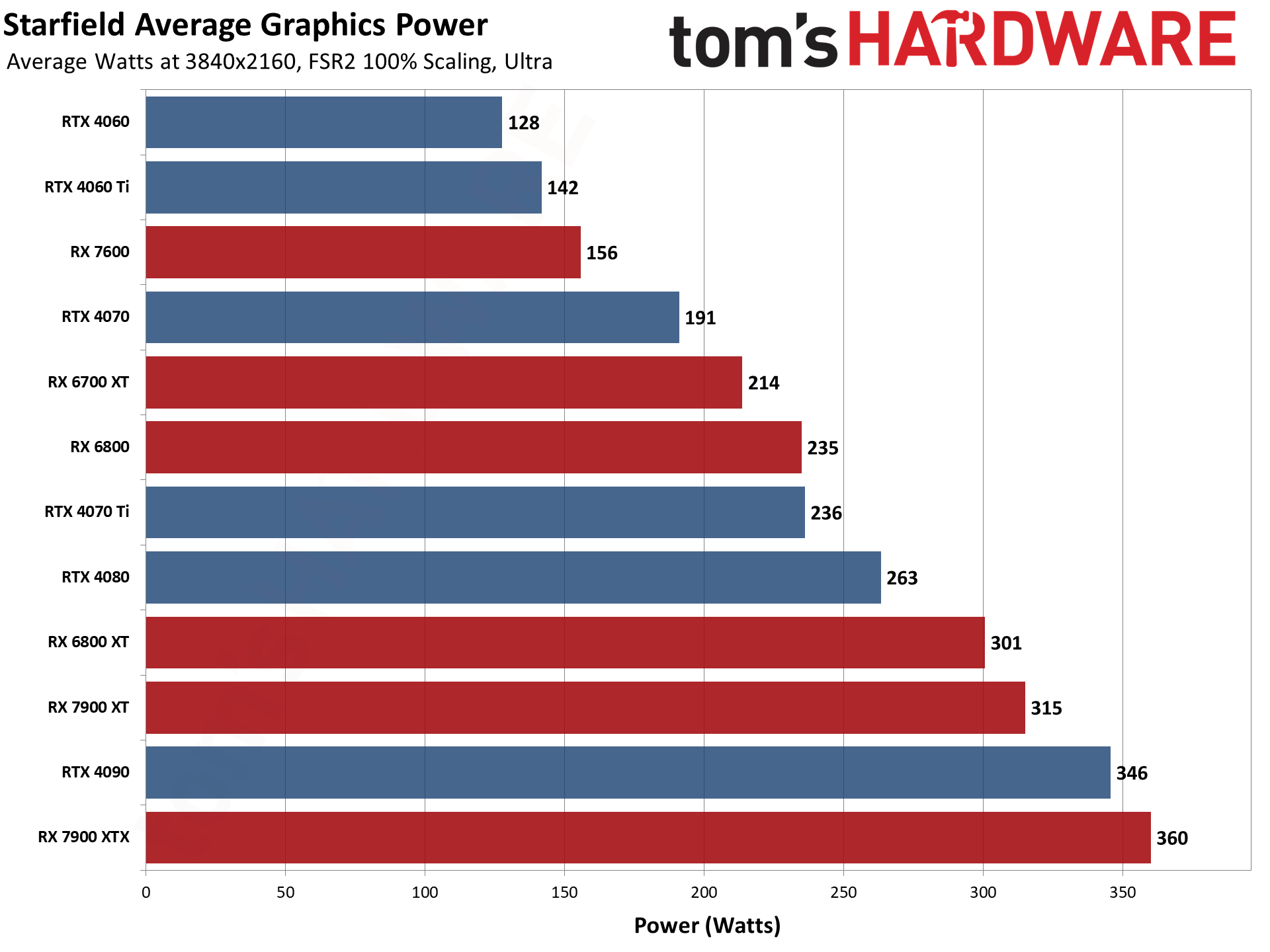

We're providing the power, clock speed, and temperature data from our testing as another point of reference, and the power use in particular is something that warrants a closer look. (We won't analyze the clocks or temperatures, but the charts are there if you want to check them out.)

If a GPU is being properly utilized, its power use should be very close to the card's TGP/TBP (Total Graphics Power / Total Board Power). If something else is holding back performance — the CPU, platform, drivers, or other bottlenecks — power use will typically be far less than the TGP/TBP.

For the AMD GPUs, even at 1080p medium, the cards are at least getting relatively close to their rated power use. As an example, the 7900 XTX draws 357W on average, and it has a 355W TBP rating. Curiously, that perfect match doesn't seem to happen with all the other AMD chips — 7900 XT is rated for 315W but only draws 299W at 1080p medium — but at 1080p ultra and above, team red is making good use of the available power.

What about team green? It's a different story. The RTX 4090 has a 450W TGP rating, but even at 4K ultra it's only drawing 346W, or as little as 232W at 1080p medium. The RTX 4080 is rated for 320W TGP but draws between 187W and 263W in our testing. The same goes for most of the other Nvidia cards, and only the lower end GPUs ever manage to hit their power limits, like the RTX 4060 (115W TGP) that ranges from 109W to 128W — that's a factory overclocked Asus card, incidentally, which has a 125W TGP.

Intel Arc GPUs also have issues in hitting their rated power use. The A750 and A770 cards both have a 225W TBP. At 1080p medium, the A750 uses 136W while the A770 16GB uses 156W. Bump up to ultra settings and the A750 drops to 121W while the A770 increases to 165W. VRAM use does seem to be an issue right now on the 8GB card, though that much VRAM isn't a problem on any of the other GPUs we tested, and even 6GB cards did okay.

Whether it's drivers or something in the game engine, what's clear right now is that Nvidia and Intel GPUs are being underutilized in some fashion. And I'm not talking about measured GPU usage, which is 97% or higher for every GPU. I'm just saying that falling well short of the rated TGP typically indicates a bottleneck elsewhere in the system or code. If that gets fixed, it's a safe bet that overall performance will increase quite a bit as well.
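As a simple way to see the pattern, here's a Python sketch that compares measured draw against rated board power using the 1080p medium figures quoted above. The 90% cutoff for calling a card "underutilized" is an arbitrary threshold we picked for illustration, not an established rule.

```python
# (GPU, rated board power in W, average measured draw in W at 1080p medium),
# all taken from the figures quoted in this section.
POWER_1080P_MEDIUM = [
    ("RX 7900 XTX", 355, 357),
    ("RX 7900 XT", 315, 299),
    ("RTX 4090", 450, 232),
    ("Arc A750", 225, 136),
    ("Arc A770 16GB", 225, 156),
]

# Hypothetical cutoff: below this fraction of rated power, flag the card.
UNDERUTILIZED_BELOW = 0.90


def power_utilization(rated_w, measured_w):
    """Measured draw as a fraction of the rated TGP/TBP."""
    return measured_w / rated_w


def underutilized(cards, threshold=UNDERUTILIZED_BELOW):
    """Names of cards drawing less than `threshold` of their rated power."""
    return [
        name
        for name, rated, measured in cards
        if power_utilization(rated, measured) < threshold
    ]


if __name__ == "__main__":
    for name, rated, measured in POWER_1080P_MEDIUM:
        ratio = power_utilization(rated, measured)
        print(f"{name:<14} {measured:>3}W of {rated}W = {ratio:.0%}")
    print("Below threshold:", ", ".join(underutilized(POWER_1080P_MEDIUM)))
```

With these numbers, both AMD cards sit at 95% or more of their rating, while the RTX 4090 and both Arc cards fall well below the cutoff.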

Starfield — Settings and Image Quality

Starfield offers a decent selection of graphics settings to adjust, though there are some oddities. For one, there's no full screen mode. You can do borderless windowed, or windowed. If you want to play at 1080p on a 4K monitor, you'll be best off changing your desktop resolution to 1080p before starting the game — that's what we did for our testing.

Another option that's missing: Anti-aliasing. FSR2 provides that, assuming it's enabled, but it's pretty clear that Starfield uses some form of TAA even if FSR2 isn't turned on. There's just no option to disable it exposed in the game menus. (You can try hacking the StarfieldPrefs.ini file, located in "Documents\My Games\Starfield" but that doesn't show any AA settings either.)
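If you want to check the file yourself, here's a minimal Python sketch that scans an INI file for keys that look related to anti-aliasing. The keyword list is a guess on our part, the default path assumes your Documents folder lives directly under your home directory, and we're assuming the file follows the usual `[Section]` and `key=value` layout.

```python
import configparser
from pathlib import Path

# Assumed default location, based on the folder mentioned above.
DEFAULT_INI = (
    Path.home() / "Documents" / "My Games" / "Starfield" / "StarfieldPrefs.ini"
)


def find_settings(ini_path, keywords=("aa", "alias")):
    """Return (section, key, value) for keys containing any keyword.

    The keywords are illustrative guesses, not known Starfield setting names.
    """
    path = Path(ini_path)
    if not path.exists():
        raise FileNotFoundError(path)

    # strict=False tolerates duplicate keys; interpolation=None leaves any
    # '%' characters in values untouched.
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.optionxform = str  # keep the original key capitalization
    parser.read(path, encoding="utf-8")

    hits = []
    for section in parser.sections():
        for key, value in parser.items(section):
            if any(word in key.lower() for word in keywords):
                hits.append((section, key, value))
    return hits


if __name__ == "__main__":
    if DEFAULT_INI.exists():
        matches = find_settings(DEFAULT_INI)
        print(matches if matches else "No AA-related keys found.")
    else:
        print(f"No file at {DEFAULT_INI}")
```

An empty result would line up with what we saw when looking through the file by hand.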

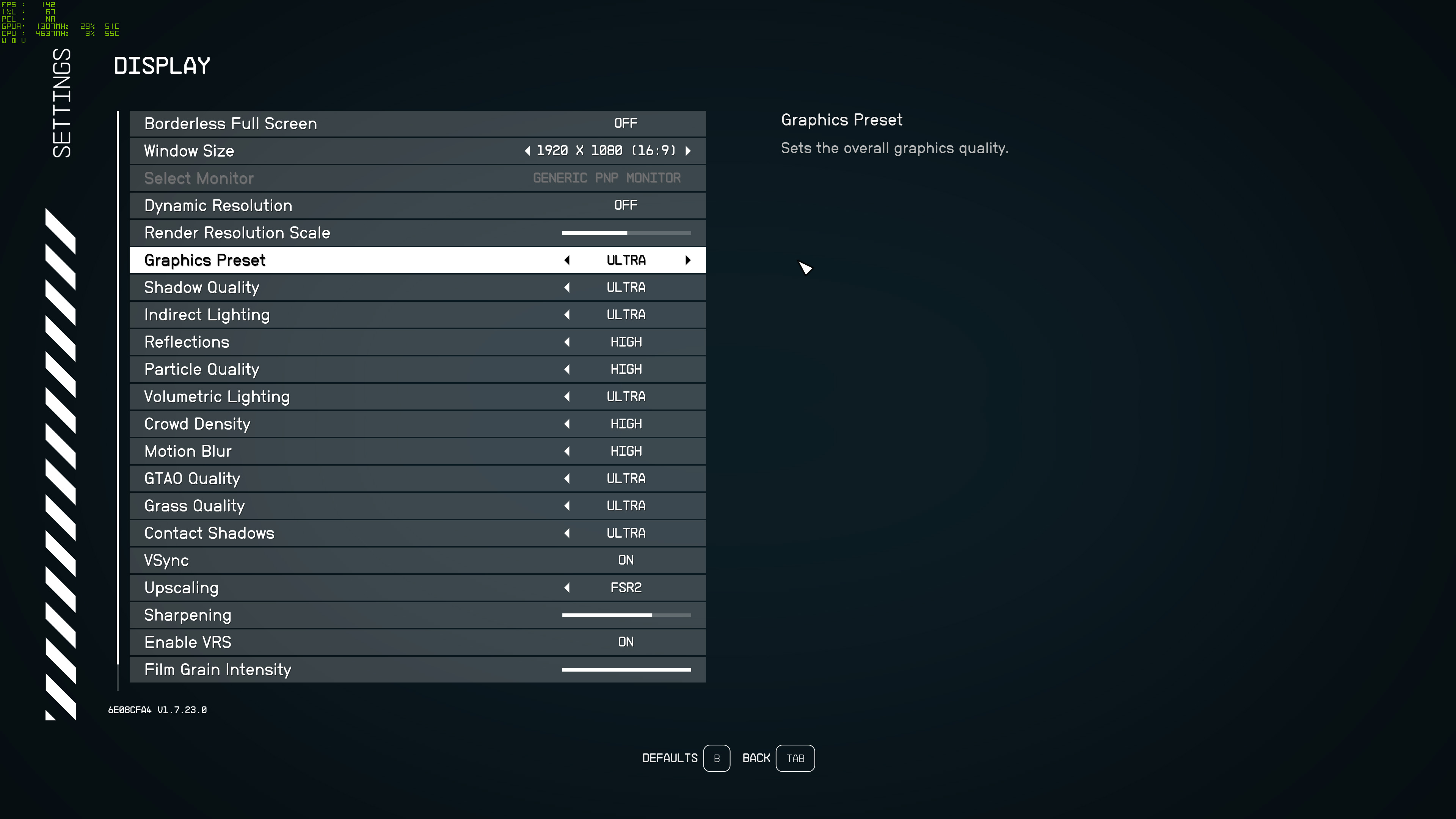

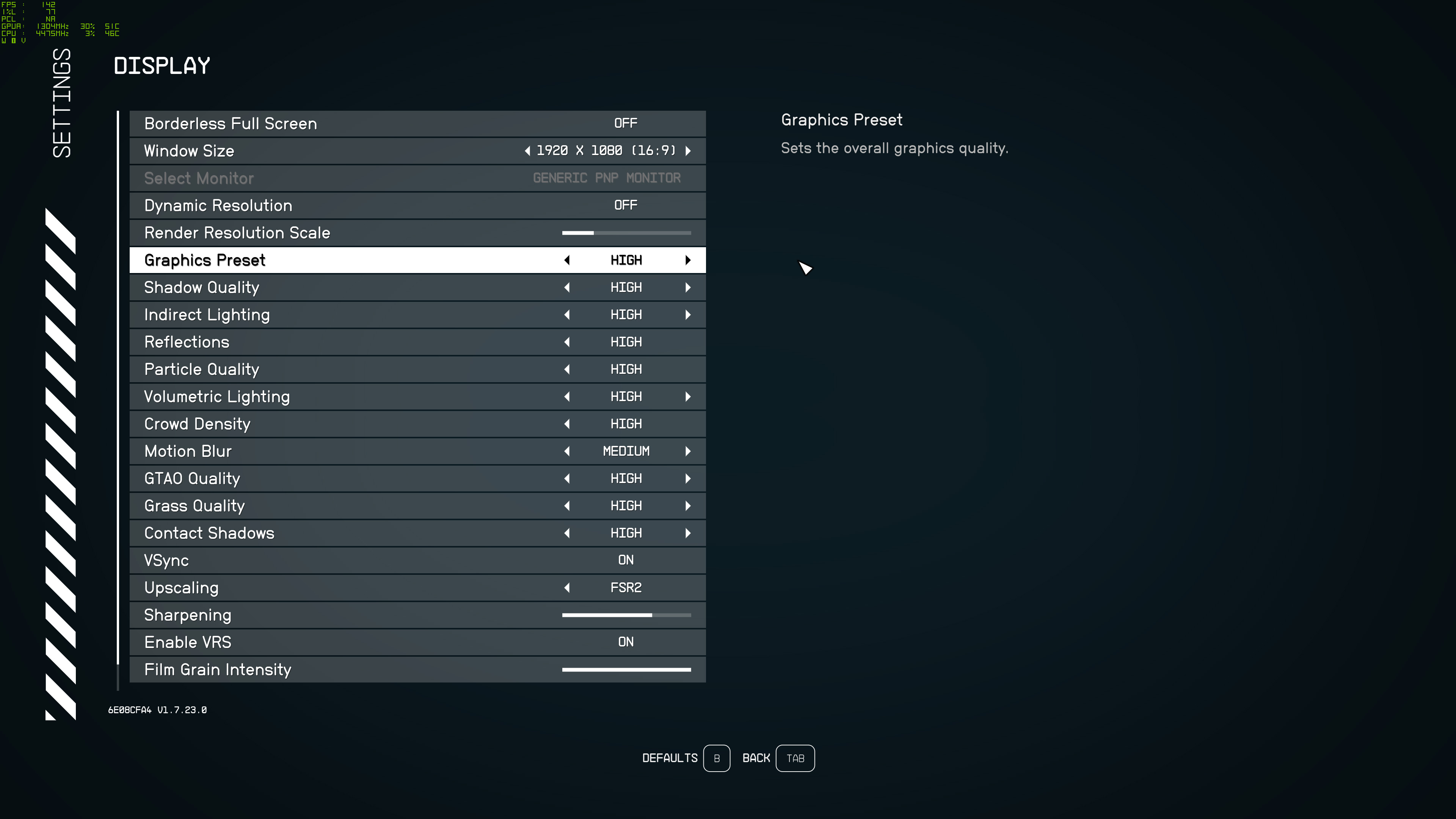

Anyway, there are four graphics presets (plus custom): Low, Medium, High, and Ultra. All of those default to having some level of upscaling, with FSR2 as well as Dynamic Resolution turned on. For our testing above, we disabled Dynamic Resolution and set the Render Resolution Scale to 100%, unless otherwise noted (i.e., at 4K with 66% scaling).

Despite the number of settings (twelve, not counting things like Vsync), a lot of them don't impact performance all that much. Going from ultra (maximum) settings everywhere to low (minimum) settings everywhere, but keeping the 100% scaling, performance only improved by about 35%. That was on an RX 6800, but other GPUs show similar gains based on our testing.

Going through the individual settings and turning each one down to minimum, we also didn't see any more than about a 5 percent improvement in performance for most of them. All twelve taken in aggregate make more of a difference, but if you have a card that can run decently at medium settings, it can probably do ultra settings nearly as well. Or put another way, you can turn quality down from Ultra to Medium and only gain a bit of performance, with a similarly slight difference in visual fidelity.

There's one exception to the above: FSR 2 "upscaling." Starfield allows you to enable FSR2 even when the render resolution is set to 100%. This is akin to Nvidia's DLAA, where you get the higher quality temporal AA provided by FSR 2 while still rendering at native resolution. If you switch from FSR 2 to CAS (Contrast Aware Sharpening), which appears to use TAA for anti-aliasing, you'll get about a 15% (give or take) net gain in performance. That applies to all GPUs, and older cards might gain even more, but we do like the overall AA quality of Native FSR 2 and so left it enabled for our testing.

Visually, the biggest difference comes from turning down the Shadow Quality, GTAO Quality, and perhaps Reflections and Volumetric Lighting. As for actual FSR 2 upscaling (meaning, not 100% render resolution), there's no question that it reduces the overall sharpness a bit, even at 75%. At 50%, the artifacts and blurriness become very visible. That's not to say you shouldn't use it, but it's definitely not just "free performance."

Starfield — Initial Performance Thoughts

I've been hunkered down benchmarking Starfield, so I haven't played the game that much yet. What I can say is that general impressions of the game are okay, though the start is a bit sluggish (typical Bethesda RPG, then, in other words). Metacritic shows an 88 aggregate score for the PC version, so critic reviews are quite high from most places. Our sister site, PC Gamer, scored it 75, and to me, there are similarities to Mass Effect Andromeda, Cyberpunk 2077, and even No Man's Sky: there's a huge game world (universe) to explore, but so far, I'm not feeling that compelled to do so.

Performance can be fine if you have the right high-end hardware. The RX 7900 XTX hums along at just over 60 fps in my testing, and I'm sure there are many areas where performance will be higher. Turn on a modest level of upscaling with FSR 2, and you can break 90 fps. But for many people with less, *cough*, stellar hardware, it will be a rough takeoff.

Let's also note that, graphically speaking, Starfield doesn't even look that impressive. It's not bad, but at least the parts of the game I've seen aren't pushing new levels of graphics fidelity. Visually, you'd think the game would easily be pushing well over 100 frames per second at maxed-out settings. But this is Bethesda, who had a hard 60 fps cap in Skyrim for ages, and things broke if you exceeded that rate. Maybe we should just be happy Starfield at least attempts to let people make the most of 144 Hz monitors for a change?

Nvidia's RTX 4090 surpasses the RX 7900 XTX, but only at 4K — it's basically a tie at 1440p, and AMD gets the win at 1080p. AMD stated recently that it doesn't prevent its partners from implementing competing technologies like DLSS. But it does have those partners prioritize AMD technologies and hardware. That's pretty common for games that are promoted by AMD or Nvidia, but the discrepancies in performance between GPUs certainly warrant further scrutiny.

In the nine rasterization games we normally use for benchmarking new GPUs, the RTX 4090 leads the 7900 XTX by 30% on average at 4K, 17% at 1440p, and 12% at 1080p. Even Borderlands 3, a game known to heavily favor AMD GPUs — and another game that AMD promoted at launch — still has the 4090 beating the 7900 XTX by 13% at 1080p, 19% at 1440p, and 24% at 4K. To say that Starfield's performance is an outlier right now would be putting it mildly.

Nvidia will very likely provide further optimizations via its drivers to bring Starfield performance up now that the game has been released. Game patches will hopefully also help over the coming weeks and months. Intel needs to fix some things in its drivers as well. But right now, at every meaningful level of comparison, Starfield heavily favors AMD.

Perhaps the optimizations for console hardware are just extra beneficial here, or perhaps Bethesda's engine just works better with AMD GPUs. More likely is that the AMD partnership has resulted in poorly optimized code for non-AMD hardware, and Bethesda didn't put much effort into optimizing performance on other GPUs. Considering about 80% of PC gamers run Nvidia GPUs, that's a big chunk of people that just got snubbed.

If you're in the market for a new GPU and wondering what will run Starfield best, the current answer is AMD. Shocking, we know! Whether that will change in the future remains to be seen. Maybe Starfield will also get native DLSS and XeSS support as well, but let's not count those chickens until they hatch. Conveniently, if you want to buy a new AMD graphics card, you can even get Starfield for free. Happy starfaring!

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

mhmarefat This is a complete disgrace for both Bethesda and AMD. I hope all the money they agreed on was worth it (spoiler: it won't be). Unless some last second patch before the game is released is applied for nvidia and intel users, people are not going to forget this for years to come. GJ AMD and Bethesda for disgracing yourselves.

You have a something better than Fallout 4 graphics running 30 fps 1080p on 2080?! What is PC gaming industry reduced to?

Dr3ams As I have written in other threads, my mid-range specs (see my signature) play Starfield on ultra at 1440p smoothly. No glitches or stuttering whatsoever. I did have to dial the Render Resolution Scale down to high (which is 62%), because of some shadow issues. In New Atlantis, wandering outdoors, the FPS averaged between 45 and 50. The FPS is higher when you enter a building that requires a load screen. I have tested the game in cities, combat and space combat...again, no glitches or stuttering.

There are some clipping issues that should be addressed by Bethesda. I also have some observations about things that should be changed in future updates.

Third person character movement looks dated. I play a lot of Division 2 (released 2019) and the third person character movement is very good.

Taking cover behind objects in Starfield is poorly thought out.

Ship cargo/storage needs to be reworked. In Fallout 4, you can store stuff in just about every container that belongs to you. Inside the starter ship, Frontier, all the storage spaces can't be opened. The only way to store stuff is by accessing the ship's cargo hold through a menu. No visual interaction whatsoever. I haven't built my own ship yet, so maybe this will change later.

Ship combat needs some tweaking. It would be nice to have a companion fly the ship while the player mounts a side gun.

Movement. The same issue that Fallout has is showing up in Starfield. Walking speed is OK, but running in both the speeds provided is way too fast. This is especially frustrating when trying to collect loot without slow walking through a large area. In settings, there should be a movement speed adjustment. The only way I know to adjust this, is through console commands.

I do love this game though and will be spending a lot of hours playing it. :p

Makaveli lol its nice to have a game optimized for your hardware at launch we usually have the opposite on the red team.

People need to relax it will improve with game patches and driver updates.

as per TPU forum.

"DefaultGlobalGraphicsSettings.json" you get the following predetermined graphics list.

{"GPUs":

}

-Fran- Thanks a lot Jarred for this set of tests!

If I may: would you please test CPU core scaling and memory scaling (latency and bandwidth) for Starfield? I have a suspicion you may find interesting data in there to share.

Also, a special look at the nVidia CPU driver overhead vs AMD. I have a suspicion some of the AMD favouring comes from that? I'd love to see if that hunch is on the money or not :D

Regards.

JarredWaltonGPU

-Fran- said:
Thanks a lot Jarred for this set of tests!

If I may: would you please test CPU core scaling and memory scaling (latency and bandwidth) for Starfield? I have a suspicion you may find interesting data in there to share.

Also, a special look at the nVidia CPU driver overhead vs AMD. I have a suspicion some of the AMD favouring comes from that? I'd love to see if that hunch is on the money or not :D

Regards.

The problem is that I only have a limited number of CPUs on hand to test with. I could "simulate" other chips by disabling cores, but that's not always an accurate representation because of differences in cache sizes. Here's what I could theoretically test:

Core i7-4770K

Core i9-10980XE (LOL)

Core i9-9900K

Core i9-12900K

Ryzen 9 7950X

I might have some other chips around that I've forgotten about, but if so I don't know if I have the appropriate platforms for them. The 9900K is probably a reasonable stand-in for modern midrange chips, and I might look at doing that one, but otherwise the only really interesting data point I can provide would be the 7950X.

You should harass @PaulAlcorn and tell him to run some benchmarks. 🙃

steve15180

I find this article interesting for a bit of a different reason. I think the common belief is that Microsoft has written DX12 (or 11, etc.) as a universal abstraction layer for any GPU to use. As was pointed out, Nvidia has the lion's share of the market. So, my question is, is DX12 written so generically that neither card would work well without modifications on the part of the game developer? Since AMD is a game sponsor and assisted with development, it's only fair that they work with AMD to ensure their cards run well. Nvidia does the same with their sponsored titles.

Here's what I'd really like to know. Do we really know whose camp has the better card from an unbiased point of view? One of the complaints I read was that the developer optimized for 3 to 6% of the market. Well, they helped the developers through an agreement. Just like the Nvidia programs of the same type. So, when we benchmark for which card is best, is one truly better than the other? Outside of "the way it's meant to be played" and AMD's program, do developers develop on mostly Nvidia hardware because that's what's more popular? Do games wind up running better on Nvidia hardware because they're used more often for development? If both companies were to put the same effort into both cards to maximize for best performance, how would that look on a benchmark? (Also excluding any deliberate programming which hinders one side vs the other.)

I don't know the answers, but it would be interesting to find out. -

-Fran-

JarredWaltonGPU said:

The problem is that I only have a limited number of CPUs on hand to test with. I could "simulate" other chips by disabling cores, but that's not always an accurate representation because of differences in cache sizes. Here's what I could theoretically test:

Core i7-4770K

Core i9-10980XE (LOL)

Core i9-9900K

Core i9-12900K

Ryzen 9 7950X

I might have some other chips around that I've forgotten about, but if so I don't know if I have the appropriate platforms for them. The 9900K is probably a reasonable stand-in for modern midrange chips, and I might look at doing that one, but otherwise the only really interesting data point I can provide would be the 7950X.

You should harass @PaulAlcorn and tell him to run some benchmarks. 🙃

I would love to see how the 12900K behaves with different RAM if I had to pick out of that list. Please do consider it? :D

I hope Paul humours our data hunger and gives us some of his time for testing the CPU front in a more complete way.

Still, whatever comes out of this, I'm still thankful for the data thus far.

Regards. -

mhmarefat

steve15180 said:

I find this article interesting for a bit of a different reason. I think the common belief is that Microsoft has written DX12 (or 11, etc.) as a universal abstraction layer for any GPU to use. As was pointed out, Nvidia has the lion's share of the market. So, my question is, is DX12 written so generically that neither card would work well without modifications on the part of the game developer? Since AMD is a game sponsor and assisted with development, it's only fair that they work with AMD to ensure their cards run well. Nvidia does the same with their sponsored titles.

Here's what I'd really like to know. Do we really know whose camp has the better card from an unbiased point of view? One of the complaints I read was that the developer optimized for 3 to 6% of the market. Well, they helped the developers through an agreement. Just like the Nvidia programs of the same type. So, when we benchmark for which card is best, is one truly better than the other? Outside of "the way it's meant to be played" and AMD's program, do developers develop on mostly Nvidia hardware because that's what's more popular? Do games wind up running better on Nvidia hardware because they're used more often for development? If both companies were to put the same effort into both cards to maximize for best performance, how would that look on a benchmark? (Also excluding any deliberate programming which hinders one side vs the other.)

I don't know the answers, but it would be interesting to find out.

DX12 is not universal; it is for Windows only. It is (or used to be! Welcome to the 2023 gaming industry, where games are released half-finished and have ZERO optimization unless you pay the optimization tax) the game developer's job to make sure their game runs well on the majority of GPUs. It is true that AMD sponsored this game, but it is nowhere near "fair" that it should run completely BROKEN on other GPU brands. It is truly shameful. We do not see Nvidia-sponsored games run like trash on AMD GPUs, do we? What makes sense is that it should run well on all GPUs but better on the sponsored brand. The current state is greed-fest predatory behavior.

Developers mostly optimize for AMD (RDNA 2) because consoles are RDNA 2 based too. -

Dr3ams

mhmarefat said:

DX12 is not universal; it is for Windows only. It is (or used to be! Welcome to the 2023 gaming industry, where games are released half-finished and have ZERO optimization unless you pay the optimization tax) the game developer's job to make sure their game runs well on the majority of GPUs. It is true that AMD sponsored this game, but it is nowhere near "fair" that it should run completely BROKEN on other GPU brands. It is truly shameful. We do not see Nvidia-sponsored games run like trash on AMD GPUs, do we? What makes sense is that it should run well on all GPUs but better on the sponsored brand. The current state is greed-fest predatory behavior.

Developers mostly optimize for AMD (RDNA 2) because consoles are RDNA 2 based too.

FSR will run on Nvidia GPUs. And Starfield doesn't run "completely broken" on non-AMD GPUs, with the exception of Intel. But Intel will be releasing a patch for that.