Tom's Hardware Verdict

AMD’s Ryzen 5 2600X is faster than the previous-gen models in nearly every respect. Lower cache and memory latencies, along with more sophisticated multi-core boost algorithms, take AMD’s gaming performance to the next level. The extra threads are helpful for everyday productivity, and the bundled storage software and cooler add even more value.

Pros

- Big boost over previous-gen Ryzen
- Bundled cooler
- Improved memory and cache performance
- Backward compatibility with 300-series chipsets

Cons

- No value-oriented 400-series motherboards


Ryzen To The Mainstream

AMD's 2000-series Ryzen CPUs are already available, challenging the Coffee Lake-based Core line-up from Intel. As we found in our Ryzen 7 2700X review, a host of improvements made possible by 12nm manufacturing, such as higher frequencies and Precision Boost 2, add more performance in threaded apps. Meanwhile, lower system memory and cache latencies augment AMD's showing in lightly threaded apps like games. Unlocked multipliers, backward compatibility with older Socket AM4 motherboards, a beefy bundled cooler, and a $330 price tag combine to leave us impressed. The Ryzen 7 2700X offers a great alternative to Intel's Core i7-8700K, which costs more, doesn't come with a thermal solution, and drops into more expensive motherboards (at least if you want to overclock).

Similarly, Ryzen 5 2600X targets Intel's enthusiast-oriented Core i5-8600K, leveraging similar advancements and a more attractive $230 price tag. As we'll see, it's even faster than the first-gen flagship Ryzen 7 1800X in many workloads.

But First, Spectre Variant 2

Unfortunately, due to a lack of communication from AMD, we weren't told that the company had rolled its Spectre Variant 2 patch into shipping X470 platforms. As a result, our Ryzen 7 2700X launch day coverage didn't include Intel CPUs tested with their corresponding patches. Today's review does, however, feature results generated on Intel-based systems with the latest Spectre microcode updates.

Ryzen 5 2600X

Ryzen 2000-series processors, otherwise known by their Pinnacle Ridge code name, are based on the same basic Zen core design as previous-gen models (though AMD now uses Zen+ nomenclature to reference the architecture's various improvements). The CPUs still utilize a dual-CCX configuration, tied together with Infinity Fabric, yielding eight physical cores. The flagship Ryzen 7 2700X comes with all eight of its cores active. For Ryzen 5 2600X, AMD turns two off, creating a six-core, 12-thread configuration with an unlocked ratio multiplier.

As mentioned, Ryzen 5 2600X sells for $230, replacing the $220 Ryzen 5 1600X. It slots into the gap between the Core i5-8600K and Core i5-8400, putting it up against Intel's recently announced Core i5-8600. While we don't have that model in our lab yet, we do have the two nearest Coffee Lake-based competitors in today's benchmark charts.

What do you get, performance-wise, for the extra $10? Ryzen 5 2600X has the same 3.6 GHz base clock rate as the 1600X and a slightly higher 4.2 GHz Precision Boost 2 frequency (+200 MHz). That might seem minor, but as our benchmarks show, the gains are quite pronounced in threaded workloads. Like its predecessor, the 2600X features 16MB of L3 cache and a 95W TDP.

| | AMD Ryzen 7 2700X | AMD Ryzen 7 1800X | AMD Ryzen 7 2700 | AMD Ryzen 5 1600X | AMD Ryzen 5 1600 | AMD Ryzen 5 2600X | AMD Ryzen 5 2600 | Intel Core i5-8600K | Intel Core i5-8600 | Intel Core i5-8400 |
|---|---|---|---|---|---|---|---|---|---|---|
| MSRP | $329 | $349 | $299 | $219 | $189 | $229 | $199 | $257 | $224 | $182 |
| Cores/Threads | 8/16 | 8/16 | 8/16 | 6/12 | 6/12 | 6/12 | 6/12 | 6/6 | 6/6 | 6/6 |
| TDP | 105W | 95W | 65W | 95W | 65W | 95W | 65W | 95W | 65W | 65W |
| Base Freq. (GHz) | 3.7 | 3.6 | 3.2 | 3.6 | 3.2 | 3.6 | 3.4 | 3.6 | 3.1 | 2.8 |
| Boost Freq. (GHz) | 4.3 | 4.1 | 4.1 | 4.0 | 3.6 | 4.2 | 3.9 | 4.3 | 4.3 | 4.0 |
| Cache (L3) | 16MB | 16MB | 16MB | 16MB | 16MB | 16MB | 16MB | 9MB | 9MB | 9MB |
| Unlocked Multiplier | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | No |
| Cooler | 105W Wraith Prism (LED) | - | 95W Wraith Spire (LED) | - | 95W Wraith Spire | 95W Wraith Spire | 65W Wraith Stealth | - | Intel stock cooler | Intel stock cooler |
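To put the "extra $10" question in numbers, here is a small worked comparison that uses only the MSRP and rated clock values from the table above. It is a sketch: `SPECS` and `generational_delta` are illustrative names, and rated peak boost clocks say nothing about the sustained multi-core frequencies that drive the threaded gains discussed in this review.

```python
# Values copied from the spec table (MSRP in USD, clocks in GHz).
SPECS = {
    "Ryzen 5 1600X": {"msrp": 219, "base_ghz": 3.6, "boost_ghz": 4.0},
    "Ryzen 5 2600X": {"msrp": 229, "base_ghz": 3.6, "boost_ghz": 4.2},
}


def generational_delta(old: str, new: str) -> dict:
    """Return absolute and relative changes in price and rated clocks from old to new."""
    a, b = SPECS[old], SPECS[new]
    return {
        "price_usd": b["msrp"] - a["msrp"],
        "price_pct": round(100 * (b["msrp"] - a["msrp"]) / a["msrp"], 1),
        # Convert GHz differences to MHz; round() absorbs floating-point noise.
        "base_mhz": round((b["base_ghz"] - a["base_ghz"]) * 1000),
        "boost_mhz": round((b["boost_ghz"] - a["boost_ghz"]) * 1000),
        "boost_pct": round(100 * (b["boost_ghz"] - a["boost_ghz"]) / a["boost_ghz"], 1),
    }


if __name__ == "__main__":
    print(generational_delta("Ryzen 5 1600X", "Ryzen 5 2600X"))
```

Running it prints `{'price_usd': 10, 'price_pct': 4.6, 'base_mhz': 0, 'boost_mhz': 200, 'boost_pct': 5.0}`: roughly 4.6% more money for a 5% higher rated peak boost, with the real-world difference left to the benchmarks.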

Although AMD didn't include thermal solutions with its original Ryzen X-series processors, the company does bundle coolers with its pricier models now. On one hand, it's nice that the 95W Wraith Spire cooler neatly matches the 2600X's thermal design power. On the other, we're not expecting much overclocking headroom from the combination.


Ryzen 5 2600X can drop into either new X470 or older 300-series motherboards. As usual, AMD allows you to overclock on value-minded B-series boards, too. And even though 400-series B-chipset boards aren't available yet, they'll undoubtedly offer a lower-priced alternative for overclocking.

Officially, the Ryzen 5 2600X supports up to DDR4-2933 memory, just like Ryzen 7 2700X. This trumps Coffee Lake's Intel-specified DDR4-2666 ceiling (with a few caveats). AMD also sticks with indium solder between Ryzen 5's die and heat spreader, improving thermal transfer. And as we mentioned in our Ryzen 7 2700X review, these new CPUs also come with StorMI Technology, a software-based tiering solution that blends the low price and high capacity of a hard drive with the speed of an SSD, 3D XPoint (including Intel's Optane parts), or even up to 2GB of RAM.
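Tiering of this kind generally works by tracking which blocks are read most often and keeping those "hot" blocks on the fast device while everything else stays on the hard drive. The toy Python model below illustrates that general idea with a simple read-count threshold. It is an assumption-laden sketch, not StorMI's actual policy, and `TieredStore`, `fast_capacity`, and `promote_after` are hypothetical names.

```python
from collections import Counter


class TieredStore:
    """Toy two-tier store: frequently read blocks are promoted to a small fast tier.

    Hypothetical illustration of hot-data tiering; not StorMI's actual algorithm.
    """

    def __init__(self, fast_capacity: int, promote_after: int = 3) -> None:
        self.fast_capacity = fast_capacity  # blocks that fit on the fast device (SSD, Optane, RAM)
        self.promote_after = promote_after  # reads before a block counts as "hot"
        self.hits: Counter = Counter()      # per-block read counts
        self.fast: set = set()              # block IDs currently held on the fast tier

    def read(self, block: int) -> str:
        """Record a read and report which tier served it."""
        self.hits[block] += 1
        if block in self.fast:
            return "fast"
        if self.hits[block] >= self.promote_after:
            self._promote(block)  # promotion takes effect for the next read
        return "slow"

    def _promote(self, block: int) -> None:
        if len(self.fast) < self.fast_capacity:
            self.fast.add(block)
            return
        # Fast tier is full: demote the least-read resident block,
        # but only if the candidate has been read more often.
        coldest = min(self.fast, key=lambda b: self.hits[b])
        if self.hits[block] > self.hits[coldest]:
            self.fast.remove(coldest)
            self.fast.add(block)


if __name__ == "__main__":
    store = TieredStore(fast_capacity=1, promote_after=2)
    print([store.read(7) for _ in range(3)])  # ['slow', 'slow', 'fast']
```

The counting threshold keeps one-off reads (a single large file copy, say) from flushing the fast tier; a practical implementation would likely also need to age these counts over time, which this sketch omits.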

Precision Boost 2 and XFR2

In a nutshell, AMD is leveraging GlobalFoundries' 12nm process to enhance its design rather than shrink it. The process improvements allow higher frequencies at a given power level, or lower power consumption at any given frequency, giving AMD headroom for other improvements.

The company's previous-gen Ryzen processors have Precision Boost, which is similar to Intel's Turbo Boost technology, and eXtended Frequency Range (XFR), capable of delivering a frequency uplift when your cooling solution has thermal headroom to spare.

The new Precision Boost 2 (PB2) and XFR2 algorithms improve performance in threaded workloads by raising the frequency of any number of cores. AMD doesn't share a list of specific multi-core Precision Boost 2 and XFR2 bins because the opportunistic algorithms accelerate to different clock rates based on temperature, current, and load.

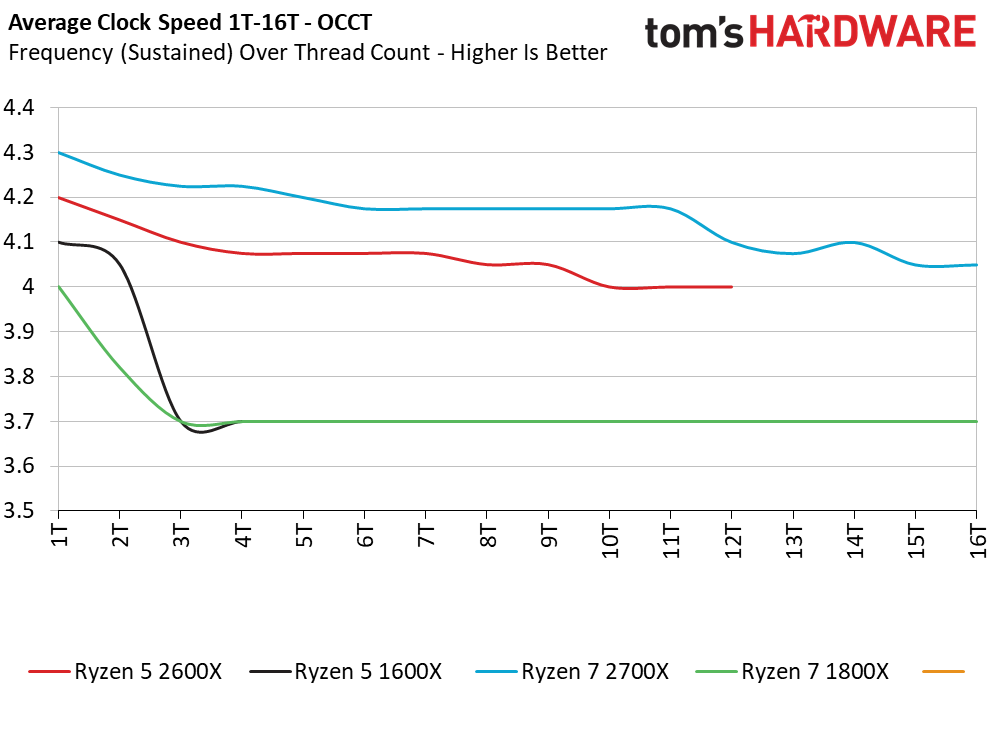

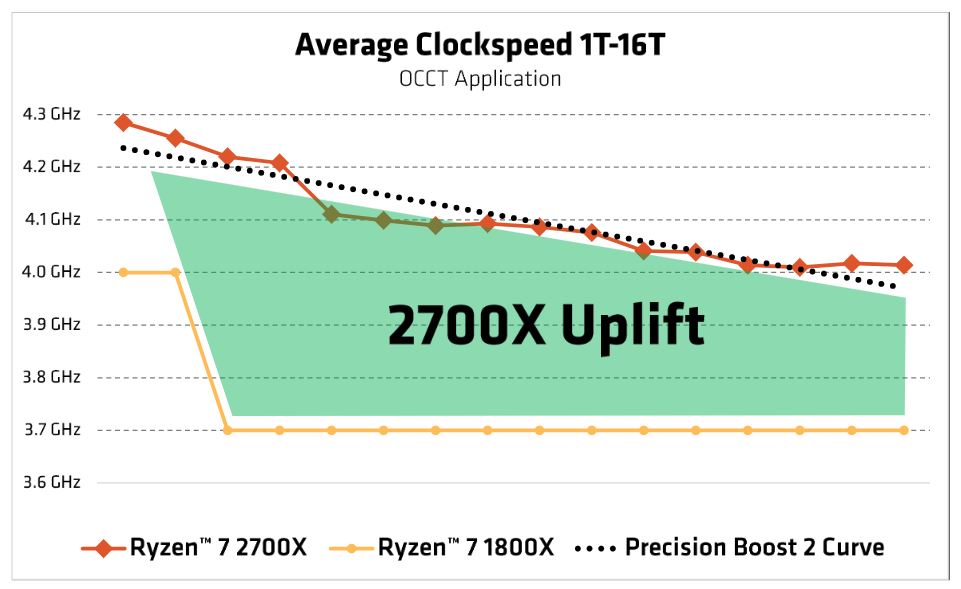

AMD gave us a graph of the PB2 frequencies for Ryzen 7 2700X, but we followed up with our own measurements to compare the current and previous-gen Ryzen 5 models. As you can see, Ryzen 5 2600X offers more robust multi-core frequencies than its predecessor, and our Ryzen 7 2700X measurements largely mirror AMD's. We tested both CPUs with AMD's Precision Boost Overdrive active. The Ryzen 7 2700X does have a higher TDP rating that some older motherboards may struggle with, so PB2 performance will vary based upon the power delivery subsystem.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


nitrium

> HPET.....

??? Yes it destroys Intel's performance (not AMD's), but it's off by default in Windows and there is no reason to force it on.


bbertram99

I was wondering if reviews will now be posting whether they checked if it was on or not. I think they should, since we don't always know when it's being forced. It's not evident until you look. If they don't state it's not forced on, then we are left wondering.

toyo

What's the point of this? Where's the GTX 1080 Ti? The 1080 simply results in every CPU being able to feed it enough data, so scores are almost identical. Hence anomalies like the 8400 or 8600K often being better than the 8700K, which should be impossible considering its higher clocks. I mean, this CPU performed the best for 3-4 months, even after Meltdown/Spectre patches/BIOS updates, and now it suddenly has issues competing with its own family of CPUs that are half that price, really?

Then there's the gaming suite chosen. Old Far Cry? The 5th is out. Where's AC: Origins, notoriously CPU hungry? Overwatch? FFXV?

Hell, even the older Deus Ex or Kingdom Come: Deliverance would have made more sense to test CPUs.

But yes, this shows that for anything below a 1080 Ti, you're good with pretty much all of these CPUs. Yet it doesn't tell the whole story, and soon a new GPU generation will be released, probably introducing many here to GTX 1080 Ti levels of performance, so testing with it does make sense.

Paul Alcorn

> I was wondering if reviews will now be posting whether they checked if it was on or not. I think they should, since we don't always know when it's being forced. It's not evident until you look. If they don't state it's not forced on, then we are left wondering.

HPET has been disabled by default in Windows for a decade or so now. The OS can call on HPET if it needs it. The performance overhead of HPET is a known quantity, which is why the OS doesn't use it if possible.

We test with HPET disabled, which is enforced by our test scripts to ensure it stays that way.

nitrium

> Yet it doesn't tell the whole story, and soon a new GPU generation will be released, probably introducing many here to GTX 1080 Ti levels of performance, so testing with it does make sense.

Agreed. I'm still using an i5 760 (@ 3.4GHz), which was released in July 2010. I have had multiple GPU upgrades over the years (as of this moment I'm on an R9 390), so I also would very much like to know if a new CPU is as "future proof" as possible with regard to GPU upgrades.


kilgor98

Would the 8700K cost what it does today without Ryzen? They would still be feeding us quad cores on the same 14nm process.

bbertram99

Don't see how to quote you, PAULALCORN.

AnandTech got caught by the HPET bug, and you never saw it mentioned in any reviews until now. So now I question each review I have seen and will see unless it's mentioned. The credibility of all benchmarks is in question unless it's clear HPET is disabled. Good thing your script handles that; thank you for letting me know.

Keep on providing great content. I've loved Tom's reviews for a LONG time.

btmedic04

So can we expect updated benchmarks in the 2700X's review, given that the results are skewed by the lack of Spectre patches on the Intel processors?

Paul Alcorn

> Don't see how to quote you, PAULALCORN.
>
> AnandTech got caught by the HPET bug, and you never saw it mentioned in any reviews until now. So now I question each review I have seen and will see unless it's mentioned. The credibility of all benchmarks is in question unless it's clear HPET is disabled. Good thing your script handles that; thank you for letting me know.
>
> Keep on providing great content. I've loved Tom's reviews for a LONG time.

No one mentions HPET because it is disabled by default in the OS. If we listed every single feature that we leave alone and do not alter... that would be a long list :)