AMD Ryzen 5 1600X CPU Review


The Infinity Fabric: A Blessing And A Curse

There is much speculation (and plenty of proof) that faster memory improves Ryzen's gaming performance. The theory is that the speed of AMD's Infinity Fabric is tied to the memory clock rate, and through a bit of our targeted testing below, it looks like this is true.

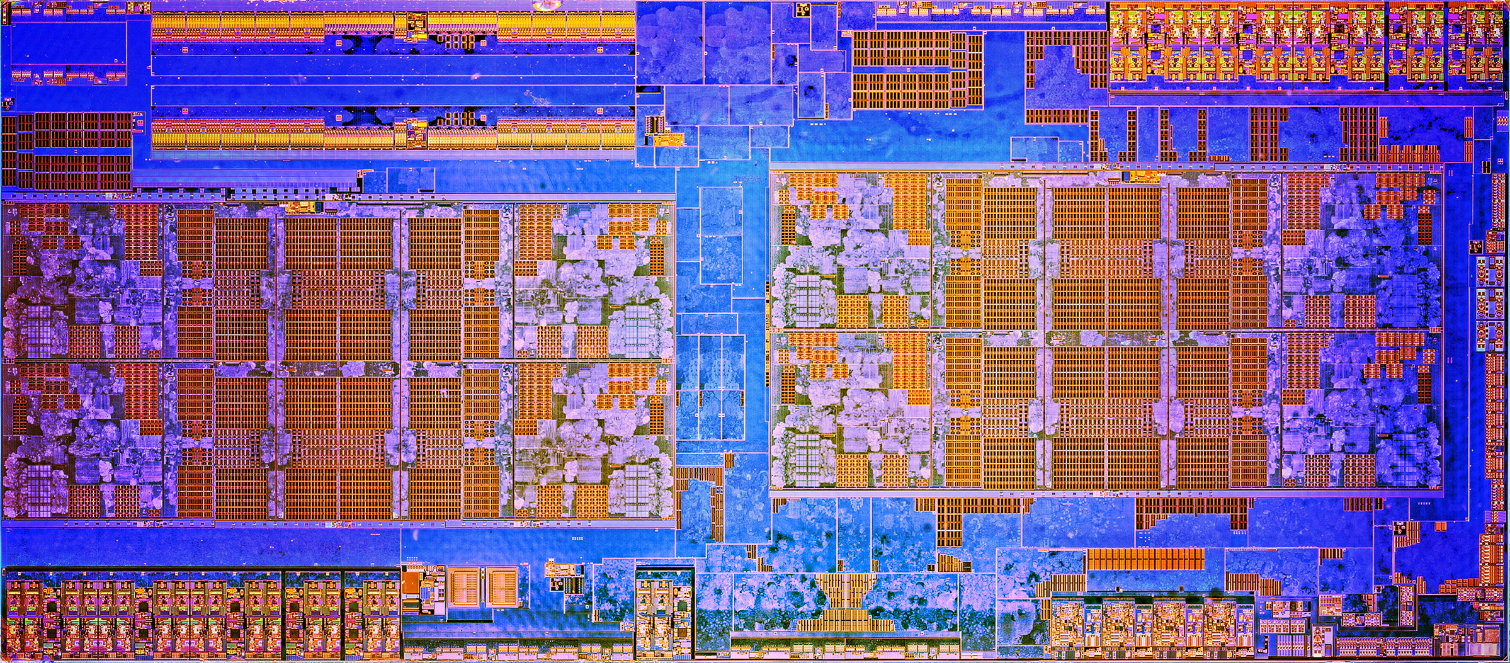

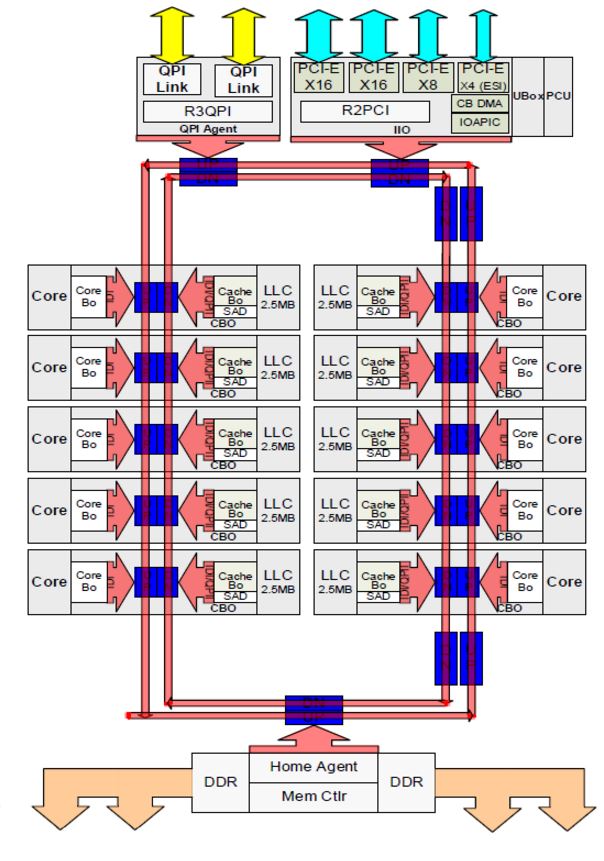

Again, the Zen architecture employs a four-core CCX (CPU Complex) building block. AMD outfits each CCX with a 16-way associative 8MB L3 cache split into four slices; each core in the CCX accesses this L3 with the same average latency. Two CCXes come together to create an eight-core Ryzen 7 die (image below), and they communicate via AMD’s Infinity Fabric interconnect. The CCXes also share the same memory controller. This is basically two quad-core CPUs talking to each other over a dedicated pathway: Infinity Fabric, a 256-bit bi-directional crossbar that also handles northbridge and PCIe traffic. The large amount of data flowing through this pathway requires a lot of scheduling magic to ensure a high quality of service. It's also logical to assume that the six- and four-core models benefit from less cross-CCX traffic compared to the eight-core models.

Data that has to move between CCXes incurs higher latency, so it's ideal to avoid the trip altogether if possible. But threads may be forced to migrate between CPU Complexes, thus suffering cache misses on the local CCX's L3. Threads might also depend on data in other threads running on the CCX next door, again adding latency and chipping away at overall performance.
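One common way to sidestep that migration penalty is to pin a latency-sensitive process to the cores of a single CCX. The sketch below is illustrative only and was not part of our testing. It assumes a Linux system, a 3+3 core layout with two threads per core, and logical CPU ids numbered core by core; the helper names `ccx_groups` and `pin_to_ccx` are hypothetical. Real code should read the topology from the operating system rather than guess it.

```python
import os


def ccx_groups(logical_cpus, cores_per_ccx=3, threads_per_core=2):
    """Split logical CPU ids into per-CCX sets.

    Assumption: ids are numbered core by core, so consecutive ids
    belong to the same CCX. Real systems may enumerate differently.
    """
    per_ccx = cores_per_ccx * threads_per_core
    ordered = sorted(logical_cpus)
    return [set(ordered[i:i + per_ccx]) for i in range(0, len(ordered), per_ccx)]


def pin_to_ccx(ccx_index, cores_per_ccx=3, threads_per_core=2):
    """Restrict the calling process to one (assumed) CCX; Linux only."""
    if not hasattr(os, "sched_setaffinity"):
        return None  # this platform exposes no affinity API in the os module
    groups = ccx_groups(os.sched_getaffinity(0), cores_per_ccx, threads_per_core)
    target = groups[ccx_index % len(groups)]
    os.sched_setaffinity(0, target)
    return target
```

Calling `pin_to_ccx(0)` at startup would keep the process on the first assumed group of logical CPUs, so its threads share one L3 instead of crossing the fabric.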

Intel employs a dual ring bus (pictured on a Broadwell die), which serves much the same purpose. However, it doesn't suffer the same latency penalty between cores due to its contiguous design and dual independent rings. Although AMD hasn't confirmed it, we suspect that the Infinity Fabric is a single pathway.

AMD's Infinity Fabric gives the company a key strategic advantage as it rolls out 32-core Naples processors featuring four dies (eight CCXes) per package. The interconnect is more scalable than Intel's ring bus, which encounters increased latency as more cores are added. But it clearly isn't without weakness. Intel's design may be more limited in terms of scaling out, but the company already utilizes a mesh topology with its Knights Landing products to solve that. We suspect we'll see something similar from Intel's next-gen desktop products.

Putting Numbers To Theory

Accurately measuring bus latency is tricky. Fortunately, SiSoftware recently introduced its Sandra Business Platinum version that includes a novel Processor Multi-Core Efficiency test. It's able to measure inter-core, inter-module, and inter-package latency in a number of different configurations using Multi-Threaded, Multi-Core Only, and Single-Threaded tests. We use the Multi-Threaded metric with the "best pair match" setting (lowest latency) for our purposes.

First, we measured core-to-core latency on Intel's Hyper-Threaded Core i7-7700K to establish a baseline for AMD's SMT-equipped Ryzen processors. The results from Intel's solution are incredibly consistent between runs. That's in stark contrast to the Ryzen CPUs, which varied from one run to the next.


| Core i7-7700K (Stock) Memory Data Rate | Inter-Core Latency | Core-To-Core Latency | Core-To-Core Average |
|---|---|---|---|
| 1333 MT/s | 14.8ns | 38.6 - 43.2ns | 41.5ns |
| 2666 MT/s | 14.8ns | 29.4 - 45.5ns | 42.13ns |
| 3200 MT/s | 14.7 - 14.8ns | 40.8 - 46.5ns | 43.08ns |

The inter-core measurement quantifies latency between threads that are resident on the same physical core, while the core-to-core numbers reflect thread-to-thread latency between two physical cores. As we can see, the average core-to-core latency moves only slightly as we change the memory data rate on our stock Core i7, staying within roughly 4%. This explains, at least in part, why we don't see explosive gains from overclocked memory on Intel CPUs.

| Core i7-7700K (5 GHz) Memory Data Rate | Inter-Core Latency | Core-To-Core Latency | Core-To-Core Average |
|---|---|---|---|
| 1333 MT/s | 12.9 - 13.3ns | 38.3 - 41.1ns | 39.59ns |
| 2666 MT/s | 12.9ns | 34.5 - 39.9ns | 37.67ns |
| 3200 MT/s | 12.9ns | 36.1 - 39.2ns | 37.8ns |

After increasing the CPU's clock rate to 5 GHz, we observe a quantifiable reduction in inter-core and core-to-core latency.
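To put a number on that reduction, the core-to-core averages from the two Core i7-7700K tables can be compared directly. This is a minimal sketch that assumes the first table reflects the stock chip and the second the 5 GHz overclock, as the surrounding text implies; the variable and function names are ours.

```python
# Core-to-core averages (ns) copied from the two Core i7-7700K tables above.
stock_ns = {1333: 41.5, 2666: 42.13, 3200: 43.08}
overclocked_ns = {1333: 39.59, 2666: 37.67, 3200: 37.8}


def percent_reduction(before, after):
    """Latency reduction as a percentage of the starting value."""
    return (before - after) / before * 100.0


# Reduction from overclocking, keyed by memory data rate in MT/s.
reductions = {
    rate: percent_reduction(stock_ns[rate], overclocked_ns[rate])
    for rate in stock_ns
}

for rate, pct in reductions.items():
    print(f"{rate} MT/s: {pct:.1f}% lower core-to-core average at 5 GHz")
```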

| Ryzen 5 1600X Memory Data Rate | Inter-Core Latency Range | Intra-CCX Core-to-Core Latency | Cross-CCX Core-to-Core Latency | Cross-CCX Average Latency | % Reduction From 1333 MT/s |
|---|---|---|---|---|---|
| 1333 MT/s | 14.8 - 14.9ns | 40.4 - 42.0ns | 197.6 - 229.8ns | 224ns | Baseline |
| 2666 MT/s | 14.8 - 14.9ns | 40.4 - 42.6ns | 119.2 - 125.4ns | 120.74ns | 46% |
| 3200 MT/s | 14.8 - 14.9ns | 40.0 - 43.2ns | 109.8 - 113.1ns | 111.5ns | 50% |

Repeated tests on our stock Ryzen 5 1600X revealed more run-to-run variance than we saw on the Core i7, so these numbers are the average of two test sessions. The inter-core latency measurements represent communication between two logical threads resident on the same physical core, and they're unaffected by memory speed. Intra-CCX measurements quantify latency between threads on the same CCX that are not resident on the same core. We observe slight variances, but intra-CCX latency is also largely unaffected by memory speed.

Cross-CCX quantifies the latency between threads located on two separate CCXes, and as we can see, there is a tremendous penalty associated with traversing the Infinity Fabric. Dialing in a higher memory data rate reduces the Infinity Fabric's latency, though that's subject to diminishing returns.
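One way to picture those diminishing returns is a toy model in which cross-CCX latency is a fixed cost plus a number of fabric clock cycles. The sketch below fits that model through the 1333 and 2666 MT/s averages from the table and then predicts the 3200 MT/s value. Both the model and the premise that the fabric clock equals half the memory data rate are our assumptions for illustration, not something AMD has confirmed to us, and the function names are hypothetical.

```python
def fit_fabric_model(point_a, point_b):
    """Fit latency_ns = fixed_ns + cycles * fabric_period_ns through two points.

    Each point is (memory_data_rate_mts, latency_ns). The fabric clock is
    assumed to be half the DDR data rate (an assumption, not measured here).
    """
    (rate_a, lat_a), (rate_b, lat_b) = point_a, point_b
    period_a = 1000.0 / (rate_a / 2.0)  # ns per assumed fabric clock
    period_b = 1000.0 / (rate_b / 2.0)
    cycles = (lat_a - lat_b) / (period_a - period_b)
    fixed_ns = lat_a - cycles * period_a
    return fixed_ns, cycles


def predict_latency(fixed_ns, cycles, rate_mts):
    """Evaluate the fitted model at another memory data rate."""
    return fixed_ns + cycles * 1000.0 / (rate_mts / 2.0)


# Cross-CCX averages from the Ryzen 5 1600X table.
fixed_ns, cycles = fit_fabric_model((1333, 224.0), (2666, 120.74))
predicted_3200 = predict_latency(fixed_ns, cycles, 3200)
measured_3200 = 111.5
print(f"predicted {predicted_3200:.1f} ns vs measured {measured_3200} ns")
```

With the table's averages, this toy model lands several nanoseconds below the measured 3200 MT/s figure, so treat it as an illustration rather than a description of the fabric. The clock-dependent term shrinks with every increase in data rate while the fixed term does not, which is why each step buys less.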

Doubling the data rate from 1333 to 2666 MT/s cuts cross-CCX latency by 46%. However, jumping up another 20% to 3200 MT/s only brings the total reduction to 50%, a further four percentage points (roughly 8% below the 2666 MT/s result). Moreover, we tested our 4 GHz overclock against the 1600X's stock 3.7 GHz, but latency was largely unaffected.
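The arithmetic behind those figures can be checked directly from the table. The short sketch below computes each reduction relative to the 1333 MT/s baseline, and also the step from 2666 to 3200 MT/s on its own; the names are ours, introduced for illustration.

```python
# Cross-CCX average latency (ns) by memory data rate, from the Ryzen 5 1600X table.
cross_ccx_ns = {1333: 224.0, 2666: 120.74, 3200: 111.5}


def reduction_pct(before_ns, after_ns):
    """Latency reduction as a percentage of the starting value."""
    return (before_ns - after_ns) / before_ns * 100.0


vs_baseline_2666 = reduction_pct(cross_ccx_ns[1333], cross_ccx_ns[2666])
vs_baseline_3200 = reduction_pct(cross_ccx_ns[1333], cross_ccx_ns[3200])
step_2666_to_3200 = reduction_pct(cross_ccx_ns[2666], cross_ccx_ns[3200])

print(f"1333 -> 2666 MT/s: {vs_baseline_2666:.1f}% lower")
print(f"1333 -> 3200 MT/s: {vs_baseline_3200:.1f}% lower")
print(f"2666 -> 3200 MT/s: {step_2666_to_3200:.1f}% lower")
```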

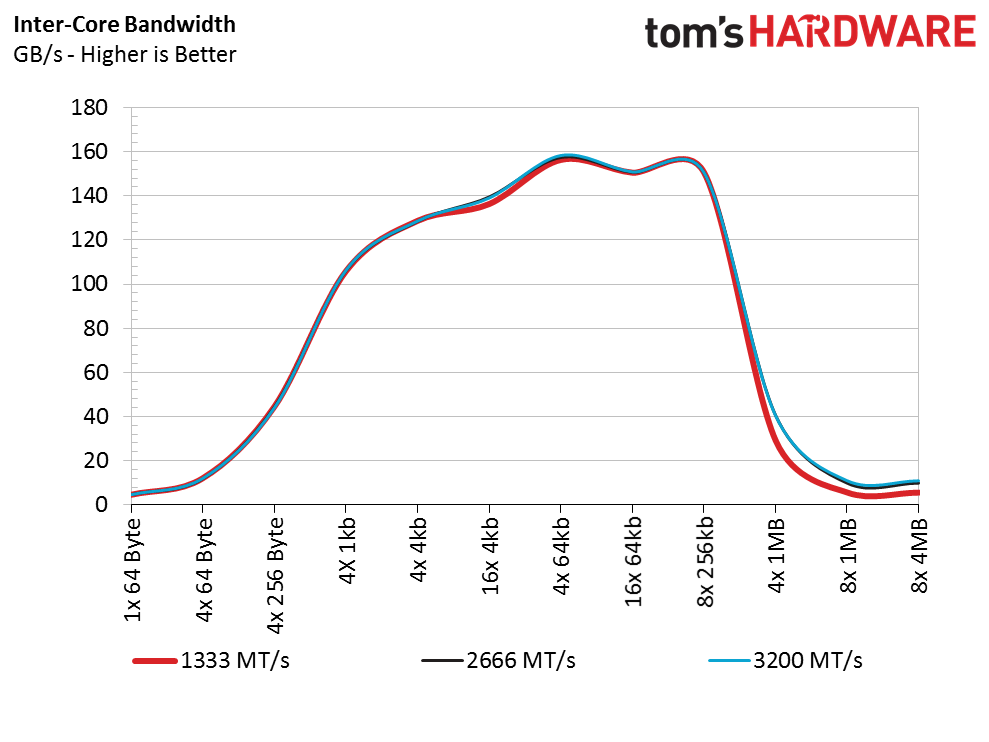

We also measured Inter-Core bandwidth during our tests, and that progresses nicely as smaller chunks of data populate the L1 cache. As the data size increases, it falls back into the L2 and L3 caches, and then finally encounters main memory on the chart's far right.

Though this brief experiment only looks at the Ryzen 5 1600X, we're planning another deep-dive story that includes all available Ryzen CPUs. There are many possible combinations to test, including SMT and various power profiles, any of which might affect Infinity Fabric performance. Stay tuned.

...And We Still Can't Measure Cache Performance

During the Ryzen 7 1800X launch, it came to light that AMD disagreed with the cache testing methodology employed by leading test software vendors. That still holds true, apparently, even after recent updates to the utilities. From AMD's Ryzen 5 reviewer's guide:

Despite recent application updates, public cache analysis tools continue to produce spurious results for the AMD Ryzen family of processors. Though further investigation is warranted, we believe the public tools are utilizing datasets larger than the available cache size(s) and/or inadvertently mixing DRAM access into cache results. With specific regard to the AMD Ryzen 5 processor, we believe these applications are making erroneous assumptions about the size and/or topology of the 1500X and 1600X cache hierarchy. We continue to work with software vendors to accurize the results.

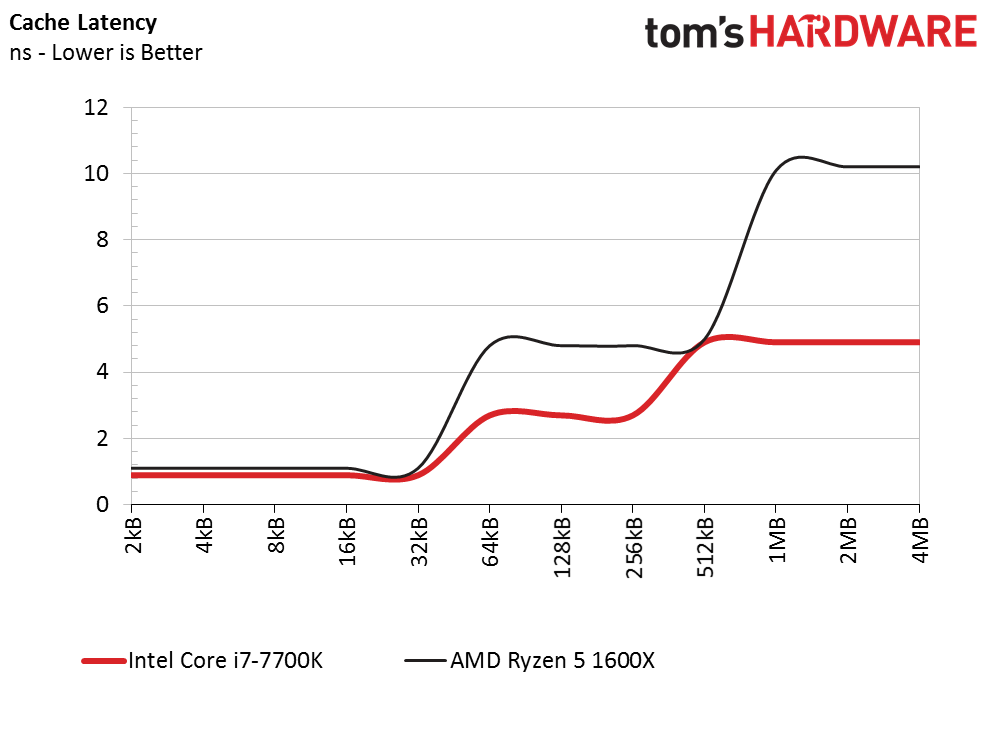

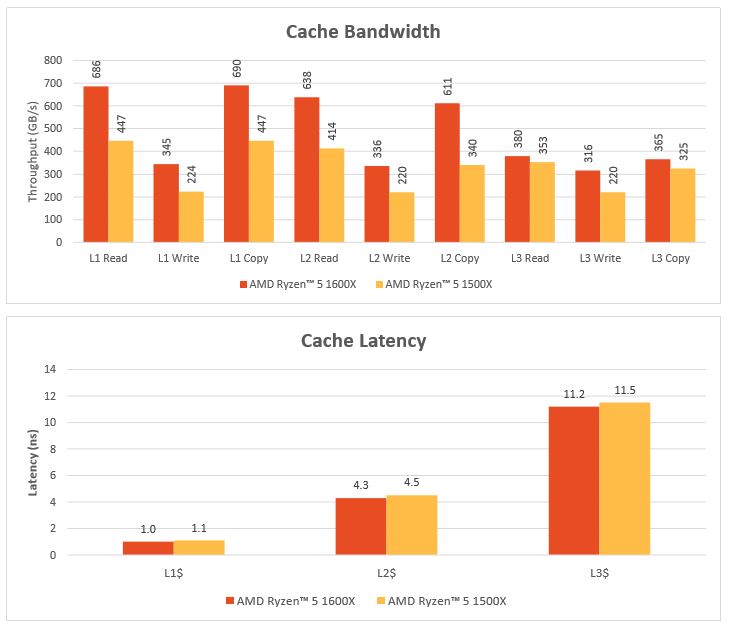

The first chart above comes from our performance data, while the second is AMD's reference results. We used Sandra's cache latency measurement, which produces results similar to AMD's numbers. As you can see in our chart, L1 latency (far left) weighs in at 1.1ns, L2 measures 4.8ns, and L3 registers 10.2ns. By comparison, it appears that Intel enjoys a considerable cache latency advantage.

We also tested bandwidth with other utilities in an attempt to match AMD's results, but noticed large disparities in L2 and L3 cache reporting. We've included AMD's in-house numbers, and are talking to other ISVs to facilitate accurate measurements.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

ninja_warrior If you can reliably overclock any of the ryzen 5/7 to 4.0, why would you get the 1600x over the 1700? Comparing a 1600x at 4.0 to a 1700 at 3.0 and then concluding that it's a better CPU when the 1700 can overclock exactly the same seems pretty stupid

bloodroses A little disappointing for the Ryzen 5's imo. You'd think with the reduced core count you'd get better frequencies (and OC'ing) than what you get with the Ryzen 7.

I honestly don't see a reason why to get a Ryzen 5 at this point since the i5 is definitely better for gaming and the Ryzen 7 is better for workstation use. The price alone takes it out of its own market.

tamban A CPU review with only gaming benchmarks? Tom's hardware really likes Intel's hardware.

FormatC

19547998 said: A CPU review with only gaming benchmarks? Tom's hardware really likes Intel's hardware.

Try page 10 :P

31(!) Workstation benchmarks. Too less?

Oranthal How about a real world test where you play a game and run a 1080p stream then compare performance? How about 1440p? How about broadening the scope of testing? Nah just ignore the strength of more cores and focus on single thread work and a few games.

irish_adam you say that the i5 7600k comes out on top at stock but just on the gaming benchmarks i make it 4-4 with 2 draws. I wouldnt say that it came out on top at all. I would say they are pretty evenly matched at the moment. Also apart from the odd couple from both sides their frame difference was less than 10, at over 100FPS i'd pay good money that no one would be able to distinguish a difference between either system.

elbert Great review Paul and Igor. Best review I have seen given its the only review with 2 intel cpu's in the price range of Ryzen 5. The RAM info is great which shows that Ryzen gains a real 9ns latency advantage using higher clocked RAM on the Ryzen 5. Given the Ryzen 7 has less cache per core I would expect that gain to be higher.

An issue that does stick out here is high price of the overclocking solution. How does the 7600k fair with a stock intel heatsink compared to the 1600x wraith spiral best overclocks? I think Ryzen has a real price advantage given the cooler required for a reasonable overclock.

Also how does the 7600K compare in games while twitch streaming against the 1600X?

dstarr3

19548037 said: How about a real world test where you play a game and run a 1080p stream then compare performance? How about 1440p? How about broadening the scope of testing? Nah just ignore the strength of more cores and focus on single thread work and a few games.

Maybe that's your real-world test, but that isn't mine. And am I the only one that can see the workstation benchmarks on page 10? Everyone seems to be ignoring them and then complaining that they aren't there.