Intel Xeon E5-2600 v4 Broadwell-EP Review


Broadwell-EP Architecture

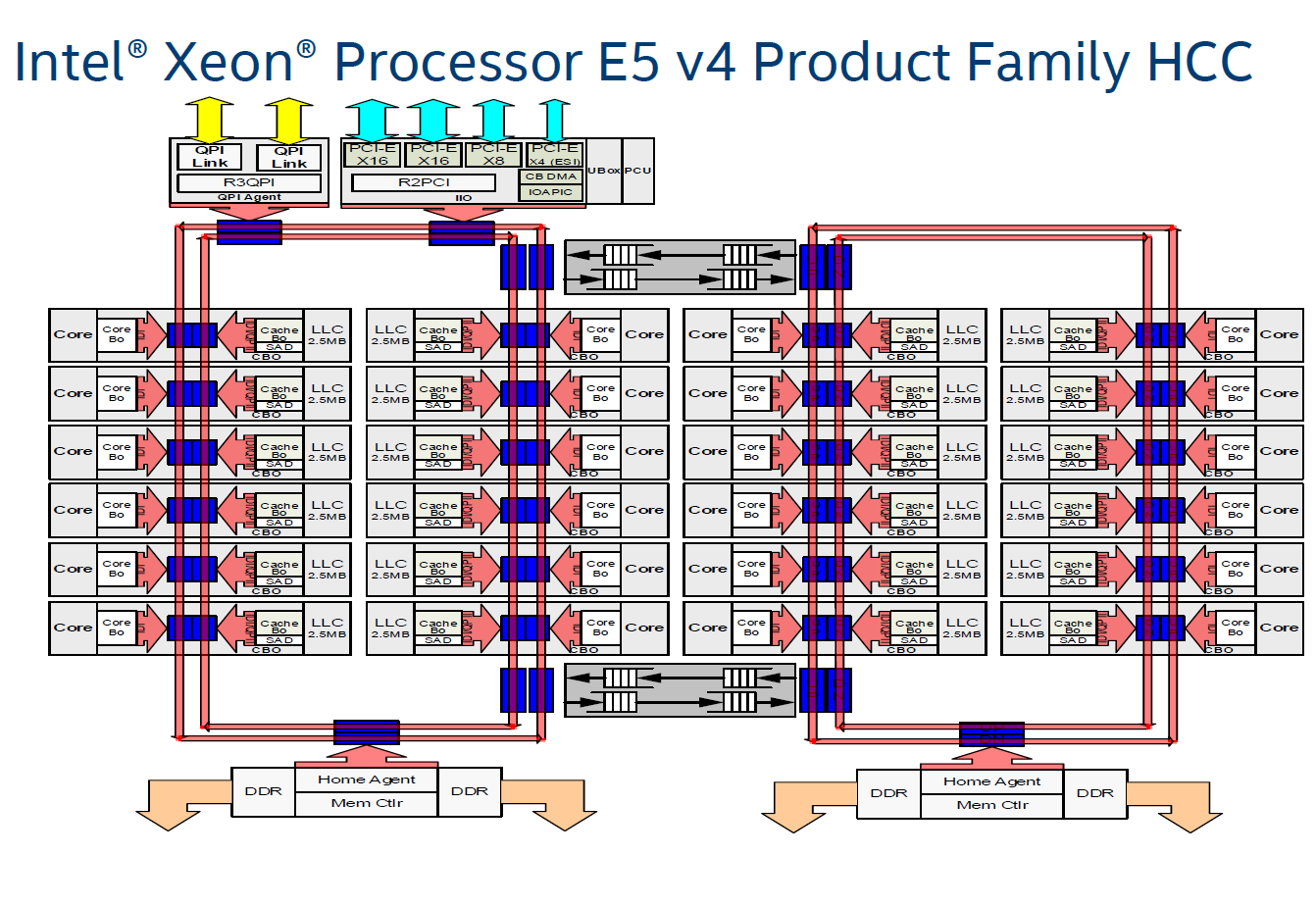

The Broadwell-EP lineup is based on three different die configurations with modular designs. The High Core Count (HCC) die measures 18.1x25.2mm and comprises ~7.2 billion transistors. The architecture still employs two full rings per HCC die, but now the layout is symmetrical. In Haswell-EP, the ring on the right serviced two additional cores, creating asymmetry.

Here, Intel connects both bidirectional rings to 12 cores each, and it disables an equal number of cores per ring to create SKUs with fewer cores. As an example, the flagship 22-core Xeon E5-2699 v4 has 11 active cores per ring. As you work your way down the stack, two cores at a time are turned off, one from each side, along with their corresponding slices of last-level cache. That's how Intel creates models with less L3, too.
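The arithmetic behind that binning scheme is simple enough to sketch. The Python below is an idealized model, not Intel's SKU table: it assumes the HCC die's two 12-core rings, a 2.5MB LLC slice per active core and symmetric disabling (one core per ring). Some real SKUs may not follow this pattern exactly, so treat the L3 figure as an approximation.

```python
# Idealized model of how Broadwell-EP HCC SKUs are carved out of the 24-core die.
# Assumptions: two rings of 12 cores, 2.5 MB of LLC per active core, and cores
# disabled in pairs (one per ring). Real SKUs may deviate from this.
CORES_PER_RING = 12
LLC_SLICE_MB = 2.5


def hcc_sku(active_cores: int) -> dict:
    """Return the per-ring core split and total L3 for an HCC-based SKU."""
    if active_cores % 2 != 0 or not 2 <= active_cores <= 2 * CORES_PER_RING:
        raise ValueError("expected an even core count between 2 and 24")
    per_ring = active_cores // 2
    return {
        "cores_per_ring": (per_ring, per_ring),
        "disabled_per_ring": CORES_PER_RING - per_ring,
        "l3_mb": active_cores * LLC_SLICE_MB,
    }


if __name__ == "__main__":
    # The flagship E5-2699 v4: 22 cores, 11 per ring.
    print(hcc_sku(22))
    # Working down the stack, two cores (and two LLC slices) at a time.
    for cores in range(22, 14, -2):
        print(cores, "cores ->", hcc_sku(cores)["l3_mb"], "MB L3")
```

For 22 active cores the model gives 11 cores per ring, one disabled core per ring and 55MB of L3, which matches the E5-2699 v4's 55MB.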

Each active core is associated with a 2.5MB slice of last-level cache (LLC) that is shared across its ring, and any core can address any part of the cache. The advantage of two distinct rings is more efficient scheduling: everything that happens on one ring is independent and occurs without any interference from the other ring. Routing ring traffic intelligently, and in the correct direction, is naturally quite important; a transaction on the ring can take up to 12 cycles, depending on how far it has to travel. There's intelligence built in to address this. Without it, if a core needed information in a cache slice to the "south" of it and the traffic went north, that request would have to travel nearly all the way around the ring. Instead, the scheduler correctly routes traffic south, yielding faster access to data in the cache.
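The direction choice described above amounts to taking the shorter way around a bidirectional ring. This Python sketch is a simplification, not Intel's scheduler: it numbers ring stops 0 to N-1 with indices increasing toward the "south," and both the 12-stop ring and the one-hop-per-step cost are assumptions for illustration.

```python
def route(src: int, dst: int, stops: int) -> tuple[str, int]:
    """Choose the shorter direction between two stops on a bidirectional ring.

    Stops are numbered 0..stops-1; increasing indices are treated as "south"
    (a hypothetical convention). Returns (direction, hop_count).
    """
    south = (dst - src) % stops  # hops if traffic travels toward higher indices
    north = (src - dst) % stops  # hops if traffic travels toward lower indices
    return ("south", south) if south <= north else ("north", north)


def one_way_hops(src: int, dst: int, stops: int) -> int:
    """Hops if traffic could only ever travel south (no direction choice)."""
    return (dst - src) % stops


if __name__ == "__main__":
    STOPS = 12  # assumed stop count for illustration
    print(route(0, 3, STOPS))   # destination is 3 stops south
    print(route(0, 10, STOPS))  # shorter to go 2 stops north
    worst_smart = max(route(0, d, STOPS)[1] for d in range(STOPS))
    worst_naive = max(one_way_hops(0, d, STOPS) for d in range(STOPS))
    print(worst_smart, worst_naive)
```

With 12 stops, the worst case is 6 hops when the direction is chosen per request, versus 11 hops if traffic always went the same way around.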

Balancing a workload between two rings also reduces the number of cycles that would be required to navigate one larger ring. The only caveat is that routing traffic between rings requires a trip across the buffered switches connecting them at the top and bottom, which incurs a (roughly) five-cycle delay. Each ring has access to its own memory controller (bottom), but only the ring on the left has access to the QPI links and PCIe lanes (top).
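To see why splitting traffic across two smaller rings can pay off despite the switch penalty, here is a back-of-the-envelope Python model. Everything beyond the roughly five-cycle crossing cost is an assumption: one cycle per hop, uniformly random destinations, 12 stops per ring versus a hypothetical single 24-stop ring, and a cross-ring request that travels to the switch, pays the penalty, then travels on the other ring.

```python
def avg_hops(stops: int) -> float:
    """Mean shortest-path hop count from one stop to a uniformly random stop
    (itself included) on a bidirectional ring."""
    return sum(min(d, stops - d) for d in range(stops)) / stops


def two_ring_cycles(cross_fraction: float, stops: int = 12, switch_penalty: float = 5.0) -> float:
    """Expected cycles per request on two rings when a fraction of requests
    must cross the buffered switch. Assumes one cycle per hop."""
    local = avg_hops(stops)
    # Cross-ring trip: reach the switch, pay the penalty, then reach the target.
    remote = local + switch_penalty + local
    return (1 - cross_fraction) * local + cross_fraction * remote


def break_even_fraction(stops: int = 12, switch_penalty: float = 5.0) -> float:
    """Cross-ring fraction at which two rings stop beating one ring of 2*stops."""
    local = avg_hops(stops)
    return (avg_hops(2 * stops) - local) / (local + switch_penalty)


if __name__ == "__main__":
    print(avg_hops(24), avg_hops(12))
    for f in (0.0, 0.25, break_even_fraction()):
        print(f, two_ring_cycles(f))
```

Under these assumptions a single 24-stop ring averages 6 hops, while two 12-stop rings average 3 hops for local traffic. The split stays ahead as long as fewer than 37.5% of requests cross rings, which is why keeping a workload balanced so most traffic stays on one ring matters.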

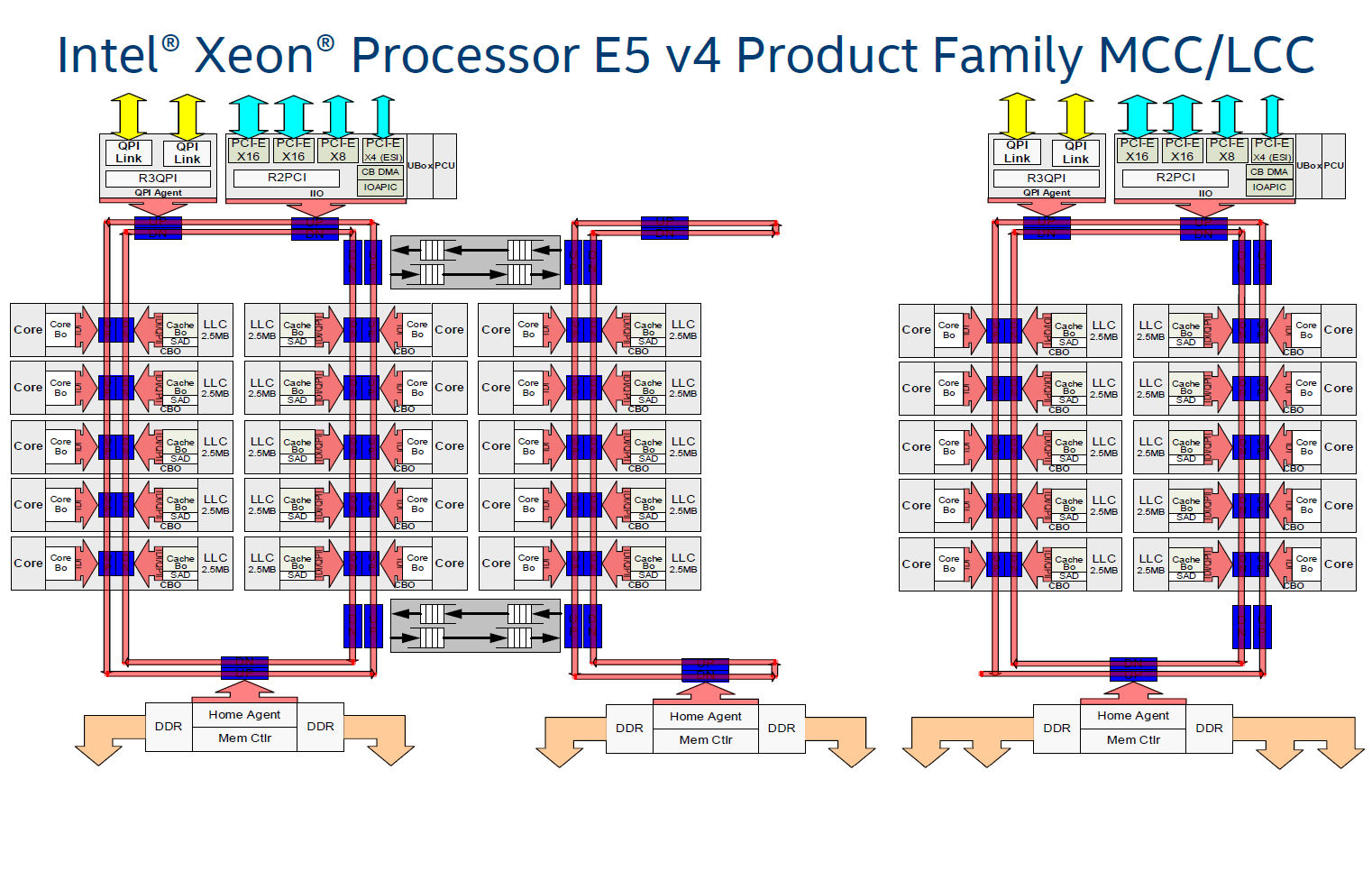

The Medium Core Count (MCC) die measures 16.2x18.9mm and has ~4.7 billion transistors, while the LCC die measures 16.2x15.2mm and employs ~3.2 billion transistors.
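The die dimensions translate into areas and rough transistor densities with some quick arithmetic. This Python snippet only multiplies the figures quoted in this review; the densities are derived estimates, and because the transistor counts are approximate, so are the results.

```python
# Die dimensions (mm) and approximate transistor counts (billions), as quoted in this review.
DIES = {
    "HCC": (18.1, 25.2, 7.2),
    "MCC": (16.2, 18.9, 4.7),
    "LCC": (16.2, 15.2, 3.2),
}


def die_stats(width_mm: float, height_mm: float, transistors_bn: float) -> tuple[float, float]:
    """Return (area in mm^2, millions of transistors per mm^2)."""
    area = width_mm * height_mm
    return area, transistors_bn * 1000 / area


if __name__ == "__main__":
    for name, dims in DIES.items():
        area, density = die_stats(*dims)
        print(f"{name}: {area:.1f} mm^2, ~{density:.1f}M transistors/mm^2")
```

That works out to roughly 456, 306 and 246 square millimeters for the HCC, MCC and LCC dies, respectively.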

On the MCC and LCC configurations, Intel drops the number of cores on the full ring from 12 to 10, but it continues to employ a bidirectional ring structure. The MCC die's partially severed second ring still gets its own, additional memory controller. For the Low Core Count (LCC) die, Intel removes the last traces of the second ring, eliminating it along with the other memory controller. That also removes any reason to have the buffered switches, which connect the two rings on the larger dies.

LCC-based models can still address four DDR4 memory channels through the single controller, illustrated by the four arrows emanating from that piece of logic. This results in a small loss of throughput, since there isn't a second memory scheduler to help service transactions. But Intel doesn't quantify the extent of the performance impact.
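One way to frame that trade-off: the LCC die keeps all four channels, so its theoretical peak bandwidth is the same as the larger dies'; what it gives up is scheduling headroom. The Python sketch below computes that peak under an assumed DDR4-2400 speed grade with an 8-byte (64-bit) data path per channel. It is a theoretical ceiling, not a measured result, and it says nothing about the size of the scheduling loss that Intel didn't quantify.

```python
def peak_bandwidth_gbs(channels: int, transfers_mt_s: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal) for one socket.

    Each DDR4 channel moves 8 bytes (64 data bits) per transfer; ECC bits are excluded.
    """
    return channels * transfers_mt_s * bytes_per_transfer / 1000


if __name__ == "__main__":
    # Assumed DDR4-2400 for illustration; four channels on every die configuration.
    print(peak_bandwidth_gbs(4, 2400))  # same ceiling with one or two controllers
    print(peak_bandwidth_gbs(1, 2400))  # per-channel share
```

That gives 19.2GB/s per channel and 76.8GB/s per socket. Since a single-controller LCC part has the same ceiling, any penalty would show up in achieved throughput rather than in the peak figure.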


Performance Boosting Technologies

Broadwell-based CPUs offer a roughly 5.5% IPC improvement over Haswell. The most notable improvements affect floating-point performance: vector FP multiply latency drops from five cycles to three, and the changes also include a Radix-1024 divider, a split scalar divider and hardware assist for vector gather operations (60 percent fewer).
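Cutting multiply latency from five cycles to three only helps when each multiply depends on the previous result. The Python model below is a rough sketch, not a cycle-accurate simulator: it assumes two FP multiplies can issue per cycle on both Haswell and Broadwell, and it ignores everything except latency and issue rate.

```python
import math


def multiply_cycles(n_ops: int, latency: int, chains: int = 1, per_cycle: int = 2) -> int:
    """Rough cycle estimate for n_ops FP multiplies split across independent
    dependency chains. A chain can't start its next multiply until the previous
    one finishes (latency bound), and the core can't issue more than per_cycle
    multiplies per cycle (throughput bound). The larger bound wins."""
    latency_bound = math.ceil(n_ops / chains) * latency
    throughput_bound = math.ceil(n_ops / per_cycle)
    return max(latency_bound, throughput_bound)


if __name__ == "__main__":
    n = 1000
    # One long dependent chain: latency dominates (5-cycle vs 3-cycle multiply).
    print(multiply_cycles(n, 5), multiply_cycles(n, 3))
    # Ten independent chains: issue rate dominates, so latency barely matters.
    print(multiply_cycles(n, 5, chains=10), multiply_cycles(n, 3, chains=10))
```

In this model a single dependent chain of 1,000 multiplies drops from 5,000 to 3,000 cycles, a 40% cut, while code with ten independent chains stays at 500 cycles either way.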

Other compelling additions include virtualization-centric features such as posted interrupts, which reduce VM exits by delivering interrupts directly to a running guest, and page modification logging, which minimizes the overhead of VM-based fault tolerance by enabling rapid checkpointing.
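Page modification logging gives the hypervisor a hardware log of dirtied pages that it can drain in bulk, instead of trapping the first write to every page. The Python below is a toy model, not a hypervisor implementation: the 512-entry log size (one 4KB page of 8-byte addresses) is an assumption, and the class and method names are hypothetical. It shows why a checkpoint only needs to copy pages written since the last one, and why repeated writes to the same page add nothing.

```python
PAGE_SIZE = 4096
LOG_ENTRIES = 512  # assumed: one 4 KB log page holding 8-byte addresses


class PmlModel:
    """Toy model of page modification logging (names are hypothetical)."""

    def __init__(self, capacity: int = LOG_ENTRIES):
        self.capacity = capacity
        self.log: list[int] = []          # hardware log buffer
        self.flagged: set[int] = set()    # pages already marked dirty this interval
        self.collected: set[int] = set()  # pages the hypervisor has drained
        self.log_full_exits = 0           # VM exits caused by a full log

    def guest_write(self, address: int) -> None:
        page = address & ~(PAGE_SIZE - 1)
        if page in self.flagged:
            return  # already logged this interval: no new entry, no exit
        self.flagged.add(page)
        self.log.append(page)
        if len(self.log) == self.capacity:
            self.log_full_exits += 1  # hypervisor must drain the full log
            self._drain()

    def _drain(self) -> None:
        self.collected.update(self.log)
        self.log.clear()

    def checkpoint(self) -> set[int]:
        """Return the pages to copy for this checkpoint and start a new interval."""
        self._drain()
        dirty, self.collected = self.collected, set()
        self.flagged.clear()
        return dirty


if __name__ == "__main__":
    vm = PmlModel()
    for i in range(10_000):
        vm.guest_write((i % 600) * PAGE_SIZE + 64)  # 600 distinct pages, rewritten often
    print(len(vm.checkpoint()), vm.log_full_exits)
```

In this run, 10,000 writes to 600 distinct pages produce 600 logged pages and a single log-full exit. A write-protection scheme would instead take a fault on the first write to each of those 600 pages.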

Intel also employs Transactional Synchronization Extensions (TSX) to boost performance, and its new Hardware Controlled Power Management purportedly cuts power consumption. We'll put that claim to the test on page eight.

Orchestration And Security Features

Intel's Resource Director Technology provides enhanced telemetry data, which allows administrators to automate provisioning and increase resource utilization. This includes Cache Allocation Technology (CAT), Code and Data Prioritization (CDP), Memory Bandwidth Monitoring (MBM) and enhanced Cache Monitoring Technology (CMT).
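Cache Allocation Technology partitions the L3 by giving each class of service a capacity bitmask (CBM) of cache ways, and on Linux those masks are written through the resctrl filesystem's schemata file. The Python helper below is a sketch: the 20-bit mask width is an assumption (the real width is reported in /sys/fs/resctrl/info/L3/cbm_mask), and it only builds the text line rather than writing it. It enforces the requirement that the set bits form one contiguous run.

```python
def is_valid_cbm(mask: int, width: int) -> bool:
    """A CAT capacity bitmask must be non-zero, fit in `width` bits and
    consist of one contiguous run of set bits (e.g. 0b0111_1000)."""
    if mask <= 0 or mask >= (1 << width):
        return False
    lowest = (mask & -mask).bit_length() - 1  # index of the lowest set bit
    run = mask >> lowest                      # shift the run down to bit 0
    return run & (run + 1) == 0               # true only for 0b1, 0b11, 0b111, ...


def schemata_line(resource: str, masks: dict[int, int], width: int = 20) -> str:
    """Format one resctrl schemata line such as 'L3:0=ff000;1=f'.

    `masks` maps L3 cache IDs (typically one per socket) to bitmasks. With CDP
    enabled, the resource names become L3CODE and L3DATA instead of L3.
    """
    for cache_id, mask in masks.items():
        if not is_valid_cbm(mask, width):
            raise ValueError(f"invalid capacity bitmask {mask:#x} for cache {cache_id}")
    return resource + ":" + ";".join(f"{cid}={mask:x}" for cid, mask in sorted(masks.items()))


if __name__ == "__main__":
    # Hypothetical split: 8 ways on cache 0 and 4 ways on cache 1 for one group.
    print(schemata_line("L3", {0: 0xFF000, 1: 0x0000F}))
```

A non-contiguous mask such as 0b1011 is rejected before anything is formatted, mirroring what the hardware would refuse.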

You also get a spate of enhanced security features: Crypto Speedup (the ADCX/ADOX instructions) for faster data encryption and decryption, network security and trusted compute pools; a new random seed generator (RDSEED); Supervisor Mode Access Prevention (SMAP); and Virtualization Exception (#VE) technology.
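The point of ADCX and ADOX is that ADCX reads and writes only the carry flag (CF) while ADOX uses only the overflow flag (OF), so two multi-precision additions can be interleaved without one clobbering the other's carry. That pattern speeds up big-number arithmetic such as RSA. Python has no access to CPU flags, so the sketch below simply models the two flags as separate variables over 64-bit limbs; the function names are hypothetical.

```python
MASK64 = (1 << 64) - 1


def add_limb(a: int, b: int, carry: int) -> tuple[int, int]:
    """One 64-bit add-with-carry step: returns (sum limb, carry out)."""
    total = a + b + carry
    return total & MASK64, total >> 64


def to_limbs(n: int, count: int) -> list[int]:
    """Split a non-negative integer into `count` little-endian 64-bit limbs."""
    return [(n >> (64 * i)) & MASK64 for i in range(count)]


def from_limbs(limbs: list[int]) -> int:
    """Reassemble little-endian 64-bit limbs into an integer."""
    return sum(limb << (64 * i) for i, limb in enumerate(limbs))


def dual_chain_add(x: list[int], y: list[int], z: list[int], w: list[int]) -> tuple[list[int], list[int]]:
    """Compute x+y and z+w in one interleaved pass over equal-length limb lists.

    `cf` stands in for the carry flag (the ADCX chain) and `of` for the
    overflow flag (the ADOX chain); because they are independent, neither
    chain has to wait for, or save, the other's carry.
    """
    cf = of = 0
    first, second = [], []
    for a, b, c, d in zip(x, y, z, w):
        s, cf = add_limb(a, b, cf)  # chain 1 (ADCX-style, CF only)
        first.append(s)
        t, of = add_limb(c, d, of)  # chain 2 (ADOX-style, OF only)
        second.append(t)
    return first + [cf], second + [of]


if __name__ == "__main__":
    p, q = 2**200 - 1, 3**120
    r, s = 2**255 + 12345, 7**90
    n = 4  # 4 x 64 = 256 bits per operand
    u, v = dual_chain_add(to_limbs(p, n), to_limbs(q, n), to_limbs(r, n), to_limbs(s, n))
    print(from_limbs(u) == p + q, from_limbs(v) == r + s)
```

Real multiplication routines use this interleaving to accumulate two rows of partial products at once; the addition above is the simplest case that shows the two carries staying separate.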

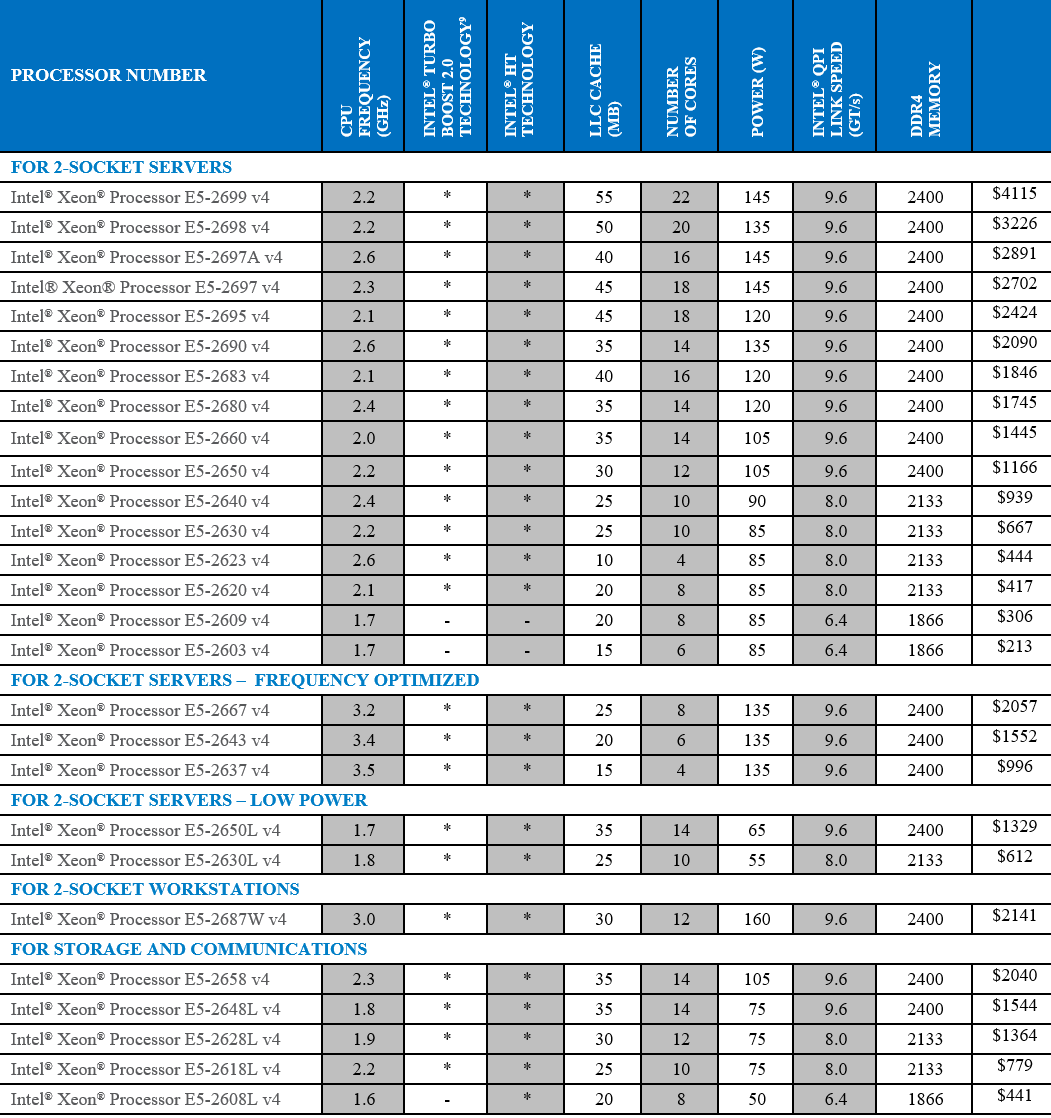

Models And Pricing


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


utroz: Hmm, well, we know that Broadwell-E chips must be coming very, very soon if Intel let this info out.

bit_user: Wasn't there supposed to be a 4-core 5.0 GHz SKU? Single-thread performance still matters, in many cases.


turkey3_scratch:

> Wasn't there supposed to be a 4-core 5.0 GHz SKU? Single-thread performance still matters, in many cases.

In most server applications it doesn't matter as much as multithreaded performance. If you need single-core strength, getting a consumer chip is actually better, but you probably aren't running a server if single-threaded is your focus.

PaulyAlcorn:

> Wasn't there supposed to be a 4-core 5.0 GHz SKU? Single-thread performance still matters, in many cases.

I read the rumors on that as well, but nothing official has surfaced as of yet to my knowledge.

bit_user:

> > Wasn't there supposed to be a 4-core 5.0 GHz SKU? Single-thread performance still matters, in many cases.
>
> In most server applications it doesn't matter as much as multithreaded performance. If you need single-core strength, getting a consumer chip is actually better, but you probably aren't running a server if single-threaded is your focus.

Try telling that to high-frequency traders. I'm sure they want the reliability features of Xeons (ECC, for example), but also the highest clock speed available.

And the fact that Intel even released low-core-count, high-clock SKUs is an acknowledgement of this continuing need. The clocks just aren't as high as I'd read. With the other specs basically matching the Haswell versions, the only difference is a ~5% IPC improvement. That seems like a pretty poor improvement for a die shrink.


firefoxx04: It would be nice to have a quad-core Xeon that turbos to 4.4GHz, just like the 4790K. I had to go with a 4690K when building an AutoCAD system because it only uses one core and needs that core to be fast... this means I have to sacrifice ECC support.

bit_user:

> > Wasn't there supposed to be a 4-core 5.0 GHz SKU? Single-thread performance still matters, in many cases.
>
> I read the rumors on that as well, but nothing official has surfaced as of yet to my knowledge.

On wccftech (not the most reliable source, I know), they claimed:

- Model: Intel Xeon E5-2602 V4
- Cores/threads: 4/8
- Base clock: 5.1 GHz
- Turbo clock: TBD
- L3 cache: 5 MB
- TDP: 165W

Given what we know about 2.5 MB of L3 cache per core, the 5 MB figure sounds suspicious: four cores would suggest 10 MB. It's conceivable they could disable some to hit the target TDP, I guess.


firefoxx04: We can't get Skylake to consistently hit 5GHz... why would a Xeon chip suddenly hit 5GHz?

JamesSneed:

> We can't get Skylake to consistently hit 5GHz... why would a Xeon chip suddenly hit 5GHz?

I'm not saying the 5GHz rumor is true, but Intel has always known during certification which chips can hit higher clocks, whether a chip ends up as a top-end part or a low-end part with cores disabled, etc. I'm sure they could cherry-pick a few to sell for $$$ if they wanted. Whether they are, I have no real idea.

bit_user:

> We can't get Skylake to consistently hit 5GHz... why would a Xeon chip suddenly hit 5GHz?

Well, I was surprised, too.

There are obviously things you can do in chip design that allow you to reach different timing targets. And I was hoping they might've refined their 14 nm process since the first Broadwells launched. So, I thought, with more TDP headroom afforded by this socket (roughly double what Skylake has to work with), maybe they could do it.

I thought maybe Intel was addressing some pent-up demand for high clockspeed applications. That said, it seemed particularly odd in Broadwell, given that it generally seems oriented towards lower clockspeed / lower power applications.

But maybe it was a typo, or even a blatant lie, in order to track down leakers.