Tachyum's First CPUs Are Late, But It Plans 20 ExaFLOPS Supercomputer With Next-Gen CPUs Anyway

Tachyum is now trying to sell a 20 ExaFLOPS/10 AI ZettaFLOPS supercomputer design.

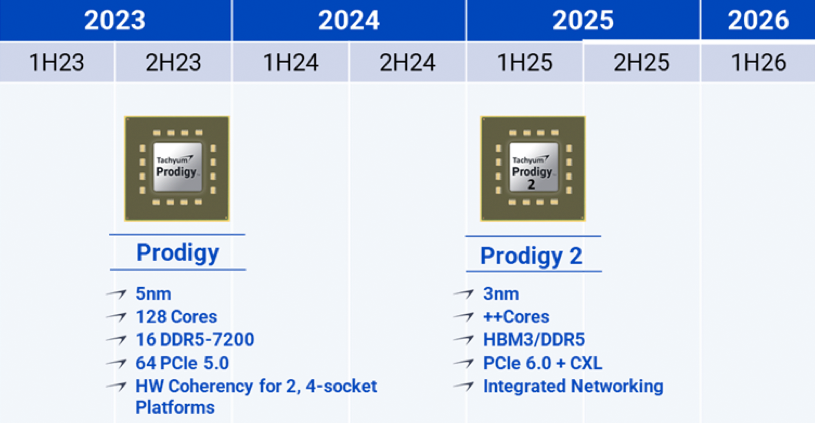

For three years, Tachyum has touted its Prodigy universal processor with promises that it would be better than CPUs and GPUs from the likes of AMD, Intel, and Nvidia. However, the chip is still not in production, even though it was originally projected to reach full volume production back in 2021. Now the company appears to be looking further ahead, touting a Prodigy 2-based supercomputer design that promises 20 ExaFLOPS of performance in the 2025 to 2026 timeframe.

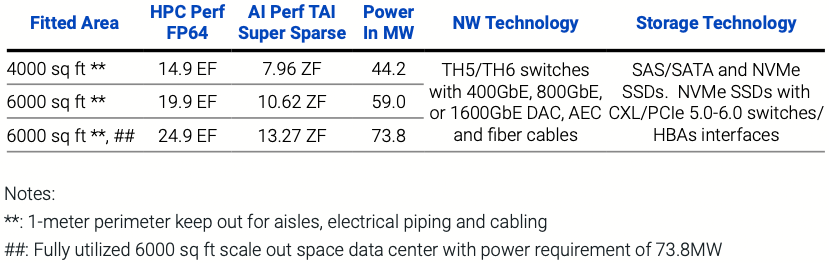

Tachyum's supercomputer design is meant to deliver 20 FP64 vector ExaFLOPS and 10 AI (INT8 or FP8) ZettaFLOPS of performance within a 60MW power envelope and a 6,000-square-foot footprint in 2025, the company said. The machine will use 64 Prodigy 2-based cabinets and 16 storage racks, but Tachyum did not disclose how many Prodigy 2 processors it would need to deliver this much performance.

Tachyum also said it could build a supercomputer offering 24.9 FP64 vector ExaFLOPS and 13.27 AI ZettaFLOPS while consuming 73.8MW. To put these numbers into context, the upcoming El Capitan supercomputer, powered by AMD's Instinct MI300 datacenter APUs, is set to deliver around 2 FP64 ExaFLOPS.
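For a rough sense of scale, the two configurations can be reduced to energy efficiency (GFLOPS per watt) and compared against El Capitan's roughly 2 FP64 ExaFLOPS. The short Python sketch below does only that arithmetic on Tachyum's claimed figures; the dictionary keys `baseline` and `larger` are labels chosen here for illustration, and the inputs are vendor projections, not measured results.

```python
# Vendor-claimed figures from Tachyum's announcement (not measurements).
# Units: FP64 vector ExaFLOPS (1e18 FLOPS) and megawatts (1e6 W).
CONFIGS = {
    "baseline": (20.0, 60.0),   # 20 EF at 60 MW
    "larger": (24.9, 73.8),     # 24.9 EF at 73.8 MW
}
EL_CAPITAN_EF = 2.0  # El Capitan's expected FP64 performance, approximately


def gflops_per_watt(exaflops: float, megawatts: float) -> float:
    """Convert an ExaFLOPS figure and a power draw in MW into GFLOPS per watt."""
    flops = exaflops * 1e18
    watts = megawatts * 1e6
    return flops / watts / 1e9


for name, (ef, mw) in CONFIGS.items():
    eff = gflops_per_watt(ef, mw)
    ratio = ef / EL_CAPITAN_EF
    print(f"{name}: {eff:.1f} GFLOPS/W, {ratio:.2f}x El Capitan's FP64 figure")
```

Both configurations work out to roughly 333 to 337 GFLOPS/W, so the larger machine is essentially the same design scaled up rather than a more efficient one, and it would offer about 12.5 times El Capitan's expected FP64 throughput on paper.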

Last year the U.S. Department of Energy expressed interest in procuring a 20 ExaFLOPS supercomputer with power consumption of 20MW-60MW by 2025. Tachyum said it had submitted a proposal to construct such a system by 2025 but did not elaborate on the details. Since the company's original Prodigy did not meet Tachyum's performance goals (which is why it sued Cadence), it was reasonable to assume the company would use its 2nd-Gen Prodigy (which the company had talked about before) for the machine.

The supercomputer design Tachyum revealed this week essentially complies with the DoE's requirements, though the company does not disclose the performance levels it expects from its Prodigy 2.
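The DoE goal of 20 ExaFLOPS within 20MW to 60MW implies a required efficiency somewhere between about 333 and 1,000 GFLOPS/W. The sketch below checks Tachyum's two announced configurations against that goal. It assumes, for illustration, that only the 60MW upper bound acts as a pass/fail limit; the `Target` class and function names are hypothetical helpers, not anything published by the DoE or Tachyum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    """Hypothetical pass/fail target: minimum performance, maximum power."""
    min_exaflops: float
    max_megawatts: float


# Assumption: treat the DoE's 20 ExaFLOPS / 20-60MW goal as
# "at least 20 EF at no more than 60 MW".
DOE_TARGET = Target(min_exaflops=20.0, max_megawatts=60.0)


def meets_target(exaflops: float, megawatts: float, target: Target = DOE_TARGET) -> bool:
    return exaflops >= target.min_exaflops and megawatts <= target.max_megawatts


def required_efficiency_range(exaflops: float = 20.0, low_mw: float = 20.0,
                              high_mw: float = 60.0) -> tuple[float, float]:
    """GFLOPS/W needed to reach `exaflops` at the top and bottom of the power range."""
    def gflops_per_w(mw: float) -> float:
        return exaflops * 1e18 / (mw * 1e6) / 1e9
    return gflops_per_w(high_mw), gflops_per_w(low_mw)


print(required_efficiency_range())
print(meets_target(20.0, 60.0), meets_target(24.9, 73.8))
```

Under this reading, the 20 ExaFLOPS/60MW configuration sits right at the edge of the envelope, while the 24.9 ExaFLOPS variant exceeds the 60MW ceiling.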

Tachyum says its Prodigy processor is the first-ever universal processor that can handle a wide range of demanding computing workloads. The original Prodigy processor contains 128 proprietary 64-bit VLIW cores with two 1024-bit vector units and one 4096-bit matrix unit per core. The flagship Prodigy T16128-AIX processor was expected to provide a maximum of 90 FP64 teraflops for high-performance computing (HPC) and up to 12 'AI petaflops' for AI inference and training (with INT8 or FP8 precision). In addition, each chip was projected to have a power consumption of up to 950W and employ liquid cooling.
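As a back-of-the-envelope illustration of why a second-generation chip would be needed, the sketch below applies the first-generation Prodigy projections (90 FP64 TFLOPS and up to 950W per chip) to a 20 ExaFLOPS target. This is purely hypothetical: it assumes peak throughput scales linearly across chips and ignores memory, interconnect, cooling, and storage, and Prodigy 2's actual figures have not been disclosed.

```python
import math

# First-generation Prodigy T16128-AIX projections (vendor figures, per chip).
PRODIGY1_FP64_TFLOPS = 90.0
PRODIGY1_WATTS = 950.0


def chip_efficiency_gflops_per_w() -> float:
    """Peak FP64 GFLOPS per watt for one first-gen chip."""
    return PRODIGY1_FP64_TFLOPS * 1e3 / PRODIGY1_WATTS


def hypothetical_chip_count(target_exaflops: float = 20.0) -> int:
    # Hypothetical: assumes perfect linear scaling of peak FP64 throughput.
    return math.ceil(target_exaflops * 1e18 / (PRODIGY1_FP64_TFLOPS * 1e12))


def chips_power_mw(chips: int) -> float:
    # Counts processor power only; every other system component is ignored.
    return chips * PRODIGY1_WATTS / 1e6


n = hypothetical_chip_count()
print(n, round(chips_power_mw(n), 1), round(chip_efficiency_gflops_per_w(), 1))
```

At roughly 95 GFLOPS/W per chip, a first-gen-based machine would need over 222,000 processors drawing about 211MW for the CPUs alone, far beyond a 60MW budget, which is consistent with Tachyum pinning the design on Prodigy 2.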

Tachyum's Prodigy 2 is expected to increase the number of cores, and add HBM3, PCIe 6.0 + CXL, and integrated networking capabilities.


"Tachyum provides leading-edge solutions from silicon to complete systems to address the ever-increasing demands for both HPC and AI," said Radoslav Danilak, founder and CEO of Tachyum. "Tachyum-designed supercomputers push the forefront of HPC performance while crossing the zetta-scale barrier for AI, transforming data centers into universal computing centers."

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

bit_user Even if they finally manage to deliver some working hardware, it still remains to be seen how well it will perform on real-world tasks, using existing codebases. The HPC world has a long history of lofty promises that don't translate well into usable performance.

By comparison, I think it's instructive to see the approach taken by Tenstorrent: start small and build your way up. Not only iterating on the hardware, but also the software, as you go.

Also, I think the bar for HPC is getting set ever higher, because you not only need to deliver leading performance, but also with extremely good efficiency. Intel learned this the hard way: it's very difficult to beat GPUs with something that's not a GPU. Discrete GPUs have all the same sorts of demands: compute, bandwidth, and efficiency. Even though HPC accelerators have diverged from consumer GPUs, they still share a lot of commonality.

inder2 I've watched two years of nothing but promises and no progress; it's like something out of Alice in Wonderland. This man is out of touch with reality with these performance promises...

inder2 The liberality to publish anything for cash (sci-fi hardware)... I congratulate Tom's Hardware, our employers are specialists ;-)