Talking Threadripper 3990X With AMD's Robert Hallock

AMD filled us in on a few details of the Threadripper 3990X

Update 2/7/2020: Check out our AMD Ryzen Threadripper 3990X review to see how the chip stacks up against the competition.

We had a chance to speak with Robert Hallock, AMD's Senior Technical Marketing Manager, at CES 2020 to learn more about the company's latest flagship part. AMD had already revealed more details surrounding its Threadripper 3990X, putting firm numbers behind the beastly 64-core 128-thread chip that we now know will come with a suggested price of $3,990 and land on February 7, 2020, but as usual, until we have the silicon in our hands, we have plenty of questions.

Will There be a 48-Core Threadripper?

Intel is woefully incapable of responding to AMD's existing 32-core Threadripper 3970X, so dropping the 64-core 3990X is akin to delivering a killing blow in HEDT: AMD says this single processor can outperform two of Intel's $10,000 Xeon 8280s in some workloads.

However, there's a ~$2,000 gap in pricing between the 32-core 3970X and the 64-core 3990X, not to mention the obvious gap of 32 cores. That leaves a middle ground where a 48-core model, which has purportedly been listed in CPU-Z's source code, would make a lot of sense.

But Hallock told us the company currently has no plans for a 48-core model. That's because, based on last year's Threadripper sales, AMD noticed that customers tend to jump right to the top of the stack or choose the "sweet spot" product, which AMD sees as the 32-core Threadripper 3970X. That means, at least for now, the company doesn't plan to plug that gap in its product stack.

Threadripper 3990X Performance Scaling and Memory Recommendations

| Model | Cores / Threads | Base / Boost (GHz) | TDP | MSRP |
|---|---|---|---|---|
| Threadripper 3990X | 64 / 128 | 2.9 / 4.3 | 280W | $3,990 |
| EPYC 7742 | 64 / 128 | 2.25 / 3.4 | 225W | $6,950 |

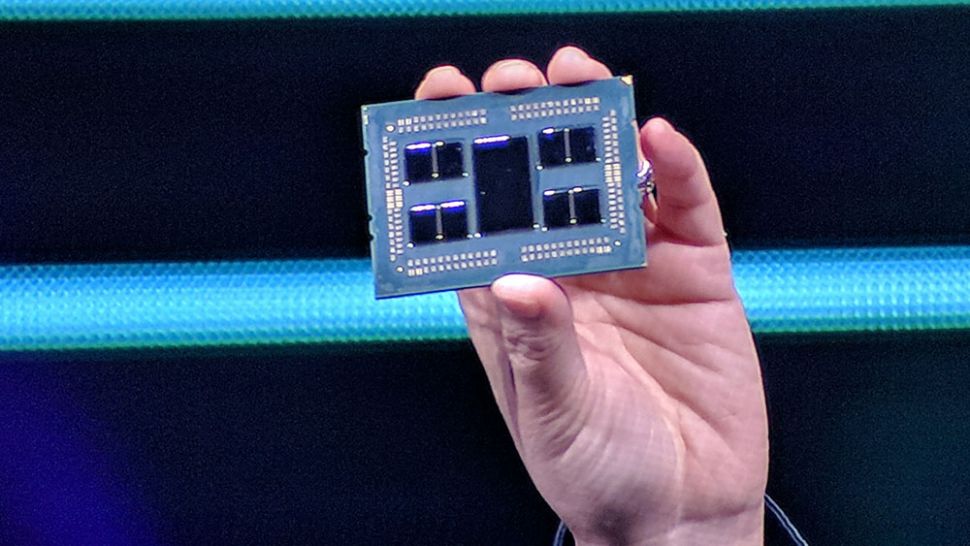

But bringing forward a 64-core part is a daunting engineering challenge, and while the Threadripper 3990X uses the same fundamental design as the high-end EPYC Rome data center chips, it isn't 'just' a re-badged EPYC processor. AMD has pushed the clocks up from the EPYC 7742's 2.25 GHz base and 3.4 GHz boost to an impressive 2.9 GHz base and 4.3 GHz boost.
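For a sense of scale, here is the arithmetic behind the spec-sheet figures in the table as a short Python sketch. The `SPECS` dictionary and `pct_increase` helper are our own illustrative names, and these are rated clocks, not measured sustained frequencies.

```python
# Spec-sheet figures from the table (base/boost in GHz, TDP in watts, MSRP in USD).
SPECS = {
    "Threadripper 3990X": {"base": 2.9, "boost": 4.3, "tdp": 280, "msrp": 3990},
    "EPYC 7742": {"base": 2.25, "boost": 3.4, "tdp": 225, "msrp": 6950},
}


def pct_increase(new: float, old: float) -> float:
    """Percentage change going from old to new."""
    return (new / old - 1.0) * 100.0


tr = SPECS["Threadripper 3990X"]
epyc = SPECS["EPYC 7742"]

base_uplift = pct_increase(tr["base"], epyc["base"])     # roughly 28.9%
boost_uplift = pct_increase(tr["boost"], epyc["boost"])  # roughly 26.5%
tdp_uplift = pct_increase(tr["tdp"], epyc["tdp"])        # roughly 24.4%
price_gap = epyc["msrp"] - tr["msrp"]                    # USD

print(f"Base clock: +{base_uplift:.1f}%")
print(f"Boost clock: +{boost_uplift:.1f}%")
print(f"TDP: +{tdp_uplift:.1f}%")
print(f"EPYC 7742 list price is ${price_gap:,} higher")
```

The clock increases land in the same range as the extra TDP headroom, which is roughly what you would expect from running the same silicon design at a higher power budget.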

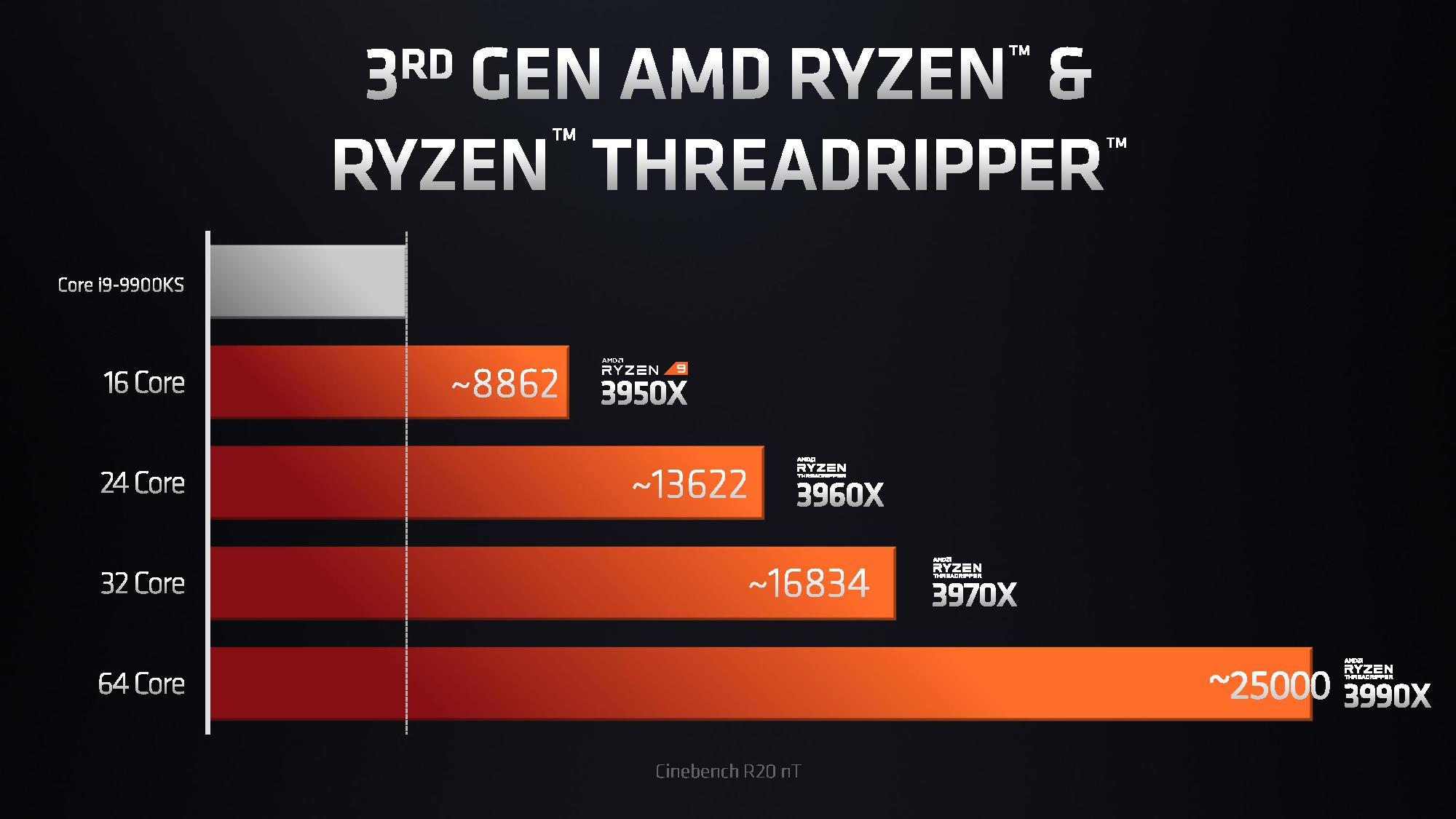

Hallock told us that the 3990X's faster clocks are exceedingly important for some heavy applications, particularly in the rendering field, but as with other processors, performance doesn't scale linearly with core count in most threaded workloads. That means doubling the core count from 32 to 64 doesn't double performance. Even in the scaling-friendly Cinebench tests that AMD shared, performance only increases ~48%. We asked Hallock whether that is a function of power limits, memory bandwidth limits, or some combination of both.
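One way to read that ~48% figure is through Amdahl's law, which models a workload as a serial part plus a perfectly parallel part. This is our framing, not AMD's: the sketch solves for the parallel fraction that would turn a 32-to-64-core jump into a 1.48x gain, and it deliberately ignores clock-speed differences between the two chips as well as the other bottlenecks Hallock describes. The function names are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup over one core when only part of the work parallelizes."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


def implied_parallel_fraction(ratio: float, cores_before: int, cores_after: int) -> float:
    """Solve Amdahl's law for the parallel fraction p that makes going from
    cores_before (a) to cores_after (b) produce the observed speedup ratio.

    (1 - p + p/a) / (1 - p + p/b) = ratio  rearranges to
    p = (ratio - 1) / ((ratio - 1) + 1/a - ratio/b)
    """
    a, b = cores_before, cores_after
    return (ratio - 1.0) / ((ratio - 1.0) + 1.0 / a - ratio / b)


# ~48% more Cinebench performance from 32 to 64 cores, per AMD's figures.
p = implied_parallel_fraction(1.48, 32, 64)
print(f"Implied parallel fraction: {p:.4f}")

# Sanity check: plugging p back into Amdahl's law reproduces the 1.48x ratio.
print(f"Check: {amdahl_speedup(p, 64) / amdahl_speedup(p, 32):.2f}x")
```

The answer comes out to roughly 98% parallel, which shows how unforgiving the math is: under this model, even a serial slice of under 2% is enough to hold a doubling of cores to a 48% gain.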

Hallock explained that the bottleneck "suddenly shifts to really weird places once you start to increase the core counts." Some areas that typically aren't a restriction, like disk I/O, can hamper performance in high core-count machines. For instance, development houses exclude project files from Windows Defender scans to reduce the impact of disk I/O during compile workloads.


Hallock doesn't see memory throughput as a limitation in the majority of workloads, but told us that memory capacity per core is actually one of the biggest challenges. To that end, Hallock said that compile workloads require a bare minimum of 1GB per core (64GB total), and that 2GB per core (128GB total) is better. That means you'll need to budget for a beefy memory kit in a 3990X build.
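Turning Hallock's per-core guidance into a shopping list is simple arithmetic. The sketch assumes an eight-slot TRX40 board populated with either four or eight identical DIMMs (one or two per channel, so all four channels stay balanced) and 8GB, 16GB, or 32GB modules. Those module sizes, the slot count, and the helper names are assumptions for illustration.

```python
from itertools import product

CORES = 64
CHANNELS = 4                             # the 3990X is quad-channel on TRX40
DIMM_COUNTS = (CHANNELS, 2 * CHANNELS)   # assumption: 1 or 2 DIMMs per channel, 8 slots
DIMM_SIZES_GB = (8, 16, 32)              # assumption: common DDR4 module sizes


def target_capacity_gb(cores: int, gb_per_core: float) -> float:
    """Total memory implied by a per-core capacity rule."""
    return cores * gb_per_core


def balanced_kits(target_gb: float) -> list[tuple[int, int, int]]:
    """All (dimm_count, dimm_size_gb, total_gb) configurations that populate
    every channel evenly and meet target_gb, smallest total first."""
    kits = [
        (count, size, count * size)
        for count, size in product(DIMM_COUNTS, DIMM_SIZES_GB)
        if count * size >= target_gb
    ]
    return sorted(kits, key=lambda kit: (kit[2], kit[0]))


for gb_per_core in (1, 2):
    target = target_capacity_gb(CORES, gb_per_core)
    kits = balanced_kits(target)
    smallest_total = kits[0][2]
    options = [f"{count}x{size}GB" for count, size, total in kits if total == smallest_total]
    print(f"{gb_per_core}GB per core -> {target:.0f}GB: {' or '.join(options)}")
```

Under these assumptions, the 1GB-per-core floor lands on 4x16GB or 8x8GB, and the 2GB-per-core recommendation on 4x32GB or 8x16GB.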

We drilled down on memory throughput as a potential limitation. The Threadripper 3990X only has access to quad-channel memory, while the comparable EPYC Rome data center parts have access to eight channels of DDR4 memory. The four-channel limit is necessary for compatibility with TRX40 motherboards, and it also helps maintain segmentation between the consumer and enterprise product stacks.

Without enough memory throughput, processor cores suffer from bandwidth starvation that can result in sub-par performance. Memory throughput keeps a processor's cores fed with enough data to operate at full speed, so chips with more cores typically require more aggregate throughput during memory-intensive workloads to ensure that each individual core is adequately fed.

One of AMD's strengths lies in its core-heavy processors, but we've already seen signs of memory throughput restrictions with the dual-channel 16-core Ryzen 9 3950X. For instance, the 16-core 3950X has access to roughly the same aggregate memory throughput as the 12-core 3900X, yielding lower per-core memory bandwidth, but surprisingly, that doesn't seem to have much impact on most applications.
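The per-core squeeze is easy to quantify with theoretical peak numbers. This sketch assumes every chip runs DDR4-3200, the rated memory speed for all four parts, and computes peak bandwidth as channels x transfer rate x 8 bytes per 64-bit channel. Real sustained bandwidth is lower, and caches absorb much of the demand, so treat these as upper bounds.

```python
DDR4_MT_S = 3200          # assumption: DDR4-3200 on every chip
BYTES_PER_TRANSFER = 8    # each DDR4 channel is 64 bits wide

CHIPS = {
    # name: (cores, memory channels)
    "Ryzen 9 3900X": (12, 2),
    "Ryzen 9 3950X": (16, 2),
    "Threadripper 3990X": (64, 4),
    "EPYC 7742": (64, 8),
}


def peak_bandwidth_gbs(channels: int, mt_s: int = DDR4_MT_S) -> float:
    """Theoretical peak bandwidth in GB/s: MT/s x bytes per transfer = MB/s, / 1000."""
    return channels * mt_s * BYTES_PER_TRANSFER / 1000


for name, (cores, channels) in CHIPS.items():
    total = peak_bandwidth_gbs(channels)
    print(f"{name:20s} {total:6.1f} GB/s total, {total / cores:4.2f} GB/s per core")
```

On paper, the 3990X's 102.4 GB/s works out to 1.6 GB/s per core, half of what the 3950X (51.2 GB/s across 16 cores) and the eight-channel EPYC 7742 (204.8 GB/s across 64 cores) offer.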

We asked Hallock how having 'only' four channels of memory would impact performance with the 3990X, and how the company has managed to wring so much performance from chips with potentially constrained memory throughput.

"We don't see a lot of memory bandwidth starvation with 64 cores," Hallock explained, though he did specify that some simulation work, like DigiCortex, is faster with more memory throughput. However, most workloads are fine and Hallock feels that concerns about memory throughput are largely a legacy impression, and that DDR4 memory provides plenty of throughput with relatively few channels. AMD has also made architectural decisions to keep data on-chip, like an almost unthinkable 288MB of total cache, a third AGU (Address Generation Unit), and larger opcaches, among other changes, that help keep data in the caches to overcome memory bandwidth challenges.

Workload intensity is another factor that limits scaling. Small compile jobs, like the Linux kernel or PHP, suffer because they simply run so fast that they can't unleash the full power of the cores, while heavier jobs, like compiling Android or the Unreal Engine, are demanding enough over a long period of time to fully exercise the cores.
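A toy model shows why job size matters. Assume each build has a fixed serial portion (configuration, final linking) plus compile work that spreads perfectly across cores. The 20-second overhead and the work amounts are made-up numbers for illustration, not measurements of the Linux kernel, PHP, Android, or Unreal Engine builds, and the function names are hypothetical.

```python
def job_time(serial_s: float, parallel_core_s: float, cores: int) -> float:
    """Wall-clock seconds for a job with a fixed serial portion plus perfectly
    parallel work (measured in core-seconds) spread evenly across `cores`."""
    return serial_s + parallel_core_s / cores


def speedup_32_to_64(serial_s: float, parallel_core_s: float) -> float:
    """How much faster the same job finishes on 64 cores than on 32."""
    return job_time(serial_s, parallel_core_s, 32) / job_time(serial_s, parallel_core_s, 64)


# Hypothetical jobs: both carry 20 s of serial work,
# but the large build has 100x more parallel compile work.
small = speedup_32_to_64(serial_s=20, parallel_core_s=3_200)    # 120 s -> 70 s
large = speedup_32_to_64(serial_s=20, parallel_core_s=320_000)  # 10,020 s -> 5,020 s

print(f"Small build: {small:.2f}x faster on 64 cores")
print(f"Large build: {large:.2f}x faster on 64 cores")
```

With the same fixed overhead, the small build gains only about 1.7x from doubling cores, while the large build gets close to the ideal 2x because the serial portion is a rounding error next to hours of parallel work.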

Those same concepts apply to video encoding: For instance, 8K HEVC encoding scales much better than H.264. Hallock says you can expect a 60% to 70% increase in rendering performance when doubling the core count, and noted that if your render jobs take half an hour, the 3990X probably isn't for you. However, if you measure your render time in hours per frame, the 3990X makes a lot of sense. Rendering is obviously one of the key target applications for the 3990X, so we'll certainly spin up 8K HEVC testing for our upcoming review.
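Hallock's rule of thumb comes down to absolute time saved. A rough sketch, assuming his 60% to 70% range (1.6x to 1.7x) and comparing a half-hour job against a hypothetical four-hour-per-frame render; `time_saved` is an illustrative helper, not a benchmark.

```python
def time_saved(baseline_hours: float, speedup: float) -> float:
    """Hours saved when a job that took baseline_hours runs `speedup` times faster."""
    return baseline_hours - baseline_hours / speedup


# A half-hour job vs. a hypothetical four-hour-per-frame render.
for baseline_hours in (0.5, 4.0):
    for speedup in (1.6, 1.7):  # Hallock's 60% to 70% estimate for doubling cores
        saved_minutes = time_saved(baseline_hours, speedup) * 60
        print(f"{baseline_hours} h job at {speedup}x: saves {saved_minutes:.0f} min")
```

The half-hour job saves roughly 11 to 12 minutes, while each four-hour frame saves roughly 90 to 99 minutes, and that saving repeats for every frame in a sequence.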

Threadripper 3990X Overclocking

We'll have these chips in the lab soon, so we asked Hallock if there are any significant changes to overclocking, and he recommended we follow the standard practice of using the auto-overclocking PBO (Precision Boost Overdrive) and beefy cooling for the best results.

Hallock said that, generally, you can expect similar memory overclocking to what we see with other Threadripper models, but that also means that the chips follow the standard rule of reduced OC headroom as DIMM capacity increases. That's important given the hefty capacity recommendations, but as Hallock pointed out, workloads that benefit from capacity typically aren't as sensitive to memory performance.

Hallock said a 64GB kit should overclock to DDR4-3600 fairly easily, while results with 128GB kits will vary. As expected, it's safe to say you'll see extremely limited overclocking, if any, with 256GB kits. In any case, we don't think most users who drop $4,000 on a processor are particularly focused on overclocking.

Threadripper and the Workstation Market

AMD has the undisputed lead in workstation-class performance: Cascade Lake-X simply doesn't hold a candle to Threadripper in threaded workloads. However, we don't see many workstations built around these powerful chips, especially compared to the number of Cascade Lake-X OEM workstations on the market.

"That's a slow crank to turn," Hallock remarked, citing that the workstation market handles much like server markets that prize staying power (like proven execution on roadmaps) and have much longer refresh cycles. AMD is still a relative newcomer to this space, and expense validation cycles are also a critical factor: In many cases, validation costs can rival the cost of procuring new hardware. Cascade Lake-X has the benefit of backwards compatibility with systems that have already been through the validation process, which also equates to more staying power in that market segment. Meanwhile, AMD moved to the new TRX40 platform with Threadripper to infuse new capabilities.

Either way, Hallock expects AMD's standing in the market to keep growing as the company takes the performance leadership position, and says the company is certainly getting a lot more 'cold calls' than it ever has from customers interested in the platform.

Threadripper 3990X or EPYC Rome?

Finally, the single-socket EPYC platform is extremely compelling due to its hefty allotment of I/O combined with its class-leading performance density; there simply isn't a single-socket Intel platform that offers such a well-rounded set of capabilities. However, the 3990X looks, and from a performance standpoint acts, a lot like the EPYC Rome flagship parts. That could create a problem: comparable EPYC processors cost roughly $3,000 more than the 3990X. AMD has to walk a fine line that gives the 3990X differentiated value for professional users but also doesn't give data center customers a reason to opt for the 3990X instead of the more expensive EPYC parts.

We asked Hallock what would compel a data center user to select a single-socket EPYC platform over a Threadripper 3990X, and Hallock explained that it boils down to memory-throughput-bound workloads:

"If you're doing design simulation, like computational fluid dynamics, cellular simulation, brain neuron simulations, take EPYC because the extra memory channels will put you over the hump, and those [workloads] are very bandwidth-intensive. If you are not bandwidth-intensive, we've got clock on Threadripper. The VFX market doesn’t care about memory channels at all. It's all capacity and boost. That’s what they care about. And that’s why a single socket product like this, not EPYC, makes sense for VFX."

That differentiation between the two platforms does help separate the data center and workstation-class lineups, but we're sure that plenty of HPC and HFT (high-frequency trading) customers will be interested in the new Threadripper 3990X, not only due to its higher boost frequencies, but also its overclockability. AMD does produce higher-TDP EPYC Rome processors for the high-frequency trading market, but it remains unclear whether those parts can use the PBO feature to extract the utmost performance from exotic cooling solutions, or whether AMD allows memory overclocking on those data center chips.

All of this is to say that, despite AMD's reduction in the 3990X's memory channels, it's logical to expect that many of these chips will also be highly sought after for HFT applications. Of course, there's zero doubt they will find fast uptake in the VFX market.

It'll be interesting to see how AMD straddles the line between the two markets, but it all starts with the reviews. AMD's official launch date lands on February 7, 2020, and as you can imagine, we'll have the full performance data then.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

GustavoVanni All 3rd gen Ryzen Threadrippers are 280W TDP CPUs.

Not 180W as said in the article.

https://www.amd.com/en/products/cpu/amd-ryzen-threadripper-3990x

Paul Alcorn Good eye! Thanks, fixed (Ouch, what a noobie mistake)

bit_user Nice interview, @PaulAlcorn. I like how you hammered on memory bottlenecks - that would be my concern about running a 64-core threadripper.

If Hallock's statements are taken at face value, then I'd guess where Epyc's 8-channels really come into play are:

- Dual-CPU setups, where you could actually have more than 64 cores beating on a single CPU's memory.
- Servers with high I/O load - such as from NVMe drives, high-density networking setups, or even GPU/AI accelerators. These devices all tend to work mainly to/from buffers in RAM.

Still, I'm skeptical that a great number of workloads wouldn't scale better to 64 cores, with 8-channel memory. I look forward to Threadripper memory overclocking benchmarks hopefully shedding more light onto this situation.

Where I think a 48-core Threadripper could make sense is specifically for workloads that do hit bottlenecks at 64-cores. In those cases, 48 cores should offer a bit more performance than 32, but would be more cost-effective and perhaps also more power-efficient.