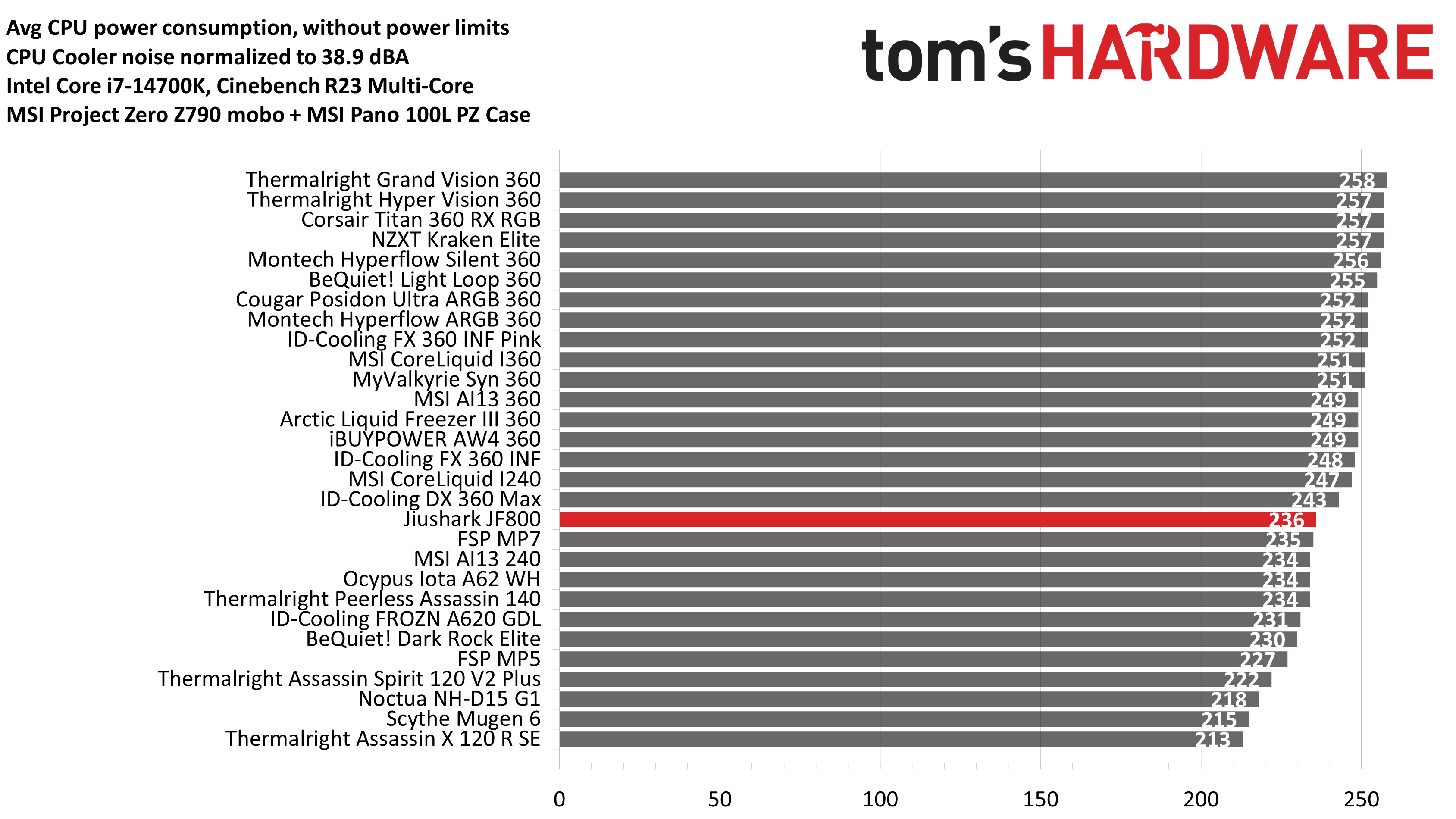

CPU-only thermal results without power limits

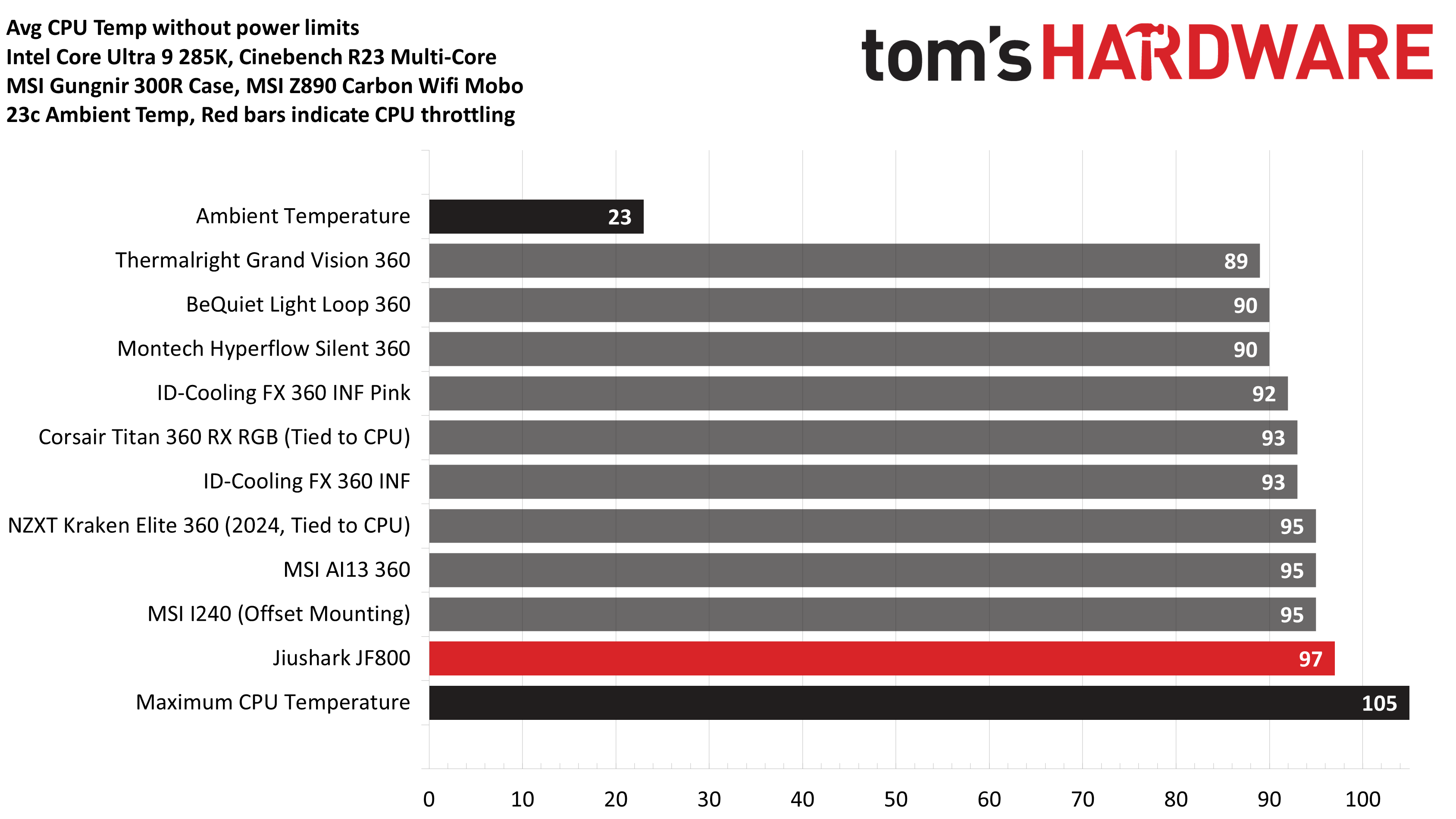

Without power limits enforced on Intel’s Core Ultra 9 285K and Core i7-14700K CPUs, the CPU will hit its peak temperature (TJ Max) and thermally throttle with almost all air coolers and even most liquid coolers on the market. For the best liquid coolers, which can keep the CPU below that limit, the results of this test are shown using the CPU’s temperature. When the CPU does reach its peak temperature, I’ve instead measured the CPU package power to determine the maximum wattage cooled, which is the best way to compare performance among coolers. It’s important to note that thermal performance can scale differently depending on the CPU a cooler is tested with.

We’ll start by looking at the performance of this cooler with Intel’s Arrow Lake Core Ultra 9 285K, as these results are rather interesting!

Normally, when I’ve tested air coolers with Intel’s Core Ultra 9 285K, they run with the CPU operating at peak temperature and experience small amounts of thermal throttling as a result. Every air cooler I’ve tested thus far has demonstrated this behavior, until now! When I tested Jiushark’s JF800 Diamond, the CPU did momentarily peak at maximum temperature, but it operated without throttling, making this the first air cooler I’ve tested to accomplish this.

That said, Arrow Lake’s thermal characteristics have changed a bit since the launch due to both Windows and BIOS updates, which have altered its behavior. I’m going to need to retest coolers like Thermalright’s Peerless Assassin 140 as a result. It’s quite possible that other coolers can also manage this feat with Arrow Lake now.

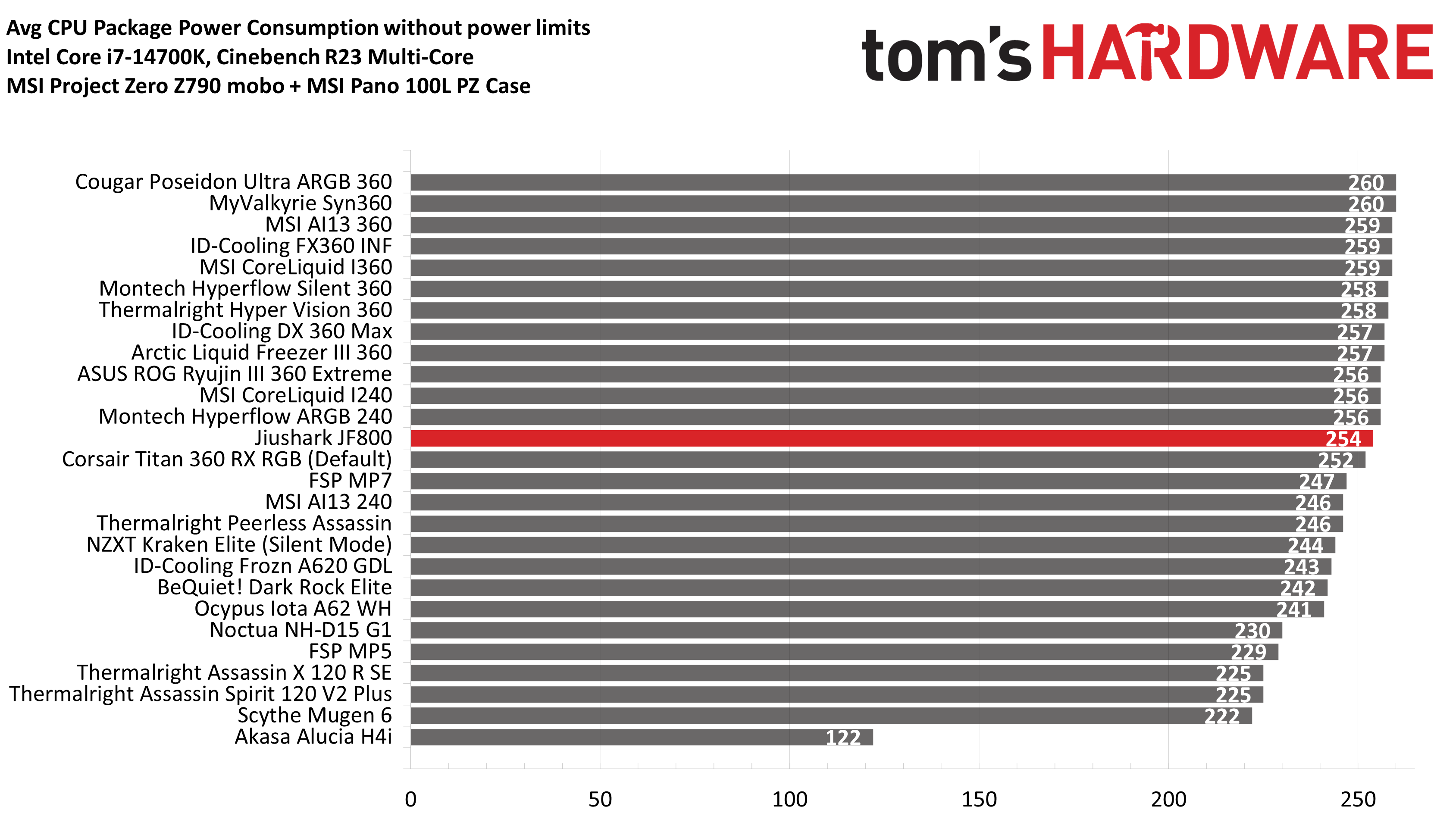

Again, CPU coolers can scale (and perform) differently depending on the CPU they are paired with due to differences in manufacturing processes and the location of hotspots on the CPU. We’ve also tested Jiushark’s JF800 Diamond with the harder-to-cool Raptor Lake Core i7-14700K to see if its amazing performance would hold up with other CPUs.

With 254W cooled during the course of testing, Jiushark’s JF800 Diamond is again impressive! This is better cooling performance than I’ve seen from any other air cooler and competitive with many AIOs!

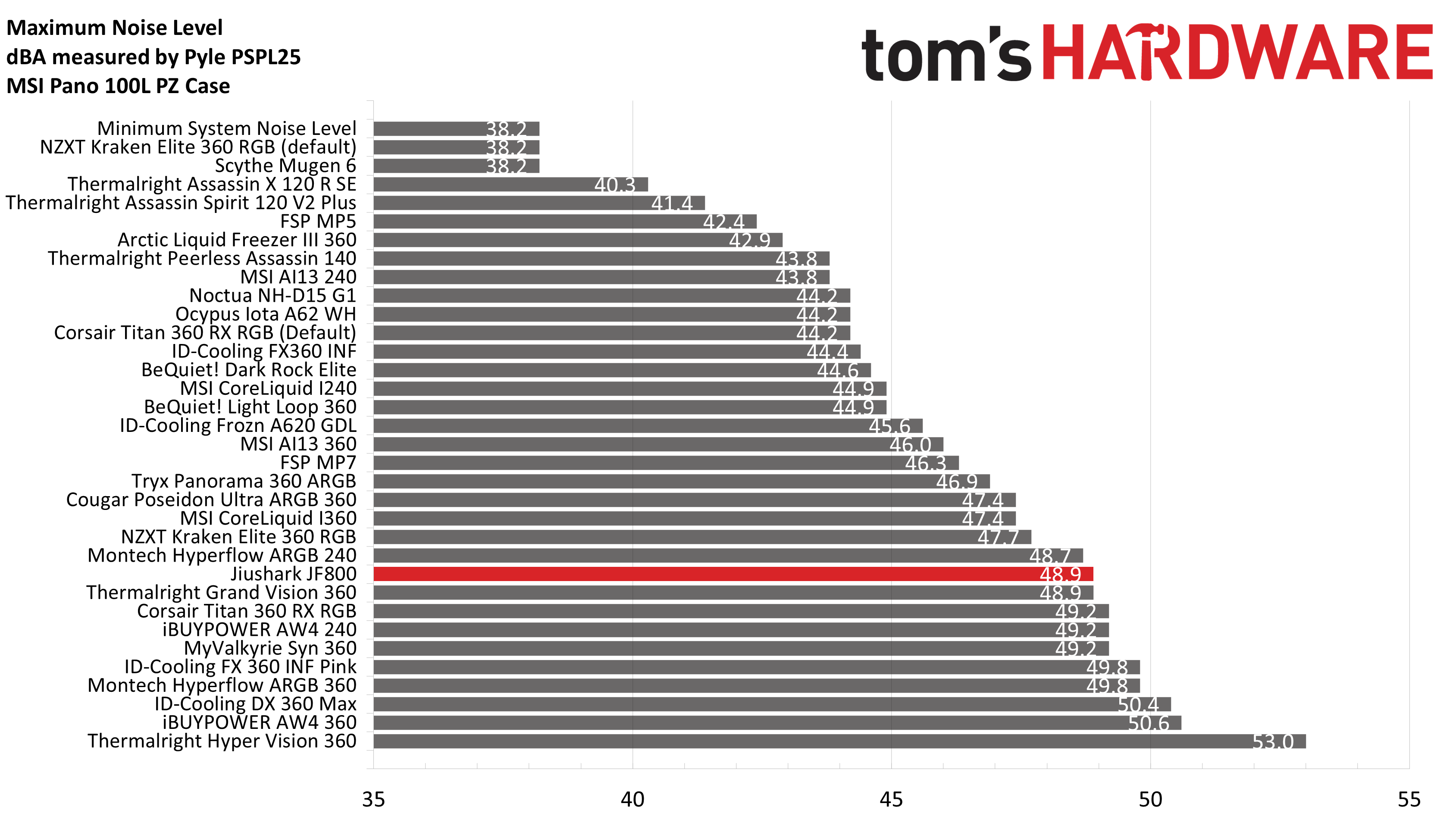

To achieve these levels of thermal performance, the fans of this cooler reach up to 48.9 dBA. This is much louder than it needs to be. I don’t understand why Jiushark allowed these fans to run so loudly, especially since – as the next section will show – they perform well even when limited to low noise levels.

CPU-only thermal results with noise normalized to 38.9 dBA

Finding the right balance between fan noise levels and cooling performance is important. While running fans at full speed can improve cooling capacity to some extent, the benefits are limited and many users prefer a quieter system.

With this noise-normalized test, I’ve set noise levels to 38.9 dBA using the i7-14700K system. This level of noise is low, but slightly audible to most people. Jiushark’s JF800 continued to offer the best performance we’ve seen from any air cooler here, with 236W cooled during this test.
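As a rough illustration of that trade-off, the figures quoted in this review can be put side by side. The sketch below is a back-of-the-envelope calculation, not part of the test methodology. It assumes that the 48.9 dBA peak and the 254W result describe the same full-speed run, and that a difference in A-weighted levels can be treated like a plain decibel difference, which is only approximately true. The function names are illustrative.

```python
def sound_power_ratio(louder_dba: float, quieter_dba: float) -> float:
    """Approximate sound power ratio implied by a difference in decibel levels."""
    return 10 ** ((louder_dba - quieter_dba) / 10)


def capacity_retained(normalized_watts: float, full_speed_watts: float) -> float:
    """Fraction of full-speed cooling capacity kept at the lower noise level."""
    return normalized_watts / full_speed_watts


# Figures reported in this review (assumed here to pair up as described above).
FULL_SPEED_DBA, FULL_SPEED_WATTS = 48.9, 254
NORMALIZED_DBA, NORMALIZED_WATTS = 38.9, 236

power_ratio = sound_power_ratio(FULL_SPEED_DBA, NORMALIZED_DBA)
retained = capacity_retained(NORMALIZED_WATTS, FULL_SPEED_WATTS)

print(f"Sound power ratio, full speed vs. normalized: about {power_ratio:.1f}x")
print(f"Cooling capacity retained at 38.9 dBA: {retained:.1%}")
```

Under those assumptions, a 10 dB reduction corresponds to roughly one tenth of the sound power, while the cooler keeps about 93% of the wattage it handled at full speed.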

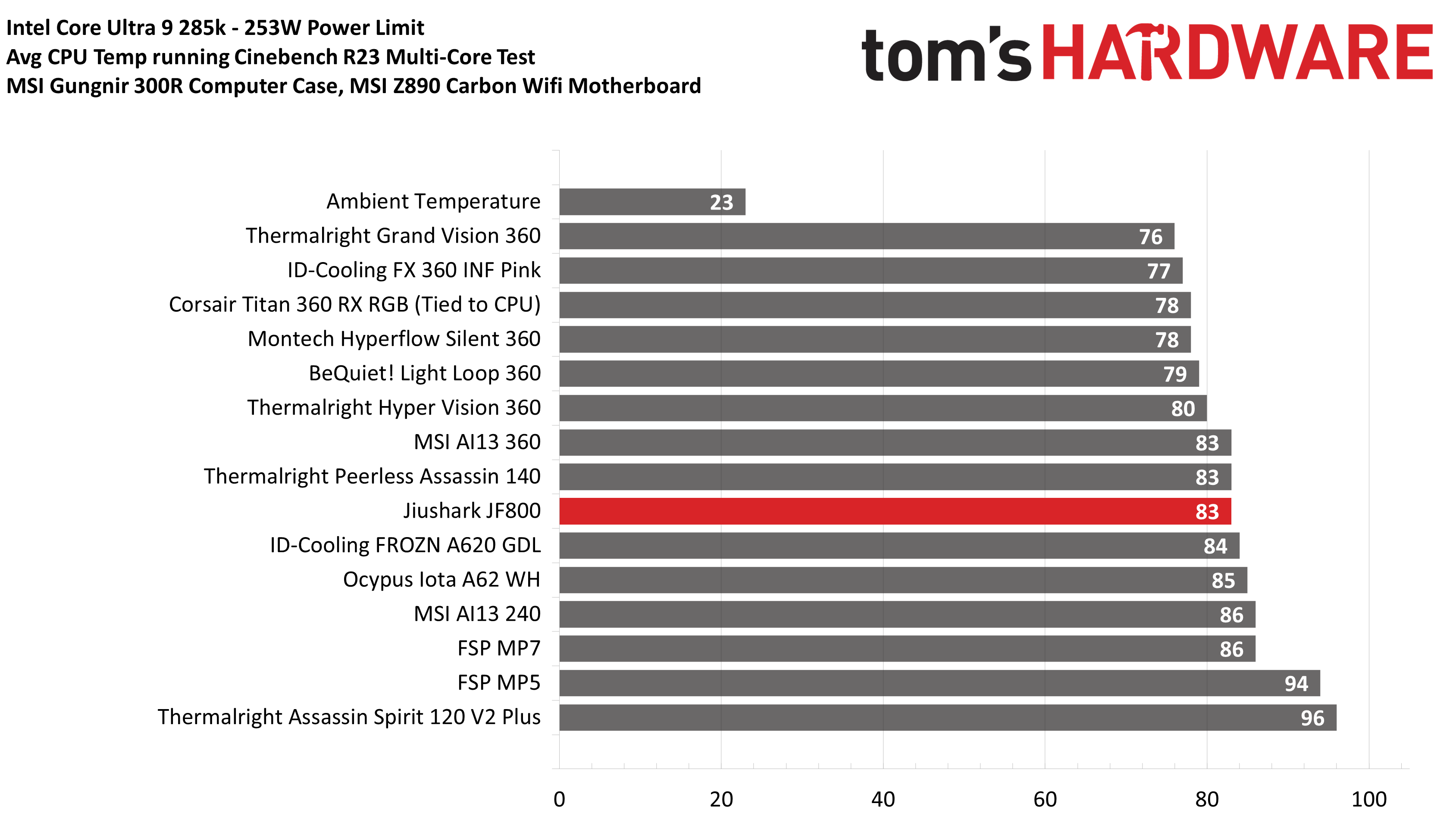

253W results

My recent reviews have focused more on tests with both the CPU and GPU being stressed, but many of y’all have indicated that you would like to see more CPU-only tests. In response, I’ve started testing Intel’s “Arrow Lake” Core Ultra 9 285K with a 253W limit.

Testing with the default power limits of 253W shows very good performance, on par with Thermalright’s Peerless Assassin 140!

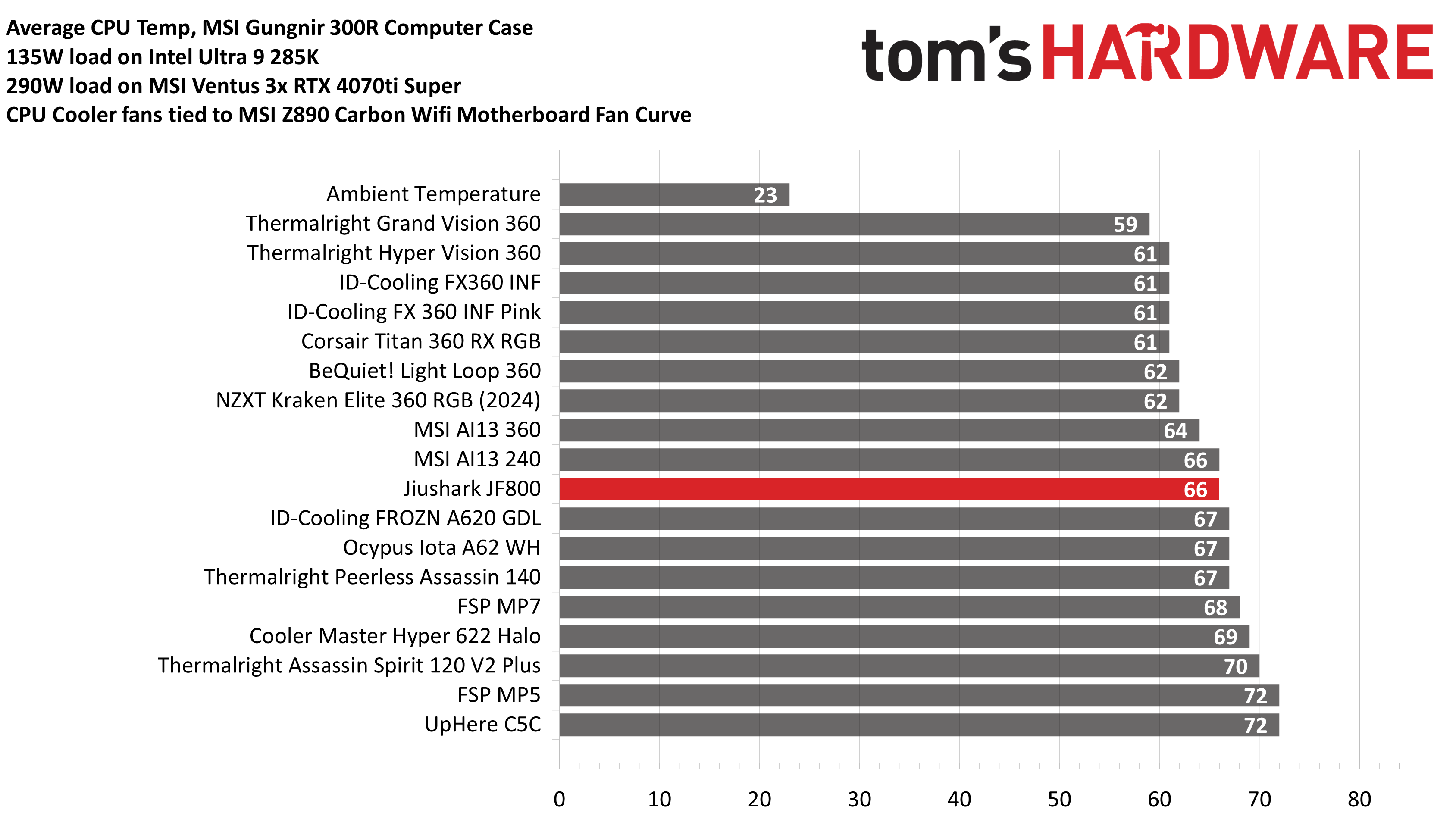

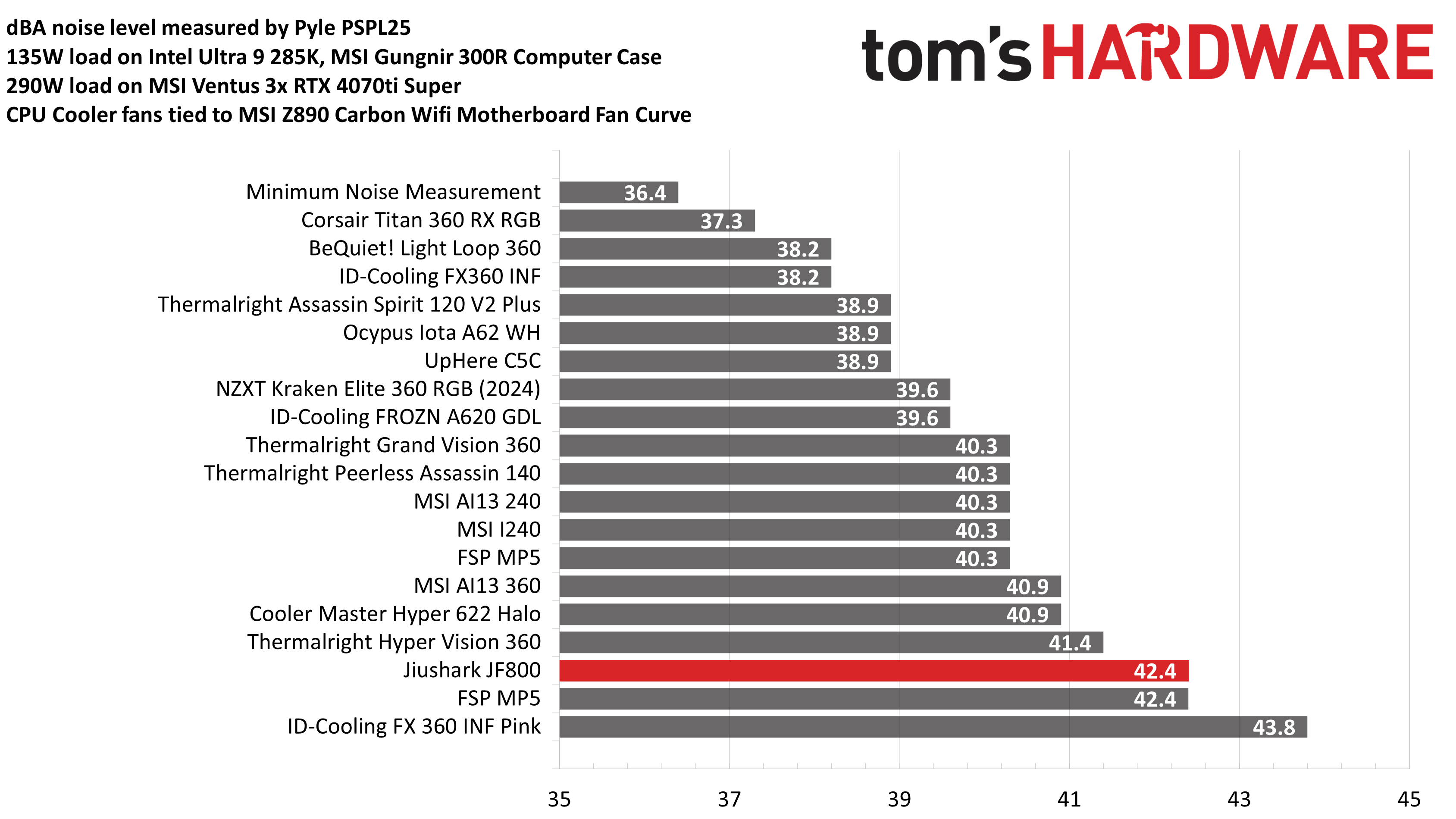

135W CPU + 290W GPU results

Testing a CPU cooler in isolation is great for synthetic benchmarks, but doesn’t tell the whole story of how it will perform. I’ve incorporated two tests with a power limit imposed on the CPU, while also running a full load on MSI’s GeForce RTX 4070 Ti Super 16G VENTUS 3X.

The CPU power limit of 135W was chosen based on the highest CPU power consumption I observed in gaming with Intel’s Core Ultra 9 285K, which was in Rise of the Tomb Raider.

In this test, the JF800 Diamond continues to impress. With a recorded temperature of 66 degrees Celsius, it again takes the spot for the best air cooler, outperforming rival options from ID-Cooling, Thermalright, and Ocypus! Noise levels weren’t bad, but they were near the loudest of the results we’ve recorded thus far.

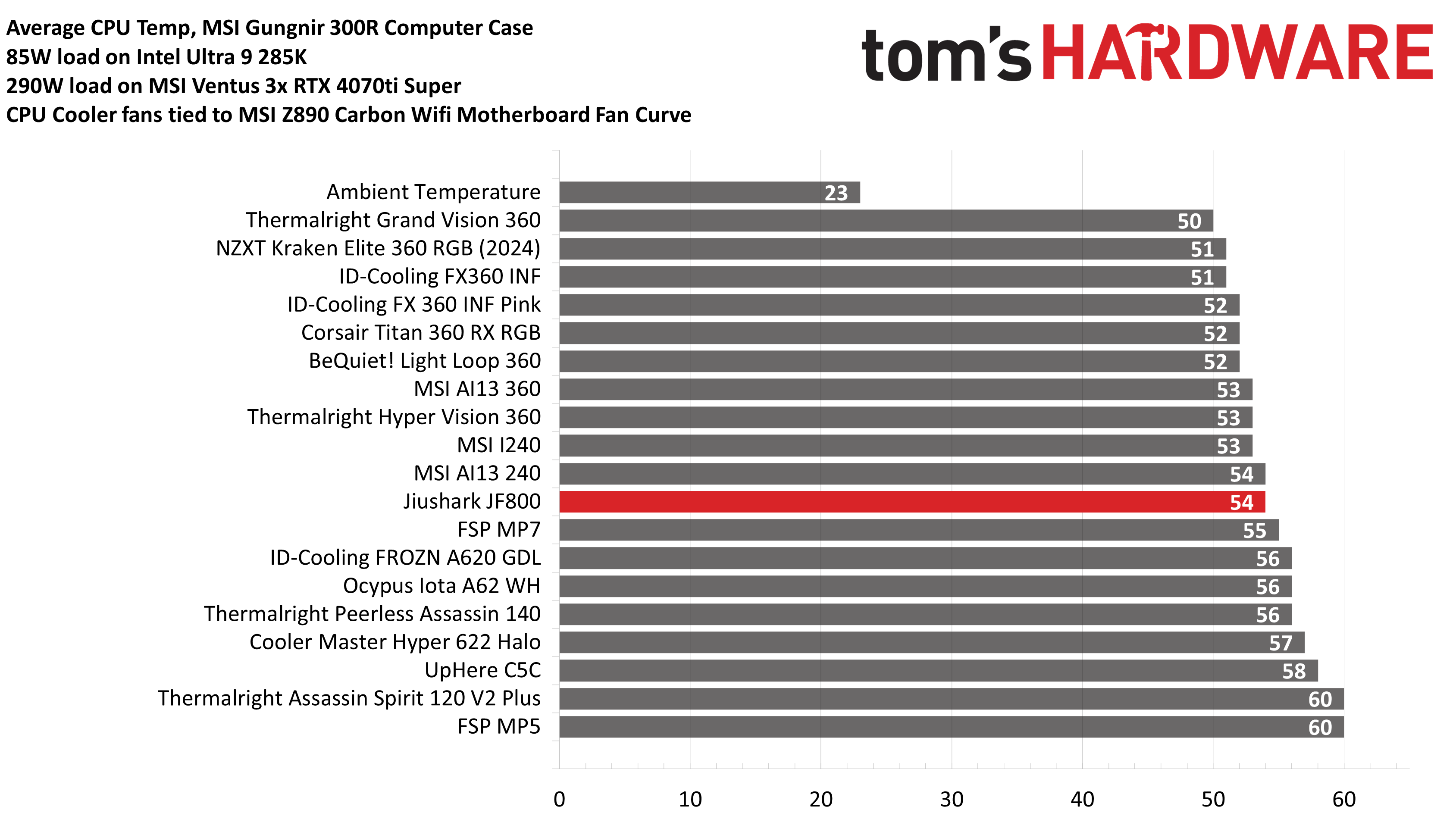

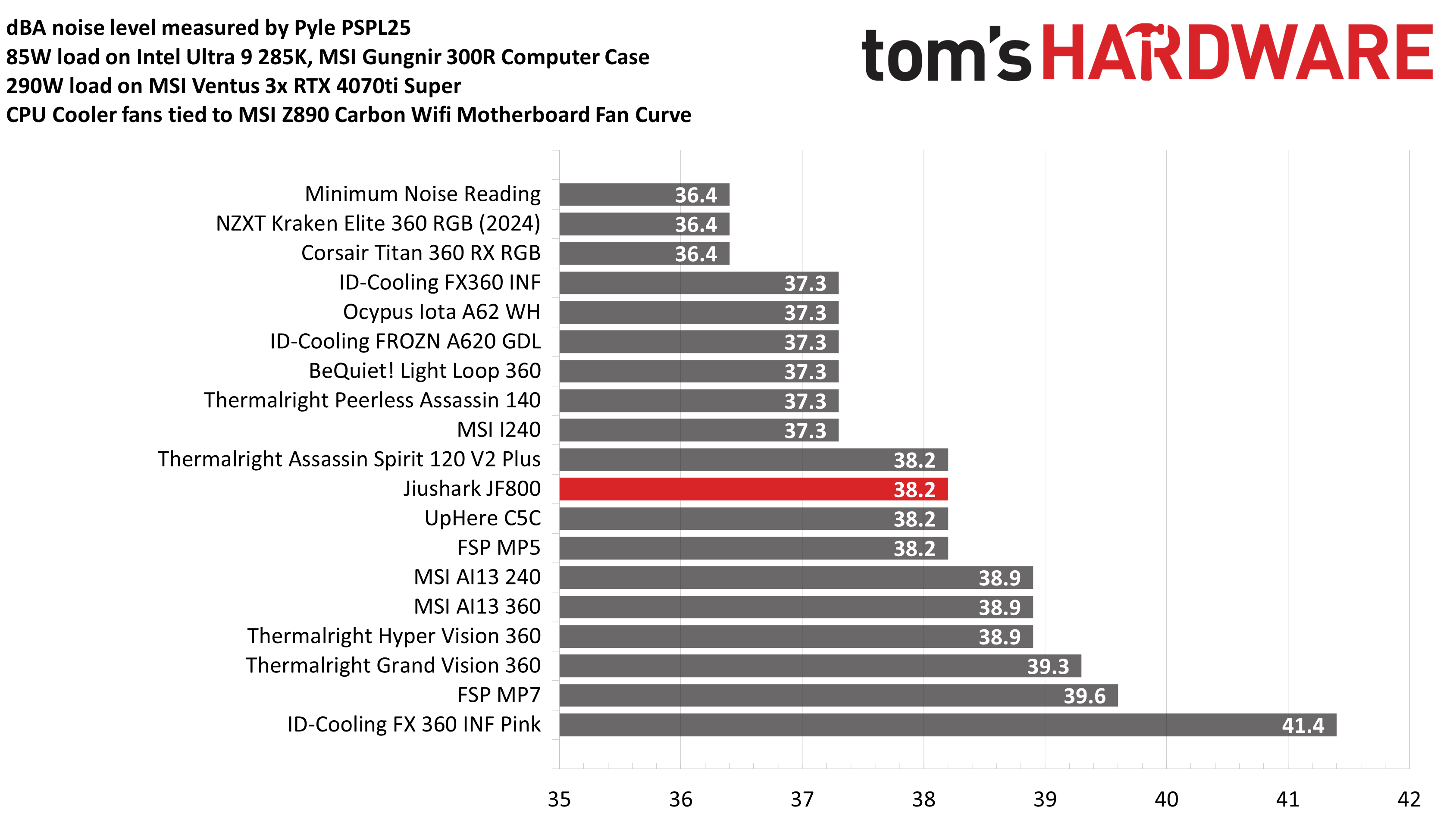

85W CPU + 290W GPU results

Our second round of CPU + GPU testing is also performed with Arrow Lake. The power limit of 85W was chosen based on typical power consumption in gaming scenarios using the Core Ultra 9 285K CPU. This should be fairly easy for most coolers. The main point of this test is to see how quietly (or loudly!) a cooler runs in low-intensity scenarios.

With a CPU temperature of 54 degrees C, Jiushark retains its lead over other air coolers. Its noise level was also relatively low in this test, averaging 38.2 dBA.

Conclusion

Jiushark’s JF800 Diamond is the best air cooler on the market that you can’t (easily) purchase. It outperforms its competitors in every thermal benchmark, even when the fans are noise-normalized. My only complaint is the lack of availability, at least in the U.S. If you want this amazing CPU cooler, you’ll need to import it from AliExpress or another Asian distributor, at least until there is better availability stateside.

Albert Thomas is a contributor for Tom’s Hardware, primarily covering CPU cooling reviews.

bit_user

Thanks for the review, Albert!

I'm always intrigued by how much variation there is in the performance between coolers that even share the same overall design. I know there are lots of variables to optimize and manufacturing precision can also play a big role, but I do wonder just what are certain coolers doing differently or better that's really making the difference.

Also, in the interest of transparency, I wish it would be clearly stated whether this was a provided review sample or purchased on the open market. The distinction is important, because there's a greater likelihood that provided review samples are cherry-picked top-binned units and possibly even non-representative of what most people would experience.

obvious oscar

bit_user said: "Thanks for the review, Albert!

"I'm always intrigued by how much variation there is in the performance between coolers that even share the same overall design. I know there are lots of variables to optimize and manufacturing precision can also play a big role, but I do wonder just what are certain coolers doing differently or better that's really making the difference.

"Also, in the interest of transparency, I wish it would be clearly stated whether this was a provided review sample or purchased on the open market. The distinction is important, because there's a greater likelihood that provided review samples are cherry-picked top-binned units and possibly even non-representative of what most people would experience."

It's always good practice to let users know where, how, and for how much the items were sourced. Realistically, I don't think there's much binning involved with air coolers. If this was a CPU or GPU, yeah, binning could be massive, but a cooler? I'd be more concerned about a review being too glowing because, yay, free stuff.

bit_user

obvious oscar said: "Realistically, I don't think there's much binning involved with air coolers. If this was a CPU or GPU, yeah, binning could be massive, but a cooler?"

My concern is to do with all of the variables in manufacturing. It seems to me that each point of contact is a potential source of performance variation. So, that primarily covers the interface between the base and heat pipes and the interface between the heatpipes and fin stacks.

Secondly, the heat pipes and their fill could be another source of significant variation. I don't know exactly what goes into making the capillaries inside the heatpipes and exactly how reproducible it is, but then you also need to fill them with exactly the right amount of fluid and gas, before sealing them.

If manufacturing isn't a significant factor in performance, then I'm at a loss to explain the significant variation in performance between heatsinks from different manufacturers that look quite similar and appear to have similar parameters, to the extent that we can measure.

It'd be great if a system builder who handles dozens, hundreds, or more of these things would be able to test many units of a few different brands and give us some visibility into the distribution within each heatsink model. Otherwise, I feel like we're flying blind.

thestryker

Until the tariff situation changes, it seems unlikely these will be available to NA. When I checked AliExpress, there weren't any Jiushark products coming up, and the hardware sellers I have bookmarked are showing +45% cost on anything coming to the US.

Albert.Thomas

bit_user said: "Also, in the interest of transparency, I wish it would be clearly stated whether this was a provided review sample or purchased on the open market. The distinction is important, because there's a greater likelihood that provided review samples are cherry-picked top-binned units and possibly even non-representative of what most people would experience."

While I can understand your perspective, the truth is that sampling golden bins in the cooling industry really does not happen. Products sampled before launch are actually more likely to have defects because they'll be from the first production run, and many companies actually just buy their own products from Amazon and have them shipped to me when they want me to test something. While I concede that it is theoretically possible, in practice it doesn't really happen.

Just as an example of this: Right now, the management of Tom's Hardware is kinda (understandably) pissed at me because I had a moment of uncensored, unfiltered anger towards a certain manufacturer after losing all faith in them because they keep wasting my time (and therefore, money) with defective products. This manufacturer has been sending me "samples" over the last year, and at this moment the defect rate of those sampled products is 80%.

BFG-9000

That is completely ridiculous considering how hand-selecting a well-performing sample for reviewers would be so easy, produce good numbers and more sales. It's a low marketing expense with high potential return, and all they would need to do is send out all of the better testing units to Amazon first.

It is well known that most of the car manufacturers used to send hand-tuned "ringers" to all of the car magazines. Perhaps they still do, given how many EV reviews seem to gush over how they easily get more miles out of a charge than it is rated for. That's probably why reviews from places that buy their own samples such as Project Farm or even Consumer Reports have always been more valued.

While everybody loves a bargain and some of the very cheap coolers test great in reviews, it's the lower manufacturing variability that keeps premium brands such as Noctua in business. For a higher price, at least you know what you are going to get.

bit_user

BFG-9000 said: "It is well known that most of the car manufacturers used to send hand-tuned "ringers" to all of the car magazines. Perhaps they still do, given how many EV reviews seem to gush over how they easily get more miles out of a charge than it is rated for."

I agree with the rest of your post, but I'm not sure about this part. It's well-known that the capacity of lithium batteries decreases with use and it's plausible that manufacturers specify the typical range, during the life of the batteries. This would yield a greater range at the beginning, but a lower range near the end. I'm not saying you're wrong, but that is one alternative explanation. Now, this is pretty far outside of my expertise and getting off-topic, so I'll just leave it at that.

BFG-9000 said: "While everybody loves a bargain and some of the very cheap coolers test great in reviews, it's the lower manufacturing variability that keeps premium brands such as Noctua in business. For a higher price, at least you know what you are going to get."

Again, this is very plausible to me, however what I'd love to see is someone able to back it up with data (e.g. Puget Systems). I guess anyone with the capability to test cooler variability either is too cheap/lazy to do it or has incentives not to share what they know. The funny thing is that Puget isn't too shy about sharing failure data on things like DRAM, SSDs, and even CPUs.

Some of those system builders do a burn-in test, before systems ship out. By measuring CPU package power and temperature, they could account for how much variability exists both among the CPUs and coolers (although it would require additional work to know how much of the cooler variation is due to installation vs. the coolers themselves).
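To make that idea concrete, here is a minimal sketch of how burn-in logs could be summarized per cooler model. Everything in it is an assumption for illustration: the log layout, the `Cooler A` and `Cooler B` names, the sample readings, and the choice of thermal resistance (degrees Celsius above ambient per watt of package power) as the metric. It also lumps CPU-to-CPU and installation differences into the same number, so it would show the spread without explaining it.

```python
from collections import defaultdict
from statistics import mean, stdev


def thermal_resistance(cpu_temp_c: float, ambient_c: float, package_watts: float) -> float:
    """Degrees Celsius above ambient per watt of CPU package power."""
    return (cpu_temp_c - ambient_c) / package_watts


def summarize(records):
    """Return {model: (mean, sample standard deviation)} of thermal resistance.

    Each record is (model, cpu_temp_c, ambient_c, package_watts).
    Every model needs at least two units for a standard deviation.
    """
    by_model = defaultdict(list)
    for model, cpu_temp_c, ambient_c, package_watts in records:
        by_model[model].append(thermal_resistance(cpu_temp_c, ambient_c, package_watts))
    return {model: (mean(vals), stdev(vals)) for model, vals in by_model.items()}


# Hypothetical burn-in readings, invented for this example only.
SAMPLE_LOGS = [
    ("Cooler A", 82.0, 23.0, 200.0),
    ("Cooler A", 85.0, 23.0, 200.0),
    ("Cooler A", 83.0, 23.0, 200.0),
    ("Cooler B", 88.0, 23.0, 200.0),
    ("Cooler B", 96.0, 23.0, 200.0),
    ("Cooler B", 91.0, 23.0, 200.0),
]

summary = summarize(SAMPLE_LOGS)
for model, (avg, spread) in sorted(summary.items()):
    print(f"{model}: mean {avg:.3f} C/W, std dev {spread:.3f} C/W")
```

A wider standard deviation for one model would hint at more unit-to-unit variation, though separating the cooler's contribution from the CPU's would need the same CPU tested across several units.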

bit_user

Albert.Thomas said: "While I can understand your perspective, the truth is that sampling golden bins in the cooling industry really does not happen. Products sampled before launch are actually more likely to have defects because they'll be from the first production run, and many companies actually just buy their own products from Amazon and have them shipped to me when they want me to test something."

Thanks for sharing this detail. I still would appreciate more transparency (i.e. which review samples are shipped directly to you vs. purchased via Amazon), but I understand you're limited in how much you can speak about.

I'm not saying there definitely is cherry-picking going on, but what I'd like is to have some evidence of when it probably isn't. If I know you received a cooler, with the order fulfilled by Amazon (or another online store), then it would give me more confidence in this regard.

Again, I'm glad to have your reviews. I hope you can reach an agreement with the editors about the level of detail and the best way to share information about products exhibiting quality issues.

Energy96

AIO liquid coolers are so easy to install and relatively inexpensive now that it’s hard to justify air cooling anymore in any DIY PC.

thestryker

bit_user said: "I'm not saying there definitely is cherry-picking going on, but what I'd like is to have some evidence of when it probably isn't."

It's extremely hard to cherry pick the heatsink itself which is the most important part of an air cooler. While there certainly is more variance for fans it'd be pretty arduous to cherry pick those unless the company was using multisourced or changing fans and not disclosing. This is probably why nobody is particularly concerned with it when it comes to reviews.

The QC aspect is never going to show up in a review unless the company is regularly shipping bad products. If a company has a history of inconsistency I'd say that's something to certainly note in a review.