Tachyum builds the final Prodigy FPGA prototype, delays Prodigy processor to 2025

Delayed, again

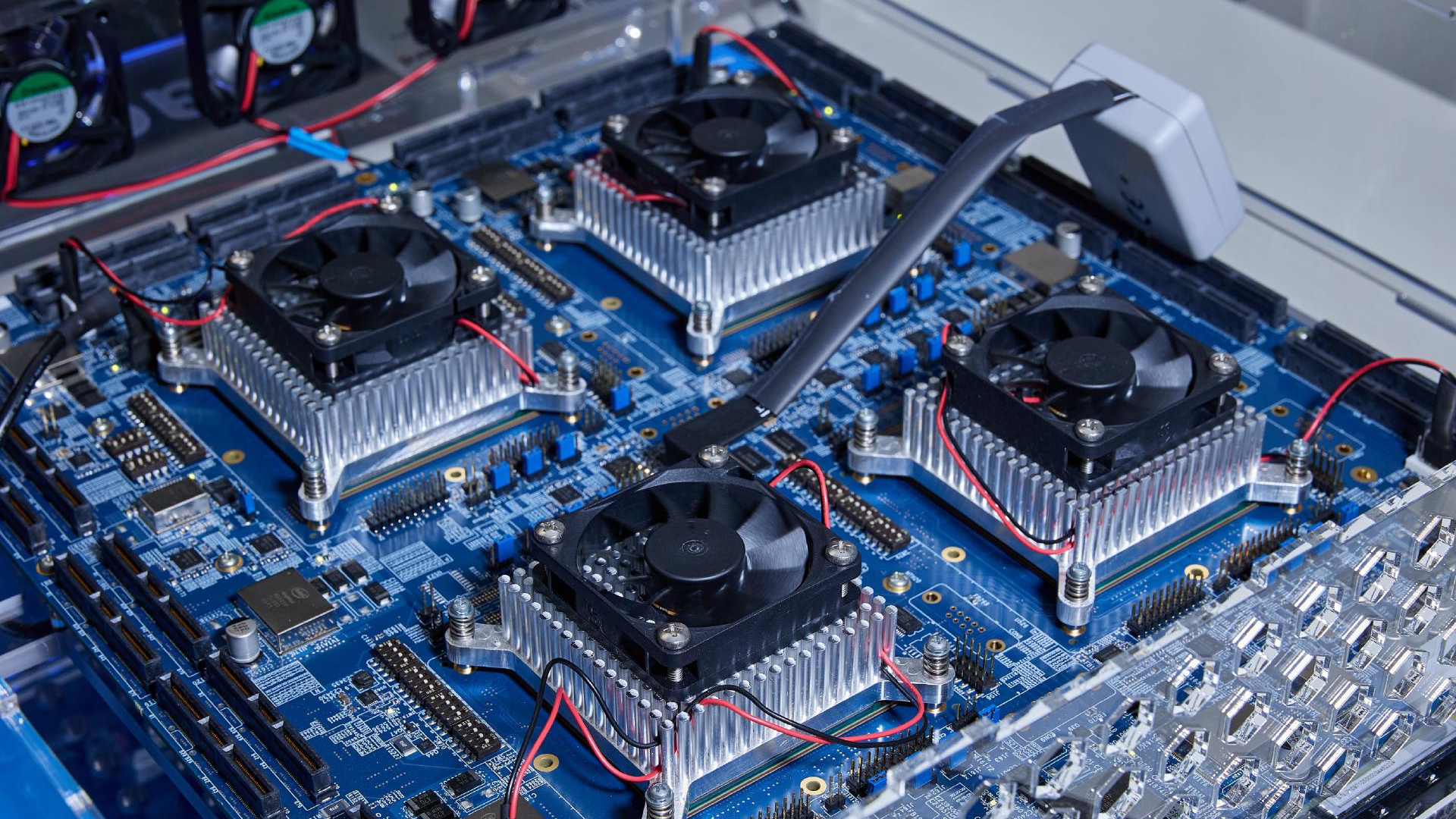

Tachyum said this week it had completed the final build of its Prodigy FPGA emulation system, which is an important milestone for any design. In addition, the company said that it would delay the start of production for its universal 192-core Prodigy processors from 2024 to 2025, but stressed that it still expects general availability of servers featuring its processors next year.

"Reaching this point of our development journey prior to tape-out and volume production of Prodigy processors next year is extremely gratifying," said Dr. Radoslav Danilak, founder and CEO of Tachyum.

This final hardware prototype is crucial for achieving over '10 quadrillion cycles in reliability tests,' a milestone Tachyum aims to hit before the Prodigy chips are taped out. These units will help ensure that the chips meet extreme reliability demands before entering full production.

Key updates in the final FPGA-based build include support for an increased core count beyond 128, following a previous upgrade to 192 cores last year. Additional enhancements have been made to support larger-capacity DIMMs, improve debugging processes, simplify communication through modified BMC-UEFI hardware, and replace board-to-board connectors for a better experience.

"Our commitment to delivering the world's smallest, fastest and greenest general-purpose chip has remained unwavering," Danilak added. "Ensuring this happens Day One of launch has been a priority for us and we are excited to be on the precipice of this industry-altering release."

The universal Prodigy processor, which promises to perform equally well with general-purpose, graphics, and AI/ML workloads, was initially set to launch in 2020, following a planned tape-out in 2019. However, its release has faced multiple delays, with the schedule pushed back from 2021 to 2022, then to 2023, and later to 2024. Earlier this year, Tachyum announced that it would begin mass production of the Prodigy processors in the second half of 2024, though this vague timeline could extend to December. Now, the company has apparently updated its plans again, indicating that mass production will instead start in 2025, which means that it will likely miss its target to start sampling reference servers featuring the Prodigy processor in Q1 2025. However, it is still unclear from Tachyum's recent announcements whether the chip is on track to tape out in 2024.

Tachyum asserts that its processor can achieve up to 4.5 times the performance of the top x86 processors for cloud tasks, three times the performance of the leading GPUs for high-performance computing, and six times the performance for AI applications. However, despite these ambitious claims, no prototypes have been publicly demonstrated to confirm that the processor's architecture is both functional and capable of delivering these results.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


das_stig

Love the retro heatsinks from the 1980s, wonder what the heat and power requirements are?

Findecanor

There is currently no shortage of semiconductor design firms that claim to have designed wide CPU cores, often with proprietary solutions for accelerating AI.

Most of these are running RISC-V, and can thus take advantage of a shared ecosystem.

Yet, the adoption rate of these is worryingly low. Some firms have been sitting on cores for two years that have yet to be taped out because there hasn't been a client that has found it worthwhile to fund their manufacture.

What sets Tachyum Prodigy apart from these?

I have yet to hear about it offering anything that RISC-V and ARM don't, and thus it should have an even worse uphill battle.

Are there benchmarks that prove its superiority?

How can it benefit customers' applications in ways that others can't?

slightnitpick

> Tachyum asserts that its processor can achieve up to 4.5 times the performance of the top x86 processors for cloud tasks, three times the performance of the leading GPUs for high-performance computing, and six times the performance for AI applications.

It's a huge claim to say that a general-purpose machine is more capable at dedicated tasks than a dedicated machine. How are they measuring this, and what are they measuring against? What are "cloud tasks", and are x86 processors the baseline for cloud tasks? What is GPU use in high-performance computing? And what kind of AI applications (training or inference)? And how is having this all on one processor better than having it on discrete processors? Just the speed of the data interconnect?

bit_user

> slightnitpick said: It's a huge claim to say that a general-purpose machine is more capable at dedicated tasks than a dedicated machine. How are they measuring this, and what are they measuring against? What are "cloud tasks", and are x86 processors the baseline for cloud tasks? What is GPU use in high-performance computing? And what kind of AI applications (training or inference)? And how is having this all on one processor better than having it on discrete processors? Just the speed of the data interconnect?

I've seen this story play out again and again. Some upstart thinks they can do a better job than the mainstream guys. However, they're woefully optimistic about how long it will take. By the time they finally get a chip taped out and debugged, the broader computing market has at least caught up to them, and usually even passed them by.

These guys started out with a more ambitious microarchitecture, but watered it down to better adapt it to handle general-purpose "cloud" compute loads. IIRC, what they ended up with doesn't strike me as all that different from anything else on the market, except for their integrated tensor product units. Unfortunately, it still has a proprietary ISA, which was a really bad move. I doubt it has much benefit over RISC-V, if any, yet it requires custom tooling and lots of software porting to get an ecosystem up and running on it.

If they're lucky, they'll get enough government contracts to string them along, but I doubt they'll ever get a product to market while it's truly compelling.

gg83

> bit_user said: I've seen this story play out again and again. Some upstart thinks they can do a better job than the mainstream guys. However, they're woefully optimistic about how long it will take. By the time they finally get a chip taped out and debugged, the broader computing market has at least caught up to them, and usually even passed them by.
>
> These guys started out with a more ambitious microarchitecture, but watered it down to better adapt it to handle general-purpose "cloud" compute loads. IIRC, what they ended up with doesn't strike me as all that different from anything else on the market, except for their integrated tensor product units. Unfortunately, it still has a proprietary ISA, which was a really bad move. I doubt it has much benefit over RISC-V, if any, yet it requires custom tooling and lots of software porting to get an ecosystem up and running on it.
>
> If they're lucky, they'll get enough government contracts to string them along, but I doubt they'll ever get a product to market while it's truly compelling.

The only contender I expect to win is Tenstorrent. They have some really smart people and smart money behind them. What do you think?

bit_user

> gg83 said: When will the investors just back out?

I think a good example to watch is the Japanese supercomputing industry. They've long maintained their indigenous capability to build and deploy supercomputers probably as much for geopolitical reasons as economic ones. Therefore, the mere idea of a European HPC/cloud CPU makes sense. However, it doesn't exist in a vacuum. There's also this:

https://www.european-processor-initiative.eu/general-purpose-processor/

Tachyum seems further along, but that might not always be the case. However, even if they do get passed by the EPI, Slovakia doesn't necessarily share the same geopolitical orientation as backers of the EPI and therefore might choose to maintain Tachyum on its own or pursue export markets that don't interest the EPI.

In summary, if we looked at them merely as a business, I'd say they're probably headed off a cliff. However, supercomputing initiatives shouldn't be viewed absent their political context.