Nvidia GeForce RTX 4090 and 5090 prototypes exposed — development and testing cards had four 16-pin power connectors

Early test hardware often comes with extra debugging features.

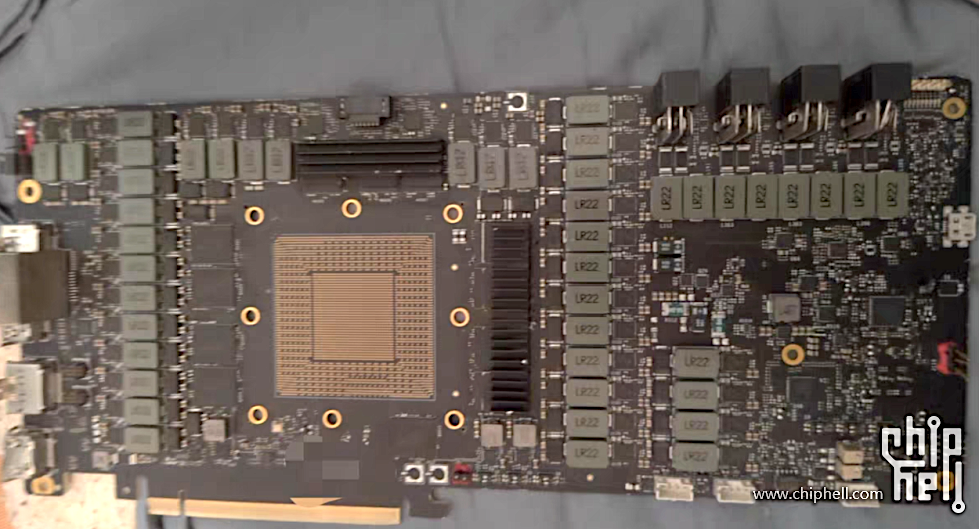

A leaker on the Chiphell forums (via HXL) published images of Nvidia-designed PCBs (printed circuit boards) that the company used to bring up its Ada Lovelace and new Blackwell RTX 50-series GPUs during development. The boards feature debugging interfaces alongside massively overbuilt power delivery circuitry, which lets designers better understand how particular GPUs behave under different operating conditions.

> 🥲 nvidia engineering samples
>
> AD102: 4x 16pin
>
> GB202: 4x 16pin
>
> production card
>
> 4090: 1x 16pin
>
> 5090: 1x 16pin
>
> https://t.co/c4RAWWfO4u pic.twitter.com/ReybcTsdeU
>
> February 11, 2025

As an example, the development/bring-up boards for Nvidia's GeForce RTX 4090 (AD102) and RTX 5090 (GB202) each feature four 16-pin power connectors (12VHPWR and 12V-2x6, respectively), according to blogger Panzerlized, whereas the production cards use just one. Development cards for the previous-generation Ampere GA102 (GeForce RTX 3090) and GA104 GPUs featured three or four 8-pin power connectors. So this isn't unique to the latest GPUs: older-generation products also featured an 'excessive' number of power connectors.

The higher number of power connectors on development/bring-up boards is neither surprising nor indicative of any underlying problem. Engineers tend to power up such graphics cards in stages, bringing up core voltages, uncore voltages, memory voltages, I/O rails, and so on. This is done to verify that all the power rails initialize correctly and that no short circuit or out-of-spec behavior occurs. Having more connectors than the final design needs presumably gives engineers extra headroom and flexibility while they do this.
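The staged bring-up described above can be sketched as a simple check that walks the rails in order and stops at the first one that looks shorted or out of spec. This is a minimal illustration under stated assumptions: the rail names, nominal voltages, 5% tolerance, and the `bring_up` function are all hypothetical, and real bring-up relies on lab instruments and sequencing hardware rather than a script like this.

```python
from dataclasses import dataclass


@dataclass
class Rail:
    """One supply rail on a hypothetical bring-up board."""
    name: str
    nominal_v: float
    tolerance: float = 0.05  # allowed deviation as a fraction of nominal (5%)


# Hypothetical bring-up order and nominal voltages, chosen for illustration only;
# they are not Nvidia specifications.
BRING_UP_ORDER = [
    Rail("io", 1.8),
    Rail("memory", 1.35),
    Rail("uncore", 0.9),
    Rail("core", 0.9),
]

# A rail that reads (almost) 0 V after being enabled is treated as a possible short.
SHORT_CIRCUIT_V = 0.05


def bring_up(readings: dict[str, float]) -> list[str]:
    """Check rails one stage at a time, halting at the first fault.

    `readings` stands in for voltages measured at the board's test points;
    a missing rail is treated as reading 0 V. Returns the rails that came up in spec.
    """
    passed: list[str] = []
    for rail in BRING_UP_ORDER:
        measured = readings.get(rail.name, 0.0)
        if measured < SHORT_CIRCUIT_V:
            print(f"{rail.name}: possible short ({measured:.2f} V), halting")
            break
        deviation = abs(measured - rail.nominal_v) / rail.nominal_v
        if deviation > rail.tolerance:
            print(f"{rail.name}: out of spec ({measured:.2f} V), halting")
            break
        passed.append(rail.name)
    return passed


# The uncore rail reads 0 V, so bring-up stops before the core rail is checked.
print(bring_up({"io": 1.79, "memory": 1.36, "uncore": 0.0, "core": 0.9}))
# prints "uncore: possible short (0.00 V), halting", then ['io', 'memory']
```

Stopping at the first fault mirrors the point of staged power-up: a problem on an early rail is caught before later, more sensitive rails are energized.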

Such cards also typically feature debugging interfaces, test points, and instrumentation headers for in-depth measurement and monitoring, and all of these are present on the pictured boards. There are also plenty of jumpers on the PCBs for reconfiguring various board settings.

As an added bonus, these boards can deliver far more power to the GPUs than they need. This helps Nvidia and its partners determine the exact capabilities and limits of the processors, as well as test their performance under various conditions. For example, a prototype GeForce RTX 4090 had as many as 45 power phases along with four 12VHPWR power connectors. At 600 W per connector, that's enough to deliver up to 2400 watts to the card, far more than the end product requires. (And we suspect Nvidia never actually tried to feed 2400W into a prototype.)
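The 2400 W figure follows directly from the connector count, assuming the 600 W rating of a single 16-pin 12VHPWR/12V-2x6 connector. This short sketch (the function names are illustrative, and slot power is deliberately left out) compares the prototype's four connectors with the single connector on production cards and converts the total into 12 V input current.

```python
# Back-of-the-envelope power budget for the connector counts in the article.
# Assumption: each 16-pin 12VHPWR / 12V-2x6 connector is rated for 600 W.
# Power drawn through the PCIe x16 slot (up to 75 W) is ignored here.
CONNECTOR_RATING_W = 600
RAIL_VOLTAGE_V = 12.0


def connector_budget_w(connectors: int, rating_w: int = CONNECTOR_RATING_W) -> int:
    """Maximum rated power deliverable through `connectors` 16-pin plugs."""
    return connectors * rating_w


def input_current_a(power_w: float, voltage_v: float = RAIL_VOLTAGE_V) -> float:
    """Total current the 12 V input would carry at `power_w` (I = P / V)."""
    return power_w / voltage_v


prototype_w = connector_budget_w(4)   # bring-up board: four 16-pin connectors
production_w = connector_budget_w(1)  # retail RTX 4090/5090: one 16-pin connector
print(prototype_w, production_w)      # 2400 600
print(input_current_a(prototype_w))   # 200.0
```

In other words, the bring-up board's connectors alone are rated for four times the power of a production card's single connector, or roughly 200 A at 12 V.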

Some extreme overclockers would probably be excited to acquire such a graphics card. The overbuilt power delivery could be useful for liquid nitrogen overclocking, for example. However, these development/bring-up boards usually come with older BIOS versions that only work with select GPUs, and due to their early nature there's a good chance they won't fully work or behave as expected. Getting drivers that support such cards could also be difficult.

Regardless, it's interesting to see the development boards used by Nvidia and its graphics card partners. Designing, prototyping, testing, debugging, and finalizing modern computer components can be a very complex process, and even with all the work that goes into the launch of a new GPU, issues can crop up once cards are in the hands of thousands of gamers with a wide variety of hardware configurations.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

ezst036

> Admin said: Some extreme overclockers would probably be excited to acquire such a graphics card.

This would be the only card with that connector on it that I would feel comfortable purchasing.

Two should be the standard, though. But really, why not just get rid of this stupid thing altogether?

I just want one so nothing melts. That's all.

iocedmyself Traditional 8-pin connectors are rated for 150w (12v @ 12.5a) BUT can actually safely deliver a sustained load of 225w per connector. That's a 50% buffer.

Compare that to the dumb thing Nvidia is determined to force on the market: it's rated for 600w, with a peak maximum of 687w. That's only a 14.5% buffer.

JayzTwoCents has already made multiple videos showing that his stock-configuration AIB 5090 is pulling 630w. der8auer got his hands on one of the FE 5090s that melted at both ends, then tested his own FE card and found that the power distribution across the 6 pins actually providing power was... not right, with one wire pulling 23 amps (276w) and another pulling 20 (240w), while the others were only pulling a few amps each. That resulted in those loaded wires getting up to 90c+ almost immediately, because they're only supposed to be operating at 8a per pin.

Even if the thing were functioning properly, the headroom would be insufficient... but it isn't functioning properly. The connector MIGHT be defensible on products lower in the stack... but even there it's not really justifiable in any technical sense. It seems like the push for this connector comes down either to "form over function" or, more likely, a cost-cutting measure so Nvidia can keep pushing its margins ever skyward.

YSCCC This just sounds more and more hilarious; I can see some little devils in the background mocking.

Nikolay Mihaylov The most thorough explanation I've found so far is from techtuber Buildzoid: https://www.youtube.com/watch?v=kb5YzMoVQyw&t=104

It's pretty damning in the sense that there's no obvious reason why a $2000 card doesn't have sense resistors for each of the 6 lines, or at the very least, one for each pair of lines, like the 3090.

spongiemaster

> iocedmyself said: Traditional 8-pin connectors are rated for 150w (12v @ 12.5a) BUT can actually safely deliver a sustained load of 225w per connector. That's a 50% buffer.

150W per connector, not per 12v wire. der8auer's testing shows a clear load-balancing issue where one wire is carrying way too much current while some others are not doing anything. The power going through the connector is within spec, but what that one wire is pulling is not. If you cut 2 of the 12v wires in an 8-pin connector and run FurMark, it's not going to be happy either. It may not catastrophically melt, but that is simply a result of pulling 1/4 the power. I've seen plenty of melted 24-pin connectors that use the same plug and wire as the 8-pin GPU connector.

Elusive Ruse If Nvidia doesn’t come up with a viable option soon, the only other solution I can see is something similar to the Asus BTF series, with the GPU getting its power from the MOBO.

YSCCC

> Elusive Ruse said: If Nvidia doesn’t come up with a viable option soon, the only other solution I can see is something similar to the Asus BTF series, with the GPU getting its power from the MOBO.

Or just make it 2x 12V-2x6 connectors; PSU manufacturers will smile with joy.

iocedmyself

> spongiemaster said: 150W per connector, not per 12v wire. der8auer's testing shows a clear load-balancing issue where one wire is carrying way too much current while some others are not doing anything. The power going through the connector is within spec, but what that one wire is pulling is not. If you cut 2 of the 12v wires in an 8-pin connector and run FurMark, it's not going to be happy either. It may not catastrophically melt, but that is simply a result of pulling 1/4 the power. I've seen plenty of melted 24-pin connectors that use the same plug and wire as the 8-pin GPU connector.

Yes... I said the 8-pin connector is rated for 150w. The 8-pin connectors consist of three 12v pins, a sense pin, and 4 ground pins. While the 8-pin is only rated for 150w (50w = 4.1a x 3 pins), it is capable of delivering 225w sustained (75w at 6.25a x 3 pins).

Yes, it's obviously a load-balancing issue, but that issue is stemming from something in the card or the power connector on the card. The wire itself was tested, functional, and in good operating order on a 4090.

Given that JayzTwoCents also observed his Zotac 5090 pulling a 630w sustained load at stock settings, which is out of spec for the card, the connector AND the wire... and there have already been two instances of melted connectors out of the few dozen cards that actually made it into consumer hands, AND we already went through this mess with the 4090 and its considerably lower power draw, it's not surprising to see this mess play out again.

ekio They need to admit this connector was a huge mistake instead of doubling down on it!

GPU cards are all huge, so what was the idea behind powering them with such a ridiculously small and fragile connector?????

Put true engineers on the job and redo it all.

We are in 2025 and still struggling with burning electric plugs. That’s absolutely ridiculous!

thisisaname

> ezst036 said: This would be the only card with that connector on it that I would feel comfortable purchasing.
>
> Two should be the standard, though. But really, why not just get rid of this stupid thing altogether?
>
> I just want one so nothing melts. That's all.

I'm not sure it wouldn't still have one wire carrying far too much while the rest carry nearly none.