Nvidia's new tech reduces VRAM usage by up to 96% in beta demo — RTX Neural Texture Compression looks impressive

But there is a performance cost.

Nvidia's RTX Neural Texture Compression (NTC) has finally been benchmarked in an actual 3D workload. Compusemble tested Nvidia's new texture compression tech on an RTX 4090 at 1440p and 4K, measuring a whopping 96% reduction in texture memory footprint with NTC compared to conventional texture compression techniques. You can see the results in the video below.

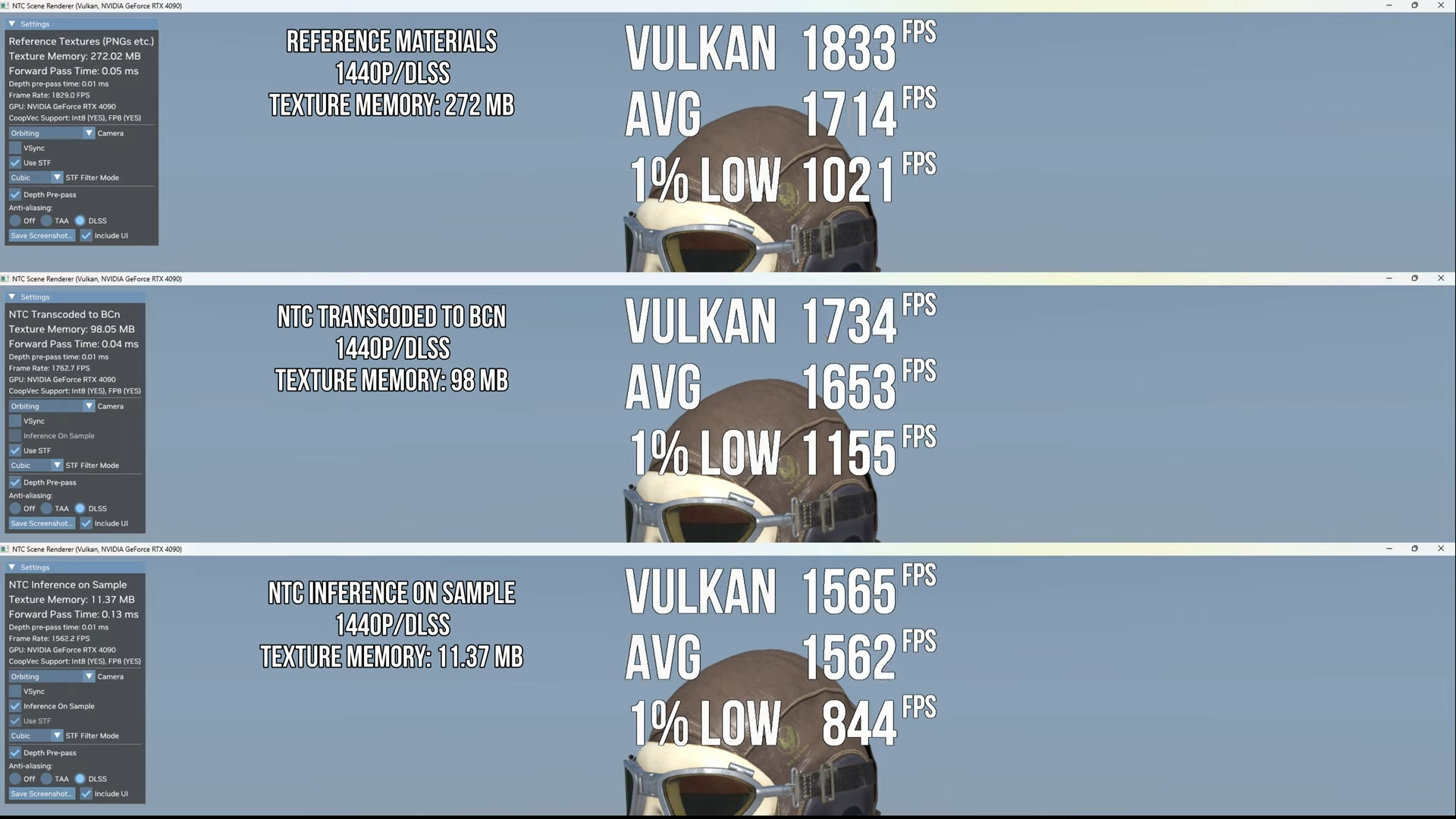

Compusemble tested NTC in two modes: "NTC transcoded to BCn" and "Inference on Sample." The former transcodes the neural-compressed textures to standard BCn block-compressed formats at load time, while the latter decompresses only the individual texels needed to render a given view, reducing texture memory even further.
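The difference between the two modes is mostly about *when* decoding happens and *what* has to stay resident in memory. The toy model below is a minimal sketch of that idea only. `ToyCompressedTexture`, `transcode_on_load`, and `inference_on_sample` are hypothetical names, and a nearest-neighbor lookup into a coarse grid stands in for NTC's actual neural decoder. It also ignores that the real transcode target, BCn, is itself a compressed format.

```python
from typing import List


class ToyCompressedTexture:
    """Hypothetical stand-in for a neural-compressed texture.

    The "compressed" data is a coarse latent grid; decode_texel() maps a texel
    coordinate to the latent cell covering it (nearest-neighbor lookup). Real
    NTC evaluates a small neural network here instead.
    """

    def __init__(self, latents: List[List[float]], width: int, height: int):
        self.latents = latents  # the only data this mode keeps resident
        self.width = width
        self.height = height
        self.cell_w = width // len(latents[0])
        self.cell_h = height // len(latents)

    def decode_texel(self, x: int, y: int) -> float:
        return self.latents[y // self.cell_h][x // self.cell_w]


def transcode_on_load(tex: ToyCompressedTexture) -> List[List[float]]:
    """Transcode-style: decode every texel once, up front, into a full buffer."""
    return [[tex.decode_texel(x, y) for x in range(tex.width)]
            for y in range(tex.height)]


def inference_on_sample(tex: ToyCompressedTexture, x: int, y: int) -> float:
    """Inference-on-sample-style: decode only the texel a shader asks for."""
    return tex.decode_texel(x, y)


latents = [[float(4 * r + c) for c in range(4)] for r in range(4)]  # 16 values
tex = ToyCompressedTexture(latents, width=64, height=64)

full = transcode_on_load(tex)
print(sum(len(row) for row in full))         # 4096 values resident after transcoding
print(sum(len(row) for row in tex.latents))  # 16 values resident when sampling on demand
print(inference_on_sample(tex, 37, 5) == full[5][37])  # True
```

The trade-off mirrors the benchmark: on-demand decoding keeps far less data resident, but it moves decoding work into every frame instead of doing it once at load.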

At 1440p with DLSS upscaling enabled, the "NTC transcoded to BCn" mode reduced the test application's texture memory footprint by 64%, from 272MB to 98MB. The "NTC inference on sample" mode shrank it much further, to just 11.37MB. That is a 95.8% reduction compared to non-neural compression, and an 88% reduction compared to the transcoded-to-BCn mode.
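The percentages above follow directly from the reported sizes. This short sketch recomputes them; `reduction_pct` is just an illustrative helper name, and the three sizes are the figures quoted above.

```python
def reduction_pct(before_mb: float, after_mb: float) -> float:
    """Percentage reduction when going from before_mb to after_mb."""
    return (1 - after_mb / before_mb) * 100


baseline = 272.0    # MB, NTC off (as reported)
bcn = 98.0          # MB, "NTC transcoded to BCn"
on_sample = 11.37   # MB, "NTC inference on sample"

print(f"{reduction_pct(baseline, bcn):.1f}%")        # 64.0%
print(f"{reduction_pct(baseline, on_sample):.1f}%")  # 95.8%
print(f"{reduction_pct(bcn, on_sample):.1f}%")       # 88.4%
```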

Compusemble's benchmarks show that performance takes a minor hit when RTX Neural Texture Compression is enabled. However, the tests ran this beta software on the prior-gen RTX 4090 rather than the current-gen RTX 5090, so the performance penalty could shrink on the newer architecture.

"NTC transcoded to BCn" mode showed a negligible reduction in average FPS compared to NTC off, though 1% FPS lows were noticeably better than regular texture compression with NTC disabled. "NTC inference on sample" mode took the biggest hit, going from the mid-1,600 FPS range to the mid-1,500 FPS range. 1% lows significantly dropped into the 840 FPS range.

The memory savings are the same at 1440p with TAA anti-aliasing instead of DLSS upscaling, but performance behaves differently. All three modes ran significantly faster than with DLSS, at almost 2,000 FPS. 1% lows in the "NTC inference on sample" mode landed in the mid-1,300 FPS range, a big leap from 840 FPS.

Unsurprisingly, raising the resolution to 4K drops performance significantly. With DLSS upscaling enabled, the "NTC transcoded to BCn" mode averaged in the 1,100 FPS range, and the "NTC inference on sample" mode averaged just under 1,000 FPS. 1% lows for both modes were in the 500 FPS range. Switching from DLSS to native resolution with TAA anti-aliasing boosted the average into the 1,700 FPS range in the "NTC transcoded to BCn" mode and into the 1,500 FPS range in the "NTC inference on sample" mode. 1% lows were just under 1,100 FPS for the former and just under 800 FPS for the latter.


Finally, Compusemble tested cooperative vectors with the "NTC inference on sample" mode at 4K with TAA. With cooperative vectors enabled, the average frame rate was in the 1,500 FPS range; with them disabled, it plummeted to just under 650 FPS. 1% lows followed the same pattern: just under 750 FPS with cooperative vectors on, and just above 400 FPS with them off.
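Cooperative vectors are a shader feature for hardware-accelerated matrix-vector math, which is the kind of work a small per-texel neural decoder needs. This sketch expresses the average-FPS gap above as a speedup and as time saved per frame. It treats "just under 650 FPS" as 650 and the "1,500 range" as 1,500, so the results are approximate.

```python
def frame_time_ms(fps: float) -> float:
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


coop_on_fps = 1500.0   # approximate: "in the 1,500 range"
coop_off_fps = 650.0   # approximate: "just under 650 FPS"

speedup = coop_on_fps / coop_off_fps
saved_ms = frame_time_ms(coop_off_fps) - frame_time_ms(coop_on_fps)
print(f"{speedup:.2f}x faster, {saved_ms:.3f} ms saved per frame")
# 2.31x faster, 0.872 ms saved per frame
```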

Takeaways

Compusemble's RTX NTC benchmarks reveal that Nvidia's neural compression technology can shrink a 3D application's texture memory footprint enormously, but at a cost in performance, especially in the "inference on sample" mode.

The DLSS vs. native resolution results are the most interesting aspect. The significant frame rate increase at native resolution suggests that the tensor cores processing RTX NTC are being taxed very hard, probably to the point where they slow DLSS upscaling enough to bottleneck the shader cores. If that weren't the case, the DLSS runs should have delivered higher frame rates than the native 4K TAA runs.
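Converting the 4K averages to frame times shows how much extra time per frame the DLSS runs took in each mode. The values below are the approximate figures from the 4K results above, with "just under 1,000 FPS" treated as 1,000. This is simple arithmetic on reported ranges, not a profile of where the time actually goes.

```python
def frame_time_ms(fps: float) -> float:
    """Convert frames per second to milliseconds per frame."""
    return 1000.0 / fps


# Approximate 4K averages from the results above.
results = {
    "NTC transcoded to BCn": {"dlss": 1100.0, "native_taa": 1700.0},
    "NTC inference on sample": {"dlss": 1000.0, "native_taa": 1500.0},
}

extra = {}
for mode, fps in results.items():
    extra[mode] = frame_time_ms(fps["dlss"]) - frame_time_ms(fps["native_taa"])
    print(f"{mode}: DLSS runs take {extra[mode]:.3f} ms longer per frame")
# NTC transcoded to BCn: DLSS runs take 0.321 ms longer per frame
# NTC inference on sample: DLSS runs take 0.333 ms longer per frame
```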

RTX Neural Texture Compression has been in development for at least a few years. It uses the tensor cores in modern Nvidia GPUs to compress textures in 3D applications and video games, instead of relying on traditional Block Truncation Coding. RTX NTC represents the first major upgrade in texture compression technology since the 1990s, allowing for up to four times higher-resolution textures than GPUs can run today.

The technology is in beta, and there's no release date. Interestingly, NTC's minimum requirements appear to be quite low. Nvidia's GitHub page for RTX NTC lists an RTX 20-series GPU as the minimum requirement. However, the tech has also been validated on GTX 10-series GPUs, AMD Radeon RX 6000-series GPUs, and Intel Arc A-series GPUs, suggesting it could go mainstream on non-RTX GPUs and even consoles.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


-Fran- Hm... This feels like we've gone full circle.

Originally, if memory serves me right, the whole point of textures was to lessen the load of the processing power via adding these "wrappers" to the wireframes, because you can always make a mesh and add the colouring information in it (via shader code or whatever) and then let the GPU "paint" it according to what you want it to; you can do that today even, but hardly something anyone wants to do. Textures were a shortcut from all that extra overhead on the processing, but it looks like nVidia wants to go back to the original concept in graphics via some weird "acceleration"?

Oh well, let's see where this all goes to.

Regards.

oofdragon Hey, I just had this awesome idea that they didn't think of... what about just giving players more friggin VRAM in the GPUs???????

salgado18 So Nvidia is creating a tech that lets them build an RTX 5090 with 4GB of VRAM.

They also created a tech that makes DLSS useless.

And I'm really curious to see how it would run on an RTX 4060, or even lower. And on non-RTX cards.

That drop in 1% lows performance is a bit troubling.

All that said, I really like the tech. A 96% reduction in memory usage with a 10-20% performance hit is actually very good. Meshes, objects and effects will be traded for extremely high resolution textures, or even painted textures instead of tiled images.

Too many questions remain, but I like this.

Conor Stewart

-Fran- said: Hm... This feels like we've gone full circle.

Not really. It is just compressing the textures in a different and more efficient way, though that does mean they will need to be decompressed when they are used.

ingtar33 These textures will be just as "lossless" as MP3s were "lossless" for sound?

Somehow I doubt it, since MP3s weren't lossless either.

rluker5 I like how the performance impact was measured with a ridiculous framerate.

Everything a GPU does takes time. Apparently neural decompression takes less time than the frame time you would have at 800-something FPS. That isn't very much time, and I imagine my low-VRAM 3080 could decompress a lot of textures without a noticeable impact at 60 FPS.

Mcnoobler No matter what Nvidia does, it is always the same. Frame generation? Fake frames. Lossless Scaling? AFMF? Soo amazing and game-changing.

I think it's become apparent that Nvidia owners spend most of their time gaming, and AMD owners spend more of their time on sharper text and comment fluidity for their social media negativity. No, a new AMD GPU will not load the comment section faster.

bit_user I'm a little unclear on whether there's any sort of overhead not accounted for in their stats, like whether there's any sort of model that's not directly represented in the compressed texture itself. In their paper, they say they don't use a "pre-trained global encoder", which I take to mean that each compressed texture includes the model you use to decompress it.

However, what I can say is fishy...

oofdragon said: Nvidia be like: mp3 music, lossless flac music, it's music just the same! 🤓

Yeah, the benchmark is weird in that it seems to compare against uncompressed textures. AFAIK, that's not what games actually do.

Games typically use texture compression with something like 3 to 5 bits per pixel. Compared to a baseline 24-bit uncompressed, that's a ratio of 5x to 8x.

https://developer.nvidia.com/astc-texture-compression-for-game-assets

So, this benchmark is really over-hyping the technology. Then again, this is Nvidia and they love to tout big numbers. What else is new?

Edit: I noticed what Nvidia is telling developers about it:

"Use AI to compress textures with up to 8x VRAM improvement at similar visual fidelity to traditional block compression at runtime."

Source: https://developer.nvidia.com/blog/get-started-with-neural-rendering-using-nvidia-rtx-kit/
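The bits-per-pixel argument above can be checked with a couple of lines. The first loop reproduces the 5x to 8x range against a 24-bit uncompressed baseline. The second loop is a hypothetical what-if: *if* the 272 MB baseline in the benchmark were uncompressed 24-bit data (the reading suggested in the comment, not something the article confirms), it shows roughly what a conventionally block-compressed baseline would weigh.

```python
def compression_ratio(bpp: float, baseline_bpp: float = 24.0) -> float:
    """Ratio of an uncompressed 24-bit texel to a block-compressed one."""
    return baseline_bpp / bpp


for bpp in (3.0, 5.0):
    print(f"{bpp:g} bpp -> {compression_ratio(bpp):.1f}x")
# 3 bpp -> 8.0x
# 5 bpp -> 4.8x

# Hypothetical: if 272 MB were uncompressed 24-bit texture data,
# a 3-5 bpp block-compressed version would be roughly:
for bpp in (3.0, 5.0):
    print(f"{272.0 / compression_ratio(bpp):.1f} MB")
# 34.0 MB
# 56.7 MB
```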

bit_user

rluker5 said: I like how the performance impact was measured with a ridiculous framerate.

Did you happen to notice how small the object is on screen? The cost of all texture lookups will be proportional to the number of textured pixels drawn per frame. A better test would have been a full-frame scene with lots of objects, some transparency, and edge AA.
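The point about screen coverage can be made concrete with a toy model that assumes per-sample decoding cost scales linearly with the number of textured pixels. The coverage fractions here are hypothetical (the benchmark did not report them), and the model ignores overdraw, transparency, and multiple texture layers per material, all of which would add more samples.

```python
RESOLUTIONS = {"1440p": (2560, 1440), "4K": (3840, 2160)}


def textured_pixels(width: int, height: int, coverage: float) -> int:
    """Pixels per frame that sample NTC textures at a given screen coverage.

    coverage is a hypothetical fraction of the screen occupied by
    NTC-textured surfaces.
    """
    return round(width * height * coverage)


w, h = RESOLUTIONS["4K"]
small_object = textured_pixels(w, h, 0.10)  # hypothetical: object fills 10% of the screen
full_scene = textured_pixels(w, h, 1.00)    # every pixel textured
print(small_object, full_scene, full_scene / small_object)
# 829440 8294400 10.0
```

Under this linear assumption, a scene that fills the screen would do about ten times as many per-sample decodes as the hypothetical 10% object, which is why a small test object can understate the real-world cost.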