RTX 4090 16-pin meltdown victims resort to DIY solutions to prevent further disaster

The notorious 16-pin (12VHPWR) power connector remains a problem for some unlucky users. While reports of 16-pin meltdowns have become less frequent recently, they still occur. The latest case involves a GeForce RTX 4090 owner who shared his experience on Reddit along with a DIY fix intended to prevent future incidents.

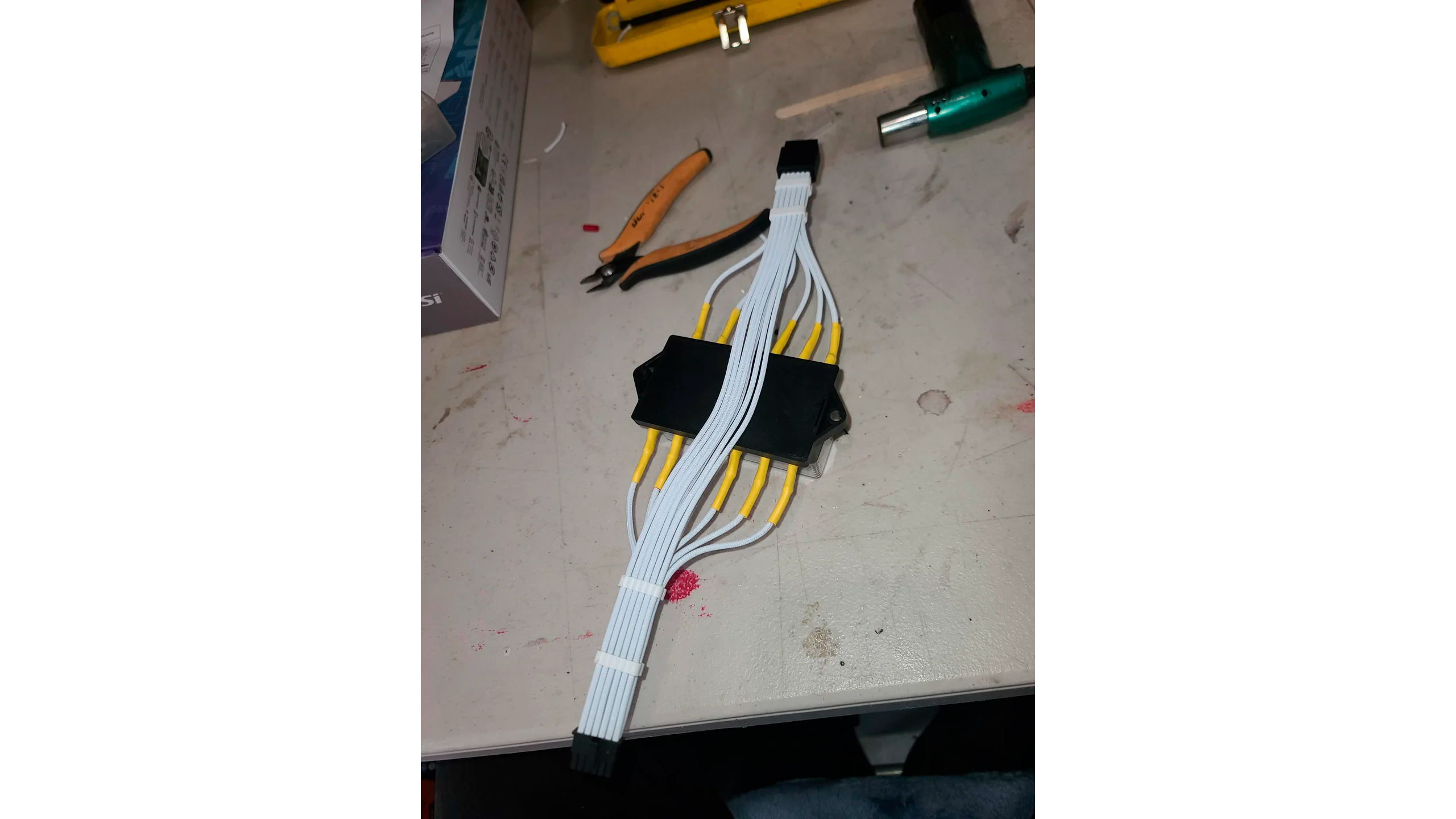

Redditor malcanore18 recounted how the 16-pin power connector on his GeForce RTX 4090 had melted. To keep it from happening again, he modified his 16-pin power cable by adding six miniature fuses. The result isn't pretty, but the underlying idea sounds plausible. If one of the wires carries too much current, its fuse blows; the remaining wires then have to carry the extra load, so their fuses blow in turn, cutting power in a chain reaction. It's a basic band-aid, but replacing fuses is far cheaper than repairing a graphics card. Another Redditor suggested using resettable fuses instead, since they reset automatically after tripping and don't need to be replaced like conventional fuses.

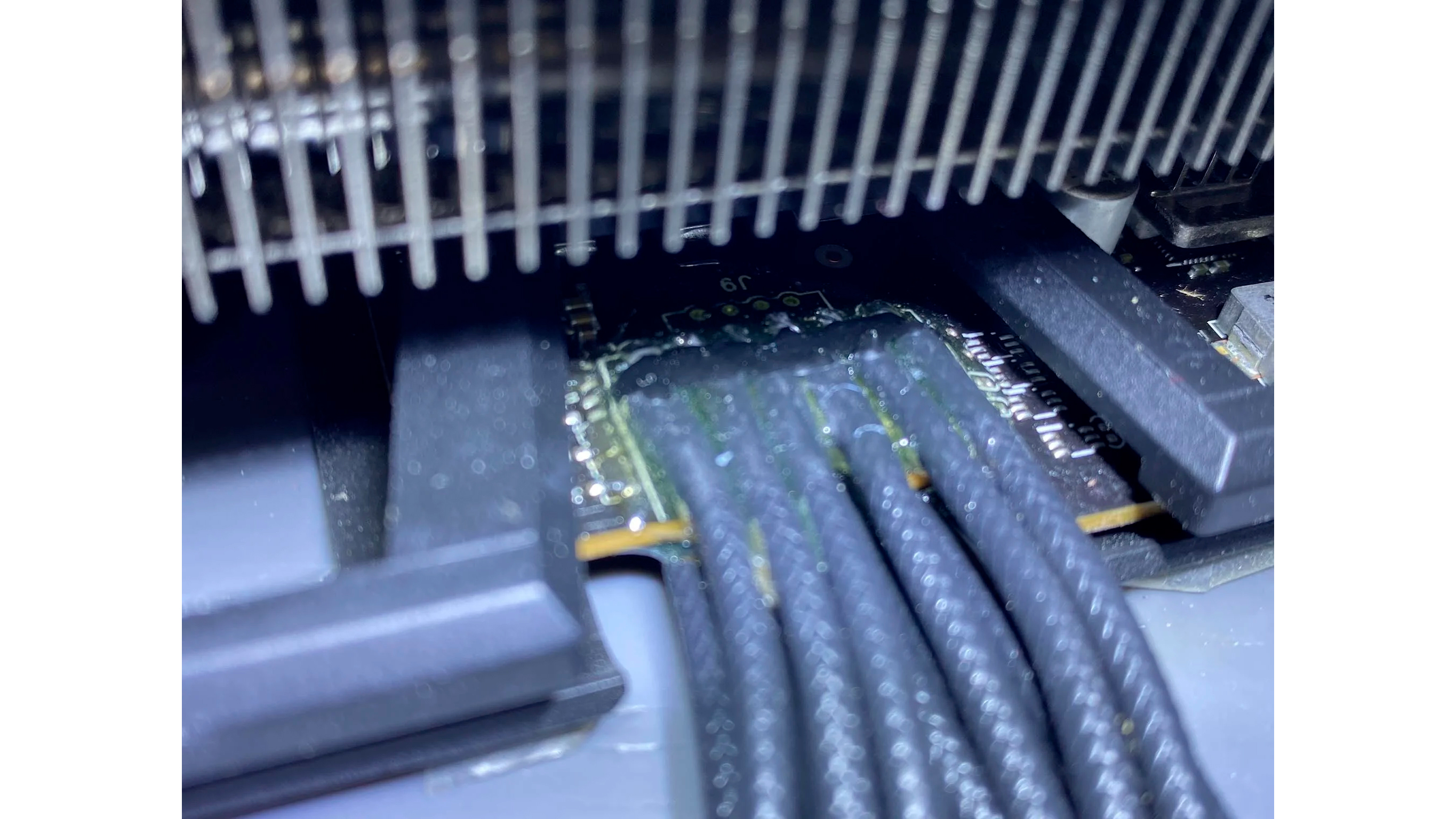

Misfortune often brings strangers together. Redditor jlodvo showed that there's more than one way to solve a problem. Instead of fiddling with the 16-pin power connector, he eliminated it altogether: he reportedly had someone remove the connector and solder each wire directly to his graphics card. This shouldn't be a problem as long as he has a modular power supply, which lets him disconnect the cable at the power supply end whenever he needs to remove the graphics card from the case.

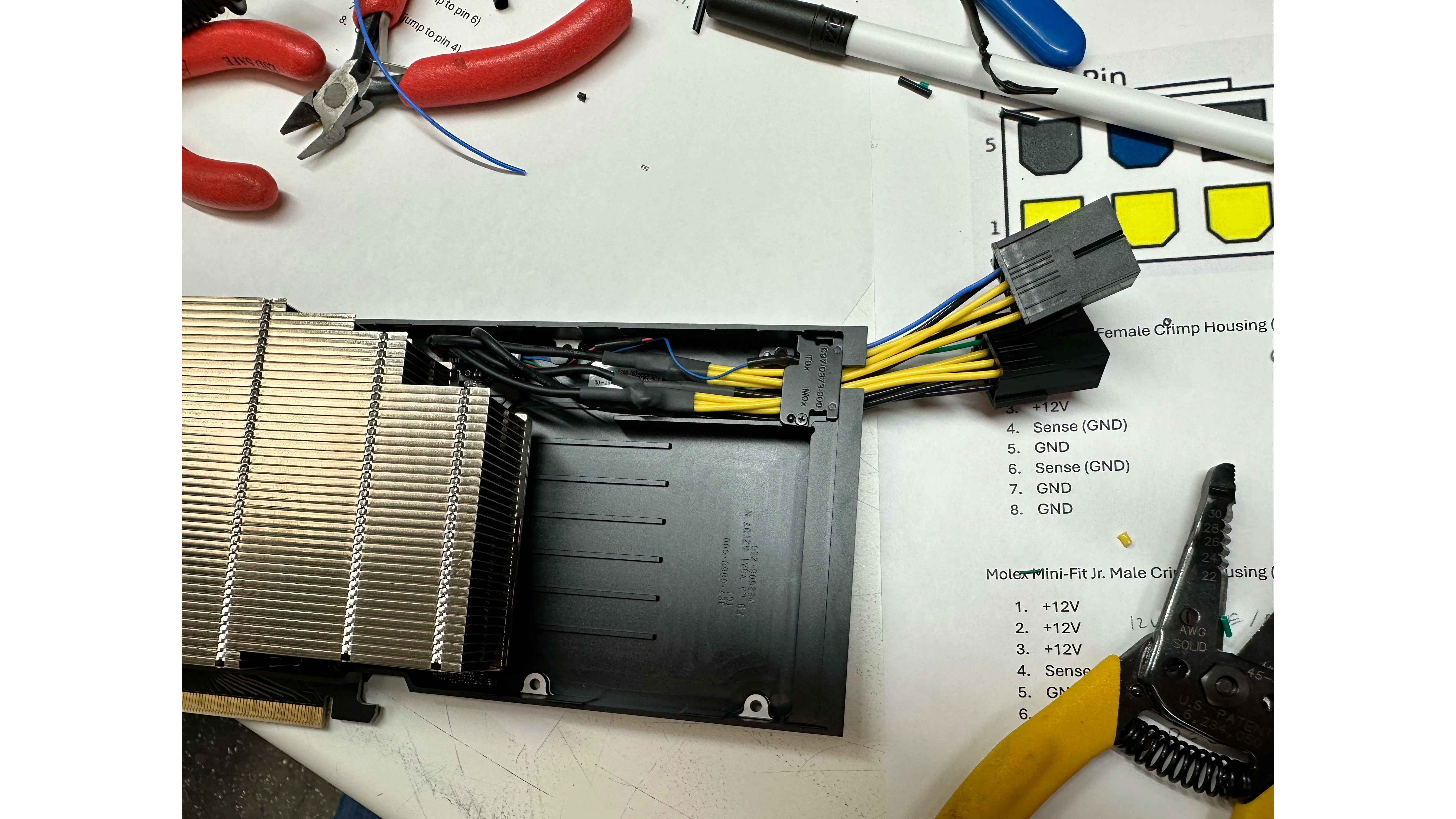

A third Redditor, IMI4tth3w, shared a fix he applied to an L40 whose 16-pin power connector had melted, partly because of a faulty third-party cable. He removed the melted connector and reverse-engineered the wiring so the card could be powered from two standard 8-pin PCIe power connectors instead. However, he cautioned that this method won't work on every graphics card. It works on the L40 specifically because this data center graphics card appears to use a pigtail version of the 16-pin power connector.
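A rough power-budget check is at least consistent with this workaround on paper. This is a minimal sketch, not IMI4tth3w's actual method: it assumes the PCIe CEM ratings of 150 W per 8-pin connector and 75 W from the x16 slot, takes 300 W as the L40's maximum board power, and ignores how a card really divides its draw between slot and cables or what its sense pins expect.

```python
# Rough power-budget check for feeding a card from PCIe 8-pin connectors.
# Assumed ratings (PCIe CEM): 150 W per 8-pin connector, 75 W from an x16 slot.
PCIE_8PIN_W = 150
SLOT_W = 75


def available_power(num_8pin: int, include_slot: bool = True) -> int:
    """Rated power from num_8pin PCIe 8-pin connectors, plus the slot if included."""
    return num_8pin * PCIE_8PIN_W + (SLOT_W if include_slot else 0)


def fits(board_power_w: float, num_8pin: int, include_slot: bool = True) -> bool:
    """True if the board power stays within the connectors' rated budget."""
    return board_power_w <= available_power(num_8pin, include_slot)


L40_BOARD_POWER_W = 300  # assumed: NVIDIA's listed maximum for the L40
RTX_5090_TGP_W = 575     # for comparison

print(available_power(2))                              # 375
print(fits(L40_BOARD_POWER_W, 2))                      # True
print(fits(L40_BOARD_POWER_W, 2, include_slot=False))  # True (300 <= 300)
print(fits(RTX_5090_TGP_W, 2))                         # False
```

The same two-connector budget falls well short of a 575 W card, which is one more reason the trick doesn't generalize.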

It's intolerable that meltdowns remain a problem, particularly on Nvidia graphics cards that cost a small fortune. Although there are numerous theories about the cause of these meltdowns, Nvidia has yet to give a definitive answer. In the meantime, many Nvidia graphics card owners remain anxious, wondering whether the smell of burning plastic will one day come from their GeForce RTX 5090 or GeForce RTX 4090.

Graphics card and power supply manufacturers have taken steps to counter the problem, such as colored connectors that make it easier to see whether a plug is fully inserted, or sensors inside the power supply. These DIY workarounds for 16-pin meltdowns look neat, but we don't recommend trying them unless you know exactly what you're doing.

Zhiye Liu is a news editor, memory reviewer, and SSD tester at Tom’s Hardware. Although he loves everything that’s hardware, he has a soft spot for CPUs, GPUs, and RAM.

-

TheyStoppedit: Wow. Gamers taking matters into their own hands. Yikes! Honestly..... really..... it just boils down to human stupidity on Nvidia's part. It might as well be criminal negligence at this point. You have billions of dollars and you pay high-profile engineers and electricians millions of dollars to build your product, and you still fail grade 9 electricity. der8auer's and buildzoid's videos from February explain this thoroughly. When you have multiple wires powering a device.... THEY ABSOLUTELY UNEQUIVOCALLY MUST BE CURRENT BALANCED. If they are not current balanced, they will melt. You learn this in grade 9 electricity. But it's okay, leave it to a $2.4T company to fail grade 9 electricity.

Our trusty 8-pin connector almost never melted because it was way under-specced (rated well below what it could actually handle), and its wires were current balanced on the board. If your connector is designed in such a way that it will melt if it's out of place by 1/10th of a millimeter, your connector is garbage. I have said it for years and I will say it again: Nvidia, blaming the user isn't going to fix the problem. Acknowledge your mistake, own it, and fix it. I'd hate to see how many 4090 and 5090 owners have melted connectors and don't even know it yet.

Have you ever noticed there were almost never any reports of 3090 Tis melting (450W TDP like the 4090, 12VHPWR connector)? I wonder why that could be? Again.... buildzoid's video..... The 3090 Ti was FORCED to be current balanced, so that if any wires were carrying too much load, the card would not work; thus, no melting. 3090 Tis are current balanced. 4090s and 5090s just aren't. Instead, they melt, and they will keep melting..... and melting..... and melting..... until either a class action lawsuit or a recall.

Honestly, if I were handed a 5090 for free on a silver platter, I wouldn't take it. No joke. Why? Because I'd be too scared of it melting. I do rendering and leave my GPU rendering at full load overnight. I'm not interested in being woken up by the smell of burnt plastic. It's not a chance I'd be willing to take. It shouldn't be that way. People shouldn't have to abstain from buying a consumer product out of fear of a fire. That shouldn't be the case with any consumer product. If it's not safe, it's not safe. Time for a recall/class action lawsuit.
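The imbalance this comment describes is ordinary current division: parallel wires share a load in proportion to their conductance (1/R), so the path with the lowest wire-plus-contact resistance carries the most current. A minimal sketch, assuming a 600 W load at 12 V over six 12V wires; the milliohm values are made up for illustration and are not measurements of any real cable.

```python
# Current division across parallel conductors:
#   I_k = I_total * (1 / R_k) / sum(1 / R_j)
# Resistances are hypothetical wire-plus-contact values in milliohms.


def split_current(total_a: float, resistances_mohm: list[float]) -> list[float]:
    """Return the current (A) through each parallel path."""
    conductances = [1.0 / r for r in resistances_mohm]
    g_total = sum(conductances)
    return [total_a * g / g_total for g in conductances]


TOTAL_A = 600 / 12  # 600 W at 12 V = 50 A

# Six identical paths: every wire carries the same share.
balanced = split_current(TOTAL_A, [10.0] * 6)
# One pin makes much better contact, one much worse (illustrative values).
unbalanced = split_current(TOTAL_A, [4.0, 10.0, 10.0, 10.0, 10.0, 20.0])

print([round(i, 2) for i in balanced])    # [8.33, 8.33, 8.33, 8.33, 8.33, 8.33]
print([round(i, 2) for i in unbalanced])  # [17.86, 7.14, 7.14, 7.14, 7.14, 3.57]
```

Heat in each contact scales with I²R, so in the second case the low-resistance pin, carrying more than twice its fair share, dissipates more than twice as much as each of its neighbours even though its resistance is the lowest.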

AngelusF: Load-balancing is a band-aid. What it really needs is just a single pair of big-ass wires to handle the current. You know, like domestic appliances.

alceryes:

> AngelusF said: Load-balancing is a band-aid. What it really needs is just a single pair of big-ass wires to handle the current. You know, like domestic appliances.

Load-balancing is part of the solution. The other part is to NOT kill safety margins.

Something like a redesigned, smaller 8-pin connector that is certified for 300W, perhaps? Make it close to a 2x safety margin, and incorporate sense pins and all the other enhancements of the 12V-2x6 spec. You'd need two cables for the likes of the 5090, but your chances of melting should be next to none.

CelicaGT:

> alceryes said: Load-balancing is part of the solution. The other part is to NOT kill safety margins.
>
> Something like a redesigned, smaller 8-pin connector that is certified for 300W, perhaps? Make it close to a 2x safety margin, and incorporate sense pins and all the other enhancements of the 12V-2x6 spec. You'd need two cables for the likes of the 5090, but your chances of melting should be next to none.

FWIW, a 2x safety margin is the MINIMUM in other industries. I doubt Nvidia even has a proper electrical (not electronics) P.Eng. on staff. If they did, that connector would never have passed muster.

JayGau:

> alceryes said: Load-balancing is part of the solution. The other part is to NOT kill safety margins.
>
> Something like a redesigned, smaller 8-pin connector that is certified for 300W, perhaps? Make it close to a 2x safety margin, and incorporate sense pins and all the other enhancements of the 12V-2x6 spec. You'd need two cables for the likes of the 5090, but your chances of melting should be next to none.

I currently use a Corsair adapter for my 4080 that takes two CPU 8-pin power outputs (so 2x 300 W = 600 W) and converts them to the single 12V-2x6 connector. It should be trivial to do the same, but instead of going to the 12V-2x6, the cables would connect to two CPU 8-pin-style connectors on the graphics card side. The outputs already exist on most PSUs. Just use the CPU power pin layout instead of the PCIe one and you can power a 5090 with only two cables. Why didn't they do that instead of the 16-pin disaster?
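JayGau's arithmetic can be taken down to the pin level, since per-pin current is what heats the contacts. A minimal sketch, assuming the EPS12V 8-pin layout carries 12 V on four of its eight pins, the 12V-2x6 carries 12 V on six of its twelve main pins, and each EPS connector delivers the 300 W stated in the comment. Both results assume perfectly even sharing between pins.

```python
# Average current per 12 V pin, assuming the load is shared perfectly evenly.
VOLTS = 12.0


def amps_per_pin(watts: float, power_pins: int) -> float:
    """Average current on each 12 V pin of one connector."""
    return watts / VOLTS / power_pins


# EPS12V-style 8-pin: 4 of the 8 pins carry 12 V; 300 W each, as in the comment.
eps_8pin = amps_per_pin(300, 4)       # 6.25 A per pin
# 12V-2x6: 6 of the 12 main pins carry 12 V; 600 W over a single connector.
twelve_v_2x6 = amps_per_pin(600, 6)   # about 8.33 A per pin

print(f"2x EPS 8-pin : {eps_8pin:.2f} A per 12 V pin")
print(f"1x 12V-2x6   : {twelve_v_2x6:.2f} A per 12 V pin")
```

Perfect sharing is the best case; the imbalance TheyStoppedit describes earlier in the thread would affect either layout.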

Alvar "Miles" Udell: It really is absolutely amazing that the clearly faulty PCIe 12V-2x6 design is still allowed to be sold worldwide. Thin cables that are easy to break, no safety margin, and no per-pin monitoring on the cards are just incredible technical faults.

terabite: Lol, the 6-fuse thing is probably not gonna help if the load gets unbalanced on the ground side. It's not like each GND+12V pair is separated from the others (at least by a low-value resistor, like it should be). One ground pin goes bad and all the current goes through the rest, potentially more through one pin, and we all know what happens.
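The point terabite makes can be illustrated with a deliberately simple model. This sketch assumes fuses only on the six 12V conductors, six unfused ground returns that share the return current equally, a steady 50 A (600 W at 12 V), and a hypothetical 10 A fuse rating; it ignores any other return paths. Losing ground pins raises the current on the survivors while the current through each fuse stays the same.

```python
# Hypothetical model: fuses on the six 12 V conductors only; ground returns are unfused
# and share the return current equally. Other return paths are ignored.
TOTAL_A = 600 / 12      # 50 A out on the 12 V side must come back on the grounds
FUSE_RATING_A = 10.0    # hypothetical fuse value on each 12 V conductor


def share(total_a: float, intact: int) -> float:
    """Equal share of the current across the conductors still intact."""
    return total_a / intact


fuse_current = share(TOTAL_A, 6)  # all six 12 V wires (and fuses) still intact
for intact_grounds in range(6, 1, -1):
    ground_current = share(TOTAL_A, intact_grounds)
    print(
        f"{intact_grounds} grounds intact: {ground_current:5.2f} A per ground pin, "
        f"{fuse_current:.2f} A per fuse (over fuse rating: {fuse_current > FUSE_RATING_A})"
    )
```

With only two ground pins left, each carries 25 A, yet every fuse still sees its normal share of roughly 8.33 A and has no reason to blow.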

JohnyFin: Half of the melting incidents are on the user side. They happen when you try to pack a 4090/5090 into a small case (under 200 mm wide), which causes over-bending of the cable and stress on the connector. The other half are bad cables from power supplies and bad power supplies... simple. Don't put the blame on Nvidia.

alceryes:

> JohnyFin said: Half of the melting incidents are on the user side. They happen when you try to pack a 4090/5090 into a small case (under 200 mm wide), which causes over-bending of the cable and stress on the connector. The other half are bad cables from power supplies and bad power supplies... simple. Don't put the blame on Nvidia.

If a product is so poorly designed that it lends itself to being used improperly, regularly, then the design itself is at fault. Engineering 101.

Misgar:

> Admin said: A couple of GeForce RTX 4090 owners take to Reddit to display their homemade solutions to impede 16-pin power connector meltdowns.
>
> https://cdn.mos.cms.futurecdn.net/ARUTt69k2Z4TUvPwz8FTzG-1200-80.jpg.webp

As an electronics design engineer working on Milspec and Aerospace projects, I looked at the in-line fuse option with interest and wondered what fuses the Redditor picked.

When selecting a fuse, you have to consider the nominal current rating, the rupture current and the time characteristics.

Doing some very rough calculations, six lines sharing 600W (max continuous power) works out to 100W per conductor, or 8.33A at 12V. I've completely ignored peak transient currents. I'm not in a test lab with the kit in front of me.

Let's say the guy picks a 10A fuse for each line. Depending on fuse characteristics, this fuse will not rupture until you've put at least 2x (20A) through it, but it could take many seconds to blow at this current. At 3x rated value (30A), it'll blow faster. At 5x, 10x, 20x, faster still.
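Putting Misgar's two numbers together gives a sense of how much margin such a fuse leaves. A quick sketch, assuming an even 600 W split over six 12V conductors and taking his rough 2x-before-rupture rule at face value (the real multiple depends on the fuse type):

```python
# Margin left by a 10 A fuse when six conductors share 600 W evenly.
LOAD_W = 600.0
VOLTS = 12.0
CONDUCTORS = 6            # 12 V wires
FUSE_RATING_A = 10.0      # the hypothetical fuse value from the comment
RUPTURE_MULTIPLE = 2.0    # rough "won't blow below ~2x" rule of thumb; fuse-dependent

normal_a = LOAD_W / CONDUCTORS / VOLTS        # about 8.33 A per wire
rupture_a = FUSE_RATING_A * RUPTURE_MULTIPLE  # 20 A before rupture is expected
overload_factor = rupture_a / normal_a        # about 2.4x the normal share

print(f"normal share per wire : {normal_a:.2f} A")
print(f"rupture expected from : {rupture_a:.1f} A")
print(f"overload before blow  : {overload_factor:.1f}x")
```

Under those assumptions, a single wire could carry about 2.4 times its normal share before a 10 A fuse is even expected to start blowing.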

The graph below is for fuses in the range 500mA to 4A, but it helps to illustrate it takes considerably more than the nominal rated current to blow a fuse.

https://www.allaboutcircuits.com/technical-articles/understanding-the-details-of-fuse-operation-and-implementation/

https://www.allaboutcircuits.com/uploads/articles/techart_fusedetails_2.JPG

From the graph above, the 4A fuse can be expected to blow after 10 seconds at a continuous fault current of 7A. The same 4A fuse should blow after 1ms (millisecond) at a fault current of roughly 22A (which is 5.5x the rated current). The higher the fault current, the shorter the rupture time. Go figure.
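Fuse time-current curves are normally plotted on log-log axes, so one crude way to estimate clearing times between the two points read off above (7A for 10 seconds and roughly 22A for 1ms on the 4A fuse) is to treat that stretch of the curve as a straight line in log-log space, i.e. a power law t = k * I^n. This is an interpolation assumption, not a fuse model: real curves bend, and any real design should use the manufacturer's published curve.

```python
import math


def power_law_through(p1: tuple[float, float], p2: tuple[float, float]) -> tuple[float, float]:
    """Fit t = k * I**n through two (current_A, time_s) points; return (k, n)."""
    (i1, t1), (i2, t2) = p1, p2
    n = math.log(t2 / t1) / math.log(i2 / i1)
    k = t1 / i1**n
    return k, n


# Approximate points read off the 4 A fuse curve discussed above.
K, N = power_law_through((7.0, 10.0), (22.0, 1e-3))


def time_to_blow(current_a: float) -> float:
    """Interpolated clearing time in seconds; only meaningful between about 7 A and 22 A."""
    return K * current_a**N


print(f"log-log slope n = {N:.2f}")
for amps in (7.0, 10.0, 15.0, 22.0):
    print(f"{amps:5.1f} A -> {time_to_blow(amps):.3g} s")
```

The steep negative slope is the numerical version of "the higher the fault current, the shorter the rupture time".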

Without spending time experimenting, I'd be cautious about using fuses to protect an RTX 5090. Get the values or characteristics wrong and the 12V-2x6 connectors could still overheat. You also have to consider the cascade effect and time lag as each of the six fuses blows in turn. It should get progressively faster as the number of working fuses decreases, but I'm not sure what'll happen to the GPU.
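The cascade can be sketched under simple, hypothetical assumptions: a constant 50 A draw (600 W at 12 V), current always dividing evenly among the fuses still intact, and the 10A fuse value from the earlier example. Each blown fuse pushes more current through the survivors, which is why the sequence should speed up.

```python
# Hypothetical cascade: constant draw, current always split evenly over intact fuses.
TOTAL_A = 600 / 12       # assumed constant 50 A (600 W at 12 V)
FUSE_RATING_A = 10.0     # hypothetical fuse value
FUSES = 6


def cascade(total_a: float, fuses: int, rating_a: float) -> list[tuple[int, float, float]]:
    """Return (intact fuses, amps per fuse, multiple of rating) for each stage."""
    stages = []
    for intact in range(fuses, 0, -1):
        per_fuse = total_a / intact
        stages.append((intact, per_fuse, per_fuse / rating_a))
    return stages


for intact, amps, multiple in cascade(TOTAL_A, FUSES, FUSE_RATING_A):
    print(f"{intact} intact: {amps:5.2f} A each ({multiple:.2f}x rating)")
```

A real fault would start from an imbalance rather than an even split, and this model ignores fuse timing as well as whatever the GPU does as its supply collapses.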

As others have said, even the new 12V-2x6 can fail, and other connectors for heavy-gauge, single-wire feeds exist. They might be considerably less flexible and difficult to implement in some case designs, but who wants a fire?

On the designs I've worked on, a pair of connectors usually costs hundreds or thousands of dollars. They're far too expensive for a commercial GPU, but you don't want things catching fire above the earth's surface. It's bad enough with all that kerosene or rocket fuel nearby.

I like the idea of soldering all the wires direct to the GPU, but you could end up damaging the card and bang goes your warranty. I wonder what (new) connector they'll be using in 2030?