Benchmarking AMD Radeon Chill: Pumping The Brakes On Wasted Power

The Latest From AMD: Radeon Chill and OCAT

AMD's Crimson ReLive Edition drivers introduce a number of new features. But the one we're focusing on today is called Radeon Chill. It claims to dynamically regulate frame rates based on detected in-game movement. The purpose, of course, is to reduce power consumption when your on-screen experience won't necessarily benefit from higher performance.

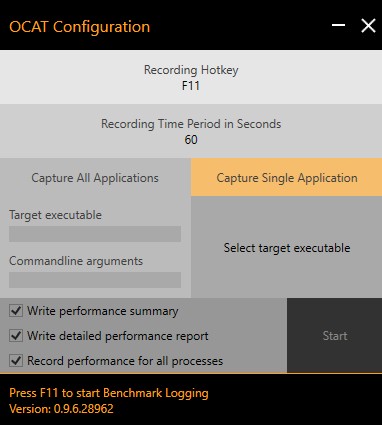

And we're using another product of AMD's development efforts, the Open Capture and Analytics Tool, or OCAT, to benchmark the effect of Radeon Chill. Technically, OCAT does the same thing as our custom front-end for PresentMon, enabling data collection in DirectX 11-, DirectX 12-, and Vulkan-based games.

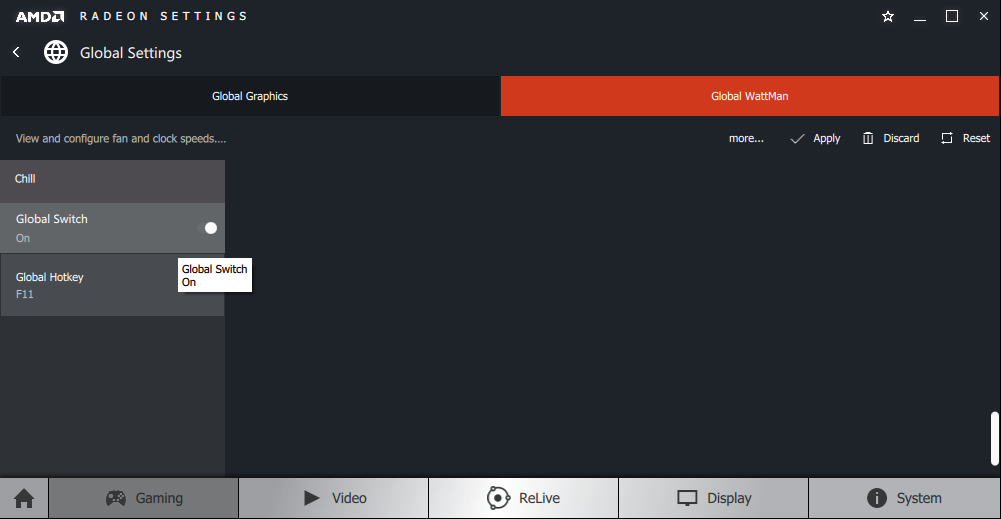

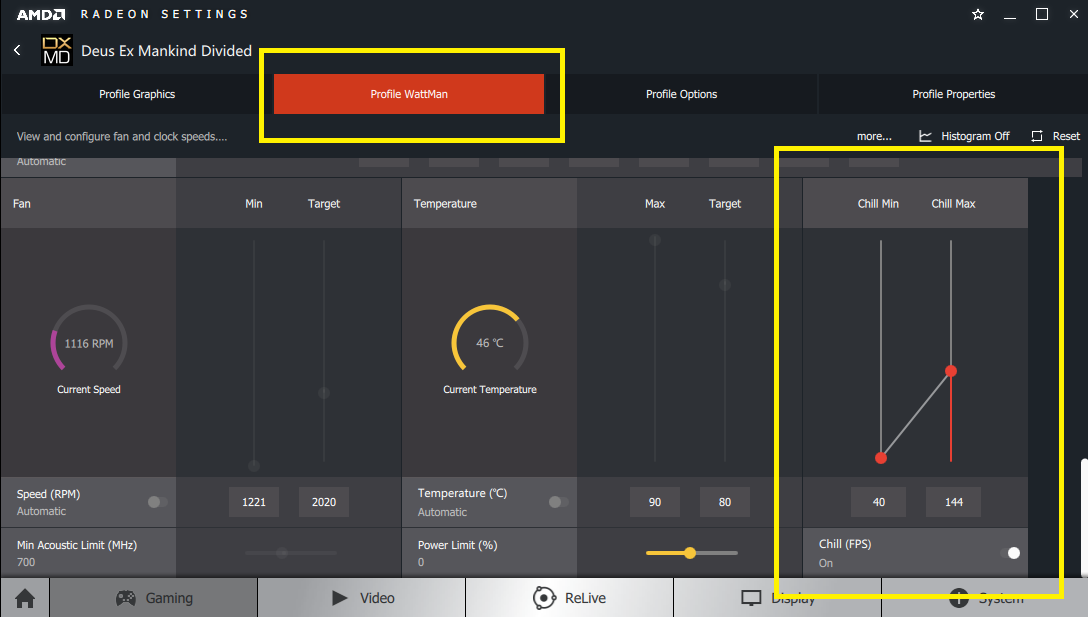

Enabling Radeon Chill

Chill is activated through Radeon Settings, on the WattMan page. You have the option of turning it on globally or disabling it (default), regardless of what's selected in the game profiles.

Ah, but there are restrictions. Radeon Chill only works with DirectX 9 and DirectX 11 games. If you installed the game profiles along with your AMD drivers, you can use the feature on a per-title basis and even specify a target. The minimum frame rate is 40 FPS, preventing you from imposing a sub-optimal experience by accident.

Sounds cool, right? Unfortunately, just like Nvidia's Ansel feature, only a handful of games are currently supported. The whitelist includes some big names, so at least AMD is putting its attention in the right place. And we naturally expect the company to continue adding as development resources allow.

Functionality and Features

AMD hasn't given us much in terms of technical specifics, so we're largely left to guess what's going on. We can see that, in fairly static scenes, the frame rate drops. As a result, we measure lower power consumption.

The company's driver appears to respond to mouse or keyboard input, as well as major changes to what's happening on-screen. Really, you don't even perceive the lower frame rate when it happens. As soon as the need for more performance is detected, Radeon Chill lets off the brake.
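AMD hasn't disclosed how Chill decides when to throttle, so the following Python sketch only illustrates the behavior we observe; it is not the driver's algorithm. The class name `ChillLikeLimiter`, the 144 FPS ceiling, and the `idle_decay` factor are all assumptions made for illustration. Only the 40 FPS floor comes from the driver settings described above.

```python
from dataclasses import dataclass, field


@dataclass
class ChillLikeLimiter:
    """Toy frame-rate governor (hypothetical, not AMD's implementation)."""

    min_fps: float = 40.0     # floor exposed in Radeon Settings
    max_fps: float = 144.0    # assumed ceiling, chosen for illustration
    idle_decay: float = 0.9   # assumed per-interval reduction while idle
    target_fps: float = field(init=False)

    def __post_init__(self) -> None:
        # Start unthrottled.
        self.target_fps = self.max_fps

    def update(self, input_active: bool) -> float:
        """Return the frame-rate target for the next interval."""
        if input_active:
            # Input or large scene change: let off the brake at once.
            self.target_fps = self.max_fps
        else:
            # Idle: ease toward the floor, but never below it.
            self.target_fps = max(self.min_fps, self.target_fps * self.idle_decay)
        return self.target_fps

    def frame_budget_ms(self) -> float:
        """Minimum time to spend on each frame at the current target."""
        return 1000.0 / self.target_fps


limiter = ChillLikeLimiter()
for active in (False, False, True):
    print(f"{limiter.update(active):.1f} FPS")
```

The gradual ramp down and instant ramp up mirror what we see on screen: the frame rate sinks in static scenes and recovers immediately on input.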


To illustrate, we picked three scenes from The Witcher 3 to collect performance and power data from. The first benchmark demonstrates when you'd most likely see Chill in action. The scene remains mostly unchanged and the camera remains static. The subject, Geralt, is really just an animated mesh, and mostly remains at rest.

In the second scene, we rotate the camera back and forth around the Y axis by 360°. Then we get Geralt to jump in front of the camera and perform a couple of attacks. This procedure repeats until our one-minute recording is over.

Our expectation is that power consumption will be slightly higher, since a small part of the scene is moving.

But what happens when you move the camera on all three axes, behind Geralt, who is running? Dense forest presents a serious graphics workload in The Witcher 3, so we assume Radeon Chill won't be able to save much power (and we certainly don't want it limiting frame rate when performance is needed).

As you might imagine, Radeon Chill complements AMD's FreeSync technology, since even lower frame rates appear smooth and tear-free. In order to keep our benchmarks comparable, we kept FreeSync disabled. But it's good to know the features work together.

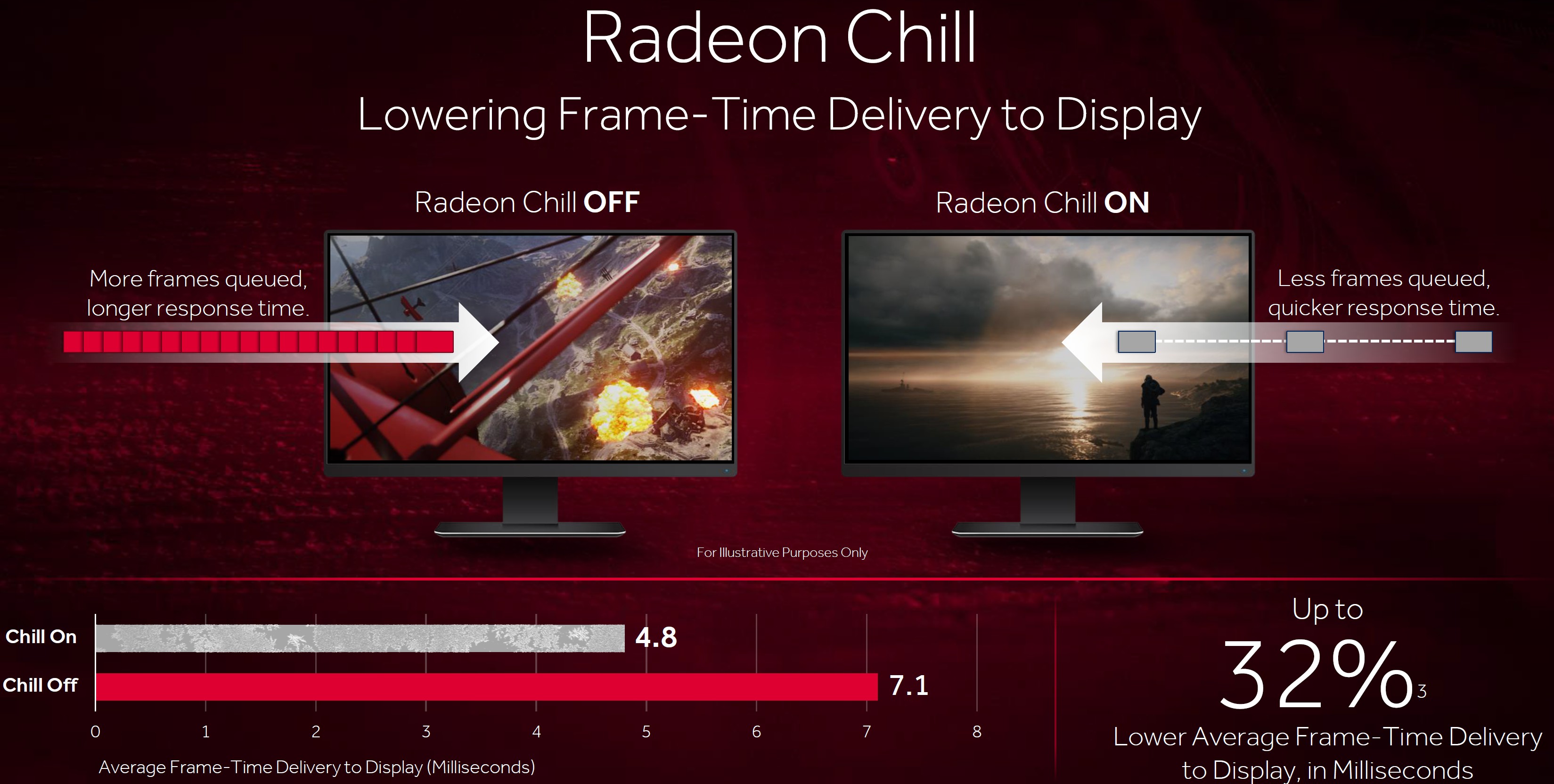

While Radeon Chill's inner workings are difficult to assess subjectively, it appears that AMD is trying to better align frame output to maximize CPU and GPU idle time.

The company claims this actually improves response time because fewer frames are queued.

Benchmark with OCAT

OCAT is a free tool that AMD is making available for benchmarking. It covers everything from DirectX 9 to DirectX 12, OpenGL, Vulkan, and UWP apps. OCAT isn't really new, though: it's basically PresentMon with a clean front-end, adapted to the style of AMD's own software.

Like PresentMon, OCAT creates .csv files with recording results for analysis. Because our performance interpreter is already optimized for PresentMon, it has no trouble with OCAT output.
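To give a sense of what such an analysis involves, here is a minimal Python sketch that reads a PresentMon-style recording and derives an average frame rate. It is not our in-house interpreter. It assumes the file has a per-frame `MsBetweenPresents` column, as PresentMon's CSV output does; the function name `summarize_frame_times` and the sample data are hypothetical.

```python
import csv
import io
from typing import Iterable


def summarize_frame_times(lines: Iterable[str],
                          column: str = "MsBetweenPresents") -> dict:
    """Compute basic statistics from a PresentMon-style CSV recording."""
    reader = csv.DictReader(lines)
    # Skip rows where the frame-time column is missing or empty.
    frame_ms = [float(row[column]) for row in reader if row.get(column)]
    if not frame_ms:
        raise ValueError(f"no usable values in column {column!r}")
    total_ms = sum(frame_ms)
    return {
        "frames": len(frame_ms),
        # Frames divided by elapsed time, not a mean of per-frame FPS values.
        "avg_fps": 1000.0 * len(frame_ms) / total_ms,
        "max_frame_ms": max(frame_ms),
    }


# Made-up two-frame recording, for illustration only.
sample = io.StringIO(
    "Application,MsBetweenPresents\n"
    "witcher3.exe,20.0\n"
    "witcher3.exe,30.0\n"
)
print(summarize_frame_times(sample))
```

For a real recording, pass an open file object instead of the `StringIO` sample.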

Test System

We popped MSI's RX 480 Gaming 8G into our test bench. Its almost 190W maximum power consumption is most likely to demonstrate an improvement at the hands of Radeon Chill. The rest of our machine is as follows:


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


torka This is a wonderful advancement. One that makes me feel a lot better about having a GPU that would be considered overkill and a waste of power.

JackNaylorPE A lot of articles of late have missing legends, but I made the perhaps obvious assumption that on the Power Consumption graph, black is no Chill and red is Chill on.

Isn't this behavior normal? I notice when playing on my son's box and walking away from the game for a minute (twin 970s, 1440p), when I come back, the Temp and Power graphs on the LCD panel have taken a dive.

I love the idea, as this could help solve many heat problems such as the one in the post I just answered. So while I love the concept, I'd love to know: what is the impact?

I think the true test here would be to set up a few gamers and pick a quest:

a) Have 3 run thru the quest with no chill.

b) have 3 gamers run thru the test with chill.

During the test, have the PC plugged into a Kill A Watt meter and record the start and finish kWh.

If I sit down for a 3-4 hour W3 session, I'm only stopping for bathroom and snack breaks, and I'm not that old yet where if taking a bio before I start, I'm going to need another one :)

a) Say I do need a bio, or say FedEx arrives with a package, that's a 60 - 90 second afk.

b) No one really spends any time (I think) jumping and spinning.

I would expect that at the end of the tests, the results won't be much different.

Average of 189 watts over 175 minutes of playing time, 5 minutes of afk at 183 or staring at Vistas (no chill) = 189 x 175 + 5 x 183 = 188.83 average draw

Average of 188 watts over 175 minutes of playing time, 5 minutes of afk at 143 or staring at Vistas (chill) = 188 x 175 + 5 x 143 = 187.88

Assuming the usual 100 hour game playing time ....

1.05 watts x 100 hours x 60 minutes x $0.10 avg cost / (1000 watts per kw x 0.87 eff) = $0.72 in power cost savings,
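For anyone who wants to rerun this kind of estimate, here is a minimal Python sketch of the time-weighted average and the energy-cost conversion. It uses only the inputs quoted above (the wattages and minutes, 100 hours, $0.10 per kWh, 0.87 efficiency); the function names are hypothetical. It divides by total session minutes and converts watt-hours to kilowatt-hours directly, so its output can differ from the hand-worked figures.

```python
def weighted_average_watts(segments):
    """Time-weighted mean power for a list of (watts, minutes) pairs."""
    total_minutes = sum(minutes for _, minutes in segments)
    return sum(watts * minutes for watts, minutes in segments) / total_minutes


def energy_cost_usd(delta_watts, hours, usd_per_kwh, psu_efficiency):
    """Cost of a constant power difference, measured at the wall."""
    # Watts times hours gives watt-hours; divide by 1000 for kWh.
    kwh_at_wall = delta_watts * hours / 1000.0 / psu_efficiency
    return kwh_at_wall * usd_per_kwh


# 175 minutes of play plus 5 minutes away from the keyboard.
no_chill = weighted_average_watts([(189, 175), (183, 5)])
chill = weighted_average_watts([(188, 175), (143, 5)])
savings = energy_cost_usd(no_chill - chill, hours=100,
                          usd_per_kwh=0.10, psu_efficiency=0.87)
print(f"{no_chill:.2f} W vs {chill:.2f} W, "
      f"about ${savings:.2f} saved per 100 hours")
```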

Looking at the performance impact of the 3 conditions:

a) Performance-wise, there's a 33% hit on performance for a 22% drop in energy consumption when standing still, and for that I say "who cares", ya want fps for smooth movement and if ya ain't movin' ....

b) There's a 28% drop in performance for jumping and spinning for a 19% drop in energy consumption, which again is of no concern to me but may be bothersome for folks who like to pan around and admire vistas.

c) In actual normal play while traveling or fighting, it has no impact.

Not trying to minimize what AMD has done here, certainly commendable. But during normal active play over all 3 conditions, we see a 0.5% decrease in power over a 3 hour session for a 0.6% decrease in avg fps (*while running) when you really need it. OTOH, the ROI on the technology is negative ... the impact on performance is far greater, percentage wise, than what ya get back in power saved.

rwinches So those of us that use the built-in sliders for their intended purpose, to match our hardware to the game being played, are not "real gamers", especially since we purchased AMD GPUs that have "architectural disadvantages" and should have gone with nVidia.

Got it.

neblogai It is funny how biased the Toms reviewer is again. Instead of finding positives in this new tech, its possible uses (for example, to save battery life on laptops + WoW) and best case tests, almost every paragraph in the review has rants on how AMD is bad and not as good as nVidia :)

red77star I saved power and got better performance by buying Nvidia 1080 GTX. A simple solution...

JackNaylorPE I have to say that's the 1st time I ever saw anyone accuse Tom's of an AMD Bias .... just look at the "Best GFX card articles" over the last few years. You don't think it odd that a site that focuses on gaming would mention the fact that there's a huge difference in OC headroom? When two cards are in a certain performance / price niche and one OCs 25% and the other 6%, that shouldn't affect a potential buying decision?

This article read to me like he was trying real hard to say something good about AMD without making it subject to crushing and truthful responses.

Battery life ? during gaming ? Unless gaming is defined as starting the game and staring at the scenery w/o moving, what's the gain here ? If you actually play, with the 0.5% power savings in a typical session, your 90 minute **gaming battery life** just became 89.6 minutes. What exactly do you expect the author to rave about ?

If we are going to talk about bias ... and ignoring the fact that tests weren't done on a Mobile GPU or if the Mobile GPU wasn't already doing this, you will consume 143 watts with your 480 .... but the guy across the aisle on the train w/ his 1060 laptop is consuming 120 watts... and he gets to actually **play** the game. He can really play the game 20% longer than you could "stand still and stare at the scenery" with the 480.

While what AMD has done with this so far is commendable, the greater significance is perhaps they could expand the functionality on this as time goes on beyond AB's power sliders. As of now, it has to be said, its power-saving impact is limited to staring at the screen and ***not playing the game***.

Now if you are playing the game on a lappie and walk away from the screen to hit the bathroom or whatever ... are you going to leave the lappie unattended? ... aren't you going to close the lid, which will result in real power savings when the lappie sleeps while you're gone?

I love the fact that AMD has started looking for ways to reduce power consumption, I love that the 4xx series has cut power usage compared with 3xx ... but while complimenting them on "their improvements", the gap between the two is still very wide....and it has widened with the last generation.

In a story between rival football teams where the losing team lost 42 - 21 instead of the 42 - 10 last year, the author will likely compliment the losing team for their improved performance, but for the author not to note "who won the game" would be the author "not doing his job".

I read every article with the mindset "will this change anything" with regard to what I select or what I put in when I build for others. This doesn't change anything. Like most of today's news, especially in the political arena, the headline is misleading. The headline could have just as easily read:

AMD's new Chill Utility saves power as long as you don't Play the Game

It's accurate, more descriptive of what it does ... putting the brakes on doesn't really tell me how well it did. Reading it, they applied the brakes, the braking distance was better ... but let's be realistic, they still hit the deer.

My take on the article was a positive in that it clearly shows that AMD is concerned about this issue. They are working on it. OK, so it won't have much of an impact yet, but they did what they could with the resources they have. It's a start; as it matures, I expect we'll see user selectable settings whereby you can tell it not to exceed a certain power draw....or "I don't need 95 fps.... cut power as needed to keep me at 55-60 fps."

Now I'm curious (question for you Igor) if this didn't arise out of the 480 Fix where the reference 480s were exceeding their power limits. Of course, like with the fix, when you cut power, you cut performance. Right now it's a poor trade off with performance hits far exceeding the power savings reduction on a percentage basis. Hopefully over time it will get better.

Right now, when I do a 480 build, the PSU needs to be 100 watts bigger than a 1060 build. A user with marginal PC would then be in a position to buy a 480 now and set a safe power limit, until such time as he could afford a PSU upgrade. (Recommend the 1060 / 1070 / 1080 in higher end builds / below the $280 niche, it's all AMD) So yes, the technology has several possible upsides.

Technology isn't yet in a place where most of everything else is ... hope it never gets there in my lifetime. Today:

-Everybody gets a trophy

-No Dodgeball cause 1st player out might suffer a hit on his self esteem

-Moms are inserting themselves into negotiations for the offsprings 1st job after college, even attending job interviews

-Editorial content is dictated by a "stick to the positives" mandate from the advertising department.

If I could talk to nVidia / AMD on this topic, this is what I'd say

nVidia: You have a distinct advantage in power efficiency, it's real, don't get lazy and keep at it.

AMD: OK, this new technology won't have a real impact for most of us but will for those that like to "stop and smell the roses". We appreciate your attention to this issue and this technology appears to have the potential to have greater effects over time, so I will be watching for further developments. You are not going to "get a trophy", but we must commend you for the attention and effort. Keep going in this direction as well as in GPU / PCB design to further narrow the gap.

RedJaron What the . . . ? Are you people complaining Igor is bashing AMD actually serious? Did you bother to read the whole thing?

18977649 said: It is funny how biased the Toms reviewer is again. Instead of finding positives in this new tech, its possible uses (for example, to save battery life on laptops + WoW) and best case tests, almost every paragraph in the review has rants on how AMD is bad and not as good as nVidia :)

Okay, back up your claims. Show me actual examples from the article that say, much less imply, any of this. It explains what Chill is meant to do, a little about how it's supposed to do it (though it can't explain much since AMD hasn't revealed all the details), explains the testing methodology, and gives the results. The commentary on the results says the technology pretty much works as advertised, shows a few niggles where it will be limited, and overall says AMD did a good job on it. The only time NVidia is even mentioned is when talking about Ansel and saying NVidia's tech has the same limitation as Chill in that it only works on a limited number of games. That's not a negative, it's a fact about both methods.

18977931 said: This tech can't be any good. It doesn't have an Nvidia logo on it. Let's also test just one game, because reasons.

Could this article be any more lazy? Is Tom's THAT desperate to be first?

I don't understand how Tom's can have great articles like that UPS teardown and fix, then publish this garbage.

This crap about blasting others with completely false and unfounded accusations may work on so-called network news, but doesn't fly with people doing actual critical thinking. Either put up or shut up.

rwinches Here are links that provide proper review and info about the full largest SW release for Radeon.

https://www.techpowerup.com/reviews/AMD/Radeon_Crimson_ReLive_Drivers/5.html

http://www.pcworld.com/article/3147292/software-games/feature-stuffed-radeon-software-crimson-relive-debuts-amds-rivals-to-shadowplay-fraps.html

http://arstechnica.com/gadgets/2016/12/radeon-crimson-relive-driver-details-download/

http://www.digitaltrends.com/computing/amd-crimson-relive-edition-driver-suite-released/

http://www.guru3d.com/articles-pages/amd-radeon-crimson-relive-edition-driver-overview,5.html