Core i7-4770K: Haswell's Performance, Previewed

A recent trip got us access to an early sample of Intel’s upcoming Core i7-4770K. We compare its performance to Ivy Bridge- and Sandy Bridge-based processors, so you have some idea what to expect when Intel officially introduces its Haswell architecture.

Results: Sandra 2013

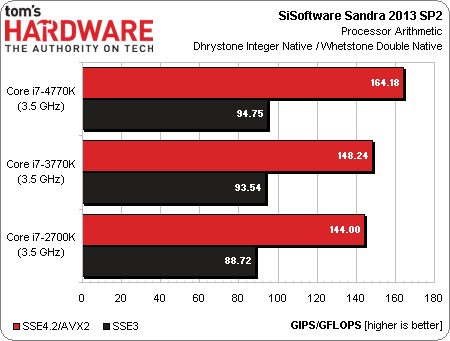

Although Dhrystone isn’t necessarily applicable to real-world performance, a lack of software already optimized for AVX2 means we need to turn to SiSoftware’s diagnostic for an idea of how Haswell’s support for the instruction set might affect general integer performance in properly optimized software.

The Whetstone module employs SSE3, so Haswell’s improvements over Ivy Bridge are far more incremental.

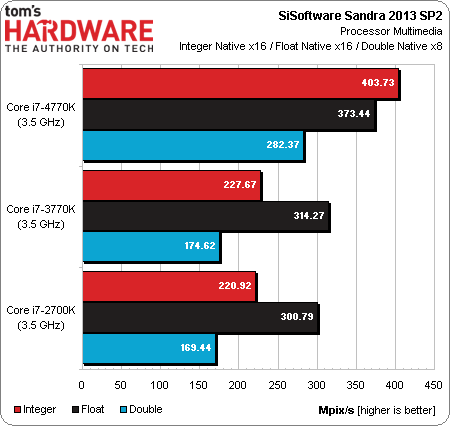

Sandra’s Multimedia benchmark generates an image of the Mandelbrot Set fractal using 255 iterations for each pixel, representing vectorised code that runs as close to perfectly parallel as possible.
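To make the workload concrete, here is a minimal Python sketch of an escape-time Mandelbrot calculation with a 255-iteration cap per pixel. It is not Sandra's code; the function names, the viewing window, and the escape radius of 2 are our own illustrative assumptions.

```python
MAX_ITER = 255  # iteration cap per pixel, matching the figure quoted in the text


def escape_count(c: complex, max_iter: int = MAX_ITER) -> int:
    """Count iterations of z = z*z + c before |z| exceeds 2 (capped at max_iter)."""
    z = 0j
    for i in range(max_iter):
        if abs(z) > 2.0:
            return i
        z = z * z + c  # one multiply followed by one add per step
    return max_iter


def render(width: int, height: int) -> list:
    """Compute an escape count for every pixel of a width x height image.

    The window [-2, 1] x [-1, 1] is an assumption chosen for illustration.
    Requires width >= 2 and height >= 2.
    """
    rows = []
    for y in range(height):
        im = -1.0 + 2.0 * y / (height - 1)
        row = []
        for x in range(width):
            re = -2.0 + 3.0 * x / (width - 1)
            row.append(escape_count(complex(re, im)))
        rows.append(row)
    return rows
```

No pixel depends on any other, which is why this kind of loop maps so cleanly onto wide vector units and multiple cores. The multiply-then-add in the inner step is also the pattern that fused multiply-add instructions target.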

The integer test employs the AVX2 instruction set on Intel’s Haswell-based Core i7-4770K, while the Ivy and Sandy Bridge-based processors are limited to AVX support. As you see in the red bar, the task is finished much faster on Haswell. The speed-up is close to, but not quite, 2x.

Floating-point performance also enjoys a significant speed-up from Intel’s first implementation of FMA3 (AMD’s Bulldozer design supports FMA4, while Piledriver supports both the three- and four-operand versions). The Ivy and Sandy Bridge-based processors utilize AVX-optimized code paths, falling quite a bit behind at the same clock rate.

Why do doubles seem to speed up so much more than floats on Haswell? The code path for FMA3 is actually latency-bound. If we turn off FMA3 support altogether in Sandra’s options and use AVX instead, the scaling proves similar.

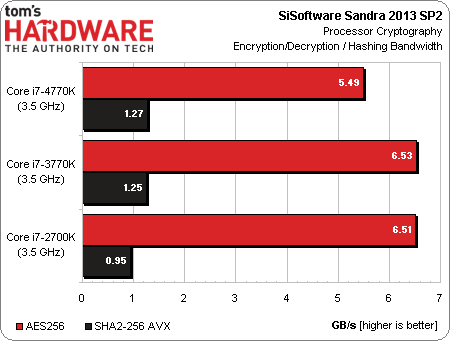

All three of these chips feature AES-NI support, and we know from past reviews that because Sandra runs entirely in hardware, our platforms are processing instructions as fast as they’re sent from memory. The Core i7-4770K’s slight disadvantage in our AES256 test is indicative of slightly less throughput—something I’m comfortable chalking up to the early status of our test system.

Meanwhile, SHA2-256 performance is all about each core’s compute performance. So, the IPC improvements that go into Haswell help propel it ahead of Ivy Bridge, which is in turn faster than Sandy Bridge.

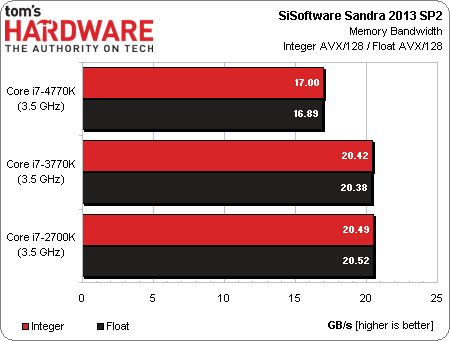

The memory bandwidth module confirms our findings in the Cryptography benchmark. All three platforms are running 1,600 MT/s data rates; the Haswell-based machine just looks like it needs a little tuning.
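As a rough sanity check on what a 1,600 MT/s data rate allows, the theoretical peak can be worked out from the transfer rate, the bus width, and the channel count. The sketch below assumes a dual-channel configuration with 64-bit (8-byte) channels, which the text does not state; the function name is ours.

```python
def peak_dram_bandwidth_gbs(mega_transfers_per_s: float,
                            channels: int = 2,
                            bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 1e9 bytes).

    channels=2 and bus_bytes=8 are assumptions (dual-channel, 64-bit DDR3).
    """
    bytes_per_second = mega_transfers_per_s * 1e6 * bus_bytes * channels
    return bytes_per_second / 1e9


if __name__ == "__main__":
    print(f"{peak_dram_bandwidth_gbs(1600):.1f} GB/s")
```

Under those assumptions the ceiling is 25.6 GB/s for all three platforms, so any gap between them in the chart reflects how efficiently each one approaches the same limit.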

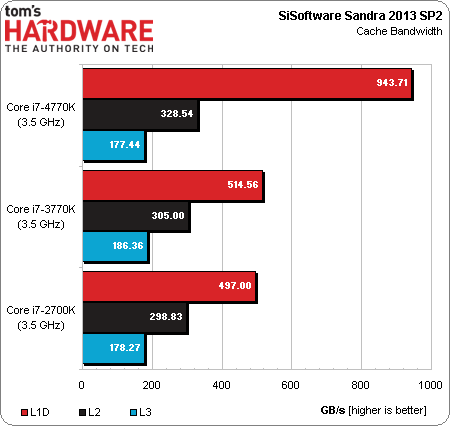

We already know that Intel optimized Haswell’s memory hierarchy for performance, based on information discussed at last year’s IDF. As expected, Sandra’s cache bandwidth test shows an almost-doubling of performance from the 32 KB L1 data cache.

Gains from the L2 cache are actually a lot lower than we’d expect though; we thought that number would be close to 2x as well, given 64 bytes/cycle throughput (theoretically, the L2 should be capable of more than 900 GB/s). The L3 cache actually drops back a bit, which could be related to its separate clock domain.
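The theoretical figure in parentheses can be reproduced with simple arithmetic: bytes per cycle, times clock rate, times core count. The sketch below assumes four cores and the Core i7-4770K's 3.5 GHz base and 3.9 GHz maximum Turbo Boost clocks. None of these are stated in this section, and an early sample may have run at other speeds.

```python
def peak_cache_bandwidth_gbs(bytes_per_cycle: int,
                             clock_ghz: float,
                             cores: int) -> float:
    """Aggregate theoretical cache bandwidth in GB/s across all cores."""
    # bytes/cycle * (1e9 cycles/s per GHz) / (1e9 bytes per GB) cancels out
    return bytes_per_cycle * clock_ghz * cores


if __name__ == "__main__":
    for clock in (3.5, 3.9):  # assumed base and turbo clocks
        total = peak_cache_bandwidth_gbs(64, clock, cores=4)
        print(f"{clock} GHz: {total:.1f} GB/s")
```

At 3.5 GHz the result is 896 GB/s, and at 3.9 GHz it is roughly 998 GB/s, so a figure above 900 GB/s implies clocks at least slightly higher than base.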

It still isn’t clear whether something’s up with our engineering sample CPU, or if there’s still work to be done on the testing side. Either way, this is a pre-production chip, so we aren’t jumping to any conclusions.


twelve25 Obviously, with AMD struggling, Intel has no need to really stretch here. This is another simple incremental upgrade. Good jump from socket 1156, but I doubt many 1155 owners will feel the need to buy a new motherboard for this.

EzioAs Thanks for the preview!

So all of these results are what most people expected already: minimal increase in CPU performance while the iGPU shows significant increase? I'm not surprised really (and I believe most people have speculated this), since Haswell mostly targets the mobile segment.

@twelve25

In my opinion though, unless LGA1156 i5/i7 users really want to upgrade (native USB 3.0, more SATA 3, etc.), they can still hold out with their current CPUs. Upgrading to Haswell rather than IB does make much more sense if they really want to, but there's also the reported USB 3.0 bug, and we haven't seen the thermals and overclocking capability on this chip, so it might actually be a turn-off for some people. And yeah, I don't think many SB or IB users will upgrade to Haswell.

dagamer34 @twelve25 But who does Intel really need to convince here? Trying to chase after people who upgrade every year is a fool's errand because it's such a small piece of the pie compared to the overall larger market. Besides, most of Intel's resources are clearly going towards making mobile chips better, where their energy really needs to be anyway.

dagamer34 To add to EzioAs's point, I don't see most people on SB/IVB systems upgrading until Intel makes chips that have a good 10-15% better performance than 4.2-4.5 GHz SB/IVB systems, or they decide to go down the APU route like AMD is (and also find/create workloads in which an APU would beat those systems). In other words, not for another 2+ years.

Adroid (quoting killerchickens: "Does Haswell run hot as Ivy Bridge?") That = the million dollar question. Did they do away with the bird poop and return to fluxless solder?

Intel should stop throwing insults at the overclocking crowd. We will pay another $10 for the fluxless solder.

mayankleoboy1 @ Chris Angelini: Man, you are amazing for this preview! +1 to Toms.

There is no surprise at Intel excluding TSX from the unlocked K parts. They removed VT-d in the SB/IB K parts too, just so that people don't use the $300 chip in servers but have to buy the $2000 chip.

Intel are fucked up

I don't think Intel will be too happy with Toms for this preview...

sixdegree Good preview. I kinda hoped that Toms includes the power consumption figure for Haswell. It's the biggest selling point of Haswell, after all.

mayankleoboy1 (quoting sixdegree: "Good preview. I kinda hoped that Toms includes the power consumption figure for Haswell. It's the biggest selling point of Haswell, after all.")

Power consumption depends a lot on BIOS optimizations, which are far from final.