AMD FX-8350 Review: Does Piledriver Fix Bulldozer's Flaws?

Last year, AMD launched its Bulldozer architecture to disappointed enthusiasts who were hoping to see the company rise to its former glory. Today, we get an FX processor based on the Piledriver update. Does it give power users something to celebrate?

The Piledriver Architecture: Improving On Bulldozer

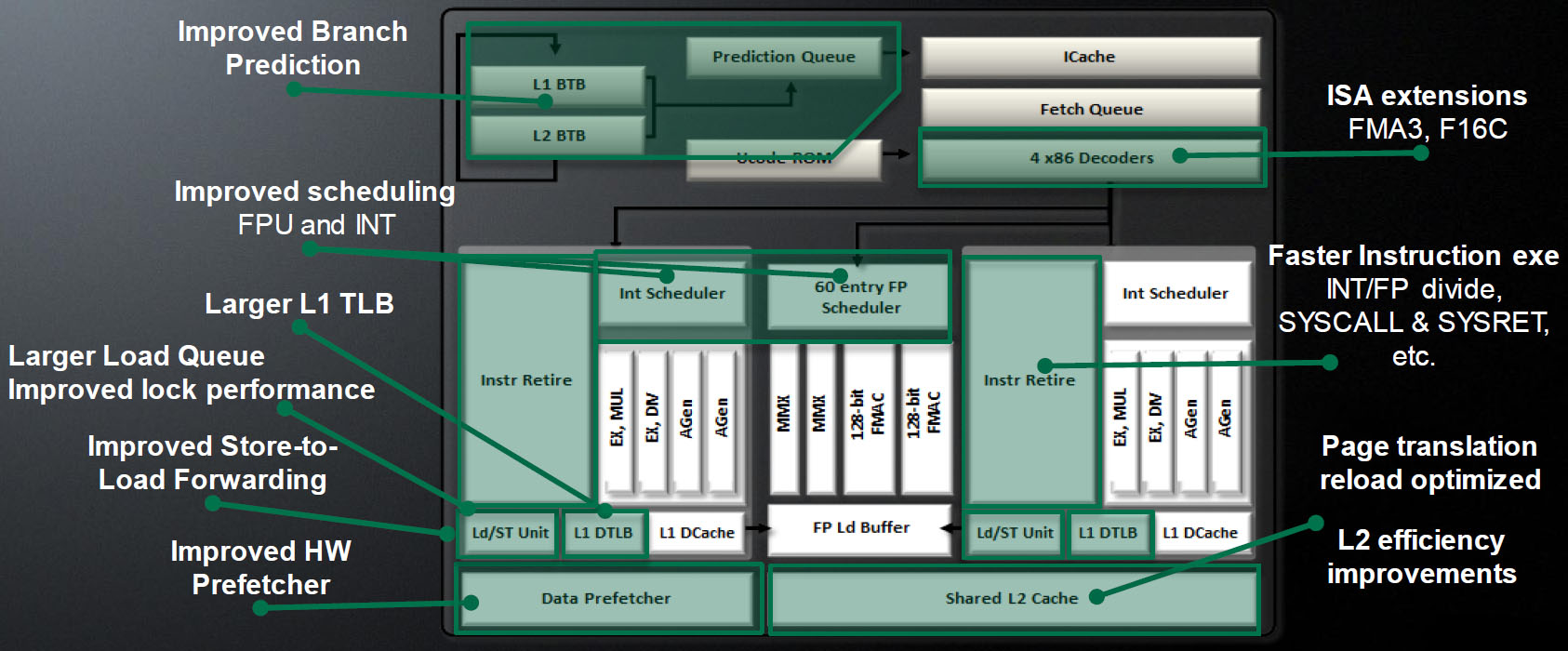

The very foundation of AMD’s current x86 architecture was covered in great depth back when I reviewed the FX-8150 (AMD Bulldozer Review: FX-8150 Gets Tested). All of those tenets carry over to the company’s Piledriver update. However, we know that AMD’s engineers learned a number of lessons as they took the original Bulldozer concept from theories and diagrams to actual silicon. We also know that process technology evolved over the last year, even if the company continues to use a 32 nm node for manufacturing its Vishera-based CPUs. It should come as no surprise, then, that today’s reformulation is the result of several tweaks flagged for improvement a long time ago.

Front-End Improvements

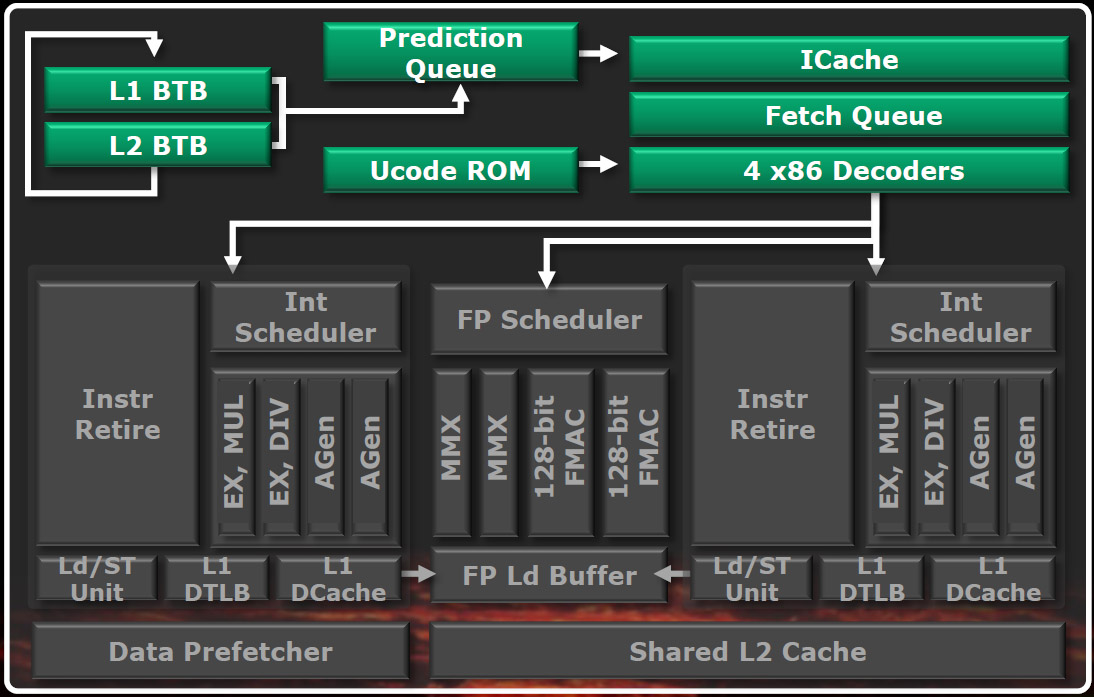

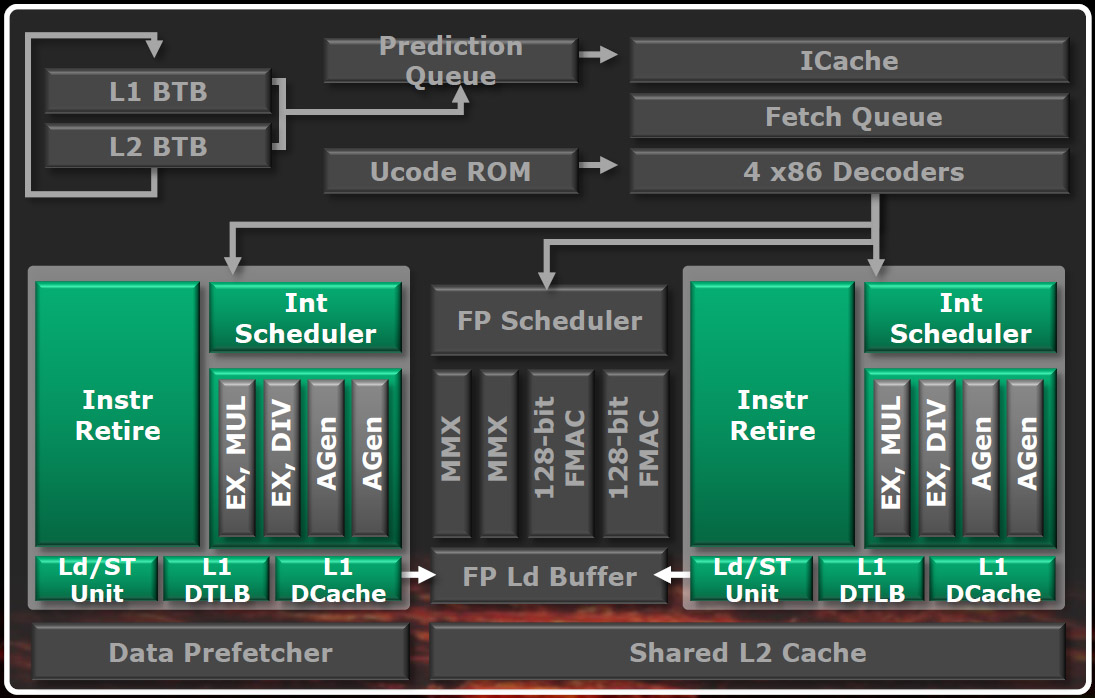

In the days that followed AMD’s Bulldozer introduction, branch prediction was suggested as one of the architecture’s possible weaknesses. The module concept involves certain shared resources feeding two execution threads, and the architects attempted to minimize bottlenecks in the front-end by implementing one prediction queue per thread behind a 512-entry L1 and 5000-entry L2 branch target buffer. For Piledriver, the company claims the accuracy of its predictor is better.

Piledriver adds support for a couple of ISA extensions that we first discussed in our coverage of the mobile Trinity-based APUs. Fused multiply-add was introduced a year ago in Bulldozer. That specific version was called FMA4, though, and allowed an instruction to have four operands. But Intel only plans to support a simpler three-operand FMA3 instruction set in its upcoming Haswell architecture, so AMD preempts that addition with Piledriver. The other extension, F16C, enables converting up to four half-precision values to single-precision floating-point (or back) at a time. Intel’s Ivy Bridge architecture already includes this, so its implementation on Piledriver simply catches AMD up. Not that Bulldozer was suffering without FMA3/F16C; compiler support was only added in Visual Studio 2012.
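To make the two extensions concrete, here is a minimal Python sketch. It is a numerical model, not AMD's or Intel's implementation: `fma_single_rounding`, `mul_then_add`, and `half_round_trip` are hypothetical helper names, exact rational arithmetic stands in for the hardware's single rounding step, and the standard library's half-precision `e` format stands in for the F16C conversions. FMA3 and FMA4 would produce the same numeric result; they differ only in whether the destination is a separate fourth operand or overwrites one of the three sources.

```python
import struct
from fractions import Fraction


def fma_single_rounding(a: float, b: float, c: float) -> float:
    """Model a fused multiply-add: a*b + c computed exactly, rounded once."""
    exact = Fraction(a) * Fraction(b) + Fraction(c)
    return float(exact)  # the only rounding step


def mul_then_add(a: float, b: float, c: float) -> float:
    """Separate multiply and add: the product is rounded before the add."""
    return a * b + c


def half_round_trip(values):
    """Narrow floats to IEEE 754 half precision, then widen them again.

    Returns the widened values and the packed size in bytes.
    """
    fmt = f"<{len(values)}e"  # 'e' is the 16-bit half-precision format
    packed = struct.pack(fmt, *values)
    return list(struct.unpack(fmt, packed)), len(packed)


# Inputs chosen so the exact product (1 - 2**-60) cannot fit in a double.
a = 1.0 + 2.0 ** -30
b = 1.0 - 2.0 ** -30
c = -1.0

print(mul_then_add(a, b, c))         # 0.0: the product was rounded to 1.0 first
print(fma_single_rounding(a, b, c))  # -2**-60: the low bits survive

# Four values that are exactly representable in half precision.
widened, size = half_round_trip([1.0, 0.5, -2.0, 65504.0])
print(widened, size)                 # four values packed into 8 bytes
```

The first pair of results shows why a fused operation can be more accurate than a multiply followed by an add, and the last line shows four half-precision values occupying eight bytes, which is the storage saving F16C is meant to exploit.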

Inside The Integer Cluster

The two integer clusters in each compute module feature an out-of-order load/store unit capable of two 128-bit loads/cycle or one 128-bit store/cycle. AMD discovered that there were certain cases where Bulldozer wouldn’t catch store data already in a register file. Rectifying this allows instructions to be fed into the integer clusters more quickly.

Within each integer core, we’re still dealing with two execution units and two address generation units (referred to simply as AGens). Those AGens are more capable this time around in that they’re able to perform MOV instructions. When AGen activity is light, the architecture will shift MOVs over to those pipes.

One of the most notable changes is a larger translation lookaside buffer for the L1 data cache, which grows from 32 entries to 64. Because the L2 TLB has a fairly high 20-cycle latency, improving the hit rate in L1 can yield significant performance gains in workloads that touch large data structures. This is particularly important in the server space, but AMD’s architects say they noticed certain games demonstrating sensitivity to this too, which isn’t something they had expected.
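A rough way to see why doubling the L1 TLB matters is to simulate one. The sketch below is illustrative only: it assumes 4 KB pages, a fully associative TLB with least-recently-used replacement, and a workload that walks its pages in a loop. None of those details come from AMD, and `tlb_hit_rate`, `cyclic_trace`, and `tlb_reach_bytes` are hypothetical names.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # bytes; assumes 4 KB pages


def tlb_reach_bytes(entries: int) -> int:
    """Amount of memory the TLB can map at once."""
    return entries * PAGE_SIZE


def cyclic_trace(pages: int, passes: int):
    """Touch `pages` distinct pages in order, `passes` times over."""
    return [page for _ in range(passes) for page in range(pages)]


def tlb_hit_rate(entries: int, page_trace) -> float:
    """Hit rate of a fully associative LRU TLB over a trace of page numbers."""
    tlb = OrderedDict()  # keys are page numbers, ordered oldest to newest
    hits = 0
    total = 0
    for page in page_trace:
        total += 1
        if page in tlb:
            hits += 1
            tlb.move_to_end(page)        # mark as most recently used
        else:
            if len(tlb) >= entries:
                tlb.popitem(last=False)  # evict the least recently used page
            tlb[page] = True
    return hits / total if total else 0.0


# A 48-page working set (192 KB) looped 100 times.
trace = cyclic_trace(pages=48, passes=100)
for entries in (32, 64):
    reach_kb = tlb_reach_bytes(entries) // 1024
    print(entries, "entries:", reach_kb, "KB reach, hit rate",
          tlb_hit_rate(entries, trace))
```

Under these assumptions the 32-entry TLB covers 128 KB and misses on every access, because the loop always evicts a page just before it is needed again, while the 64-entry TLB covers 256 KB and misses only on the first pass. Real workloads are far less regular, but each miss avoided is a trip to the 20-cycle L2 TLB that doesn't happen.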

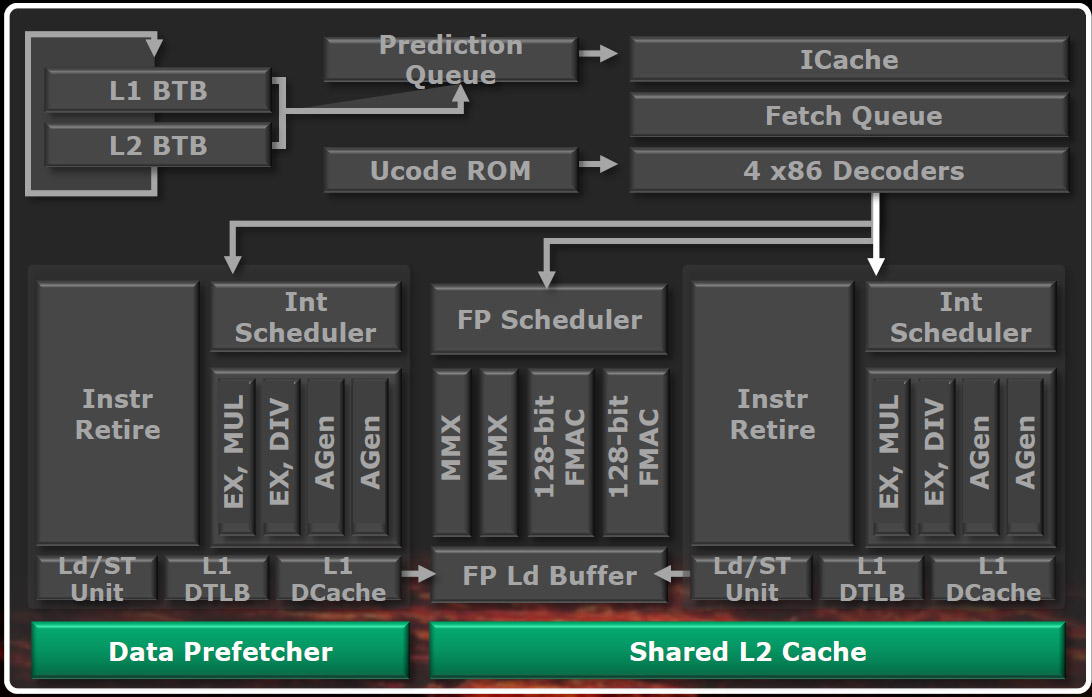

L2 Cache Optimizations

Hardware prefetching into the L2 is improved as well. Minimum latency doesn’t change, which is why cache latency doesn’t look any better in our Sandra 2013 benchmark. However, as the prefetcher and L2 are used more effectively, average latency (much more difficult to measure with a diagnostic) should be expected to drop, AMD claims. The same Sandra 2013 module also reflects very little change in L3 latency, and Vishera’s architects confirm that no changes were made to the L3 cache shared by all modules on an FX package.
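The distinction between minimum and average latency can be sketched with a simple weighted average. The cycle counts and hit rates below are placeholders picked for round arithmetic, not measurements of Vishera, and `average_latency` is a hypothetical helper.

```python
def average_latency(hit_rate: float, hit_cycles: float, miss_cycles: float) -> float:
    """Expected access latency, in cycles, for one cache level.

    hit_cycles is the minimum latency a diagnostic would report;
    miss_cycles is the cost of going to the next level instead.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * hit_cycles + (1.0 - hit_rate) * miss_cycles


# Placeholder numbers: the minimum (hit) latency is identical in both cases.
HIT_CYCLES = 20.0
MISS_CYCLES = 60.0

before = average_latency(0.80, HIT_CYCLES, MISS_CYCLES)
after = average_latency(0.90, HIT_CYCLES, MISS_CYCLES)
print(round(before, 2), round(after, 2))
```

With these made-up inputs, a prefetcher that lifts the hit rate from 80% to 90% cuts the average from roughly 28 cycles to roughly 24, even though a latency test that only ever hits the cache would report the same 20 cycles both times.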

Putting It All Together: Five Architectures At 4 GHz

What effect do all of those adjustments have on Piledriver's per-cycle performance? We ran five different architectures at 4 GHz to compare their relative results.

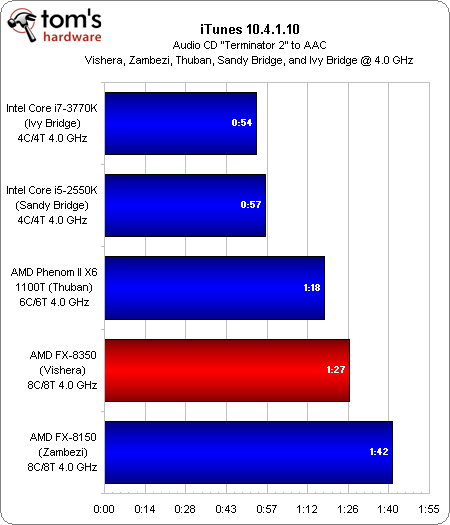

In iTunes, which we know to be single-threaded, the FX-8350 demonstrates significant gains over the Bulldozer-based FX-8150. But a Phenom II X6 1100T operating at the same frequency is still faster. And that's before we look at the Sandy and Ivy Bridge architectures, which jump way out in front of anything from AMD.

Notice that the Core i7 is listed as a quad-core CPU capable of addressing four threads. I disabled Hyper-Threading in this test to isolate core performance. Had it been turned on, Intel's client flagship would have likely finished in first place.

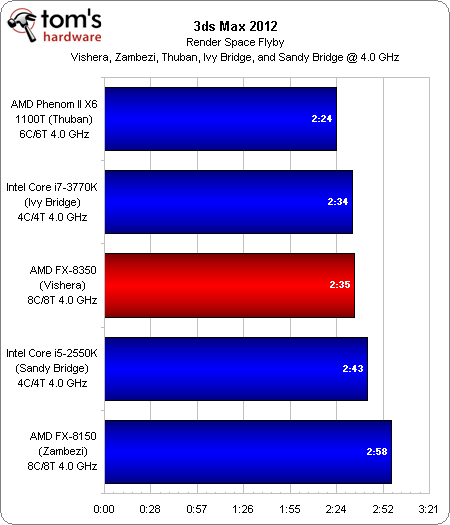

Nevertheless, we're most interested in the gain realized by shifting from FX-8150 to FX-8350, and it is significant. Again, though, Thuban's six cores manage to outmaneuver Vishera's quad-module configuration. AMD is using a clock rate advantage to keep this latest architecture in front of its older design. Thuban really doesn't want to run at such high frequencies, even as it's able to get more done per cycle.
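The trade-off between per-cycle throughput and frequency comes down to simple arithmetic. The sketch below treats performance as proportional to work per cycle times clock rate, which is a simplification, and it assumes stock base clocks of 3.3 GHz for the Phenom II X6 1100T and 4.0 GHz for the FX-8350. Those clocks are not quoted on this page, and both function names are hypothetical.

```python
def relative_throughput(ipc_ratio: float, clock_a_ghz: float, clock_b_ghz: float) -> float:
    """Throughput of chip A relative to chip B.

    ipc_ratio is A's work per cycle divided by B's work per cycle.
    """
    return ipc_ratio * clock_a_ghz / clock_b_ghz


def break_even_ipc_ratio(clock_a_ghz: float, clock_b_ghz: float) -> float:
    """Per-cycle advantage chip A needs to match chip B at the given clocks."""
    return clock_b_ghz / clock_a_ghz


# Assumed stock base clocks (not taken from this page).
THUBAN_GHZ = 3.3
VISHERA_GHZ = 4.0

needed = break_even_ipc_ratio(THUBAN_GHZ, VISHERA_GHZ)
print(f"Thuban needs about {needed:.2f}x the per-cycle throughput to keep pace")

# At matched clocks, as in the 4 GHz tests, only the per-cycle ratio matters.
print(relative_throughput(1.0, 4.0, 4.0))
```

Under those assumptions, the older design would have to get about 21% more done per cycle to match the newer one at stock speeds, which is why a per-cycle lead at a matched 4 GHz doesn't automatically translate into a win at shipping frequencies.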


amuffin Looks like AMD did pretty well with the 8350.

I now really don't see people purchasing it though....people will be buying the 8320.

-

kracker Interesting, nice improvement over BD. It spars very closely with or sometimes beats the i5-3570K. It really can't compete with Intel's high end, but nevertheless good job AMD!

-

sixdegree AMD is doing good with the pricing this time. This is what AMD should be: aggressively priced CPU with added features.

-

esrever The price is actually nice this time. Hopefully AMD sticks around and gives good deals like this for years to come.

-

Nice job AMD. It just kept itself afloat! Not performance killer, but good enough to get a chunk of desktop sales just in time for the holiday season. Probably wouldn't buy it over an Intel system because most apps are still quite single threaded, but I would certainly consider it. Welcome back to the race AMD. Keep up the good work!

-

najirion so... AMD will still keep my local electric provider happy. Good job AMD, but I think FM2 APUs are more promising, given that APUs alone can win against Intel processors if discrete graphics is not involved. Perhaps AMD should focus on their APU line, like integrating better GPUs in those APUs that will allow dual 7xxx graphics and not just dual 6xxx hybrid graphics. The entire FX architecture seems to have an issue with its high power consumption and poor single-thread performance. Better move on AMD...

-

dscudella I would have liked to see more Intel offerings in the benchmarks. Say an i3-2120 & i3-3220 for comparison's sake, as they'll be cheaper than the new Piledrivers.

If more games / daily use apps start using more cores these new AMDs could really take off.

-

EzioAs Interesting. Probably not a gamer's first choice, but for users who regularly use multi-threaded programs, the 8350 should be very compelling. About $30 cheaper than a 3570K and can be overclocked as well, so video/photo editors should really consider this. It doesn't beat current Intel CPUs in power efficiency but at least it's significantly more efficient than Bulldozer.

Thanks for the review.

Btw Chris, how many cups of joe did you have to take for the overclocking testing? ;)

-

sorry just not overly impressed.

5-12% performance increase 12% less power - sound familiar?

the only difference this time was less hype before the release. (lesson well learned AMD!)

-

gorz I think the fx-4300 is going to be the new recommended budget gaming processor. Good price that is only going to get lower, and it has overclocking.