Benchmarking GeForce GTX Titan 6 GB: Fast, Quiet, Consistent

We've already covered the features of Nvidia's GeForce GTX Titan, the $1,000 GK110-powered beast set to exist alongside GeForce GTX 690. Now it's time to benchmark the board in one-, two-, and three-way SLI. Is it better than four GK104s working together?

GK110 Steps Out: General-Purpose Compute

One of our biggest complaints about the GeForce GTX 680 was its compute performance. The GK104 GPU was designed with gaming in mind, which is why each of its SMX blocks sports only eight FP64-capable units. GK110, by contrast, gets 64 FP64 CUDA cores per SMX, totaling 896 across the GPU.
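
To make that arithmetic concrete, here is a minimal sketch of the unit counts. The per-SMX FP64 figures come from the paragraph above; the SMX counts (8 on GK104, 14 active on Titan's GK110) and the 192 FP32 cores per SMX are assumptions taken from Nvidia's published Kepler specifications, not from this page.

```python
# Assumed from Nvidia's Kepler specs, not stated on this page.
FP32_CORES_PER_SMX = 192
GK104_SMX = 8
GK110_TITAN_SMX = 14


def fp64_units(smx_count: int, fp64_per_smx: int) -> int:
    """Total FP64-capable units across the GPU."""
    return smx_count * fp64_per_smx


def fp32_to_fp64_ratio(fp64_per_smx: int) -> int:
    """How many FP32 cores exist for every FP64 unit in one SMX."""
    return FP32_CORES_PER_SMX // fp64_per_smx


gk104_total = fp64_units(GK104_SMX, 8)         # 8 FP64 units per SMX (from the text)
gk110_total = fp64_units(GK110_TITAN_SMX, 64)  # 64 FP64 units per SMX (from the text)

print(f"GK104: {gk104_total} FP64 units, 1/{fp32_to_fp64_ratio(8)} of FP32 units")
print(f"GK110: {gk110_total} FP64 units, 1/{fp32_to_fp64_ratio(64)} of FP32 units")
```

Under those assumptions, the 896 figure quoted above is simply 14 × 64.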

As we know, though, Nvidia runs those units at 1/8 of the clock rate by default, not to be nefarious, but to create more thermal headroom for higher clock rates. That’s why, if you want the card’s full compute potential, you need to toggle a driver switch. Doing this, in my experience so far, basically disables GPU Boost, limiting your games to the card’s base clock rate.

SiSoftware Sandra 2013

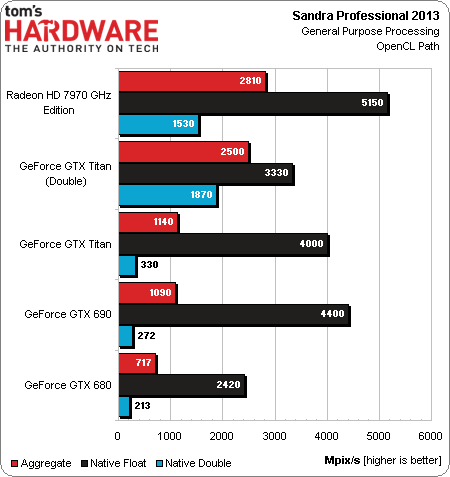

So, let’s start by looking at SiSoftware’s Sandra 2013 and its GP Processing module, which uses OpenCL.

AMD’s Radeon HD 7970 GHz Edition kicks out killer FP32 numbers. But let’s ignore that for a second and compare the Titan at its default setting to the double-precision mode enabled in Nvidia’s driver. Notice that FP32 performance goes down, corresponding to a pretty significant loss of clock rate. However, double-precision performance shoots up to 1,870 Mpix/s.

Let’s convert those numbers to operations. Assuming ~11 instructions per iteration of the Mandelbrot set that Sandra uses, the GeForce GTX Titan achieves about 1.14 TFLOPS of FP64 performance. Radeon HD 7970 GHz Edition hits 934 GFLOPS.
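
The conversion can be sketched as below. Sandra reports pixels per second, so turning that into FLOPS needs both the instructions per iteration (~11, from the text) and the number of Mandelbrot iterations per pixel, which this page does not state. The sketch therefore back-derives the iteration count implied by the two published numbers; treat that value as an inference from the article's figures, not as a documented Sandra parameter.

```python
INSTR_PER_ITER = 11  # approximate figure quoted in the text


def flops_from_mpix(mpix_per_s: float, iters_per_pixel: float,
                    instr_per_iter: float = INSTR_PER_ITER) -> float:
    """Estimated operations per second for a Mandelbrot throughput result."""
    return mpix_per_s * 1e6 * iters_per_pixel * instr_per_iter


def implied_iters_per_pixel(flops: float, mpix_per_s: float,
                            instr_per_iter: float = INSTR_PER_ITER) -> float:
    """Iterations per pixel that would reconcile a FLOPS figure with Mpix/s."""
    return flops / (mpix_per_s * 1e6 * instr_per_iter)


# Titan in double-precision mode: 1,870 Mpix/s and about 1.14 TFLOPS.
titan_iters = implied_iters_per_pixel(1.14e12, 1870)
print(f"Implied iterations per pixel: {titan_iters:.1f}")

# Round trip: the implied count reproduces the quoted TFLOPS figure.
print(f"{flops_from_mpix(1870, titan_iters) / 1e12:.2f} TFLOPS")
```

The same helper can be applied to any other card's Mpix/s result, provided the iteration count is held constant across cards.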

Realistically, those figures will matter on the desktop mainly to folks running Wolfram Mathematica or some other software package that needs a lot of precision. FP32 is much more prevalent. Our Sandra GP test shows AMD with a compelling lead there, achieving 3.14 TFLOPS in the native float component. Nvidia’s best result comes from the GeForce GTX 690, which manages 2.68 TFLOPS.

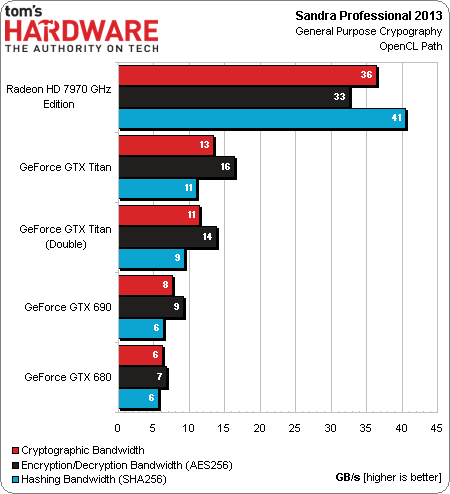

AMD is unmatched in the Cryptography module, which employs OpenCL.

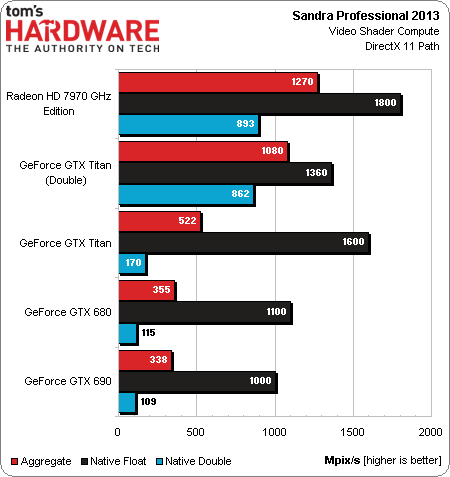

The Video Shader sub-test employs Shader Model 5.0 to generate Mandelbrot set fractals using 32- and 64-bit precision. AMD scores a victory in both disciplines, yielding an aggregate win. However, GeForce GTX Titan comes very close to the Tahiti-based card’s double-precision performance once we flip the switch in Nvidia’s driver, even though this hurts its native float result.

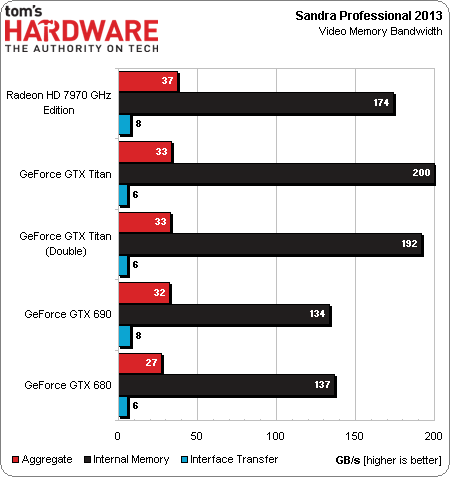

GeForce GTX Titan and Radeon HD 7970 GHz Edition offer identical theoretical memory bandwidth, though Nvidia’s card seems to realize a bit more of it. Conversely, Nvidia disables PCI Express 3.0 signaling on Sandy Bridge-E-based platforms, while AMD does not. That’s why you see the Radeon HD 7970 GHz Edition pushing higher interface transfer speeds. Nvidia maintains this is due to an issue with Intel’s PCI Express controller that can cause stuttering in multi-card configurations.

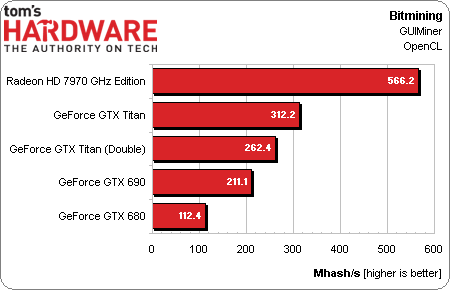

Bitmining

Our GUIMiner-based bitcoin mining test doesn’t require stellar floating-point performance, but rather tends to map well to the General Purpose Cryptography results we saw from Sandra. AMD’s hashing throughput is far and away superior to Nvidia’s, and that outcome is reflected in the Mhash/s measurement from GUIMiner. GeForce GTX Titan nearly triples the 680’s result, but still can’t come close to what a Radeon HD 7970 GHz Edition can do.
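
To illustrate why this workload is integer hashing rather than floating-point math, here is a rough CPU-only sketch of what an Mhash/s figure measures: double SHA-256 over an 80-byte block header while the 4-byte nonce changes. It is not GUIMiner's OpenCL kernel, the all-zero header is a placeholder rather than real block data, and `measure_mhash_per_s` is a hypothetical helper.

```python
import hashlib
import time


def double_sha256(data: bytes) -> bytes:
    """Bitcoin's proof-of-work hash: SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def measure_mhash_per_s(n_hashes: int = 50_000) -> float:
    """Hash a placeholder header n_hashes times and report millions of hashes/s."""
    header = bytearray(80)  # placeholder: a real header holds version, hashes, time, bits
    start = time.perf_counter()
    for nonce in range(n_hashes):
        header[76:80] = nonce.to_bytes(4, "little")  # nonce occupies the last 4 bytes
        double_sha256(bytes(header))
    elapsed = time.perf_counter() - start
    return n_hashes / elapsed / 1e6


if __name__ == "__main__":
    print(f"{measure_mhash_per_s():.3f} Mhash/s on this CPU (pure Python loop)")
```

A single interpreter thread will land orders of magnitude below any of the GPUs charted here; the point is only to show the shape of the work being counted.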

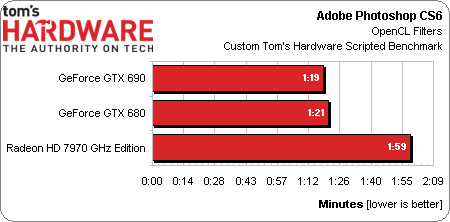

Photoshop CS6

Unfortunately, from there, our OpenCL-based testing started falling apart.

We use a scripted Photoshop CS6 test that runs through the software’s OpenCL-accelerated filters. Both the GeForce GTX 680 and 690 perform really well in this test, besting AMD’s Radeon HD 7970 GHz Edition by a notable margin. However, GeForce GTX Titan gets part of the way through and then crashes Photoshop with an error message that the graphics driver encountered a problem.

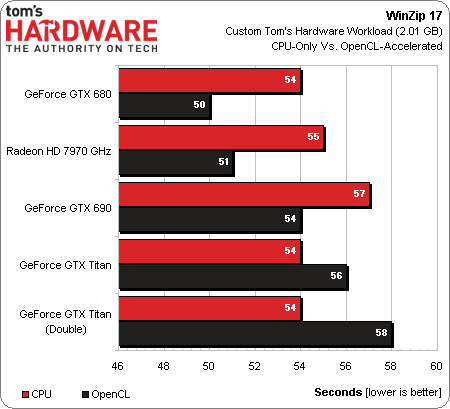

WinZip 17

Our WinZip 17-based benchmark, which involves compressing the same 2.1 GB folder full of files to a RAM drive, does complete on GeForce GTX Titan. However, enabling OpenCL actually slows down the test. On all other cards, OpenCL acceleration shaves off a few seconds.

Potential gains in WinZip are quite a bit higher than what this test shows. The app only benefits from GPU acceleration when files larger than 8 MB are compressed, so mixed folders of smaller data don’t enjoy as much of a speed-up. We see enough of a performance boost from the other cards to know something is definitely wrong on the Titan card, though.
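
As a rough way to gauge how much of a given folder could benefit, the sketch below computes the share of bytes sitting in files above the 8 MB threshold mentioned above. It assumes the threshold is a simple per-file size check and that "8 MB" means 8 × 1024 × 1024 bytes; WinZip's actual selection logic is not documented here, and both helper names are hypothetical.

```python
import os

MB = 1024 * 1024
GPU_THRESHOLD_BYTES = 8 * MB  # threshold from the text; binary megabytes assumed


def folder_file_sizes(root: str) -> list:
    """Sizes in bytes of every regular file under root."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            sizes.append(os.path.getsize(os.path.join(dirpath, name)))
    return sizes


def gpu_eligible_share(sizes) -> float:
    """Fraction of total bytes held in files larger than the threshold."""
    sizes = list(sizes)
    total = sum(sizes)
    if total == 0:
        return 0.0
    eligible = sum(size for size in sizes if size > GPU_THRESHOLD_BYTES)
    return eligible / total


# Example with made-up file sizes: two small files, two large ones.
example_sizes = [1 * MB, 4 * MB, 16 * MB, 64 * MB]
print(f"{gpu_eligible_share(example_sizes):.0%} of bytes are in files over 8 MB")
```

Running `gpu_eligible_share(folder_file_sizes(path))` on a test folder gives a quick sense of whether a small speed-up reflects the data mix or, as on Titan, a driver problem.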

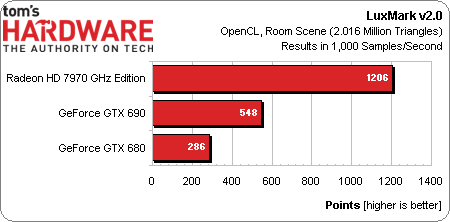

LuxMark 2.0

Like Photoshop, LuxMark crashes outright on the GeForce GTX Titan. As a result, we aren’t able to see how it compares to AMD’s Radeon HD 7970 GHz Edition, which runs away with a compelling lead.

In all, our general-purpose compute testing is overwhelmingly disappointing. We can clearly see in Sandra 2013 that GK110 has plenty of potential, flying past the GK104-powered GeForce GTX 680. But a series of failures prevents us from judging the GPU’s performance in more real-world workloads. For a card long expected to reconcile Nvidia’s position against Tahiti in these disciplines, Titan falls short right out of the gate.

We did bring these issues up with Nvidia, and were told that they all stem from its driver. Fortunately, that means we should see fixes soon.

Novuake: Pure marketing. At that price Nvidia is just pulling a huge stunt... Still an insane card.

whyso: If you use an actual 7970 GE card that is sold on Newegg, etc., instead of the reference 7970 GE card that AMD gave (that you can't find anywhere), thermals and acoustics are different.

cknobman: Seems like Titan is a flop (at least at the $1000 price point).

This card would only be compelling if offered in the ~$700 range.

As for compute? LOL, looks like this card being a compute monster goes right out the window. Titan does not really even compete that well with a 7970 costing less than half.

downhill911: If Titan cost no more than 800 USD, it would be a really nice card to have. Since it does not, I call it a fail card, or hype card. Even my GTX 690 makes more sense, and now you can have them for a really good price on eBay.

spookyman: Well, I am glad I bought the 690GTX.

Titan is nice but not impressive enough to go buy.

hero1, quoting jimbaladin ("For $1000 that card sheath better be made out of platinum."):

Tell me about it! I think Nvidia shot itself in the foot with the pricing scheme. I want AMD to come out with better drivers than the current ones to put the 7970 at least 20% ahead of the 680 and take all the sales from the greedy green. Sure, it performs way better, but that price is insane. I think 700-800 is the sweet spot, but again it is a rare, powerful beast and very consistent, which is hard to find atm.

raxman: "We did bring these issues up with Nvidia, and were told that they all stem from its driver. Fortunately, that means we should see fixes soon." I suspect their fix will be "Use CUDA".

Nvidia has really dropped the ball on OpenCL. They don't support OpenCL 1.2, and they make it difficult to find all their OpenCL examples. Their link for OpenCL is not easy to find. However, their OpenCL 1.1 driver is quite good for Fermi and for the 680 and 690, despite what people say. But if the Titan has troubles, it looks like they will be giving up on the driver now as well, or purposely crippling it (I can't imagine they did not think to test some OpenCL benchmarks which every review site uses). Nvidia does not care about OpenCL Nvidia users like myself anymore. I wish there were more influential people like Linus Torvalds who told Nvidia where to go.