Nvidia GeForce RTX 2070 Founders Edition Review: Replacing GeForce GTX 1080


Deep Learning Super-Sampling: Faster Than GeForce GTX 1080 Ti?

Before we get into the performance of GeForce RTX 2070 across our benchmark suite, let’s acknowledge the elephant in the room: a month in, there still aren't any games with real-time ray tracing or DLSS to test. We do, however, have access to a demo of Final Fantasy XV Windows Edition with DLSS support. Details of the implementation are somewhat light, aside from a note that DLSS allows Turing-based GPUs to use half the number of input samples for rendering. The architecture’s Tensor cores fill in the rest to create a final image.

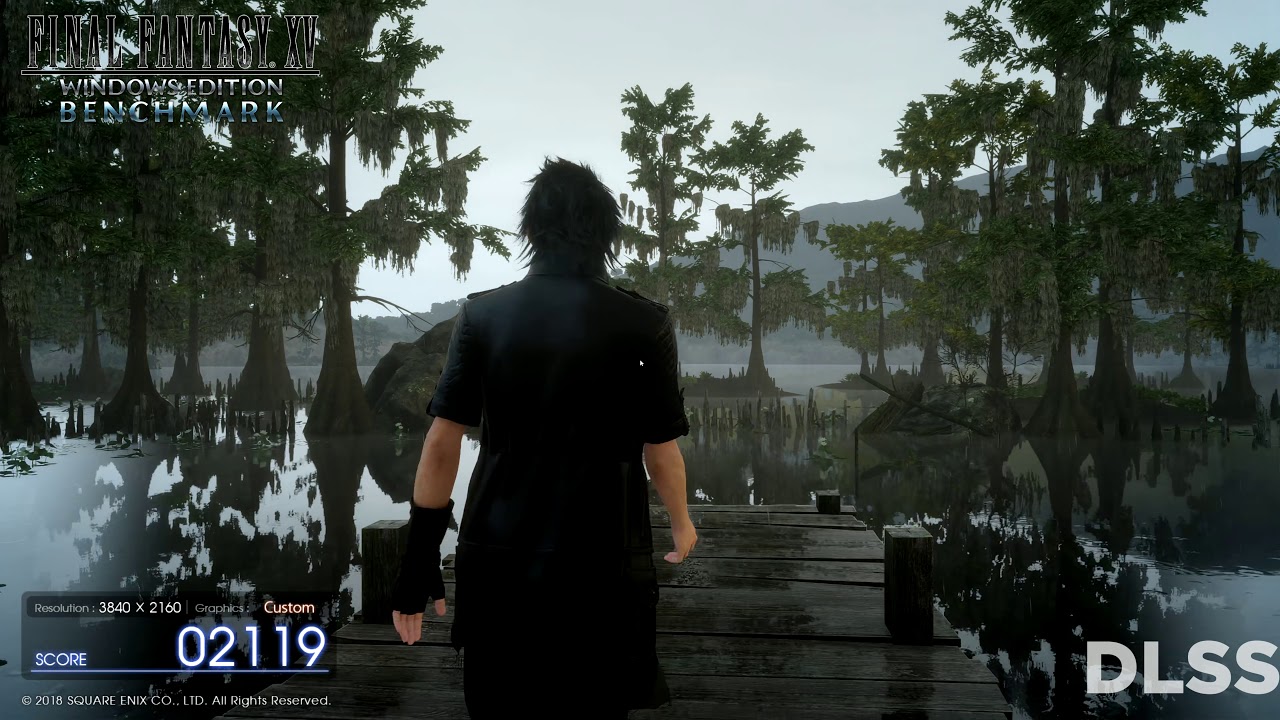

Nvidia says the demo always runs at 4K and maximum graphics fidelity, but it doesn’t provide any way to see what the quality settings include. The HUD simply shows Resolution: 3840x2160, Graphics: Custom, and a score that increases as the demo runs. Unfortunately, there's no way for us to specify 2560x1440 (a more practical target for GeForce RTX 2070).
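To put the "half the number of input samples" note in perspective, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that one input sample corresponds to one rendered pixel; Nvidia's note does not confirm that mapping, and the function name is our own.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels in a frame of the given resolution."""
    return width * height


# Resolutions mentioned in the text.
uhd = pixel_count(3840, 2160)  # the demo's fixed 4K output
qhd = pixel_count(2560, 1440)  # the target we would have preferred for RTX 2070

# ASSUMPTION: "half the input samples" is treated as half the 4K pixel count.
half_uhd = uhd // 2

# How the halved 4K workload compares with a native 2560x1440 frame.
ratio = half_uhd / qhd

print(f"4K pixels:         {uhd:,}")
print(f"Half of 4K:        {half_uhd:,}")
print(f"2560x1440 pixels:  {qhd:,}")
print(f"Half-4K / 1440p:   {ratio:.3f}")
```

Under that assumption, half of a 4K frame is still slightly more work than a native 2560x1440 frame, which helps explain why the 4K-only demo remains a demanding target for this card.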

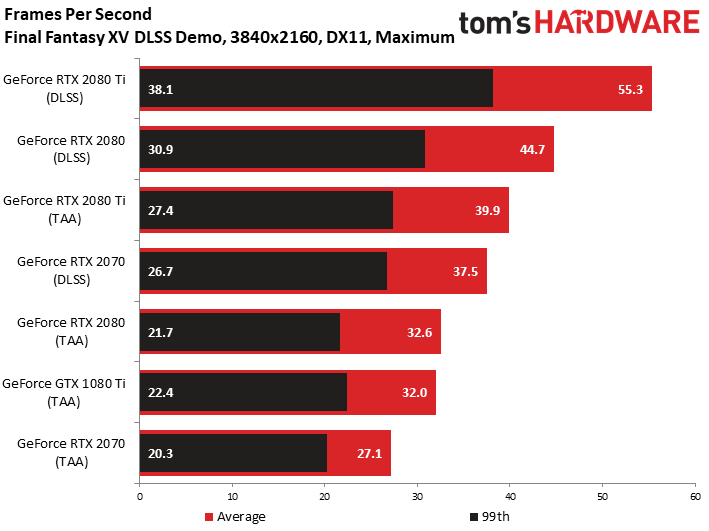

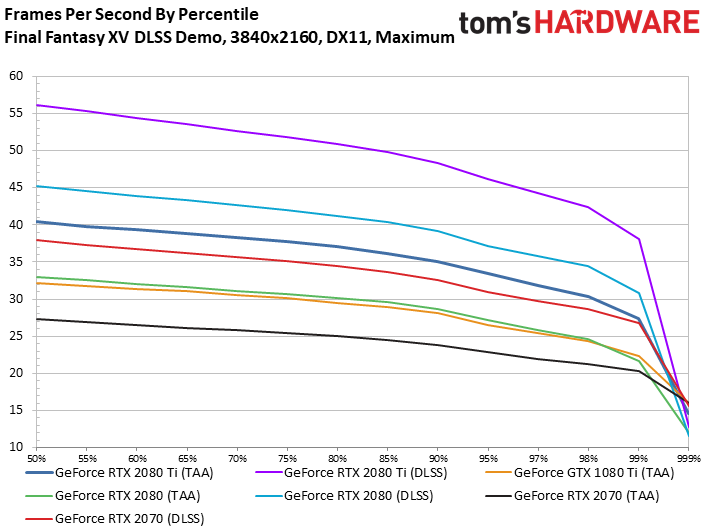

Despite the over-ambitious resolution, GeForce RTX 2070 Founders Edition picks up a 38% speed-up with DLSS active compared to applying TAA at 4K. That makes it faster than GeForce GTX 1080 Ti and RTX 2080 using TAA. Prognosticating one year into the future, TU106's Tensor cores may surprise enthusiasts and become this architecture's most useful feature, particularly if real-world optimizations for DLSS prove as compelling as Nvidia's demos.
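A frame-rate speed-up and a frame-time saving are not the same percentage, so it can help to convert one into the other. The sketch below is simple arithmetic, not anything taken from Nvidia's demo; the function name is hypothetical.

```python
def frame_time_reduction_pct(speedup_pct: float) -> float:
    """Convert a frame-rate speed-up (in percent) into the matching
    reduction in time per frame (in percent).

    Frame time is the reciprocal of frame rate, so a rate that grows by
    a factor f shrinks the time per frame to 1/f of its old value.
    """
    factor = 1.0 + speedup_pct / 100.0
    return (1.0 - 1.0 / factor) * 100.0


# The 38% figure is the DLSS-versus-TAA speed-up quoted in the text.
reduction = frame_time_reduction_pct(38.0)
print(f"A 38% higher frame rate means about {reduction:.1f}% less time per frame")
```

In other words, the quoted 38% speed-up corresponds to each frame taking a little over a quarter less time to deliver.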

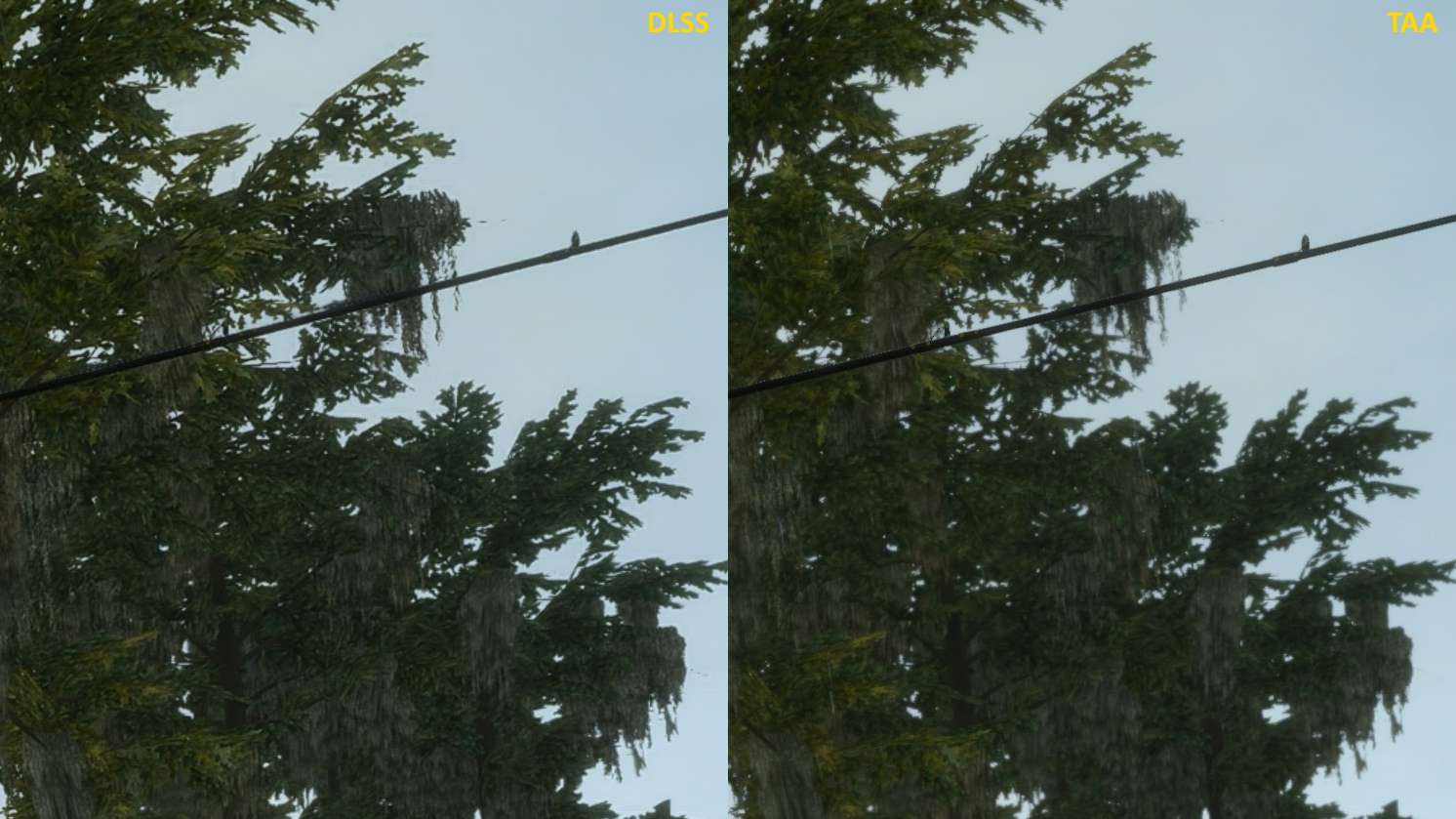

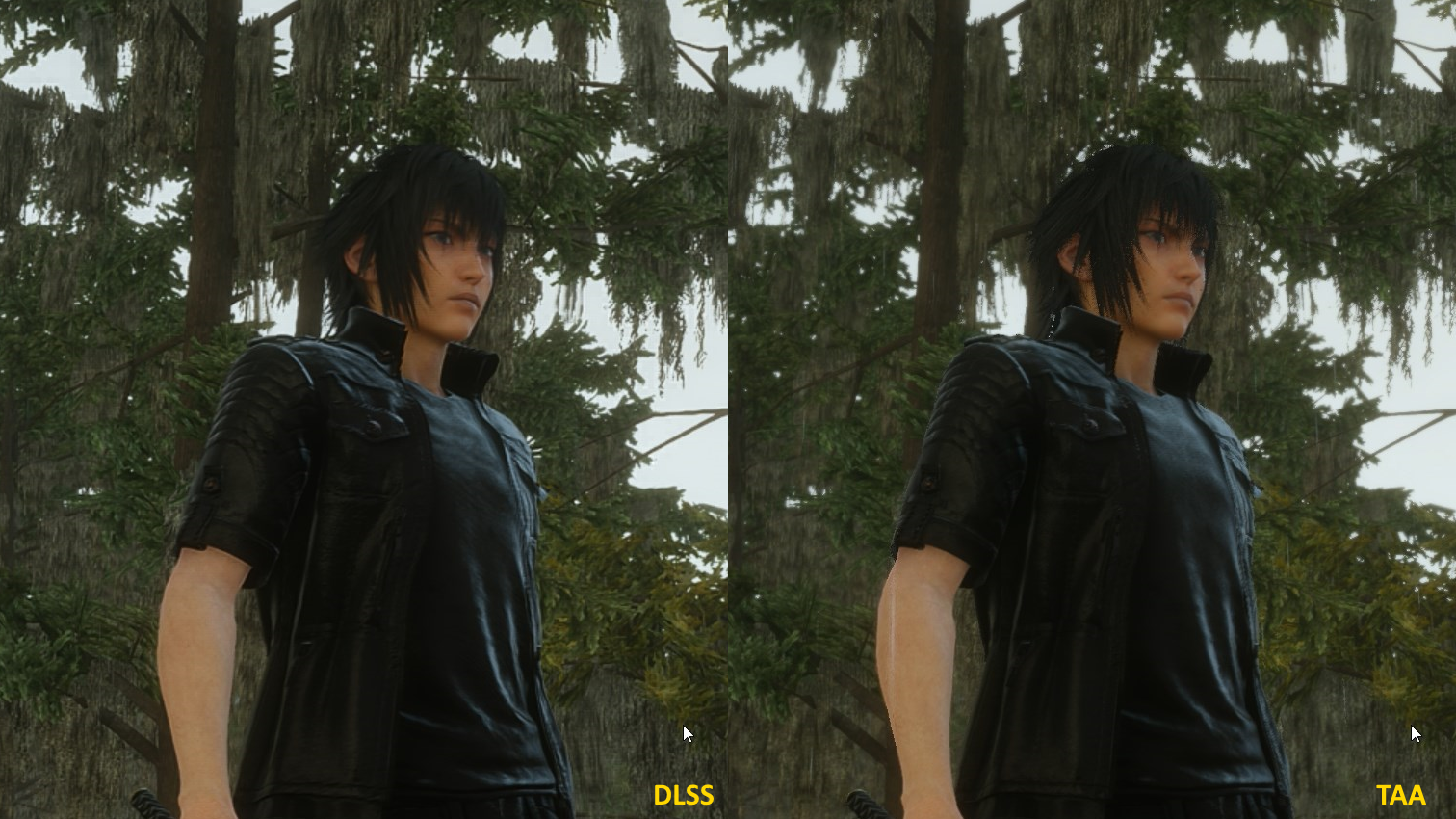

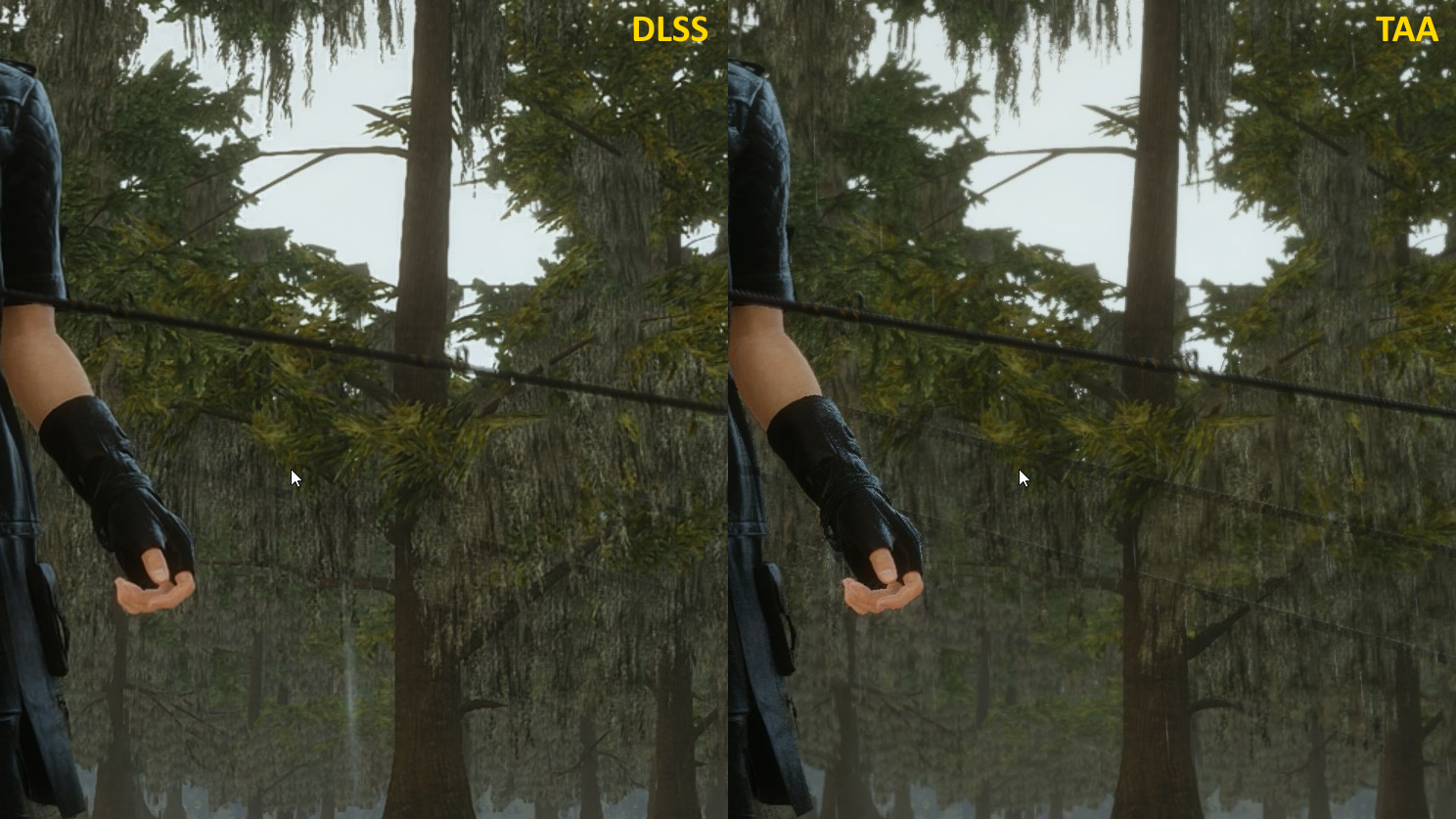

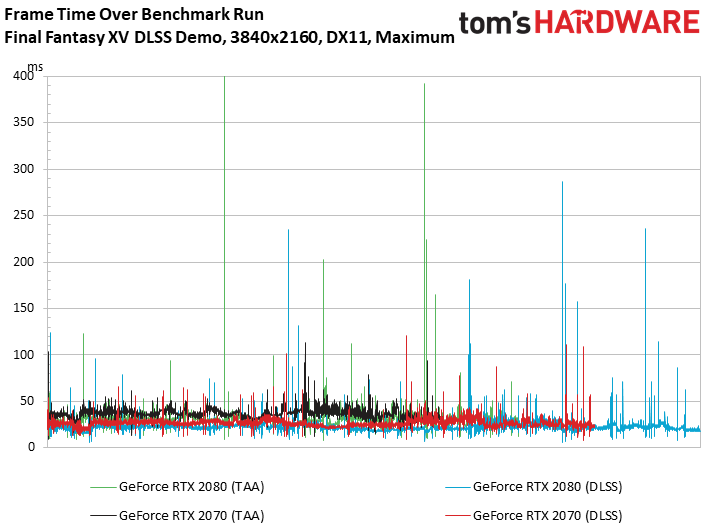

But until DLSS really proves itself, we anticipate gamers distrusting the idea that input samples can be removed to save on rendering budget and then filled in using AI. We pored over the demo, running both versions over and over to identify any differences that stood out.

In the clip below, we make two observations. First, Noct’s textured shirt is affected by banding/shimmering due to DLSS. In the TAA version, his chest does not exhibit the same effect. Second, as Noct casts his fishing rod, there’s a pronounced ghosting artifact that remains on-screen with TAA active. DLSS does away with this entirely. Neither solution is perfect.

Might Final Fantasy XV’s DLSS implementation improve over time? According to Nvidia, the model for DLSS is trained on a set of data that eventually reaches a point where the quality of its inferred results flattens out. So, in a sense, the DLSS model does mature. But the company’s supercomputing cluster is constantly training with new data on new games, so improvements may roll out as time goes on. If there are areas that demonstrate an issue of some sort, the DLSS model can be reviewed and tweaked. This may involve providing additional “correct” data to train with.

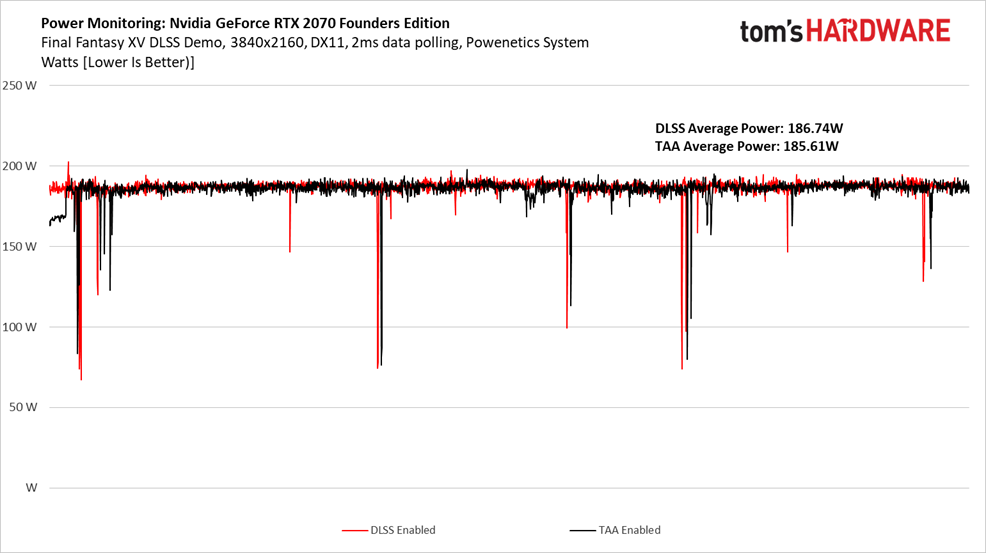

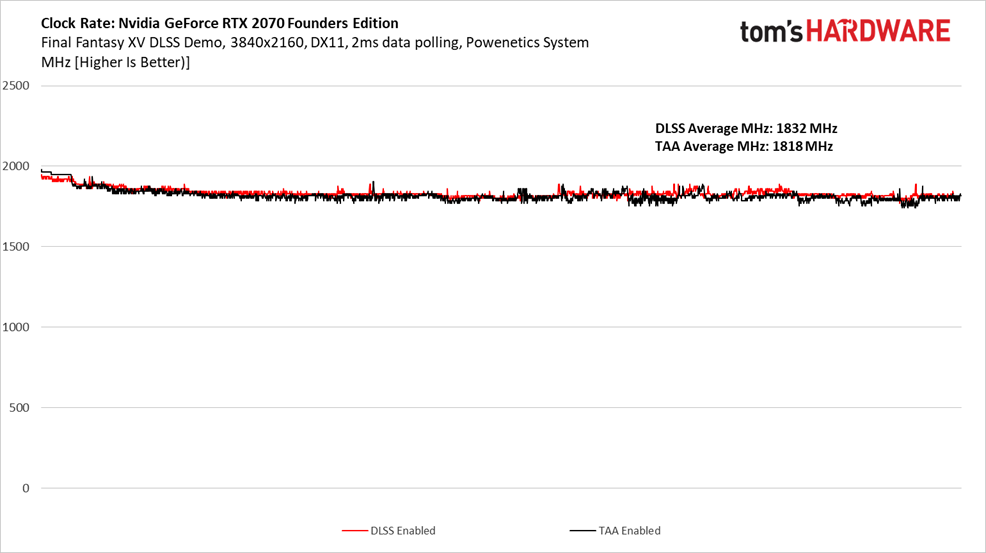

Beyond DLSS' implications for performance and image quality, we were also curious whether utilizing TU106's Tensor cores affected clock rates or power consumption.


Over 300 seconds of the Final Fantasy XV demo, power consumption looks very similar with DLSS and TAA. Notably, the dips between scenes consistently drop lower with DLSS enabled.

The same goes for clock rate, though we might guess that TU106 is able to run at a slightly higher frequency because it's only rendering a fraction of the input samples and using Tensor cores to fill in the rest.


coolio0103: 200$ more expensive a rtx 2070 than a gtx 1080 Strix or msi which is the same perfomance than the FE 2070. Bye bye 1080? Really? This page is dropped to the lowest part of the internet news pages. Bye bye Tom's hardware

80-watt Hamster: Frustrating how, in two generations, Nvidia's *70-class offering has gone from $330 to $500 (est). Granted, we're talking more than double the performance, so it can be considered a good value from a strict perf/$ perpsective. But it also feels like NV is moving the goalposts for what "upper mid-range" means.

TCA_ChinChin: gtx 1080 - 470$

rtx 2070 - 600$

130$ increase for less than 10% fps improvement on average. Disappointing, especially with increased TDP, which means efficiency didn't really increase so even for mini ITX builds, the heat generated is gonna be pretty much the same for the same performance.

cangelini: 21406199 said: "200$ more expensive a rtx 2070 than a gtx 1080 Strix or msi which is the same perfomance than the FE 2070. Bye bye 1080? Really? This page is dropped to the lowest part of the internet news pages. Bye bye Tom's hardware"

This quite literally will replace 1080 once those cards are gone. The conclusion sums up what we think of 2070 FE's value, though.

bloodroses: 21406199 said: "200$ more expensive a rtx 2070 than a gtx 1080 Strix or msi which is the same perfomance than the FE 2070. Bye bye 1080? Really? This page is dropped to the lowest part of the internet news pages. Bye bye Tom's hardware"

Prices will come down on the RTX 2070's. GTX 1080's wont be available sooner or later. Tom's Hardware is correct on the assessment of the RTX 2070. Blame Nvidia for the price gouging on early adopters; and AMD for not having proper competition.

demonhorde665: wow seriously sick of the elitism at tom's . seems every few years they push up what they deem "playable frame rates" . just 3 years ago they were saying 40 + was playable. 8 years ago they were saying 35+ and 12+ years ago they were saying 25+ was playable. now they are saying in this test that only 50 + is playable? . serilously read the article not just the frame scores , the author is saying at several points that the test fall below 50 fps "the playable frame rate". it's like they are just trying to get gamers to rush out and buy overpriced video cards. granted 25 fps is a bit eye soreish on today's lcd/led screens , but come on. 35 is definitely playable with little visual difference. 45+ is smooth as butter

yeah it would be awesome if we could get 60 fps on every game at 4k. it would be awesome just to hit 50 @ 4k, but ffs you don't have to try to sell the cards so hard. admit it gamers on a less-than-top-cost budget will still enjoy 4k gaming at 35 , 40 or 45 fps. hell it's not like the cards doing 40-50 fps are cheap them selves… gf 1070's still obliterate most consumer's pockets at $420-450 bucks a card. the fact is top end video card prices have gone nutso in the past year or two... 600 -800 dollars for just a video card is f---king insane and detrimental to the PC gaming industry as a whole. just 6 years ago you could build a decent mid tier gaming rig for 600-700 bucks , now that same rig (in performance terms) would run you 1000-1200 , because of this blatant price gouging by both AMD and nvidia (but definitely worse on nvidia's side). 5-10 years from now ever one will being saying that 120 fps is ideal and that any thing below 100 fps is unplayable. it's getting ridiculous.

jeffunit: "With its fan shroud disconnected, GeForce RTX 2070’s heat sink stretches from one end of the card, past the 90cm-long PCB..."

That is pretty big, as 90cm is 35 inches, just one inch short of 3 feet.

I suspect it is a typo.

tejayd: Last line in the 3rd paragraph "If not, third-party GeForce RTX 2070s should start in the $500 range, making RTX 2080 a natural replacement for GeForce GTX 1080." Shouldn't that say "making RTX 2070 a natural replacement". Or am I misinterpreting "natural"?

Brian_R170: The 20-series have been a huge let-down . Yes, the 2070 is a little faster than the 1080 and the 2080 is a little faster than the 1080Ti, but they're both are more expensive and consume more power than the cards they supplant. Shifting the card names to a higher performance bar is just a marketing strategy.