Nvidia GeForce RTX 2070 Founders Edition Review: Replacing GeForce GTX 1080


Results: Destiny 2 and Doom

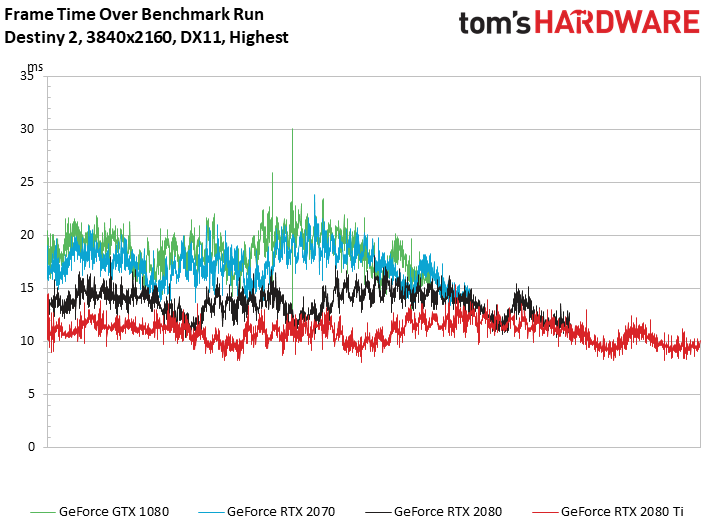

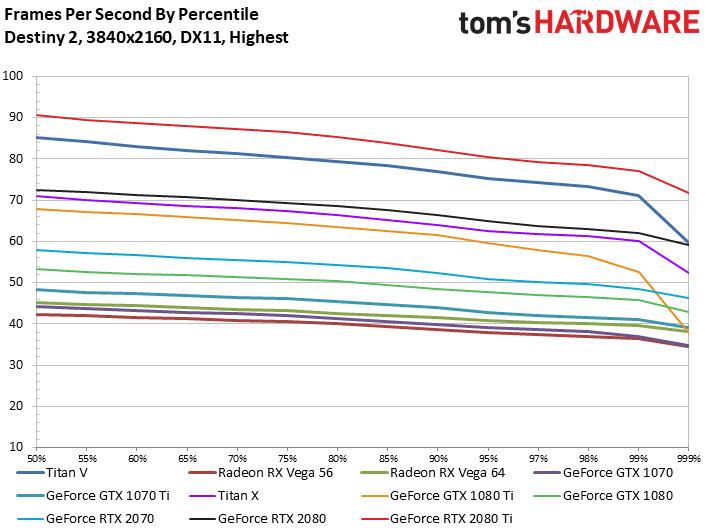

Destiny 2 (DX11)

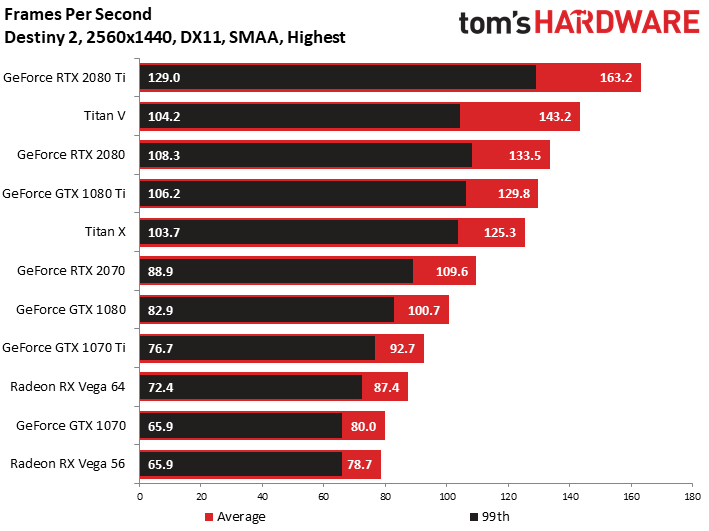

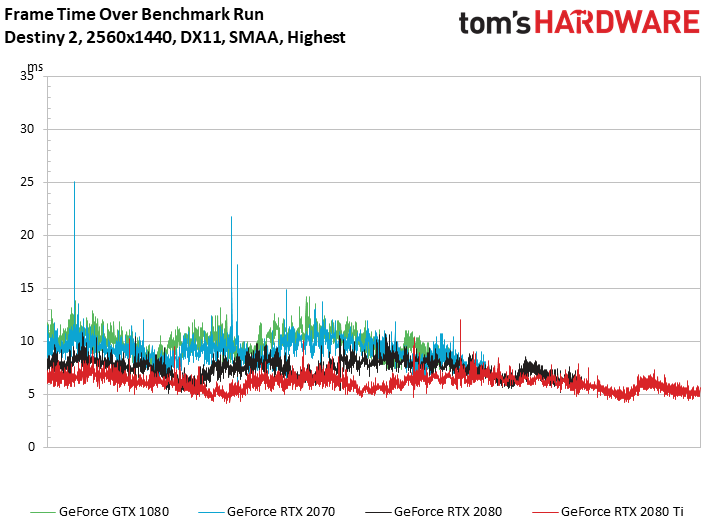

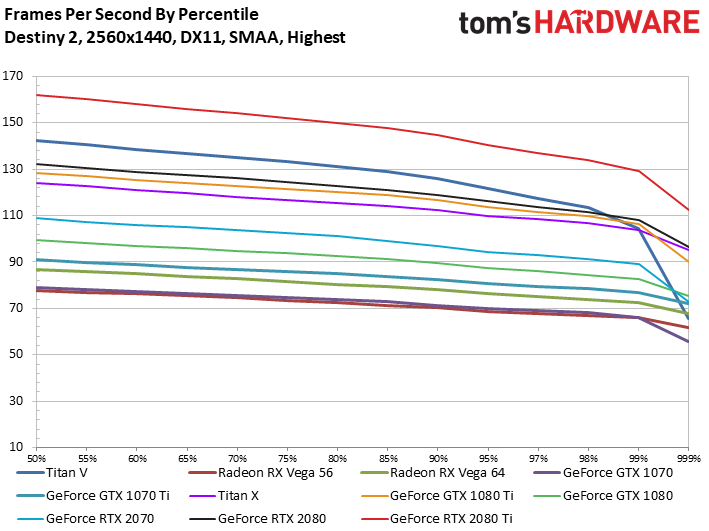

A 37% lead over GeForce GTX 1070 lines up with Nvidia’s internally-generated results comparing RTX 2070 to its generational predecessor. But the real comparison should be to GeForce GTX 1080. In that face-off, RTX 2070 is 9% faster for a 20%-higher price tag.
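As a rough illustration of that trade-off, the two percentages above can be combined into a relative performance-per-dollar figure. This is a minimal sketch in Python, not part of the review's test methodology; the function name `relative_perf_per_dollar` is hypothetical, and the inputs are simply the 9% and 20% figures quoted above.

```python
def relative_perf_per_dollar(perf_gain: float, price_increase: float) -> float:
    """Performance per dollar of the newer card relative to the older one.

    Both arguments are fractions (0.09 means 9%). A result of 1.0 means parity.
    """
    return (1.0 + perf_gain) / (1.0 + price_increase)


# Figures quoted in the text: RTX 2070 is 9% faster than GTX 1080
# in Destiny 2 at a 20% higher price.
ratio = relative_perf_per_dollar(0.09, 0.20)
print(f"{ratio:.3f}")  # prints 0.908
```

A ratio below 1.0 means that, in this benchmark and at those prices, RTX 2070 delivers less performance per dollar than GTX 1080.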

To RTX 2070’s credit, we’re glad to see 100+ FPS average performance in Destiny 2 using the game’s Highest quality preset. At the right price, this card could be a real winner (particularly since its nearest competition from AMD, Radeon RX Vega 64, is only 80% as fast).

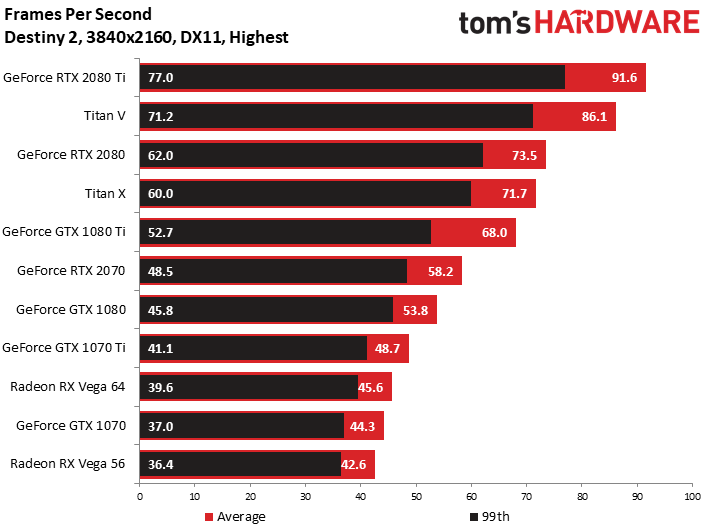

Turning off SMAA allows the GeForce RTX 2070 Founders Edition to maintain playable frame rates at 4K in Destiny 2. The lead over GTX 1080 is a mere 8%, but a 28% lead over the $500 Radeon RX Vega 64 does speak to Nvidia’s competitive positioning.

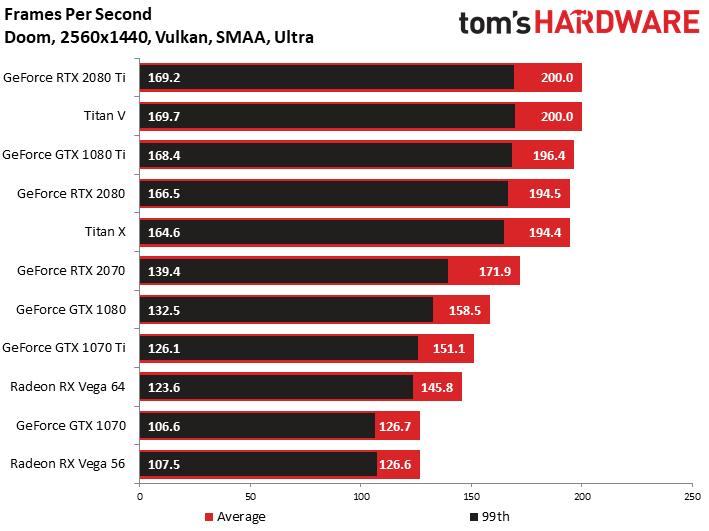

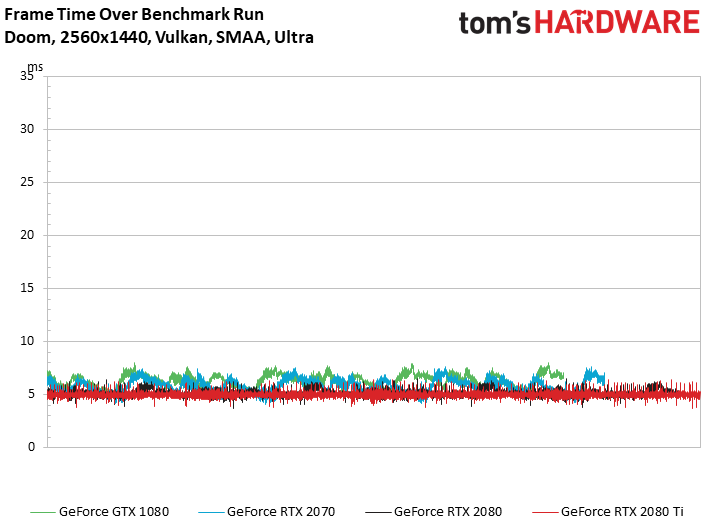

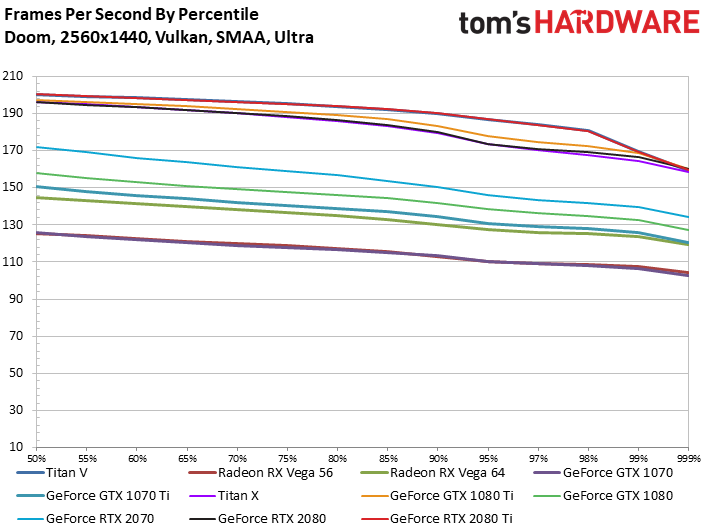

Doom (Vulkan)

Although the five fastest graphics cards in our chart run into Doom’s 200 FPS cap, GeForce RTX 2070 Founders Edition does not. An 8% lead over GTX 1080 and an 18% advantage compared to Radeon RX Vega 64 translate to perfectly smooth frame rates in Doom at 2560x1440.

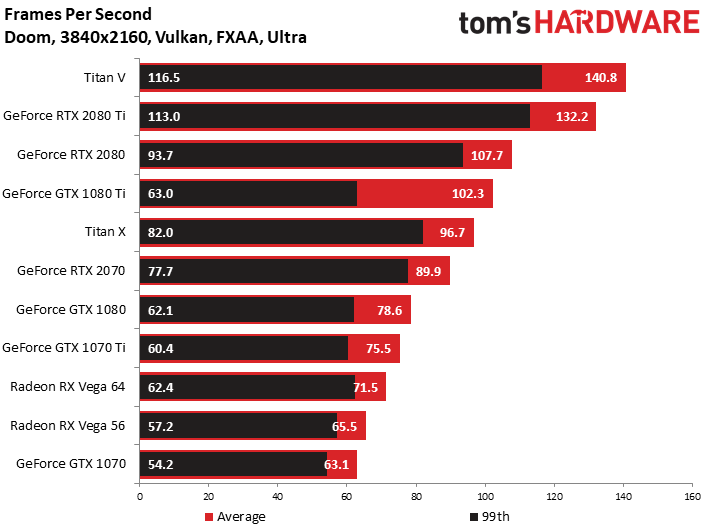

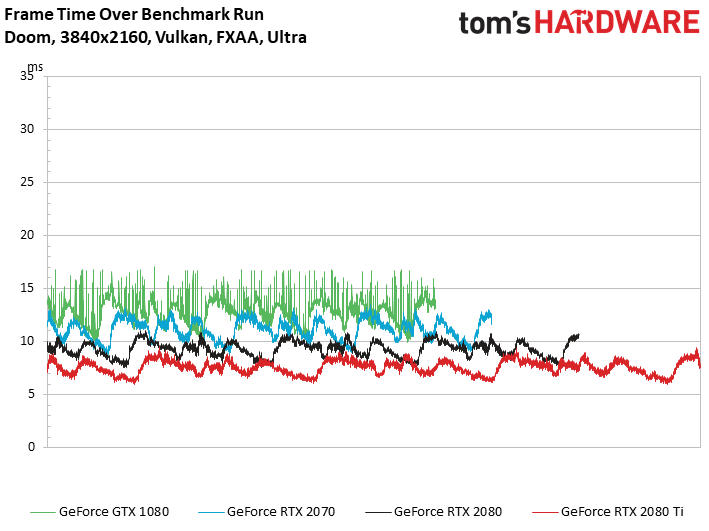

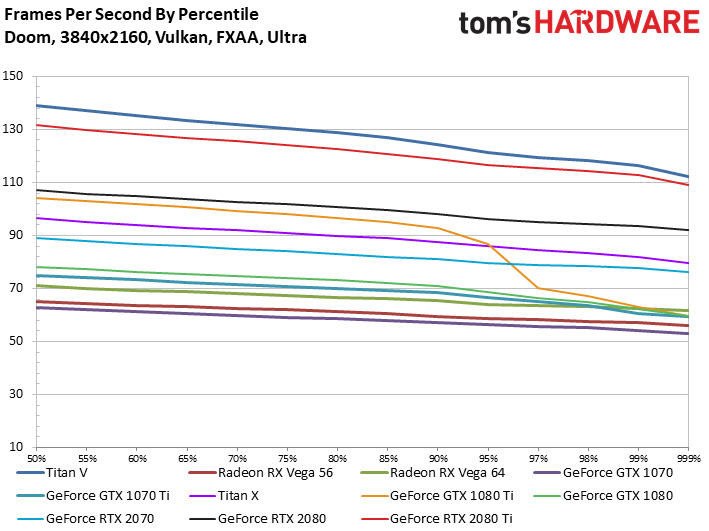

Upping the resolution to 3840x2160 imposes a more taxing graphics workload, knocking frame rates down across the board. Now, GeForce RTX 2070 is 14% faster than GTX 1080 and 26% quicker than Radeon RX Vega 64.


coolio0103 200$ more expensive a rtx 2070 than a gtx 1080 Strix or msi which is the same perfomance than the FE 2070. Bye bye 1080? Really? This page is dropped to the lowest part of the internet news pages. Bye bye Tom's hardware

80-watt Hamster Frustrating how, in two generations, Nvidia's *70-class offering has gone from $330 to $500 (est). Granted, we're talking more than double the performance, so it can be considered a good value from a strict perf/$ perspective. But it also feels like NV is moving the goalposts for what "upper mid-range" means.

TCA_ChinChin gtx 1080 - 470$

rtx 2070 - 600$

130$ increase for less than 10% fps improvement on average. Disappointing, especially with increased TDP, which means efficiency didn't really increase so even for mini ITX builds, the heat generated is gonna be pretty much the same for the same performance.

cangelini 21406199 said: 200$ more expensive a rtx 2070 than a gtx 1080 Strix or msi which is the same perfomance than the FE 2070. Bye bye 1080? Really? This page is dropped to the lowest part of the internet news pages. Bye bye Tom's hardware

This quite literally will replace 1080 once those cards are gone. The conclusion sums up what we think of 2070 FE's value, though.

bloodroses 21406199 said: 200$ more expensive a rtx 2070 than a gtx 1080 Strix or msi which is the same perfomance than the FE 2070. Bye bye 1080? Really? This page is dropped to the lowest part of the internet news pages. Bye bye Tom's hardware

Prices will come down on the RTX 2070's. GTX 1080's won't be available sooner or later. Tom's Hardware is correct on the assessment of the RTX 2070. Blame Nvidia for the price gouging on early adopters; and AMD for not having proper competition.

demonhorde665 wow seriously sick of the elitism at tom's. seems every few years they push up what they deem "playable frame rates". just 3 years ago they were saying 40+ was playable. 8 years ago they were saying 35+ and 12+ years ago they were saying 25+ was playable. now they are saying in this test that only 50+ is playable? seriously read the article not just the frame scores, the author is saying at several points that the tests fall below 50 fps "the playable frame rate". it's like they are just trying to get gamers to rush out and buy overpriced video cards. granted 25 fps is a bit eye soreish on today's lcd/led screens, but come on. 35 is definitely playable with little visual difference. 45+ is smooth as butter

yeah it would be awesome if we could get 60 fps on every game at 4k. it would be awesome just to hit 50 @ 4k, but ffs you don't have to try to sell the cards so hard. admit it gamers on a less-than-top-cost budget will still enjoy 4k gaming at 35, 40 or 45 fps. hell it's not like the cards doing 40-50 fps are cheap themselves… gf 1070's still obliterate most consumer's pockets at $420-450 bucks a card. the fact is top end video card prices have gone nutso in the past year or two... 600-800 dollars for just a video card is f---king insane and detrimental to the PC gaming industry as a whole. just 6 years ago you could build a decent mid tier gaming rig for 600-700 bucks, now that same rig (in performance terms) would run you 1000-1200, because of this blatant price gouging by both AMD and nvidia (but definitely worse on nvidia's side). 5-10 years from now everyone will be saying that 120 fps is ideal and that anything below 100 fps is unplayable. it's getting ridiculous.

jeffunit "With its fan shroud disconnected, GeForce RTX 2070’s heat sink stretches from one end of the card, past the 90cm-long PCB..."

That is pretty big, as 90cm is 35 inches, just one inch short of 3 feet.

I suspect it is a typo.

tejayd Last line in the 3rd paragraph "If not, third-party GeForce RTX 2070s should start in the $500 range, making RTX 2080 a natural replacement for GeForce GTX 1080." Shouldn't that say "making RTX 2070 a natural replacement"? Or am I misinterpreting "natural"?

Brian_R170 The 20-series have been a huge let-down. Yes, the 2070 is a little faster than the 1080 and the 2080 is a little faster than the 1080Ti, but they're both more expensive and consume more power than the cards they supplant. Shifting the card names to a higher performance bar is just a marketing strategy.