
Is 8GB of VRAM Insufficient?

One of the biggest complaints you're sure to hear about the RTX 4060 is that it only has 8GB of VRAM. You may also hear complaints about the 128-bit memory interface, which is related but also a separate consideration. Dropping down to a 128-bit bus on the 4060 definitely represents a compromise, but there are many factors in play.

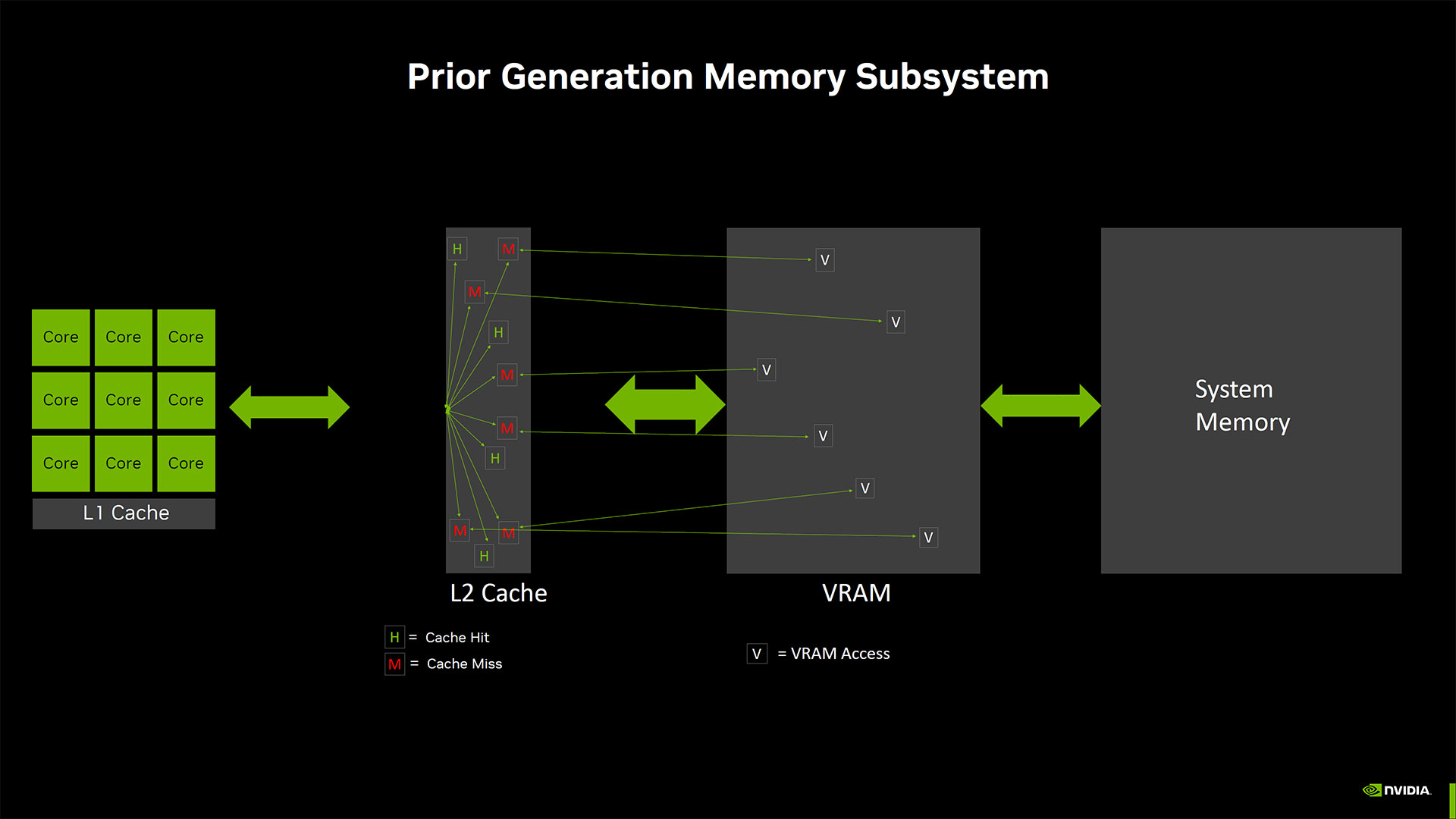

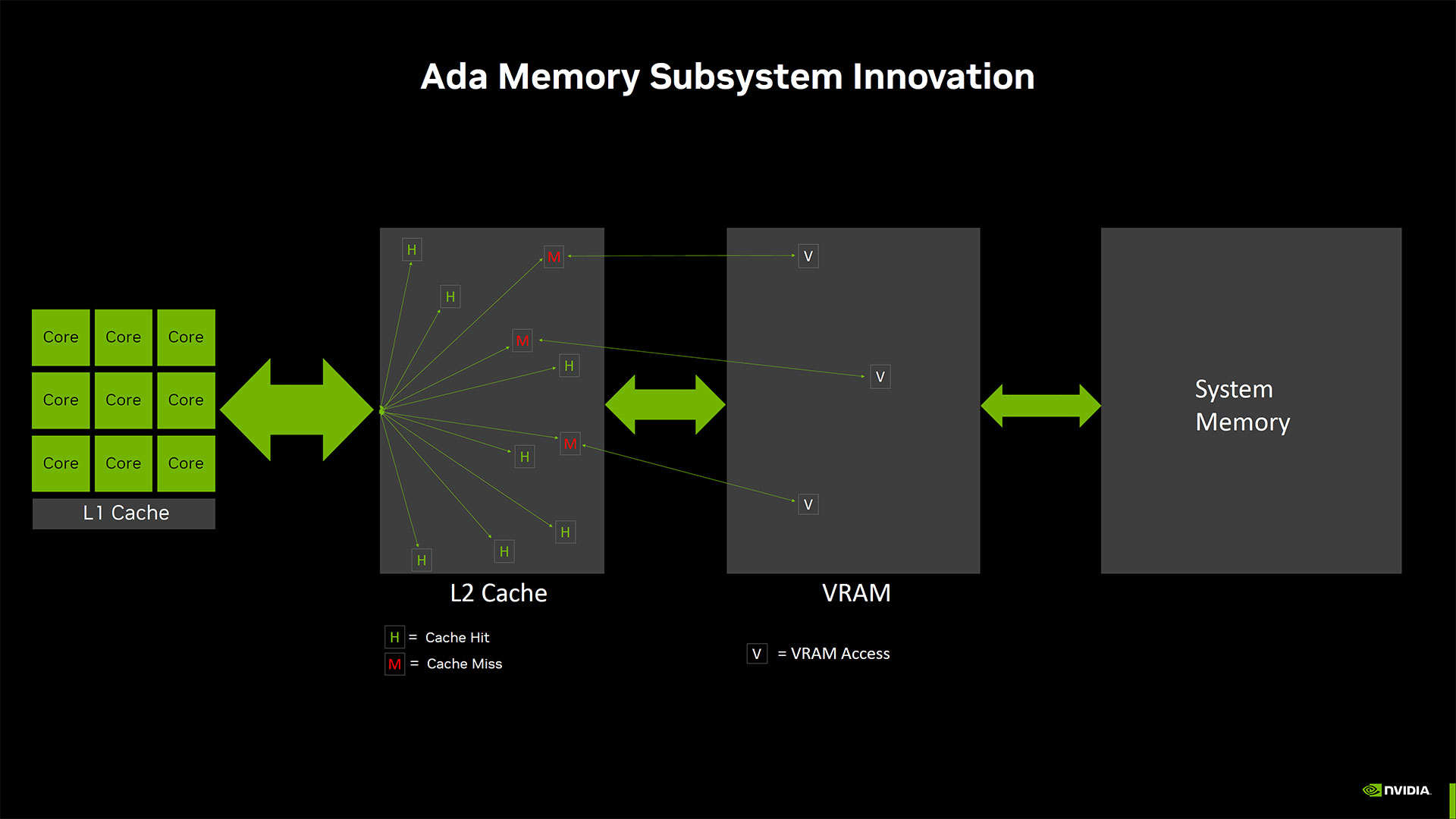

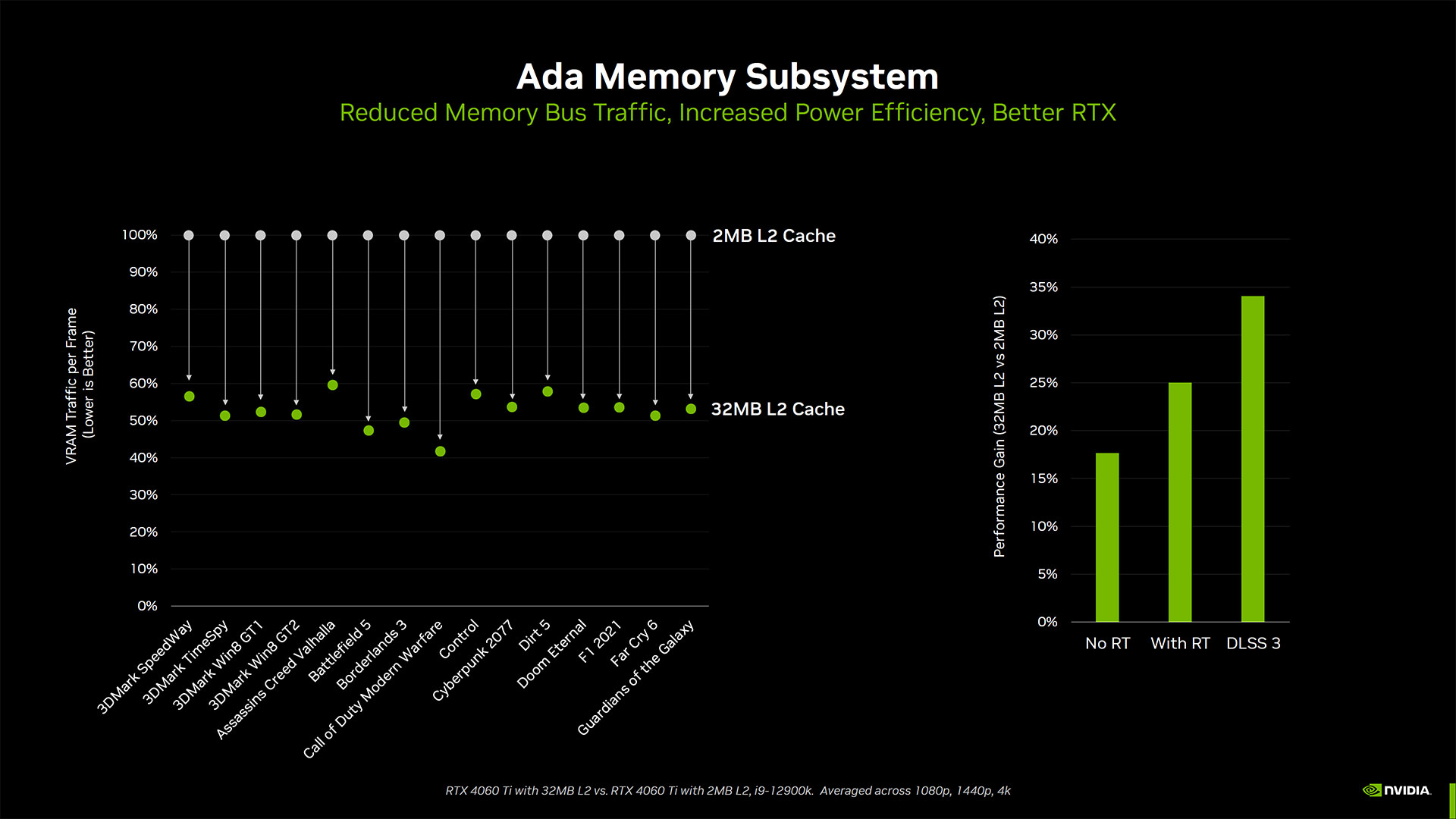

First, we need to remember that the significantly larger L2 cache really does help a lot in terms of effective memory bandwidth. Every memory access fulfilled by the L2 cache means that the 128-bit interface didn't even come into play. Nvidia says that the hit rate of the larger 24MB L2 cache on the RTX 4060 is 67% higher than the hit rate would have been with an Ampere-sized 2MB L2. Nvidia isn't lying, and real-world performance metrics bear this out. AMD also lists effective bandwidth on many of its RDNA 2 and RDNA 3 GPUs, for the same reason.
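
To see why a higher hit rate raises effective bandwidth, consider a deliberately simple model: if a fraction h of memory requests is served by the L2 cache, only (1 - h) of the traffic reaches GDDR6, so the bus saturates at roughly raw bandwidth / (1 - h) of total requests. The sketch assumes a 17 Gbps GDDR6 data rate (not stated on this page), uses made-up hit rates, and ignores L2 throughput, latency, and write-backs, so treat the numbers as illustrative rather than as Nvidia's figures.

```python
def raw_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak DRAM bandwidth in GB/s: bytes per transfer times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


def effective_bandwidth_gbs(raw_gbs: float, l2_hit_rate: float) -> float:
    """Request bandwidth the GPU can sustain if only L2 misses go to DRAM.

    Simplified model: ignores L2 throughput limits, latency, and write-backs.
    """
    if not 0.0 <= l2_hit_rate < 1.0:
        raise ValueError("hit rate must be in [0, 1)")
    return raw_gbs / (1.0 - l2_hit_rate)


raw = raw_bandwidth_gbs(128, 17.0)  # 128-bit bus, assumed 17 Gbps GDDR6
print(f"raw: {raw:.0f} GB/s")
for hit in (0.2, 0.4, 0.5):  # hypothetical hit rates, not measured values
    print(f"hit rate {hit:.0%}: ~{effective_bandwidth_gbs(raw, hit):.0f} GB/s effective")
```

The same model shows why lower hit rates, such as those at higher resolutions, shrink the benefit: as h falls, the multiplier 1 / (1 - h) heads back toward 1.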

At the same time, there are edge cases where the larger L2 cache doesn't help as much. Running at 4K, or even 1440p, reduces cache hit rates, and some applications access memory in patterns that lower hit rates as well. We've heard that emulation is one such case, and hashing algorithms (e.g. cryptocurrency mining, may it rest in peace) are another. But for typical PC gaming, the 8X larger L2 cache (relative to the 3060) will help a lot in reducing GDDR6 memory accesses.

The other side of the coin is that a 128-bit interface inherently limits how much VRAM a GPU can have. Each GDDR6 (or GDDR5) chip uses a 32-bit interface, so a 128-bit bus normally connects four chips. If you use 1GB chips, you max out at 4GB (e.g. the GTX 1650). The RTX 4060 uses 2GB chips, which gets it up to 8GB. Nvidia could also have put chips on both sides of the PCB (which used to be quite common), but that increases complexity and would double the total VRAM to 16GB. That's arguably overkill for a $300 graphics card, even in 2023.
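
The capacity arithmetic is easy to sketch. Assuming the usual 32-bit interface per GDDR5/GDDR6 package, the bus width fixes the chip count, chip density fixes the capacity, and a clamshell layout (two chips sharing each 32-bit slice, one on each side of the PCB) doubles it. The function and constant names are illustrative, not taken from any real tool.

```python
CHIP_BUS_BITS = 32  # each GDDR5/GDDR6 package exposes a 32-bit interface


def max_vram_gb(bus_width_bits: int, chip_capacity_gb: int, clamshell: bool = False) -> int:
    """VRAM capacity when every 32-bit slice of the bus is populated.

    In clamshell mode two chips share one 32-bit slice (each using half of
    its data pins), which doubles capacity without widening the bus.
    """
    chips = bus_width_bits // CHIP_BUS_BITS
    if clamshell:
        chips *= 2
    return chips * chip_capacity_gb


print(max_vram_gb(128, 1))                  # 4  (GTX 1650-style, 1GB chips)
print(max_vram_gb(128, 2))                  # 8  (RTX 4060, 2GB chips)
print(max_vram_gb(128, 2, clamshell=True))  # 16 (chips on both sides of the PCB)
```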

Here's the thing: You don't actually need more than 8GB of VRAM for most games, when running at appropriate settings. We've previously explained why 4K requires so much VRAM, and that's part of the story. The other part is that many of the changes between "high" and "ultra" settings (whatever they might be called) are often of the placebo variety. Maxed-out settings will often drop performance by 20% or more, while image quality looks nearly the same as at high settings.

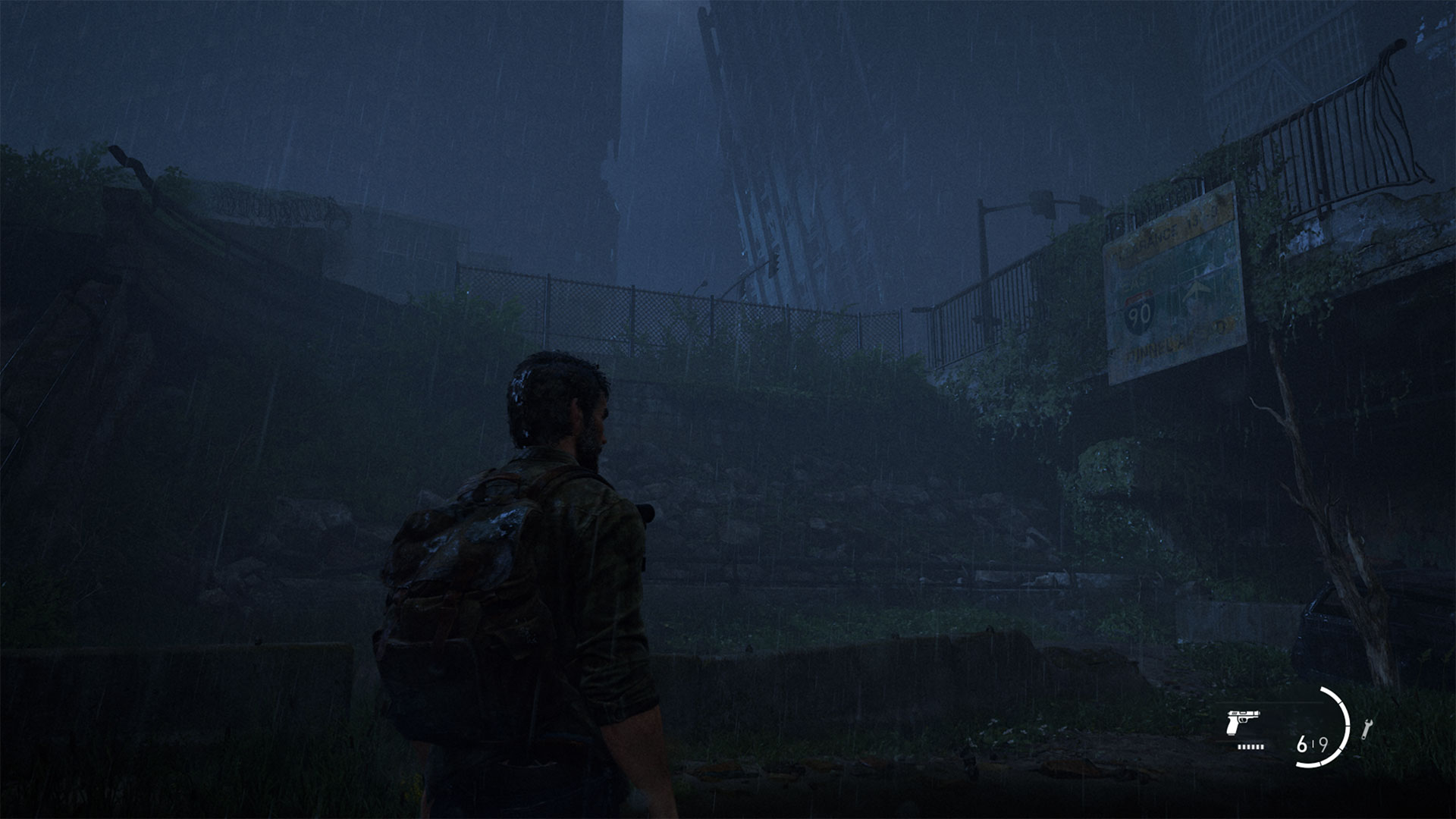

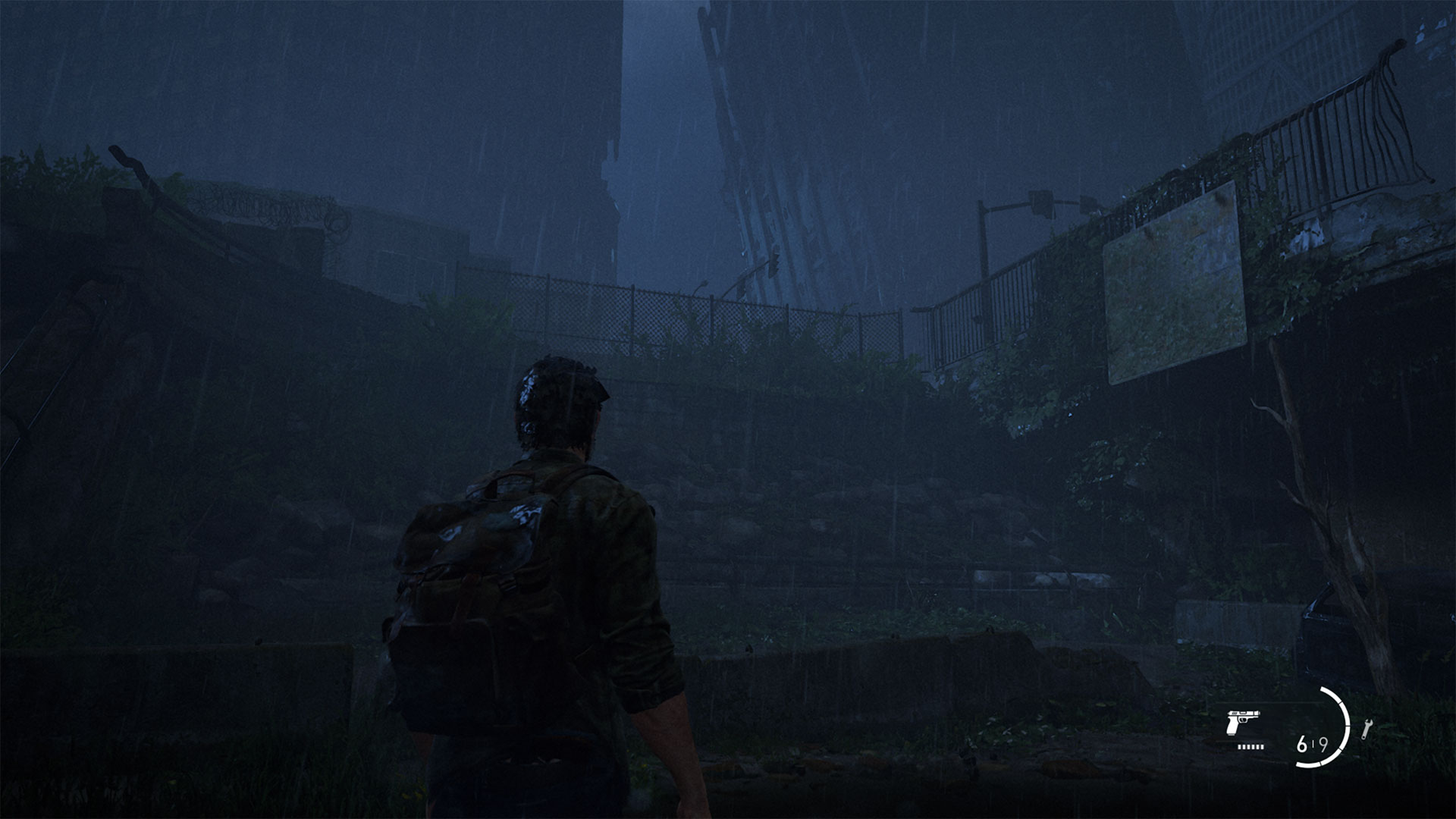

Look at the above gallery from The Last of Us Part I as an example. This is a game that can easily exceed 8GB of VRAM with maxed-out settings. The most recent patch improved performance, however, and 8GB cards now run much better, almost as if the initial port was poorly done and unoptimized! Anyway, we've captured the five presets, and if you look, there aren't really any significant differences between Ultra and High. You could narrow the gap even further by leaving everything else at Ultra and dropping only texture quality to High.

This placebo effect applies especially to HD texture packs, which can weigh in at 40GB or more but rarely change the visuals much. If you're running at 1080p or 1440p, most surfaces in a game will use textures of 1024x1024 or lower, because the surface doesn't span more than about a thousand pixels across on screen. In fact, most surfaces will likely only need 256x256 or 128x128 textures, and only those closest to the viewport will need 512x512 or 1024x1024 textures.
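
A rough way to see which mip level a surface actually needs: assume about one texel per screen pixel along the surface's on-screen span, and round up to the next power of two. For a power-of-two texture, that is the finest level a trilinear filter would sample. Real GPUs compute level of detail per pixel from texture-coordinate derivatives and often use anisotropic filtering (which can pull in finer levels on oblique surfaces), so this hypothetical helper is only an approximation.

```python
import math


def mip_needed(base_size: int, screen_span_px: float) -> int:
    """Approximate finest mip resolution (texels per side) a surface would sample.

    Assumes ~1 texel per screen pixel along the surface's on-screen span and a
    power-of-two base texture; real hardware derives LOD from UV derivatives.
    """
    if screen_span_px <= 1:
        return 1
    needed = 2 ** math.ceil(math.log2(screen_span_px))
    return min(base_size, needed)  # can't sample more detail than the asset has


for span in (100, 200, 450, 900):  # on-screen spans in pixels
    print(f"{span:>4} px span -> {mip_needed(2048, span)}x{mip_needed(2048, span)} mip")
```

With a 2048x2048 source texture, none of these spans ever touches the top level; only a surface wider than 1024 pixels on screen would.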

What about the 2048x2048 textures in the HD texture pack? Depending on the game, they may still get loaded into VRAM, even if the mipmapping algorithm never references them. So you can hurt performance and cause texture popping for literally zero improvement in visuals.
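
The memory cost of those unused top levels is easy to estimate. A full mip chain adds about one third on top of the base level, so the base level is roughly three quarters of the total, and dropping the 2048x2048 level cuts that texture's footprint by about 75%. The sketch assumes BC7 compression at 1 byte per texel and ignores block padding on the tiniest mips; the texture count is purely hypothetical.

```python
def mip_chain_bytes(base_size: int, bytes_per_texel: int) -> int:
    """Bytes for a square texture plus every mip level down to 1x1."""
    total = 0
    size = base_size
    while size >= 1:
        total += size * size * bytes_per_texel
        size //= 2  # each mip level halves both dimensions
    return total


BC7_BYTES_PER_TEXEL = 1  # BC7: 16 bytes per 4x4 block; padding on tiny mips ignored
MIB = 2**20

full = mip_chain_bytes(2048, BC7_BYTES_PER_TEXEL)         # chain starting at 2048x2048
without_top = mip_chain_bytes(1024, BC7_BYTES_PER_TEXEL)  # same chain minus the top level
print(f"2048x2048 chain: {full / MIB:.2f} MiB")           # 5.33 MiB
print(f"1024x1024 chain: {without_top / MIB:.2f} MiB")    # 1.33 MiB
print(f"top level share: {(full - without_top) / full:.0%}")  # 75%

texture_count = 500  # hypothetical number of materials shipping 2048x2048 maps
print(f"{texture_count} top levels: {texture_count * (full - without_top) / 2**30:.2f} GiB")  # 1.95 GiB
```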

Related to this is texture streaming, where a game engine will load in low resolution textures for everything, and then pull higher resolution textures into VRAM as needed. You can see this happening in some games right as a level loads: Everything looks a bit blurry and then higher quality textures "pop" in.
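
Texture streaming can be sketched as a loop that starts every texture at a low-resolution mip and upgrades it one level at a time toward what the renderer requests, with a cap on uploads per frame standing in for limited PCIe and copy bandwidth. The class, functions, and "largest shortfall first" policy here are hypothetical and are not how any particular engine (Unreal Engine included) implements streaming.

```python
from dataclasses import dataclass


@dataclass
class StreamedTexture:
    name: str
    base_size: int      # full-resolution mip on disk, texels per side (power of two)
    resident_size: int  # mip currently in VRAM
    wanted_size: int    # mip the renderer requested this frame


def target_size(t: StreamedTexture) -> int:
    # Never stream in more detail than the asset actually has.
    return min(t.wanted_size, t.base_size)


def stream_step(textures: list[StreamedTexture], max_uploads_per_frame: int) -> list[str]:
    """Upgrade up to max_uploads_per_frame textures by one mip level each."""
    pending = [t for t in textures if t.resident_size < target_size(t)]
    # Largest shortfall first; Python's sort is stable, so ties keep list order.
    pending.sort(key=lambda t: target_size(t) / t.resident_size, reverse=True)
    upgraded = []
    for t in pending[:max_uploads_per_frame]:
        t.resident_size *= 2  # load the next finer mip level
        upgraded.append(t.name)
    return upgraded


textures = [
    StreamedTexture("wall", base_size=2048, resident_size=64, wanted_size=1024),
    StreamedTexture("floor", base_size=2048, resident_size=64, wanted_size=256),
    StreamedTexture("sign", base_size=512, resident_size=64, wanted_size=64),
]
frame = 0
while upgraded := stream_step(textures, max_uploads_per_frame=1):
    frame += 1
    print(f"frame {frame}: upgraded {upgraded}")
```

Because only one upload fits per frame, the wall and floor sharpen over several frames, which is the gradual "pop-in" visible right after a level loads.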

If the texture streaming algorithm still tries to load higher resolution textures when they're not needed, it may evict other textures from VRAM. That can lead to a situation where the engine and GPU will have to shuttle data over the PCIe interface repeatedly, as one texture gets loaded and kicks a different texture out of VRAM, but then that other texture is needed and has to get pulled back into VRAM.
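
That eviction ping-pong is classic cache thrashing. The sketch below assumes a least-recently-used (LRU) eviction policy and counts VRAM capacity in equally sized textures; real engines use their own, more elaborate heuristics. When the per-frame working set is even one texture larger than the budget, LRU evicts exactly the texture that will be needed next, so every access turns into a PCIe upload.

```python
from collections import OrderedDict


class VramLru:
    """Toy LRU residency model: capacity is counted in equally sized textures."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.resident: OrderedDict[str, bool] = OrderedDict()
        self.pcie_uploads = 0

    def use(self, texture: str) -> None:
        if texture in self.resident:
            self.resident.move_to_end(texture)  # hit: mark as most recently used
            return
        self.pcie_uploads += 1                  # miss: copy over PCIe
        if len(self.resident) >= self.capacity:
            self.resident.popitem(last=False)   # evict the least recently used texture
        self.resident[texture] = True


def uploads_for(capacity: int, frames: int, working_set: list[str]) -> int:
    cache = VramLru(capacity)
    for _ in range(frames):
        for tex in working_set:
            cache.use(tex)
    return cache.pcie_uploads


working_set = ["A", "B", "C", "D"]
print(uploads_for(4, 10, working_set))  # 4: everything fits, loaded once
print(uploads_for(3, 10, working_set))  # 40: one slot short, every access misses
```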

Fundamentally, how all of this plays out is up to the game developers. Some games (Red Dead Redemption 2, as an example) will strictly enforce VRAM requirements and won't let you choose settings that exceed your GPU's VRAM capacity — you can try to work around this, but the results are potentially problematic. Others, particularly Unreal Engine games, will use texture streaming, sometimes with the option to disable it (e.g. Lord of the Rings: Gollum). This can lead to the aforementioned texture popping.

If you're seeing a lot of texture popping, or getting a lot of PCI Express traffic, our recommendation is to turn the texture quality setting down one notch. We've done numerous tests to look at image quality, and particularly at 1080p and 1440p, it can be very difficult to see any difference between the maximum and high texture settings in modern games.

For better or worse, the RTX 4060 is the GPU that Nvidia has created for this generation. Many of the design decisions were probably made two years ago. Walking back from 12GB on the 3060 to 8GB on the 4060 is still a head scratcher, unfortunately.

The bottom line is this. If you're the type of gamer who needs to have every graphics option turned on, we're now at the point where a lot of games will exceed 8GB of VRAM use, even at 1080p. Most games won't have issues if you drop texture quality (and possibly shadow quality) down one notch, but it still varies by game. So if the thought of turning down any settings triggers your FOMO reflex, the RTX 4060 isn't the GPU for you.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


healthy Pro-teen
Nvidia's marketing be like: In Germany you could maybe save $132 over 4 years compared to the 3060 due to energy costs, if you play 20 hours/week. That is hilarious, and obviously the tiny 50-tier die is efficient lol.

tennis2
@JarredWaltonGPU I feel compelled to give you huge props for the stellar write-up!

Only made it halfway through the article, so I'll have to come back when I have time to finish, but wow... there's so much good stuff here. I hope everyone takes the time to fully read this article. I know that can get tricky once the "architecture launch" article is done for a generation. It's nice to be able to learn some new things from a "standard" GPU review even after I've been reading reviews from multiple sites of every GPU launched for the past 10+ years.

lmcnabney
The expectation for GPUs, going back decades, is that the new generation will provide performance in line with one or sometimes two tiers above it from the prior generation. That means the 4060 should perform somewhere between a 3060 Ti and 3070. It doesn't. It can't even match the 3060 Ti. This card should not receive a positive review because it fails to meet the minimum performance standard.

oofdragon
Utterly crap.. can't even match a 3060Ti, but of course since that amazing accomplishment goes to the failure 4060Ti. Meanwhile anyone can get a 6700XT with 12GB VRAM for half the price. FAIL FAIL FAIL FAIL FAIL FAIL FAIL

Elusive Ruse
Thanks for the review, however your test suite is outdated. You need to consider bringing a host of newer releases.

DSzymborski
oofdragon said: Utterly crap.. can't even match a 3060Ti, but of course since that amazing accomplishment goes to the failure 4060Ti. Meanwhile anyone can get a 6700XT with 12GB VRAM for half the price. FAIL FAIL FAIL FAIL FAIL FAIL FAIL

You're comparing a used GPU to a new GPU. That increased risk is a fundamental difference which has to be addressed in any comparison.

Elusive Ruse
DSzymborski said: You're comparing a used GPU to a new GPU. That increased risk is a fundamental difference which has to be addressed in any comparison.

You can get a new one around $310-$320.

DSzymborski
Elusive Ruse said: You can get a new one around $310-$320

He's literally quoting $200 above as his point of comparison. That's a used card.

randyh121
oofdragon said: Utterly crap.. can't even match a 3060Ti, but of course since that amazing accomplishment goes to the failure 4060Ti. Meanwhile anyone can get a 6700XT with 12GB VRAM for half the price. FAIL FAIL FAIL FAIL FAIL FAIL FAIL

lol the 6700XT is nearly $500 and the 4060 runs better
keep lying to yourself amd-cope