OpenGL 3 & DirectX 11: The War Is Over

Compute Shader And Texture Compression

We mentioned this open secret in the conclusion of our article on CUDA. Microsoft wasn’t about to let the GPGPU market get away, and it now has its own language for using the GPU to crunch tasks other than drawing pretty pictures. And guess what? The model it chose, like OpenCL, appears to be quite similar to CUDA, confirming the clarity of Nvidia’s vision. The advantage over the Nvidia solution lies in portability: a Compute Shader will work on an Nvidia or ATI GPU and on the future Larrabee, and it integrates better with Direct3D, even if CUDA does already have a certain amount of support for it. But we won’t spend any more time on this subject, huge as it is. Instead, we’ll look at all this in more detail in a few months with a story on OpenCL and Compute Shaders.

Improved Texture Compression

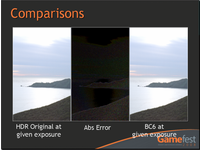

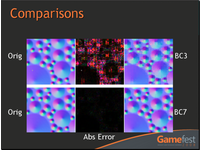

First included with DirectX 6 ten years ago, DXTC texture compression quickly spread to GPUs and has been used massively by developers ever since. Admittedly, the technology developed by S3 Graphics was effective, and the hardware cost was modest, which no doubt explains its success. But needs have changed. DXTC wasn’t designed with compressing HDR image sources or normal maps in mind. So Direct3D’s goal was twofold: enabling compression of HDR images and limiting the “blockiness” of traditional DXTC modes. To do that, Microsoft introduced two new modes: BC6 for HDR images and BC7 for higher-quality compression of LDR images.
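To make the “blockiness” concrete, here is a minimal sketch of how one block of the simplest DXTC mode (DXT1, called BC1 since Direct3D 10) can be decoded. The article does not describe the format, so the layout used here is an assumption taken from the commonly documented one: 8 bytes per 4x4 block, holding two RGB565 endpoint colors and sixteen 2-bit palette indices. The function names are illustrative, and real hardware may round the interpolated colors slightly differently.

```python
import struct


def rgb565_to_rgb888(c):
    """Expand a 16-bit RGB565 color to an 8-bit-per-channel tuple."""
    r = (c >> 11) & 0x1F
    g = (c >> 5) & 0x3F
    b = c & 0x1F
    # Replicate the high bits into the low bits so 0x1F maps to 255.
    return ((r << 3) | (r >> 2), (g << 2) | (g >> 4), (b << 3) | (b >> 2))


def decode_bc1_block(block):
    """Decode one 8-byte DXT1/BC1 block into 4 rows of 4 RGB tuples.

    Assumed layout: two little-endian RGB565 endpoints followed by
    32 bits of 2-bit indices, lowest bits first, row by row.
    """
    if len(block) != 8:
        raise ValueError("a BC1 block is exactly 8 bytes")
    c0, c1, bits = struct.unpack("<HHI", block)
    p0 = rgb565_to_rgb888(c0)
    p1 = rgb565_to_rgb888(c1)
    if c0 > c1:
        # Four-color mode: two extra colors at 1/3 and 2/3 between endpoints.
        p2 = tuple((2 * a + b) // 3 for a, b in zip(p0, p1))
        p3 = tuple((a + 2 * b) // 3 for a, b in zip(p0, p1))
    else:
        # Three-color mode: one midpoint; index 3 is (transparent) black.
        p2 = tuple((a + b) // 2 for a, b in zip(p0, p1))
        p3 = (0, 0, 0)
    palette = (p0, p1, p2, p3)
    rows = []
    for y in range(4):
        row = []
        for x in range(4):
            index = (bits >> (2 * (4 * y + x))) & 0x3
            row.append(palette[index])
        rows.append(row)
    return rows


# Example: pure red and pure blue endpoints; the first row uses all four
# palette entries, every other pixel uses the first endpoint.
example = struct.pack("<HHI", 0xF800, 0x001F, 0xE4)
for row in decode_bc1_block(example):
    print(row)
```

Under this layout, all sixteen pixels of a block must pick from at most four colors lying on a single line between two endpoints. That is cheap to decode in hardware, but smooth gradients, HDR ranges and normal maps do not fit that constraint well, which is the limitation the new modes are meant to address.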


Gatekeeper_Guy Cool, but it will be a few years before we see a DX11 graphics card on the market.

stridervm Sadly, I agree with the author's opinions. Not simply for, but because it still gives away the idea that PC gaming cannot be considered serious..... Unless you're using Windows, which is proprietary, the only viable alternative cannot be used because of the fear of losing compatibility. I just hope this can be remedied before Microsoft becomes.... Unreasonable and becomes power hungry..... If it isn't already. Look at how much Windows systems cost now compared to the alternative.

johnbilicki DirectX 11 will be available on Windows 7 and Vista? Great news indeed! Normal noobs will be able to own super noobs who are standing around looking at over-detailed shrubs.

As for real gamers, we'll stick with XP until either Microsoft gets smart and clones XP and only adds on Aero or OpenGL gets its act together and Linux becomes a viable gaming platform. It would be nice if it became a viable anything-other-than-a-web-server platform though. Linux gurus, feel free to let us know in sixty years that I won't have to explain to my grandmother how to type console commands to install a copy of Opera.

OpenGL can go screw backwards compatibility, look what it's done to (competent) web designers who are stuck dealing with Internet Explorer.

All the bad news about DirectX, OpenGL, and DRM makes me wonder if these companies want us to pirate the hell out of everything. At this rate "next generation" consoles might actually become the next generation consoles!

jimmysmitty Gatekeeper_Guy wrote: "Cool, but it will be a few years before we see a DX11 graphics card on the market."

I thought the article said that DX11 is supposed to be compatible with previous gen hardware.

I know the Geometry Shader with Tessellation is already in all of the ATI Radeon HD GPUs so that's one thing it will support.

But no SP 5.0 support. I have heard that Intel's GPU, Larrabee, will support DX11. So that would mean late 2009/2010 will have at least one and that should mean that ATI's HD5K series and nVidia's next step should include support if they were smart and jumped on the wagon early.

OpenGL may not have gotten the changes it needed to compete with DirectX as a gaming graphics API. But then you have people like Tim Sweeney telling us that graphics APIs are not going to be relevant that much longer (http://arstechnica.com/articles/paedia/gpu-sweeney-interview.ars).

Direct3D 10 has changed very little in the industry so far; predictably, only a very small number of games use it. And those that do can do most of it on Direct3D 9 as well. Maybe MS has learned by now that releasing a new API on only the latest platform is a huge mistake, but it will still be a while before people adopt their new API. And if Tim Sweeney's predictions come true, it will likely not happen at all.

martel80 johnbilicki wrote: "Linux gurus, feel free to let us know in sixty years that I won't have to explain to my grandmother how to type console commands to install a copy of Opera."

I have been able to "accidentally" (because I'm no Linux guru, you know) install Opera through the Synaptic Package Manager on Ubuntu. So please, stop talking nonsense. :)

The process was a bit different but overall faster than under Windows.

dx 10 may not appear major but for devs it actually is.. no more checking cap bits.. that is a big improvement. dx10 is a lot more strict in terms of what the drivers should do and that's good. doing away with fixed functions is also great

however hardware tessellation is huge.. dx 11 also allows for hardware voxel rendering / raymarching through compute shaders and a lot of other stuff.. as apis become more general i'm sure the pace of new apis will slow down, but that's not an indicator that pc gaming is dying (uninformed people have claimed that the pc is dead since the ps1)

as for the windows/other platforms discussion, it is not the fault of microsoft that there is no viable alternative on other platforms. if someone chose to compete with microsoft, they could. but no one seems willing. what really should be done is a port/implementation of dx11 in open source..

however, in the cutthroat business of game engines and gpu drivers, i seriously doubt that open source systems will ever be at the forefront of gaming

phantom93 DX11 is compatible with DX10 hardware. It should work when it is released unless they have bugs.

spaztic7 From my understanding, all HD4800 series cards are DX11 compatible... and the HD4800 line is ray tracing compatible at ray tracing's frame cap. I do not know about the 4600 line, but I don’t see why they wouldn’t be.

GAZZOO ow PC gaming is dying all right. The major game companies are starting to squeeze PC games out of their production lists, using the excuse that they are losing money through piracy, but what they are really doing is cutting out one version and forcing PC gamers to evolve into console players.

And from this article I get the impression that Microsoft has a hand in it as well, by making sure that console games end up running better than or as well as PC games.

I think if the original OpenGL had been allowed to proceed years ago, and if the follow-up had been taken and there hadn't been any sabotage happening, then the PC and its performance with multiple CPUs and GPUs, and the technology evolving with the programmers and proper APIs in this area, would have left the console market in the shade. But this way Microsoft is eliminating other similar competition, Apple.

To cut to the chase, Apple and OpenGL are getting the Microsoft squeeze, and who has an interest in a console product? :-)

I guess I might be one of the old dinosaurs, but I am still a PC gamer through and through even though I am grampar foda. I love building PC units and playing. Well, I haven't been to a net game in a couple of years. Pizza and beer, he he heee. But I'll be buggered if I will lie down and die because of big business.

Gazza