The OCZ RevoDrive 3 X2 Preview: Second-Gen SandForce Goes PCIe

What's Important: Steady State Performance

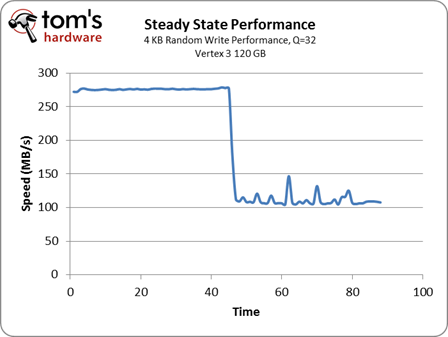

Steady State Performance

SSD manufacturers prefer that we benchmark drives as they behave fresh out of the box, because solid-state drives slow down once you start using them. Given enough time, though, an SSD enters a steady state, where performance reflects more consistent long-term use. In general, reads are fastest, writes are slower, and erases are the slowest of all.
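To make the fresh-versus-steady-state distinction concrete, here is a deliberately simplified toy model written as a Python sketch. It assumes a drive that ships with a pool of pre-erased blocks and must erase a block before every write once that pool is used up. The latency constants are hypothetical placeholders, and real controllers hide much of this cost with garbage collection and over-provisioning.

```python
# Toy model (assumption, not how any real controller works): a drive with a
# fixed pool of pre-erased blocks. While erased blocks remain, a write costs
# only a program operation; once the pool is exhausted, each write must first
# wait for an erase. The latencies are illustrative placeholders.
PROGRAM_US = 900   # hypothetical per-block program time, microseconds
ERASE_US = 3000    # hypothetical per-block erase time, microseconds


def write_latencies(num_writes: int, erased_blocks: int) -> list[int]:
    """Return the simulated latency (in microseconds) of each block write."""
    latencies = []
    free = erased_blocks
    for _ in range(num_writes):
        if free > 0:
            # Fresh-out-of-the-box case: a pre-erased block is available.
            free -= 1
            latencies.append(PROGRAM_US)
        else:
            # Steady-state case: erase a block first, then program it.
            latencies.append(ERASE_US + PROGRAM_US)
    return latencies


if __name__ == "__main__":
    lat = write_latencies(num_writes=10, erased_blocks=4)
    fresh = sum(lat[:4]) / 4
    steady = sum(lat[4:]) / len(lat[4:])
    print(f"fresh avg: {fresh:.0f} us, steady-state avg: {steady:.0f} us")
```

In this model the first four writes average 900 us and the rest 3900 us; the point is only the shape of the slowdown, not the numbers.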

We want to move away from benchmarking SSDs fresh out of the box because you only really get that performance for a limited time. After that, you end up with "steady state" performance until you perform a secure erase and start all over again. Now, I don't know about you, but we don't reinstall Windows 7 on our workstations every week. So, while performance right out of the box is interesting to look at, it's really not that important in the grand scheme of things. Steady state performance is what ultimately matters.

While this is a new move for us, IT professionals have long used this approach to evaluate SSDs. That's why the Storage Networking Industry Association (SNIA), a consortium of producers and consumers of storage products, recommends benchmarking steady state performance. It's really the only way to examine an SSD's true performance in a way that represents what you'll actually see over time.

There are multiple ways to get an SSD to its steady state, but we're going to use a proprietary storage benchmark from Intel. This is a trace-based benchmark, which means that we replay an I/O recording to measure relative performance. Our trace, which we're dubbing Storage Bench v1.0, comes from a two-week recording of my own personal machine, and it captures the level of I/O that you would see during the first two weeks of setting up a computer.

Installation includes:

- Games like Call of Duty: Modern Warfare 2, Crysis 2, and Civilization V

- Microsoft Office 2010 Professional Plus

- Firefox

- VMware

- Adobe Photoshop CS5

- Various Canon and HP Printer Utilities

- LCD Calibration Tools: ColorEyes, i1Match

- General Utility Software: WinZip, Adobe Acrobat Reader, WinRAR, Skype

- Development Tools: Android SDK, iOS SDK, and Bloodshed

- Multimedia Software: iTunes, VLC

The I/O workload is somewhat moderate. I read the news, browse the Web for information, read several white papers, occasionally compile code, run gaming benchmarks, and calibrate monitors. On a daily basis, I edit photos, upload them to our corporate server, write articles in Word, and perform research across multiple Firefox windows.
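A trace like this one is, at heart, a long log of individual I/O requests. The sketch below shows how summary statistics of the kind listed in the Storage Bench v1.0 table could be tallied from such a log. The `IORecord` layout and the `summarize` helper are hypothetical illustrations; they do not describe the actual format of Intel's trace files.

```python
from dataclasses import dataclass


@dataclass
class IORecord:
    # Hypothetical record layout, for illustration only.
    timestamp_s: float   # when the request was issued, in seconds
    op: str              # "R" for read, "W" for write
    offset: int          # byte offset on the device
    length: int          # transfer size in bytes
    queue_depth: int     # outstanding requests when this one was issued


def summarize(trace: list[IORecord]) -> dict:
    """Tally read/write counts, bytes moved, and the maximum queue depth."""
    stats = {"reads": 0, "writes": 0, "bytes_read": 0,
             "bytes_written": 0, "max_qd": 0}
    for rec in trace:
        if rec.op == "R":
            stats["reads"] += 1
            stats["bytes_read"] += rec.length
        elif rec.op == "W":
            stats["writes"] += 1
            stats["bytes_written"] += rec.length
        stats["max_qd"] = max(stats["max_qd"], rec.queue_depth)
    return stats


if __name__ == "__main__":
    sample = [
        IORecord(0.0, "R", 0, 4096, 1),
        IORecord(0.1, "W", 8192, 65536, 3),
        IORecord(0.2, "R", 4096, 4096, 2),
    ]
    print(summarize(sample))
```

Replaying the same records against a drive, rather than just counting them, is what turns a log like this into a benchmark.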


The following are stats on the two-week trace of my personal workstation:

| Statistic | Storage Bench v1.0 |
|---|---|
| Read Operations | 7,408,938 |
| Write Operations | 3,061,162 |
| Data Read | 84.27 GB |
| Data Written | 142.19 GB |
| Max Queue Depth | 452 |
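A few derived figures help read the table. The sketch below divides the totals by the operation counts, assuming "GB" means 10^9 bytes (the table does not say whether decimal or binary units are used). Under that assumption, the average write is roughly four times larger than the average read, even though there are about 2.4 reads for every write.

```python
# Totals copied from the Storage Bench v1.0 table.
READ_OPS = 7_408_938
WRITE_OPS = 3_061_162
DATA_READ_GB = 84.27
DATA_WRITTEN_GB = 142.19
GB = 10**9  # assumption: decimal gigabytes

avg_read_kb = DATA_READ_GB * GB / READ_OPS / 1000
avg_write_kb = DATA_WRITTEN_GB * GB / WRITE_OPS / 1000
op_ratio = READ_OPS / WRITE_OPS               # read operations per write operation
byte_ratio = DATA_WRITTEN_GB / DATA_READ_GB   # bytes written per byte read

print(f"average read size:  {avg_read_kb:.1f} KB")    # ≈ 11.4 KB
print(f"average write size: {avg_write_kb:.1f} KB")   # ≈ 46.4 KB
print(f"reads per write:    {op_ratio:.2f}")          # ≈ 2.42
print(f"written per read:   {byte_ratio:.2f}")        # ≈ 1.69
```

If the table's GB are binary (2^30 bytes), each average size grows by about 7%, but the ratios are unchanged.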

According to the stats, I'm writing more data than I'm reading over the course of two weeks. However, this needs to be put into context. Remember that the trace includes the I/O activity of setting up the computer. Much of this data is one-touch: it's written once and isn't accessed repeatedly. If we exclude the first few hours of my trace, the amount of data written drops by over 50%. So on a day-to-day basis, my usage pattern evens out to a fairly balanced mix of reads and writes (~8-10 GB/day). That seems pretty typical for the average desktop user, though we'd expect the mix to favor reads for folks who consume streaming media in larger amounts and more often.
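The per-day arithmetic behind the "fairly balanced" observation can be sketched as follows. It uses only the table's totals and the 14-day span, and it treats the "over 50%" reduction as exactly 50%, so the write figure is an upper bound. It also assumes, for simplicity, that reads are unaffected by excluding the setup hours; the trace data itself is not broken down that way here.

```python
TRACE_DAYS = 14
DATA_READ_GB = 84.27
DATA_WRITTEN_GB = 142.19

# Whole trace, including the initial setup.
reads_per_day = DATA_READ_GB / TRACE_DAYS
writes_per_day = DATA_WRITTEN_GB / TRACE_DAYS

# Excluding setup cuts data written by more than half, so what remains is at
# most 50% of the total (assumption: reads and day count left unchanged).
steady_writes_per_day_max = DATA_WRITTEN_GB * 0.5 / TRACE_DAYS

print(f"reads/day (whole trace):   {reads_per_day:.2f} GB")              # ≈ 6.02
print(f"writes/day (whole trace):  {writes_per_day:.2f} GB")             # ≈ 10.16
print(f"writes/day (after setup): <{steady_writes_per_day_max:.2f} GB")  # ≈ 5.08
```

With setup included, writes outpace reads; once setup is excluded, daily writes fall to the same order as daily reads.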

On a separate note, we specifically avoided creating an artificially large trace by installing lots of software over the course of a few hours, because that doesn't capture real-world use. As Intel points out, traces of this nature are largely contrived because they don't account for idle garbage collection, which has a tangible effect on performance (more on that later).
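One way to quantify what a compressed trace loses is to add up the idle gaps between requests, since those are the windows in which a drive can run background garbage collection. The helper below is a hypothetical sketch; the 1-second threshold for counting a gap as "idle" is an arbitrary assumption, not a figure from Intel or any drive vendor.

```python
def idle_time(timestamps_s: list[float], threshold_s: float = 1.0) -> float:
    """Sum the gaps between consecutive I/O timestamps longer than threshold_s.

    These gaps approximate the idle windows a drive could use for background
    garbage collection. A trace recorded by cramming many installs into a few
    hours (or replayed back-to-back) contains little or none of this time.
    """
    ordered = sorted(timestamps_s)
    gaps = (later - earlier for earlier, later in zip(ordered, ordered[1:]))
    return sum(gap for gap in gaps if gap > threshold_s)


if __name__ == "__main__":
    # Hypothetical timestamps: three bursts of I/O separated by pauses.
    stamps = [0.0, 0.5, 1.0, 6.0, 6.5, 66.5]
    print(f"idle seconds: {idle_time(stamps):.1f}")
```

Here the bursts are separated by 5 s and 60 s pauses, so 65 s of the trace is idle time a drive could spend cleaning up.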


warmon6: "It's not a business-class product. It's for the power user who is able to tax it using the right workload. If you're not one of those folks, the RevoDrive 3 X2 is seriously overkill."

OVERKILL?!?!

Nothing is overkill in the computer arena in terms of performance. :p

Just the price can be overkill. o.0

acku: Santa is going to need a bigger expense account... :)

Personally, I'm hoping that OCZ adds TRIM prior to September.

Cheers,

Andrew Ku

TomsHardware.com

greenrider02: I saw a defense of the Vertex 3's occasional low numbers, but no mention of the solid (and sometimes better) performance that the cheaper and more miserly Crucial m4 showed throughout your tests.

Perhaps you have some bias towards the Vertex 3 that needs reconsideration?

Other than that, $700 seems like a fair price considering the performance difference, especially if it's utilized properly, for instance, as a high-traffic web/corporate server.

acku (replying to greenrider02):

If you read the first page, then you know that I give a nod to the Vertex 3 as the fastest MLC-based 2.5" SSD. I consider that plenty of love. :)

We'll discuss the lower-capacity m4s in another article. FYI, I suggest that you read pages 5 and 6. We are not testing FOB (fresh out of the box). We are testing steady state. That's part of the reason the SandForce-based drives behave differently with incompressible data.
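For readers wondering why incompressible data matters here: SandForce controllers compress data before writing it to flash, so compressible data means fewer NAND writes. SandForce's algorithm is proprietary; the sketch below uses Python's standard `zlib` purely as a stand-in to show how differently repetitive and random data compress.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more compressible)."""
    return len(zlib.compress(data)) / len(data)


SIZE = 1 << 20  # 1 MiB samples

# Highly repetitive data, loosely like text or zero-filled regions.
repetitive = b"steady state " * (SIZE // 13)
# Random bytes, loosely like already-compressed media or encrypted files.
random_bytes = os.urandom(SIZE)

print(f"repetitive data: {compression_ratio(repetitive):.3f}")
print(f"random data:     {compression_ratio(random_bytes):.3f}")
```

The repetitive sample shrinks to a tiny fraction of its size, while the random sample does not shrink at all; a compressing controller gets the same lopsided benefit.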

On your second point, this is in no way targeted toward an enterprise environment (that's what Z-Drives are for). There is no redundancy in the array, so if a single SF controller fails, the whole card is a dud. You can add higher-level redundancy, but enterprise customers have so far been nervous about SandForce products. Plus, there's a general preference for hardware over software redundancy. (That's them talking, not me.) Overall, this makes it unacceptable for any enterprise-class workload.
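The redundancy point can be put in numbers. In a striped array with no redundancy, the card fails if any one controller fails. Assuming independent failures with the same probability p per controller over some period, the sketch below computes 1 - (1 - p)^n. The values p = 0.01 and n = 4 are purely illustrative, not measured failure rates or a confirmed controller count for this card.

```python
def array_failure_probability(p_controller: float, n_controllers: int) -> float:
    """Probability that a non-redundant striped array fails.

    Assumes each controller fails independently with probability
    p_controller over the same period; any single failure kills the array.
    """
    return 1 - (1 - p_controller) ** n_controllers


if __name__ == "__main__":
    # Illustrative values only.
    for n in (1, 2, 4):
        print(f"{n} controller(s): {array_failure_probability(0.01, n):.4f}")
```

Each added controller compounds the risk, which is why striping alone is a hard sell for enterprise buyers who expect redundancy.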