Investigation: Is Your SSD More Reliable Than A Hard Drive?

Decrypting Failure Statistics: ZT Systems (~155 000 SSDs)

Hard drives and SSDs fail for different reasons, a consequence of their respective architectures. When we think about hard drive health, we gravitate to the fact that these drives rely on mechanical, moving parts that, although built to very tight tolerances, are destined to wear out over time.

We also know that SSDs don't have those issues. Their solid-state nature alleviates the fear of damage due to crashing heads or a worn motor. But because SSDs are virtualized (in that you can't physically map out a static LBA space as you can on a hard drive), other variables affect reliability. Firmware is the most significant, and we see its impact almost every time an SSD problem is reported.

Over the past three years, Intel's SSD-related bugs have all been stomped out with newer firmware. Crucial's issues with link power management on the m4 were solved with new firmware. And we've seen SandForce's most well-known partner, OCZ, address a number of customer complaints with various firmware versions. In fact, the SandForce situation is unique. Because drive vendors are able to tweak the controller's firmware as a means of differentiation, SandForce-based drives from any given company could conceivably have different issues. That certainly complicates the question of reliability (or at least consistency).

Specific issues aside, this discussion feeds into our point that there's a need to compare failure numbers across brands. The problem is that each vendor, reseller, and customer does the math slightly differently, making a true comparison almost impossible.

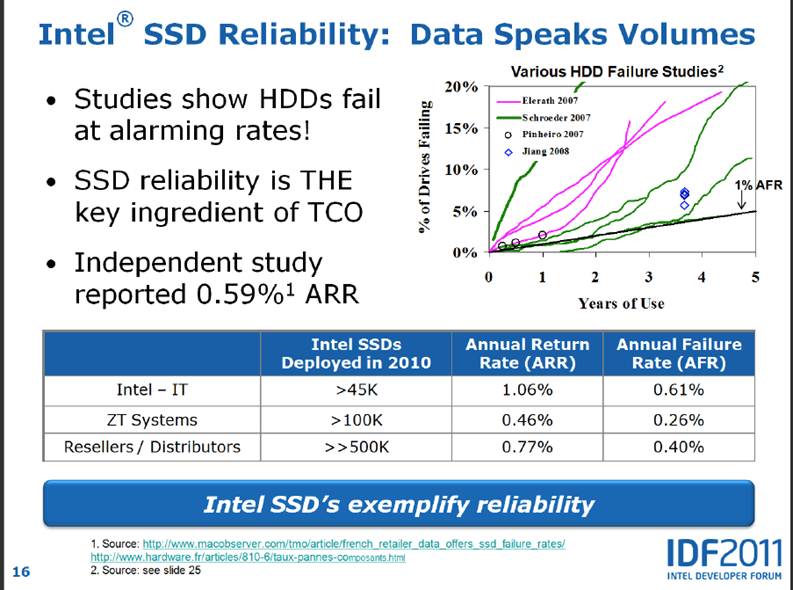

For example, we were extremely impressed by Intel's reliability presentation at IDF 2011. But in discussions with ZT Systems, the company Intel cited, we discovered that the 0.26% AFR figure doesn't actually take age into account and only covers validated errors. Frankly, if you're an IT manager, you care about unvalidated errors, too. There are situations where you send a defective product back and the manufacturer claims there's no error. This doesn't mean that the drive is problem-free, because it could be suffering from a configuration- or application-oriented issue. We've seen enough real-world examples to know that this continues to be a problem.

Unvalidated error rates are typically 2x to 3x higher than validated ones. Indeed, ZT Systems claims an unvalidated failure rate of 0.43% for 155 000 X25-Ms, but again this figure isn't normalized for age, as drives were deployed in groups. According to the company's CTO, Casey Cerretani, the final numbers are still being tabulated, but early estimates peg the unvalidated AFR for the first year closer to 0.7%. Of course, this still doesn't take long-term reliability into account, which is one reason it's difficult to fairly compare solid-state and hard disk drives.
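To see why a figure that isn't normalized for age can understate an annualized rate, here is a minimal Python sketch. The cohort sizes, service times, and failure counts are invented purely for illustration and are not ZT Systems' data; the `Cohort` class and both function names are our own hypothetical helpers. It compares a simple "failures divided by drives shipped" rate with a rate computed per drive-year of service.

```python
from dataclasses import dataclass


@dataclass
class Cohort:
    """One deployment batch (hypothetical numbers, not vendor data)."""
    drives: int               # drives deployed in this batch
    months_in_service: float  # how long the batch has been running
    failures: int             # failures observed so far in this batch


def cumulative_failure_rate(cohorts):
    """Failures divided by drives deployed, ignoring how long each ran."""
    total_drives = sum(c.drives for c in cohorts)
    total_failures = sum(c.failures for c in cohorts)
    return total_failures / total_drives


def annualized_failure_rate(cohorts):
    """Failures divided by accumulated drive-years of service."""
    drive_years = sum(c.drives * c.months_in_service / 12 for c in cohorts)
    total_failures = sum(c.failures for c in cohorts)
    return total_failures / drive_years


# Three batches deployed at different times (illustrative values only).
cohorts = [
    Cohort(drives=1000, months_in_service=12, failures=6),
    Cohort(drives=1000, months_in_service=6, failures=3),
    Cohort(drives=1000, months_in_service=3, failures=1),
]

print(f"Not age-normalized: {cumulative_failure_rate(cohorts):.2%}")
print(f"Per drive-year:     {annualized_failure_rate(cohorts):.2%}")
```

With these made-up batches, the rate that ignores age comes out to about 0.33%, while the per-drive-year rate is about 0.57%. The gap exists only because the younger batches have had less time to fail, which is the same effect at work when drives are deployed in groups.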

The bottom line is that we now know different reporting methods easily affect the perception of reliability when it comes to digesting vendor data. Moreover, only time will tell if the SSD reliability story will hold up against hard drives. And now you know why they're not as directly comparable as some of the information presented might suggest.


hardcore_gamer Endurance of floating gate transistor used in flash memories is low. The gate oxide wears out due to the tunnelling of electrons across it. Hopefully phase change memory can change things around since it offers 10^6 times more endurance for technology nodes

acku, quoting hardcore_gamer: "Endurance of floating gate transistor used in flash memories is low. The gate oxide wears out due to the tunnelling of electrons across it. Hopefully phase change memory can change things around since it offers 10^6 times more endurance for technology nodes"

As we explained in the article, write endurance is a spec'ed failure. That won't happen in the first year, even at enterprise level use. That has nothing to do with our data. We're interested in random failures. The stuff people have been complaining about... BSODs with OCZ drives, LPM stuff with m4s, the SSD 320 problem that makes capacity disappear... etc... Mostly "soft" errors. Any hard error that occurs is subject to the "defective parts per million" problem that any electrical component also suffers from.

Cheers,

Andrew Ku

TomsHardware.com

slicedtoad hacker groups like lulsec should do something useful and get this kind of internal data from major companies.

jobz000 Great article. Personally, I find myself spending more and more time on a smartphone and/or tablet, so I feel ambivalent about spending so much on a ssd so I can boot 1 sec faster.

gpm23 You guys do the most comprehensive research I have ever seen. If I ever have a question about anything computer related, this is the first place I go to. Without a doubt the most knowledgeable site out there. Excellent article and keep up the good work.

acku, quoting slicedtoad: "hacker groups like lulsec should do something useful and get this kind of internal data from major companies."

All of the data is so fragmented... I doubt that would help. You still need to take a fine toothcomb to figure out how the numbers were calculated.

Quoting gpm23: "You guys do the most comprehensive research I have ever seen. If I ever have a question about anything computer related, this is the first place I go to. Without a doubt the most knowledgeable site out there. Excellent article and keep up the good work."

Thank you. I personally love these type of articles.. very reminiscent of academia. :)

Cheers,

Andrew Ku

TomsHardware.com

K-zon I will say that i didn't read the article word for word. But of it seems that when someone would change over from hard drive to SSD, those numbers might be of interest.

Of the sealed issue of return, if by the time you check that you had been using something different and something said something else different, what you bought that was different might not be of useful use of the same thing.

Otherwise just ideas of working with more are hard said for what not to be using that was used before. Yes?

But for a lot of interest into it maybe is still that of rather for the performance is there anything of actual use of it, yes?

To say the smaller amounts of information lost to say for the use of SSDs if so, makes a difference as probably are found. But of Writing order in which i think they might work with at times given them the benefit of use for it. Since they seem to be faster. Or are.

Temperature doesn't seem to be much help for many things are times for some reason. For ideas of SSDs, finding probably ones that are of use that reduce the issues is hard from what was in use before.

When things get better for use of products is hard placed maybe.

But to say there are issues is speculative, yes? Especially me not reading the whole article.

But of investments and use of say "means" an idea of waste and less use for it, even if its on lesser note , is waste. In many senses to say of it though.

Otherwise some ideas, within computing may be better of use with the drives to say. Of what, who knows...

Otherwise again, it will be more of operation place of instances of use. Which i think will fall into order of access with storage, rather information is grouped or not grouped to say as well.

But still. they should be usually useful without too many issues, but still maybe ideas of timing without some places not used as much in some ways.

cangelini To the contrary! We noticed that readers were looking to see OWC's drives in our round-ups. I made sure they were invited to our most recent 120 GB SF-2200-based story, and they chose not to participate (this after their rep jumped on the public forums to ask why OWC wasn't being covered; go figure).

They will continue to receive invites for our stories, and hopefully we can do more with OWC in the future!

Best,

Chris Angelini

ikyung Once you go SSD, you can't go back. I jumped on the SSD wagon about a year ago and I just can't seem to go back to HDD computers