Investigation: Is Your SSD More Reliable Than A Hard Drive?

What Do We Know About Storage?

SSDs are a relatively new technology (at least compared to hard drives, which are almost 60 years old). It’s understandable that we would compare the new kid on the block against the tried and true. But what do we really know about hard drives? Two important studies shed some light. Back in 2007, Google published a study on the reliability of 100 000 consumer PATA and SATA drives used in its data center. Similarly, Dr. Bianca Schroeder and adviser Dr. Garth Gibson calculated the replacement rates of over 100 000 drives used at some of the largest national labs. The difference is that their study also covers enterprise SCSI, SATA, and Fibre Channel drives.

If you haven’t read either paper, we highly recommend at least reading the second study. It won best paper at the File and Storage Technologies (FAST ’07) conference. For those not interested in poring over academic papers, we’ll summarize the key findings here.

MTTF Rating

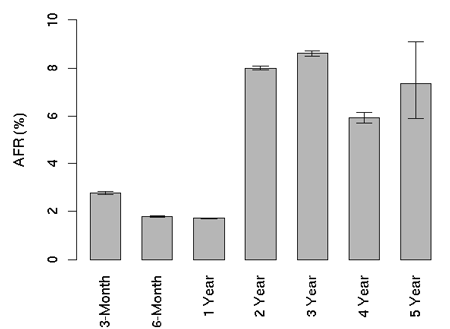

You remember what MTBF means (here's a hint: we covered it on page four of OCZ's Vertex 3: Second-Generation SandForce For The Masses), right? Let’s use the Seagate Barracuda 7200.7 as an example. It has a 600 000-hour MTBF rating. That is a statement about a large population, not about any single drive: it means one failure for every 600 000 hours of cumulative operation. Assuming failures are evenly distributed, a population of 600 000 of these drives would see roughly one failure per hour. We can convert this to an annualized failure rate (AFR) of 1.44%.
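To make the MTBF-to-AFR conversion concrete, here is a minimal Python sketch. It assumes a constant failure rate (the exponential model behind this kind of datasheet arithmetic) and a drive powered on all 8 760 hours of the year; the function names and the fleet size are our own, for illustration only. Under those assumptions, a 600 000-hour MTBF works out to about 1.45% per year, which matches the 1.44% figure above to within rounding.

```python
import math

HOURS_PER_YEAR = 8760  # 24 h x 365 days; assumes the drive is powered on all year


def afr_from_mtbf(mtbf_hours: float) -> float:
    """Annualized failure rate implied by an MTBF, assuming a constant failure rate."""
    return 1.0 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


def afr_linear(mtbf_hours: float) -> float:
    """First-order approximation, close enough when the MTBF spans many years."""
    return HOURS_PER_YEAR / mtbf_hours


MTBF = 600_000   # Seagate Barracuda 7200.7 rating from the text
FLEET = 600_000  # hypothetical population size

print(f"failures per hour across the fleet: {FLEET / MTBF:.1f}")  # 1.0
print(f"AFR, exponential model: {afr_from_mtbf(MTBF):.2%}")        # 1.45%
print(f"AFR, linear approximation: {afr_linear(MTBF):.2%}")        # 1.46%
```

The linear version simply divides hours per year by the MTBF; the exponential version accounts for drives that have already failed, which only matters once the AFR gets large.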

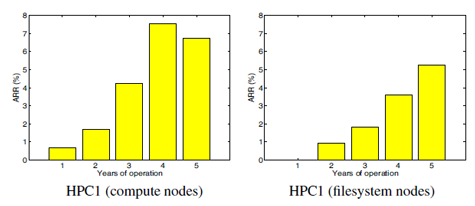

But that’s not what Google or Dr. Schroeder found. Part of the reason is that a failure, as a vendor defines it, does not necessarily equal a disk replacement. That is why Dr. Schroeder measured the annualized replacement rate (ARR), which is based on the number of disks actually replaced according to service logs.

While the datasheet AFRs are between 0.58% and 0.88%, the observed ARRs (annualized replacement rates) range from 0.5% to as high as 13.5%. That is, the observed ARRs by data set and type, are by up to a factor of 15 higher than datasheet AFRs.
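ARR boils down to simple arithmetic on service-log counts: replacements divided by drive-years of operation. The sketch below assumes a fixed population that stays in service for the whole period (real logs have drives entering and leaving service), and the drive and replacement counts are hypothetical. It also divides the highest observed ARR by the highest datasheet AFR quoted above, which lands close to the factor of 15 cited.

```python
def annualized_replacement_rate(replacements: int, drives: int, years: float) -> float:
    """Replacements per drive-year, assuming a fixed population in service for `years`."""
    drive_years = drives * years
    return replacements / drive_years


# Hypothetical service log: 3 000 drives in service for 2 years, 180 logged replacements.
arr = annualized_replacement_rate(180, 3_000, 2.0)
print(f"ARR: {arr:.1%}")  # 3.0%

# Highest observed ARR (13.5%) vs. highest datasheet AFR (0.88%) from the quote.
ratio = 0.135 / 0.0088
print(f"observed / datasheet: {ratio:.1f}x")  # 15.3x
```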

Drive makers define failures differently than we do, and it’s no surprise that their definition overstates drive reliability. Typically, an MTBF rating is based on accelerated life testing, return unit data, or a pool of tested drives. Vendor return data continues to be highly suspect, though. As Google states, “we have observed… situations where a drive tester consistently ‘green lights’ a unit that invariably fails in the field.”


Drive Failure Over Time

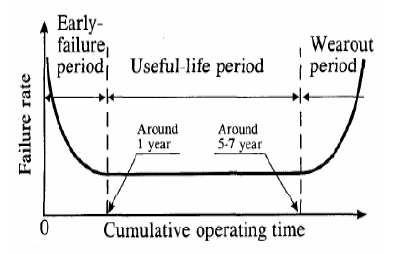

Most people assume that the failure rate of a hard drive follows a bathtub curve. Early on, many drives fail due to a phenomenon referred to as infant mortality. After that initial period, you expect failure rates to stay low. At the other end, there’s a steady rise as drives finally wear out. Neither study found that assumption to be true. Instead, both found that drive failure rates steadily increase with age.
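One common way to model the assumed bathtub curve, not taken from either study, is to add a falling Weibull hazard (infant mortality), a constant random-failure rate, and a rising Weibull hazard (wear-out). The Python sketch below contrasts that sum with a single rising hazard, which is closer to what the field data shows. All shape and scale parameters are hypothetical and chosen only to make the two shapes visible.

```python
HOURS_PER_YEAR = 8760


def weibull_hazard(t: float, shape: float, scale: float) -> float:
    """Instantaneous failure rate h(t) of a Weibull distribution (t > 0, in hours).

    shape < 1: falling rate (infant mortality); shape == 1: constant; shape > 1: rising (wear-out).
    """
    return (shape / scale) * (t / scale) ** (shape - 1)


def bathtub_hazard(t: float) -> float:
    # Hypothetical parameters: early-failure term + constant random term + wear-out term.
    infant = weibull_hazard(t, shape=0.5, scale=50_000)
    constant = 1 / 600_000  # flat rate, e.g. one implied by a datasheet MTBF
    wear_out = weibull_hazard(t, shape=5.0, scale=60_000)
    return infant + constant + wear_out


def rising_hazard(t: float) -> float:
    # A rate that simply climbs with age (hypothetical shape and scale).
    return weibull_hazard(t, shape=1.5, scale=200_000)


for years in (0.1, 1, 3, 5):
    t = years * HOURS_PER_YEAR
    print(f"{years:>4} yr: bathtub {bathtub_hazard(t):.2e}/h, rising {rising_hazard(t):.2e}/h")
```

With these parameters the bathtub rate falls over the first few years and then climbs again, while the single-Weibull rate rises from the start.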

Enterprise Drive Reliability

When you compare the two studies, you realize that the 1 000 000-hour MTBF Cheetah drive behaves much more like a drive with a datasheet MTBF of 300 000 hours. This means that “enterprise” and “consumer” drives have pretty much the same annualized failure rate, especially when you compare similar capacities. According to Val Bercovici, director of technical strategy at NetApp, "…how storage arrays handle the respective drive type failures is what continues to perpetuate the customer perception that more expensive drives should be more reliable. One of the storage industry’s dirty secrets is that most enterprise and consumer drives are made up of largely the same components. However, their external interfaces (FC, SCSI, SAS, or SATA), and most importantly their respective firmware design priorities/resulting goals play a huge role in determining enterprise versus consumer drive behavior in the real world."
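To put that gap in annual terms, this sketch applies the same constant-failure-rate assumption used for datasheet arithmetic (our assumption, not something either study prescribes) to convert both MTBF figures to AFRs, and then converts back to show the effective MTBF the field data implies.

```python
import math

HOURS_PER_YEAR = 8760


def afr_from_mtbf(mtbf_hours: float) -> float:
    """Annual failure probability for a constant failure rate."""
    return 1.0 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


def mtbf_from_afr(afr: float) -> float:
    """Inverse: the MTBF that would produce a given annual failure probability."""
    return -HOURS_PER_YEAR / math.log(1.0 - afr)


rated = afr_from_mtbf(1_000_000)  # Cheetah datasheet rating
field = afr_from_mtbf(300_000)    # what the field data resembles
print(f"rated AFR {rated:.2%}, field-like AFR {field:.2%}, {field / rated:.1f}x higher")
print(f"implied MTBF: {mtbf_from_afr(field):,.0f} hours")
```

Under this model, the rating corresponds to under 1% of drives failing per year, while the observed behavior corresponds to roughly 2.9%.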

Data Safety and RAID

Dr. Schroeder’s study covers enterprise drives used in large RAID systems at some of the biggest high-performance computing labs. Typically, we assume that data is safer in properly chosen RAID modes, but the study found something quite surprising.

The distribution of time between disk replacements exhibits decreasing hazard rates, that is, the expected remaining time until the next disk was replaced grows with the time it has been since the last disk replacement.

This means that the failure of one drive in an array increases the likelihood of another drive failure: the more time that has passed since the last failure, the longer you can expect to wait until the next one. Of course, this has implications for the RAID reconstruction process. After the first failure, you are four times more likely to see another drive fail within the same hour than a model of independent failures at a constant rate would predict. Within 10 hours, you are only two times more likely to experience a subsequent failure.
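To get a feel for what this means during a rebuild, the sketch below computes the chance that at least one surviving drive fails within a given window, assuming independent failures at a constant rate, and then crudely scales that probability by the four-times and two-times factors mentioned above. The array size, the 3% AFR, and the simple multiplication are all hypothetical; the study measured these factors from replacement logs rather than deriving them from a formula like this.

```python
import math

HOURS_PER_YEAR = 8760


def p_failure_in_window(afr: float, surviving_drives: int, window_hours: float) -> float:
    """P(at least one surviving drive fails within the window), assuming independent
    drives with a constant hourly failure rate derived from the AFR."""
    hourly_rate = -math.log(1.0 - afr) / HOURS_PER_YEAR
    return 1.0 - math.exp(-surviving_drives * hourly_rate * window_hours)


# Hypothetical 8-drive array after one failure: 7 survivors, 3% AFR each.
AFR, SURVIVORS = 0.03, 7

# Window length in hours -> "times more likely" factor quoted in the text.
for window, factor in [(1, 4.0), (10, 2.0)]:
    independent = p_failure_in_window(AFR, SURVIVORS, window)
    correlated = min(1.0, independent * factor)  # crude adjustment, not the study's model
    print(f"{window:>2} h: independent {independent:.4%}, x{factor:g} correlated {correlated:.4%}")
```

The absolute numbers are small for a single rebuild, but the point is the multiplier: correlated failures make the window right after the first failure the riskiest part of a rebuild.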

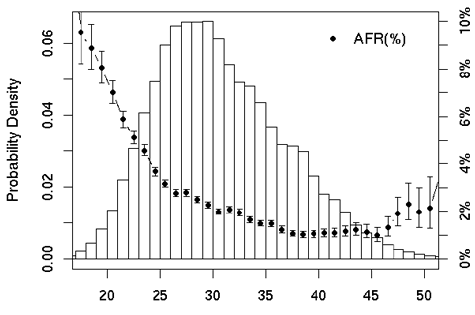

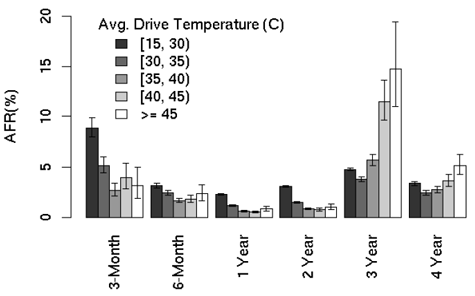

Temperature

One of the stranger conclusions comes from Google’s paper. The researchers took temperature readings from SMART—the self-monitoring, analysis, and reporting technology built into most hard drives—and they found that a higher operating temperature did not correlate with a higher failure rate. Temperature does seem to affect older drives, but the effect is minor.

Is SMART Really Smart?

The short answer is no. SMART was designed to catch disk errors early enough so that you can back up your data. But according to Google, more than one-third of all failed drives did not trigger an alert in SMART. This isn't a huge surprise, as many industry insiders have suspected this for years. It turns out that SMART is really optimized to catch mechanical failures. Much of a disk is still electronic, though. That's why behavioral and situational problems like power failure go unnoticed, while data integrity issues are caught. If you're relying on SMART to tell you of an impending failure, you need to plan for an additional layer of redundancy if you want to ensure the safety of your data.

Now let's see how SSDs stack up against hard drives.



hardcore_gamer: The endurance of the floating-gate transistors used in flash memory is low. The gate oxide wears out due to the tunnelling of electrons across it. Hopefully phase-change memory can turn things around, since it offers 10^6 times more endurance for technology nodes

acku, replying to hardcore_gamer:

As we explained in the article, write endurance is a spec'ed failure. That won't happen in the first year, even with enterprise-level use, and it has nothing to do with our data. We're interested in random failures: the stuff people have been complaining about, such as BSODs with OCZ drives, LPM issues with m4s, and the SSD 320 problem that makes capacity disappear. Those are mostly "soft" errors. Any hard error that occurs is subject to the "defective parts per million" problem that any electrical component also suffers from.

Cheers,

Andrew Ku

TomsHardware.com

slicedtoad: Hacker groups like LulzSec should do something useful and get this kind of internal data from major companies.

jobz000: Great article. Personally, I find myself spending more and more time on a smartphone and/or tablet, so I feel ambivalent about spending so much on an SSD so I can boot one second faster.

gpm23: You guys do the most comprehensive research I have ever seen. If I ever have a question about anything computer related, this is the first place I go to. Without a doubt the most knowledgeable site out there. Excellent article and keep up the good work.


acku, replying to slicedtoad:

All of the data is so fragmented that I doubt it would help. You would still need to go over it with a fine-tooth comb to figure out how the numbers were calculated.

Replying to gpm23:

Thank you. I personally love these types of articles... very reminiscent of academia. :)

Cheers,

Andrew Ku

TomsHardware.com

K-zon: I will say that I didn't read the article word for word. But of it seems that when someone would change over from hard drive to SSD, those numbers might be of interest.

Of the sealed issue of return, if by the time you check that you had been using something different and something said something else different, what you bought that was different might not be of useful use of the same thing.

Otherwise just ideas of working with more are hard said for what not to be using that was used before. Yes?

But for a lot of interest into it maybe is still that of rather for the performance is there anything of actual use of it, yes?

To say the smaller amounts of information lost to say for the use of SSDs if so, makes a difference as probably are found. But of writing order in which I think they might work with at times given them the benefit of use for it. Since they seem to be faster. Or are.

Temperature doesn't seem to be much help for many things are times for some reason. For ideas of SSDs, finding probably ones that are of use that reduce the issues is hard from what was in use before.

When things get better for use of products is hard placed maybe.

But to say there are issues is speculative, yes? Especially me not reading the whole article.

But of investments and use of say "means" an idea of waste and less use for it, even if it's on lesser note, is waste. In many senses to say of it though.

Otherwise some ideas, within computing may be better of use with the drives to say. Of what, who knows...

Otherwise again, it will be more of operation place of instances of use. Which I think will fall into order of access with storage, rather information is grouped or not grouped to say as well.

But still, they should usually be useful without too many issues, but still maybe ideas of timing without some places not used as much in some ways.

cangelini: To the contrary! We noticed that readers were looking to see OWC's drives in our round-ups. I made sure they were invited to our most recent 120 GB SF-2200-based story, and they chose not to participate (this after their rep jumped on the public forums to ask why OWC wasn't being covered; go figure).

They will continue to receive invites for our stories, and hopefully we can do more with OWC in the future!

Best,

Chris Angelini

ikyung: Once you go SSD, you can't go back. I jumped on the SSD wagon about a year ago, and I just can't seem to go back to HDD computers.