IBM demonstrates useful quantum computing with 133-qubit Heron, announces entry into quantum-centric supercomputing era

Quantum computing as the core of a symbiotic balance between classical computing and the new universes of quantum utility.

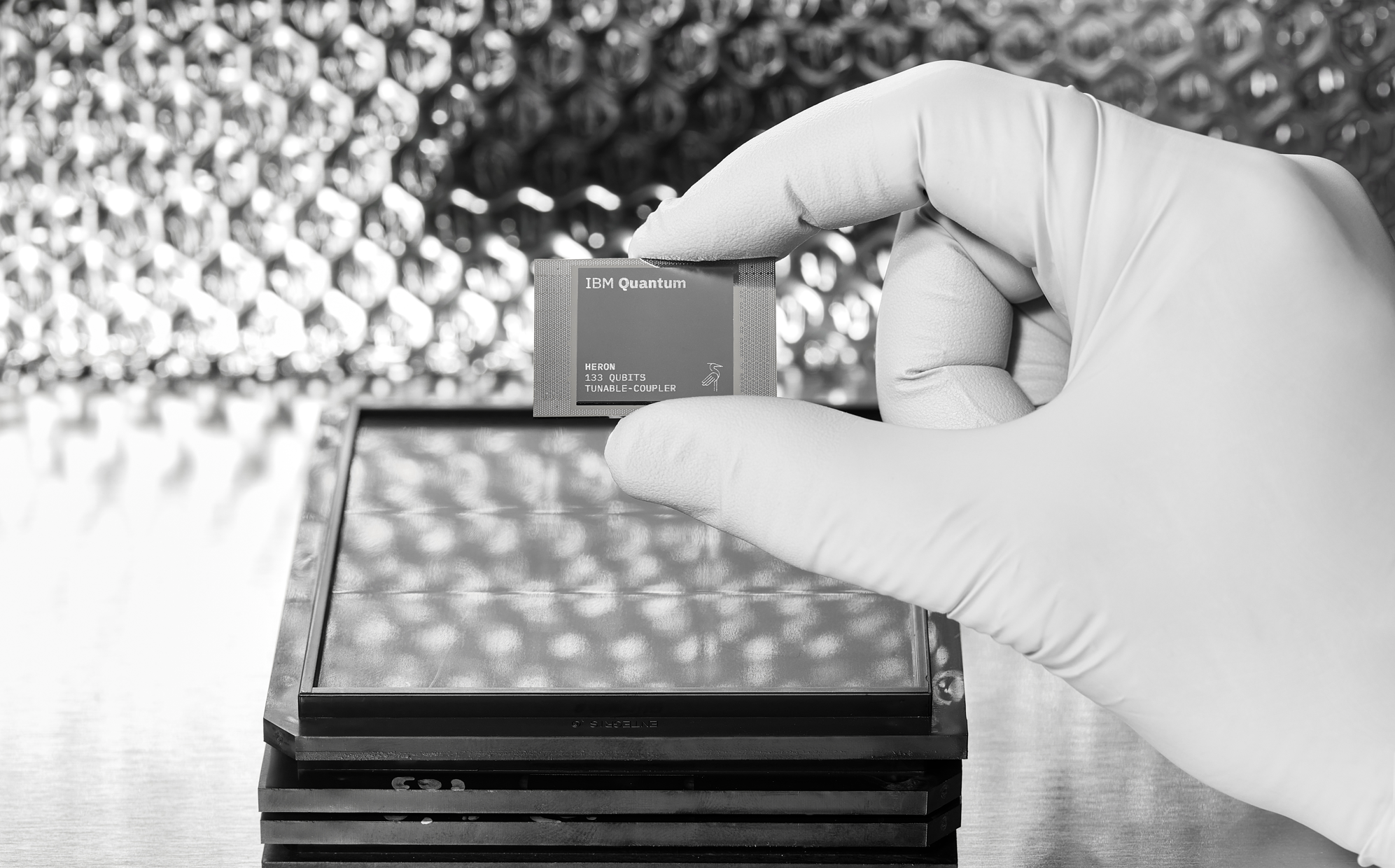

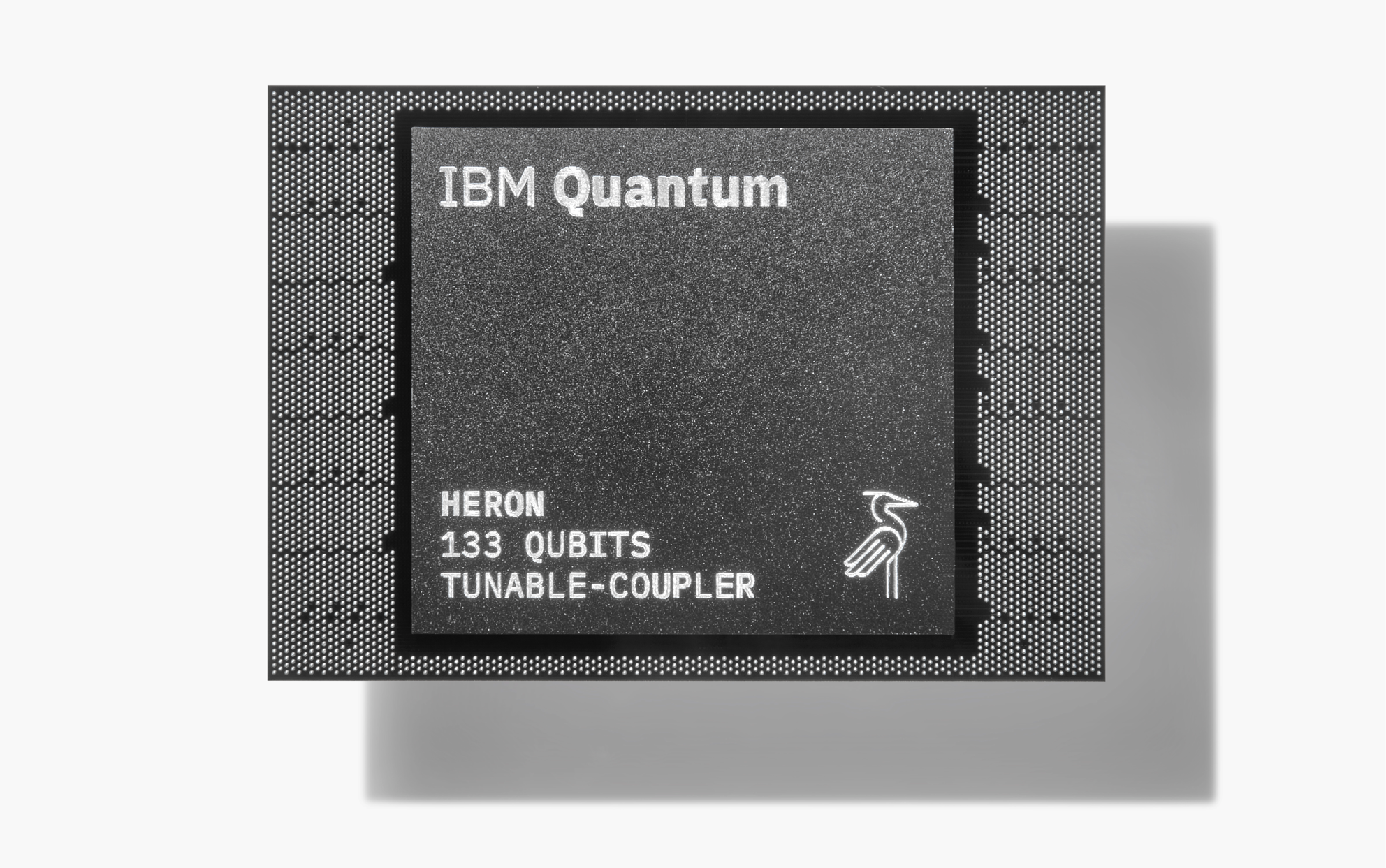

At its Quantum Summit 2023, IBM took the stage with an interesting spirit: one of almost awe at having things go its way. But the quantum of today – the one that’s reshaping IBM’s roadmap so deeply on the back of breakthrough upon breakthrough – was hard enough to consolidate, and as IBM sees it, the future of quantum computing will hardly be more forgiving. IBM announced cutting-edge devices at the event, including the 133-qubit Heron Quantum Processing Unit (QPU), the company's first utility-scale quantum processor, and the self-contained Quantum System Two, a quantum-specific supercomputing architecture. And even these cutting-edge devices will ultimately need further improvement.

Each breakthrough that afterward becomes obsolete is another accelerating bump against what we might call quantum's "plateau of understanding." We’ve already crested this plateau with semiconductors, so much so that the latest CPUs and GPUs are reaching practical, fundamental design limits where quantum effects start ruining our math. Conquering the plateau means that utility and understanding are now enough for research and development to be somewhat self-sustainable – at least for a Moore’s-law-esque while.

IBM’s Quantum Summit serves as a bookend of sorts for the company’s cultural and operational execution, and its 2023 edition showcased an energized company that feels like it's opening the doors to a "quantum-centric supercomputing era." That vision is built on the company's new Quantum Processing Unit, Heron, which showcases scalable quantum utility at a 133-qubit count and, in specific workloads, already offers things beyond what any feasible classical system could do. Breakthroughs and a revised understanding of its own roadmap have led IBM to present its quantum vision in two different roadmaps, prioritizing scalability in tandem with useful products that meet a minimum quality bar, rather than monolithic, hard-to-validate, high-complexity ones.

IBM's announced new plateau for quantum computing packs in two particular breakthroughs that occurred in 2023. One relates to a groundbreaking error-mitigation technique (Zero Noise Extrapolation, or ZNE), which we covered back in July – basically a system through which you can compensate for noise. For instance, if you know a pitcher tends to throw more to the left, you can compensate for that up to a point. There will always be a moment where you correct too much or cede ground to other disruptions (such as the opponent exploiting the now-overexposed right side of the field). This is where the concept of qubit quality comes into play: the higher the quality of your qubits, the more predictable both their results and their disruptions, and the better you know their operational constraints, the more useful work you can extract from them.
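The idea behind zero-noise extrapolation can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not IBM's implementation: `noisy_expectation` is a hypothetical stand-in for hardware runs (a toy exponential-decay noise model), and the noise scale factors 1x, 3x, and 5x assume amplification by gate folding, one common approach that IBM's own tooling may not use. The extrapolation step shown is Richardson extrapolation: fit the unique polynomial through the measured points and evaluate it at zero noise.

```python
import math


def noisy_expectation(scale: float, ideal: float = 1.0, decay: float = 0.15) -> float:
    """Hypothetical stand-in for running a circuit with its noise amplified by `scale`.

    Toy model: the measured expectation value decays exponentially as noise grows.
    """
    return ideal * math.exp(-decay * scale)


def richardson_zero_noise(scales, values) -> float:
    """Richardson extrapolation: evaluate, at scale 0, the unique polynomial
    passing through every (scale, value) point, using the Lagrange form."""
    estimate = 0.0
    for i, (x_i, y_i) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, x_j in enumerate(scales):
            if j != i:
                weight *= (0.0 - x_j) / (x_i - x_j)
        estimate += weight * y_i
    return estimate


# Noise scale factors 1x, 3x, 5x (folding a gate G into G G^dagger G roughly triples its noise).
scales = [1.0, 3.0, 5.0]
values = [noisy_expectation(s) for s in scales]
estimate = richardson_zero_noise(scales, values)

print(f"raw value at scale 1: {values[0]:.3f}")
print(f"zero-noise estimate:  {estimate:.3f}  (ideal value: 1.000)")
```

With this toy model, the extrapolated estimate (about 0.99) lands much closer to the ideal value than the raw measurement (about 0.86). That only holds because the toy noise is smooth; on real hardware the fit can overshoot, which is the "correcting too much" risk in the pitcher analogy.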

The other breakthrough relates to an algorithmic improvement of epic proportions, first pushed to arXiv on August 15th, 2023. Titled “High-threshold and low-overhead fault-tolerant quantum memory,” the paper showcases error-correction codes that cut the number of physical qubits needed to protect a given number of logical qubits by roughly a factor of ten. When what used to cost 1,000 qubits and a complex logic gate architecture sees a tenfold cost reduction, you'd likely prefer to end up with 133-qubit-sized chips – chips that crush problems previously meant for 1,000-qubit machines.
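To make the tenfold figure concrete, here is the arithmetic using the numbers given in the paper's abstract: 12 logical qubits preserved with 288 physical qubits in total, versus "nearly 3000" physical qubits for the surface code at a comparable level of error suppression. Treating "nearly 3000" as exactly 3,000 is a simplifying assumption.

```python
# Figures from the abstract of "High-threshold and low-overhead fault-tolerant quantum memory"
LOGICAL_QUBITS = 12
QLDPC_PHYSICAL_QUBITS = 288          # the paper's [[144,12,12]] code, data plus check qubits
SURFACE_CODE_PHYSICAL_QUBITS = 3000  # "nearly 3000" for comparable error suppression (rounded up)


def physical_per_logical(physical: int, logical: int) -> float:
    """Average number of physical qubits spent on each protected logical qubit."""
    return physical / logical


qldpc = physical_per_logical(QLDPC_PHYSICAL_QUBITS, LOGICAL_QUBITS)
surface = physical_per_logical(SURFACE_CODE_PHYSICAL_QUBITS, LOGICAL_QUBITS)
reduction = surface / qldpc

print(f"qLDPC code:   {qldpc:.0f} physical qubits per logical qubit")
print(f"surface code: ~{surface:.0f} physical qubits per logical qubit")
print(f"reduction:    ~{reduction:.1f}x")
```

The reduction comes out at roughly 10x, in line with the "factor of ten." Note that it describes the quantum-memory setting the paper studies (keeping logical qubits alive), not every quantum algorithm.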

Enter IBM’s Heron Quantum Processing Unit (QPU) and the era of useful, quantum-centric supercomputing.

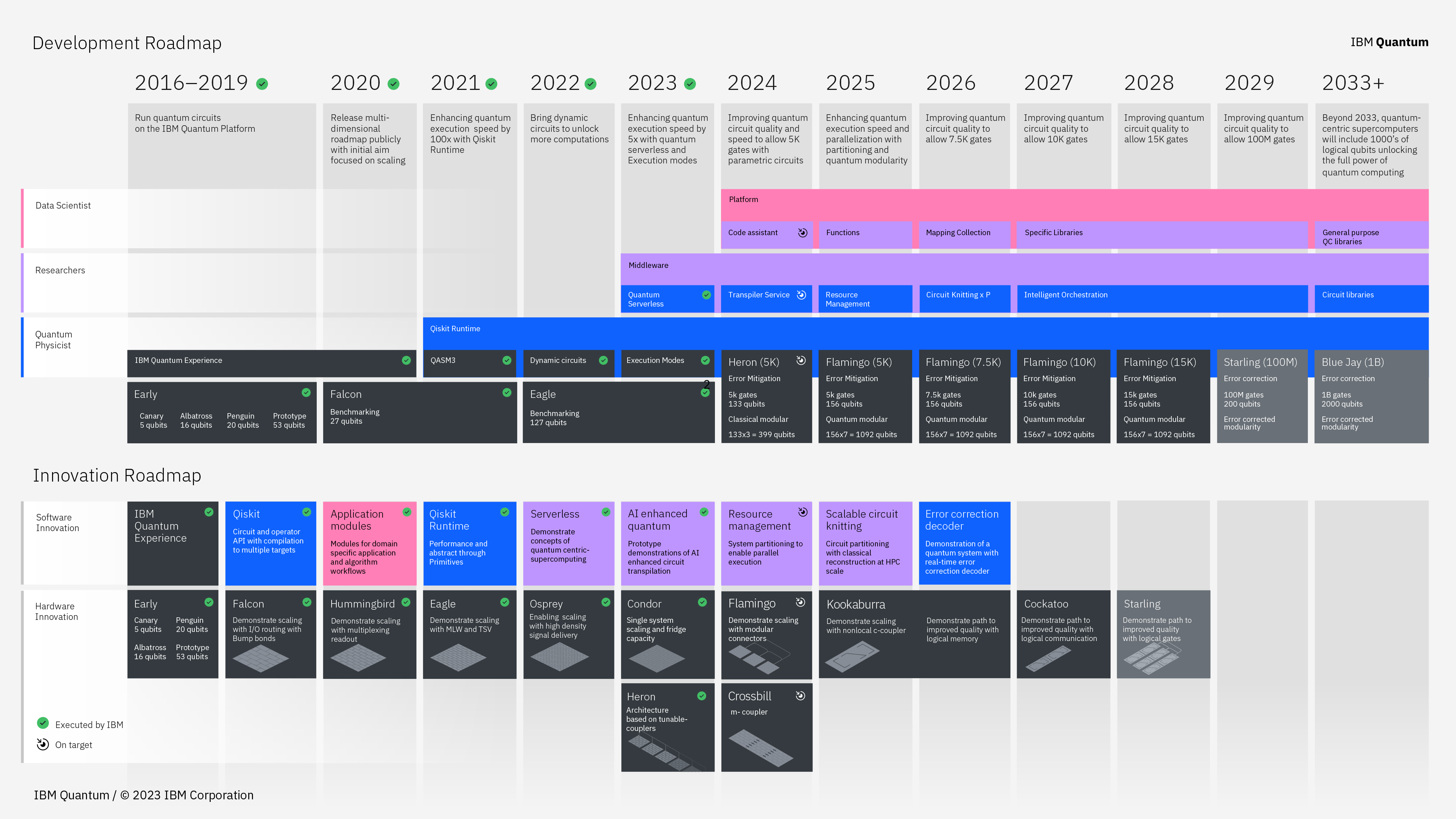

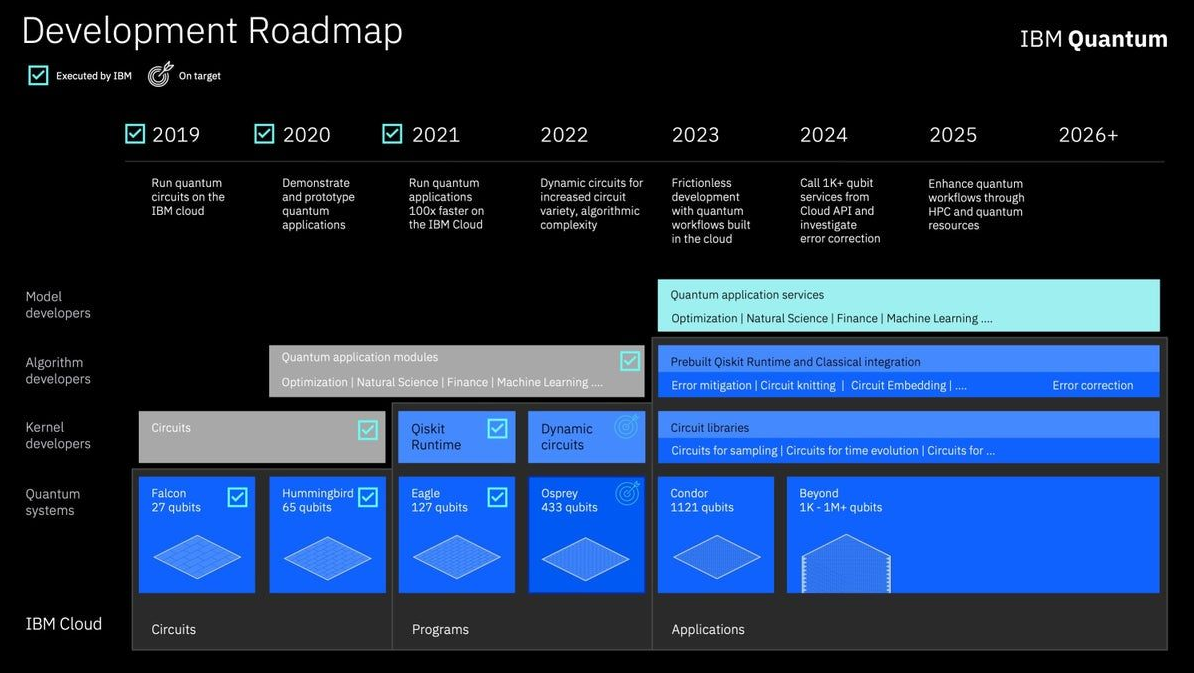

The Quantum Roadmap at IBM’s Quantum Summit 2023


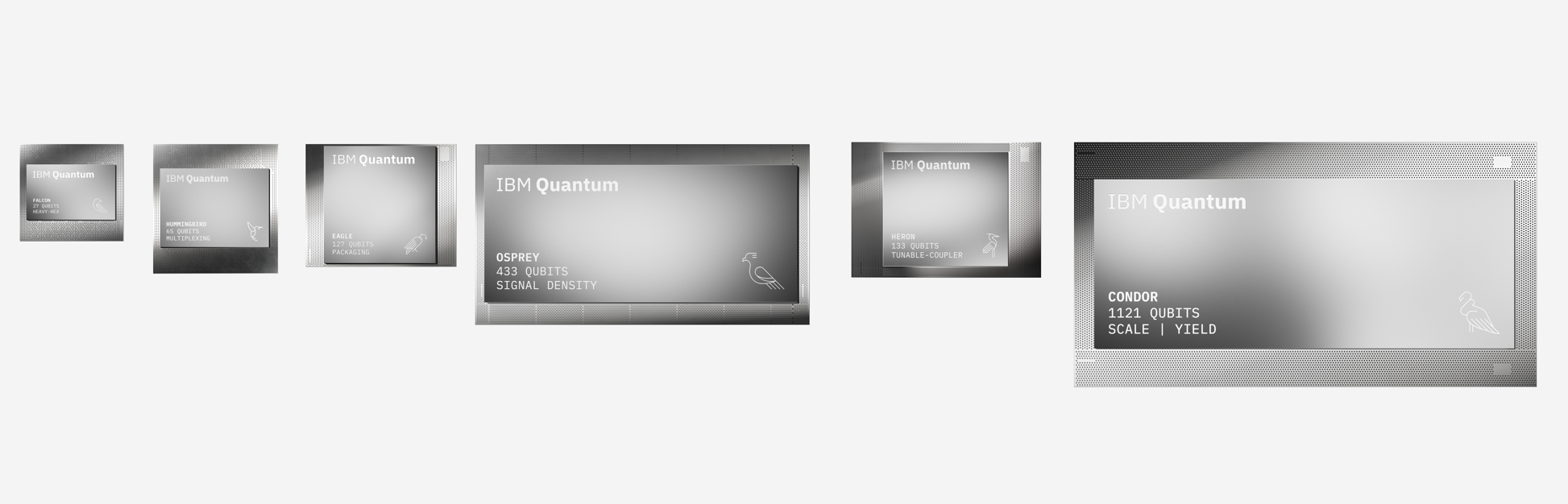

IBM's roadmap pre-Heron success.

The two breakthroughs, in error mitigation (through the ZNE technique) and in algorithmic performance (alongside qubit gate architecture improvements), allow IBM to now consider reaching 1 billion operationally useful quantum gates by 2033. It’s an amazing coincidence (one born of research effort and human ingenuity) that we only need to keep 133 qubits relatively happy within their own environment to extract useful quantum computing from them – computing that we wouldn’t classically be able to get anywhere else.


The “Development” and “Innovation” roadmaps showcase how IBM is thinking about its superconducting qubits: as we’ve already learned to do with semiconductors, they map out the hardware-level improvements alongside the scalability-level ones. Because, as we’ve seen through our supercomputing efforts, there’s no such thing as a truly monolithic approach: every piece of supercomputing is (necessarily) distributed across thousands of individual accelerators. Your CPU performs better by knitting together and orchestrating several different cores, registers, and execution units. Even Cerebras’ Wafer Scale Engine scales further outside its wafer-level computing unit. No accelerator so far – no unit of computation – has proven powerful enough that we don’t need to unlock more of its power by increasing its area or computing density. Our brains and learning ability seem to provide us with the only known exception.

IBM’s modular approach and its focus on introducing more robust intra-QPU and inter-QPU communication for this year’s Heron show it’s aware of the tightrope it's walking between quality and scalability. The thousands of hardware and scientist hours behind the tunable couplers, one of Heron's signature design elements that allow parallel execution across different QPUs, are further evidence. Pushing one lever harder means other systems have to be able to keep up; IBM also plans on steadily improving its internal and external coupling technology (already developed with scalability in mind for Heron) throughout further iterations, such as Flamingo’s four planned versions, which still “only” scale up to 156 qubits per QPU.

Considering the scalability problems at hand and the qubit quality x density x ease-of-testing equation, the ticks – the density increases that don't sacrifice quality and are feasible from a testing and productization standpoint – may be harder to unlock. But if one side of development is scalability, the other relates to the quality of whatever you’re actually scaling – in this case, IBM’s superconducting qubits themselves. Heron saw a substantial rearrangement of its internal qubit architecture to improve gate design, accessibility, and quantum processing volumes – not unlike an Intel tock. The planned iterative improvements to Flamingo's design seem to confirm this approach.

Utility-Level Quantum Computing

There’s a sweet spot for today's quantum computing algorithms: algorithms that fit roughly within a 60-gate depth seem complex enough to allow for useful quantum computing. Perhaps thinking about Intel’s NetBurst architecture and its Pentium 4 CPUs is appropriate here: past a certain point, too deep an instruction pipeline is counterproductive. Branch mispredictions are terrible across computing, be it classical or quantum. And quantum computing – as we currently have it in the Noisy Intermediate-Scale Quantum (NISQ) era – is vulnerable to a far more varied disturbance field than semiconductors are (there are world overclocking records where we chill our processors to sub-zero temperatures and pump them with above-standard voltages, after all). But perhaps that comparable quantum vulnerability is understandable, given that we’re essentially manipulating the fundamental units of existence – atoms and even subatomic particles – into becoming useful to us.

Useful quantum computing doesn’t simply correlate with an increasing number of available in-package qubits (consider the announcements of 1,000-qubit products based on neutral-atom technology, for instance). Useful quantum computing is always stretched thin against its limits: if it isn’t bumping against one fundamental limit (qubit count), it’s bumping against another (instability at higher qubit counts), or contending with issues of entanglement coherence and longevity, entanglement distance and capability, correctness of the results, and still other elements. Some of these scalability issues can be viewed within the same framework as efficient data transit between distributed computing units, such as the cores in a given CPU architecture, a problem that can be solved in a number of ways, including hardware-based information processing and routing techniques (AMD’s Infinity Fabric comes to mind, as does Nvidia's NVLink).

The fact that quantum computing is already useful at the 133-qubit scale is also part of the reason why IBM keeps prioritizing challenges around useful algorithms occupying a 100 by 100 grid (100 qubits by a gate depth of 100). That quantum computing is already useful beyond classical, even in gate grids that are small compared to what we can achieve with transistors, points to the scale of the transition – to how different these two computational worlds are.
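A back-of-the-envelope estimate shows why circuit size matters so much. This sketch rests on loudly simplifying assumptions: two-qubit gate errors are independent, single-qubit and readout errors are ignored, the "100 by 100 grid" is read as 100 qubits and 100 gate layers with every qubit paired in each layer, and the error rates are illustrative values, not IBM's published figures.

```python
def two_qubit_gate_count(num_qubits: int, depth: int) -> int:
    """Upper bound on two-qubit gates in a width x depth circuit: each layer
    pairs up as many qubits as possible (num_qubits // 2 gates per layer)."""
    return (num_qubits // 2) * depth


def estimated_success(num_gates: int, error_per_gate: float) -> float:
    """Crude model: the circuit runs cleanly only if every gate succeeds,
    each independently with probability 1 - error_per_gate."""
    return (1.0 - error_per_gate) ** num_gates


gates = two_qubit_gate_count(num_qubits=100, depth=100)
for error in (1e-2, 1e-3, 1e-4):  # illustrative two-qubit error rates (assumed)
    print(f"error rate {error:.0e}: {gates} gates, "
          f"error-free probability ~ {estimated_success(gates, error):.2e}")
```

At an assumed error rate of 1e-3 per gate, the chance that all 5,000 gates run cleanly is well under 1%, which is why error mitigation techniques such as ZNE (and, longer term, error correction) matter as much as raw qubit counts.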

Then there are the matters of error mitigation and error correction: of extracting ground-truth-level answers to the questions we want our quantum computer to solve. There are also limitations in how we use quantum interference to collapse a quantum computation at just the right moment, when we know it will yield the result we want – or at least something close enough to correct that we can then offset any noise (non-useful computational results, or the spread of values between the correct answer and the not-yet-culled wrong ones) through a clever, groundbreaking algorithm.

The above are just some of the elements currently limiting how useful qubits can truly be and how they can be manipulated into useful, algorithm-running computation units. This is usually referred to as a qubit’s quality, and we can see how it both does and doesn’t relate to the sheer number of qubits available. But since many useful computations can already be achieved with 133-qubit-wide Quantum Processing Units (there’s a reason IBM settled on a mere 6-qubit increase from Eagle to Heron, and only scales up to 156 qubits with Flamingo), the company is setting out to keep this optimal qubit width through a number of years of continuous redesigns. IBM will focus on making correct results easier to extract from Heron-sized QPUs by increasing the coherence, stability, and accuracy of these 133 qubits, while surmounting the arguably harder challenge of distributed, highly parallel quantum computing. It’s a one-two punch again, and one that comes from the added momentum of climbing ever-higher stretches of the quantum computing plateau.

Still, IBM admits that qubit width is a barrier it wants to punch through eventually – it’s much better to pair 200 units of a 156-qubit QPU (Flamingo's) than of a 127-qubit one such as Eagle, so long as efficiency and accuracy remain high. Oliver Dial says that Condor, "the 1,000-qubit product," is running locally – up to a point. It was meant to be the thousand-qubit processor, and was as much a part of the roadmap for this year’s Quantum Summit as the actual focus, Heron – but it’s ultimately not a direction the company currently considers feasible.

IBM did manage to yield all 1,000 Josephson junctions within its experimental Condor chip – the thousand-qubit halo product that will never see the light of day as a product. It’s running within the labs, and IBM can show that Condor yielded computationally useful qubits. One issue is that at that qubit count, testing such a device becomes immensely expensive and time-consuming. At a basic level, it’s harder and more costly to guarantee the quality of a thousand qubits and their increasingly complex field of possible interactions and interconnections than to assure the same requirements in a 133-qubit Heron. Even IBM only means to test around a quarter of the in-lab Condor QPU’s area to confirm that the qubit connections are working.
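A rough way to see why validation cost outpaces qubit count: if every pair of qubits is treated as a potential source of unwanted interaction (crosstalk) to check, the number of pairs grows quadratically. This is an upper-bound illustration assumed for clarity, not IBM's test methodology; on IBM's heavy-hex lattices, qubits are wired only to their neighbors, so direct couplings grow roughly linearly, and real test plans are far more selective.

```python
def qubit_pairs(num_qubits: int) -> int:
    """Number of distinct qubit pairs, n * (n - 1) / 2: an upper bound on the
    pairwise interactions a validation campaign might need to characterize."""
    return num_qubits * (num_qubits - 1) // 2


heron_pairs = qubit_pairs(133)
condor_pairs = qubit_pairs(1000)
print(f"133 qubits:   {heron_pairs:,} pairs")
print(f"1,000 qubits: {condor_pairs:,} pairs (~{condor_pairs / heron_pairs:.0f}x more)")
```

Roughly 7.5 times as many qubits yields roughly 57 times as many pairs, which gives some intuition for why IBM would test only part of Condor.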

But Heron? Heron is made for quick verification that it’s working to spec – that it’s providing accurate results, or at least computationally useful results that can then be corrected through ZNE and other techniques. That means you can already get useful work out of it, while it's also a much better time-to-market product in virtually every area that matters. Heron is what IBM considers the basic unit of quantum computation – good enough and stable enough to outpace classical systems in specific workloads. But that is quantum computing, and that is its niche.

The Quantum-Centric Era of Supercomputing

Heron is IBM’s entrance into the mass-access era of Quantum Processing Units. Next year’s Flamingo builds further on the inter-QPU coupling architecture so that more parallelization can be achieved. The idea is to scale at a base, post-classical utility level and maintain that as a minimum quality baseline. Only at that point will IBM perhaps scale density and unlock the corresponding jump in computing capability – once that can be achieved just as productively, and scaling can preserve quantum usefulness almost perfectly.

There’s simply never been the need to churn out hundreds of QPUs yet – the utility wasn’t there. The Canaries, Falcons, and Eagles of IBM’s past roadmap were never meant to usher in an age of scaled manufacturing. They were prototypes, scientific instruments, explorations; proofs of concept on the road towards useful quantum computing. We didn’t know where usefulness would start to appear. But now, we do – because we’ve reached it.

Heron is the design IBM feels best answers that newly created need for a quantum computing chip that is actually at the forefront of human computing capability – one that can offer what no classical computing system can (in some specific areas). One that can slice through specific-but-deeper layers of our Universe. That’s what IBM means when it calls this new stage the “quantum-centric supercomputing” one.

Classical systems will never cease to be necessary, both in their own right and for the way they structure our current reality, systems, and society. They also function as a layer that allows quantum computing itself to happen, be it by carrying and storing its intermediate results or by knitting together the final informational state – mapping out the correct answer quantum computing provides, one quality step at a time. The quantum-centric bit merely refers to how quantum computing will be the core contributor to developments in fields such as materials science, more advanced physics, chemistry, superconductivity, and basically every domain where our classical systems were already presenting a duller and duller edge with which to push against the limits of our understanding.

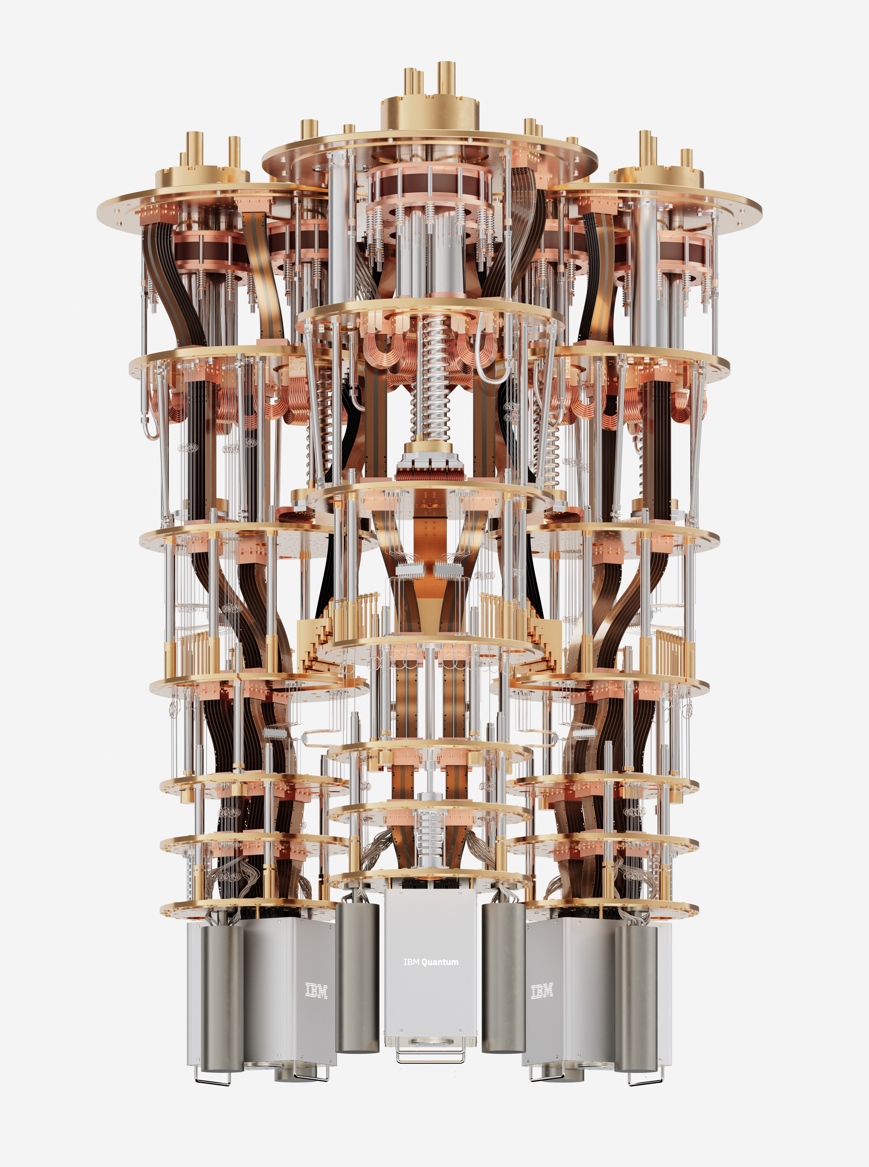

Quantum System Two, Transmon Scalability, Quantum as a Service

However, given IBM’s approach and its choice of transmon superconducting qubits, commercializing local installations poses a certain difficulty. Quantum System Two, as the company is naming its new, almost wholesale quantum computing system, has been shown working with different QPU installations (both Heron and Eagle). When asked whether scaling Quantum System Two and similar self-contained products would be a bottleneck to technological adoption, IBM Quantum CTO Oliver Dial said that it was definitely a difficult problem to solve, but that he was confident in the company's ability to reduce costs and complexity further over time, considering how successful IBM had already proven to be in that regard. For now, it’s easier for IBM to unlock quantum usefulness at a distance – through the cloud and its quantum computing framework, Qiskit – than by running local installations.

Qiskit is the preferred medium through which users can actually deploy IBM's quantum computing products in research efforts – just like you could rent X Nvidia A100s' worth of processing power through Amazon Web Services, or even a simple Xbox Series X console through Microsoft’s xCloud service. On the day of IBM's Quantum Summit, that access also extended to the useful quantum circuits within IBM-deployed Heron QPUs. And it's much easier to scale access in-house, serving QPUs through the cloud, than to deliver a box of supercooled transmon qubits ready to be plugged and played with.

That’s one downside of IBM’s superconducting qubit approach – not many players have the will, funding, or expertise to put a supercooled chamber into local operation and build the required infrastructure around it. These are complex mechanisms housing kilometers of wiring – another focus of IBM’s development and tinkering, culminating in last year’s flexible ribbon solution, which drastically simplified connections to and from QPUs.

Quantum computing is a uniquely complex problem, and democratized access to hundreds or thousands of mass-produced Herons in IBM’s refrigerator-laden fields will ultimately only require, well… a stable internet connection. Logistics are what they are, and IBM’s Quantum Summit also took steps to address some needs within Qiskit by introducing its official 1.0 version. It's food for thought that the era of useful quantum computing seems to coincide with the beginning of the era of Quantum Computing as a Service. That was fast.

Closing Thoughts

The era of useful, mass-producible, mass-access quantum computing is what IBM is promising. But now, there’s the matter of scale. And there’s the matter of how cost-effective it is to install a Quantum System Two or Five or Ten compared to other qubit approaches – be it topological quantum computing, nitrogen-vacancy centers, ion traps, or others that are an entire architecture away from IBM’s approach, such as fluxonium qubits. It’s likely that a number of qubit technologies will still make it into the mass-production stage – and even then, we can rest assured that the road of human ingenuity is littered with failed experiments, like Intel’s recently decapitated Itanium or AMD’s out-of-time approach to x86 computing in Bulldozer.

It's hard to see where the future of quantum takes us, and it’s hard to say whether it looks exactly like IBM’s roadmap – the same roadmap whose running changes we also discussed here. Yet all roadmaps are a permanently drying painting, both for IBM itself and for the technology space at large. Breakthroughs seem to be happening daily on each side of the fence, and it’s a fact of science that the earlier the questions we ask, the more potential there is. The promising qubit technologies of today will have to answer real questions on performance, usefulness, ease and cost of manipulation, quality, and scalability, in ways that are now at least as good as what IBM is proposing with its transmon-based superconducting qubits, its Herons, its scalable Flamingos, and its (still unproven, but hinted at) ability to eventually mass-produce useful numbers of useful Quantum Processing Units such as Heron. All of that even as we remain in this noisy, intermediate-scale quantum (NISQ) era.

It’s no wonder that Oliver Dial looked and sounded so energetic during our interview: IBM has already achieved quantum usefulness and has started to answer the two most important questions – quality and scalability, the concerns behind its Development and Innovation roadmaps. And it did so through the collaboration of an incredible team of scientists, delivering results years before expected, Dial happily conceded. In 2023, IBM unlocked useful quantum computing within a 127-qubit Quantum Processing Unit, Eagle, and carried the work of perfecting it forward into the revamped Heron chip. That’s an incredible feat in and of itself, and it's what allows us to even discuss issues of scalability at this point. It’s the reason why a roadmap has to shift to accommodate it – and in this quantum computing world, that's a great follow-up question to have.

Perhaps the best question now is: how many things can we improve with a useful Heron QPU? How many locked doors have sprung ajar?

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.

-

bit_user

I'll come back and read this in full, later. I just wanted to suggest that longer articles, like this, would benefit from a call-out box (not sure if that's the correct term) that gives a bullet-list summary of the article's key points. Not saying this is one of them, but there are some articles where I have just enough interest to see what it's about, but I'm not invested enough to read a piece of this length or longer.

Perhaps not unlike how the reviews put the rating and pros + cons right up front. In the best case scenario, there might be one or more items in that list which intrigues me and motivates me to read at least a portion of the article, in full.

waltc3

The two biggest marketing buzzwords in use today are "AI" in the #1 spot, followed by "Quantum Computing" in the #2 slot. So-called periodic "breakthroughs" that we see announced regularly are done primarily to achieve continuing investments in the companies making the announcements. Yet final products are still a decade or more away, much as with controlled fusion reactions, etc. Don't hold your breath waiting, is my advice...;)

DougMcC

waltc3 said: The two biggest marketing buzzwords in use today are "AI" in the #1 spot, followed by "Quantum Computing" in the #2 slot. So-called periodic "breakthroughs" that we see announced regularly are done primarily to achieve continuing investments in the companies making the announcements. Yet final products are still a decade or more away, much as with controlled fusion reactions, etc. Don't hold your breath waiting, is my advice...;)

The company I work for has had an AI solution in production for almost 6 months, making major cost savings. AI may not live up to every promise it has ever made, but it is already making major change happen.

waltc3

OK, explain to me the difference between a good production program for your company making cost savings and your company's internal version of "AI". All "AI" is, is computer programming--garbage in, garbage out, common, ordinary computer programming. That's it. "AI" is incapable of doing something it was not programmed to do. We'll see how the "AI" situation looks a year from now, after the novelty and the hype has worn off. That's the thing I object to--this crazy idea that AI can do things far in advance of what it is programmed to do--AI is not sentient, doesn't think, has no IQ, and does only what it is programmed to do. The rest of the AI hype is pure fiction--and it's being spieled right now as hyperbolic marketing. It's so false, it often seems superstitious.

domih

waltc3 said: OK, explain to me the difference between a good production program for your company making cost savings and your company's internal version of "AI". All "AI" is, is computer programming--garbage in, garbage out, common, ordinary computer programming. That's it. "AI" is incapable of doing something it was not programmed to do. We'll see how the "AI" situation looks a year from now, after the novelty and the hype has worn off. That's the thing I object to--this crazy idea that AI can do things far in advance of what it is programmed to do--AI is not sentient, doesn't think, has no IQ, and does only what it is programmed to do. The rest of the AI hype is pure fiction--and it's being spieled right now as hyperbolic marketing. It's so false, it often seems superstitious.

True. ChatGPT started a giant hype 1+ year ago, with a lot of people not understanding what it actually does and journalists indulging in hyperbole to glue readers' eyeballs to their websites.

However AI is an umbrella name that covers a lot of activities.

All "AI" is, is computer programming--garbage in, garbage out, common, ordinary computer programming.

A giant no here. Neural networks in the center of deep learning algorithms are NOT ordinary computer programming. It is not imperative. It is not Object Oriented Programming. It is not functional programming. Look up "neural networks and deep learning" on the web and/or Wikipedia. You'll find plenty of basic detailed information (e.g. the sigmoid function, backpropagation, cnn, rnn, fnn, dnn, ann, transformers, etc). There are quite solid mathematical frameworks behind deep learning.

That's it. "AI" is incapable of doing something it was not programmed to do.

Again a giant no here. Look up the web for "ai solving new math problems".

In a more prosaic example, if you feed a neural network with a large number of images with cats, it WILL be capable of recognizing a cat it has never seen before. The NN was "programmed" to recognize cats, but NOT to recognize a cat it has never seen before, yet it does recognize it with a pretty high probability. Imperative programming cannot do that.

---

I use AI every day. Examples:

a. I use AI to generate the documentation of my code. It saves a lot of keyboard data entry. It is usually accurate for simple functions. For more complex algorithms, it fails BUT it gives a draft template I can correct. Writing the documentation is not the only feature of using AI for coding. In the future, I'll also probably use it to write class skeletons, again saving a lot of typing. I tried using AI for generating unit-tests, it's OK. BUT it limits itself to testing the successful execution path(s). So I still have to write the tests resulting in failures, in other words attempting to cover all the execution paths. My code coverage is currently 90%. I aim at 95+%. Why? Because the type of customers using our products want this level of code coverage, otherwise they reject the proposal. Think large corporations or government agencies looking for cybersecurity solutions.

b. I'm also developing an IT server integrating radiology AI providers with IT systems used in hospitals. Each time I run tests, radiology images (e.g. x-rays) get analyzed by one of several 3rd party AI providers. AI radiology is more and more accepted and requested by practitioners because AI may detect an anomaly that a human radiologist may not see. In the case of cancer-like illnesses, detecting earlier can be life saving for the patients. When a cancer-like anomaly is detected too late (e.g. already at the metastasis phase) the potential for death is much higher. Conversely, treating a cancer-like illness that just started is usually successful. That's the reason health imaging AI is skyrocketing. The human practitioners still make the final analysis and reports. Imaging AI is an additional tool that does not replace the humans.

c. If you use social networks (I don't) or a cell phone (I do), there are AI algorithms to recognize faces in images, or generate suggestions based on your browsing, etc. Again AI is an umbrella name that covers many sub-fields: deep learning, machine learning, image recognition, LLMs, generative AI, etc.

DougMcC

waltc3 said: OK, explain to me the difference between a good production program for your company making cost savings and your company's internal version of "AI". All "AI" is, is computer programming--garbage in, garbage out, common, ordinary computer programming. That's it. "AI" is incapable of doing something it was not programmed to do. We'll see how the "AI" situation looks a year from now, after the novelty and the hype has worn off. That's the thing I object to--this crazy idea that AI can do things far in advance of what it is programmed to do--AI is not sentient, doesn't think, has no IQ, and does only what it is programmed to do. The rest of the AI hype is pure fiction--and it's being spieled right now as hyperbolic marketing. It's so false, it often seems superstitious.

I'll give this my best shot.

Using imperative programming, we can define that if a customer case involves X, Y, Z parameters, it should be routed to Developer Dave for solutioning. Maintaining such a mapping from parameters (in potentially more than 3 dimensions) to developers is hard work and high maintenance. So we actually don't do this, we rely on relatively expert humans to do their best.

That is, until modern AI. Now a modern AI analyzes commits to our codebase, connections between components, our documentation, and our internal wiki, and develops a model I can't say I fully understand of who has expertise on various topics. It more correctly routes cases to developers than the humans did, at a fraction of the cost.

ttquantia

DougMcC said: Company I work for has had AI solution in production for almost 6 months, making major cost savings. AI may not live up to every promise it has ever made, but it is already making major change happen.

Yes, sure, "AI", which is really just a little bit more clever statistics than what has been widely available before, is useful for many things.

But, it is pretty much useless, or of marginal usefulness, for 99 per cent of things people currently do with computers.

And, listening to TV, media, newspapers, AI is supposed to change everything. It will not. For the next couple of years we will continue seeing new things being done with AI which are of questionable utility, and many will slowly disappear after it becomes too obvious that it just does not work. Example: replacing programmers with LLM based methods.

Same with Quantum Computing. It is potentially useful for a very limited and narrow class of computational problems, maybe, and most likely 10 or 20 years from now. Nothing even close to justifying the current hype and investments.

bit_user

waltc3 said: the thing I object to--this crazy idea that AI can do things far in advance of what it is programmed to do

Emergent capabilities are apparently a real phenomenon, in LLMs.

waltc3 said: AI is not sentient,

Nobody (serious) has said it is. It turns out that sentience is not required, in order to perform a broad range of cognitive tasks.

waltc3 said: doesn't think,

Define thinking.

waltc3 said: has no IQ,

IQ is a metric. You can have a LLM take an IQ test and get a score. By definition, it does have an IQ.

bit_user

ttquantia said: Yes, sure, "AI", which is really just a little bit more clever statistics than what has been widely available before, is useful for many things.

But, it is pretty much useless, or of marginal usefulness, for 99 per cent of things people currently do with computers.

And, listening to TV, media, newspapers, AI is supposed to change everything. It will not. For the next couple of years we will continue seeing new things being done with AI which are of questionable utility, and many will slowly disappear after it becomes too obvious that it just does not work.

This is almost exactly backwards. AI gets better as the amount of compute power and memory increases. Also, as people develop better approaches and generally gain experience with it.

Furthermore, the digitization of society is exactly what makes AI so powerful. The availability of vast pools of data has made it very easy to train AI, and the preponderance of web APIs has made it easy to integrate AI into existing systems.

The AI revolution is only getting started. Yes, there was a hype bubble around LLMs, but the industry is continuing to develop and refine the technology and it's only one type of AI method being developed and deployed.

ttquantia said: Same with Quantum Computing. It is potentially useful for a very limited and narrow class of computational problems,

Oh, but some of those are incredibly high-value problems! Lots of drug-related and material science problems fall into the category of things you can tackle only with quantum computing.

Not only that, but the implications for things like data encryption are pretty staggering.

domih

If you want to know how AI is used in various industries, visit https://www.insight.tech/.

It is a blog-like site sponsored by Intel. It has an endless series of posts about applied AI.