How Call of Duty: Modern Warfare 2019 Plays on Different Graphics Cards

We tested on everything from integrated graphics to GTX 1080

Call of Duty has rebooted one of its most influential entries for 2019's Call of Duty: Modern Warfare, promising the classic gunplay the franchise is known for, along with the improvements possible in a modern game release.

| Config | Requirements |
|---|---|
| CPU | Intel Core i3-4340 or AMD FX-6300 |
| GPU | Nvidia GeForce GTX 670 / Nvidia GeForce GTX 1650 or AMD Radeon HD 7950 (DirectX 12 compatible system) |
| RAM | 8GB |

On PC, its minimum requirements are not much higher than those of other 2019 releases, but what does this mean in practice? Can you play it on an integrated GPU? How does Nvidia's still-popular GTX 10-series perform? Let's find out!

| GPU | Settings | Resolution | Frame Rate |
|---|---|---|---|
| GTX 1050 | Lowest | 1080p, 70% Resolution Scale (1344x756) | 60 fps |
| GTX 1060 | Max | 1080p | 70-80 fps |
| GTX 1060 | Low + Medium Textures, Decals and AA | 1080p | 80 fps |
| GTX 1060 | Low + Medium Textures, Decals and AA | 1440p | 70 fps |
| GTX 1080 | Max | 1080p | 90 fps |
| GTX 1080 | Low + High Textures, Decals and AA | 1080p | 100 fps |
| GTX 1080 | Max | 1440p | 80 fps |
| GTX 1080 | Max | 4K | 45 fps |
| GTX 1080 | Low + High Textures, Decals and AA | 4K | 60 fps |

The Settings Screen

The settings screen for Call of Duty: Modern Warfare is pretty straightforward, with sections for details and textures, shadows and lighting, and post effects, and each individual option is clearly labelled from lowest to highest.

Like most current games, Call of Duty: Modern Warfare includes a versatile Render Resolution scaler that allows the resolution of 3D elements to be lowered and then resampled back into the window's native resolution without affecting the UI.

Sadly, the resolution scaler only goes down to 66% at 1080p, which is a bit higher than the floor other modern games allow and an obstacle for older or cheaper GPUs. As we are about to see, this game is not very friendly to entry-level hardware in several ways.
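To make the scaler's effect concrete, here is a minimal Python sketch of the arithmetic. It assumes the percentage is applied to each axis of the output resolution and rounded to the nearest pixel; the game's exact rounding rule is not documented here, and the function names are illustrative only.

```python
from __future__ import annotations


def render_resolution(width: int, height: int, scale: float) -> tuple[int, int]:
    """Return the internal 3D render size for a given scale (0.70 means 70%).

    Assumption: the scale is applied to each axis separately and the result is
    rounded to the nearest pixel (Python's round() sends exact halves to even).
    """
    return round(width * scale), round(height * scale)


def pixel_fraction(scale: float) -> float:
    """Share of native pixels rendered when both axes are scaled by `scale`."""
    return scale * scale


if __name__ == "__main__":
    # 1080p output at the 70% scale used for the GTX 1050 test
    w, h = render_resolution(1920, 1080, 0.70)
    print(f"{w}x{h}")  # 1344x756
    print(f"{pixel_fraction(0.70):.0%} of native pixels")  # 49% of native pixels

    # 1080p output at the scaler's 66% floor
    w, h = render_resolution(1920, 1080, 0.66)
    print(f"{w}x{h}")  # 1267x713
```

Because the scale applies to both axes, a 70% setting shades only about half of the native pixel count, and even the 66% floor still renders roughly 44% of it, while the UI stays at full resolution.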

Integrated GPUs

AMD Vega integrated GPUs, such as the Vega 8 or Vega 11, are usually great cheap options for 720p or light 1080p gaming.

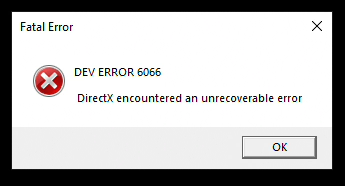

Strangely, the current version of Modern Warfare seems to have an issue with these chips. The game initially appears to start fine, but trying to enter any actual match crashes it with a DirectX error.


I tested with the Ryzen 3 3200G (Vega 8) and the Ryzen 5 3400G (Vega 11) using Radeon drivers 19.9.2 and 19.10.2, but the problem persisted.

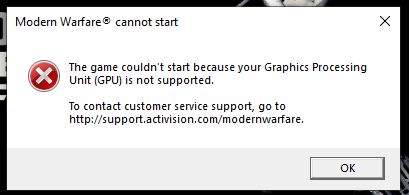

Intel integrated graphics sadly did not fare any better. I tried the game on a Dell XPS 13 with a Core i7-8550U CPU and Intel UHD Graphics 620, a laptop I have used for plenty of games. The game did not even start, throwing an incompatibility error message on launch.

Call of Duty: Modern Warfare on GTX 1050

Since the game seems to require a dedicated GPU, I decided to begin with the GTX 1050 2GB, one of Nvidia's entry-level gaming GPUs from the 10-series. The humble 1050 is usually one of the cheapest ways to play older games, or newer games at lower settings, and Modern Warfare is no exception.

I paired it with a Ryzen 5 3400G and 16GB of DDR4 RAM. With the lowest settings the game allows, and the resolution set to 1080p but the scaler at 70% (a render resolution of 1344x756), the game stayed at a capped 60 fps even in the most challenging moments.

For testing, I used the Ground War multiplayer mode, since it has the biggest maps, the highest player counts and plenty of vehicles causing constant destruction.

At the lowest settings, the game is not exactly easy on the eyes, and the lack of anti-aliasing and decent lighting is obvious, but many people playing competitive games like this one care more about function than form. If that is the case for you as well, the GTX 1050 will not disappoint.

The game likes its memory, using almost all of the 2GB of VRAM and over 10GB of system RAM even at the lowest settings. Let's see how an upgrade does.

Call of Duty: Modern Warfare on GTX 1060

Next, we jump to the more powerful GTX 1060 6GB. While it is starting to show its age, a 1060 with 6GB of VRAM is still a fairly inexpensive way to run modern games at decent settings at 1080p.

Modern Warfare is another example of that. Going from the lowest settings to the highest, the game can now run fully maxed out (except for ray tracing) at 1080p and still hold at least 60 fps, often more.

Of course, the visual difference is substantial, particularly in overall lighting, anti-aliasing and the full 1080p resolution. Some might prefer competitive responsiveness over visual settings, and for them, two interesting options emerge.

The first is to prioritize frame rate. By lowering all the settings but leaving textures at medium and bullet decals enabled, we can squeeze out the most performance while keeping some visual flair. This yields something closer to 80 fps on average, which works great on a 90Hz (or faster) monitor with any sort of adaptive sync.

Another option is to use a higher resolution. I bumped the game to 1440p at the same settings and was happy to see the GPU fully utilized while holding a nice 60 fps or more, even in the worst moments.

Given the nature of the game, I would lean toward higher frame rates, but that depends on your monitor supporting both a high refresh rate and variable refresh rate. If it doesn't, you can focus on settings or resolution instead.

Call of Duty: Modern Warfare on GTX 1080

Given the previous results, it is no surprise that the GTX 1080 can max out the game at 1080p and still deliver between 80 and 90 fps in its most intense moments, making it a great choice for both visuals and frame rates.

Let's explore options from here. Since the computational power is there, we can raise the resolution to 1440p at the same settings and expect 60-70 fps in most scenarios. If you are looking for a balance between framerate and resolution, this might be your best bet.

At full 4K, the GTX 1080 struggled more, managing a still-respectable average of 45 fps at maximum settings.

If we drop to the lowest settings, except for medium shadows, high textures, enabled bullet decals and SMAA anti-aliasing, we can hold around 55-60 fps. The game still looks decent enough, and as I mentioned earlier, many people prefer higher frame rates over better detail.

Bottom Line

Call of Duty: Modern Warfare plays well on budget graphics cards and excels on mid-range models. You can get a smooth 60 fps even on a GTX 1050 at low resolution and settings, and the image quality improves dramatically as you move up to a GTX 1060. A GTX 1080 can even play at 4K with some compromises. However, the game would not run on any of the integrated GPUs we tried, so you will need a PC with a discrete GPU.


SavageNuke: I've got an R5 2600, RTX 2060, NVMe SSD, 860 EVO SSD and 16GB of 3000MHz CL15 memory. I run the game at 1080p with a 100% render resolution and everything else on low. I get 150+ fps to drive my high-refresh monitor. Frame times dip occasionally in Ground War, but once the map and everything is loaded, it runs like a champ. Very optimized on release. Good job, Game Ready drivers.

husker: Why bother with AMD integrated graphics if you're not going to also include a dedicated AMD graphics card? If someone didn't know better, they may assume AMD cards/drivers won't work with the game.

StonerJesus: Playing on a Zotac AMP 2080.

If left at stock clocks, it runs at an uncapped 163 fps average at 1080p with maxed settings, paired with a 2700X.

I leave it capped at 120 fps, and my GPU stays below 50C 90% of the time. Ground War makes it jump to 56C.

This game is pretty optimized.

It also runs 1440p capped at 120Hz, but it definitely gets a little hotter. I prefer a more stable system, so I keep it at 1080p.

Interestingly, I found that any GPU overclock led to DirectX 6068 errors, so I leave it at stock clock speeds, even though my silicon handles a good 225MHz overclock everywhere else.

Exia00, quoting husker: "Why bother with AMD integrated graphics if you're not going to also include a dedicated AMD graphics card? If someone didn't know better, they may assume AMD cards/drivers won't work with the game."

There are people who can only afford an APU. Besides that, anyone using a cloud gaming service such as GeForce Now should be able to play this game. As an example, I have a laptop with an i5-8250U and an MX150 GPU that can't run most of the games I own, but with GeForce Now I can play all my games on high settings. The only catch is that you need a strong connection.

UltimateDeep: GeForce Now is not available in my location, and I definitely need Intel/AMD iGPUs to work right now.

in_the_loop: So I guess I would get 60 fps at 4K, all maxed out, with my 1080 Ti, judging by the 1080 numbers?

AndrewJacksonZA: I opened the article to see how this would run on my RX 470. Please change the title to something like "How Call of Duty: Modern Warfare 2019 Plays on Different Nvidia Graphics Cards, and on AMD APUs", as you most certainly did not "test on everything from integrated graphics to GTX 1080".

Thumbs down.

husker, replying to Exia00:

There's nothing wrong with reviewing how the game runs on integrated graphics; indeed, that is helpful information. It's just a matter of fairness: if you're going to bring AMD into the conversation, you can't only mention its weakest entry while reviewing Nvidia's best. I know the author is not attempting a direct comparison between the two, and that it wasn't intentional, but the lingering impression of the article is that AMD is somehow bad or irrelevant.

Exia00, replying to husker:

I feel like you're quoting the wrong person, as I was defending the review against the guy above who said "Why bother with AMD integrated graphics". Also, the reason they didn't bother to include the RX 570 and 580 in the review is that if the 1050 Ti wasn't going to handle the game, the 570 would pretty much be stuck on the lowest setting too.