Inside The World's Largest GPU: Nvidia Details NVSwitch

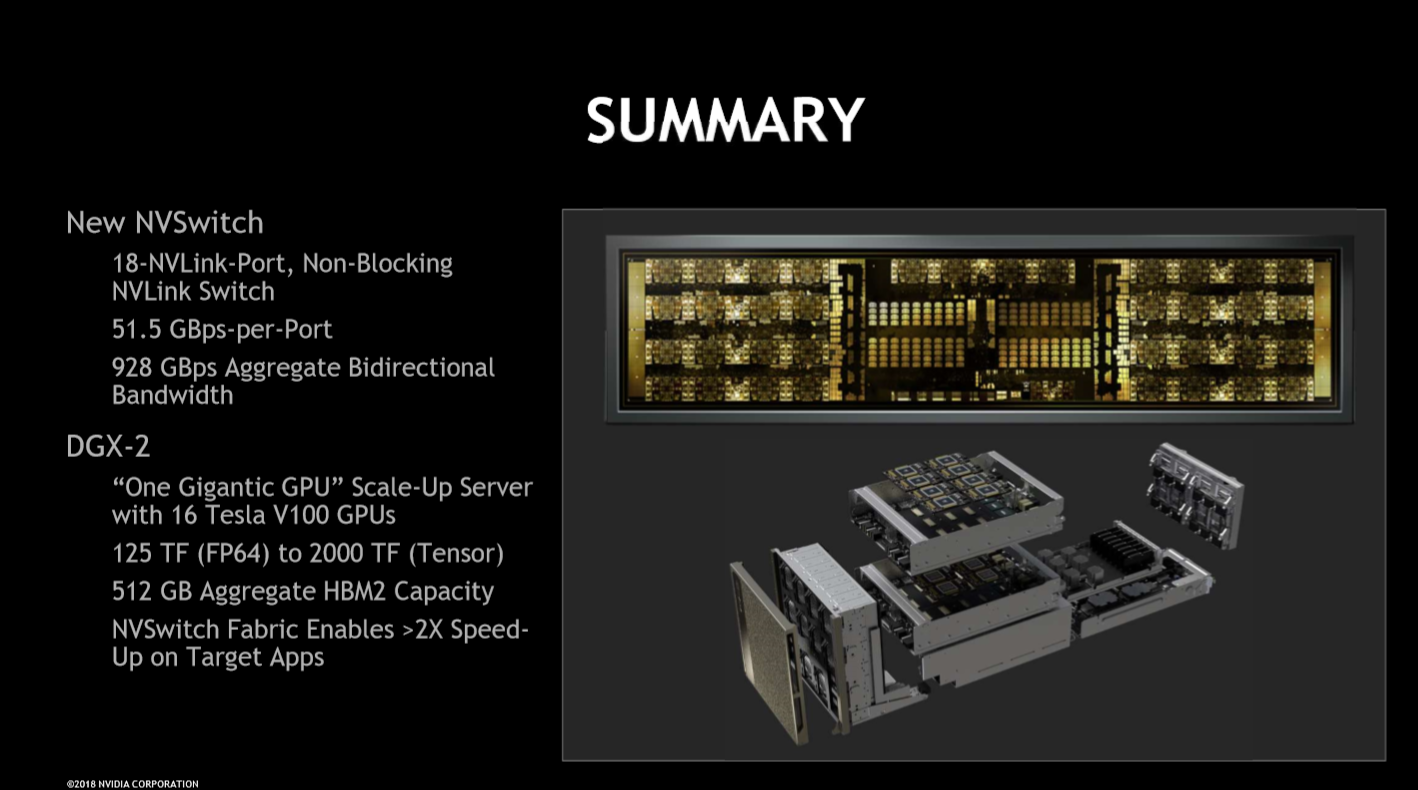

CUPERTINO, CALIF. -- Nvidia shared more details on its NVSwitch technology, the key enabling feature of its DGX-2 servers, here at Hot Chips 2018.

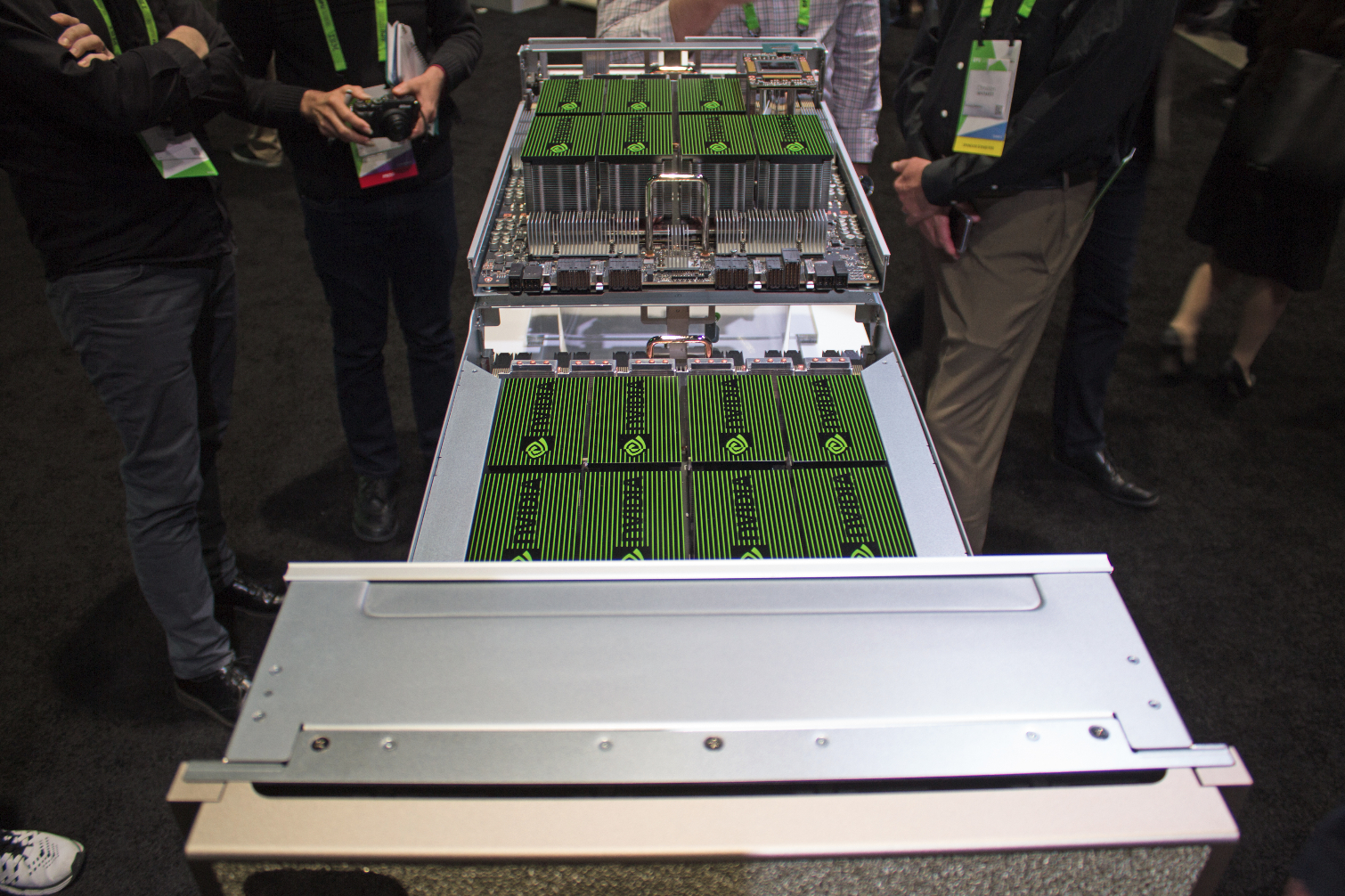

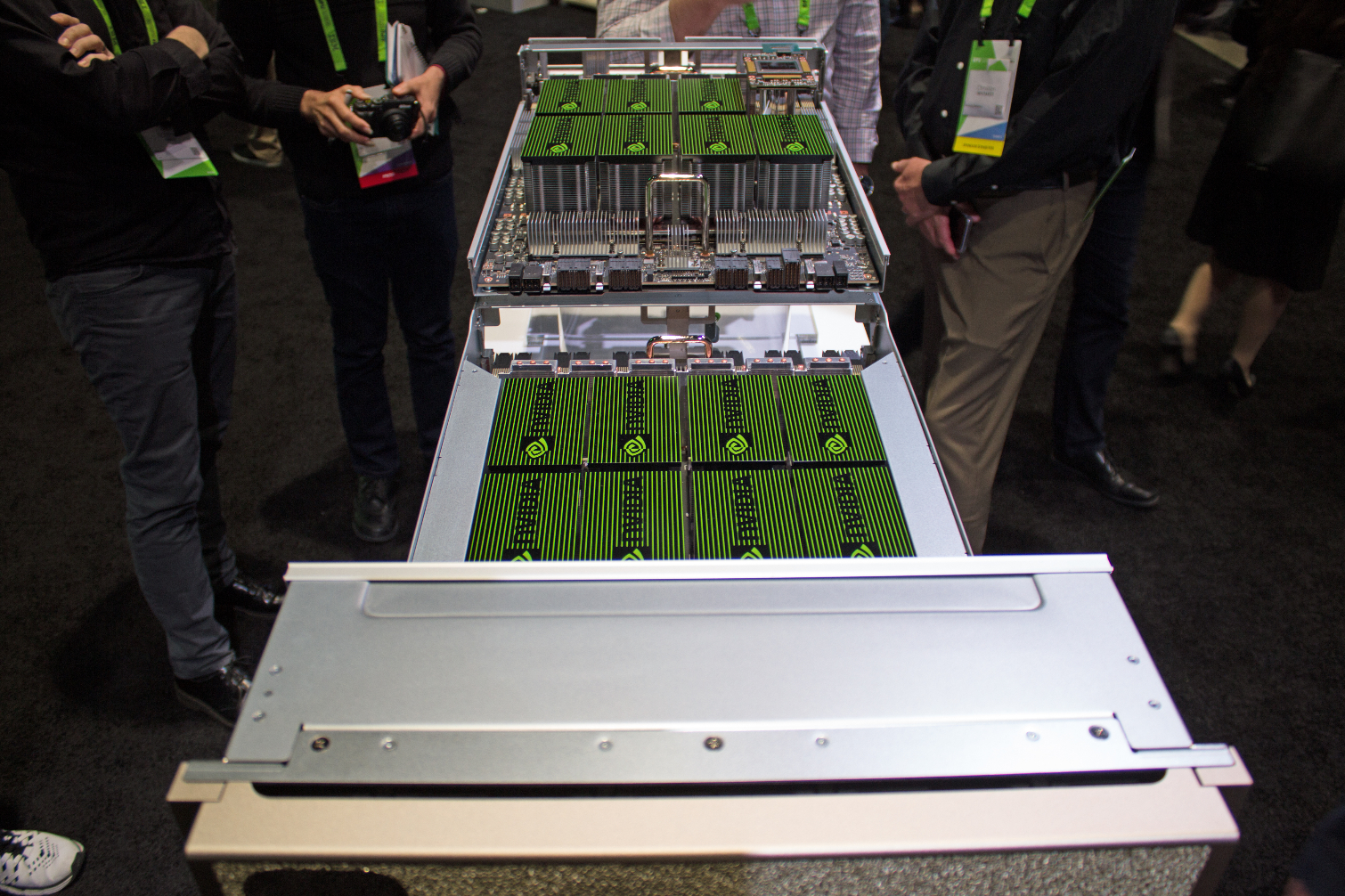

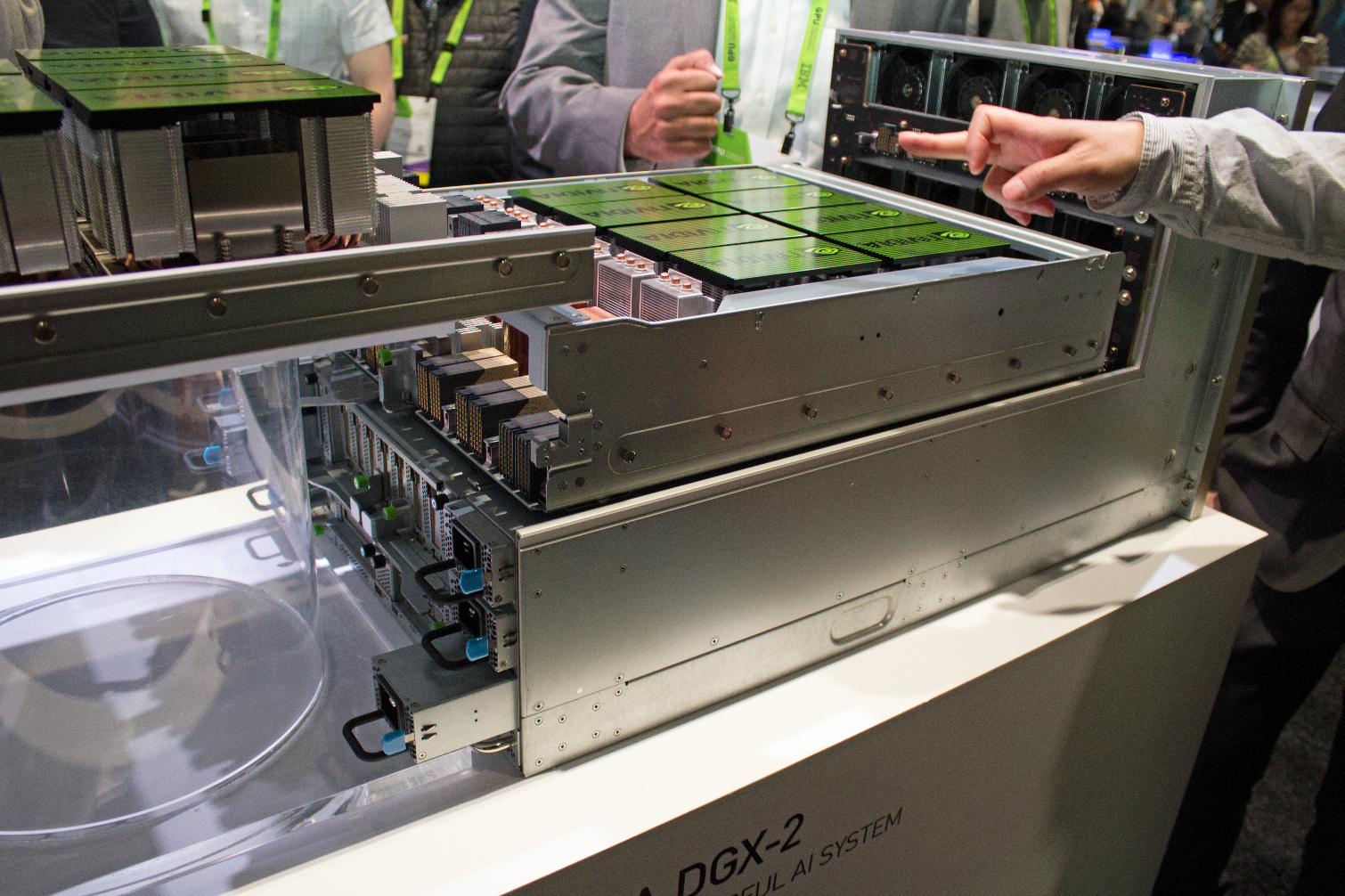

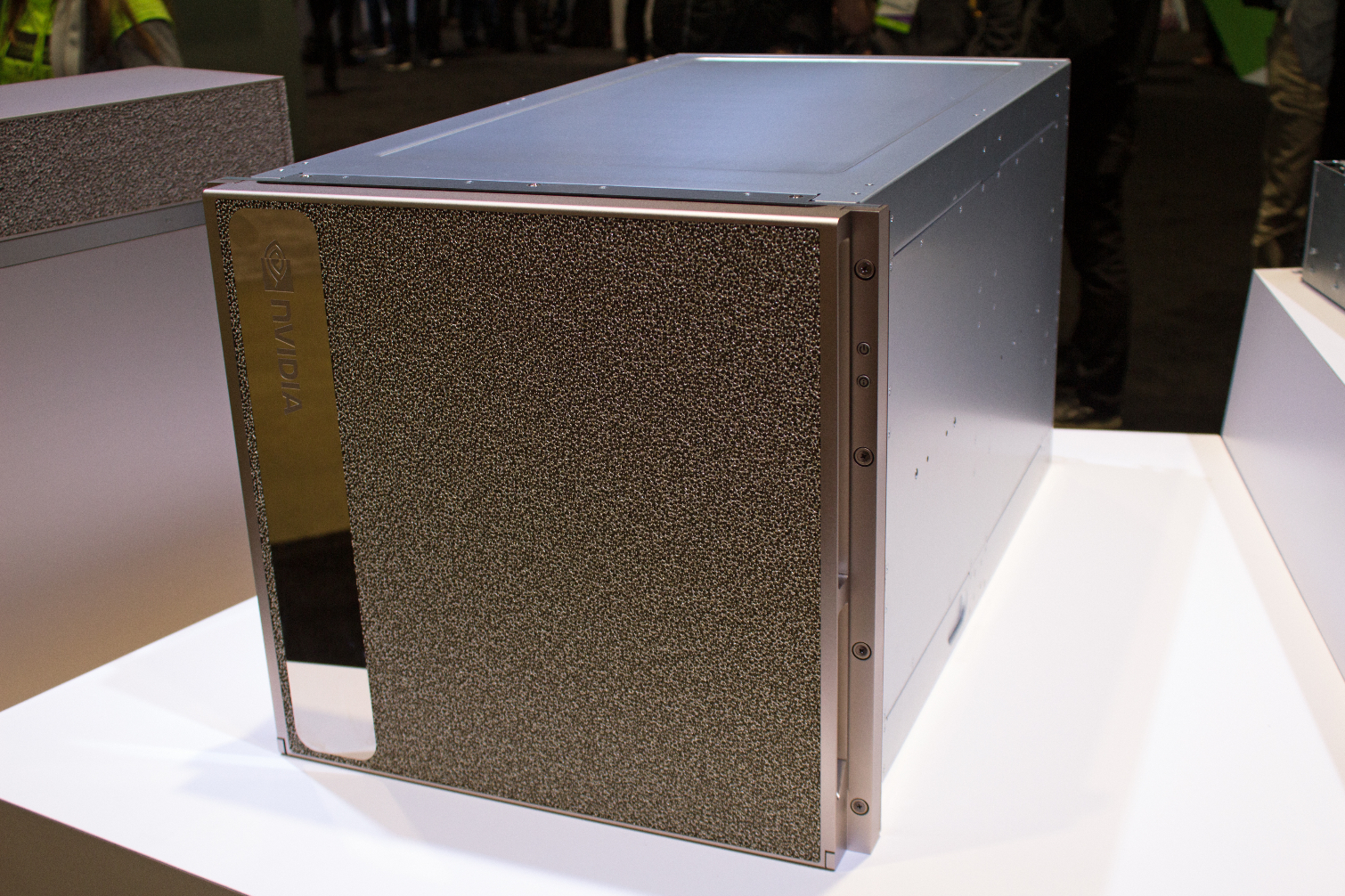

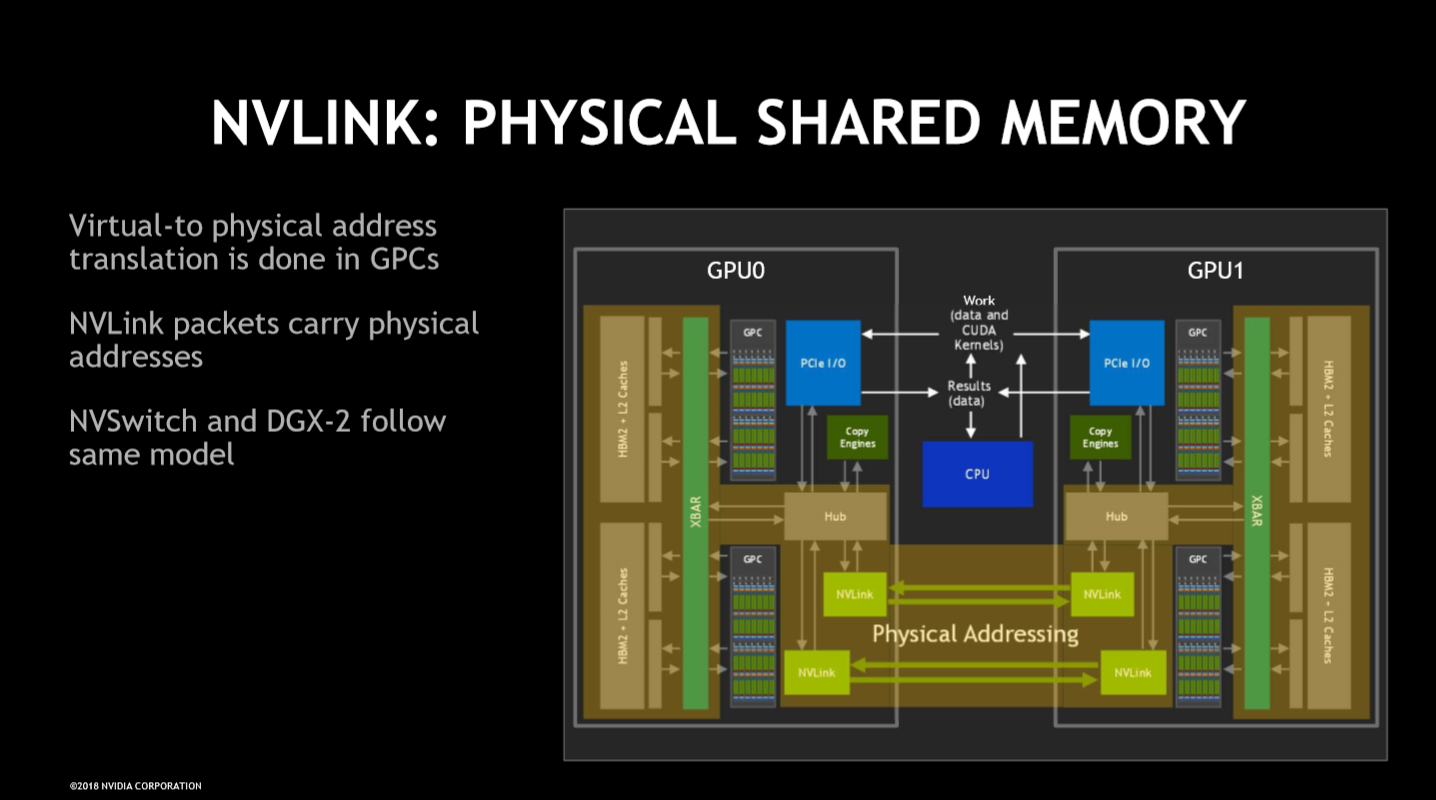

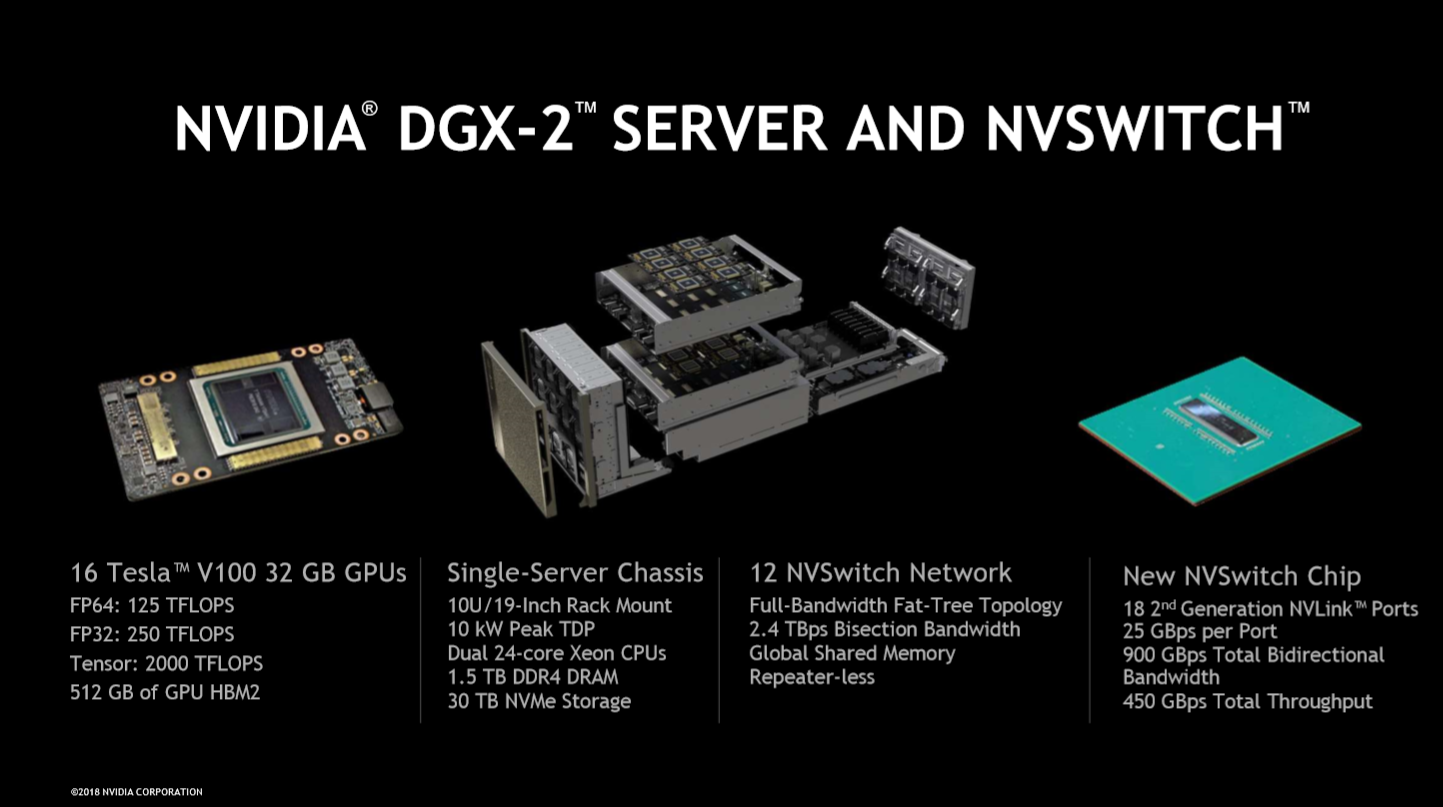

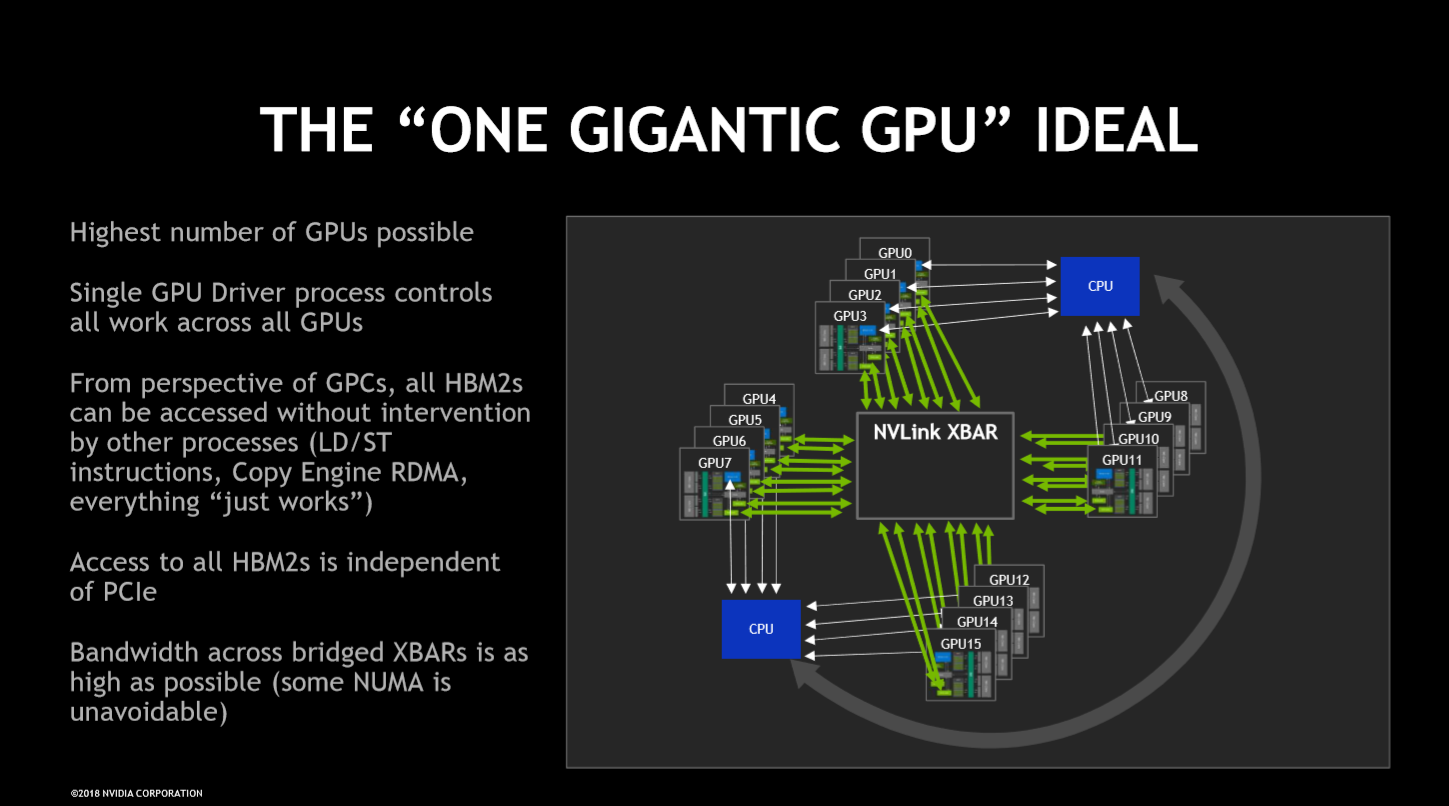

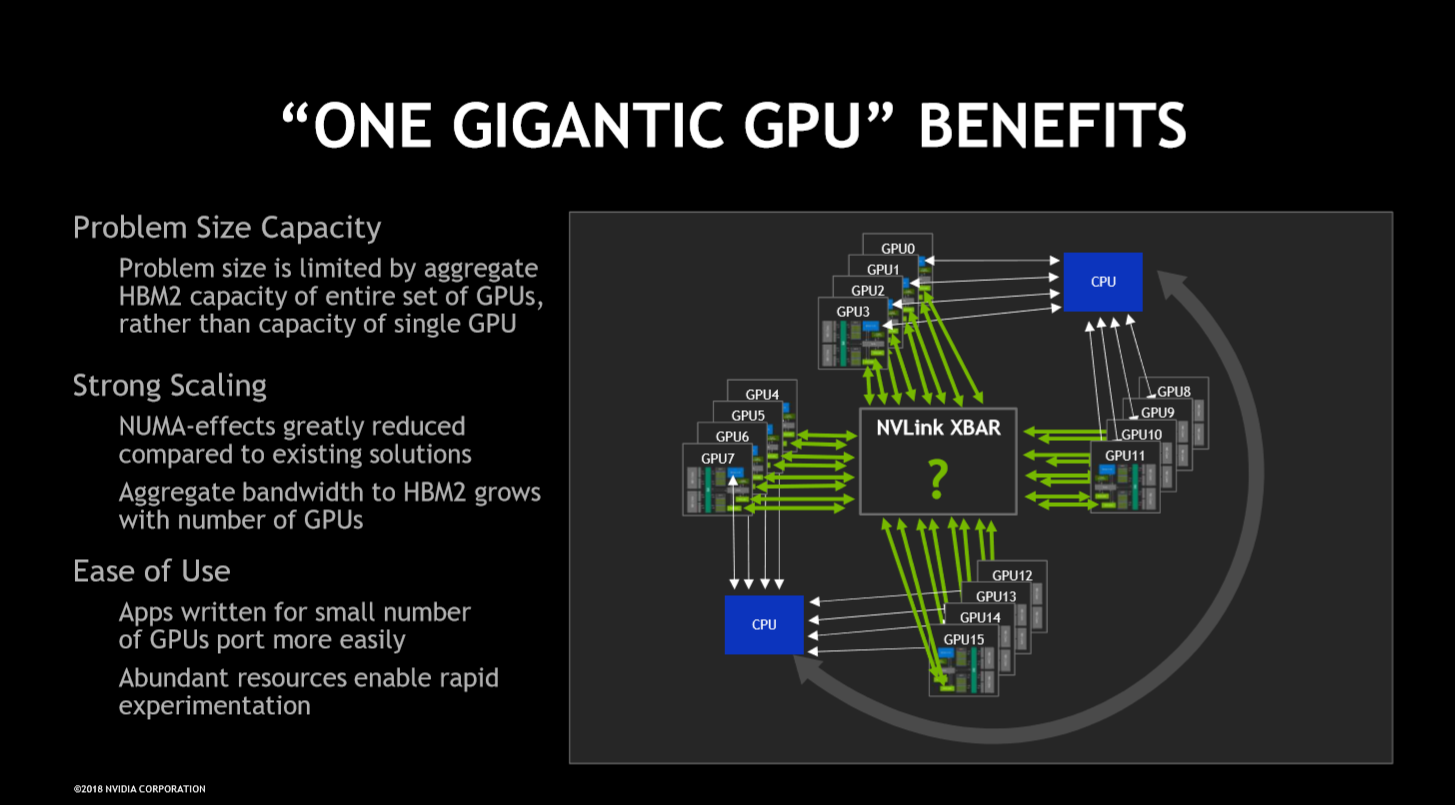

Nvidia CEO Jensen Huang famously introduced the DGX-2 server as the "World's Largest GPU" at GTC 2018. Huang based his fantastic claim on the fact that the system, which actually has 16 powerful Tesla V100 GPUs tied together with a flexible new GPU interconnect, presents itself to the host system as one large GPU with a unified memory space.

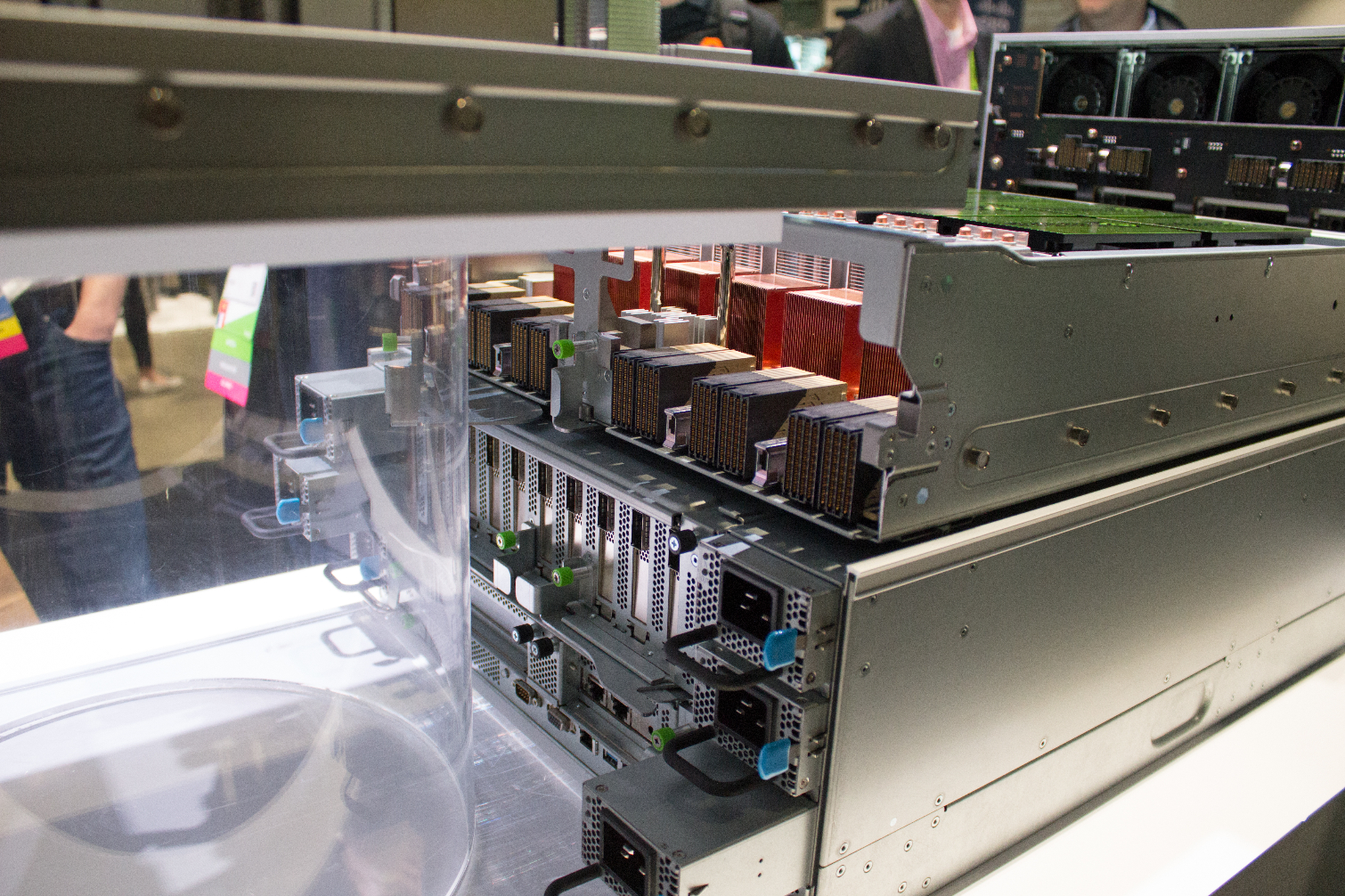

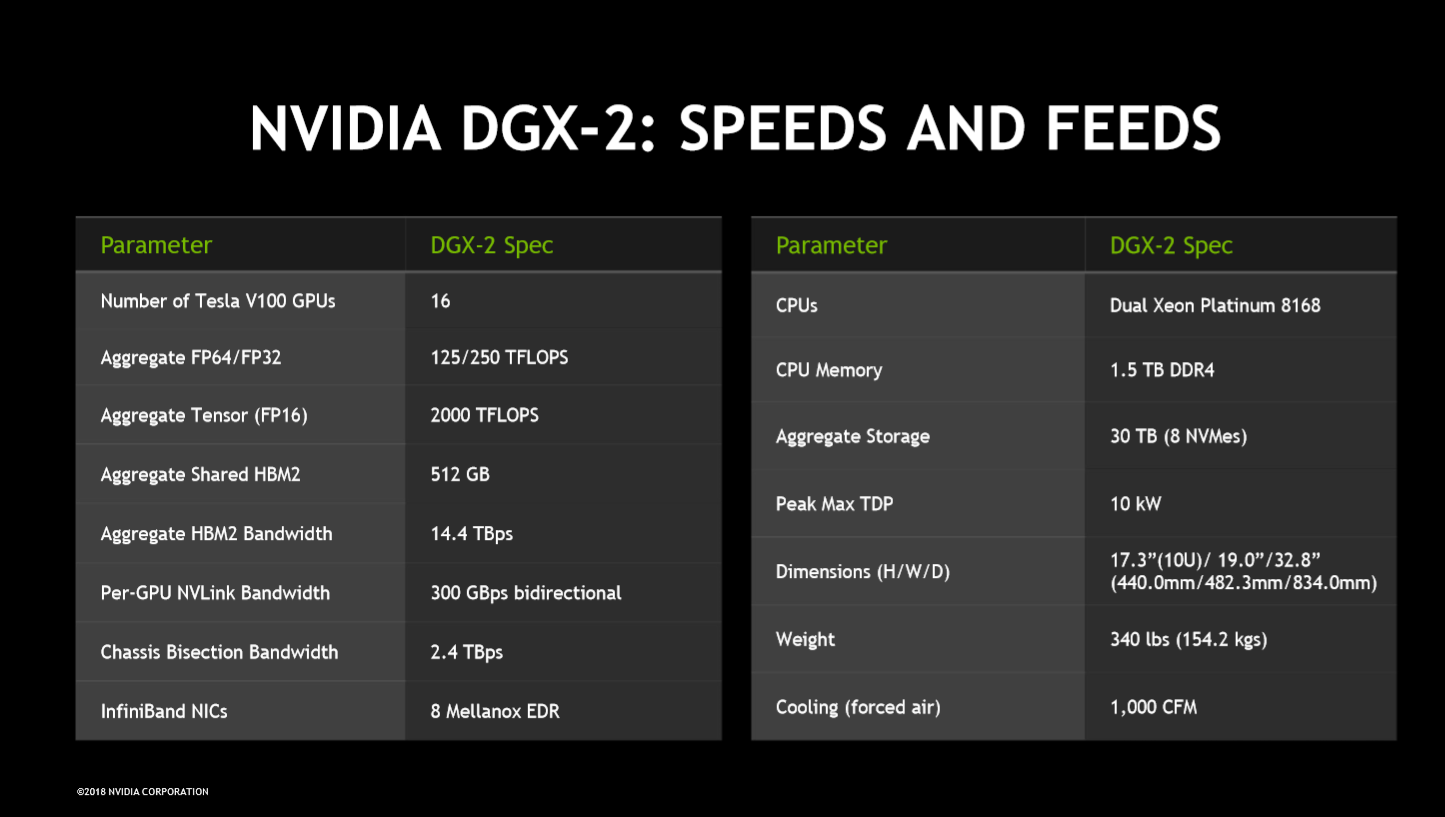

The DGX-2 comes with mind-bending specs and an eye-watering $400,000 price tag to match, though that pricing is actually quite competitive with rival solutions used by leading-edge data centers and AI researchers. AI development is in a rapid state of flux, and new advances arrive on an almost weekly basis. Many of these new deep learning models are much larger than previous versions and require more memory capacity and sheer computational horsepower.

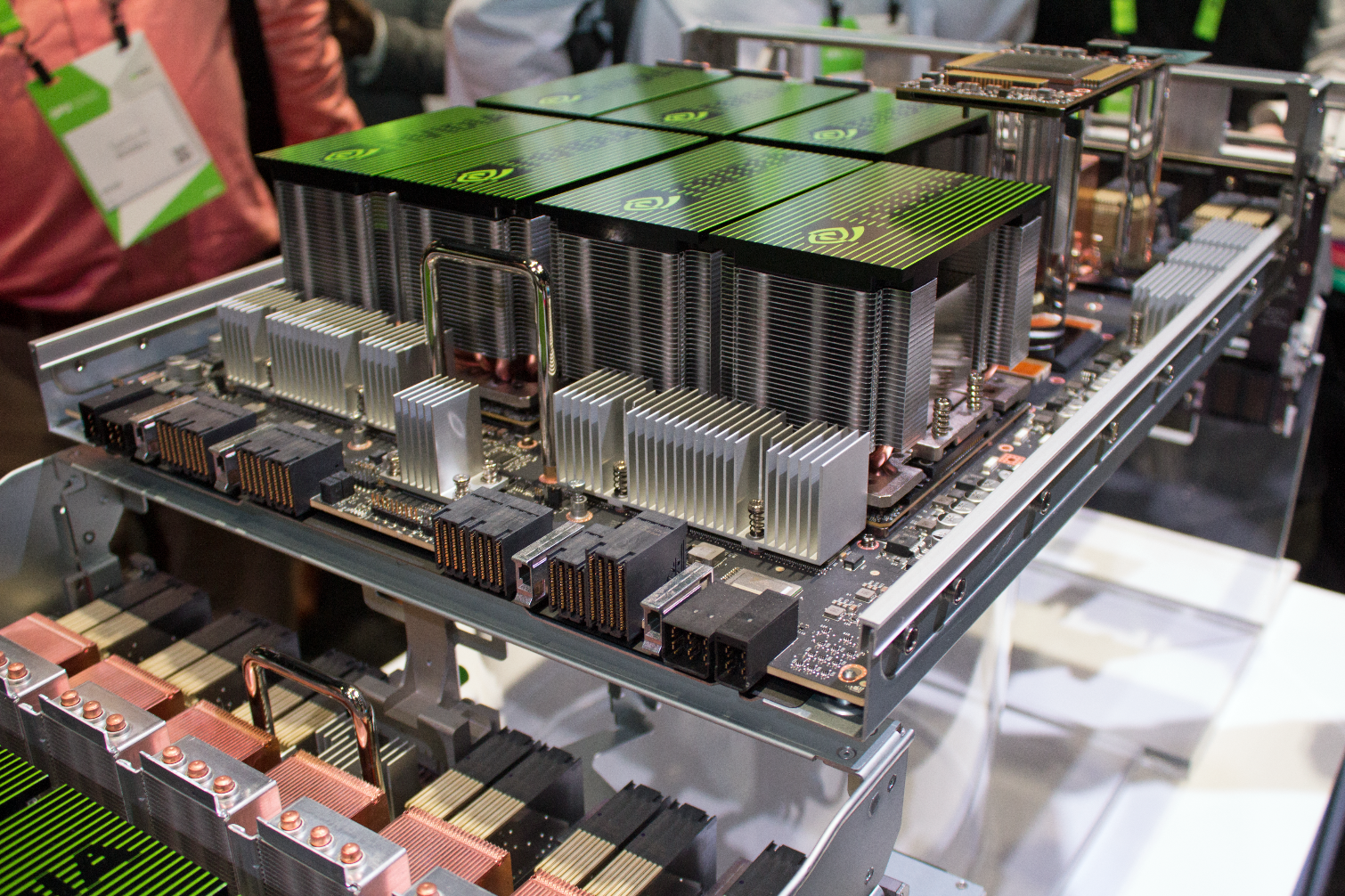

Nvidia's solution is to tie sixteen GPUs together into a unified memory space backed by a massive 512GB of HBM2. The DGX-2 wields 81,920 CUDA cores, and an additional 10,240 Tensor cores chip in for AI workloads. These beefy resources require an astonishing 10kW of power.
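The system-wide totals are simply the per-GPU figures multiplied by sixteen. The sketch below assumes the standard full V100 configuration of 5,120 CUDA cores and 640 Tensor cores per GPU, plus the 32GB of HBM2 per SXM3 package cited in this article; the function name is illustrative only.

```python
# Per-GPU figures for the Tesla V100 as used in the DGX-2.
# Core counts are the standard full-V100 configuration (assumed here);
# the 32GB HBM2 capacity per package comes from the article.
GPUS = 16
CUDA_CORES_PER_GPU = 5120
TENSOR_CORES_PER_GPU = 640
HBM2_GB_PER_GPU = 32


def dgx2_totals(gpus: int = GPUS) -> dict:
    """Return system-wide resource totals for a given number of V100 GPUs."""
    return {
        "cuda_cores": gpus * CUDA_CORES_PER_GPU,
        "tensor_cores": gpus * TENSOR_CORES_PER_GPU,
        "hbm2_gb": gpus * HBM2_GB_PER_GPU,
    }


if __name__ == "__main__":
    for name, value in dgx2_totals().items():
        print(f"{name}: {value:,}")
```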

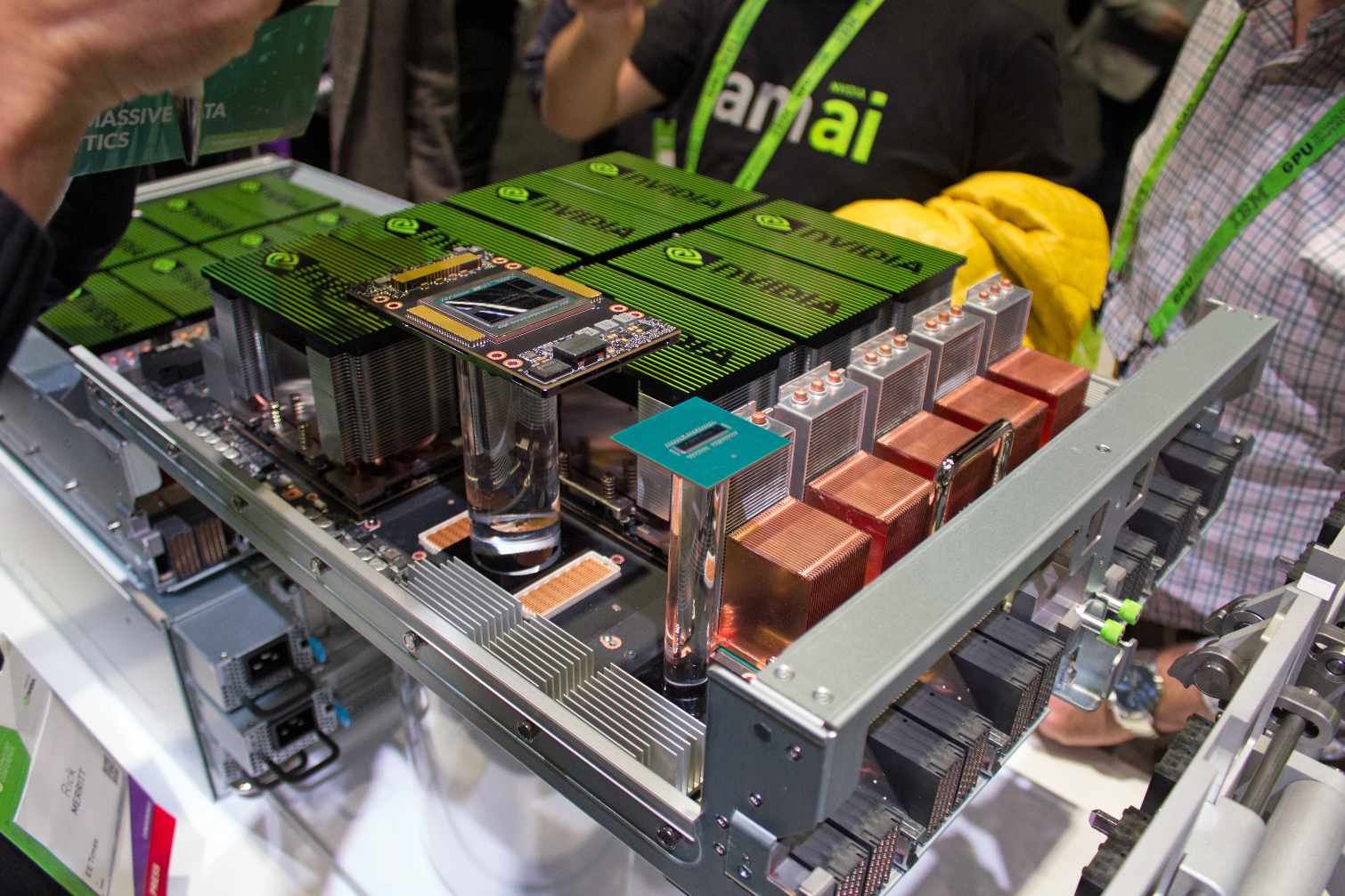

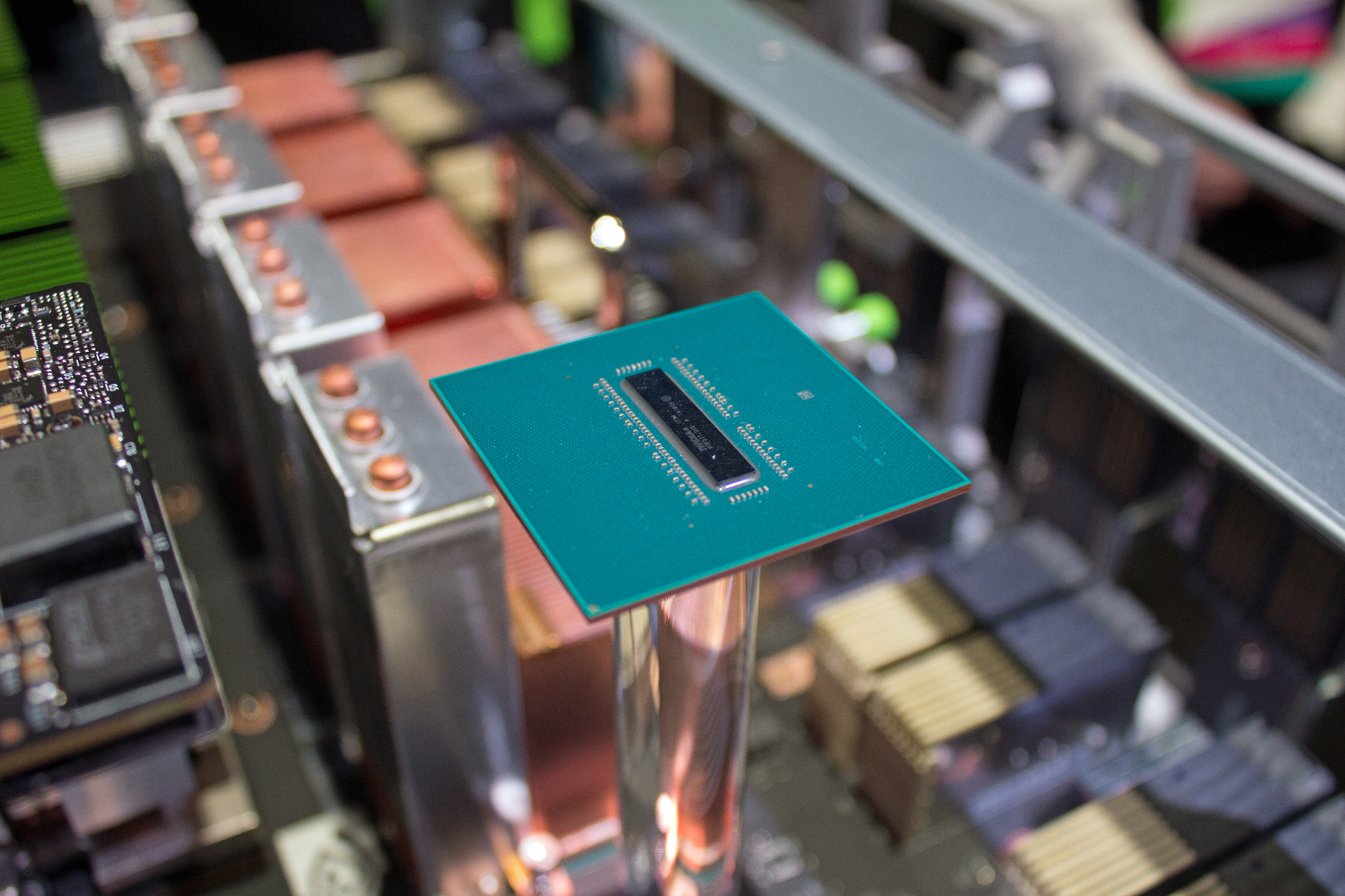

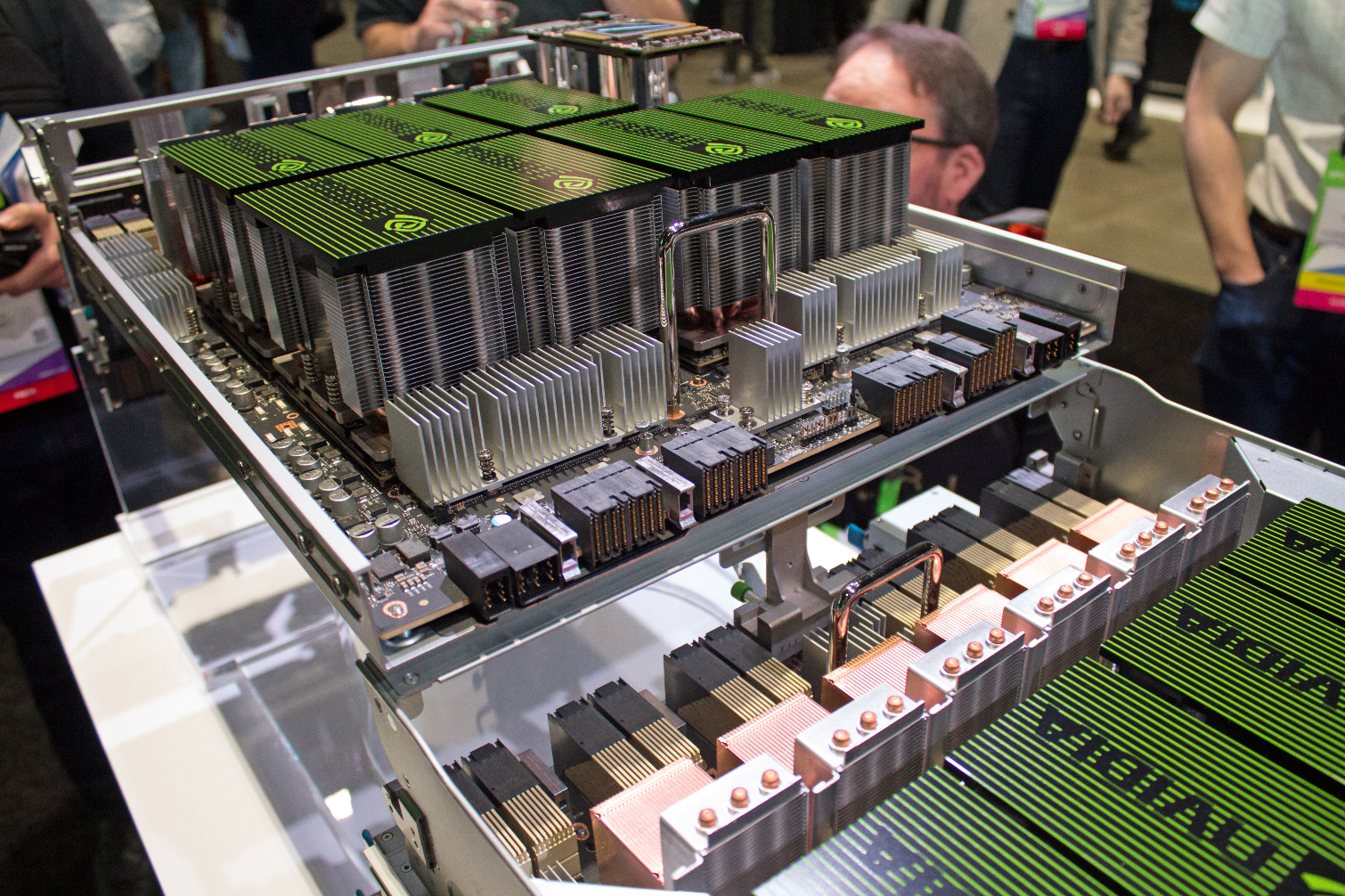

The Tesla V100 is a formidable beast: the 815mm² die is the size of a full reticle and wields 21 billion transistors. The massive die perches atop Nvidia's new SXM3 package found in the DGX-2 system. The updated package runs at 350W (a 50W increase over the older SXM2 model) and comes with 32GB of HBM2. Nvidia confirmed the extra 50W of power is dedicated to boosting the GPU's clock rate, but the company isn't sharing specific frequencies.

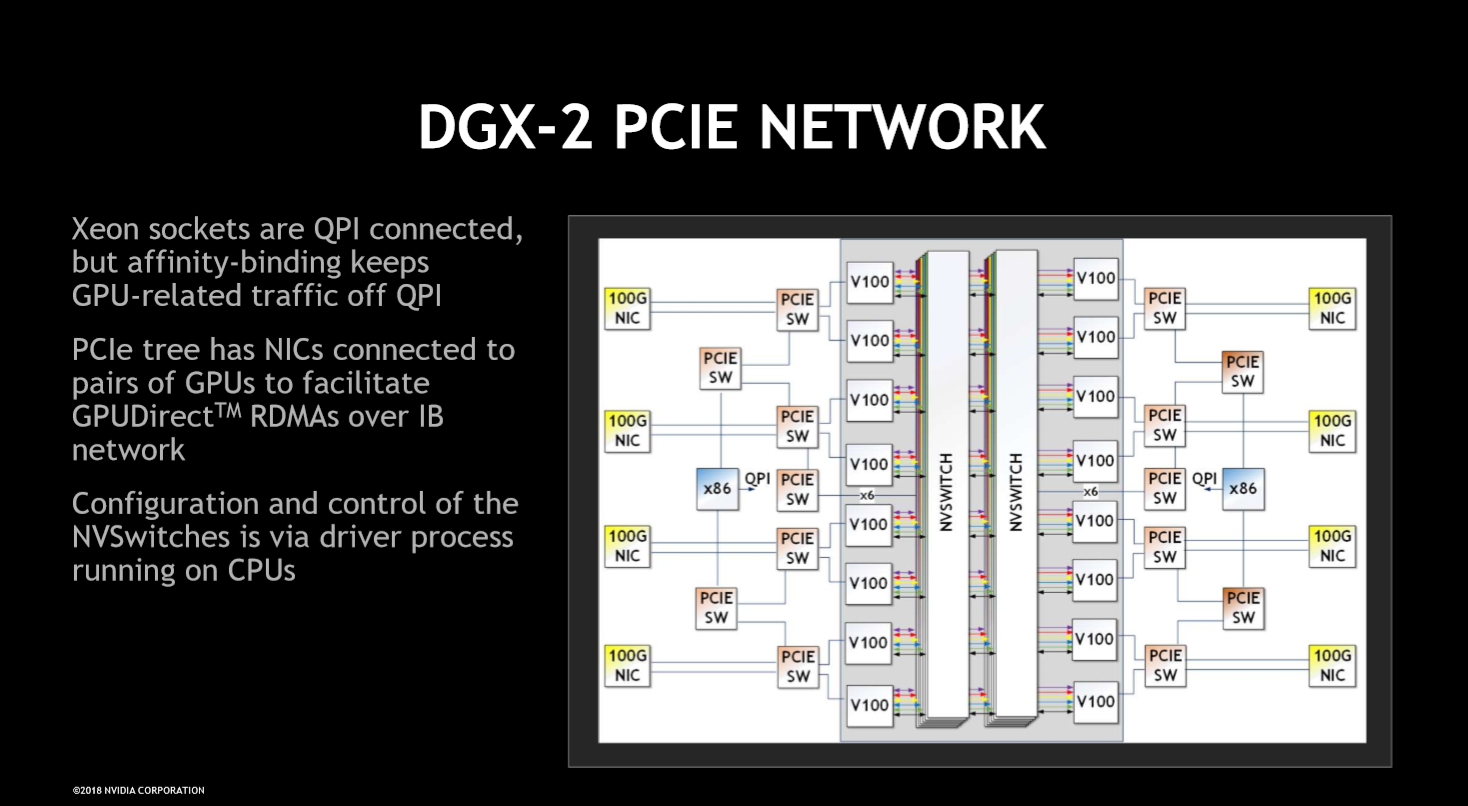

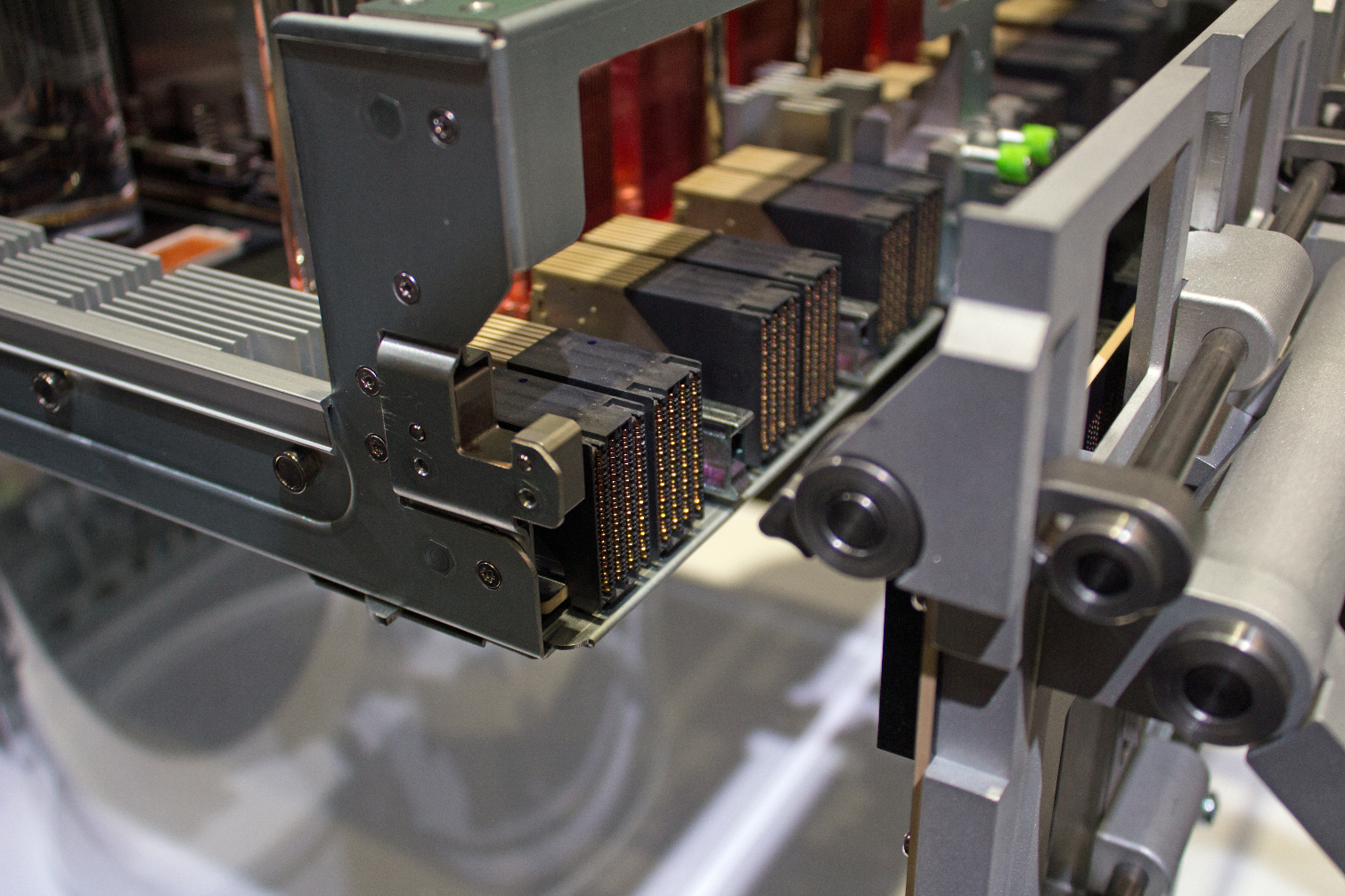

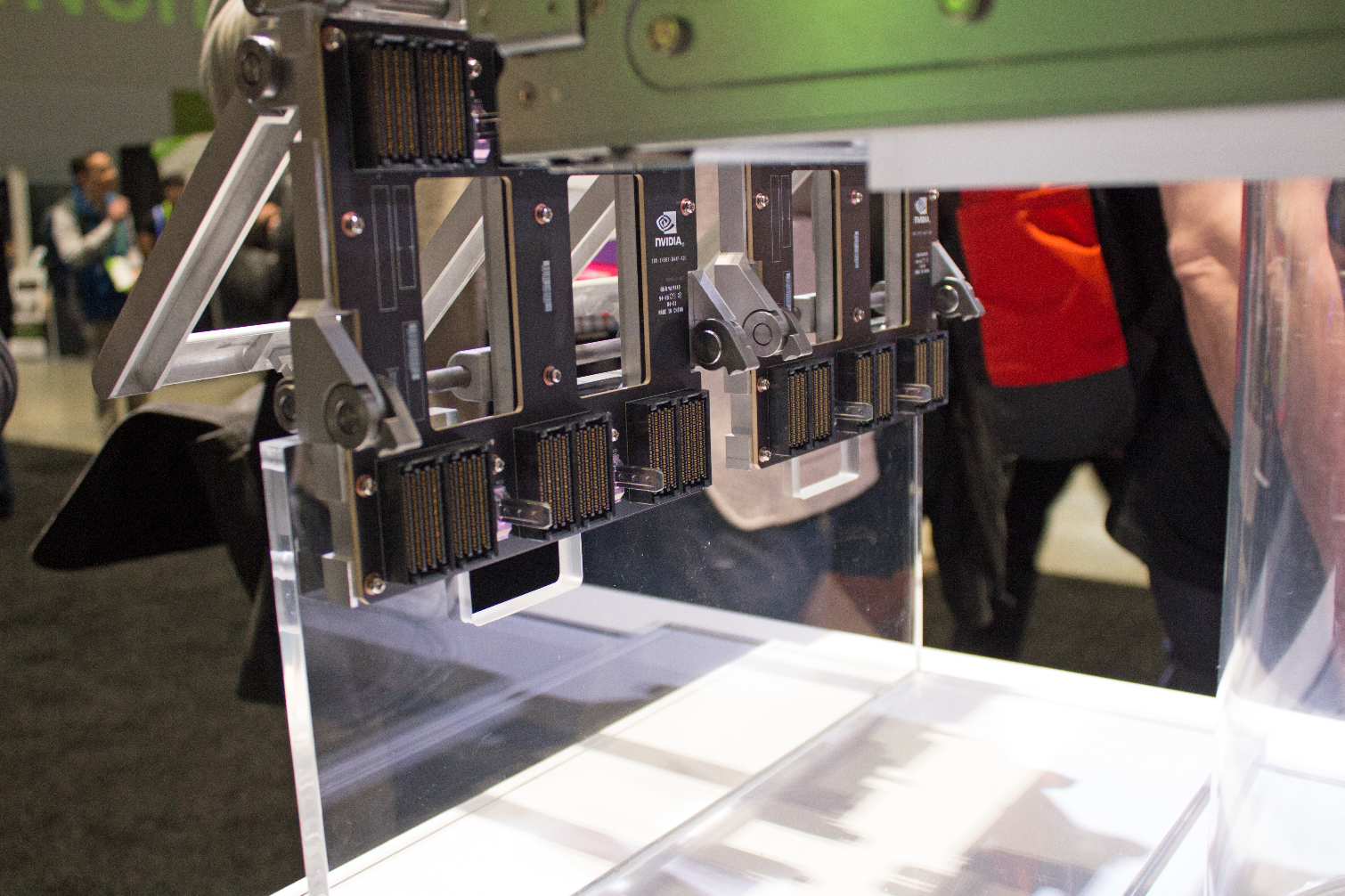

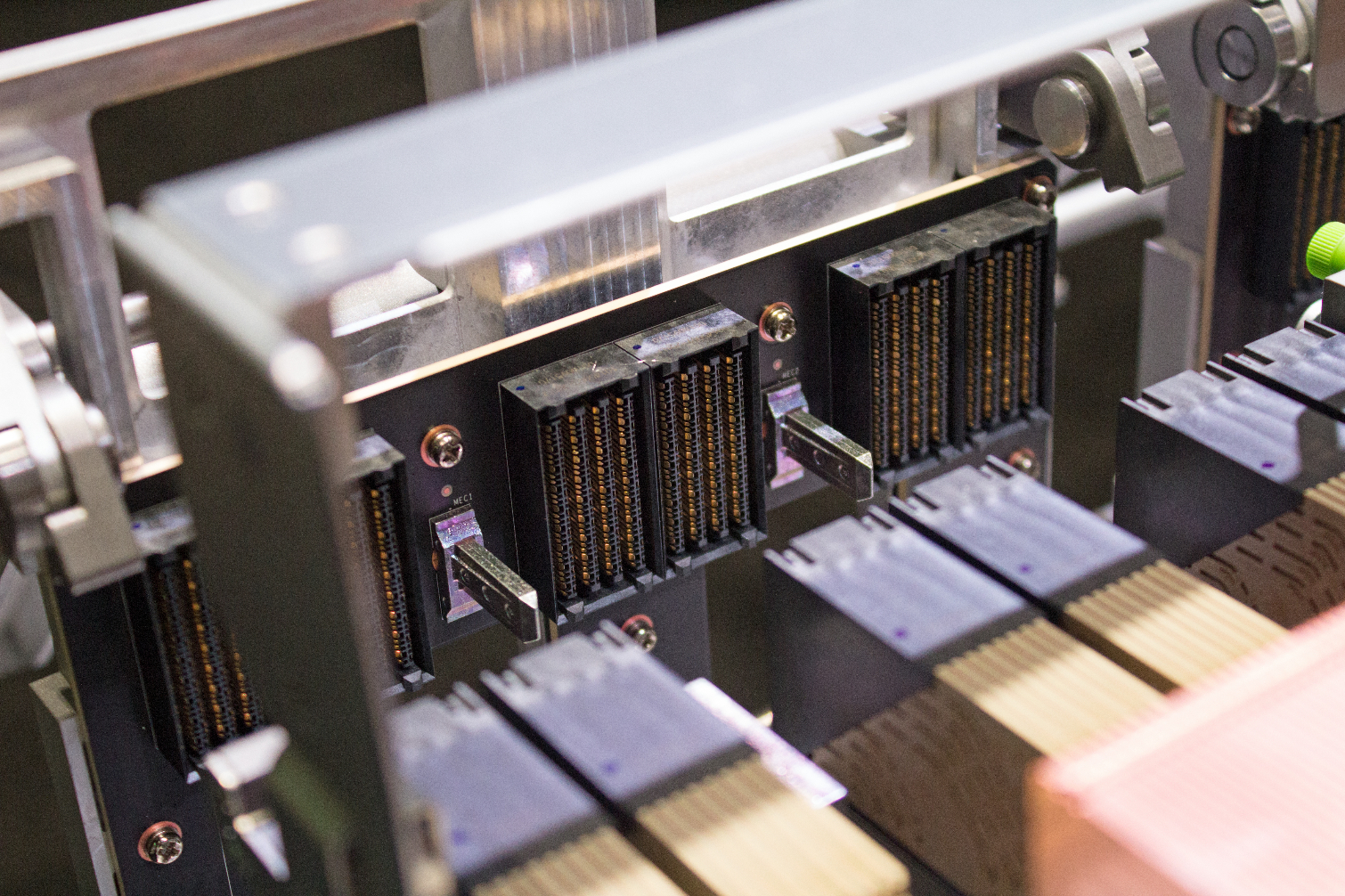

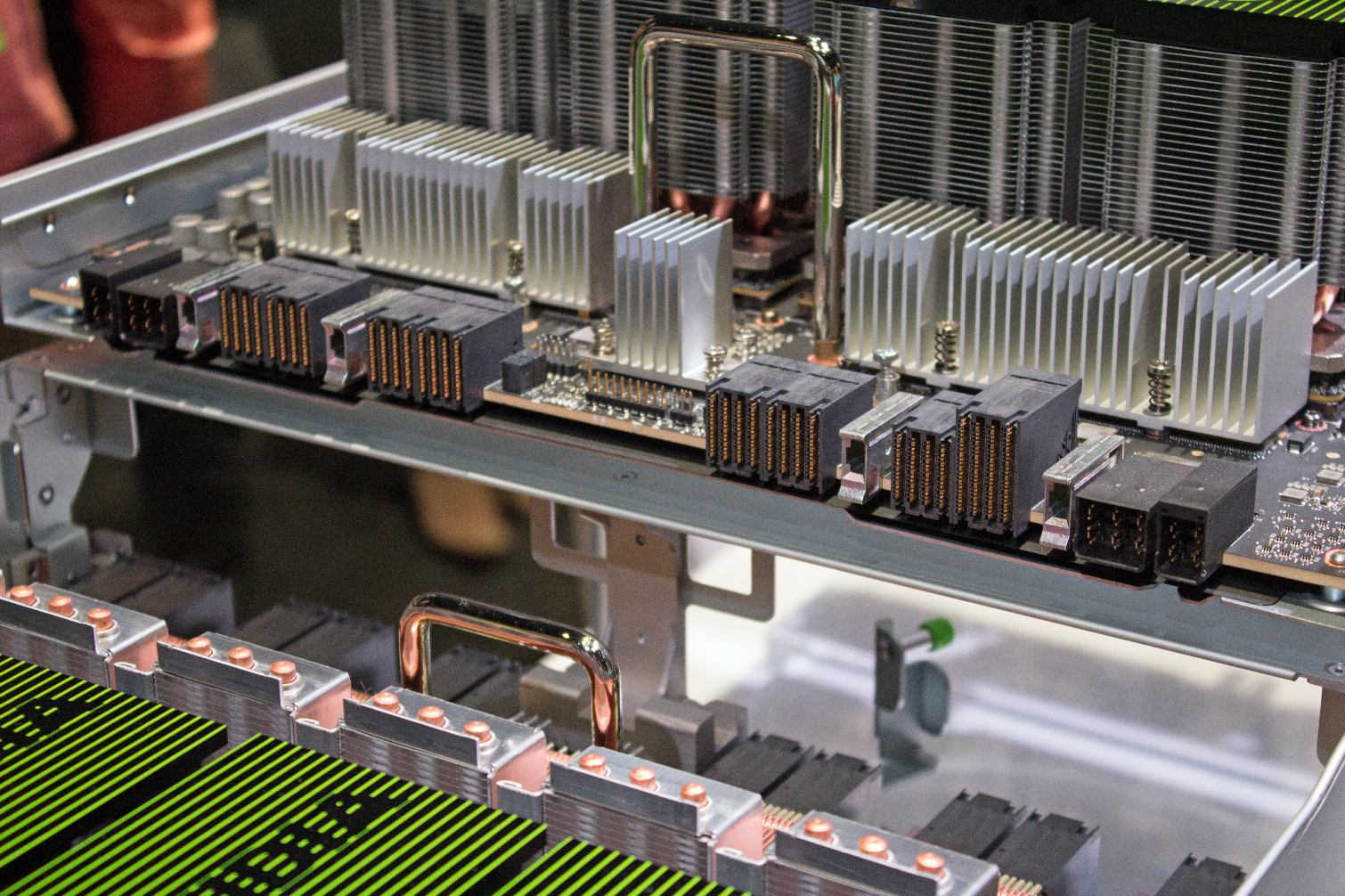

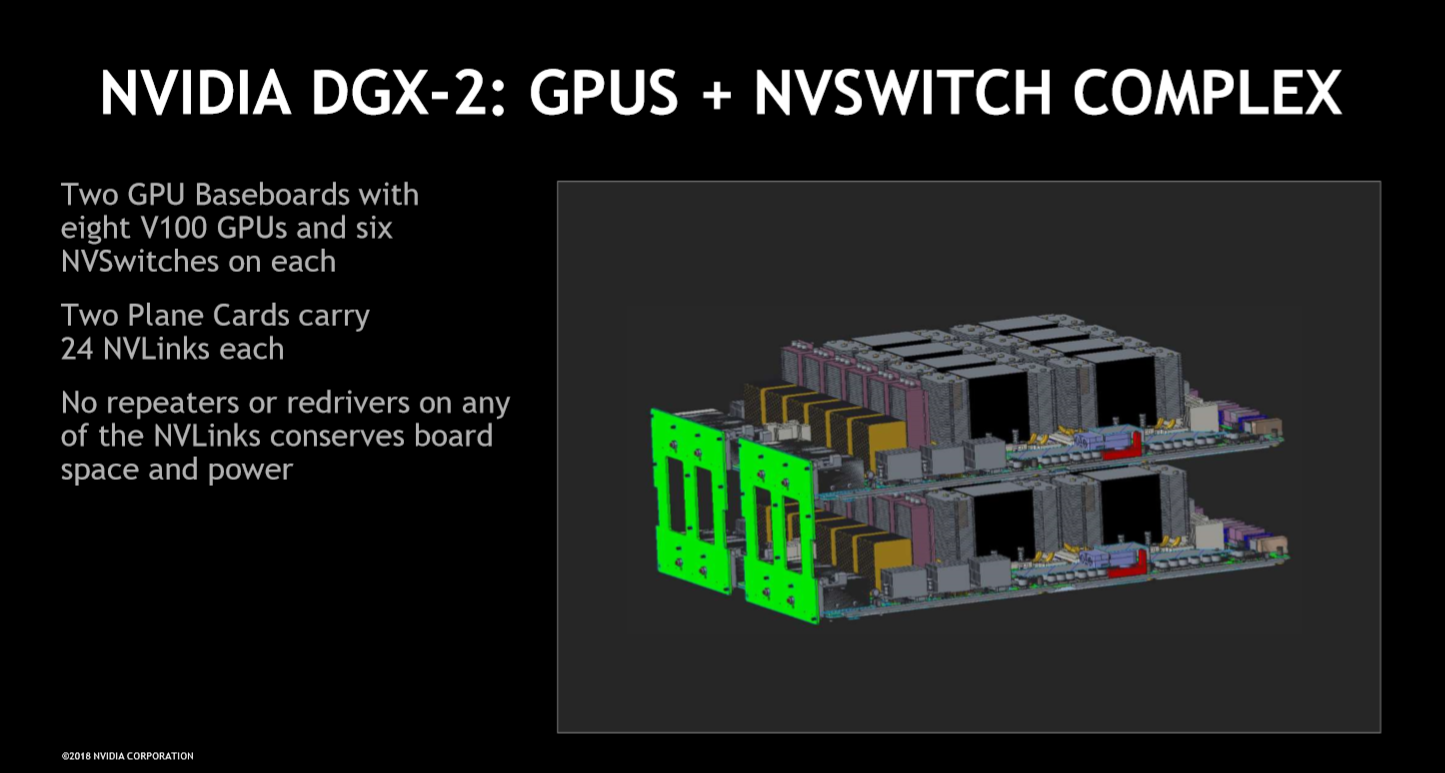

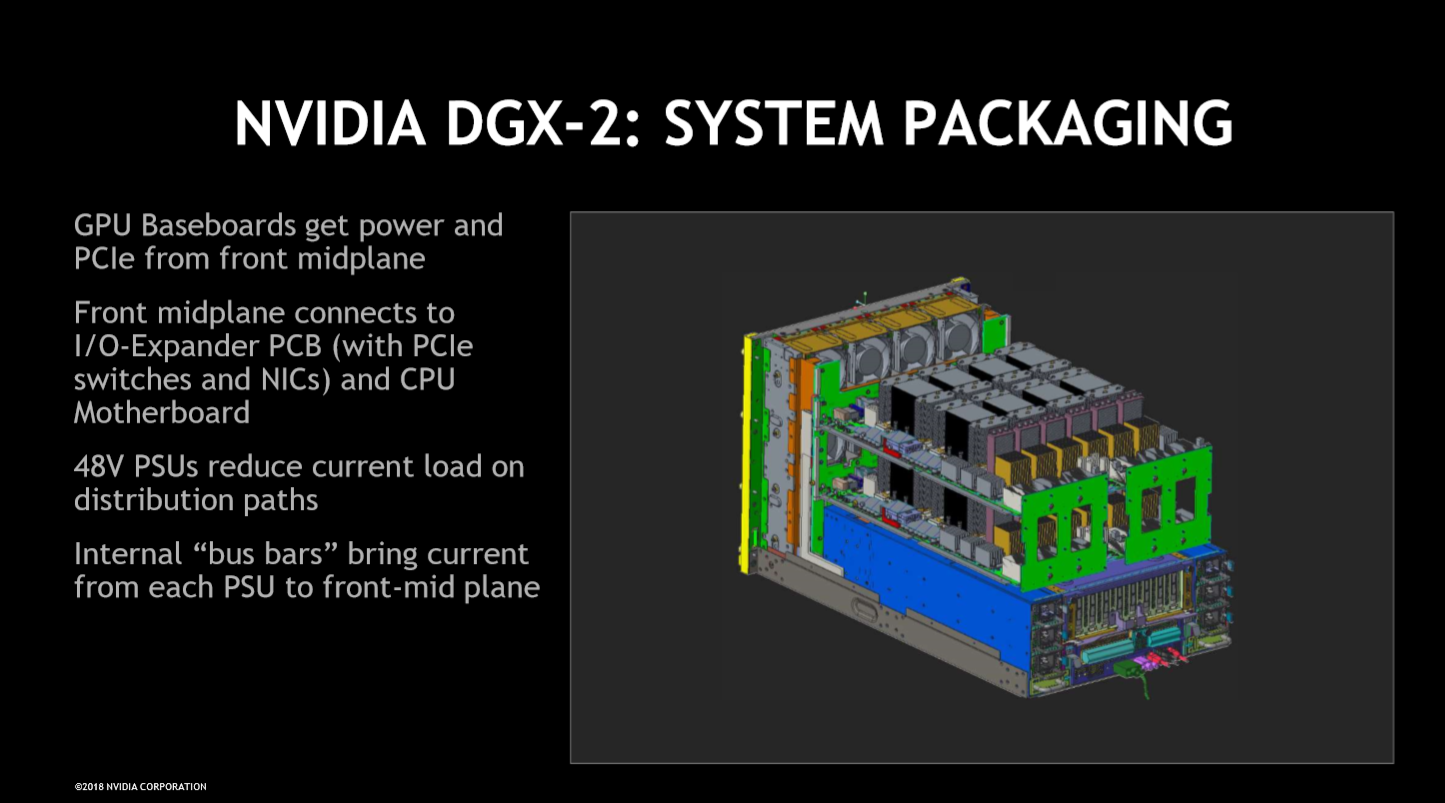

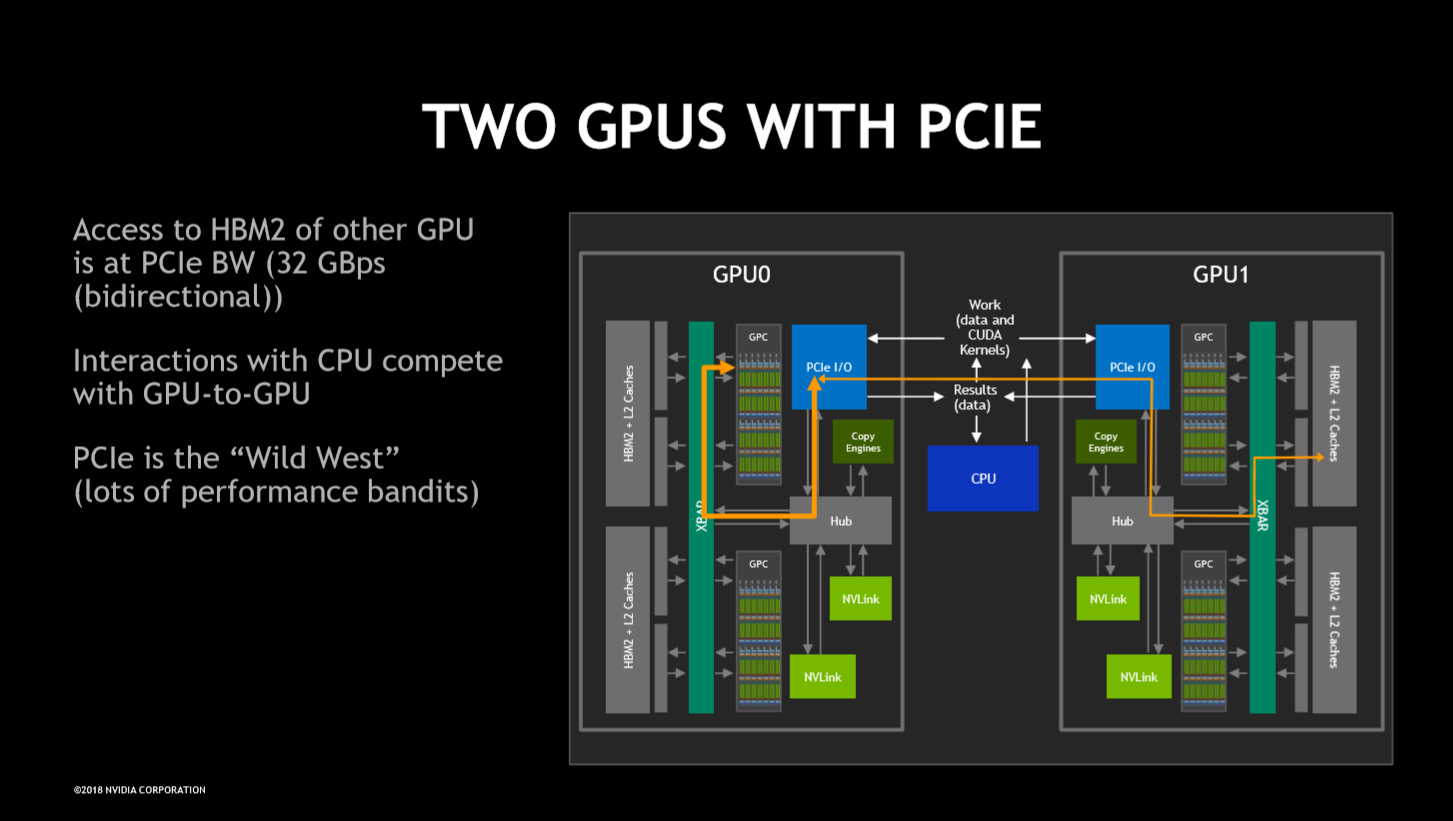

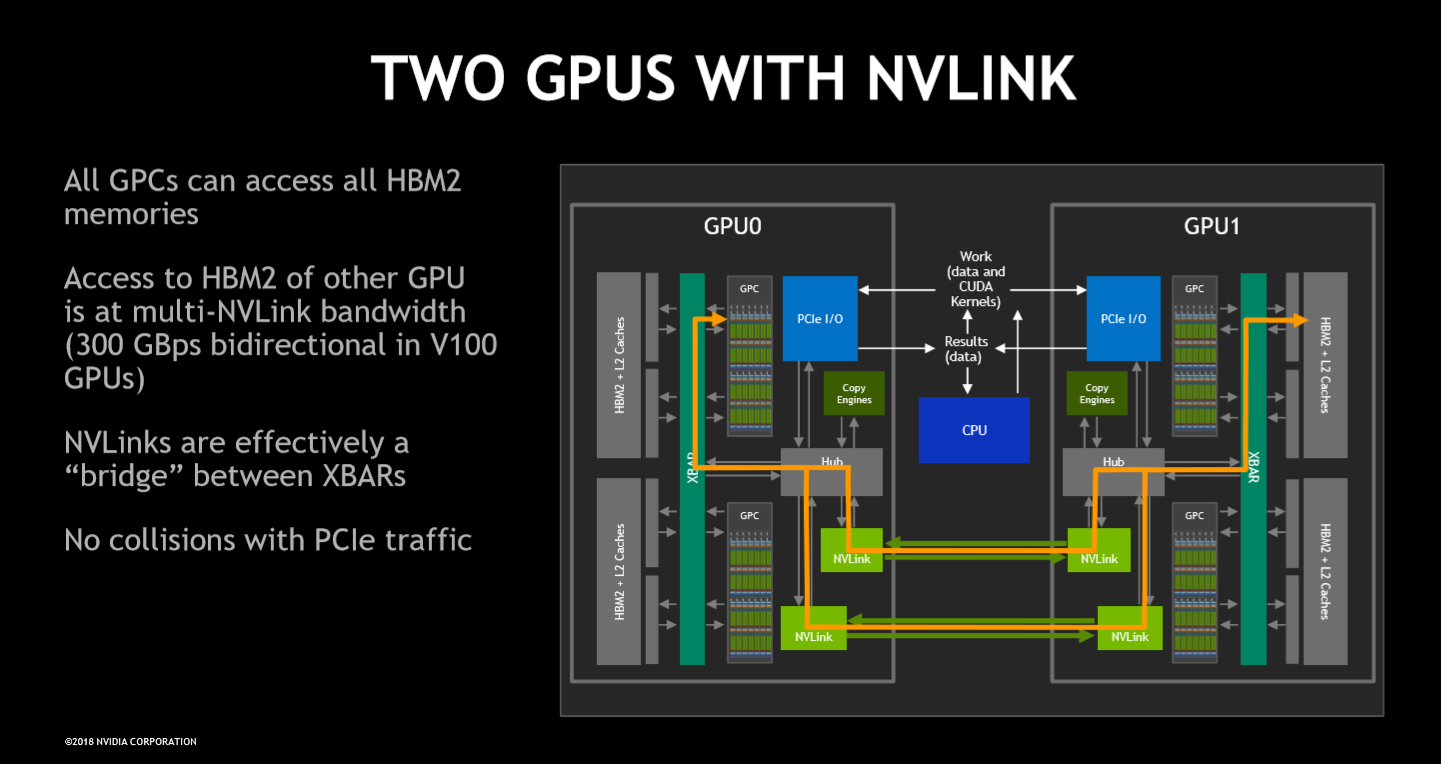

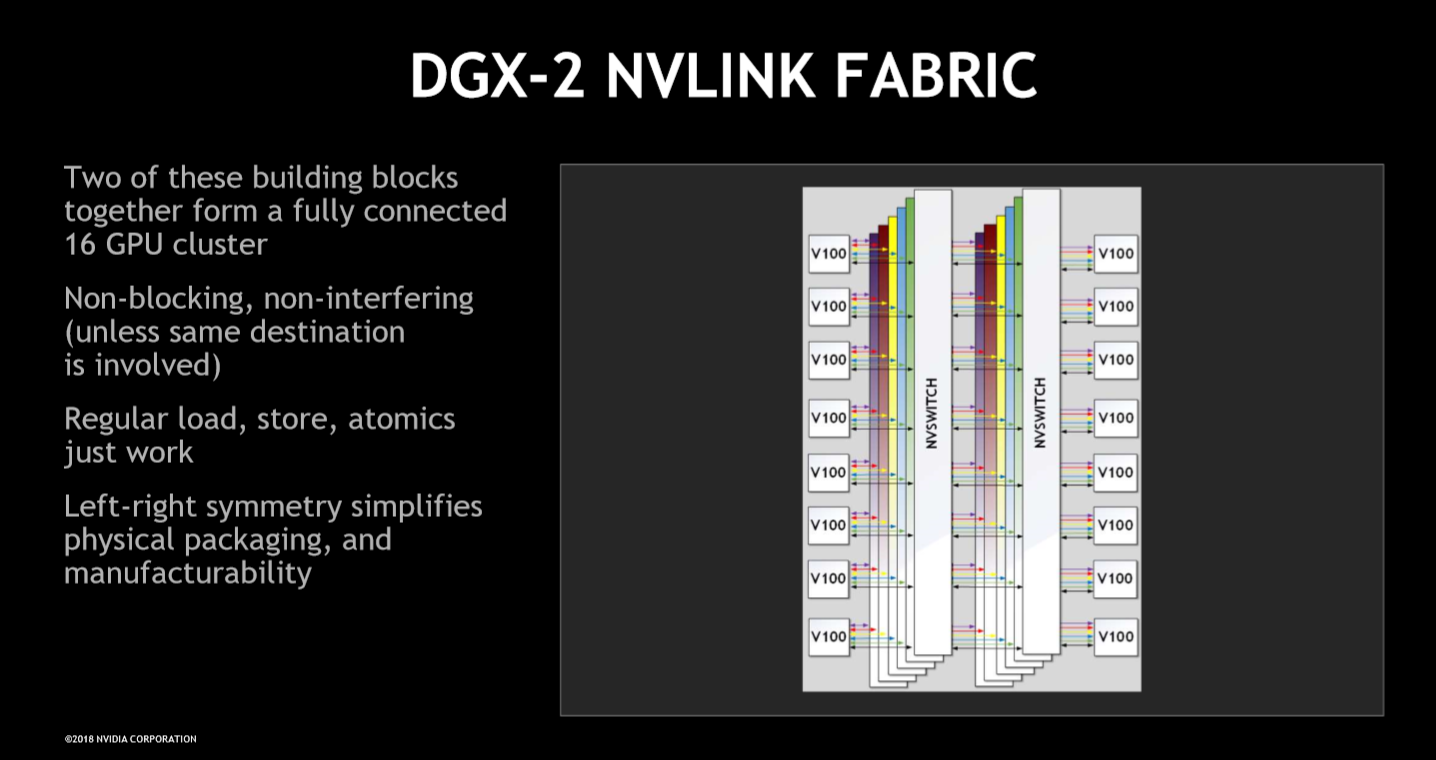

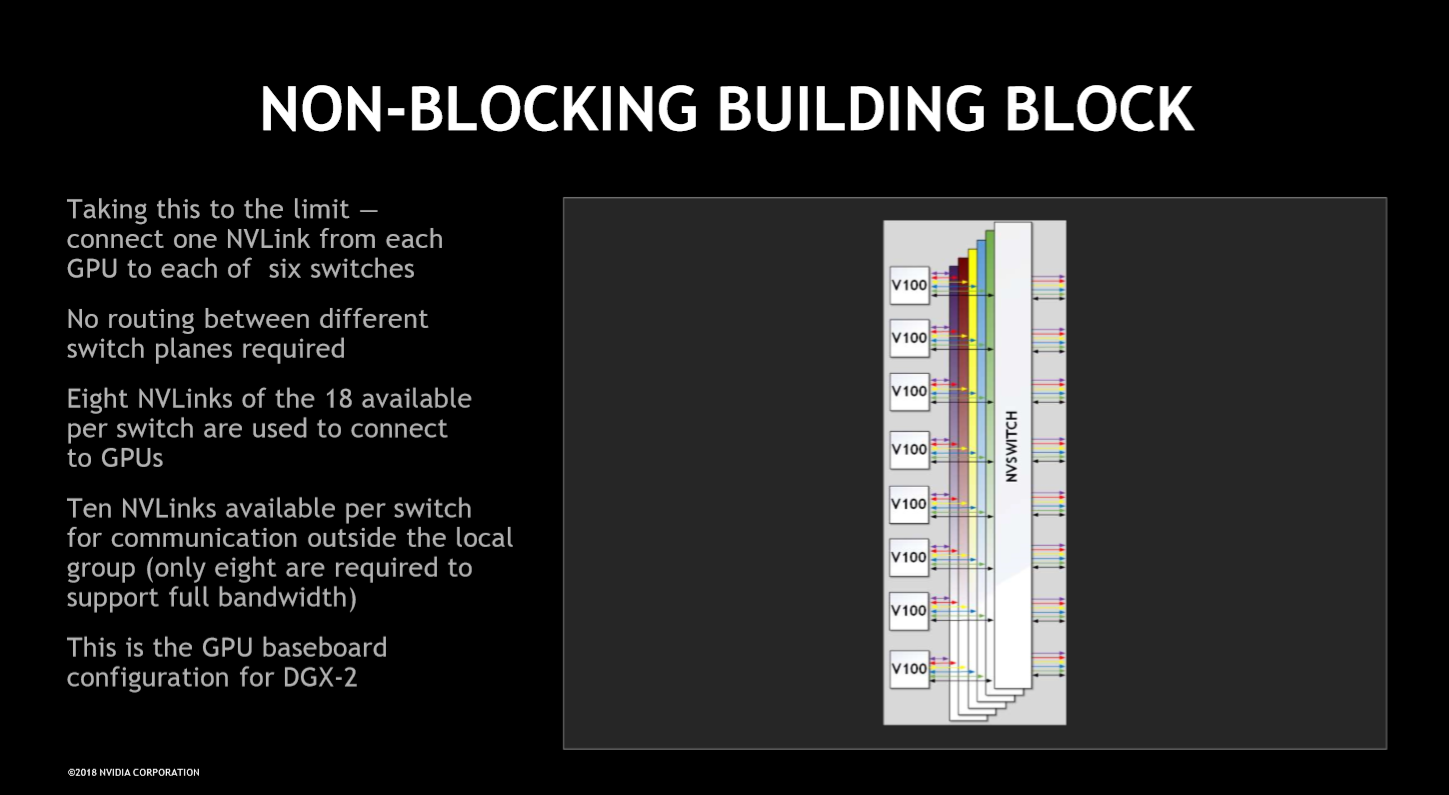

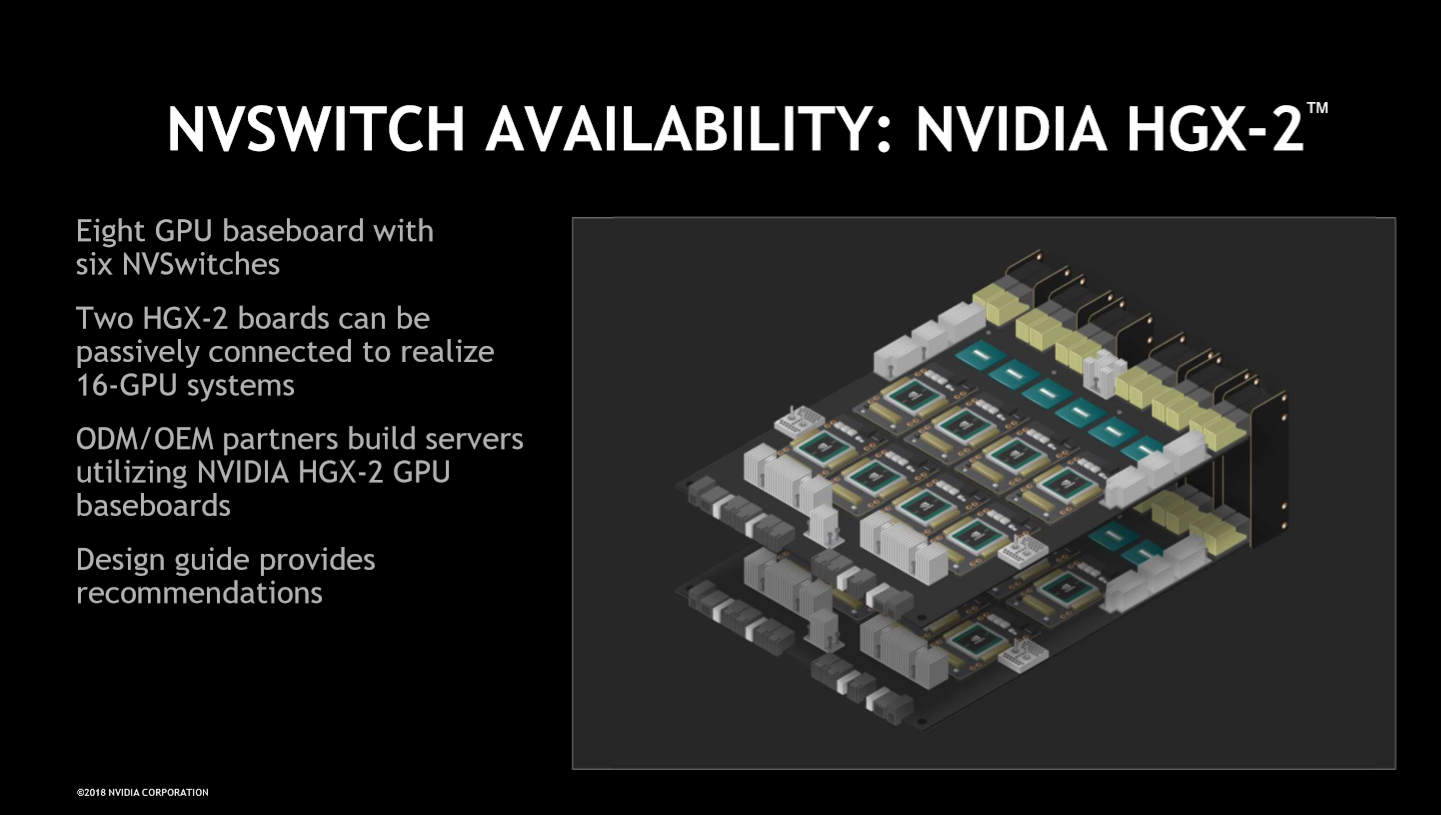

The DGX-2 features two distinct communication topologies, thus sidestepping the limitations of the PCIe interface for GPU-to-GPU communication. Each V100 GPU interfaces with the motherboard via two mezzanine connectors: One carries PCIe traffic to the passive backplane on the front of the server, while the other carries NVLink traffic to the rear backplane. These backplanes facilitate communication between the top and bottom system boards that house eight V100 GPUs apiece. The PCIe topology features four switches that connect the CPUs, RDMA-capable networking, and up to 30TB of NVMe SSDs to the GPUs.

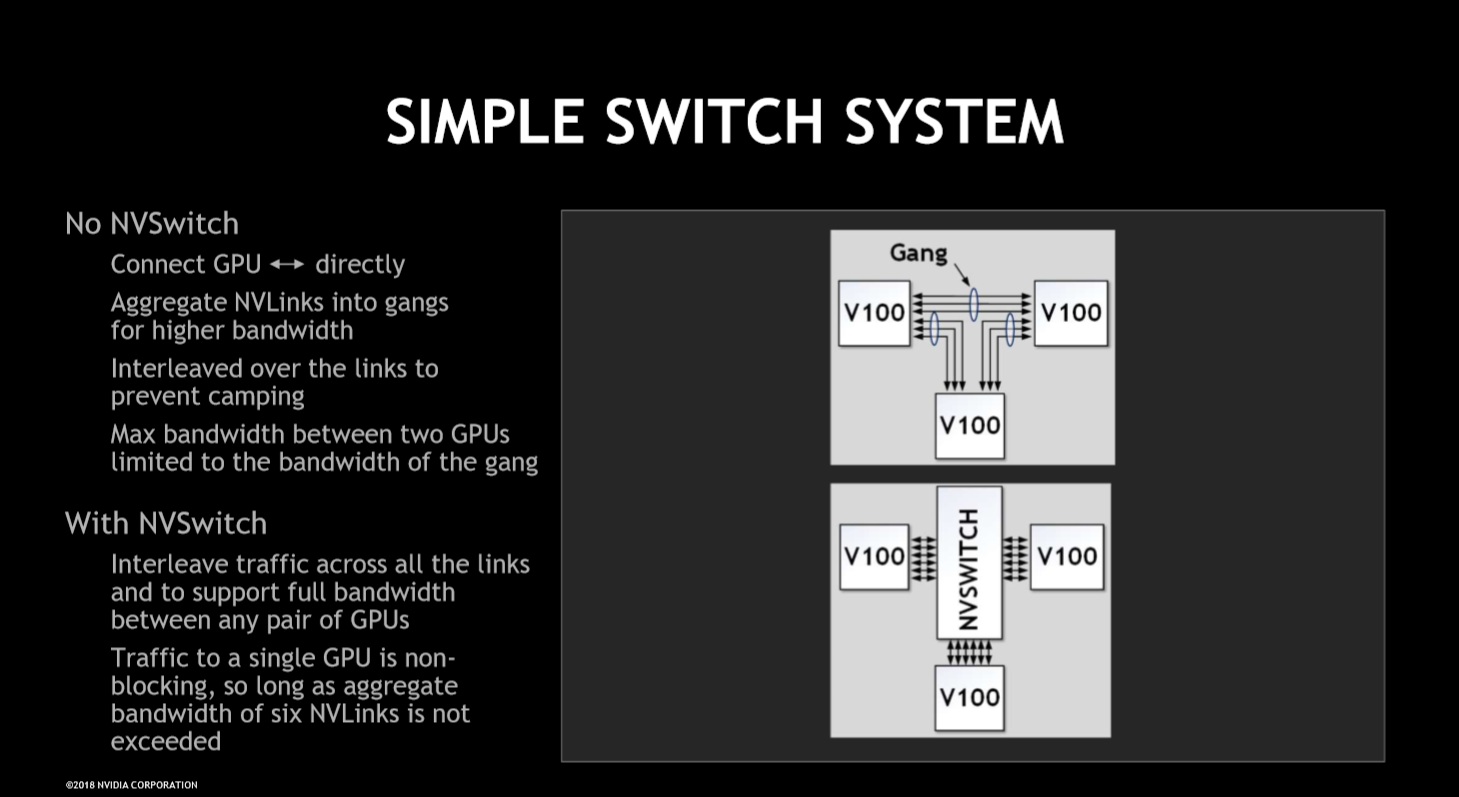

Nvidia's DGX-2 design required a high-performance switch for the NVLink traffic, but off-the-shelf designs couldn't meet the company's bandwidth and latency goals. With no existing solution in sight, the company went back to the drawing board and designed its own switch.


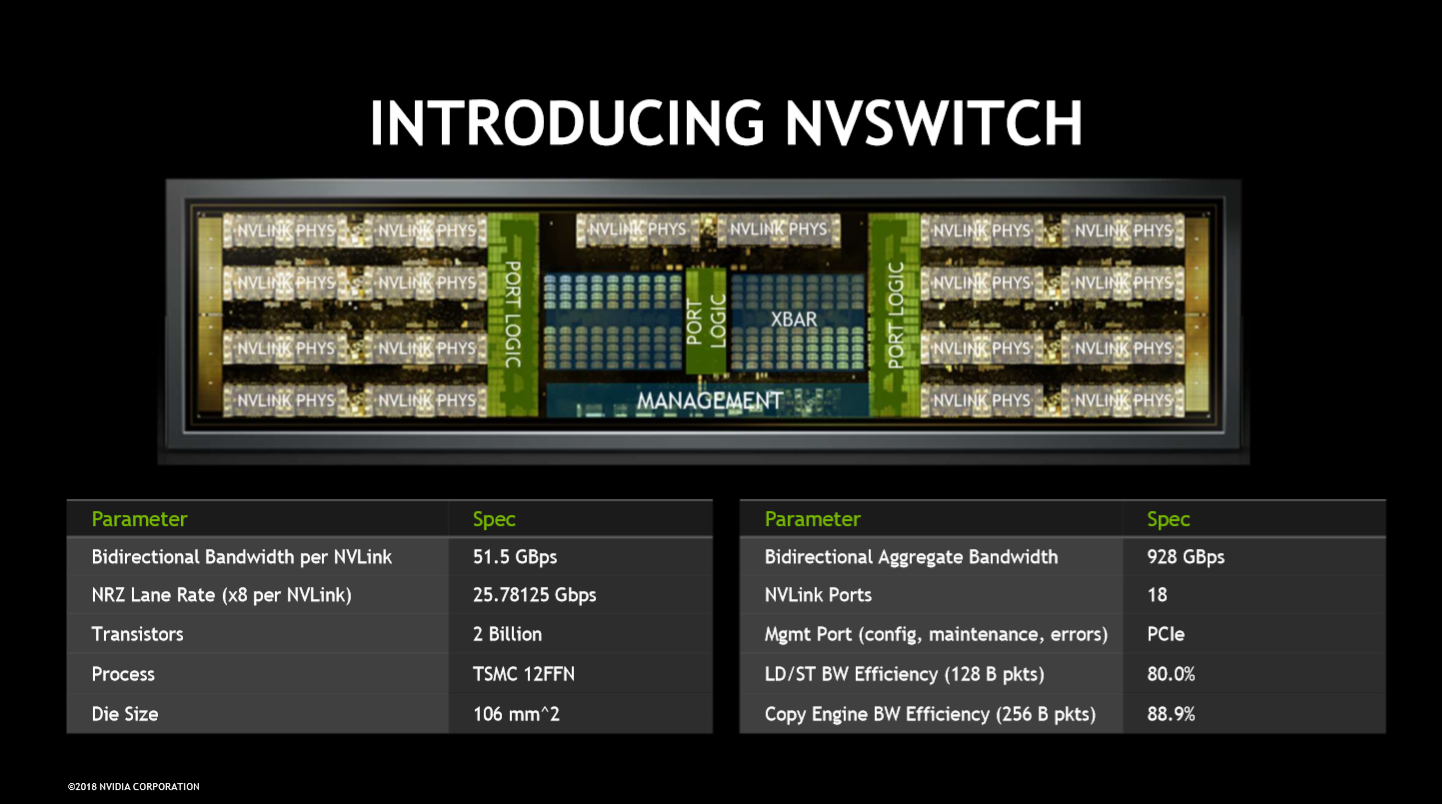

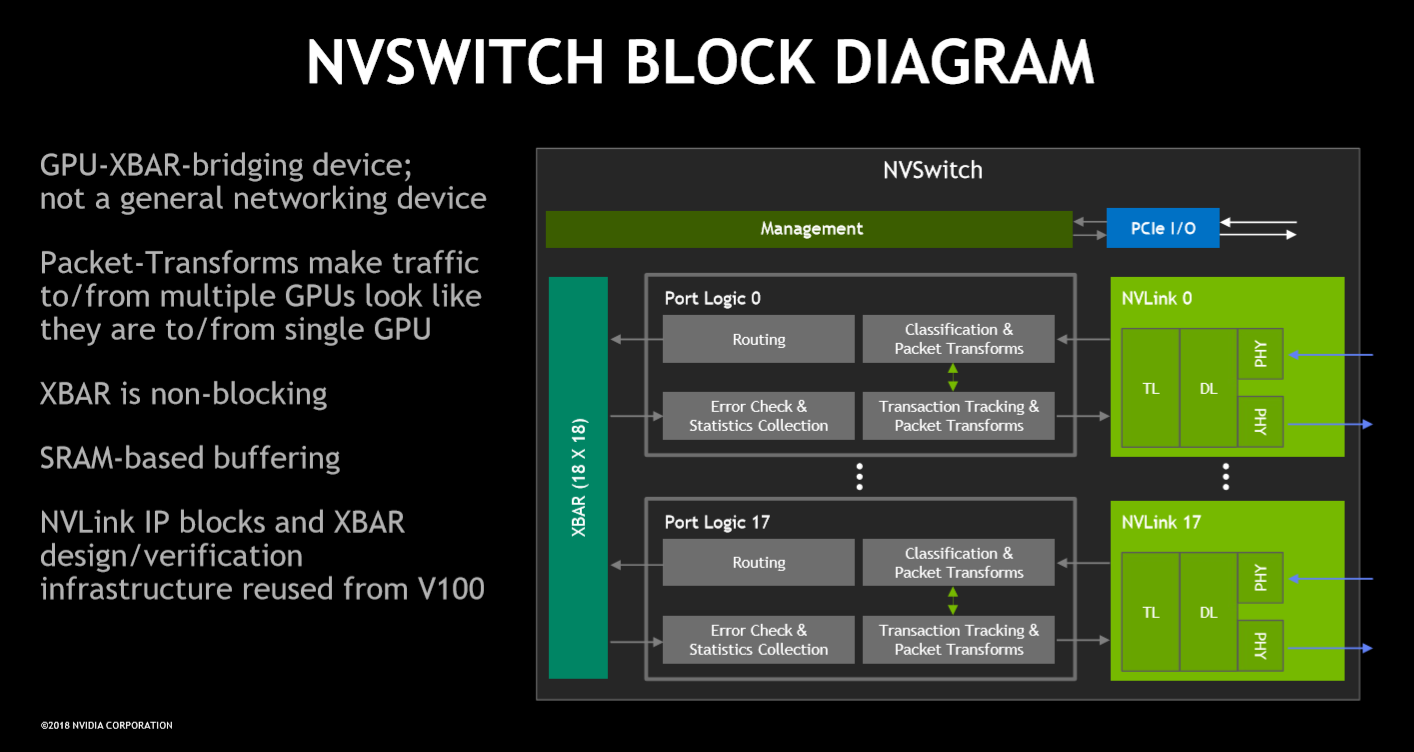

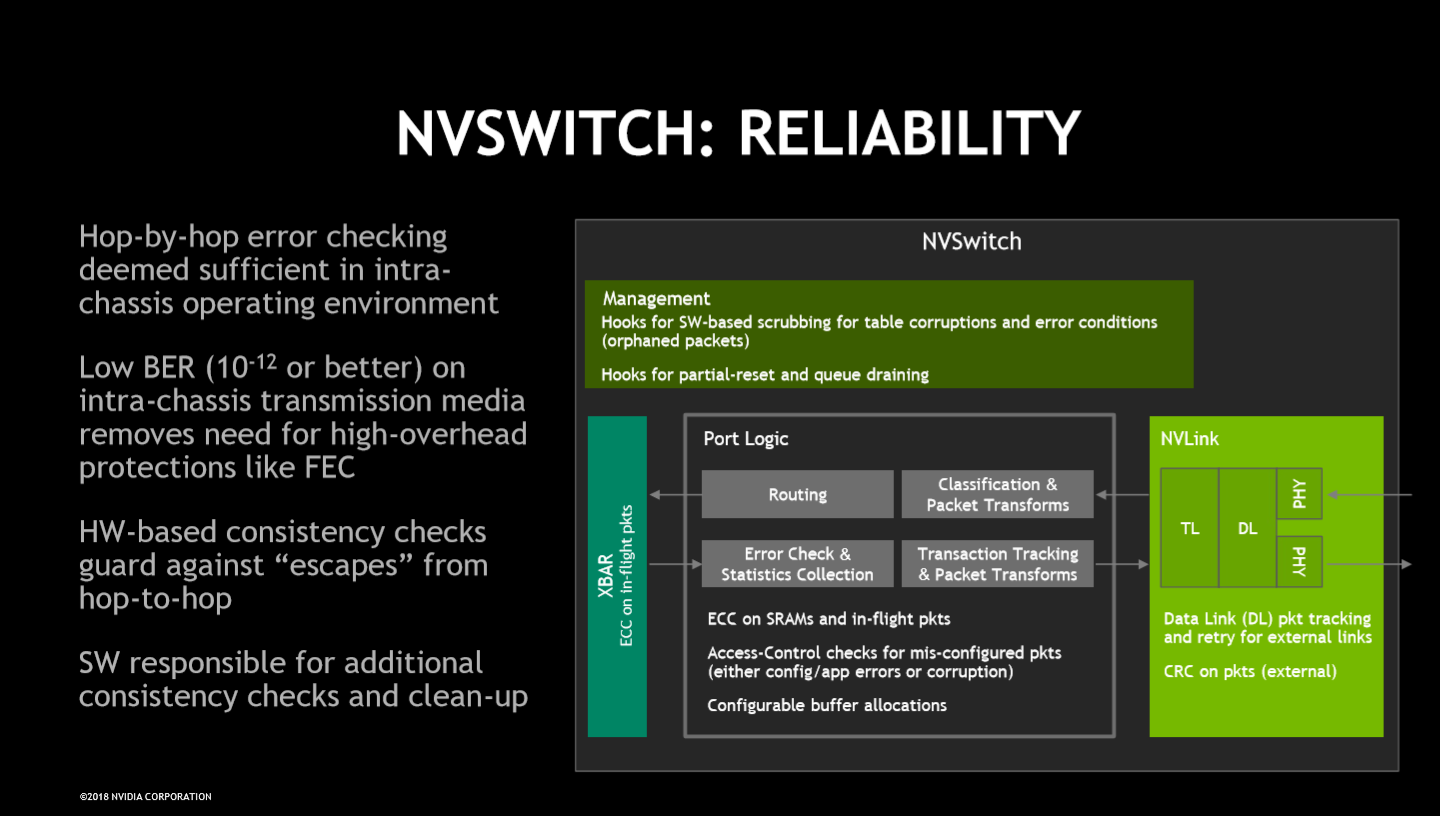

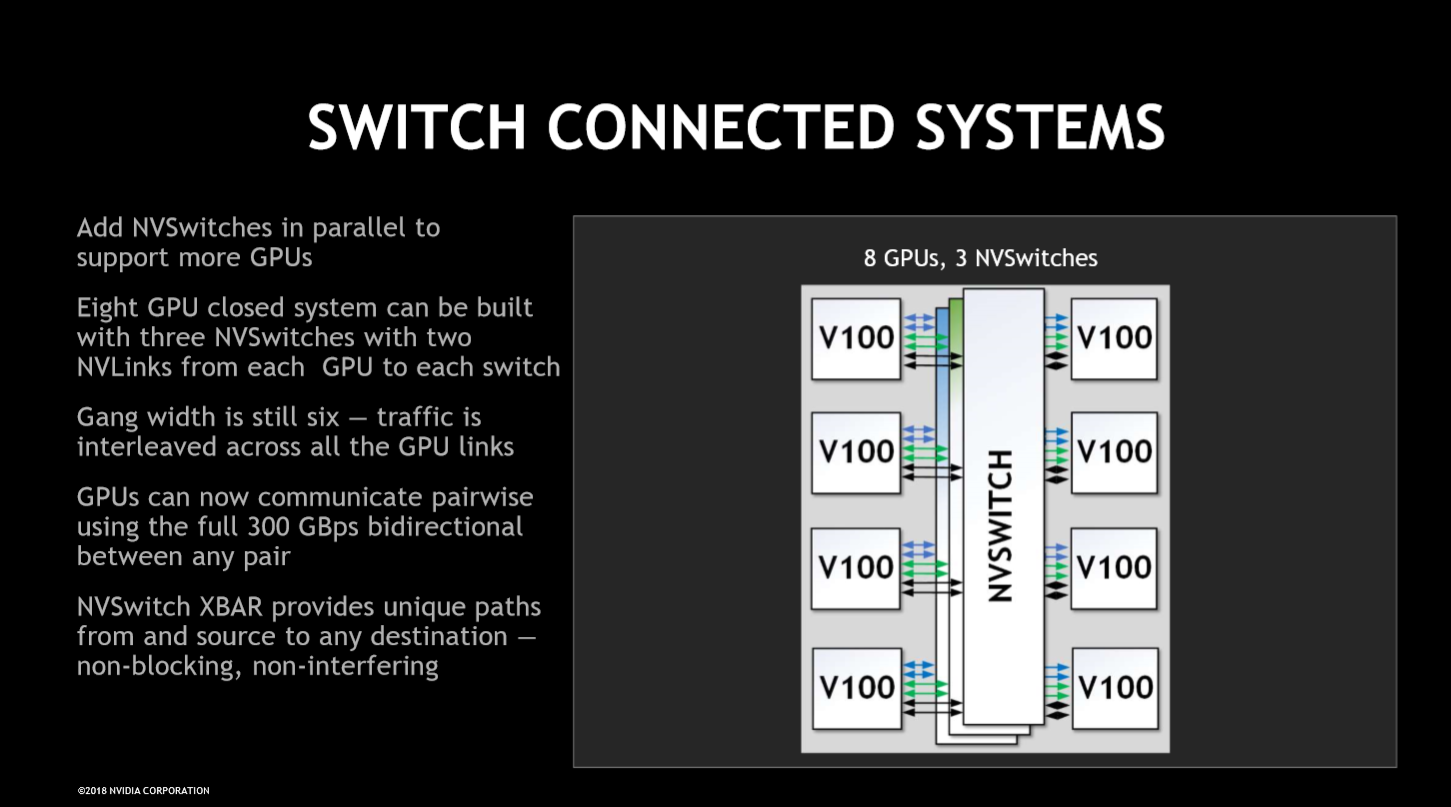

The NVSwitch is fabbed on TSMC's 12FFN process. The die has 2 billion transistors and features 18 NVLinks plus one PCIe link for device management. The NVLinks use Nvidia's proprietary protocol to deliver 25GB/s of bandwidth per port in each direction, for an aggregate of 450GB/s of throughput per direction. All told, the DGX-2's twelve switches push up to 2.4TB/s of bisection bandwidth between the GPUs.
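The per-switch and bisection figures can be reproduced with simple arithmetic. This is a sketch under stated assumptions: the 18 ports and 25GB/s per port come from the article, while the per-direction interpretation, six NVSwitches per baseboard, and eight ports per switch dedicated to its partner switch on the other baseboard are assumptions based on Nvidia's public descriptions of the DGX-2 layout.

```python
# From the article: 18 NVLink ports per NVSwitch, 25GB/s per port.
# Assumptions (not stated in the article): 25GB/s is per direction,
# each baseboard carries six NVSwitches, and each switch uses eight
# ports to reach its partner switch on the other baseboard.
PORTS_PER_SWITCH = 18
GBPS_PER_PORT_PER_DIRECTION = 25
SWITCHES_PER_BOARD = 6
BOARDS = 2
TRUNK_PORTS_PER_SWITCH = 8


def switch_aggregate_gbps() -> int:
    """One-direction aggregate throughput of a single NVSwitch, in GB/s."""
    return PORTS_PER_SWITCH * GBPS_PER_PORT_PER_DIRECTION


def total_switches() -> int:
    """Total NVSwitch chips across both baseboards."""
    return SWITCHES_PER_BOARD * BOARDS


def bisection_gbps() -> int:
    """Bidirectional bandwidth across the cut between the two baseboards, in GB/s."""
    links_across_cut = SWITCHES_PER_BOARD * TRUNK_PORTS_PER_SWITCH
    return links_across_cut * GBPS_PER_PORT_PER_DIRECTION * 2  # x2 for both directions


if __name__ == "__main__":
    print(f"switches: {total_switches()}")
    print(f"per switch: {switch_aggregate_gbps()} GB/s per direction")
    print(f"bisection: {bisection_gbps() / 1000} TB/s bidirectional")
```

Under these assumptions, 48 trunk links cross the cut between the baseboards, which is where the 2.4TB/s figure comes from.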

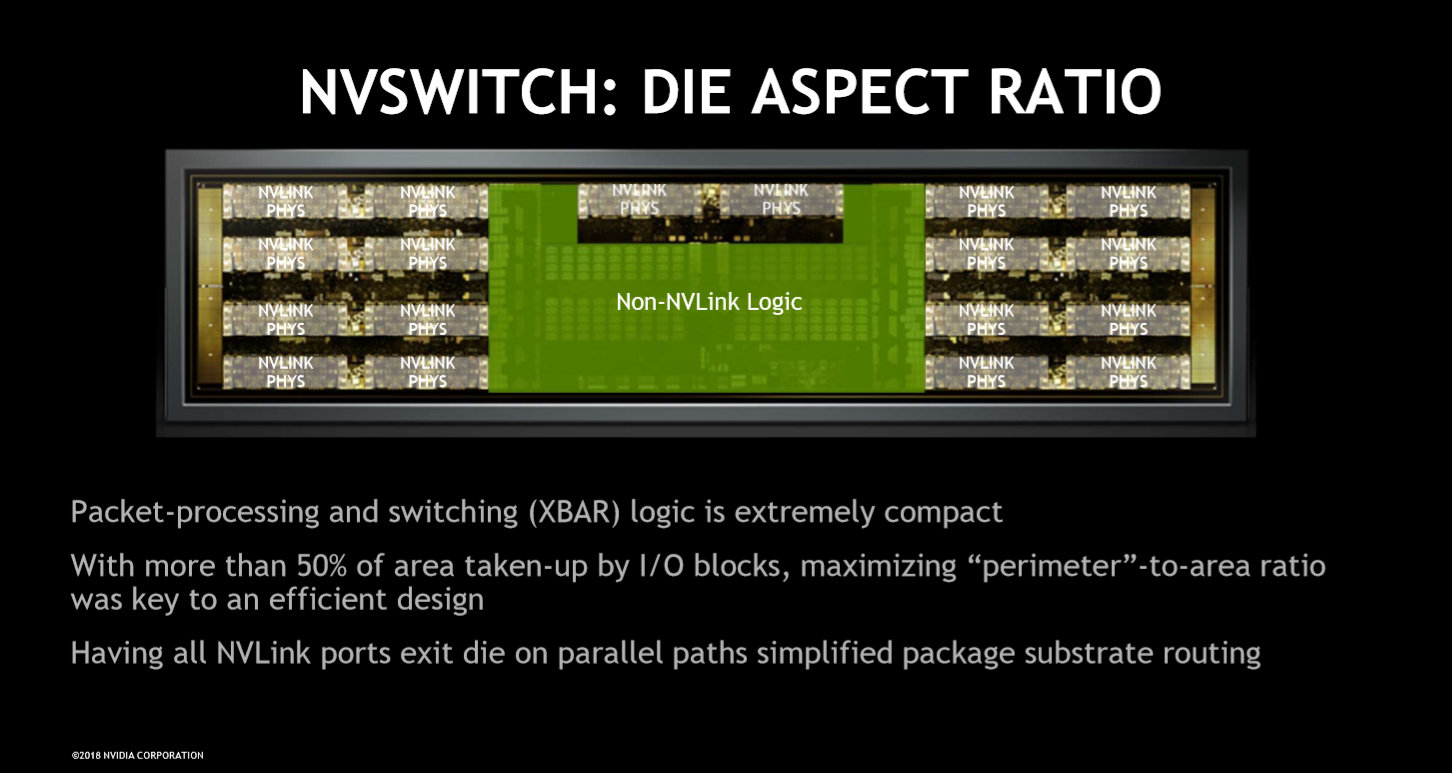

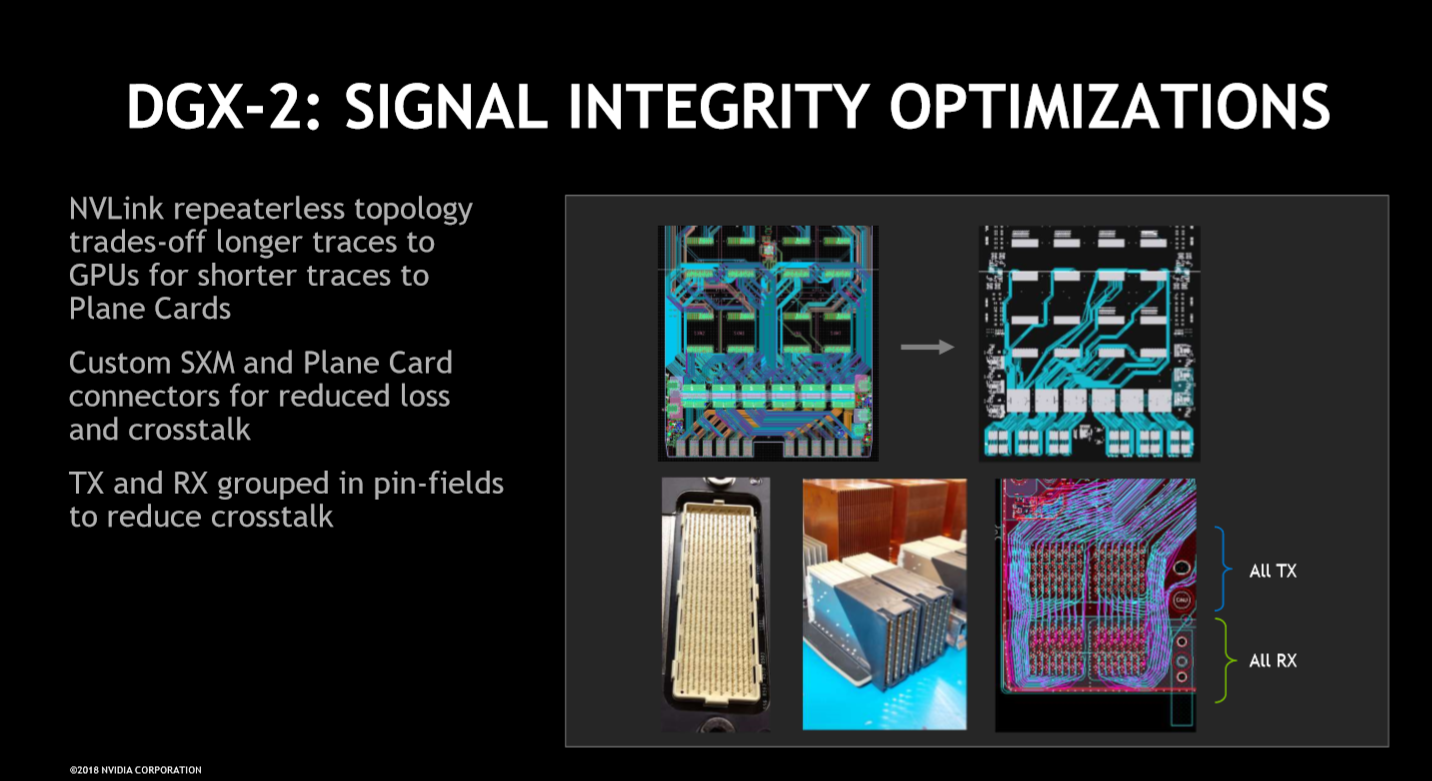

The 106mm² NVSwitch die has an elongated aspect ratio that, surprisingly, doesn't have a serious impact on the number of die Nvidia can harvest per wafer. Fifty percent of the die is dedicated to I/O controllers (like the NVLink PHYs that line the top, right, and left portions of the die) rather than logic. The small port logic blocks perform packet transforms to make the entire system appear as a single GPU. The stacked PHY design enables parallel path routing out of the die, which simplifies substrate routing.
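Why an elongated die costs little in yield can be illustrated by counting how many whole rectangles of a fixed area fit on a wafer. This is a simplified model, not Nvidia's yield data: it assumes a 300mm wafer, ignores edge exclusion and scribe lines, and uses a grid aligned to the wafer center. The 106mm² area is from the article, but the aspect ratios tried below are hypothetical, since NVSwitch's exact dimensions aren't given.

```python
from __future__ import annotations

import math

WAFER_DIAMETER_MM = 300.0  # assumed wafer size
DIE_AREA_MM2 = 106.0       # NVSwitch die area from the article


def dims_for_aspect(area: float, aspect: float) -> tuple[float, float]:
    """Width and height of a rectangle with the given area and width/height ratio."""
    height = math.sqrt(area / aspect)
    return area / height, height


def dies_per_wafer(width: float, height: float,
                   diameter: float = WAFER_DIAMETER_MM) -> int:
    """Count whole dies on a grid that lie entirely inside a circular wafer.

    Simplifications: no edge exclusion, no scribe lines, and the grid is
    aligned to the wafer center rather than optimized for placement.
    """
    r = diameter / 2
    nx = math.ceil(r / width)
    ny = math.ceil(r / height)
    count = 0
    for i in range(-nx, nx):
        for j in range(-ny, ny):
            x0, y0 = i * width, j * height
            x1, y1 = x0 + width, y0 + height
            # An axis-aligned rectangle is inside the disk iff its corner
            # farthest from the center is inside.
            fx = max(abs(x0), abs(x1))
            fy = max(abs(y0), abs(y1))
            if fx * fx + fy * fy <= r * r:
                count += 1
    return count


if __name__ == "__main__":
    for aspect in (1.0, 2.0, 3.0):  # hypothetical aspect ratios
        w, h = dims_for_aspect(DIE_AREA_MM2, aspect)
        print(f"{aspect}:1 ({w:.1f} x {h:.1f} mm): {dies_per_wafer(w, h)} dies")
```

In this model, stretching the die mainly adds a few partial dies along the wafer edge, so the whole-die count changes only slightly.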

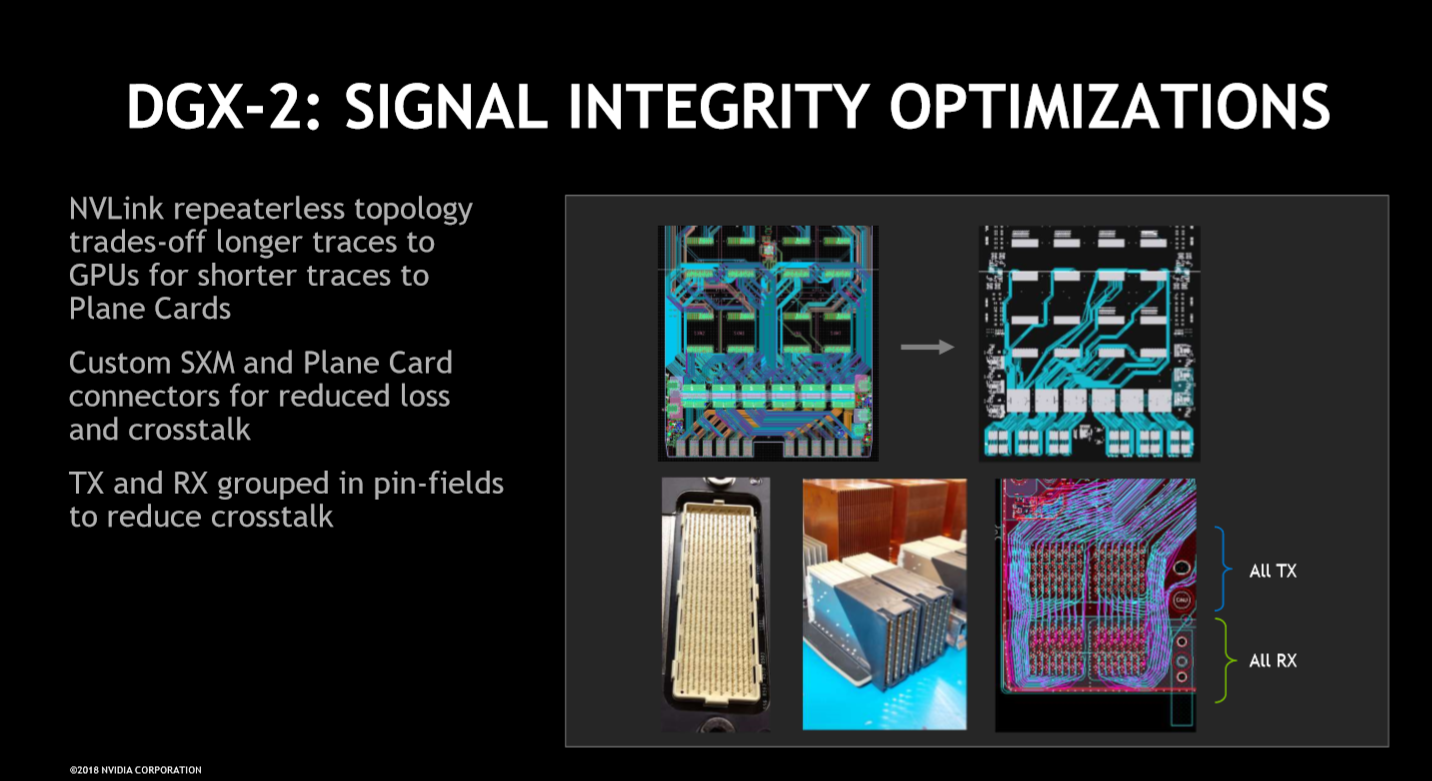

The NVSwitch die is relatively simple in comparison to full-fledged networking switches, largely because the DGX-2 doesn't need forward error correction. Instead, Nvidia uses standard CRC to enable internal consistency checks. The switch has internal SRAM buffers, but the external lanes are left unbuffered. The DGX-2 also doesn't have any repeaters or redrivers for NVLink pathways.
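The difference between CRC and forward error correction is that a CRC only detects corruption; it cannot repair it. The sketch below illustrates that idea with Python's `zlib.crc32`. The framing, the retry loop, and the helper names (`frame`, `check`, `send_with_retry`, `make_flaky_link`) are hypothetical and are not NVLink's actual packet format or recovery mechanism.

```python
import zlib

CRC_BYTES = 4


def frame(payload: bytes) -> bytes:
    """Append a CRC-32 of the payload (hypothetical framing, not NVLink's)."""
    return payload + zlib.crc32(payload).to_bytes(CRC_BYTES, "little")


def check(received: bytes) -> bool:
    """Return True if the trailing CRC matches the payload."""
    payload, tag = received[:-CRC_BYTES], received[-CRC_BYTES:]
    return zlib.crc32(payload).to_bytes(CRC_BYTES, "little") == tag


def send_with_retry(payload: bytes, link, max_tries: int = 3) -> bytes:
    """Resend until a frame passes the CRC check.

    A CRC can flag a bad frame but, unlike FEC, cannot fix it,
    so recovery in this sketch relies on retransmission.
    """
    for _ in range(max_tries):
        received = link(frame(payload))
        if check(received):
            return received[:-CRC_BYTES]
    raise RuntimeError("CRC mismatch on every attempt")


def make_flaky_link():
    """Hypothetical link that flips one bit on its first transfer only."""
    attempts = {"n": 0}

    def link(data: bytes) -> bytes:
        attempts["n"] += 1
        if attempts["n"] == 1:
            corrupted = bytearray(data)
            corrupted[0] ^= 0x01  # single-bit error in transit
            return bytes(corrupted)
        return data

    return link


if __name__ == "__main__":
    print(send_with_retry(b"tensor data", make_flaky_link()))
```

CRC-32 catches every single-bit error, so the corrupted first transfer is rejected and the second, clean transfer is accepted.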

The NVSwitches are laid out in a dual-crossbar arrangement, so accesses from GPUs on the top board to their counterparts on the bottom board do incur slightly higher latency. AI models crave extreme bandwidth, though, and can tolerate slight variances in latency.
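The latency asymmetry follows from how many switch chips a packet crosses. In this simplified model (an assumption, not Nvidia's documented routing), each GPU attaches to every NVSwitch on its own baseboard, and each switch links directly to a partner switch on the other board, so same-board traffic crosses one switch and cross-board traffic crosses two. The `Gpu` type and `switch_hops` function are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    board: int  # 0 = top baseboard, 1 = bottom baseboard
    index: int  # position 0-7 within the baseboard


def switch_hops(src: Gpu, dst: Gpu) -> int:
    """Number of NVSwitch chips a packet traverses between two GPUs.

    Simplified model (assumption): every GPU connects to all switches on
    its own board, and each switch has a direct partner on the other board.
    """
    if src == dst:
        return 0
    return 1 if src.board == dst.board else 2


if __name__ == "__main__":
    gpus = [Gpu(b, i) for b in range(2) for i in range(8)]
    hops = [switch_hops(a, c) for a in gpus for c in gpus if a != c]
    print("1-hop pairs:", hops.count(1), "2-hop pairs:", hops.count(2))
```

Even in the worst case, every GPU pair is at most two switch hops apart, which keeps the extra cross-board latency small.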

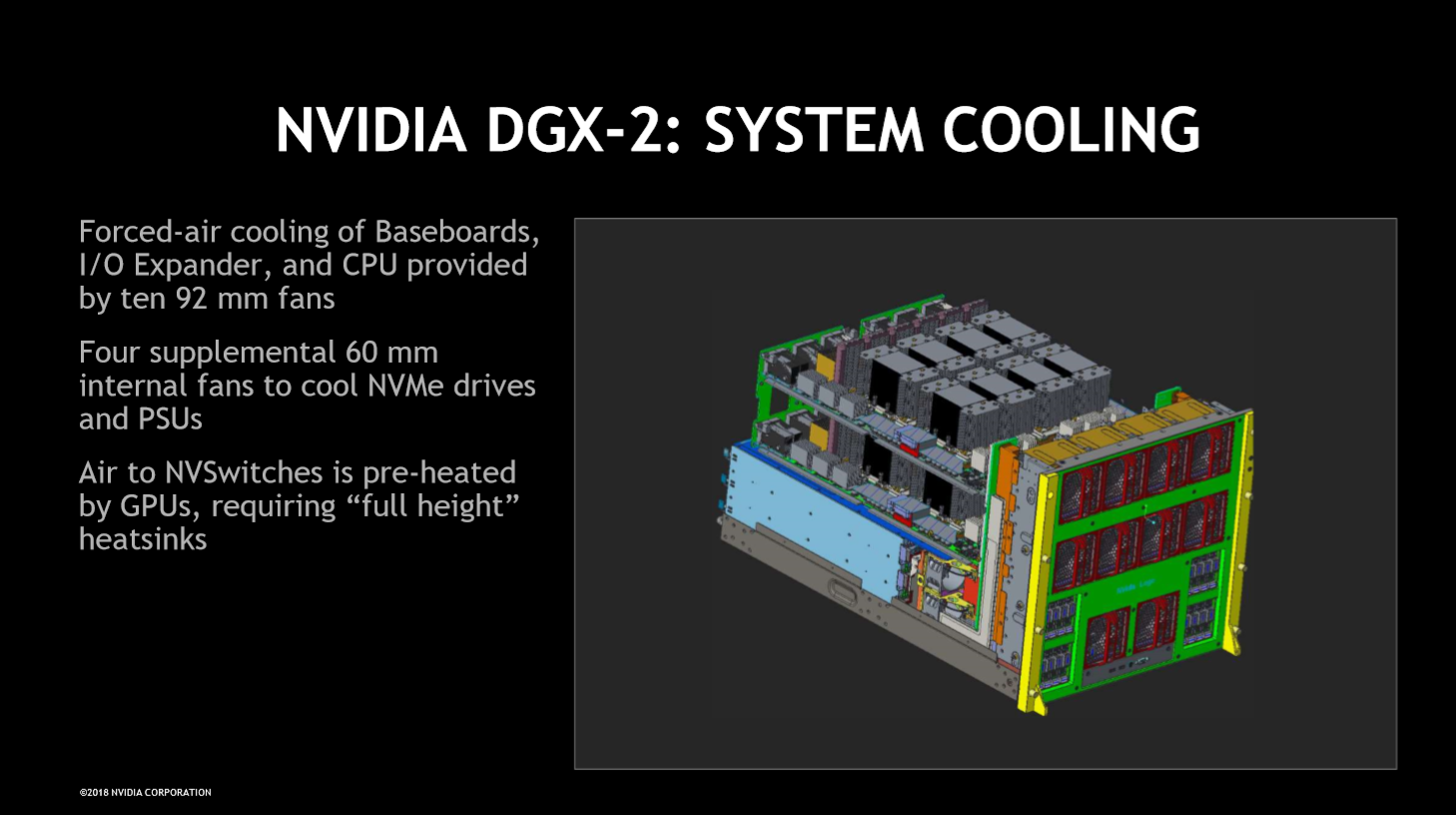

The entire chassis pulls 10kW, but the DGX-2 uses a 48V power distribution subsystem to reduce the amount of current needed to drive the system. Copper bus bars carry current from the power supplies to the two system boards. Cooling the beast requires 1000 linear feet per minute of airflow, and preheated air exiting from the GPU heatsinks reduces cooling efficacy at the rear of the chassis. The NVSwitches are positioned at the rear of the box, necessitating large full-height heatsinks. Nvidia hasn't divulged the NVSwitch's power draw, but claims it consumes less than a standard networking switch.
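The benefit of 48V distribution comes from Ohm's law: for a fixed power, current scales as I = P / V, and resistive loss in the bus bars scales with I². The sketch below compares the article's 48V bus against a 12V bus; the 12V comparison point is an assumption chosen for illustration, not a figure from Nvidia.

```python
def bus_current_amps(power_w: float, voltage_v: float) -> float:
    """Current required to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def relative_conduction_loss(v_low: float, v_high: float) -> float:
    """Ratio of I^2*R loss at v_high versus v_low over the same conductors."""
    return (v_low / v_high) ** 2


if __name__ == "__main__":
    power = 10_000  # 10kW chassis draw from the article
    for volts in (12, 48):  # 12V is an assumed comparison point
        print(f"{volts}V: {bus_current_amps(power, volts):.1f} A")
    print(f"48V loss vs 12V: {relative_conduction_loss(12, 48):.4f}x")
```

Quadrupling the voltage cuts the current to a quarter and the conduction loss to a sixteenth, which is why the copper bus bars can stay manageable at 10kW.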

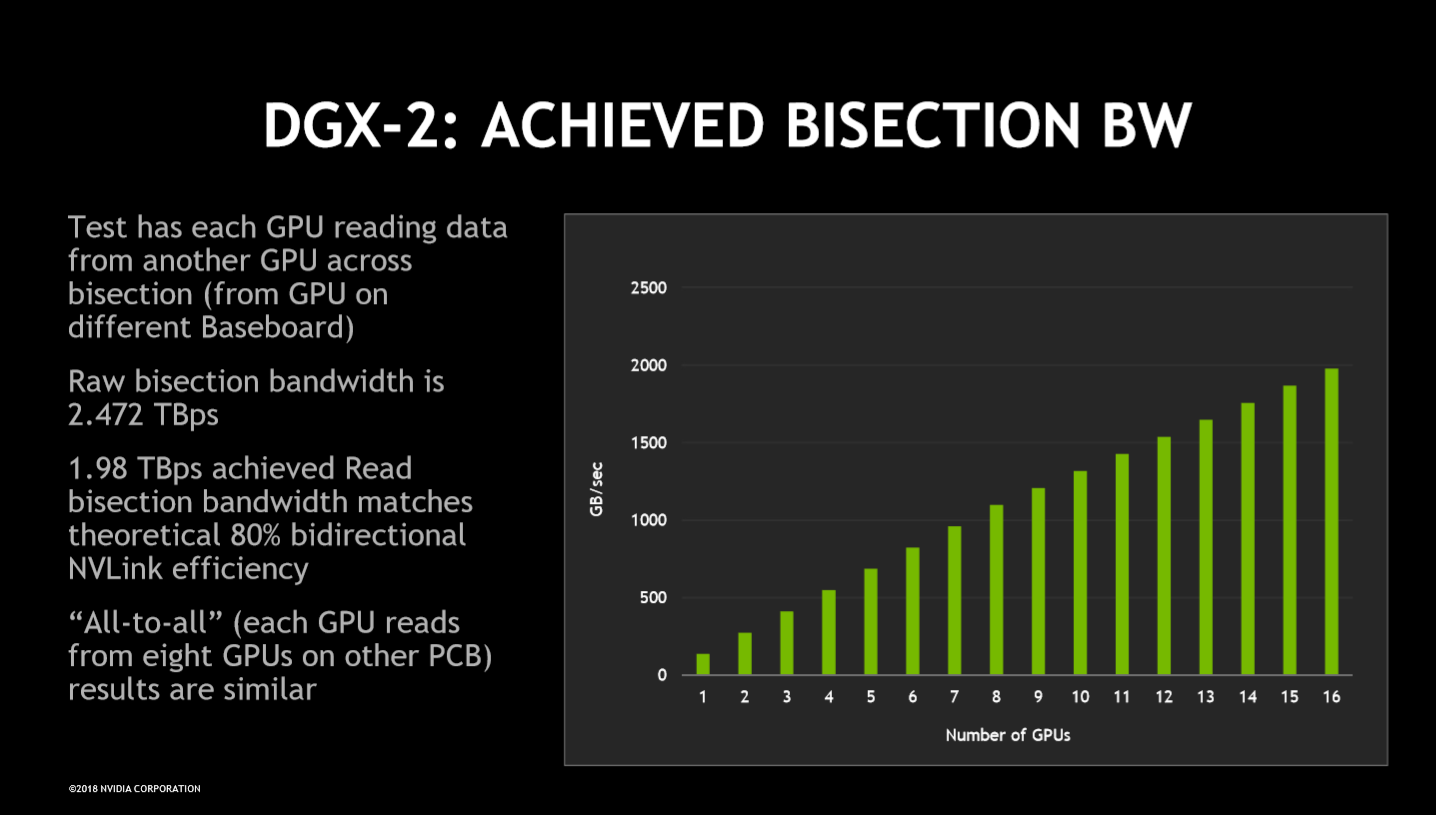

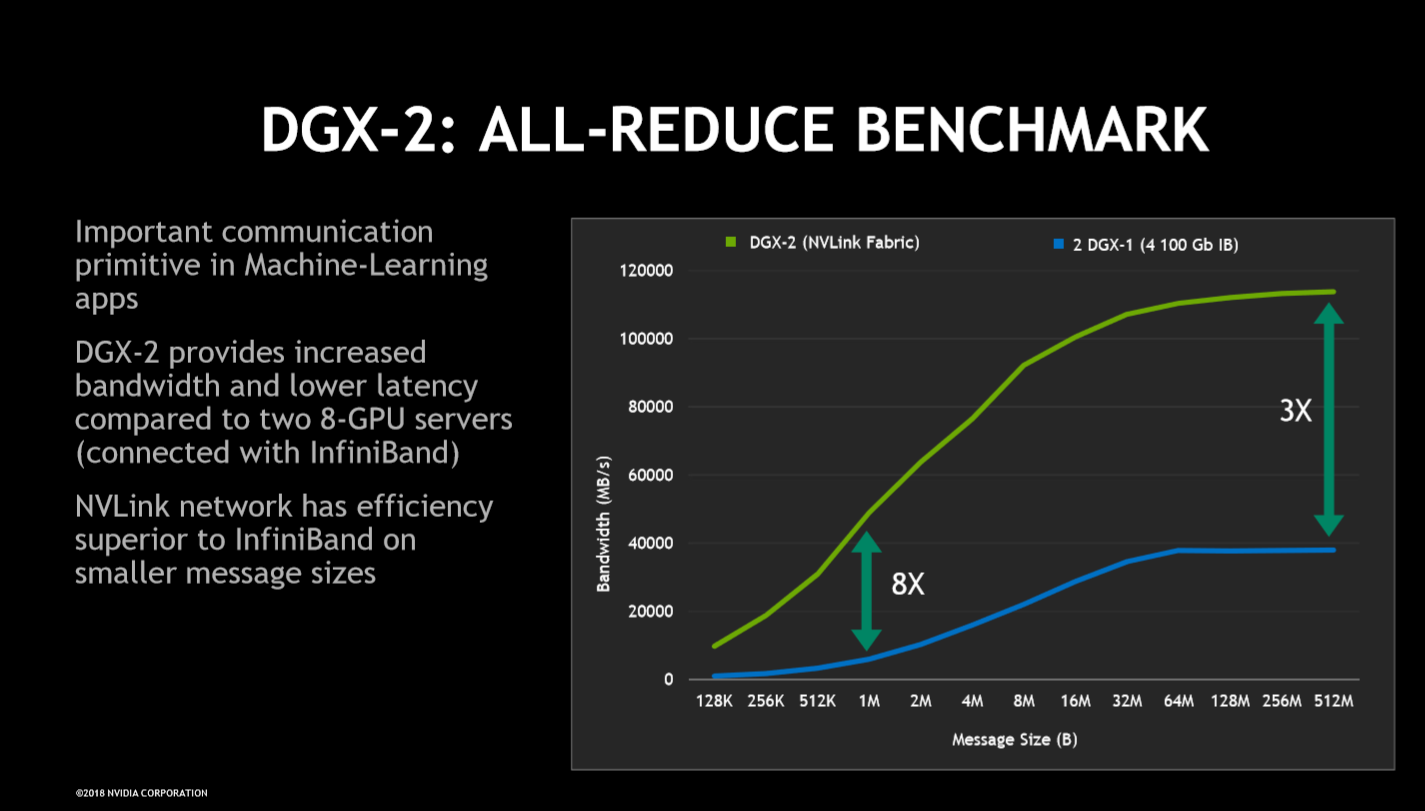

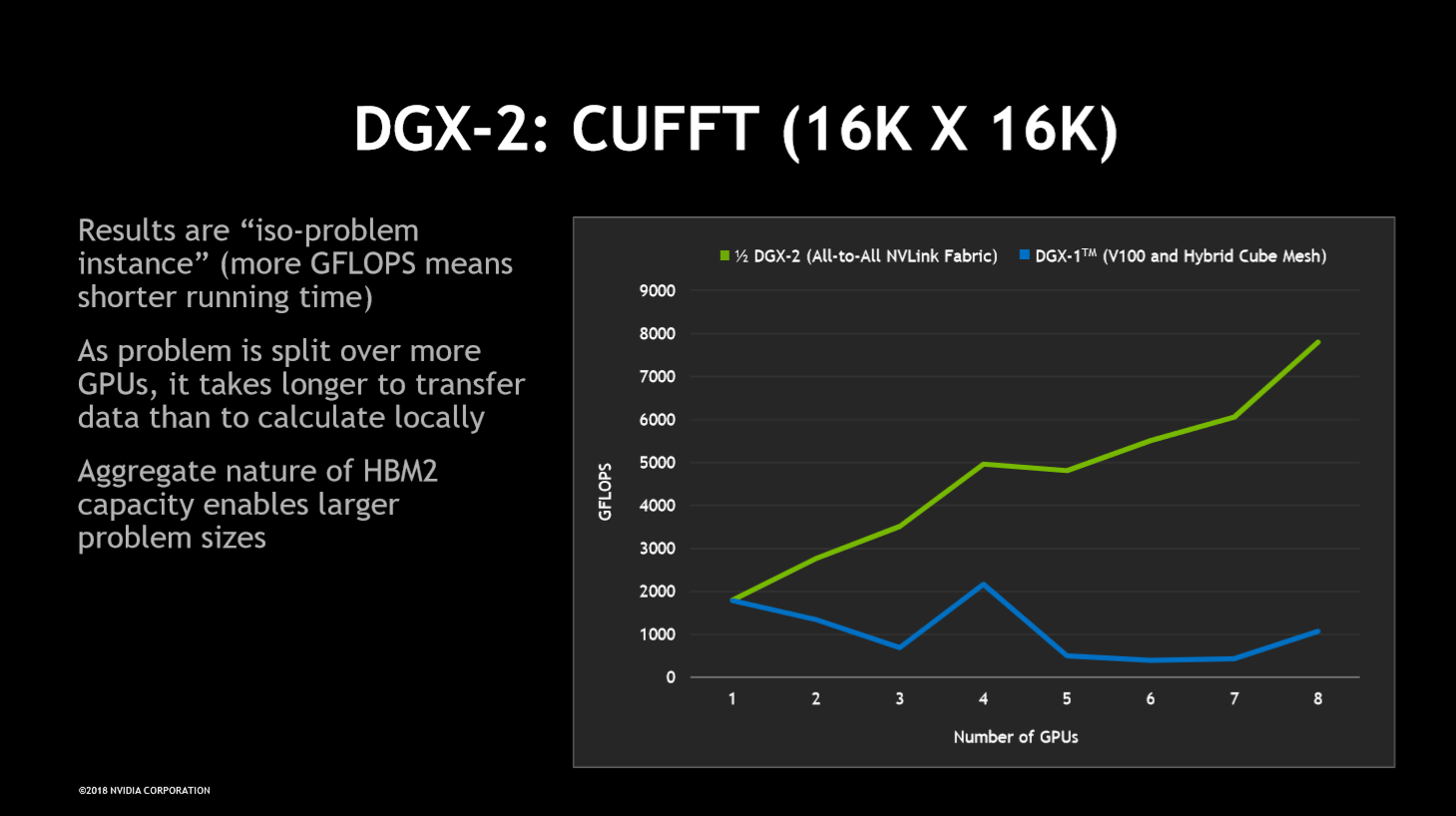

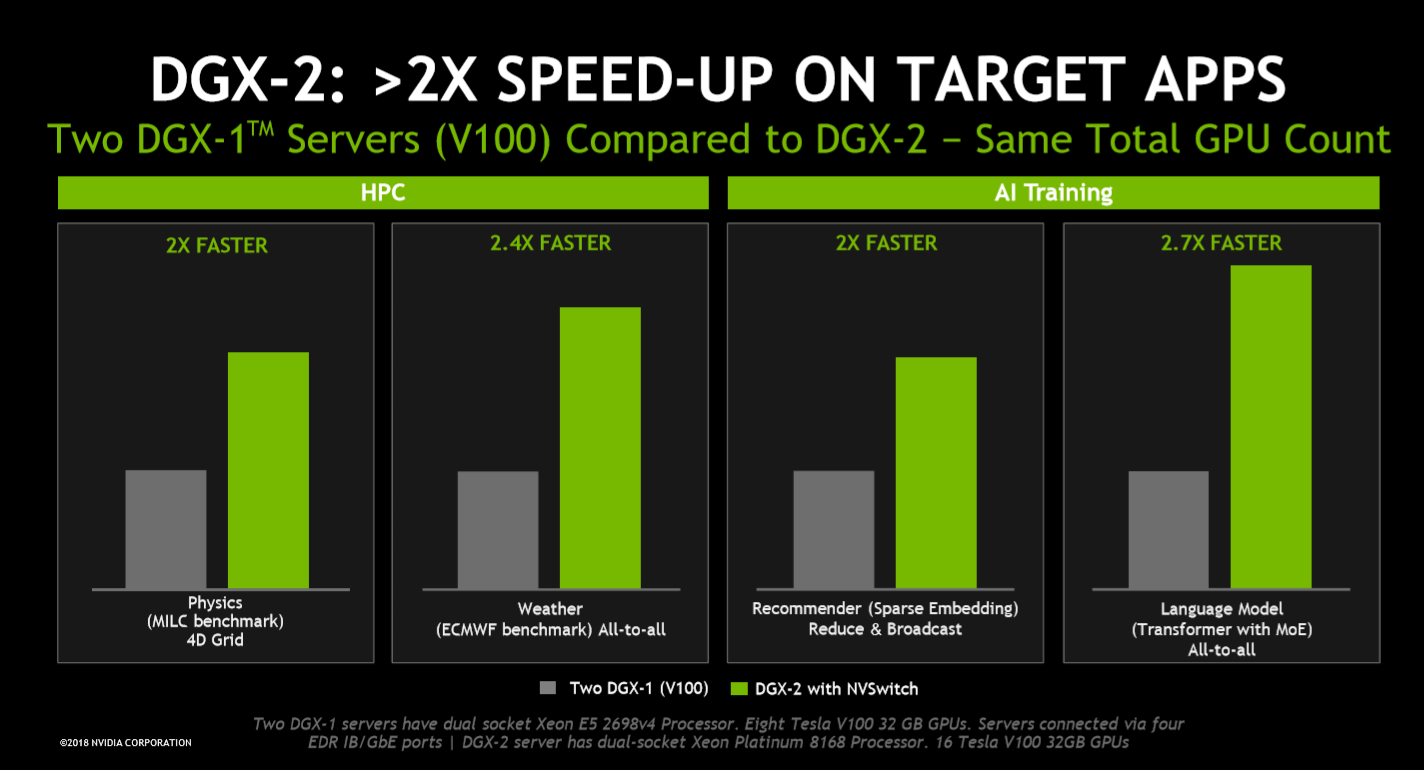

Nvidia shared benchmark results showing near-linear bandwidth scaling between remote GPUs on different system boards, with the obvious intent of demonstrating the NVSwitch's efficiency. Other benchmarks, such as all-reduce and cuFFT, highlight the advantages of the DGX-2's topology over the previous-gen DGX-1's mesh.

Nvidia's Hot Chips presentation answered many of the lingering questions around the NVSwitch, with the notable exception of power draw. The only other unanswered question is when Nvidia will update to a Turing-based DGX-3. Nvidia insists that Volta is its current platform for AI systems and wouldn't commit to a timeline for a next-gen system.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


bit_user

> The only other unanswered question is when Nvidia will update to a Turing-based DGX-3.

I don't expect they will. Turing is really like Volta 2.0. For AI, the only substantial gain is the new integer-based inferencing capability of the Turing-generation tensor cores.

The only other thing they have to gain by releasing a new server GPU at 12 nm would be to make an AI-focused version that cuts back on fp64 throughput. But, I don't know if they could save enough die space to make that worthwhile, nor do I know if there's a big enough HPC market for Volta without Tensor cores.

An argument against anything Turing-based is that most HPC and AI customers probably have no interest in the RT cores. So, I'm not sure we'll see them in whatever succeeds the V100. Or maybe just enough to provide API-level compatibility.

bit_user

> That thing's pretty beefy. I'd like to give it a spin with Crysis on a 4K monitor.

You've seen this?

https://www.anandtech.com/show/12170/nvidia-titan-v-preview-titanomachy/8

The problem is that the DGX-2 GPUs have no video output. I'm also not sure if Crysis could use more than one of them.

So, your best bet is a Titan V, Quadro GV100, or RTX 2080 Ti.

AgentLozen

A graphics card with no video output, huh? Well, I don't want it anymore. Nvidia has lost a loyal customer.