Nvidia's Tesla P4 And P40 GPUs Boost Deep Learning Inference Performance With INT8, TensorRT Support

Nvidia continues to double down on deep learning GPUs with the release of two new “inference” GPUs, the Tesla P4 and the Tesla P40. The pair are the 16nm FinFET direct successors to the Tesla M4 and M40, with much improved performance and support for 8-bit integer (INT8) operations.

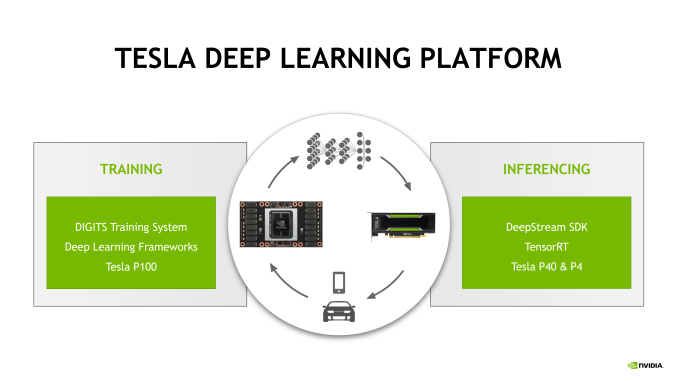

Deep learning consists of two steps: training and inference. For training, it can take billions of TeraFLOPS to achieve an expected result over a matter of days (while using GPUs). Inference, which means running the trained model on new data, can take billions of FLOPS, and it can be done in real time.

The two steps in the deep learning process require different levels of performance, but also different features. This is why Nvidia is now releasing the Tesla P4 and P40, which are optimized specifically for running inference engines, such as Nvidia’s recently launched TensorRT inference engine.

Unlike the Pascal-based Tesla P100, which supports the already quite low 16-bit floating-point (FP16) precision, the two new GPUs add support for even lower 8-bit integer (INT8) precision. This is because researchers have found that deep learning does not need especially high precision, even for training.

Halving the precision lets the hardware move and process twice as many values in the same time, so results arrive significantly faster. Because inference runs an already-trained model, it needs even less precision than training, which is why Nvidia’s new cards support INT8 operations.
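To make the precision trade-off concrete, here is a minimal sketch of converting FP32 values to INT8 and back. It assumes a simple symmetric, per-tensor scheme (one scale factor, codes in [-127, 127]); this is a generic illustration, not the calibration procedure TensorRT uses, and the function names and sample weights are hypothetical.

```python
# Minimal sketch of symmetric, per-tensor INT8 quantization (a generic
# scheme assumed for illustration; not TensorRT's calibration procedure).

def quantize_int8(values):
    """Map floats to int8 codes in [-127, 127] with one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale

def dequantize_int8(codes, scale):
    """Recover approximate floats from int8 codes."""
    return [c * scale for c in codes]

weights = [0.42, -1.27, 0.003, 0.88, -0.5]   # hypothetical FP32 weights
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)

# Each restored value is within half a quantization step (scale / 2)
# of the original, while each code takes 1 byte instead of FP32's 4.
for w, c, r in zip(weights, codes, restored):
    print(f"{w:+.4f} -> {c:4d} -> {r:+.4f}")
```

The error per value is bounded by half a step, so as long as the model tolerates that rounding, each weight or activation costs a quarter of the memory and bandwidth of FP32.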

Tesla P4

The Tesla P4 is the lower-end GPU of the two that were announced, and it’s targeted at scale-out servers that want highly efficient GPUs. Each Tesla P4 GPU uses between 50W and 75W of power, for a peak performance of 5.5 FP32 TeraFLOP/s and 21.8 INT8 TOP/s (tera-operations per second).
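The P4's INT8 figure is almost exactly four times its FP32 figure (21.8 / 5.5 ≈ 3.96). That ratio is consistent with the 4-way INT8 dot-product operation that CUDA exposes as the `__dp4a` intrinsic on compute capability 6.1 Pascal GPUs: where an FP32 fused multiply-add does one multiply-add per lane, `__dp4a` does four 8-bit multiply-adds and accumulates them into a 32-bit integer. The Python below only emulates that arithmetic to show what one such operation computes; the helper names are hypothetical and it says nothing about real GPU timing.

```python
# Plain-Python emulation of a 4-way INT8 dot product with 32-bit
# accumulation (the arithmetic behind CUDA's __dp4a). Helper names are
# hypothetical; this models the math only, not GPU performance.

def pack_int8x4(vals):
    """Pack four signed 8-bit ints into one 32-bit word (lane 0 = low byte)."""
    assert len(vals) == 4 and all(-128 <= v <= 127 for v in vals)
    word = 0
    for i, v in enumerate(vals):
        word |= (v & 0xFF) << (8 * i)
    return word

def unpack_int8x4(word):
    """Split a 32-bit word back into four signed 8-bit lanes."""
    lanes = []
    for i in range(4):
        b = (word >> (8 * i)) & 0xFF
        lanes.append(b - 256 if b >= 128 else b)
    return lanes

def dp4a(a_word, b_word, acc):
    """Four int8 multiply-adds, summed into a 32-bit integer accumulator."""
    a, b = unpack_int8x4(a_word), unpack_int8x4(b_word)
    return acc + sum(x * y for x, y in zip(a, b))

a = pack_int8x4([1, -2, 3, 127])
b = pack_int8x4([4, 5, -6, 2])
print(dp4a(a, b, 10))  # 1*4 - 2*5 - 3*6 + 127*2 + 10 = 240
```

Four useful multiply-adds per 32-bit operand pair, instead of one, is where a roughly 4x INT8 peak comes from.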

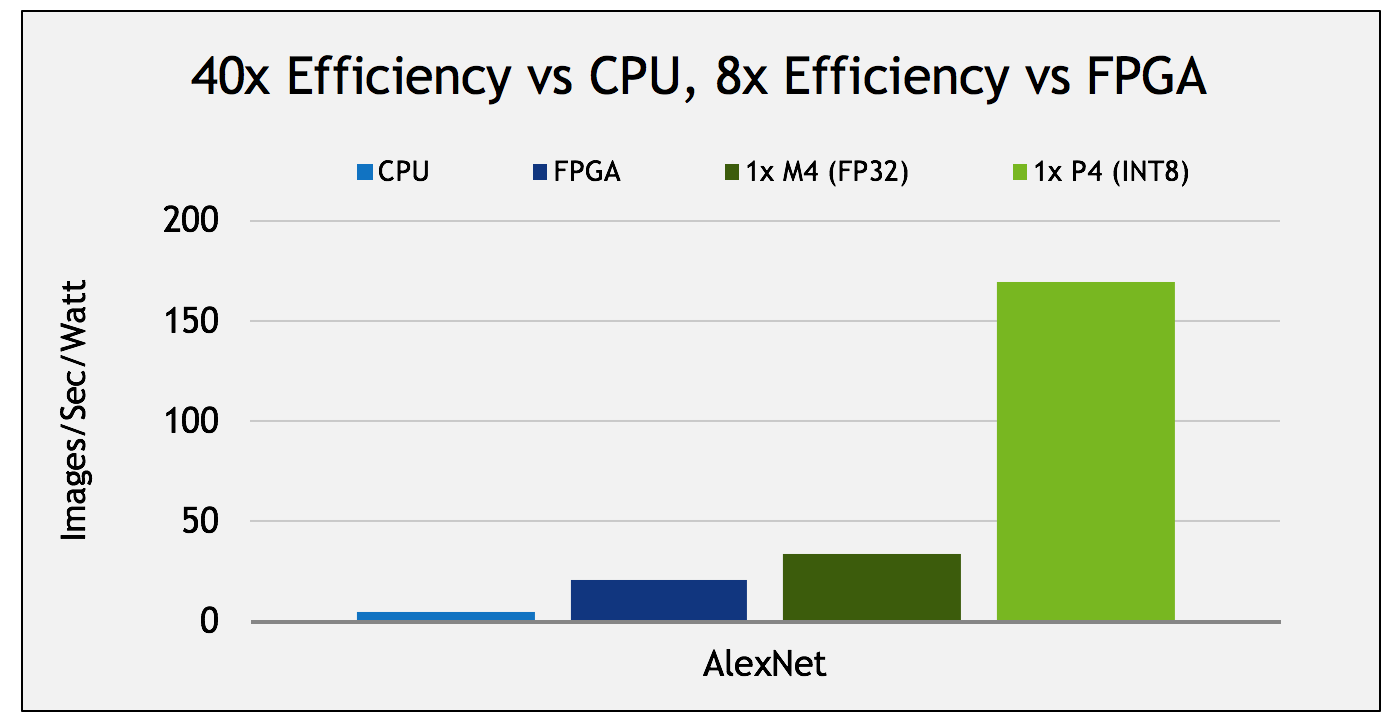

Nvidia compared its Tesla P4 GPU to an Intel Xeon E5 general-purpose CPU and claimed that the P4 is up to 40x more efficient on the AlexNet image classification test. The company also claimed that the Tesla P4 is 8x more efficient than an Arria 10-115 FPGA (made by Altera, which Intel acquired).


Tesla P40

The Tesla P40 was designed for scale-up servers, where performance matters most. Thanks to improvements in the Pascal architecture as well as the jump from the 28nm planar process to a 16nm FinFET process, Nvidia claimed that the P40 is up to 4x faster than its predecessor, the Tesla M40.

The P40 GPU has a peak performance of 12 FP32 TeraFLOP/s and 47 INT8 TOP/s, so it’s about twice as fast as its little brother, the Tesla P4. The Tesla P40 has a maximum power consumption of 250W.
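Dividing the quoted peak figures shows both the "about twice as fast" claim and why the P4 is pitched on efficiency. This sketch uses only the article's numbers, taking the top of the P4's 50W to 75W range and the P40's 250W maximum; since these are vendor peaks, the results are ratios of ceilings, not measured throughput.

```python
# Ratios from the peak figures quoted in the article (vendor maximums).
# For the P4 we use the top of its 50-75W range; the P40 maximum is 250W.

specs = {
    # name: (peak FP32 TFLOP/s, peak INT8 TOP/s, max board power in watts)
    "Tesla P4": (5.5, 21.8, 75),
    "Tesla P40": (12.0, 47.0, 250),
}

for name, (fp32, int8, watts) in specs.items():
    print(f"{name}: INT8/FP32 = {int8 / fp32:.2f}, "
          f"{int8 / watts:.3f} INT8 TOP/s per watt")

p4_fp32, p4_int8, _ = specs["Tesla P4"]
p40_fp32, p40_int8, _ = specs["Tesla P40"]
print(f"P40 vs P4: {p40_fp32 / p4_fp32:.2f}x FP32, {p40_int8 / p4_int8:.2f}x INT8")
```

Even at its 75W ceiling the P4 comes out near 0.29 INT8 TOP/s per watt versus roughly 0.19 for the P40, while the P40's raw throughput is about 2.2x higher.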

TensorRT

Nvidia also announced the TensorRT GPU inference engine that doubles the performance compared to previous cuDNN-based software tools for Nvidia GPUs. The new engine also has support for INT8 operations, so Nvidia’s new Tesla P4 and P40 will be able to work at maximum efficiency from day one.

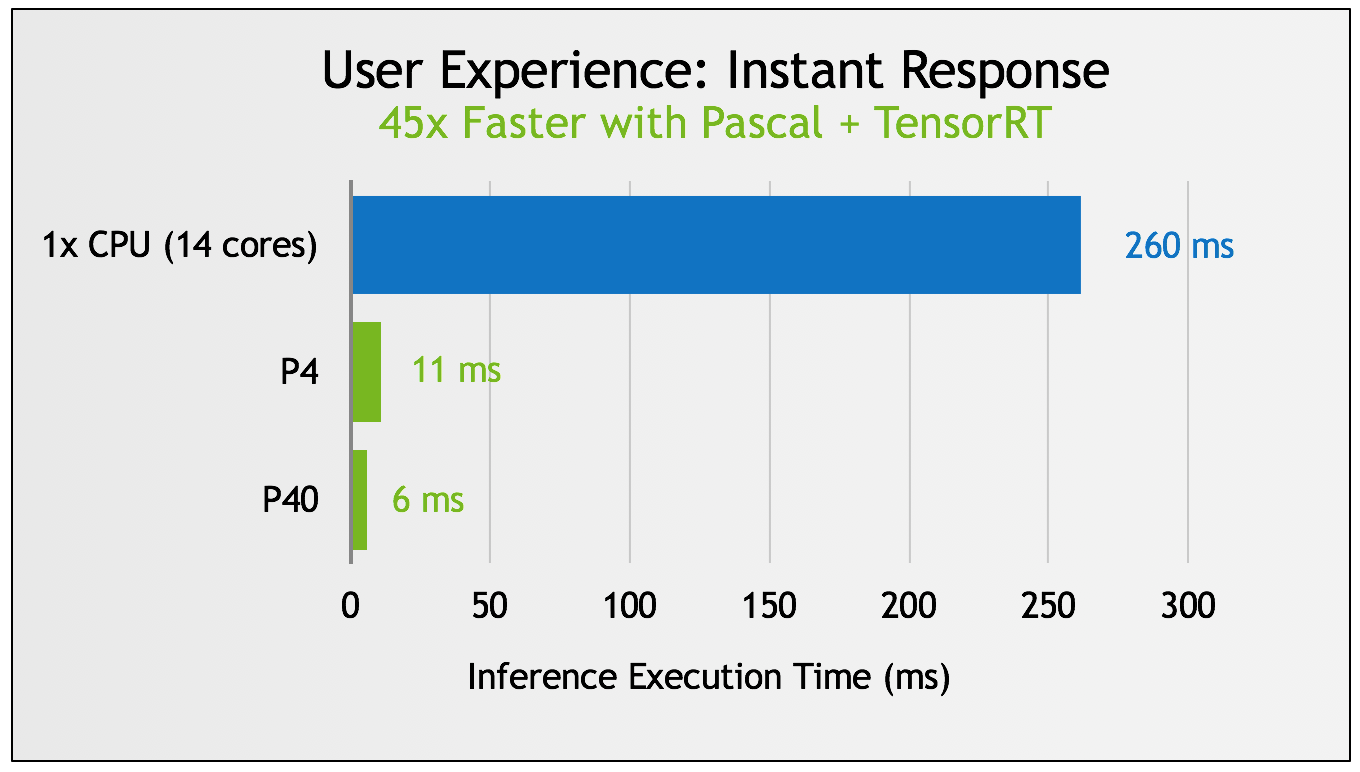

In the graph above, Nvidia compared the performance of the Tesla P4 and P40 GPUs running the TensorRT inference engine against a 14-core Intel Xeon E5-2690 v4 running Intel’s optimized version of the Caffe neural network framework. According to Nvidia’s results, the Tesla P40 is up to 45x faster than Intel’s CPU here.

So far Nvidia has been comparing its GPUs to Intel’s general purpose CPUs alone, but Intel’s main product for deep learning is now the Xeon Phi line of chips with its “many-core” (Atom-based) accelerators.

Nvidia’s GPUs likely still beat those chips by a healthy margin, due to the inherent advantage GPUs have over even many-core CPUs for such low-precision operations. However, a comparison with Xeon Phi would better reflect the choice customers actually face when buying hardware for deep learning.

DeepStream SDK

Nvidia also announced the DeepStream SDK, which can use a Pascal-based server to decode and analyze up to 93 HD video streams in real time. According to Nvidia, this will allow companies to understand video at scale for applications such as self-driving cars, interactive robots, content filtering, and ad placement.

Partnership With Coursera, Udacity, and Microsoft

Nvidia’s Deep Learning Institute, which offers online courses and workshops around the world for using deep learning to solve real problems, partnered with Coursera and Udacity to make its courses available to more people.

The courses include teaching people how to become self-driving car engineers and how to use deep learning to predict the risk of disease. Thanks to a partnership with Microsoft, there will also be a workshop on teaching robots how to think through the use of deep learning.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


bit_user

> For training, it can take billions of TeraFLOPS to achieve an expected result over a matter of days (while using GPUs)

Hmmm... Using round numbers, GPUs top out at about 20 TFLOPS (fp16). So, 2 billion TFLOPS would take a single top-end GPU approximately 100 million seconds, or about 3.2 years. Furthermore, neural nets don't scale very well to multi-GPU configurations, meaning no more than about 10 GPUs (or a DGX-1) would be used, for training a single net. So, I think we can safely say this overshot the mark, a bit.

So, I take it these are basically the GP106 (P4) and GP102 (P40), or tweaked versions thereof? Does the P100 have INT8-support?


hst101rox

How well would a 1060 or 1080 do at this sort of workload? I take it these don't support the lower INT8 precision, but do support the other precision levels the deep learning cards are capable of? Would they be far less efficient than the tailored GPUs?

Then we have the CAD GPUs, Quadro. Which of the 3 types of Nvidia GPUs works best across all 3 applications?

bit_user

> How well would a 1060 or 1080 do at this sort of workload? I take it these don't support the lower INT8 precision, but do support the other precision levels the deep learning cards are capable of?

I'm also curious how they compare. I didn't find a good answer, but I did notice that one of the most popular pages on the subject has been updated to include recent hardware through the GTX 1060:

http://timdettmers.com/2014/08/14/which-gpu-for-deep-learning/

I've taken the liberty of rewriting his performance equation in a way that's mathematically correct:

GTX_TitanX_Pascal = GTX_1080 / 0.7 = GTX_1070 / 0.55 = GTX_TitanX / 0.5 = GTX_980Ti / 0.5 = GTX_1060 / 0.4 = GTX_980 / 0.35

GTX_1080 = GTX_970 / 0.3 = GTX_Titan / 0.25 = AWS_GPU_g2 (g2.2 and g2.8) / 0.175 = GTX_960 / 0.175

So, in other words, a Pascal GTX Titan X is twice as fast as the original Titan X or GTX 980 Ti in his test. That said, I don't know whether his test can exploit fp16 or int8.
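The "twice as fast" conclusion follows from reading the first chain of ratios as each card's speed relative to the Pascal Titan X (for example, GTX_1080 = 0.7 × GTX_TitanX_Pascal). The sketch below encodes only that first chain; the dictionary and `speedup` helper are hypothetical names, and the numbers are the ones quoted in the comment, not independent measurements.

```python
# Speeds relative to the Pascal Titan X (= 1.0), taken from the first chain
# of ratios in the comment (e.g. GTX_1080 = 0.7 * GTX_TitanX_Pascal).
relative_speed = {
    "GTX_TitanX_Pascal": 1.0,
    "GTX_1080": 0.7,
    "GTX_1070": 0.55,
    "GTX_TitanX": 0.5,
    "GTX_980Ti": 0.5,
    "GTX_1060": 0.4,
    "GTX_980": 0.35,
}

def speedup(fast, slow):
    """How many times faster `fast` is than `slow`, per these ratios."""
    return relative_speed[fast] / relative_speed[slow]

print(speedup("GTX_TitanX_Pascal", "GTX_TitanX"))  # 2.0
print(speedup("GTX_TitanX_Pascal", "GTX_980Ti"))   # 2.0
print(f'{speedup("GTX_1080", "GTX_1060"):.2f}')
```

Any pair from the chain can be compared the same way, since every entry shares the same reference card.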