Real-Time Cinematic VR Rendering With Epic Games And The Mill

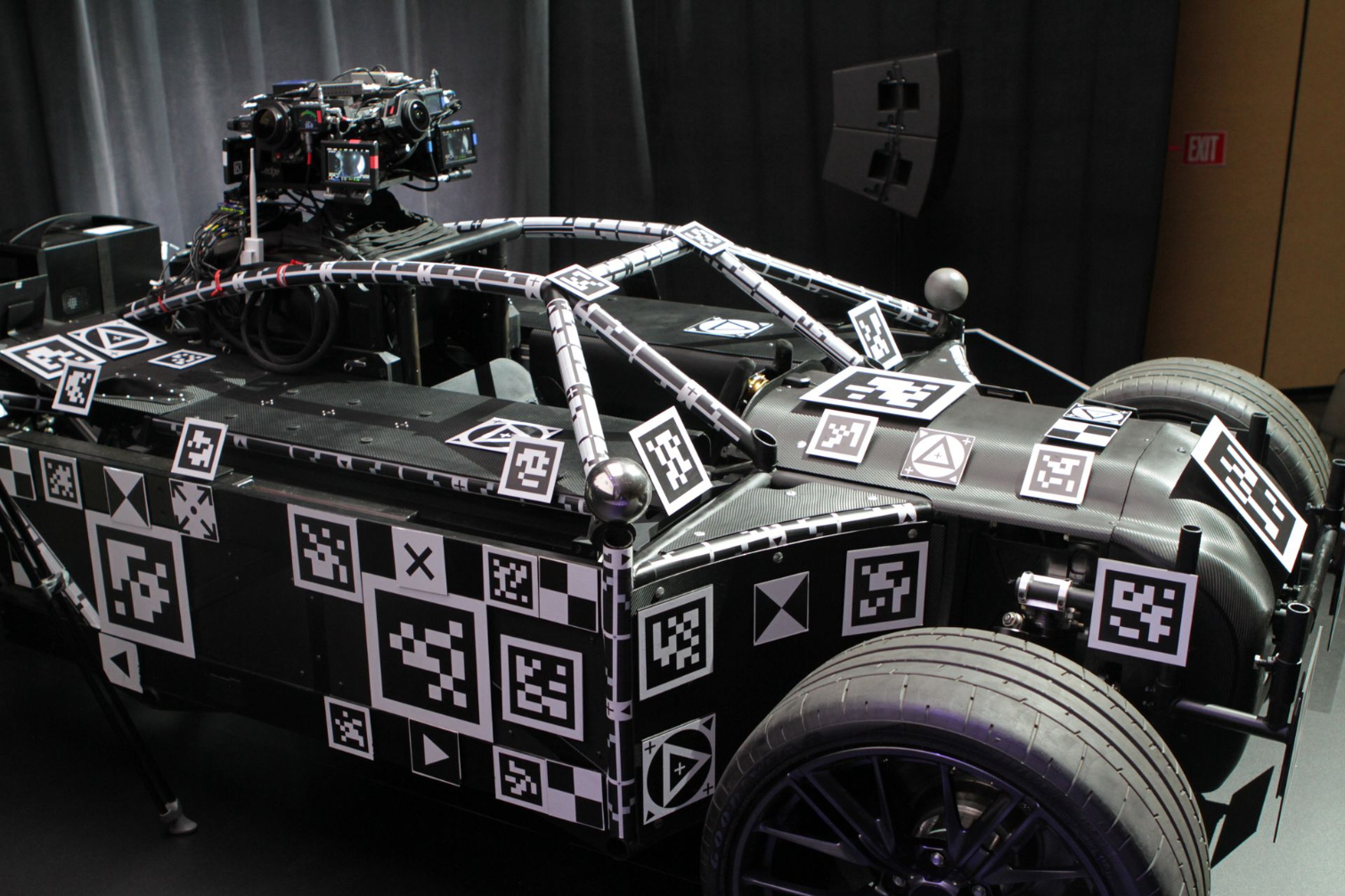

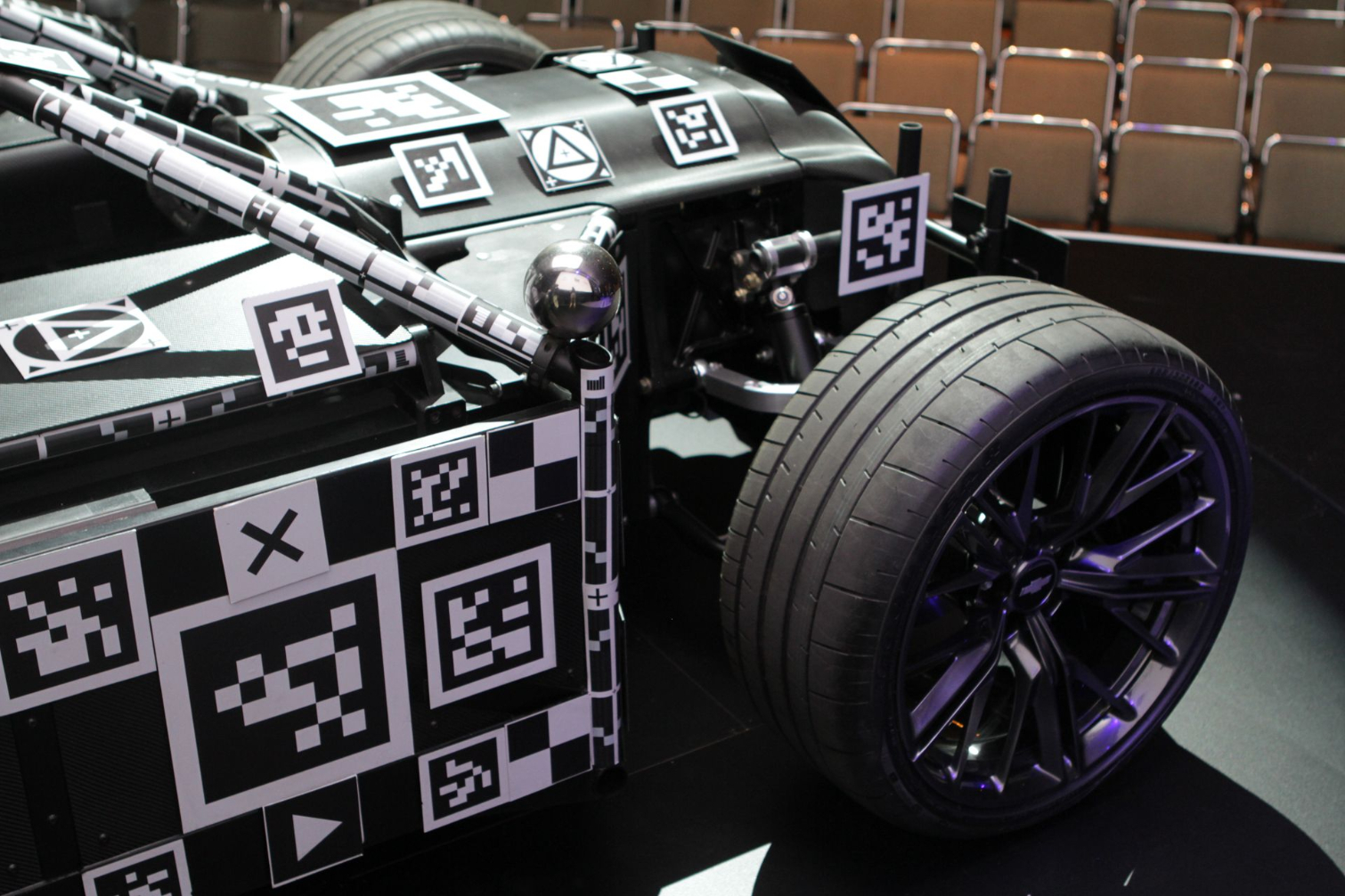

They call it the Blackbird. It looks like a dune buggy with a fancy camera rig on top, and in truth, that’s kind of what it is, but that’s not what it’s for. It’s every car you can imagine, or at least that’s the idea.

The Blackbird is the brainchild of The Mill, a visual effects and content creation studio. It’s a modular vehicle, meaning you can add various components to it depending on what you want to do with it.

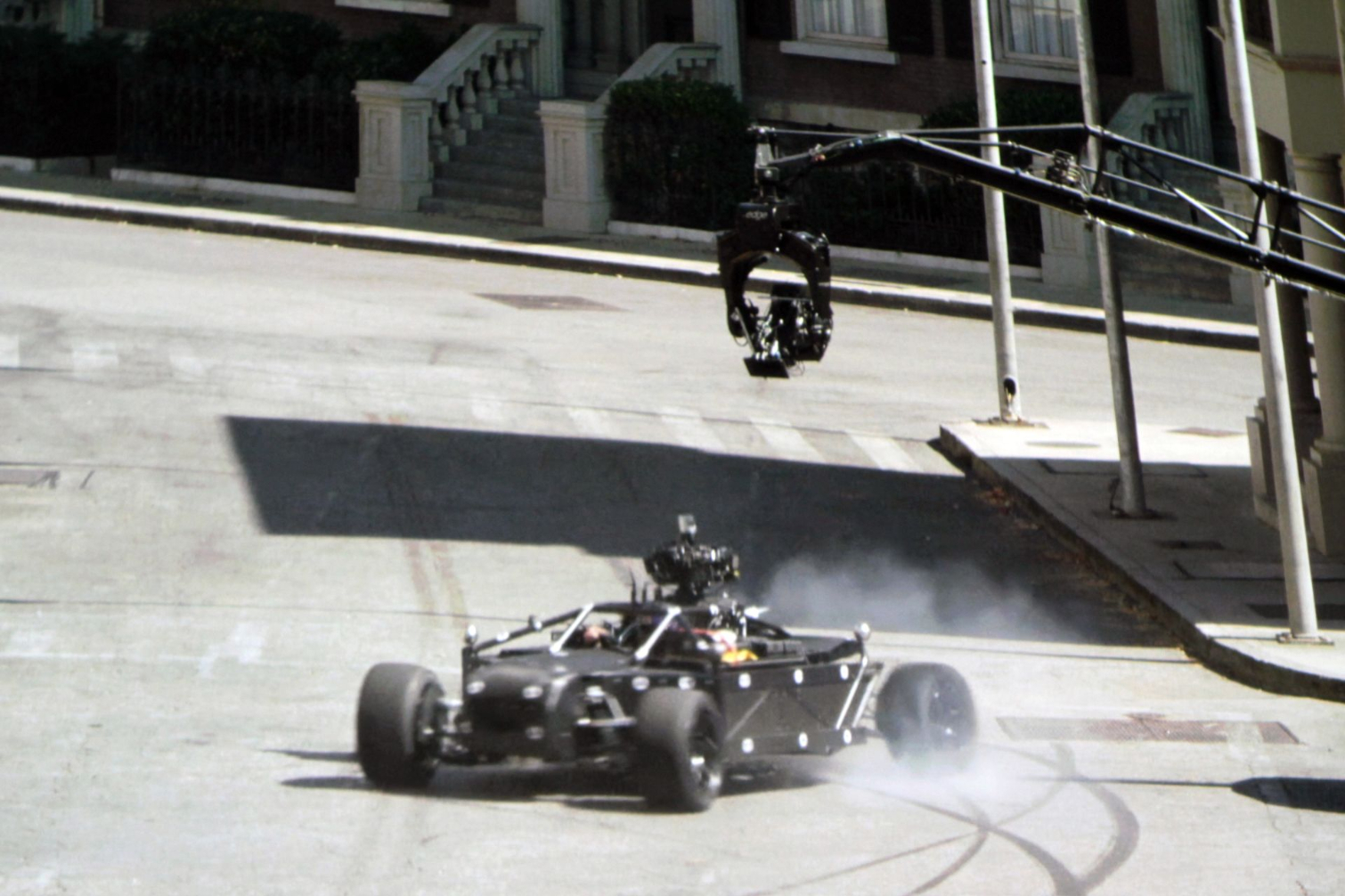

At a GDC event co-hosted by The Mill, Epic Games, and Chevrolet, the hosts played a trailer for a fake movie called The Human Race, in which a hotshot race car driver agrees to race against an AI driver. It’s man versus machine, a John Henry story for the new age. (Spoiler: The human wins--or does he?) In the trailer, two cars race, but both of them are fake. They are Blackbirds in disguise, wearing rendered car skins that look as real as you can imagine.

[Applause], nice work Epic Games and The Mill, that’s very cool. But there was a twist: The race cars were rendered in real time. [More applause], WOW, we didn’t see that coming.

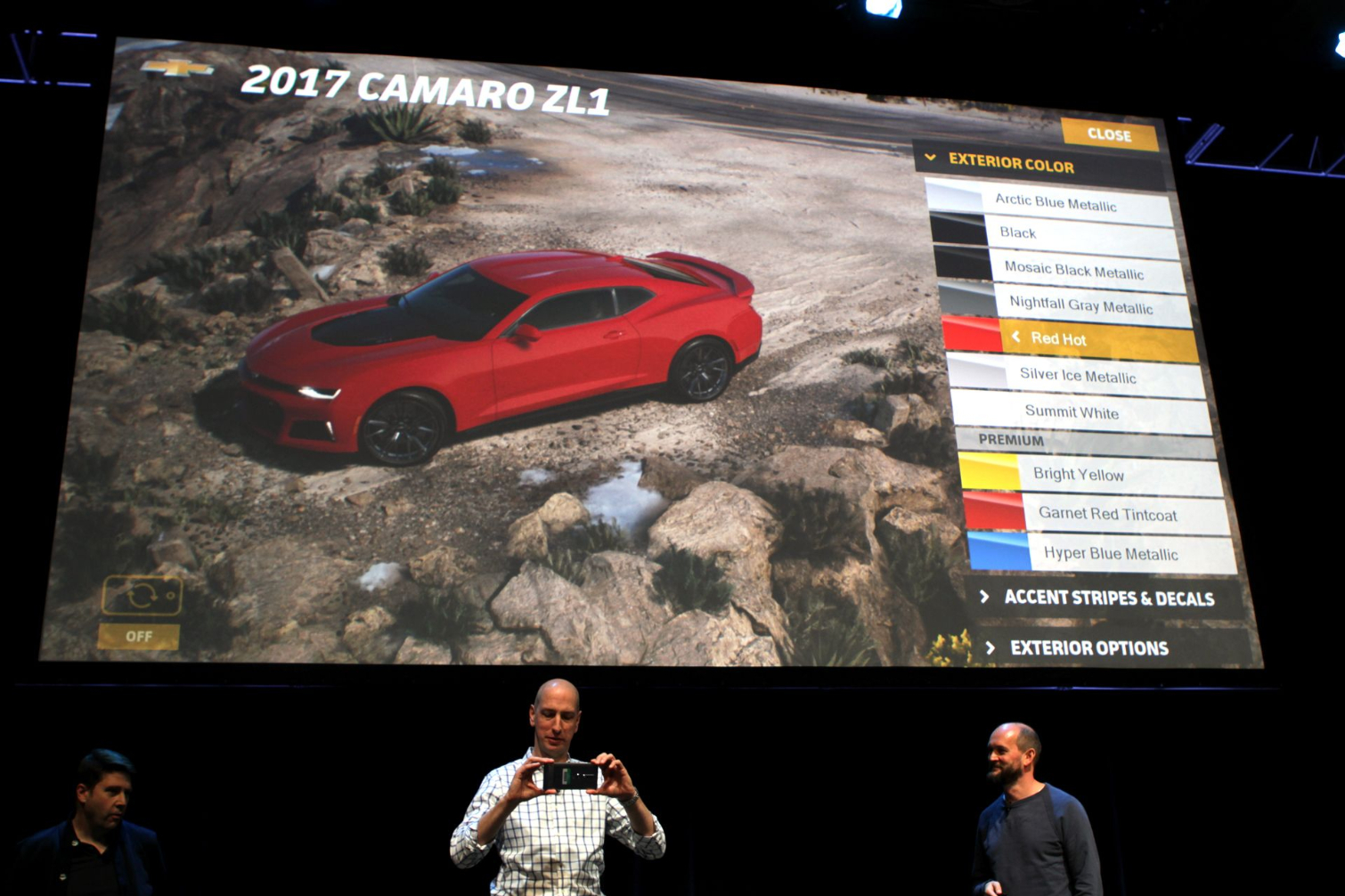

Then came the second, and frankly more impressive, twist. A Chevy executive, Sam Russell, took the stage and picked up a Lenovo Phab 2 Pro smartphone (which has Tango on board). He fired up an app that lets you customize the new Chevy Camaro ZL1--paint colors, trim colors, and so on. Because it was a Tango app, he could move the phone around and look at the car from different angles. The phone’s display was mirrored on the giant middle screen in the presentation hall, while the two enormous screens flanking it played the race car trailer on a loop. When Russell changed the color of the car on his phone, the colors of the car in the video also changed, in real time.

[Applause]

How It Works

We pinned down some of the guys from Epic Games and The Mill to understand how exactly they were able to accomplish this feat.


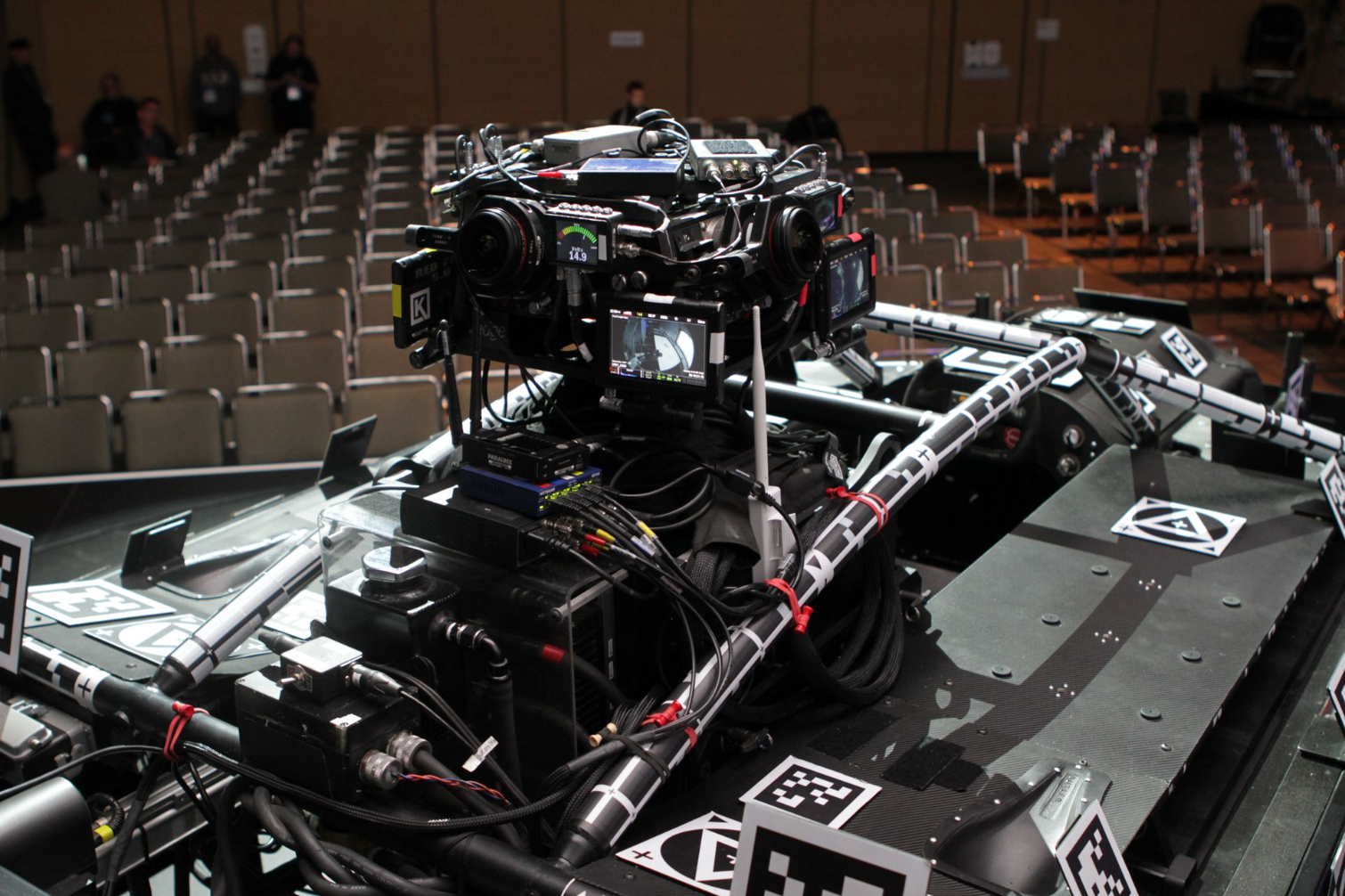

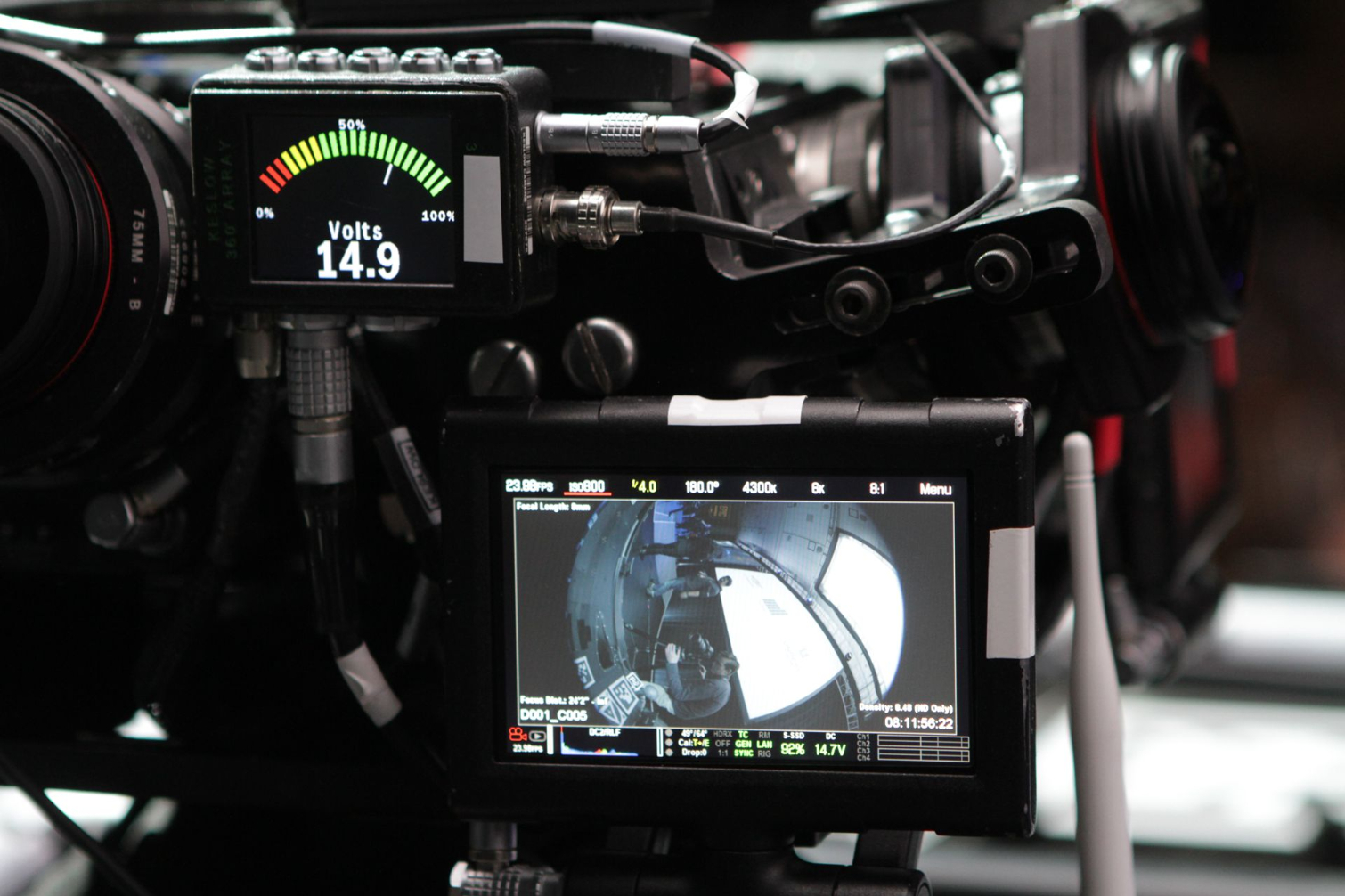

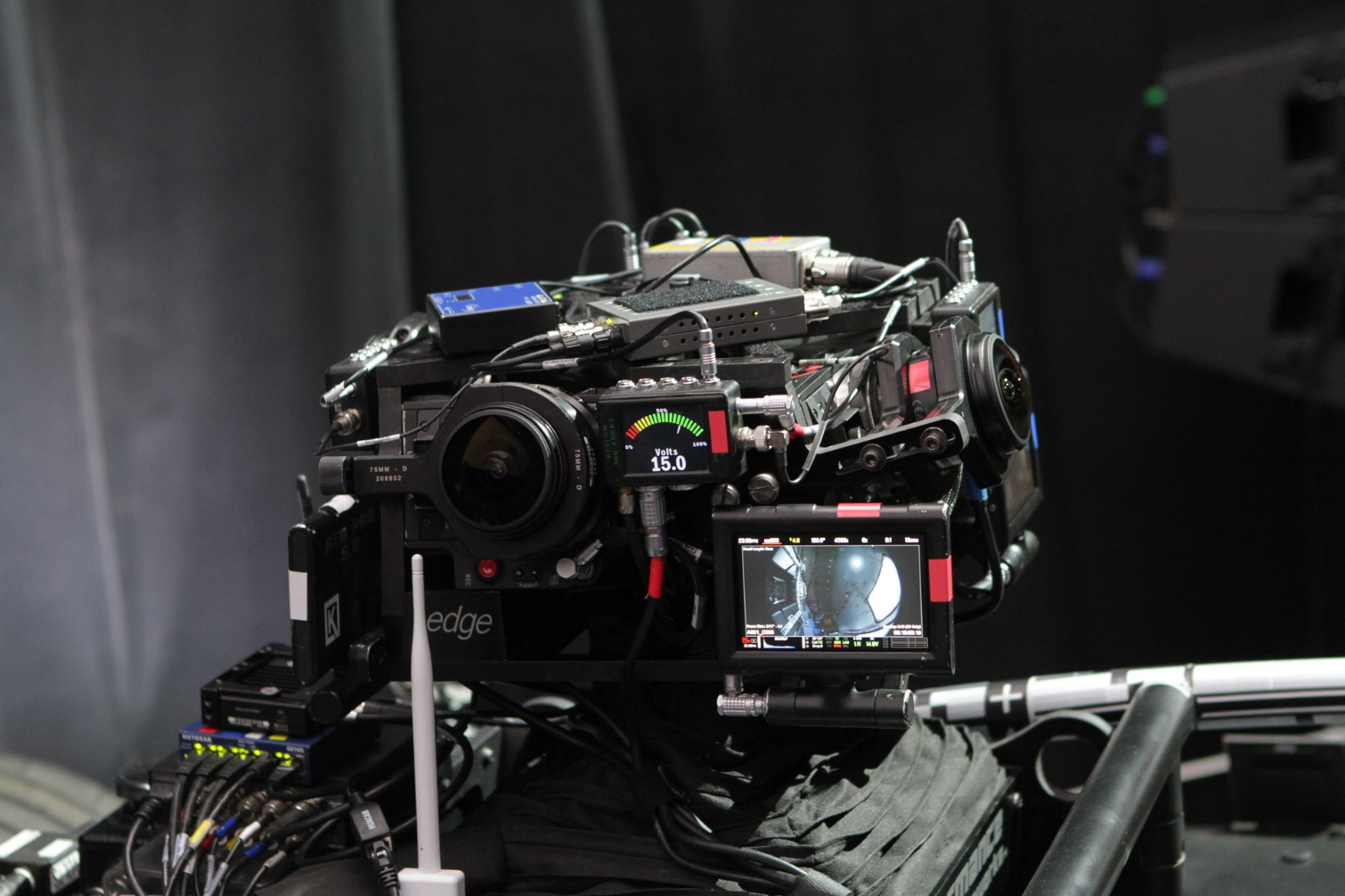

The Blackbird’s four-camera rig shoots 360-degree, 3D video at a 6K resolution. It measures the depth of the terrain using lidar, and marries that data with the captured images. (This is more or less how the Mars Rover maps the surface of Mars.) It’s a multi-camera setup, but a PC mounted in a box on the back of the vehicle does all the stitching right there in real time. This is The Mill’s proprietary “Mill Cyclops” virtual reality toolkit, which includes both hardware and software components.

There’s a second “hero” camera that a filmmaker would use to shoot the Blackbird in action. That is, an actual driver zips around pell-mell in the car, and a filmmaker shoots it, as one would.

So then, at this point you have the capture from the Blackbird’s camera array as well as the framed footage from the hero camera.

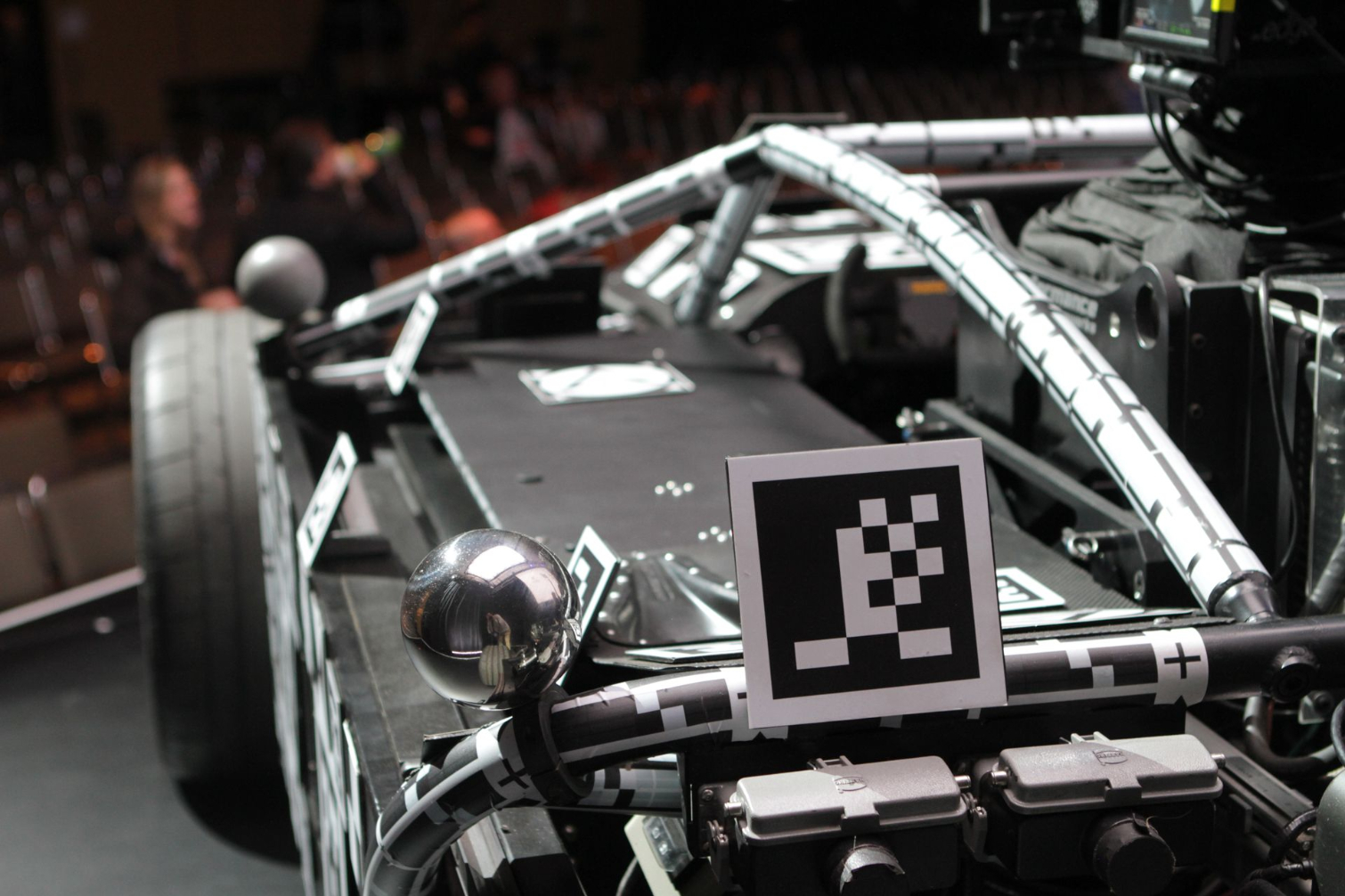

The next step is an Epic one, if you will. The Blackbird is covered in markers, and using those for tracking, Unreal Engine can paint essentially anything--in this case, pretty much any car--onto the Blackbird. Because those capabilities are already in UE, the technique can work on any platform. This demo relies on UE’s Sequencer to seamlessly line up all the footage and renders. Part of the magic is that the software uses the data from the 360-degree/3D capture to figure out where all the reflections should go, which is why it can paint any car into the scene.
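To give a feel for how a 360-degree capture can tell a renderer "where the reflections should go," here is a minimal sketch of a standard environment-map lookup. It is not Epic's or The Mill's actual method, which the companies did not detail. It assumes the capture is stored as an equirectangular (lat-long) panorama with +Y up and -Z forward; the function names `reflect` and `direction_to_equirect_uv` are hypothetical.

```python
import math

def reflect(view, normal):
    """Mirror a view direction about a unit surface normal: r = v - 2(v.n)n."""
    d = sum(v * n for v, n in zip(view, normal))
    return tuple(v - 2.0 * d * n for v, n in zip(view, normal))

def direction_to_equirect_uv(direction):
    """Map a 3D direction to (u, v) in [0, 1] on a lat-long panorama.

    Assumed convention (not from the article): +Y is up and -Z is
    forward, so the forward direction lands at the image center.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    u = 0.5 + math.atan2(x, -z) / (2.0 * math.pi)           # longitude
    v = 0.5 - math.asin(max(-1.0, min(1.0, y))) / math.pi    # latitude
    return u, v

# A camera looking forward and 45 degrees down at a flat, upward-facing
# panel (think of a car hood) sees the part of the panorama that lies
# forward and 45 degrees above the horizon.
view = (0.0, -math.sqrt(0.5), -math.sqrt(0.5))
hood_normal = (0.0, 1.0, 0.0)
u, v = direction_to_equirect_uv(reflect(view, hood_normal))
print(u, v)
```

Sampling the panorama at that (u, v) gives the color the panel would mirror. Because the lookup depends only on the surface normal and the camera direction, not on which car model is used, the same capture can in principle serve any car shape.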

That’s Unreal

The PC that Epic had running the demos at the event had an Nvidia Quadro GPU and relied on an Intel PCIe SSD that could handle 1.8 GBps of data.

The Unreal Engine technologies involved with this project are:

- Multiple streams of uncompressed EXR images (1.3 GBps)
- Dynamic Skylight IBL (image-based lighting) for lighting the cars
- Multi-element compositing, with edge wrap, separate BG and FG motion blur
- Fast Fourier Transform (FFT) blooms for that extra bling
- PCSS shadows with directional blur settings
- Bent Normal AO with reflection occlusion
- Prototype for next generation Niagara particles and FX
- Compatibility with Nvidia Quadro graphics card
- Support for Google Tango-enabled devices (currently Lenovo Phab 2 Pro)

Epic said that these capabilities will come “later this year.”
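For a rough sense of the data rates quoted above, the arithmetic below estimates what one uncompressed image stream costs and how many fit into the 1.3 GBps and 1.8 GBps figures. The frame size, channel layout, and frame rate are assumptions for illustration (1920x1080, four 16-bit half-float channels, 24 fps); Epic did not say what its EXR streams actually contained.

```python
# Back-of-the-envelope data rate for uncompressed image streams.
# ASSUMPTIONS (not stated by Epic): 1920x1080 frames, RGBA with
# 16-bit half-float channels, 24 frames per second, no file overhead.

def stream_bytes_per_second(width, height, channels, bytes_per_channel, fps):
    """Bytes per second needed to read one uncompressed image stream."""
    return width * height * channels * bytes_per_channel * fps

def streams_that_fit(budget_bytes_per_second, per_stream_bytes_per_second):
    """Whole number of streams that fit inside a throughput budget."""
    return budget_bytes_per_second // per_stream_bytes_per_second

per_stream = stream_bytes_per_second(1920, 1080, 4, 2, 24)

quoted_stream_rate = 1_300_000_000  # the 1.3 GBps figure from the list
quoted_ssd_rate = 1_800_000_000     # the 1.8 GBps SSD figure

print(f"one stream: {per_stream / 1e9:.3f} GB/s")
print("streams within 1.3 GBps:", streams_that_fit(quoted_stream_rate, per_stream))
print("streams within 1.8 GBps:", streams_that_fit(quoted_ssd_rate, per_stream))
```

Under these assumptions a single stream needs about 0.4 GB per second, so the quoted 1.3 GBps is consistent with roughly three such streams, and the SSD's 1.8 GBps leaves some headroom. Different resolutions or channel counts would change the numbers.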

There are some tricks involved. The “movie” itself is just a 24fps HD video, so by itself, it’s not exactly a resource hog. Rendering in real time does, of course, require lots and lots of horsepower, but you can get away with it because only the car gets rendered, not the entire scene.
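The "just the car" trick boils down to compositing: the rendered car is laid over the filmed footage, and every pixel the car does not cover comes straight from the video. A minimal sketch of the standard "over" operator follows. It assumes premultiplied alpha and is only an illustration; it is not Unreal Engine's multi-element compositing pipeline, and the function names are hypothetical.

```python
def over(fg_rgb, fg_alpha, bg_rgb):
    """Composite one premultiplied foreground pixel over a background pixel.

    fg_rgb is assumed to be premultiplied by fg_alpha, so the result is
    fg + (1 - alpha) * bg for each color channel.
    """
    return tuple(f + (1.0 - fg_alpha) * b for f, b in zip(fg_rgb, bg_rgb))

def composite_frame(car_rgb, car_alpha, plate_rgb):
    """Apply `over` to every pixel of a frame stored as a list of rows."""
    return [
        [over(c, a, p) for c, a, p in zip(car_row, alpha_row, plate_row)]
        for car_row, alpha_row, plate_row in zip(car_rgb, car_alpha, plate_rgb)
    ]

# One row, three pixels: fully covered by the car, half covered (an
# edge), and not covered at all.
car = [[(1.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.0, 0.0, 0.0)]]
alpha = [[1.0, 0.5, 0.0]]
plate = [[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]

print(composite_frame(car, alpha, plate))
```

Only the pixels with non-zero alpha require any rendering work; the rest of the frame is the pre-recorded video, which is why the per-frame cost stays manageable.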

Epic Games and The Mill hope filmmakers will use this mixed reality technique to shoot scenes that require VFX and see the finished product right in front of them, in real time.

Seth Colaner previously served as News Director at Tom's Hardware. He covered technology news, focusing on keyboards, virtual reality, and wearables.

WFang: At first I got really excited when I thought the Mill was a reference to the new CPU architecture ...

https://millcomputing.com/

TheAfterPipe: I remember seeing something similar last year and I think the consensus was that it was very impractical when it was much easier to create a CGI model.

bit_user: I'd have loved to know more details about the car, BTW. Like, what did they use as a basis for it, weight, horsepower, FWD/RWD/AWD, etc. I'm sure it can't go too fast, with that bulky camera rig.

19367087 said: But the cars dont look real....

In the shots they used, it looks real enough not to be distracting. On my somewhat average LCD monitor, anyway. I can believe that you could pick out the CG car next to a physical one.

I'll try watching this on my plasma TV and update if I find it looks much worse.

19370727 said: I remember seeing something similar last year and I think the consensus was that it was very impractical when it was much easier to create a CGI model.

Huh? Do you understand that the rig is needed to make the CGI model more practical?

bit_user:

19369324 said: At first I got really excited when I thought the Mill was a reference to the new CPU architecture ... https://millcomputing.com/

Interesting, but I doubt they can beat the single-thread performance of the big boys. Some of the tricks they mention (e.g. register bypass) have already been in use for decades. IMO, they might have some advantages in secure computing, and they might be able to deliver better performance per Watt.

But, like other alternative CPU architectures, they're heavily dependent on machine translation or getting software to use different APIs and programming models. That's a big ask.