Clever VR Tricks: A 60fps VR Experience On An Old Phone With Tessellation And Vertex Displacement

Running VR content on a phone is a technical challenge. Desktop VR systems such as the Oculus and Vive recommend at least an Intel Core i5 CPU and a GTX 970. Phones lack these high-end desktop components and have to run VR content on a mobile SoC while simultaneously processing all motion tracking.

Framing the Issue

Although the details of Google’s new “Daydream VR” specification haven’t been clarified, it will almost certainly apply only to the newest high-end phones. But Google Cardboard, the ubiquitous folded-paper viewer, was supposed to be the one-size-fits-all VR solution for everyone. How, then, do you get an immersive VR experience to run on an iPhone 5, or even a Galaxy S3?

Let’s lay out the challenges. For VR, 60 fps is generally considered the minimum for immersive content (the usual target is 90 fps, and Sony’s PSVR is even pushing for 120 fps). Below that, we tend to notice lag in motion controls and head tracking, and for some people, nausea becomes a bigger problem. Even on a low-end phone, this means pushing out a lot of pixels per second.
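To put a number on "a lot," the arithmetic is simple enough to sketch in Python. This assumes the Galaxy S3's 1280 × 720 panel and counts only the pixels that reach the display once per frame; real renderers often shade more than that.

```python
# Frame-time budget and display pixel throughput at common VR frame rates.
# ASSUMPTION: a 1280 x 720 panel (the Galaxy S3's) and one write per display
# pixel per frame; real renderers often shade more pixels than the display shows.


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to produce one frame at the given rate."""
    return 1000.0 / fps


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Display pixels that must be produced every second."""
    return width * height * fps


if __name__ == "__main__":
    for fps in (60, 90, 120):
        print(f"{fps:>3} fps -> {frame_budget_ms(fps):5.2f} ms per frame")
    print(f"{pixels_per_second(1280, 720, 60):,} pixels per second at 60 fps")
```

At 60 fps that works out to about 16.7 ms per frame and 55,296,000 pixels per second; 90 and 120 fps shrink the budget to roughly 11.1 ms and 8.3 ms.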

Through the Looking Glass

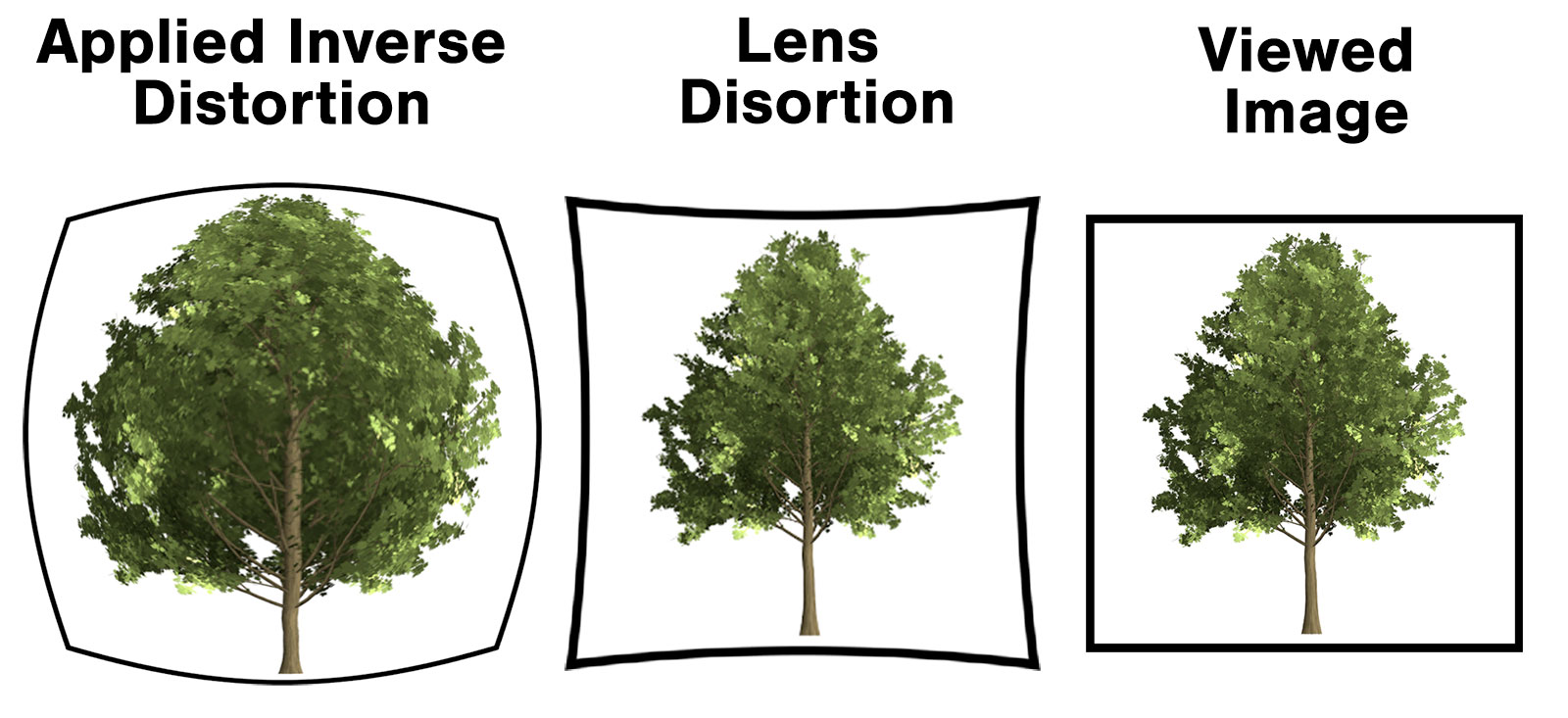

Then there’s the fundamental nature of how we view VR. VR systems render a separate image for each eye, but these images are each roughly square, and it’s the lenses and our perception that create the illusion of a wide scene with depth. Lenses are responsible for much of the quality of the perceived image, but however good they are, they invariably introduce distortion, warping and stretching the image.

The solution has generally been to render the image, apply an inverse distortion to it that is the opposite of the lens distortion, and then feed it to the screen buffer to output on the phone’s display. This inverse distortion means the final image will look correct when viewed through the lens.

In short, inverse distortion plus lens distortion equals an undistorted image.

In photography terms, these are called “barrel” and “pincushion” distortion. The lenses of an HMD generally cause pincushion distortion, which makes straight lines bow inward toward the center of the image, and the correction takes the form of barrel distortion, which looks like the image was stretched over part of a sphere.
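The relationship between the lens and its correction can be sketched numerically. The Python below is a minimal illustration, not any viewer's actual code: it models the pincushion lens with the common two-coefficient radial polynomial, using made-up coefficients `K1` and `K2`, and computes the barrel pre-distortion as the inverse of that function.

```python
# A minimal sketch of a radial lens model and its inverse.
# ASSUMPTION: the pincushion lens is modeled with the common two-coefficient
# polynomial lens(r) = r * (1 + K1*r^2 + K2*r^4). K1 and K2 are made-up values,
# not the coefficients of any real viewer.

K1 = 0.22  # hypothetical
K2 = 0.24  # hypothetical


def lens(r: float) -> float:
    """Radius at which the viewer perceives a point drawn at screen radius r."""
    r2 = r * r
    return r * (1.0 + K1 * r2 + K2 * r2 * r2)


def lens_slope(r: float) -> float:
    """Derivative of lens(r), used by Newton's method below."""
    r2 = r * r
    return 1.0 + 3.0 * K1 * r2 + 5.0 * K2 * r2 * r2


def inverse_lens(target: float, iterations: int = 20) -> float:
    """Barrel pre-distortion: the screen radius the lens maps to `target`.

    Solves lens(r) == target with Newton's method. Starting at r = target is
    safe because lens(r) >= r for r >= 0 and lens is convex there.
    """
    r = target
    for _ in range(iterations):
        r -= (lens(r) - target) / lens_slope(r)
    return r


if __name__ == "__main__":
    for t in (0.0, 0.25, 0.5, 0.75, 1.0):
        drawn = inverse_lens(t)
        # Pre-distortion pulls each point inward; the lens pushes it back out.
        print(f"want {t:.2f}: draw at {drawn:.4f}, seen at {lens(drawn):.4f}")
```

Each target radius comes back out of `lens(inverse_lens(t))` essentially unchanged, while the drawn radius is always smaller: the barrel pre-distortion pulls points toward the center, and the pincushion lens pushes them back out.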


However, distorting and interpolating the pixels of each image to cancel out the lenses adds an entire extra processing step to every frame, significantly increasing the work a phone has to do. It also lowers the effective resolution: the areas near the center of the image are stretched outward and essentially smeared across too many pixels, similar to zooming into a low-res photo. Conversely, extra detail is compressed into the edges of the frame, wasting CPU time and packing in detail where the user is least likely to be looking.
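Here is the shape of that extra step as a minimal Python sketch rather than real GPU code. It assumes a made-up two-coefficient lens model and nearest-neighbor sampling; the point is the work pattern, one lookup per output pixel, which at the Galaxy S3's 1280 × 720 resolution means 921,600 lookups every frame on top of rendering the scene itself.

```python
import math

# A minimal sketch of the conventional post-process distortion pass.
# ASSUMPTIONS: a hypothetical two-coefficient lens model (K1, K2 are made up),
# a small grayscale image stored as a list of rows, and nearest-neighbor
# sampling. Real implementations run on the GPU and usually filter bilinearly.

K1, K2 = 0.22, 0.24  # hypothetical lens coefficients


def lens(r: float) -> float:
    """Radius at which the viewer sees a point drawn at screen radius r."""
    r2 = r * r
    return r * (1.0 + K1 * r2 + K2 * r2 * r2)


def distortion_pass(src):
    """Return a barrel-distorted copy of `src` and the number of lookups made.

    Every output pixel is visited once: work out where the lens will make
    that pixel appear, then fetch the rendered image at that spot.
    """
    h, w = len(src), len(src[0])
    out = [[0] * w for _ in range(h)]
    lookups = 0
    for y in range(h):
        for x in range(w):
            # Pixel center in normalized coordinates, lens center at (0, 0).
            nx = (x + 0.5) / w * 2.0 - 1.0
            ny = (y + 0.5) / h * 2.0 - 1.0
            r = math.hypot(nx, ny)
            scale = lens(r) / r if r > 0.0 else 1.0
            sx = math.floor((nx * scale + 1.0) / 2.0 * w)
            sy = math.floor((ny * scale + 1.0) / 2.0 * h)
            lookups += 1
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = src[sy][sx]
            # Otherwise the lens would show a point outside the rendered
            # frame, so the output pixel stays black (0).
    return out, lookups


if __name__ == "__main__":
    image = [[x + 10 * y for x in range(5)] for y in range(5)]
    warped, lookups = distortion_pass(image)
    print(lookups)       # 25: one lookup per output pixel
    print(warped[2][2])  # 22: the center pixel is unchanged
    print(warped[0][4])  # 0: this corner samples outside the rendered frame
```

Because the source coordinates land between pixels, a real pass has to interpolate, which is where the smearing in the stretched center comes from.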

A Million Little Pieces: Vertex Displacement And Tessellation

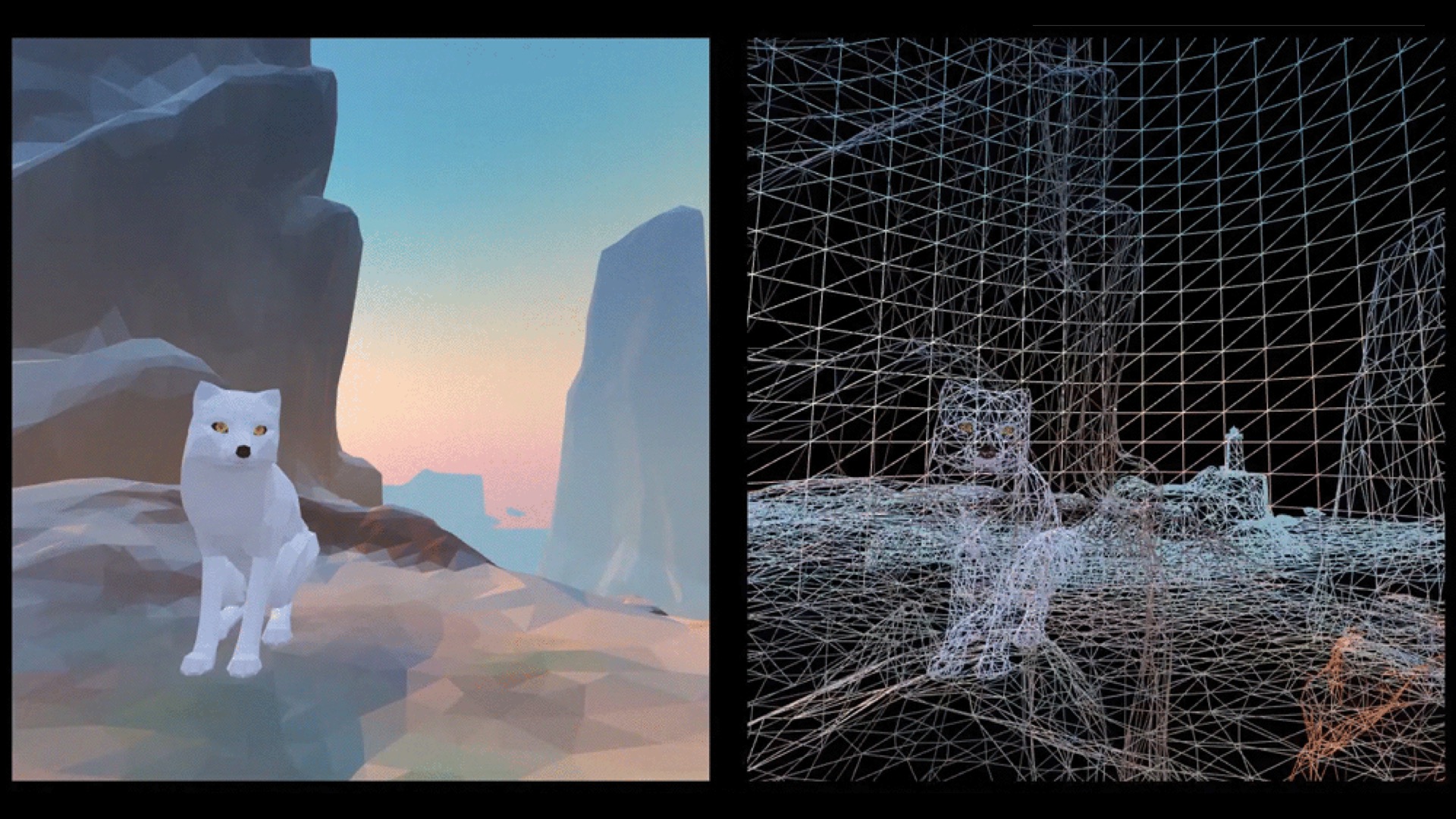

One studio has come up with an innovative solution. Brian Kehrer is a game designer who worked with ustwo (the developers behind Monument Valley) to create Arctic Journey, a VR tour of a polar wilderness that has become one of the default experiences in Google Cardboard. To create a truly universal VR experience, Kehrer’s team set a goal of running the software at a stable 60 fps with dynamic lighting on a Galaxy S3, a phone from 2012.

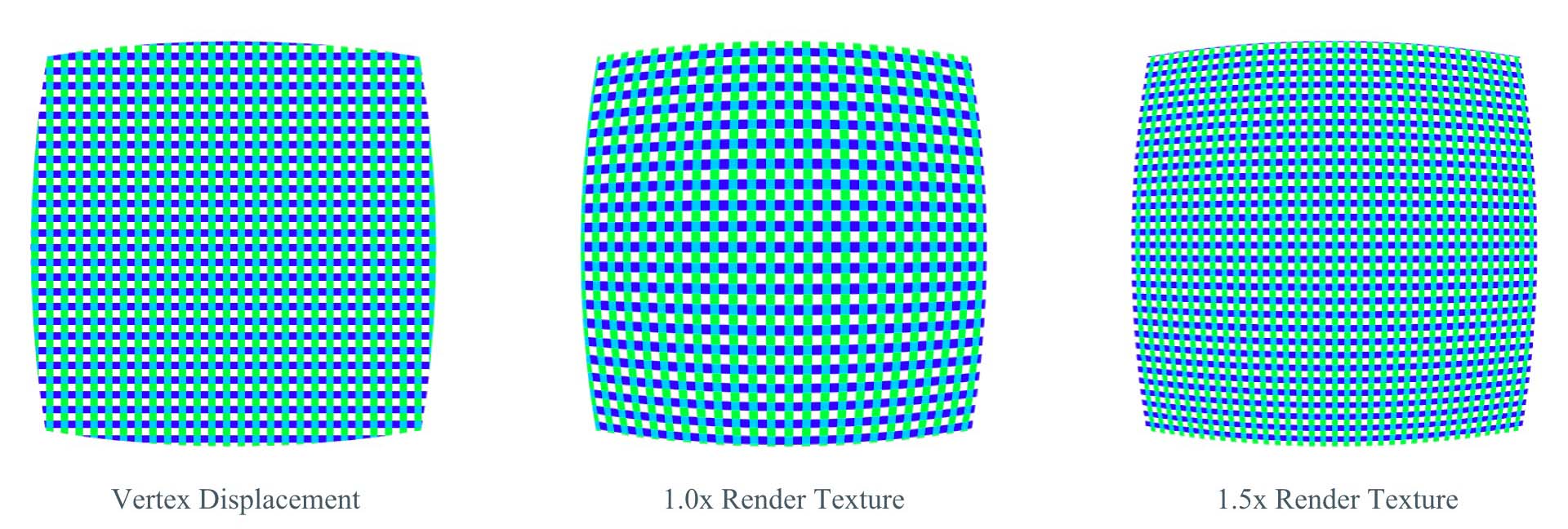

The team’s preliminary testing showed that there was no way a phone that old could render the image, apply distortion, and output it to the screen fast enough to maintain 60 fps. So Kehrer and his team turned the problem around: Instead of rendering the game world and then distorting it, what if you warped the entire world beforehand? The idea, called Vertex Displacement, is to literally warp the geometry of the game to approximate the inverse lens distortion. The scene then renders with the proper lens correction already applied and can be sent straight to the screen, cutting out the middle step.
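The core of the idea fits in a few lines. The Python below is a sketch of the general technique, not Arctic Journey's code: a plain function stands in for a vertex shader, the lens coefficients are made up, the projection is a simple pinhole model, and the inverse lens mapping is solved with Newton's method (a real shader would more likely use a cheap fitted polynomial).

```python
# A minimal sketch of vertex displacement: a plain function standing in for a
# vertex shader. ASSUMPTIONS: a hypothetical two-coefficient lens model
# (K1, K2 are made up), a simple pinhole projection, and Newton's method for
# the inverse lens mapping.

K1, K2 = 0.22, 0.24  # hypothetical lens coefficients


def lens(r: float) -> float:
    """Radius at which the viewer sees a point drawn at screen radius r."""
    r2 = r * r
    return r * (1.0 + K1 * r2 + K2 * r2 * r2)


def inverse_lens(t: float, iterations: int = 20) -> float:
    """Screen radius that the lens maps to t (Newton's method)."""
    r = t
    for _ in range(iterations):
        r2 = r * r
        r -= (lens(r) - t) / (1.0 + 3.0 * K1 * r2 + 5.0 * K2 * r2 * r2)
    return r


def project(x: float, y: float, z: float, focal: float = 1.0):
    """Pinhole projection of a camera-space point; the camera looks down -z."""
    return focal * x / -z, focal * y / -z


def displace_vertex(x: float, y: float, z: float):
    """Project a vertex, then pull it toward the center so the lens undoes it."""
    sx, sy = project(x, y, z)
    r = (sx * sx + sy * sy) ** 0.5
    if r == 0.0:
        return sx, sy  # the lens center needs no correction
    s = inverse_lens(r) / r
    return sx * s, sy * s


if __name__ == "__main__":
    # A vertex that would project to x = 0.5 is drawn closer to the center...
    dx, dy = displace_vertex(0.5, 0.0, -1.0)
    print(round(dx, 4), dy)
    # ...and the lens carries it back out to where it belongs.
    print(round(lens(dx), 4))  # 0.5
```

The cost is one small calculation per vertex rather than one lookup per screen pixel. A production shader would apply this in clip space and multiply the result back by w, so that depth and perspective-correct interpolation still work; that detail is left out here.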

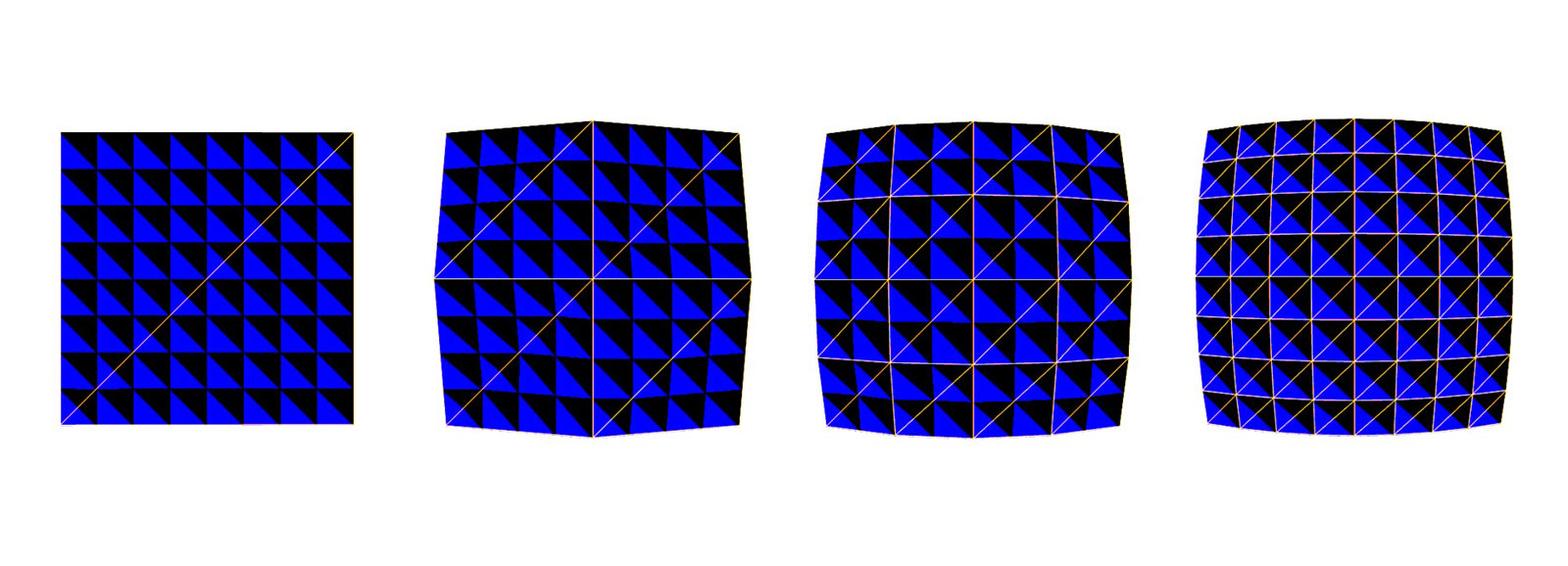

If you remember that real-time 3D graphics are built from collections of polygons, this starts to make sense. Vertex Displacement doesn’t warp lines; the edge between two vertices (the corners of the polygons) always stays straight. However, by shifting where the vertices are, you can approximate lens correction in the scene itself and render the final image in one pass.

There is one challenge here: Low-polygon objects (for example, a perfect square) can’t be bent by Vertex Displacement; their corners move, but their edges stay straight. To correct for lens distortion, a square actually needs its sides to be bowed out in slight arcs. As Kehrer pointed out, a curved lens makes curved lines look straight. Without this, the lens distortion will make a square look like it’s collapsing inwards. Because Vertex Displacement doesn’t actually warp lines, it can’t correct a simple object like a square. This is especially challenging when you consider that most user-interface elements are built from simple polygons, such as squares and rectangles.
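The straight-edge problem can be measured directly. This Python sketch uses a hypothetical two-coefficient lens model with made-up coefficients; `displace` and `edge_midpoint_error` are illustrative helpers. It displaces only the two endpoints of an edge (which is all the GPU sees), then compares the midpoint of the straight line it would draw with where the edge's midpoint should actually land.

```python
# How far a straight, endpoint-displaced edge strays from the correctly
# pre-distorted curve. ASSUMPTIONS: hypothetical lens coefficients K1, K2 and
# screen coordinates normalized so the lens center is (0, 0).

K1, K2 = 0.22, 0.24  # hypothetical


def lens(r):
    r2 = r * r
    return r * (1.0 + K1 * r2 + K2 * r2 * r2)


def inverse_lens(t, iterations=20):
    r = t
    for _ in range(iterations):
        r2 = r * r
        r -= (lens(r) - t) / (1.0 + 3.0 * K1 * r2 + 5.0 * K2 * r2 * r2)
    return r


def displace(p):
    """Apply the barrel pre-distortion to one screen-space point."""
    x, y = p
    r = (x * x + y * y) ** 0.5
    if r == 0.0:
        return (x, y)
    s = inverse_lens(r) / r
    return (x * s, y * s)


def edge_midpoint_error(a, b):
    """Distance between the drawn midpoint of edge a-b and the correct one.

    The GPU displaces only the endpoints and rasterizes a straight line
    between them; the correct midpoint is the displaced original midpoint.
    """
    da, db = displace(a), displace(b)
    drawn = ((da[0] + db[0]) / 2, (da[1] + db[1]) / 2)
    wanted = displace(((a[0] + b[0]) / 2, (a[1] + b[1]) / 2))
    return ((drawn[0] - wanted[0]) ** 2 + (drawn[1] - wanted[1]) ** 2) ** 0.5


if __name__ == "__main__":
    # Top edge of a square: it should bow outward, but it is drawn straight.
    print(round(edge_midpoint_error((-0.8, 0.6), (0.8, 0.6)), 3))
    # An edge through the lens center: radial distortion keeps it straight.
    print(edge_midpoint_error((-0.5, 0.0), (0.5, 0.0)))  # 0.0
```

The top edge's true midpoint sits farther from the center than the straight line through its displaced corners, which is the outward bow described above; the lens then squeezes that bow back into a straight line. Edges passing through the lens center are the exception, since radial distortion never bends them.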

The solution is something that’s become commonplace in rendering complex geometry: tessellation. Tessellation is the process of using repeating, interlocking simple shapes to approximate a complex object. Generally, this means taking fairly rough, large polygons and breaking them down into dozens or hundreds of small triangles, which lets you approximate much more complex surfaces using simple shapes. You can easily scale the number of triangles up or down based on how much processing power is available.

In Vertex Displacement, this means that you can break down a simple polygon, such as a square, into one hundred smaller squares, each made up of two interlocking triangles. Rendering this many more vertices isn’t significantly taxing on the CPU.
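A tessellated square is easy to generate. The Python below is an illustrative sketch (the function name and buffer layout are not taken from Arctic Journey or any engine): it builds an n × n grid of quads, each split into two triangles, with neighboring quads sharing their corner vertices the way an indexed mesh would.

```python
def tessellate_square(n, x0=-1.0, y0=-1.0, size=2.0):
    """Split a square into n x n quads, two triangles each.

    Returns (vertices, triangles): vertices is a list of (x, y) points and
    triangles a list of index triples into it, like a GPU index buffer.
    Neighboring quads share corners, so there are (n + 1) ** 2 vertices.
    """
    vertices = [
        (x0 + size * col / n, y0 + size * row / n)
        for row in range(n + 1)
        for col in range(n + 1)
    ]
    stride = n + 1  # vertices per row
    triangles = []
    for row in range(n):
        for col in range(n):
            i = row * stride + col  # bottom-left corner of this quad
            triangles.append((i, i + 1, i + stride))               # lower-left half
            triangles.append((i + 1, i + stride + 1, i + stride))  # upper-right half
    return vertices, triangles


if __name__ == "__main__":
    verts, tris = tessellate_square(10)
    print(len(verts), len(tris))  # 121 200
```

Sharing corners is what keeps the count low: 100 squares would need 400 vertices if stored separately, but the shared grid needs only 121.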

Kehrer said most mobile devices can reliably render 100,000 to 400,000 vertices, so turning a few 4-vertex squares into 121-vertex tessellated objects doesn’t have a huge impact (121 vertices is the number required for splitting a square into our theoretical 10x10 grid of paired triangles, since the grid has 11 × 11 corner points). This tessellated approach means that Vertex Displacement can now shift the corners of all those triangles to bend the square into a rough approximation of the curved shape needed for lens correction.
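The trade-off between accuracy and vertex count can be tabulated with a short Python sketch. It assumes a hypothetical lens model with made-up coefficients and measures only the top edge of a square; `max_edge_error` and `grid_vertices` are illustrative helpers, not part of any real SDK.

```python
# Curvature error versus vertex cost as a square is tessellated more finely.
# ASSUMPTIONS: hypothetical lens coefficients K1, K2; only the square's top
# edge, from (-0.8, 0.6) to (0.8, 0.6), is measured; 100,000 vertices is the
# low end of the range Kehrer quoted.

K1, K2 = 0.22, 0.24  # hypothetical


def lens(r):
    r2 = r * r
    return r * (1.0 + K1 * r2 + K2 * r2 * r2)


def inverse_lens(t, iterations=20):
    r = t
    for _ in range(iterations):
        r2 = r * r
        r -= (lens(r) - t) / (1.0 + 3.0 * K1 * r2 + 5.0 * K2 * r2 * r2)
    return r


def displace(p):
    """Barrel pre-distortion of one screen-space point."""
    x, y = p
    r = (x * x + y * y) ** 0.5
    if r == 0.0:
        return p
    s = inverse_lens(r) / r
    return (x * s, y * s)


def lerp(a, b, t):
    return (a[0] + (b[0] - a[0]) * t, a[1] + (b[1] - a[1]) * t)


def max_edge_error(a, b, segments):
    """Worst gap between drawn and correct segment midpoints along edge a-b."""
    worst = 0.0
    for i in range(segments):
        t0, t1 = i / segments, (i + 1) / segments
        d0, d1 = displace(lerp(a, b, t0)), displace(lerp(a, b, t1))
        drawn = ((d0[0] + d1[0]) / 2, (d0[1] + d1[1]) / 2)
        wanted = displace(lerp(a, b, (t0 + t1) / 2))
        gap = ((drawn[0] - wanted[0]) ** 2 + (drawn[1] - wanted[1]) ** 2) ** 0.5
        worst = max(worst, gap)
    return worst


def grid_vertices(n):
    """Vertices in an n x n tessellated square with shared corners."""
    return (n + 1) ** 2


if __name__ == "__main__":
    for n in (1, 2, 5, 10):
        err = max_edge_error((-0.8, 0.6), (0.8, 0.6), n)
        print(f"{n:>2}x{n:<2} grid: {grid_vertices(n):>3} vertices, max error {err:.5f}")
    print(100_000 // grid_vertices(10))  # 826 squares fit in 100,000 vertices
```

With these assumed coefficients the error falls quickly as the grid gets finer, while the vertex count grows as (n + 1)². At 121 vertices per 10 × 10 square, even the 100,000-vertex low end of Kehrer's range leaves room for 826 such squares.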

There is another advantage: The image isn’t rendered and then stretched. It is rendered in the proper dimensions from the start, so detail isn’t lost in the stretched-out middle of the frame, which improves perceived resolution.

This technique makes Arctic Journey (and Cardboard Design Lab, another VR product that uses Vertex Displacement) something of a technological marvel, but the technique may not see wide adoption. Google’s new VR platform, Daydream, doesn’t seem to support Vertex Displacement, and Oculus and Vive rely on a more processor-intensive implementation called “Timewarp.”

It also highlights a serious question that Kehrer raised in a blog post: If his team can run a VR game on a Galaxy S3, why do the Oculus and Vive require such powerful hardware? This isn’t an answer we currently have, but it’s one of the things Tom’s Hardware will be investigating as we dive deeper into VR.

-

none12345: Or we could just do VR with large, high-quality screens and get rid of the lenses, the distortion, and all the extra processing power they entail.

none12345: With eye tracking, rendering only your binocular vision in high detail and your peripheral vision in lower detail would also cut a lot of processing power.

bit_user:

17999554 said: Oculus and Vive rely on a more processor-intensive implementation called “Timewarp.”

It's not the same thing. However, the implementation of timewarp might be one thing that drives them away from vertex displacement.

I wonder whether these pixels are lit using normal geometry or in the warped space. If the latter, then your shadows would be distorted. However, lighting them in world space would involve another transform. That might pose another limitation for this technique. Perhaps this app doesn't use dynamic shadows?

18000599 said: With eye tracking and rendering only your binocular vision in high detail, and render your peripheral with lower detail, you would also cut a lot more processing power.

What you're describing is called foveated rendering. Some eye tracking systems already utilize that technique.

-

Crystalizer: "Rendering this many more vertices isn’t significantly taxing on the CPU." But the GPU is the one that actually draws those triangles. Adding shadows and dynamic lights to a game multiplies the number of vertices to be drawn, and judging by the pictures, the game they developed doesn't seem to have those.

"If his team can run a VR game on a Galaxy S3, why do the Oculus and Vive require such powerful hardware?" It's easier to optimize for specific cases, with specific rules for the content developers. How painful and limiting would it be to develop for the Vive or Oculus if you were told exactly how to build your games? Would Minecraft even be possible? You would have to use a tessellated cube for every cube in the world, and there are 60,000,000 × 256 × 60,000,000 cubes, though only some of them are rendered, in chunks and within a limited distance. There is a way to get games into VR on older hardware too, but it would require drawing everything unlit, with artistic textures, and using the latest optimization advances in VR rendering.

beetlejuicegr: We all know the era of 8-bit gaming, when software developers had to overcome hardware limitations with their brilliant minds.

I almost cried from happiness when I read this article. It means there are still ways, and people, that can prove you can drive development with ideas and not raw hardware power.

Gratz to these guys, and I also see it as a slap at the hardware requirements VR gear needs. GG

bit_user:

18002951 said: Would Minecraft even be possible? You would have to use a tessellated cube for every cube in the world.

Well, no. As the article pointed out, modern games already use dynamic tessellation to adjust the level of detail based on distance. That should still be the case here.

18003567 said: We all know the era of 8-bit gaming where software developers had to surpass with their brilliant minds the hardware limitations.

Okay, but show me an 8-bit game that was more aesthetically appealing than its 16-bit counterpart. There were plenty of fun 8-bit games, but the technological constraints imposed huge creative constraints as well. I'm sure there were plenty of deep games that couldn't be made, or didn't fulfill their creators' vision, until 16-bit came along.

"Gratz to these guys and I also see it as a slap on the hardware requirements the VR gear needs. GG"

I don't mean to detract from what they've accomplished, at all. I agree with you that it's great.

But I think it's going too far to conclude that the PC VR hardware requirements are overkill. For VR to fulfill its potential, games and experiences can't be limited to environments with no dynamic lights or shadows. It needs to run at more than 60 Hz. And tricks like time warping are needed to keep some users from getting sick.

Google's Daydream VR spec will be in between this one and the current PC spec. I suggest you pay close attention to the differences in the kinds of experiences available on each. It's going to be at least as big a difference as there is between console generations.

-

Crystalizer:

18004660 said:
18002951 said: Would Minecraft even be possible? You would have to use a tessellated cube for every cube in the world.
Well, no. As the article pointed out, modern games already make use of dynamic tessellation for adjusting the level of detail, based on the distance. That should still be the case, here.

That's their example of where it works. When I look at the wireframe, there aren't that many polygons, so tessellating quads and transforming every vertex might not be a big issue. In PC games the situation is very different, and so are players' requirements. You need realism, which means high-res shadows, tessellation maps, cool particles, and superior antialiasing that has to support the latest shading, etc. The number of polygons and vertices gets so high because of the additional passes that warping the pixels in a post-process pass is wiser. What I'm trying to say is that, in my opinion, this is a duct-tape solution for a very specific and primitive case. It's exactly the reason this hasn't been adopted by Unreal. I don't know; maybe they will figure out how to make it work well with billions of polygons. There has to be a threshold where it becomes a problem, and that applies in the other direction too.

Minecraft is on VR now. I can't test it because the crashing issue still exists, but how would this work on it? I would love to know; I think it would be the ultimate test for their solution. I think the best solution for PCs and consoles would be to improve the headsets and their screens, with something like curved or improved screens and maybe improved lenses too. Anyway, warping the image is not the reason why Vive and Oculus recommend a GTX 970. As the article says, it's done by the CPU in an additional pass. Correct me if I'm wrong.

-

bit_user:

18005156 said: What I'm trying to say is that in my opinion this is a duct tape solution for a very specific and primitive case.

Yes. I don't know if you read my whole post, but we definitely agree on this point. I was just saying you wouldn't have to subdivide every cube in the world, just the ones close to the camera.

18005156 said: Something like curved/improved screens and maybe improved lenses too, but anyways warping the image is not the reason why Vive and Oculus have GTX 970 as recommendation. As said in the article it's done by CPU in additional pass. Correct me if I'm wrong.

I'd expect and hope that it'd be a GPU pass. But, as you say, the hardware requirement has to do with a lot more than that.

Curved screens are a good idea, but I think the added cost to the VR HMD might be more than the work it'd save on the GPU. In other words, if it added $100 to the cost of an HMD, you could just as easily spend that $100 on a better GPU, keep doing the warping in software, and probably have performance left over. I don't think it's a very time-consuming task for higher-end GPUs. It probably simplifies time warping, too.

-

ammaross:

bit_user said: Curved screens are a good idea, but I think the added cost to the VR HMD might be more than the work it'd save, on the GPU. In other words, if it added $100 to the cost of an HMD, you could as easily spend that $100 on a better GPU, continue to do the warping in software, and probably have additional performance left over. I think it's not a very time-consuming task, for higher-end GPUs. It probably simplifies time warping, too.

As the SMP (Simultaneous Multi-Projection) feature of the GTX 1080/1070 has shown, it's the brute-force methods of current VR that demand the GPU horsepower. A few more innovative corner-cutting ideas and VR will keep improving without much need for stronger hardware. Don't, however, expect Battlefield 1 to be playable on an Intel iGPU or even an R7 370.