What Is 10-Bit (And 12-Bit) Color?

So, 10-bit color: It's important and new, but...what is it? Before we dive into that, the first question is what is bit depth, and why does it matter for displays?

What Is Bit Depth?

In computer programming, variables are stored in formats with differing numbers of bits (i.e., ones and zeros), depending on how many values the variable needs to represent. Each bit you add doubles the number of distinct values you can store. One bit gives you two values (0 and 1), two bits give you four, three bits give you eight, and so on. In general, n bits give you 2^n values.
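The doubling rule can be checked with a few lines of Python. This is only an illustrative sketch; the function names `distinct_values` and `max_unsigned` are made up for this example.

```python
def distinct_values(bits: int) -> int:
    """Number of distinct values that `bits` bits can represent."""
    return 2 ** bits


def max_unsigned(bits: int) -> int:
    """Largest value an unsigned field of `bits` bits can hold (counting from 0)."""
    return distinct_values(bits) - 1


# Each extra bit doubles the number of values available.
for bits in (1, 2, 3, 6, 8, 10, 12):
    print(f"{bits:>2} bits: {distinct_values(bits):>5} values, range 0..{max_unsigned(bits)}")
```

Note that the largest storable number is one less than the number of values, because counting starts at zero.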

The other thing to note here is that in general, the fewer bits, the better. Fewer bits mean less information, so whether you’re transmitting data over the internet or throwing it at your computer’s processing capabilities, you get whatever it is you want faster.

However, you need enough bits to actually count up to the highest (or down to the lowest) number you want to reach. Going past your limit in either direction (overflow or underflow) is one of the most common software errors. It’s the type of bug that, according to a widely repeated story, caused Gandhi to become a warmongering, nuke-throwing tyrant in Civilization. After his “war” rating tried to go negative, it wrapped around to the maximum possible setting. So it’s a balancing act for the number of bits you need; the fewer bits you use, the better, but you should never use fewer than what’s required.
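Here is a minimal sketch of that kind of wraparound, assuming an unsigned 8-bit field. Python integers do not overflow on their own, so the hypothetical helper `wrap_unsigned` simulates a fixed-width field with a modulo. The starting rating of 1 and the reduction of 2 are illustrative numbers, not taken from any game's code.

```python
def wrap_unsigned(value: int, bits: int = 8) -> int:
    """Simulate storing `value` in an unsigned field that is `bits` wide."""
    return value % (1 << bits)


# Overflow: one step past the maximum wraps around to zero.
print(wrap_unsigned(255 + 1))  # 0

# Underflow: a hypothetical rating of 1, reduced by 2, wraps to the maximum.
print(wrap_unsigned(1 - 2))    # 255
```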

How Bit Depth Works With Displays

With the image you’re seeing right now, your device is transmitting three different sets of bits per pixel, separated into red, green, and blue colors. The bit depth of these three channels determines how many shades of red, green, and blue your display is receiving, thus limiting how many it can output.
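To make the numbers concrete, the sketch below counts the shades per channel and the total colors for a few common bit depths, assuming three channels (red, green, blue) of equal depth. The function names are illustrative.

```python
def shades_per_channel(bits_per_channel: int) -> int:
    """Number of distinct shades one color channel can carry."""
    return 2 ** bits_per_channel


def total_colors(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for `channels` channels of equal depth."""
    return shades_per_channel(bits_per_channel) ** channels


for bits in (6, 8, 10, 12):
    print(f"{bits:>2} bits/channel: {shades_per_channel(bits):>4} shades, "
          f"{total_colors(bits):,} colors")
```

Eight bits per channel gives the familiar 16.7 million colors, and 10 bits per channel gives about 1.07 billion.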

The lowest-end displays (which are uncommon now) have only six bits per color channel. The sRGB standard calls for eight bits per color channel to avoid banding. In order to match that standard, those old six-bit panels use Frame Rate Control (FRC), which rapidly alternates a pixel between two adjacent shades so that the average over time approximates the shade in between. This, hopefully, hides the banding.
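The idea behind dithering over time can be sketched as follows. This is a deliberately simplified model, not how any particular panel implements FRC: it assumes a fixed four-frame cycle and ignores the spatial patterns real panels add. The names `frc_frames` and `perceived_8bit` are hypothetical.

```python
CYCLE = 4  # 8 - 6 = 2 missing bits, so 2**2 = 4 frames per cycle


def frc_frames(target_8bit: int) -> list[int]:
    """Return the 6-bit levels shown over one cycle to approximate an 8-bit value."""
    if not 0 <= target_8bit <= 255:
        raise ValueError("target must be an 8-bit value (0..255)")
    base, remainder = divmod(target_8bit, CYCLE)
    frames = []
    for i in range(CYCLE):
        # Show the next-brighter level in `remainder` of the frames.
        level = base + 1 if i < remainder else base
        frames.append(min(level, 63))  # a 6-bit panel tops out at level 63
    return frames


def perceived_8bit(frames: list[int]) -> float:
    """Time-averaged brightness, expressed on the 8-bit scale."""
    return sum(level * CYCLE for level in frames) / len(frames)


frames = frc_frames(130)
print(frames, perceived_8bit(frames))  # [33, 33, 32, 32] 130.0
```

In this simplified model, the top few 8-bit values (253 to 255) cannot be reached, because the panel has no level above 63 to alternate with.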

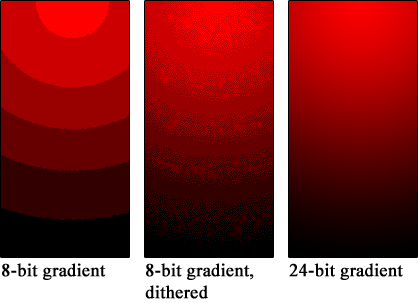

And what is banding? Banding is a sudden, unwanted jump in color and/or brightness where none is requested. The higher you can count, in this case for outputting shades of red, green, and blue, the more colors you have to choose from, and the less banding you’ll see. Dithering, on the other hand, doesn’t add those in-between colors. Instead, it tries to hide banding by noisily transitioning from one color to another. It’s not as good as a true higher bit depth, but it’s better than nothing.
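Both effects can be reproduced on a simple brightness ramp. The sketch below uses an artificially low bit depth (3 bits) so the bands are obvious, and a basic random dither as a stand-in for whatever scheme a real display or encoder would use. The function names are illustrative.

```python
import random


def quantize(x: float, bits: int) -> float:
    """Round x in [0, 1] to the nearest of 2**bits evenly spaced levels."""
    top = 2 ** bits - 1
    return round(x * top) / top


def dither_quantize(x: float, bits: int, rng: random.Random) -> float:
    """Randomly pick the level below or above x, weighted by how close x is to each."""
    top = 2 ** bits - 1
    scaled = x * top
    low = int(scaled)        # level just below (x is non-negative)
    frac = scaled - low      # how far x sits toward the next level
    level = low + (1 if rng.random() < frac else 0)
    return min(level, top) / top


# Banding: a smooth 1000-step ramp collapses into just 8 flat bands at 3 bits.
ramp = [i / 999 for i in range(1000)]
banded = [quantize(x, 3) for x in ramp]
print(len(set(banded)), "distinct levels")

# Dithering: no new levels exist, but the noisy mix averages out to the target.
rng = random.Random(0)
samples = [dither_quantize(0.5, 3, rng) for _ in range(10_000)]
print(sum(samples) / len(samples))  # close to 0.5
```

The dithered output still contains only the eight available levels; it is the average over neighboring pixels (or frames) that lands near the requested shade.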


With today’s HDR displays, you’re asking for many more colors and a much higher range of brightness to be fed to your display. This, in turn, means more bits of information are needed to store all the colors and brightness in between without incurring banding. The question, then, is how many bits do you need for HDR?

Currently, the most commonly used answer comes from the Barten Threshold, proposed in this paper, for how well humans perceive contrast in luminance. After looking it over, Dolby (which developed the curve that maps bit values to luminance in the HDR standards used by Dolby Vision and HDR10) concluded that 10 bits would have a little bit of noticeable banding, whereas 12 bits wouldn’t have any at all.
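To get a feel for why the extra two bits matter, the sketch below compares the brightness jump between two adjacent code values at 10 and 12 bits. It assumes the curve in question is the perceptual quantizer (PQ) defined in SMPTE ST 2084, which the article does not name, and it uses full-range code values for simplicity, whereas real video signals often use a narrower range. The function `relative_step` is a hypothetical helper.

```python
# PQ (SMPTE ST 2084) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """Convert a normalized PQ signal in [0, 1] to luminance in nits."""
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def relative_step(code: int, bits: int) -> float:
    """Relative luminance jump from `code` to `code + 1` (full-range assumption)."""
    top = 2 ** bits - 1
    low = pq_eotf(code / top)
    high = pq_eotf((code + 1) / top)
    return (high - low) / low


# Compare the jump near the middle of the signal range at each bit depth.
step_10 = relative_step(511, 10)
step_12 = relative_step(2047, 12)
print(f"10-bit step: {step_10:.4%}")
print(f"12-bit step: {step_12:.4%}")
print(f"ratio: {step_10 / step_12:.2f}")
```

With two extra bits, each step is roughly a quarter of the size, which is what pushes the jumps below the visibility threshold in Dolby's analysis.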

This is why HDR10 (and HDR10+, and any others that come after) has 10 bits per color channel, making the tradeoff between a little banding and faster transmission. The Dolby Vision standard uses 12 bits per color channel, which is designed to ensure the maximum pixel quality even if it uses more bits. This covers the expanded range of luminance (that is, brightness) that HDR can cover, but what about color?

How many bits are needed to cover a “color gamut” (the range of colors a standard can produce) without banding is harder to define. The scientific reasons are numerous, but they all come back to the fact that it’s difficult to accurately measure just how the human eye sees color.

One problem is that the way human eyes respond to colors seems to change depending on what kind of test you apply. Human color vision depends on “opsins,” the light-sensitive proteins that act as the eye's filters for red, green, and blue, respectively. Another problem is that different people have somewhat different opsins, meaning people may see the same shade of color differently from one another depending on genetics.

We can make some educated guesses, though. First, based on observations, eight-bit color done in the non-HDR “sRGB” standard and color gamut can almost, but not quite, cover enough colors to avoid banding. If you look closely at a color gradient, assuming you have an eight-bit screen, there’s a decent chance you’ll notice a bit of banding there. Generally, though, it’s good enough that you won’t see it unless you’re really looking for it.

HDR standards have to cover a huge range of brightness and either the P3 color gamut, which is wider than sRGB, or the even wider BT2020 color gamut. We covered how many bits you need for luminance already, but how many bits do you need for a wider gamut? Well, the P3 gamut contains less than double the number of colors in the sRGB gamut, meaning that nominally you need less than one extra bit to cover it without banding. However, the BT2020 gamut is a little more than double the sRGB gamut, meaning you need more than one extra bit to cover it without banding.
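The rule of thumb in that paragraph is that doubling the number of colors costs one extra bit, so the extra bits needed are the base-2 logarithm of the size ratio. The sketch below applies it; the ratios 1.5 and 2.1 are placeholders chosen only to represent "less than double" and "a little more than double," not measured gamut sizes.

```python
import math


def extra_bits(size_ratio: float) -> float:
    """Extra bits needed when a gamut holds `size_ratio` times as many colors."""
    return math.log2(size_ratio)


# Placeholder ratios relative to sRGB (assumptions for illustration only).
examples = {
    "exactly double": 2.0,
    "less than double (P3-like)": 1.5,
    "a little more than double (BT2020-like)": 2.1,
}

for label, ratio in examples.items():
    print(f"{label}: {extra_bits(ratio):.2f} extra bits")
```

Because bits only come in whole numbers, anything above one extra bit in practice means allocating two.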

What this means is that the HDR10 standard, and 10-bit color in general, do not have enough bit depth to cover both the full HDR luminance range and an expanded color gamut at the same time without banding. Remember, 10-bit color doesn’t quite cover the higher range of brightness by itself, let alone more colors as well.

This is part of the reason why HDR10 and other 10-bit content (the HLG standard also uses 10 bits) is typically mastered for a peak of around 1,000 nits, rather than the 10,000 nits that Dolby Vision allows for. Without pushing the brightness range a lot, you can keep apparent banding to a minimum. In fact, with today’s panels’ limited brightness and color range, which leads to limited brightness and color content, very few people can notice the difference between 12-bit and 10-bit signals.

So what does all this look like in actual hardware and content that you might use?

Putting It Into Practice

First, should you worry about the more limited color and brightness range of HDR10 and 10-bit color? The answer right now is no, don't worry too much about it. Because a lot of content is mastered and transmitted in 10-bit color, 12-bit color hardware isn't going to do much for you today anyway.

The second thing is how to ensure you're getting 10-bit color on your monitor. Fortunately, this will almost always be listed in a device's tech specs, but beware of any HDR display that doesn't list it. The display needs to accept a 10-bit input signal, but the panel itself is a different story: it can be a true 10-bit panel or an eight-bit panel with FRC.

The other trick display manufacturers use involves lookup tables. Not all scenes use all of the colors and brightness levels available to a standard; in fact, most don't. Lookup tables take advantage of this by remapping the bits you have available onto a more limited set of colors and brightness levels. This limits the number of bits needed to produce a scene without banding, and it can significantly reduce banding in 95% or more of scenes. We should note, though, that currently this is found exclusively in high-end reference monitors like those from Eizo. That's also the only place this trick is needed, because once content has been transferred from a camera (or what have you) to a device on which you'd watch it, today's HDR signals already come with a similar trick: metadata that tells the display the range of brightness it's supposed to show at any given time.

The third and final piece is when to worry about 12-bit color. When the BT2020 color gamut is usable on devices like monitors, TVs, and phones, and those devices are able to reach a much higher brightness, that's when you can think about 12 bits. Once the industry gets to that point, 10-bit color isn't going to be enough to display that level of HDR without banding. But we aren't there yet.


abtocool I find this article of poor quality: not clear enough, and too many words were used to express few things.

bit_user The point about underflow is an unhelpful digression. If we're talking about unsigned, unbiased integers, then no amount of bits will avoid that problem. An example using overflow would've been slightly more relevant.

Why not just use the stair step analogy? It's simple and easy to understand.

The example that uses 8-bit indexed color is misleading. Most people don't know about indexed color and would confuse 8 bits per pixel with 8 bits per channel (i.e. the 24-bit example).

cia1413 I don't think you are understanding the use of a look up table, LUT. A LUT is used to correct the color of an image. The reason you use a LUT on an Eizo is because you can easily add color correction, used a lot of the time to just change the feel of an image, and quickly apply it to the output of the monitor. It's not used as a "trick" to make the image look better, just a tool to change it.

abtocool Hey, isn't the LUT table basically a database of colors (running into billions) and the whole idea is that the monitor processor doesn't need to process which color to produce each time, and just look it up (recall) from the LUT table?

A 14bit LUT (Benq/Viewsonic) table generates 4.39 trillion colors, which are then used to cherry pick 1.07 billion colors (10bit), and produce appropriate colors.

koga73 Another benefit to 8-bits per channel is it fits nicely into a 32-bit value. ARGB = 8-bits per channel x 4 channels (A is Alpha for transparency). 10 or 12-bit color values wouldn't work with 32-bit applications. Assuming your applications are 64-bit you could go up to 16-bits per channel (16 x 4 = 64).

cia1413 (replying to abtocool): No, it's a way to modify colors to display them in a new way. You make a LUT to correct for an error in a display's light curve or to add a "look," like making it blue and darker to emulate night time.

bit_user

koga73 said: "Another benefit to 8-bits per channel is it fits nicely into a 32-bit value."

That's a separate matter from what the display actually supports and how the data is transmitted to it.

koga73 said: "10 or 12-bit color values wouldn't work with 32-bit applications."

Of course it can work. It just doesn't pack as densely or easily as 8 bits per channel.

Moreover, 3D rendering typically uses floating-point formats to represent colors. It's only at the end that you'd map it to the gamut and luminance range supported by the display.
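As an illustration of the packing point in this exchange, here is a minimal sketch that fits three 10-bit channels plus a 2-bit alpha into a single 32-bit value. The bit layout (red in the lowest bits, alpha in the top two) is an assumption made for this example; real graphics APIs define their own 10-10-10-2 orderings. The function names are hypothetical.

```python
def pack_rgb10a2(r: int, g: int, b: int, a: int = 3) -> int:
    """Pack 10-bit R, G, B and 2-bit A into one 32-bit integer."""
    for name, value, limit in (("r", r, 1023), ("g", g, 1023),
                               ("b", b, 1023), ("a", a, 3)):
        if not 0 <= value <= limit:
            raise ValueError(f"{name} must be in 0..{limit}")
    # Assumed layout: bits 0-9 red, 10-19 green, 20-29 blue, 30-31 alpha.
    return (a << 30) | (b << 20) | (g << 10) | r


def unpack_rgb10a2(packed: int) -> tuple[int, int, int, int]:
    """Recover (r, g, b, a) from a value produced by pack_rgb10a2."""
    r = packed & 0x3FF
    g = (packed >> 10) & 0x3FF
    b = (packed >> 20) & 0x3FF
    a = (packed >> 30) & 0x3
    return r, g, b, a


packed = pack_rgb10a2(1023, 512, 0, a=3)
print(hex(packed), unpack_rgb10a2(packed))
```

The cost of the denser color channels is visible in the alpha field, which shrinks from eight bits to two.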

TripleHeinz (replying to bit_user): I was about to answer something similar but you nailed it first and perfectly.

I understand that all this is about the convenient packiness of a color in a 32bit value.

D3D9 was able to work extensively with 32bit unsigned integer formats for color representation. But current APIs use normalized (between 0 and 1) 32bit floating point units to represent a color, then internally the GPU uses the floating point data to convert and pack to the output format. I think this is an awesome way to do it and virtually compatible with any format.

A 32bit application can perfectly work and represent a 10 and 12 bit color value. A 32bit system can perfectly work with 64 bit values. Even more, in the case of the x86 architecture, it supports an 80bit extended precision floating point unit in hardware.

TripleHeinz There is this option in the nvidia control panel to output 8bpc or 12bpc to the display. The 12bpc option is available when using a fairly new panel but it is not when using an old one. Does it mean that my monitor has a panel that supports 12 bit colors or is it something else?

I honestly doubt that my panel is a super duper 12bit one. It came from a questionable OEM and brand, but color and contrast have always looked really good though.

bit_user

TripleHeinz said: "Even more, in the case of the x86 architecture, it supports an 80bit extended precision floating point unit in hardware."

Ah, sad but true. Support for this exists in the x87 FPU, but not in SSE or AVX. I thought I ran across 128-bit float or int support, in some iteration of SSE or maybe AVX, but I'm not finding it.

The other thing that x87 had was hardware support for denormals. If you enable denormal support in SSE (and presumably AVX), it uses software emulation and is vastly slower. Also, x87 had instructions for transcendental and power functions.

Lastly, sort of one cool thing about x87 is that it's stack based - like a RPN calculator. I think that's one of the reasons it got pushed aside by SSE - that it's probably not good for pipelined, superscalar, out-of-order execution. But, still kinda cool.

If you're fond of over-designed, antique machinery, the nearly 4 decade-old 8087 is probably a good example.

https://en.wikipedia.org/wiki/Intel_8087