The History of DOE Nuclear Supercomputers: From MANIAC to AMD's EPYC Milan

Big Blue's Blue Gene/L

IBM designed the Blue Gene/L to study protein folding and gene development. It pushed out a sustained 478.2 teraFLOPS of computing power, thus earning the top spot on the TOP500 list from November 2004 to June 2008.

The supercomputer featured compute cards with a single ASIC and DRAM memory chips affixed. Each ASIC housed two PowerPC 440 embedded processors that supplied up to 5.6 gigaFLOPS of performance per compute card. IBM's decision to use relatively slow embedded processors was born of a desire to rein in power consumption while exploiting the benefits of a massively parallel design.

Above you can see 16 of these compute cards slotted into a single node. The two additional cards handle I/O operations. This dense design allowed for up to 1,024 compute nodes to fit into a single 19-inch server rack. Blue Gene/L could scale up to 65,536 total nodes.

Cray's Red Storm

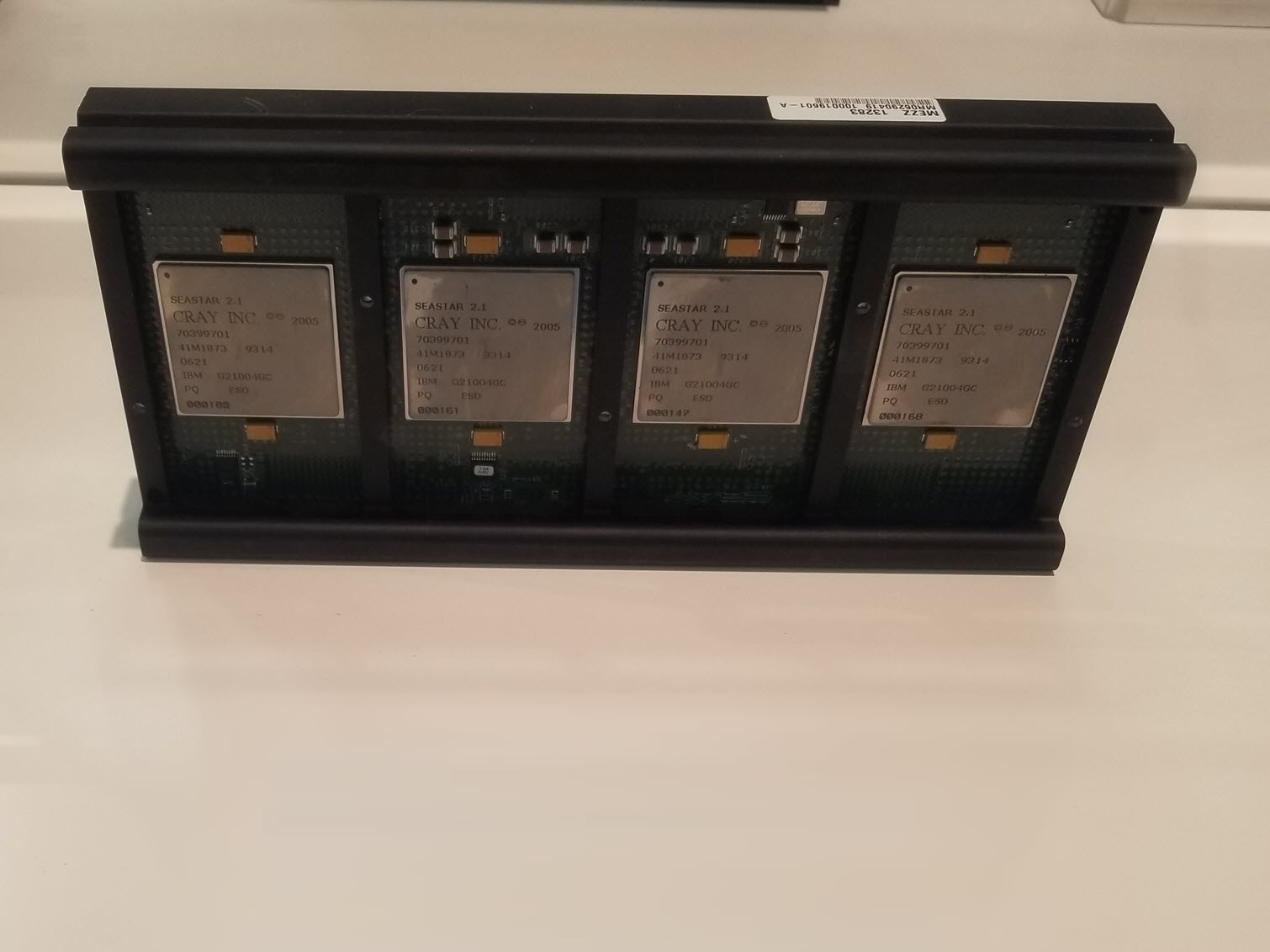

Popular supercomputer-builder Cray developed "Red Storm" in 2005. Modern supercomputing is all about data movement, and this design is most notable for its 3D mesh networking topology and its custom SeaStar chips that combined a router and a NIC onto the same silicon. The design used 10,880 off-the-shelf AMD Opteron single-core CPUs clocked at 2.0 GHz.

The original 140-cabinet system consumed 3,000 square feet of floor space and peaked at 36.19 teraFLOPS. As time progressed, Red Storm scaled up via faster 2.4 GHz dual-core AMD Opterons and scaled out with another row of cabinets, thus totaling 26,000 processing cores in a single machine. That yielded a peak of 101.4 teraFLOPS of performance, but a subsequent upgrade to quad-core Opterons and 2 GB of memory per core brought peak performance up to 204.2 teraFLOPS.

The Red Storm (Cray XT3) occupied the Top 10 of the TOP500 list from 2006 to 2008.

The Hopper

Cray's XE6 "Hopper" came with 12,768 AMD Magny-Cours chips. Each of those chips came with 12 cores, making this a 153,216-core supercomputer. Hopper was the first petaFLOP supercomputer in the DOE Office of Science's arsenal.

The system had 6,384 of the nodes pictured above, with each node featuring two of the AMD Magny-Cours processors and 64 GB of DDR3 SDRAM memory. Up to 3,072 cores fit into a single cabinet. Unlike the Red Storm, this machine moved from the SeaStar networking chips to new Gemini router ASICs.
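As a quick sanity check, the node, chip, and core counts quoted for Hopper can be multiplied out. This is only a minimal sketch: the constant and function names are illustrative, and the inputs are the figures from the text.

```python
# Figures quoted in the article for Cray's XE6 "Hopper".
HOPPER_NODES = 6_384
CHIPS_PER_NODE = 2    # two Magny-Cours processors per node
CORES_PER_CHIP = 12   # 12 cores per Magny-Cours chip


def hopper_totals(nodes=HOPPER_NODES,
                  chips_per_node=CHIPS_PER_NODE,
                  cores_per_chip=CORES_PER_CHIP):
    """Return (total_chips, total_cores) for the given configuration."""
    total_chips = nodes * chips_per_node
    total_cores = total_chips * cores_per_chip
    return total_chips, total_cores


hopper_chips, hopper_cores = hopper_totals()
print(f"{hopper_chips:,} chips, {hopper_cores:,} cores")
```

Two chips on each of the 6,384 nodes gives 12,768 chips, which matches the chip count cited for the machine.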

Hopper was listed in fifth place in the November 2010 TOP500 list with a peak speed of 1.05 petaFLOPS.

Blue Gene/Q Sequoia

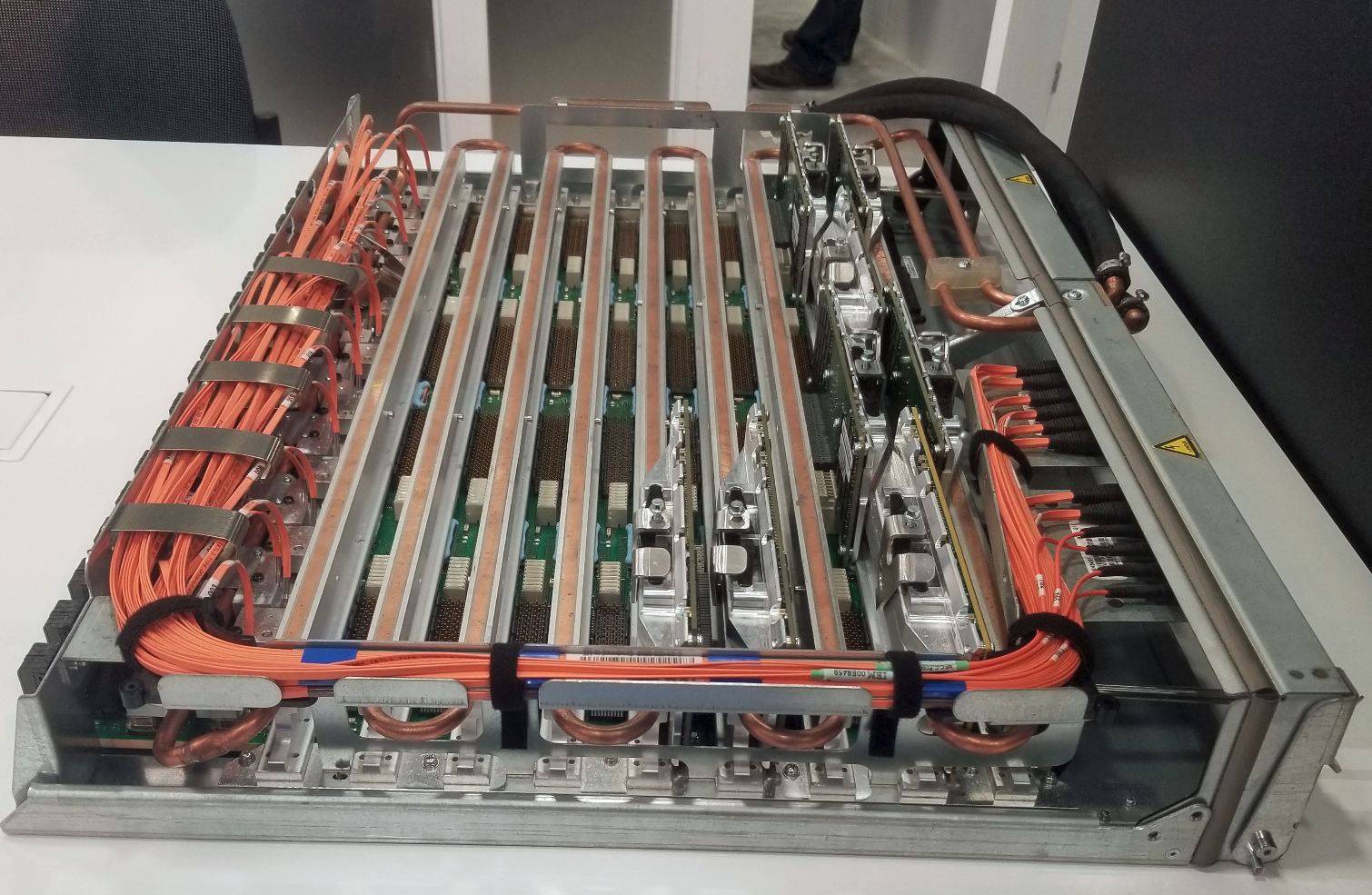

IBM crams 1,572,864 cores into its 96-rack Blue Gene/Q "Sequoia" system. This system has 1.6 petabytes of memory spread across 98,304 compute nodes. The system uses IBM's 16-core A2 processors that run at 1.6 GHz. Each A2 core runs four threads simultaneously, meaning this is an SMT4 processor.
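The Sequoia figures can be cross-checked with a few lines of arithmetic. The sketch below assumes one 16-core A2 processor per compute node and decimal units (1 PB = 10^15 bytes). Both are assumptions made for illustration, and the names are hypothetical.

```python
# Figures quoted in the article for Blue Gene/Q "Sequoia".
SEQUOIA_NODES = 98_304
CORES_PER_NODE = 16        # assumption: one 16-core A2 processor per node
THREADS_PER_CORE = 4       # SMT4
TOTAL_MEMORY_PB = 1.6      # decimal petabytes (assumption)


def sequoia_summary():
    """Derive core, thread, and per-node memory figures from the quoted totals."""
    cores = SEQUOIA_NODES * CORES_PER_NODE
    threads = cores * THREADS_PER_CORE
    # Convert PB -> GB (decimal) and spread evenly across the nodes.
    memory_per_node_gb = TOTAL_MEMORY_PB * 1_000_000 / SEQUOIA_NODES
    return {
        "cores": cores,
        "hardware_threads": threads,
        "memory_per_node_gb": memory_per_node_gb,
    }


sequoia = sequoia_summary()
for key, value in sequoia.items():
    print(key, value)
```

Under those assumptions, the node count reproduces the quoted core total, and the memory works out to roughly 16 GB per node.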

As we can see from the copper tubing snaking throughout the ASC Sequoia node, this system relies heavily upon water cooling. The Blue Gene/Q spent time on the Green500 list, meaning it was one of the most power-efficient supercomputers at the time. Even though the system is orders of magnitude faster than the Blue Gene/L we covered on a preceding slide, it is 17 times more power efficient.

In 2013, the system set a record of 504 billion events processed per second, easily beating the earlier record of 12.2 billion events per second. To put that in perspective, the Lawrence Livermore National Laboratory, which houses Sequoia, equates one hour of the supercomputer’s performance to all 6.7 billion people on earth using calculators and working 24 hours per day, 365 days per year, for 320 years.

Blue Gene/Q took the crown as the fastest supercomputer in the world in 2012 with 17.17 petaFLOPS of performance.

Reaching the Summit - The World's Fastest Supercomputer

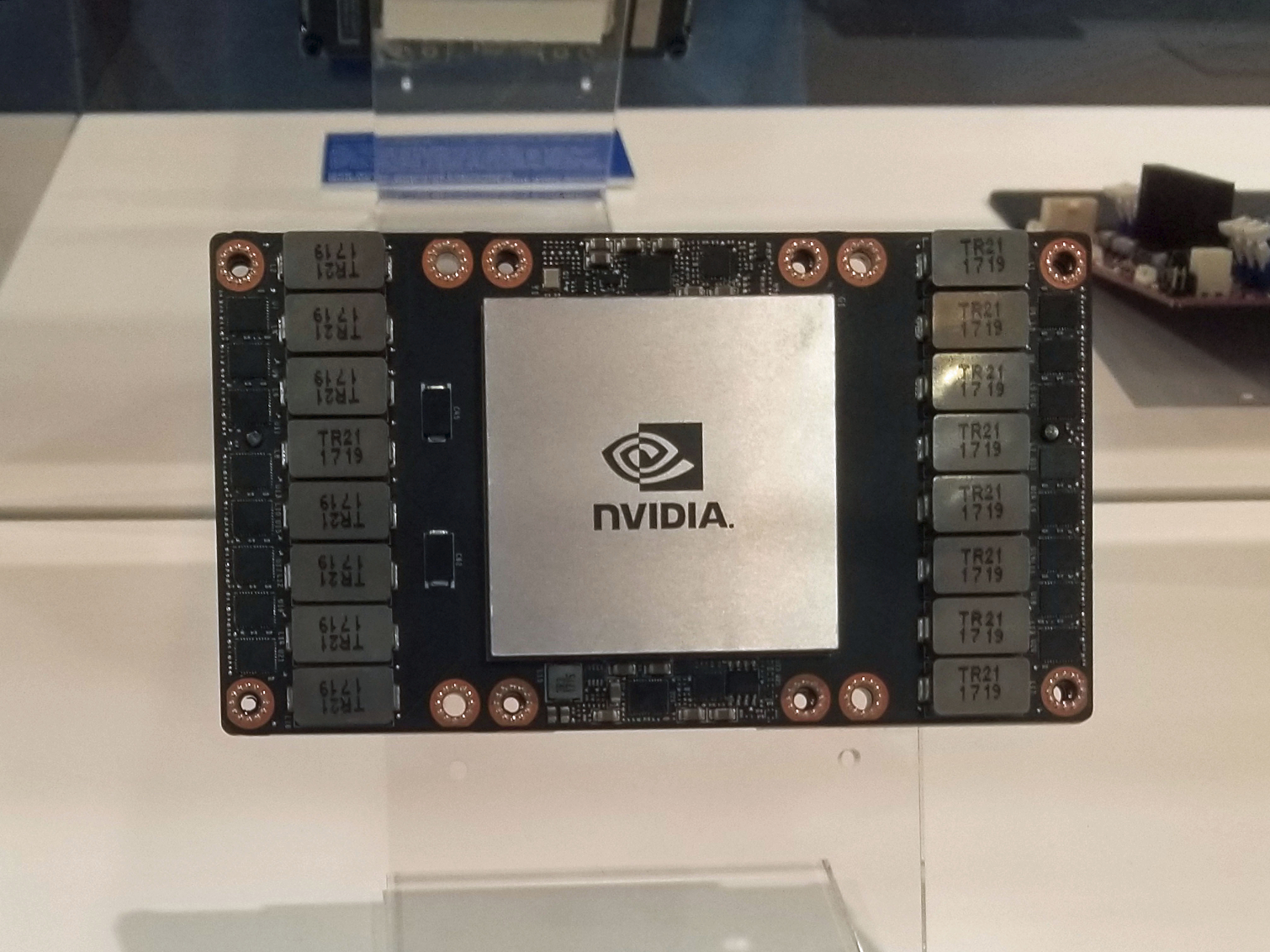

The age of accelerators is upon us. Or, as we call them, GPUs. Most of the new compute power added to today's supercomputers comes from GPUs, while the CPUs have now fallen to more of a host role.

Here we see an Nvidia Volta GV100 GPU that's used in the Summit supercomputer at Oak Ridge. This 4,600-node supercomputer helped the United States retake the supercomputing lead from China last year, and it is currently the fastest supercomputer in the world. It comes with 2,282,544 compute cores and pushes up to 187,659 teraFLOPS.

We've covered the Summit supercomputer node in a separate deep dive, so we won't go too far into the details here. Just know that it comes equipped with 27,000 Nvidia Volta GPUs and 9,000 IBM Power9 CPUs tied together at the node level via native NVLink connections. It also has other leading-edge tech, like PCIe 4.0 and persistent memory.

Just What is This?

We aren't sure what this is, but it could be a prototype node based on Intel's now-canceled Knights Hill. That 10nm processor was destined to appear in the DOE's Aurora supercomputer, but Intel canceled the product with little fanfare. The DOE later announced that Aurora would feature an "advanced architecture" for machine learning but hasn't provided specifics.

Much like Intel's Xeon Scalable processors, Knights Mill featured an integrated Omni-Path connection that interfaced with cabling attached directly to the chip, hence the need for an open-ended socket like we see on this unnamed node. We inquired with DOE representatives about the mystery node, but to no avail. This could simply be a socket LGA 3647 system built to house Intel's Xeon Scalable processors, which can also sport integrated Omni-Path controllers and six-channel memory, but perhaps it is something more.

Astra - The First Petascale ARM Supercomputer

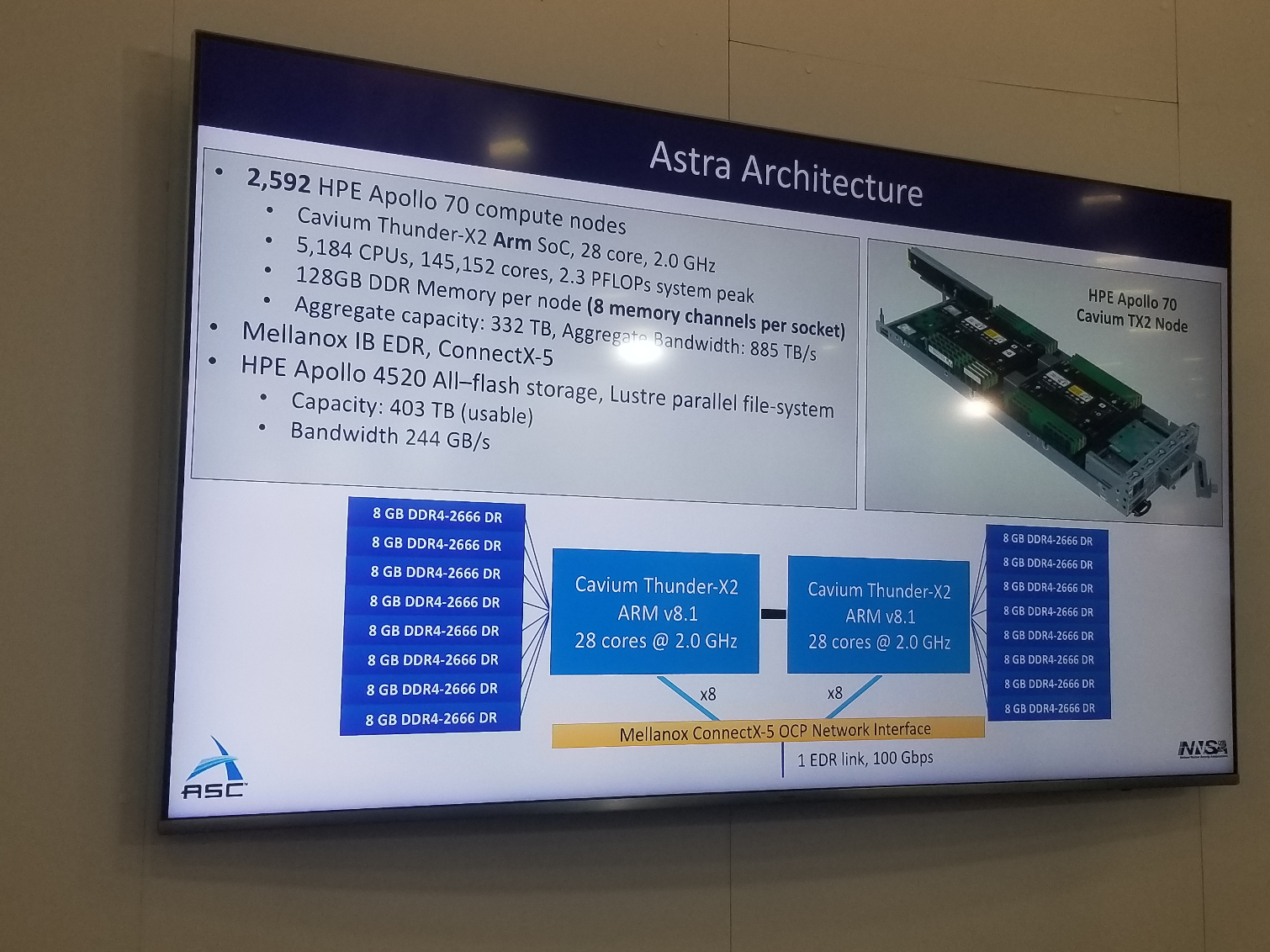

The DOE has almost finished building its new Astra supercomputer, which is the first petascale-class ARM-based supercomputer. This supercomputer features 36 racks of compute that come with 18 quad-node HPE Apollo 70 chassis per rack. That equates to 2,592 compute nodes in total.

Each node has two 28-core Cavium ThunderX2 ARM SoCs running at 2.0 GHz for a total of 5,184 CPUs and 145,152 cores. Each socket supports eight memory channels, for a total of 128 GB of memory per node. That leads to an aggregate capacity of 332 TB and bandwidth of 885 TB/s.
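The Astra totals follow directly from the rack layout, and the multiplication is easy to reproduce. The sketch below uses only the figures quoted above. The names are illustrative, and it assumes decimal units (1 TB = 1,000 GB) for the aggregate memory capacity.

```python
# Figures quoted in the article for Astra.
RACKS = 36
CHASSIS_PER_RACK = 18
NODES_PER_CHASSIS = 4      # "quad-node" HPE Apollo 70 chassis
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 28
MEMORY_PER_NODE_GB = 128


def astra_totals():
    """Multiply the per-rack and per-node figures out to system totals."""
    nodes = RACKS * CHASSIS_PER_RACK * NODES_PER_CHASSIS
    cpus = nodes * SOCKETS_PER_NODE
    cores = cpus * CORES_PER_SOCKET
    memory_tb = nodes * MEMORY_PER_NODE_GB / 1000  # decimal TB (assumption)
    return {"nodes": nodes, "cpus": cpus, "cores": cores, "memory_tb": memory_tb}


astra = astra_totals()
print(astra)
```

With these inputs, the aggregate memory comes out just under 332 TB, which rounds to the capacity cited above.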

The incomplete system has already broken the 1.5 petaFLOPS barrier, and upon completion, it is projected to hit 2.3 petaFLOPS or more. Other notable features include a 244 GB/s all-flash storage system that eliminates the need for costly and complex burst buffers that absorb outgoing data traffic.

As expected from an ARM-based system, low power consumption is a key consideration. The 36 compute racks consume 1.2 MW, but only 12 fan coils are needed to cool them thanks to the system's advanced liquid-based Thermosyphon cooler hybrid system.

AMD's Milan to Power Perlmutter

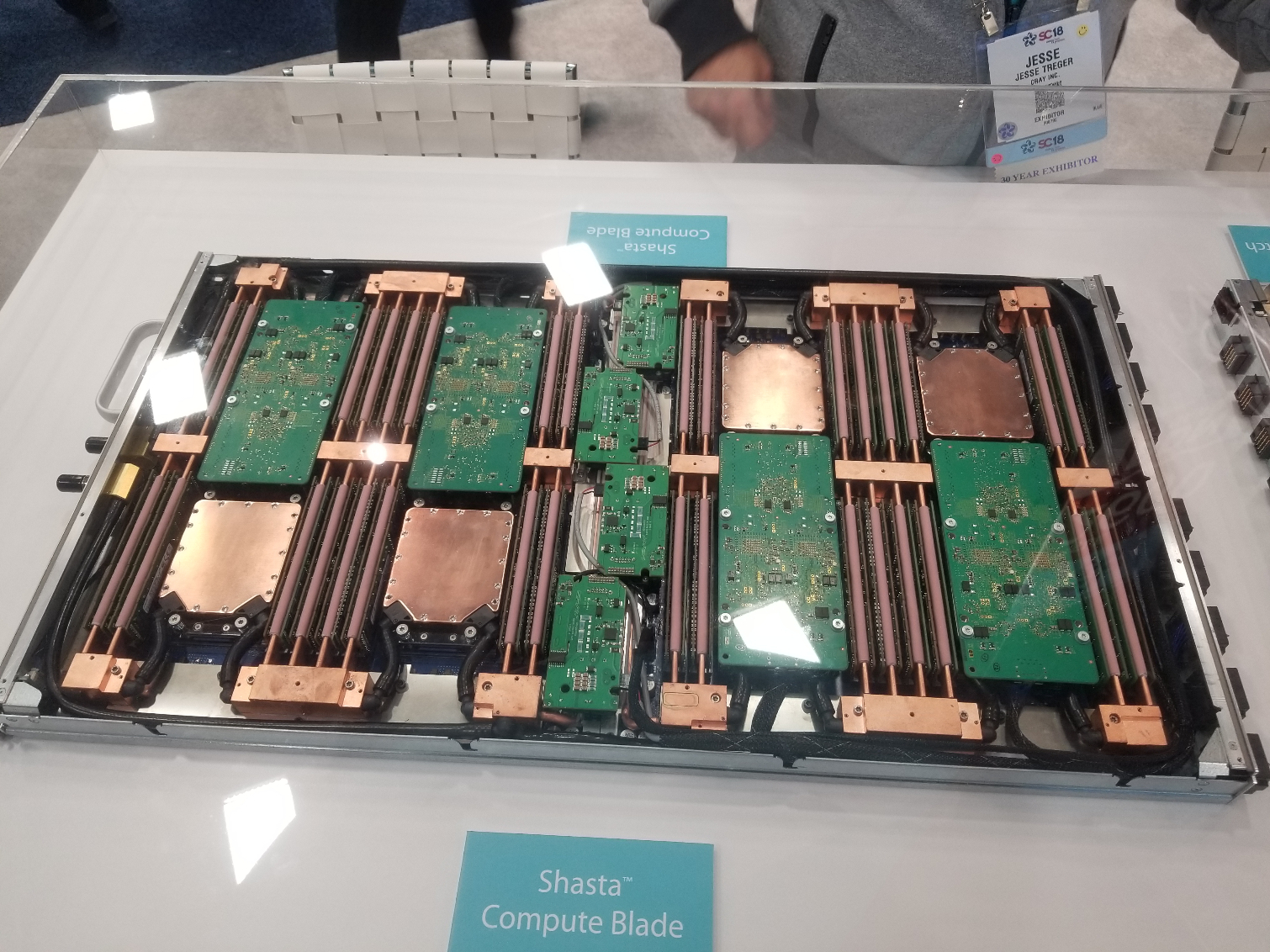

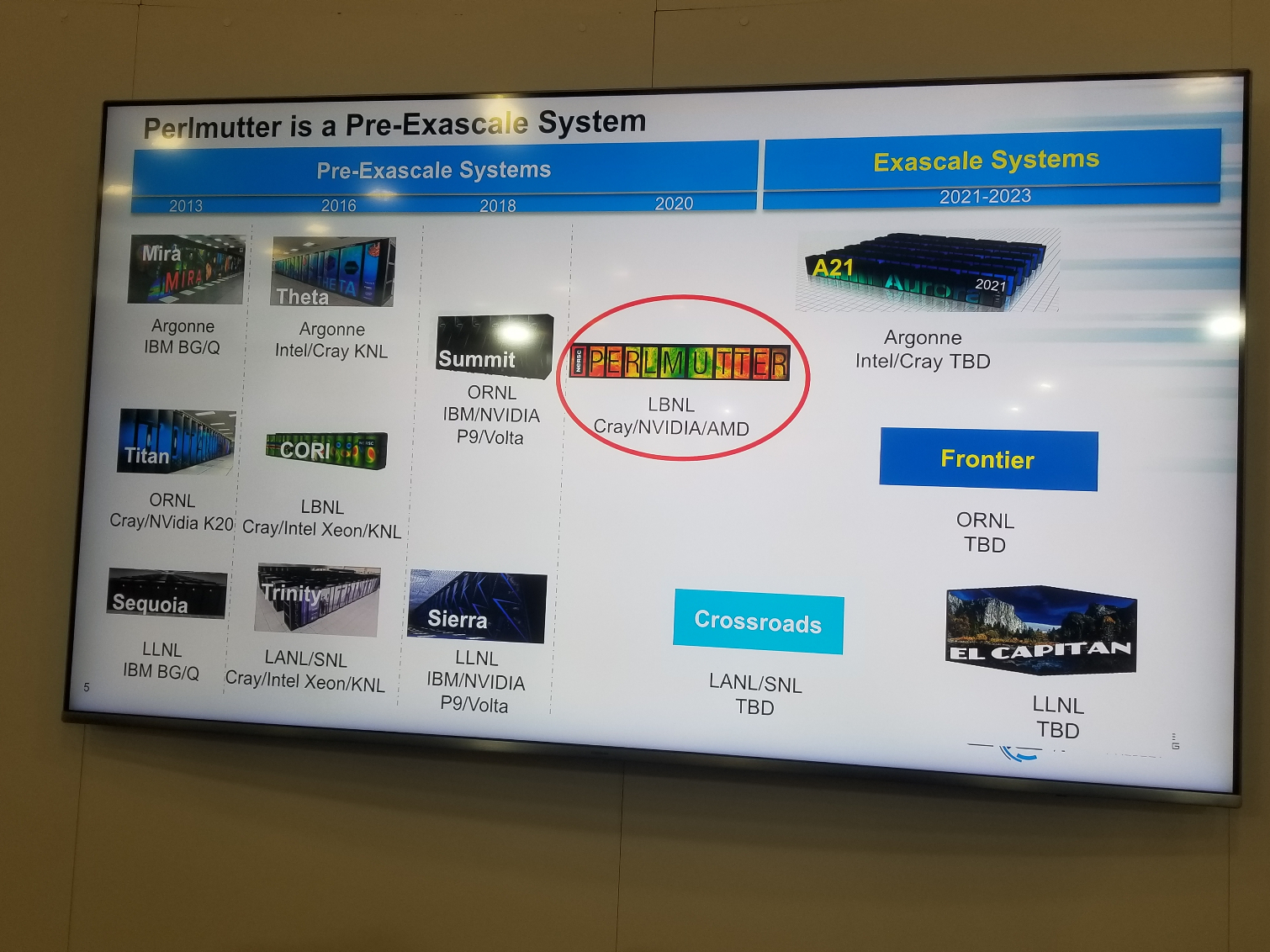

It didn't take long for us to home in on Cray's Shasta supercomputer. This new system comes packing AMD's unreleased EPYC Milan processors. Those are AMD's next-next generation data center processors. The new supercomputer will also use Nvidia's "Volta-Next" GPUs, with the two combining to make an exascale-class machine that will be one of the fastest supercomputers in the world.

The Department of Energy's forthcoming Perlmutter supercomputer will be built with a mixture of both CPU and GPU nodes, with the CPU node pictured here. This watercooled chassis houses eight AMD Milan CPUs. We see four copper waterblocks that cover the Milan processors, while four more processors are mounted inverted on the PCBs between the DIMM slots. This system is designed for the ultimate in performance density, so all the DIMMs are also watercooled.

Intel to Power Aurora, First Exascale Supercomputer

Intel and the U.S. Department of Energy (DOE) announced that Aurora, the world's first supercomputer capable of sustained exascale computing, would be delivered to the Argonne National Laboratory in 2021. Surprisingly, the disclosure includes news that Intel's not-yet-released Xe graphics architecture will be a key component of the new system, along with Intel's Optane Persistent DIMMs and a future generation of Xeon processors.

Intel and partner Cray will build the system, which can perform an unmatched quintillion operations per second (sustained). That's a billion billion operations, or one million times faster than today's high-end desktop PCs. The new system comprises 200 of Cray's Shasta systems and its "Slingshot" networking fabric.

More importantly, the system leverages Intel's Xe graphics architecture. In its announcement, Intel said Xe will be used for compute functions, meaning it will be primarily used for AI computing. Aurora also comes armed with "a future generation" of Intel's Optane DC Persistent Memory using 3D XPoint that can be addressed as either storage or memory. This marks the first known implementation of Intel's new memory in a supercomputer-class system.

The DOE told us that the new system will be "stood up" in early 2021 and will be fully online at exascale compute capacity before the end of that year.

The Future is Exascale

The Department of Energy has a robust pipeline of future supercomputers in the works for several of its sites. Here's a list with links to the next-gen supercomputers.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.