AlphaGo AI Defeats Sedol Again, With 'Near Perfect Game'

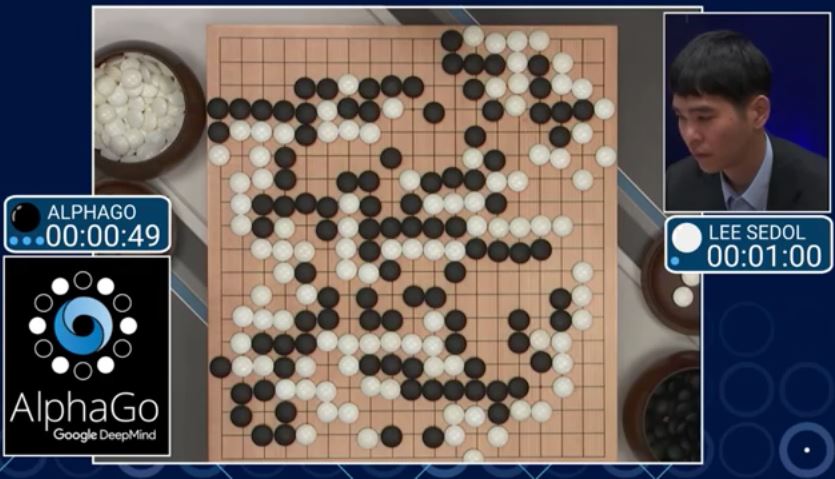

Google DeepMind’s Go-playing AI, AlphaGo, scored another win against Lee Sedol, the 18-time world champion, in the second of five games.

AlphaGo’s Near Perfect Game

After yesterday’s game, Lee Sedol felt that AlphaGo had played poorly in the opening, yet the AI still managed to squeeze out a win toward the end. The second game was Lee Sedol’s chance to exploit AlphaGo’s potential weaknesses now that he had learned a little about its style. To his surprise, however, AlphaGo played a “near perfect game” this time.

“Yesterday, I was surprised, but today I am quite speechless. I would have to say, if you look at the way it was played, I admit that it was a very clear loss on my part. From the very beginning of the game, there was not a moment in time where I thought that I was leading the game,” Lee Sedol said in the post-game conference. “Yesterday, as I was playing the game, I felt that AlphaGo played some problematic positions, but today I really feel that AlphaGo had played a near perfect game. There was not a moment that I thought AlphaGo’s moves were unreasonable,” he added.

Asked at the conference whether he had found any weaknesses in AlphaGo’s play, he said he had lost precisely because he couldn’t find any.

Lee Sedol also expects the remaining games to be even harder, possibly because AlphaGo gets slightly better with every game it plays, so he said he would need to concentrate even more. He would also need to try to gain an edge in the opening, where AlphaGo may be at its weakest, although even its weakest moves may be too strong to exploit easily.

A Stronger Challenger?

Chinese Go grandmaster Ke Jie, whom some consider to have been a stronger player than Lee Sedol over the past few years, said that at this point he would have a 60 percent chance of beating AlphaGo.

Google is unlikely to give everyone who claims they can beat AlphaGo a five-game match against the AI, but it would be interesting to see it face other world-class players. That would have to happen soon, though: even Ke Jie said it may be only a matter of months, or at most years, before he can no longer beat the AI.


Ke Jie became a 9-dan player (the highest professional rank in Go) only last year, and earlier this year he defeated Lee Sedol 3-2.

The next game between AlphaGo and Lee Sedol will be played on March 11 at 11pm ET (March 12, 1pm KST).

Lucian Armasu is a Contributing Writer for Tom's Hardware. You can follow him at @lucian_armasu.


-

hellwig So, where do we go from here? AlphaGo gets so good because it keeps playing the same game with known constraints against opponents working within those same constraints. It can even play itself to get better.

AlphaGo can play Go, but could it play a different game? Chess? Checkers? Settlers of Catan? -

paladinnz

> So, where do we go from here? AlphaGo gets so good because it keeps playing the same game with known constraints against opponents working within those same constraints. It can even play itself to get better.

AlphaGo is just a Go program, but I don't see why they couldn't make a Chess or Checkers program that uses the same DeepMind system.

> AlphaGo can play Go, but could it play a different game? Chess? Checkers? Settlers of Catan?

My guess is they probably wouldn't bother, as the number of permutations for chess and checkers is small enough to brute-force. The unique thing about Go is that the unbelievably massive number of possible moves makes the brute-force approach untenable. -

hellwig

> AlphaGo is just a Go program, but I don't see why they couldn't make a Chess or Checkers program that uses the same DeepMind system.

Reminds me of the concept behind "Virtual Intelligence" in Mass Effect. Intelligences with pre-programmed limitations on knowledge, performance, etc... but which are free to develop within those parameters.

> My guess is they probably wouldn't bother, as the number of permutations for chess and checkers is small enough to brute-force. The unique thing about Go is that the unbelievably massive number of possible moves makes the brute-force approach untenable.

I'm not sure that's a distinction the real world makes when it comes to "AI". But I'm not going to worry about SkyNet until a computer can master both Go AND Go Fish on its own. -

Bogdan Barbu Obviously. It's just machine learning algorithms implemented with neural nets. For a different problem, you'd use a slightly different architecture and a different training set. They merely chose Go because it is an EXPTIME-complete problem with a huge game tree (way bigger than that of chess, which is why it has been problematic). -

stevo777 Fascinating stuff. Even if it is just Go, it is pretty clear that AlphaGo is adjusting to its mistakes and making rapid improvements. With all the neuromorphic chips starting to hit the market, machine learning is improving very quickly. -

Johnpombrio I always have trouble with the middle of the board. AlphaGo just OWNS the middle and starts working it early on. Damn, these are good players! -

Alfred_4

> So, where do we go from here? AlphaGo gets so good because it keeps playing the same game with known constraints against opponents working within those same constraints. It can even play itself to get better.

AlphaGo is a generic AI, which happened to have been trained to play Go. It could just as well have been trained for medical diagnosis, day trading or writing lyrics.

> AlphaGo can play Go, but could it play a different game? Chess? Checkers? Settlers of Catan?

-

lun471k

> So, where do we go from here? AlphaGo gets so good because it keeps playing the same game with known constraints against opponents working within those same constraints. It can even play itself to get better.

> AlphaGo can play Go, but could it play a different game? Chess? Checkers? Settlers of Catan?

The goal of DeepMind isn't to show the versatility of its AI on games that can be brute-forced, but rather to show the potential of the AI in a game or environment where there are simply too many possibilities for the right move to be calculated that way.