AMD Unveils EPYC Server Processor Models And Pricing Guidelines

AMD officially unveiled the breadth of its new EPYC server processor line, as well as rough pricing guidelines, at its Tech Day in Austin, Texas. AMD has enjoyed significant success with its new Zen architecture, which serves as the foundation of its Ryzen lineup of desktop host processors, and due to the modularity of the design, the company will employ the same building blocks in its forthcoming EPYC server lineup.

Intel has a commanding lead in the data center--some estimate its market share as high as 99.6% of the world's server sockets--so the industry is pining for a competitive x86 alternative. The high-margin data center market represents a tremendous growth opportunity for AMD as it returns to a competitive stance. The same disruptive pricing model we've seen on the desktop carries over to the server segment.

Two Socket Platform

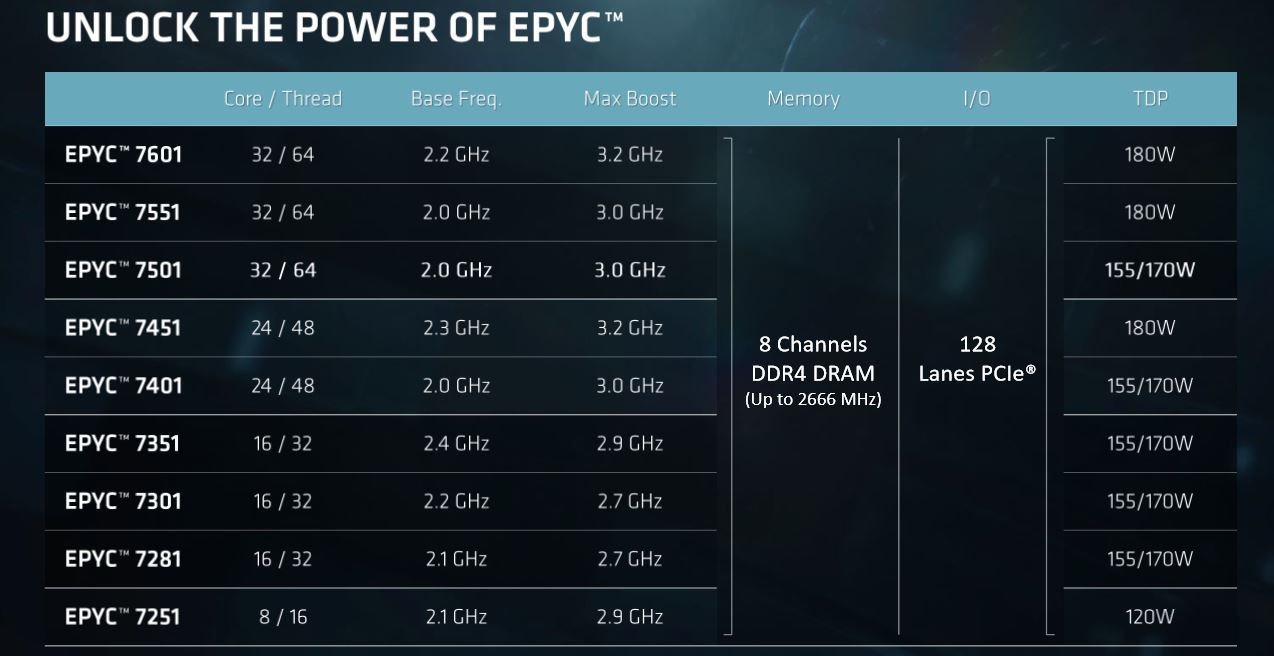

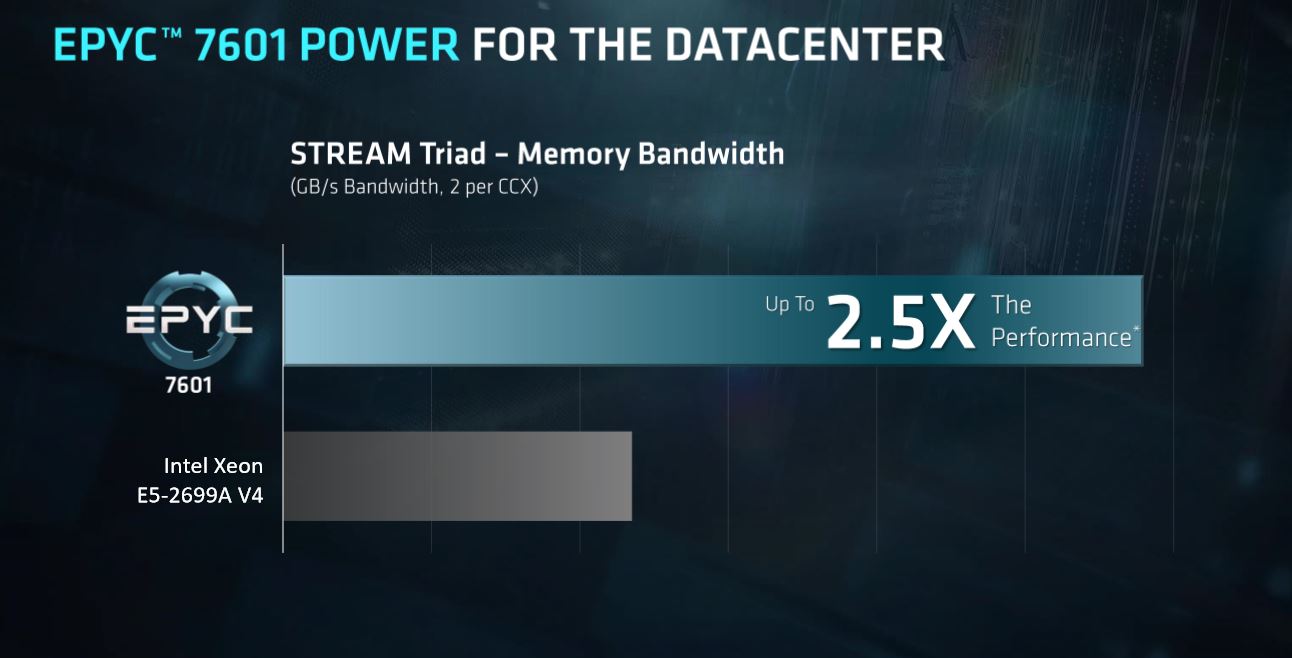

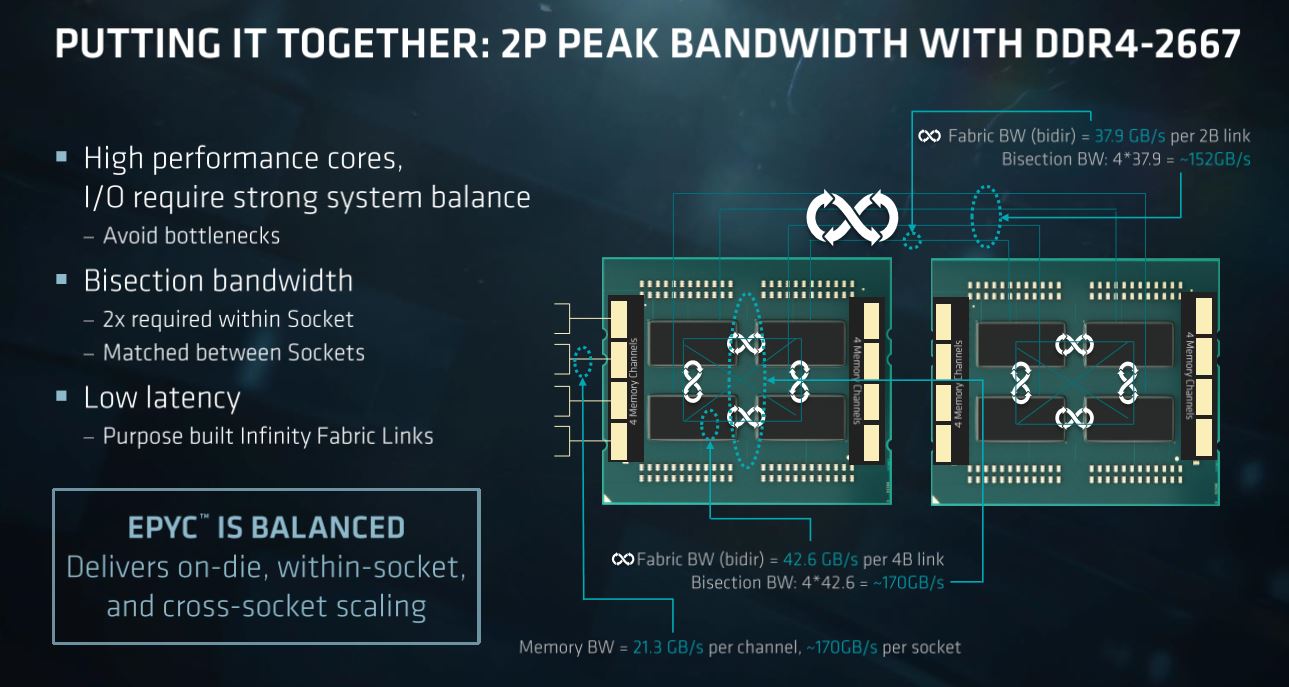

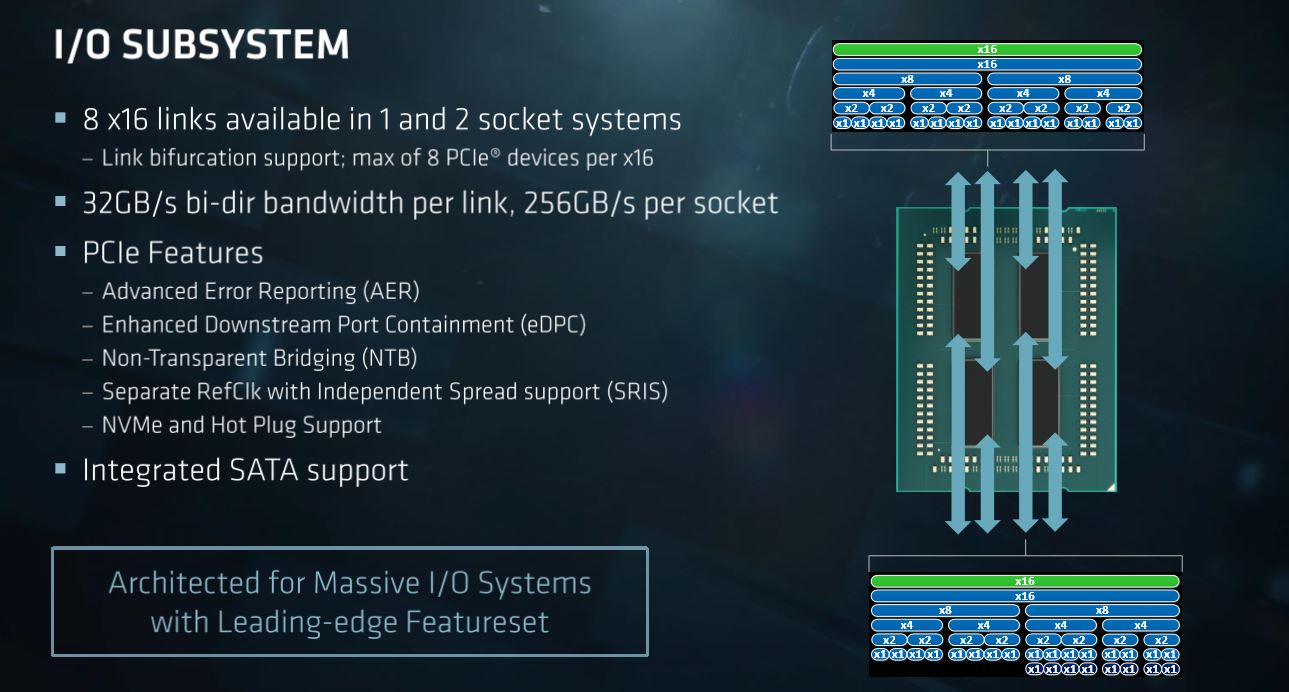

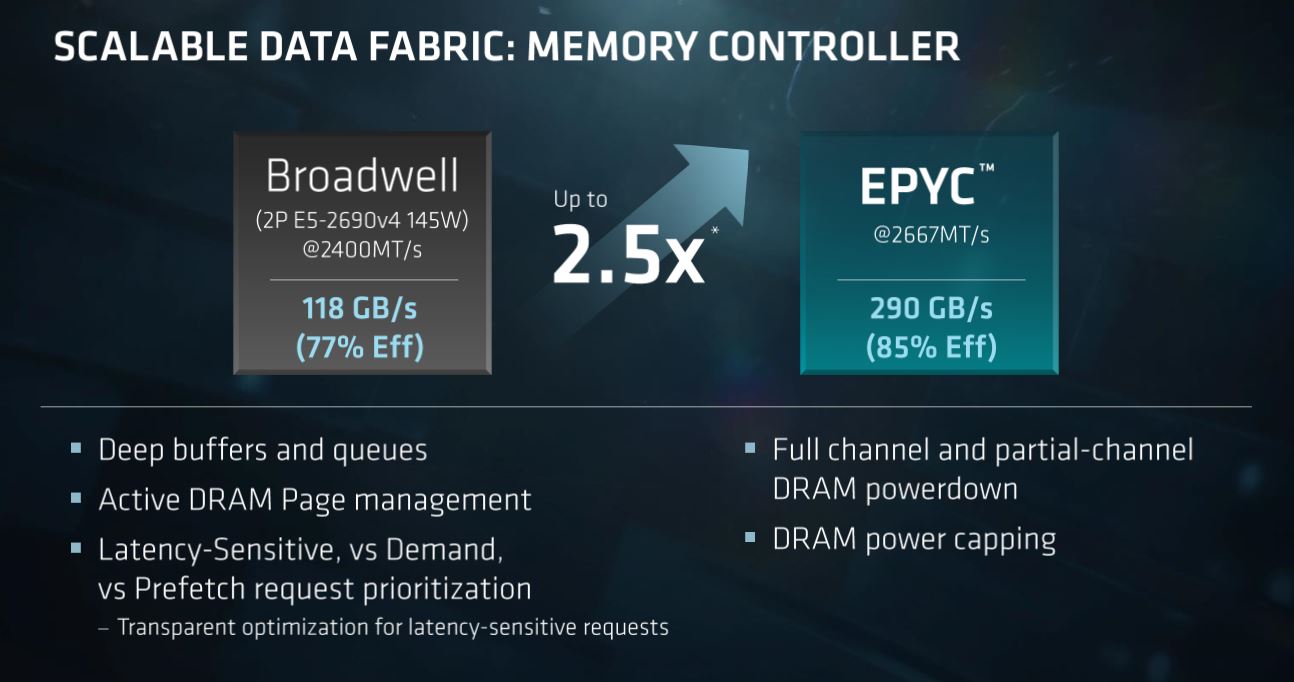

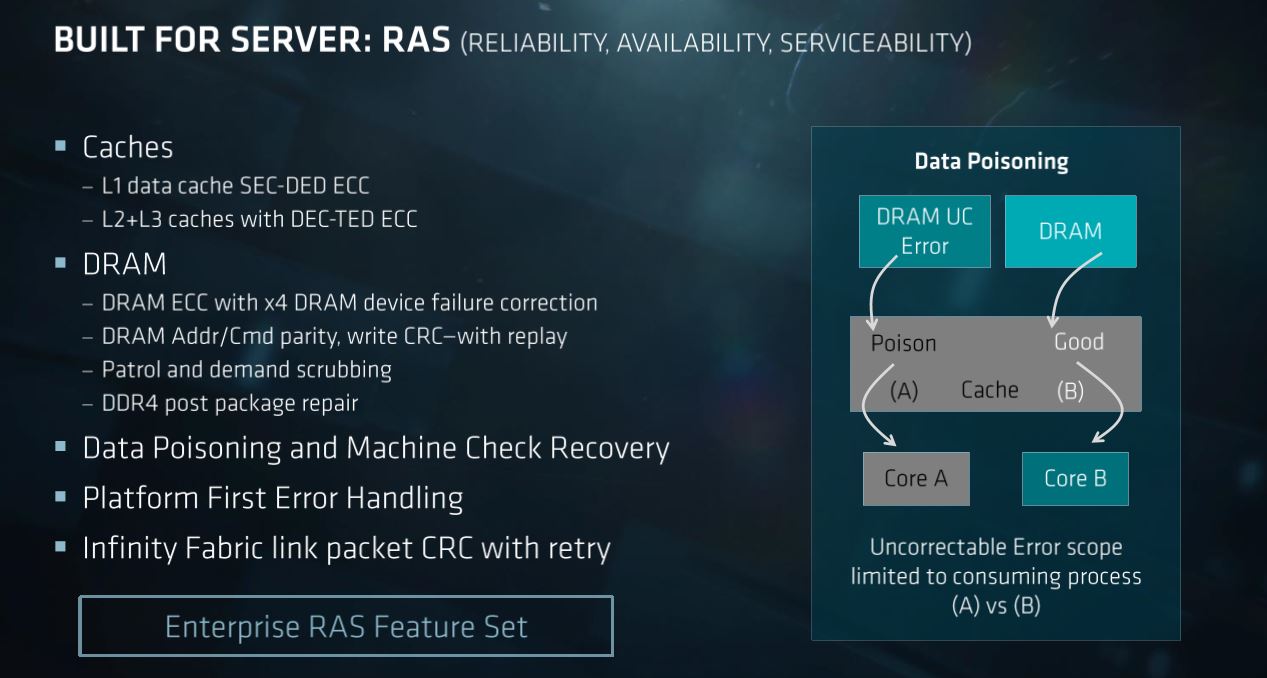

AMD designed the EPYC platform around its "Power, Optimize, and Secure" mantra, and the company is surprisingly forthcoming about the fact that it won't win in every application or use case. Instead, it will focus on the areas that leverage the strengths of its processor and platform design. The lineup begins with two-socket processor derivatives designed to offer more cores, memory bandwidth, and I/O capabilities compared to similarly priced Intel Xeon processors. The family includes nine SKUs that all offer eight channels of DDR4-2666 memory support, which outweighs Intel's rumored six-channel support for the Skylake Xeon models.
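To put the channel count in perspective, here is a minimal sketch of the peak theoretical bandwidth math. It assumes a standard 64-bit (8-byte) DDR4 channel and, purely for comparison, that the rumored six-channel Xeon would run at the same DDR4-2666 speed, which Intel has not confirmed. The function name is ours, not AMD's.

```python
# Peak theoretical DDR4 bandwidth per socket: channels x transfer rate x bus width.
# Assumptions: each DDR4 channel is 64 bits (8 bytes) wide, and the six-channel
# comparison part runs at the same DDR4-2666 speed (unconfirmed).

BYTES_PER_TRANSFER = 8  # 64-bit channel


def peak_bandwidth_gbs(channels: int, megatransfers_per_s: int) -> float:
    """Return peak theoretical bandwidth in GB/s (decimal gigabytes)."""
    return channels * megatransfers_per_s * BYTES_PER_TRANSFER / 1000


eight_channel = peak_bandwidth_gbs(8, 2666)
six_channel = peak_bandwidth_gbs(6, 2666)

print(f"8 channels: {eight_channel:.1f} GB/s")
print(f"6 channels: {six_channel:.1f} GB/s")
print(f"Advantage:  {eight_channel / six_channel - 1:.0%}")
```

These are ceiling figures; sustained bandwidth in real workloads will be lower on both platforms.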

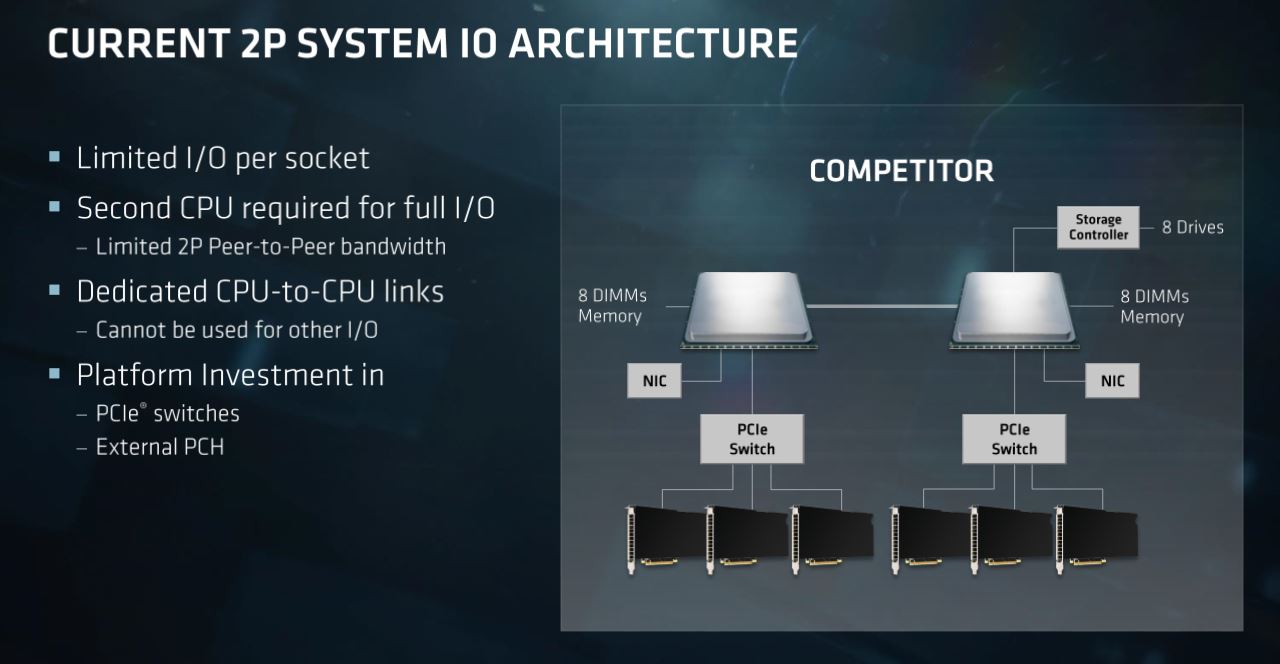

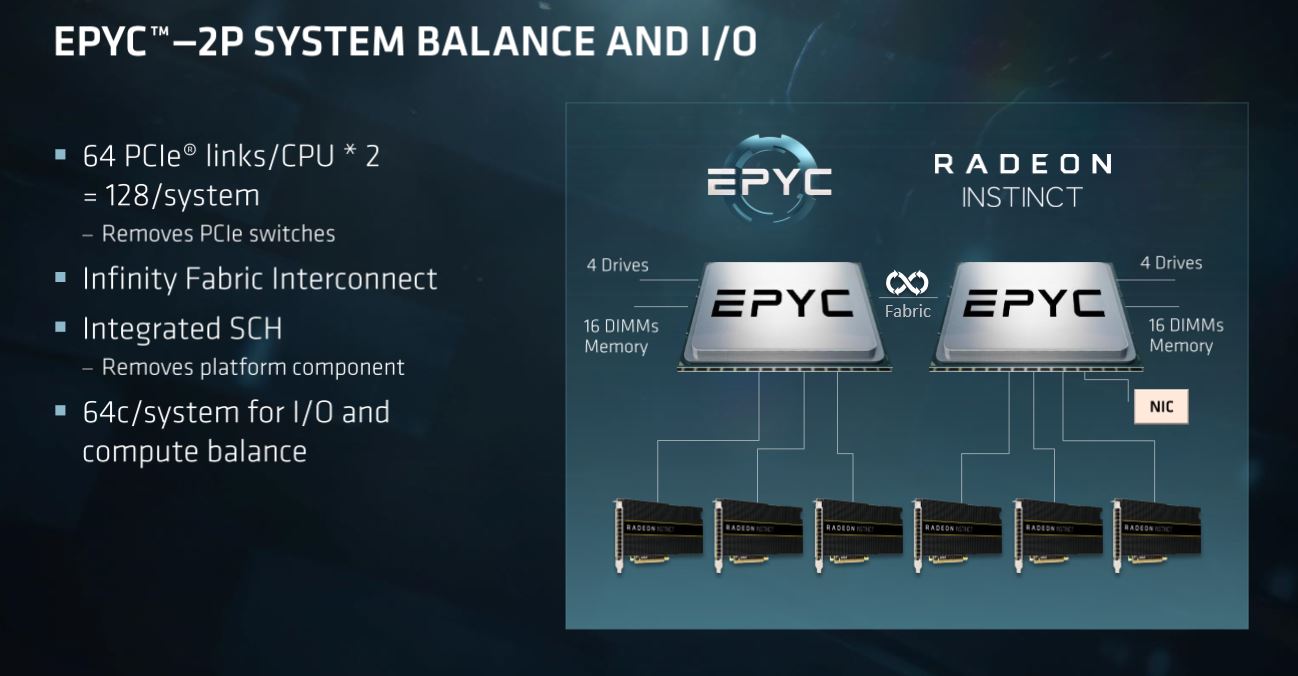

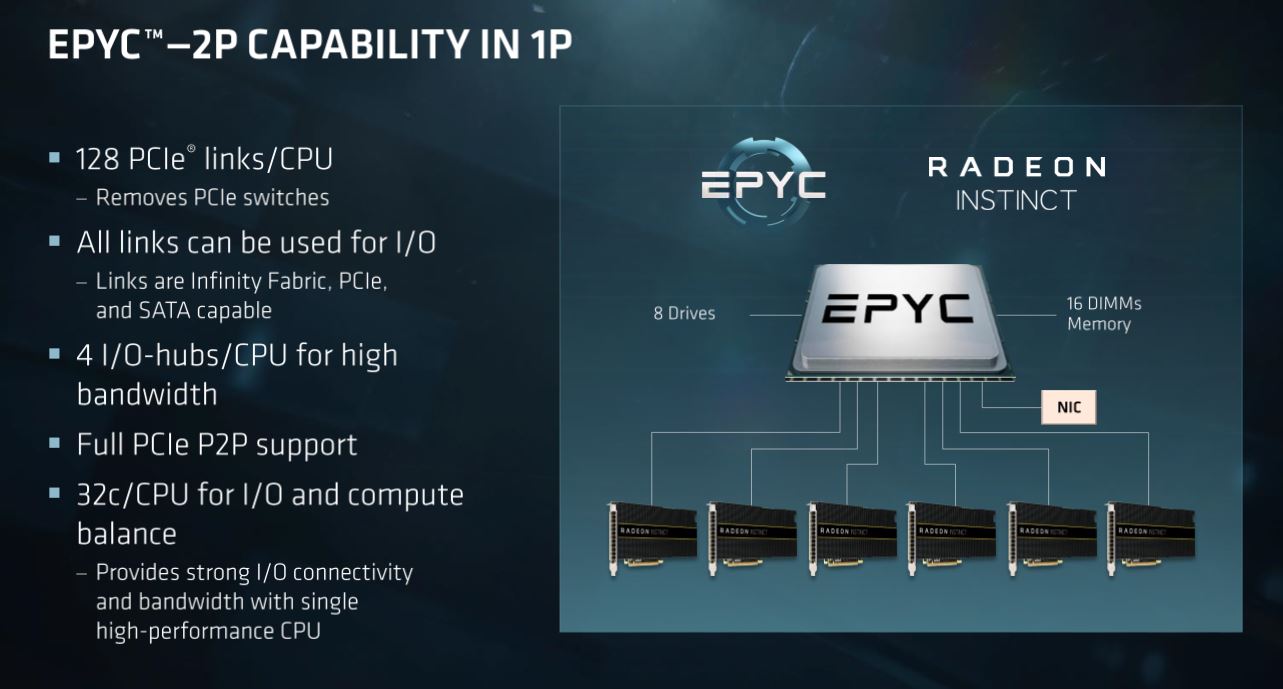

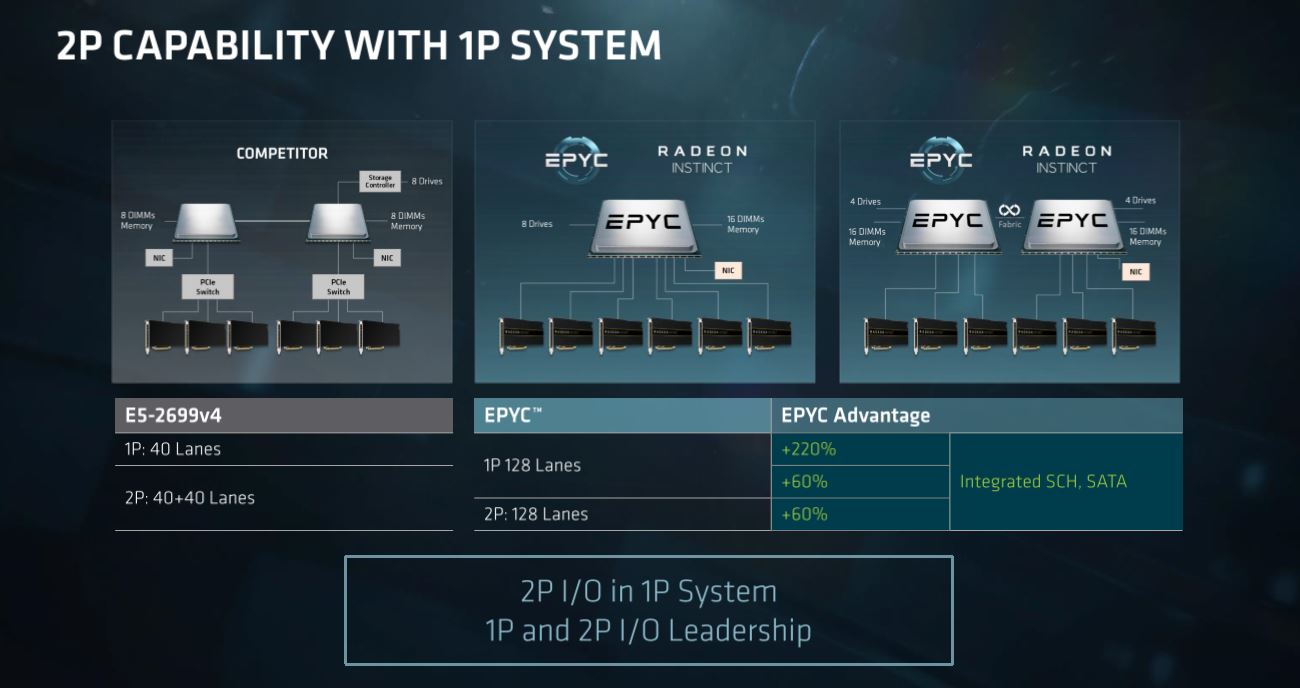

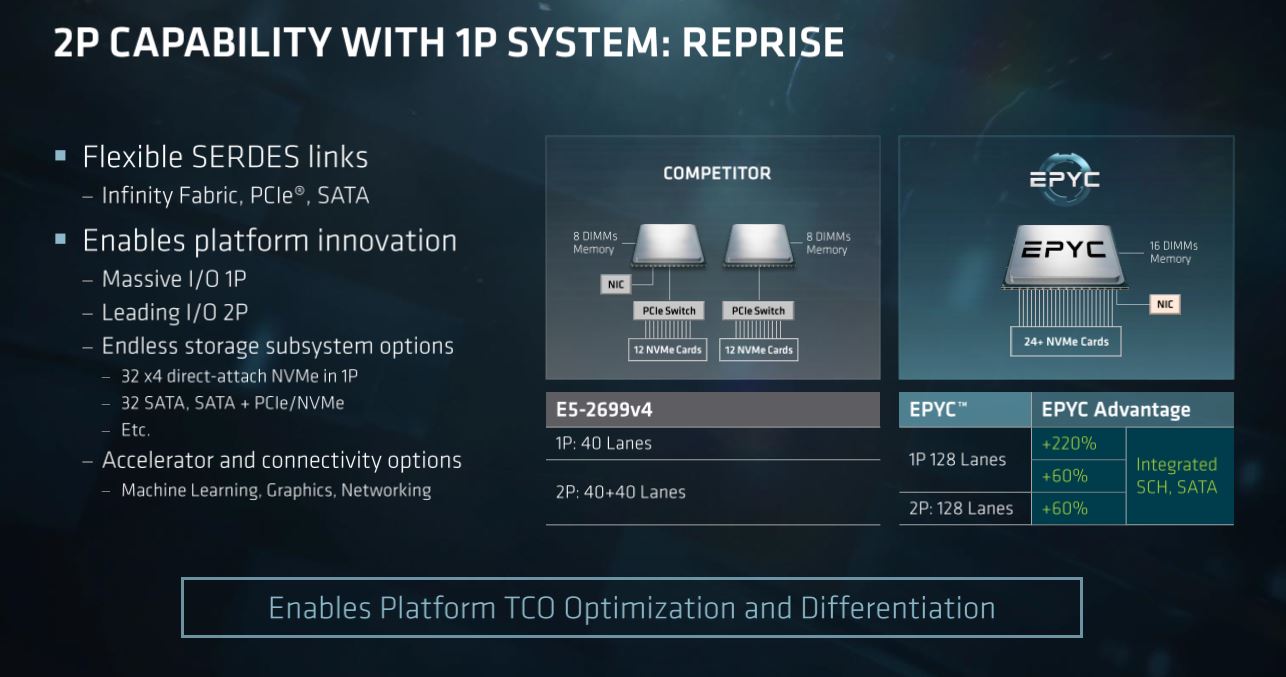

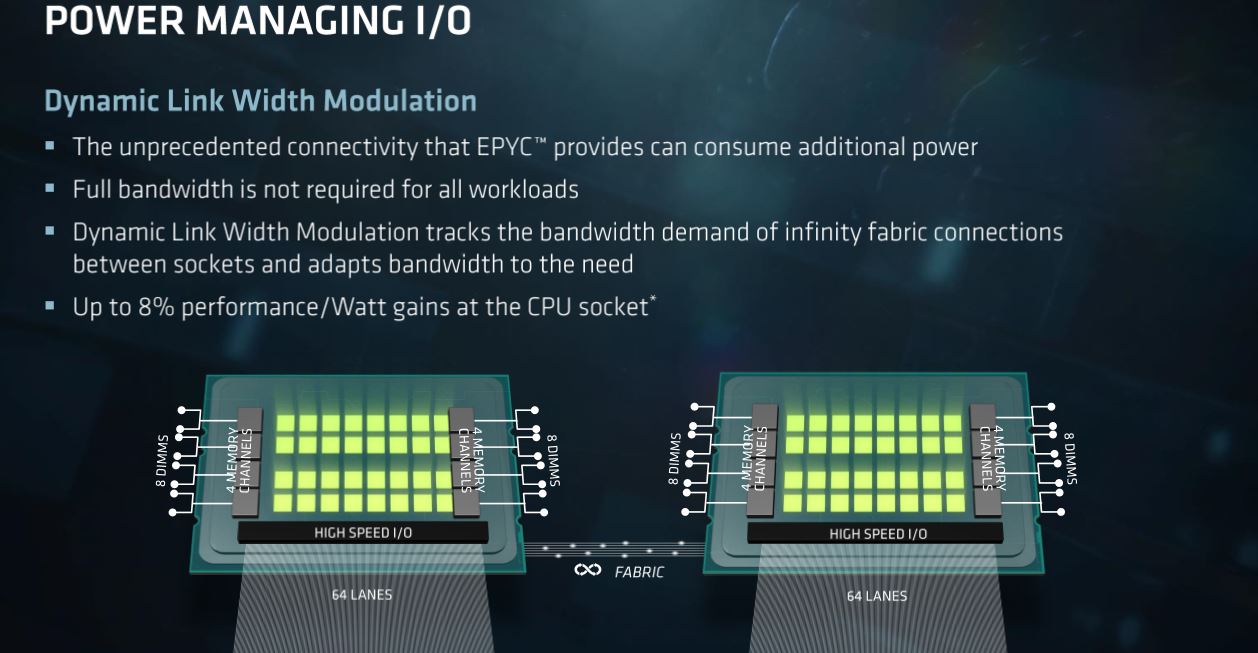

AMD is also forgoing Intel's segmentation tactics and offering the full 128 lanes of PCIe connectivity for the entire lineup, and it also doesn't segment memory support. (Cutting PCIe lanes for less expensive SKUs is a common Intel strategy.) The hefty PCIe allotment eliminates the need for PCIe switches, which magnify lane allocations on Intel servers. This reduces motherboard complexity and also provides performance advantages for PCIe-based devices, such as direct-attached NVMe SSDs.

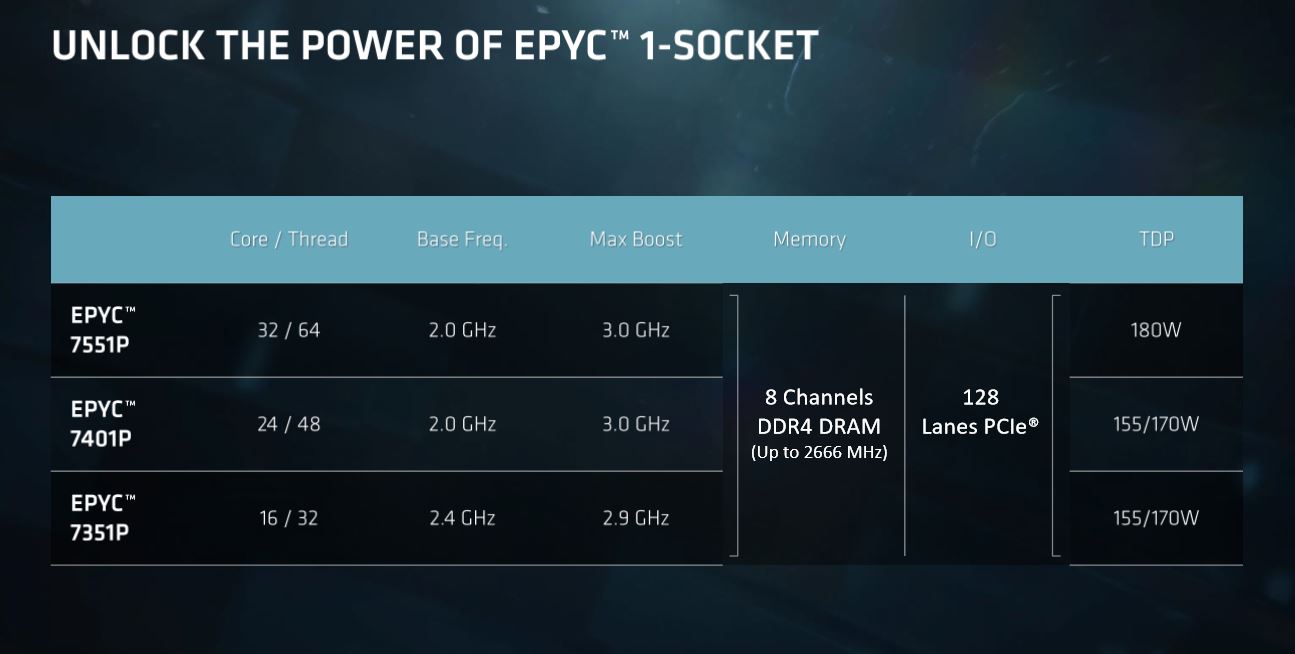

TDPs range from 120W with the 8C/16T EPYC 7251 up to 180W for the 32C/64T 7601. We aren't aware of Intel's Skylake Xeon TDP ranges as of yet. However, considering the 140W-165W TDP ranges we've found with the comparable Skylake-X models, which feature the same die found in Intel's Xeons, AMD might have parity, or even an advantage.

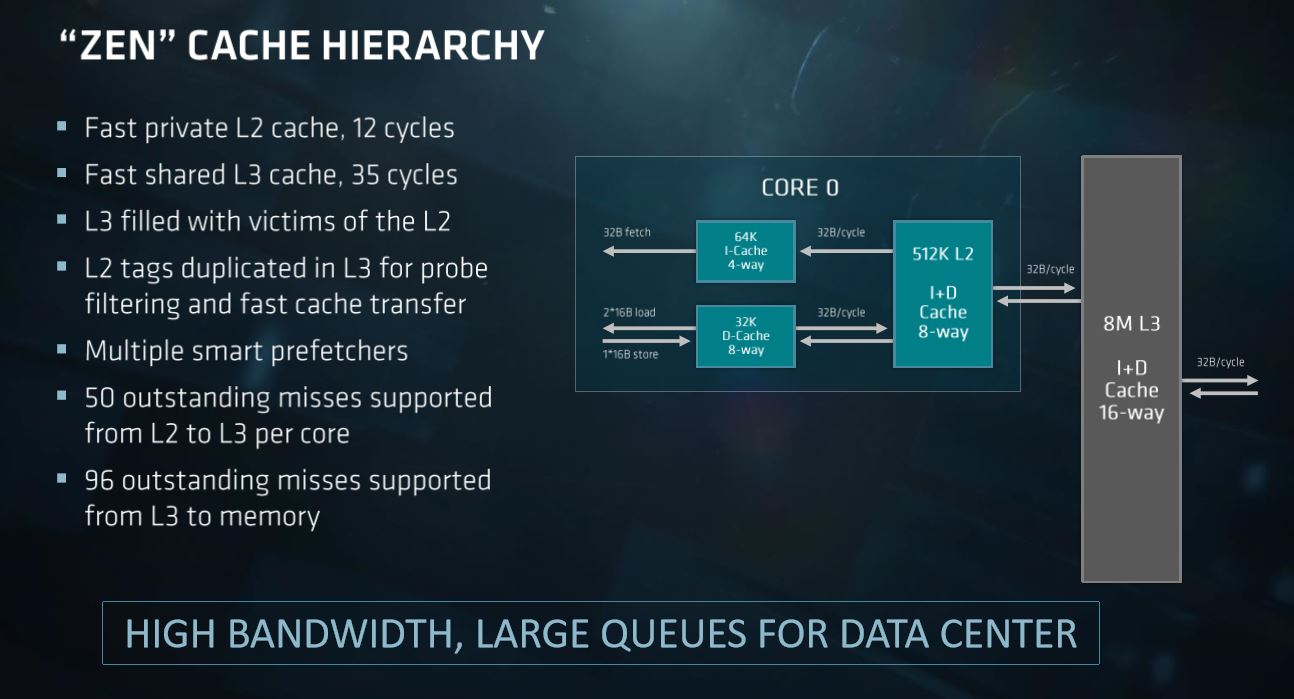

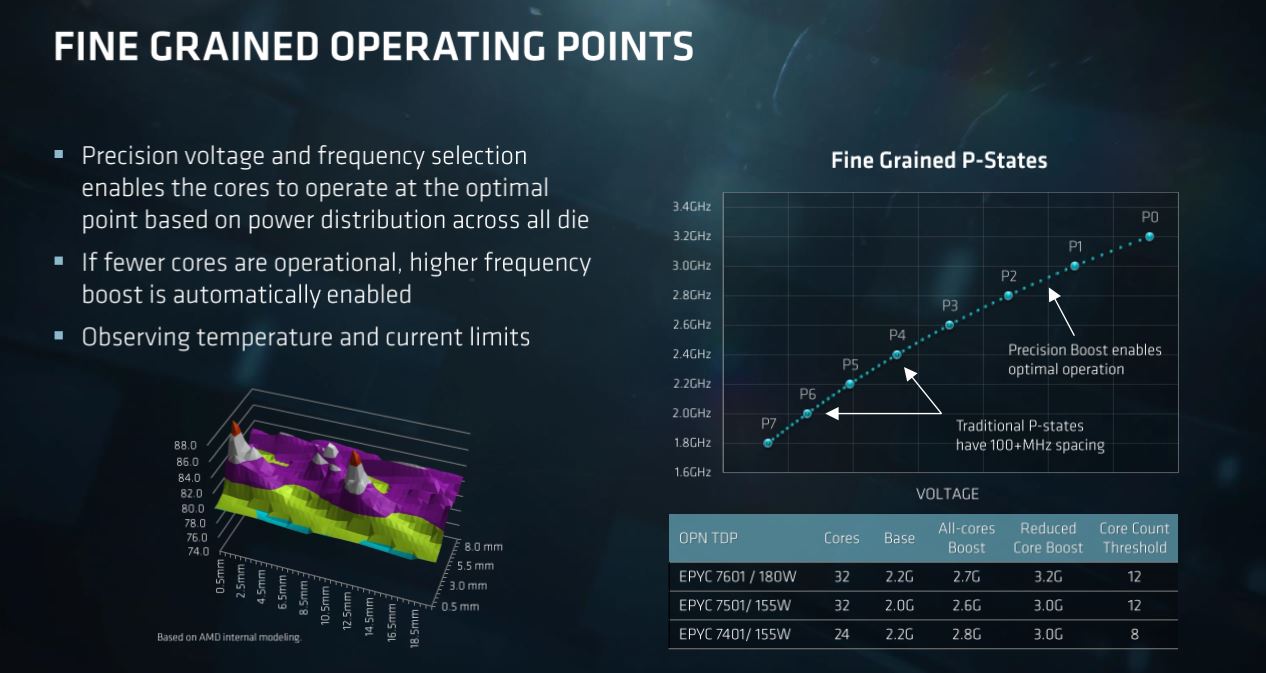

EPYC's base processor frequencies range from 2.0GHz to 2.4GHz, which is a relatively small frequency range compared to Intel's lineup. We see a slightly higher delta between the 2.7-3.2GHz maximum boost frequencies, but again, the envelope is fairly restricted. We do know that AMD will employ the same four-die design for the entire lineup, disabling cores along the way to create the various SKUs. EPYC's I/O capabilities, such as memory channels and PCIe controllers, are contained in each respective die, so disabling entire dies would reduce functionality. The beefy allotment of extra silicon on the low core count models, and thus active die consuming power, might create the restricted thermal envelope that yields the similar clock speeds across the product family. The extra memory channels also give AMD a memory capacity advantage, with support for up to 2TB per CPU.

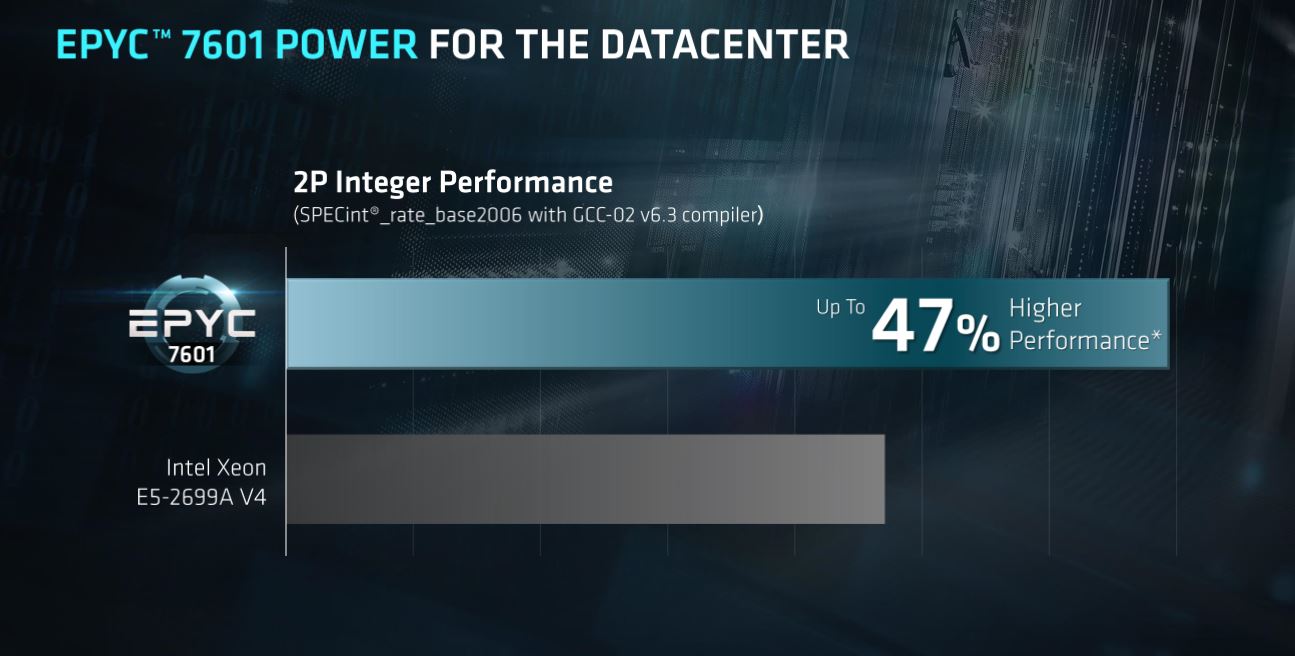

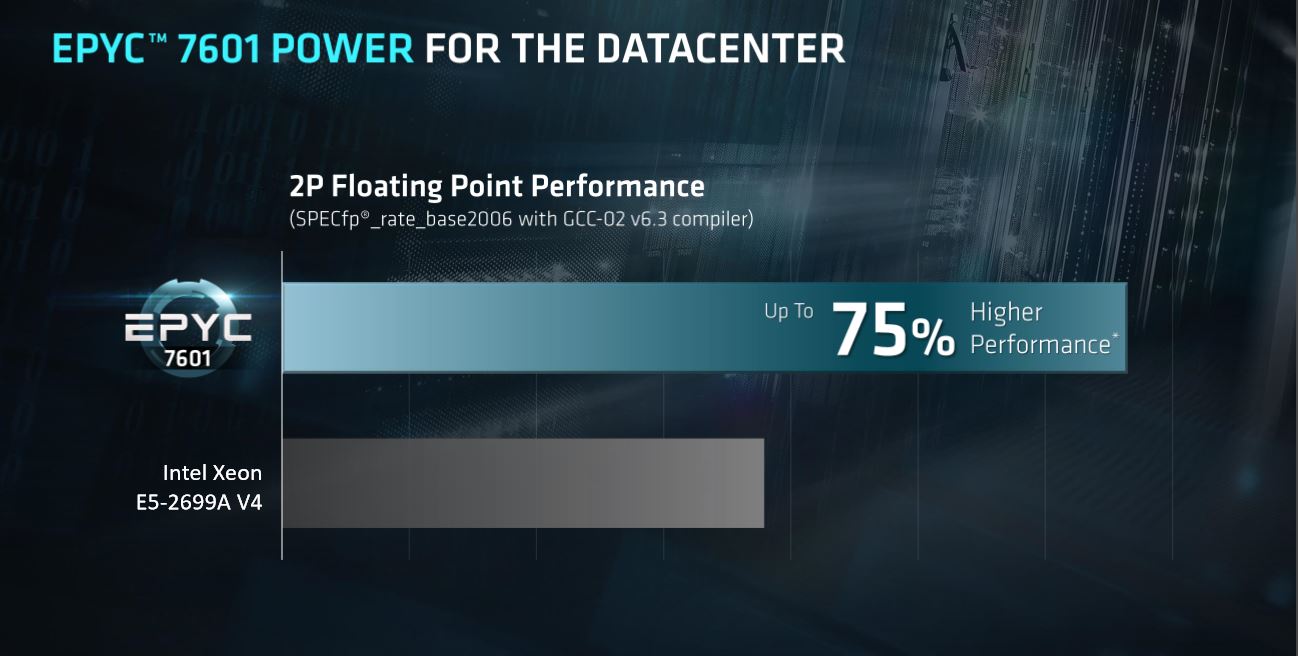

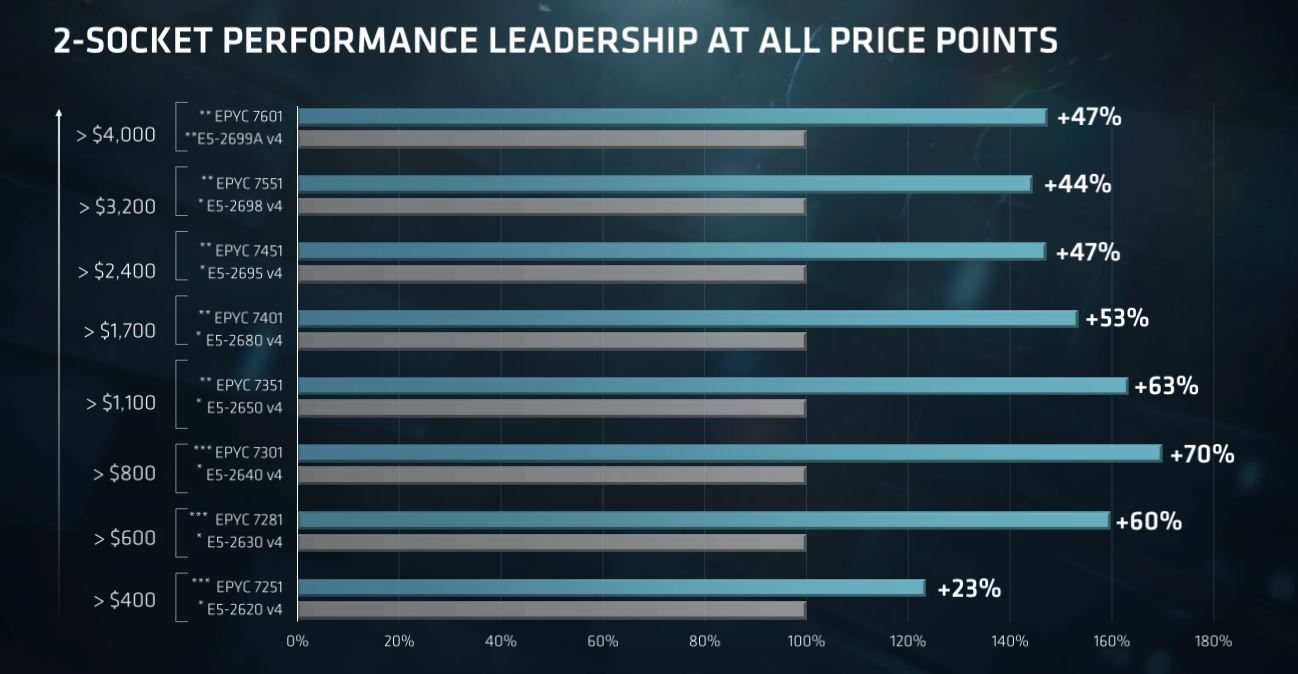

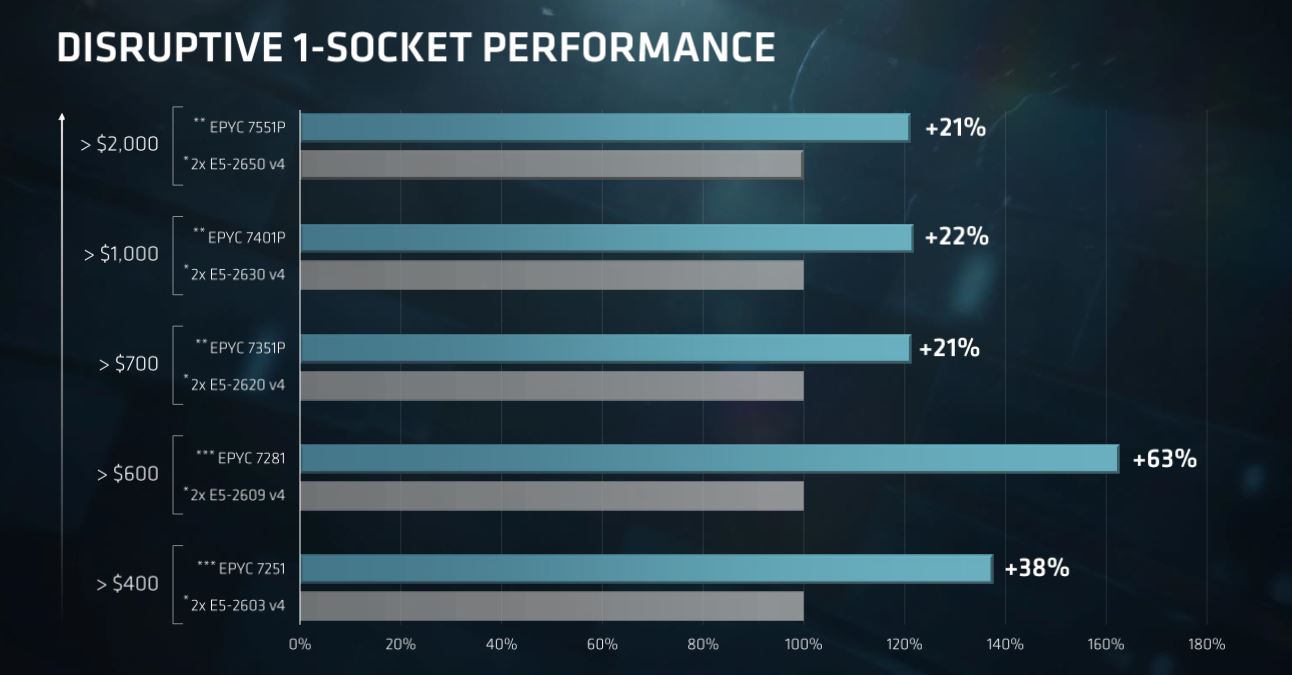

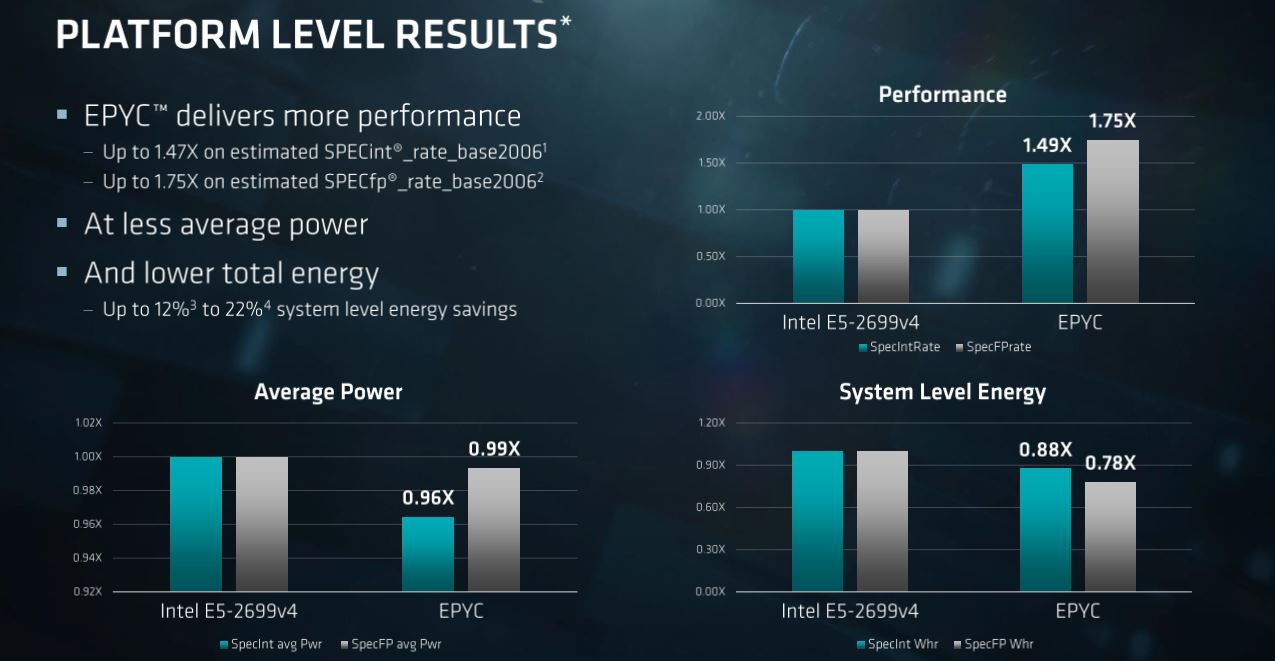

AMD provided some basic benchmarks, seen in the slides above, that compare its processors to the nearest Intel comparables. The price and performance breakdown chart is perhaps the most interesting, as it indicates much higher performance (as measured by SPECint_rate_base2006) at every price point. It bears mentioning that Intel publicly posts its SPEC benchmark data, and AMD's endnotes indicate that it reduced the Intel scores used for these calculations by 46%. AMD justified this adjustment because it feels the Intel C++ compiler provides an unfair advantage in the benchmark. There is a notable advantage to the compiler, but most estimates put it in the 20% range, so AMD's adjustments appear aggressive. As always, we should take vendor-provided price and performance comparisons with the necessary skepticism and instead rely upon third-party data as it emerges.


These comparisons are against Broadwell Xeons, though. Of course, AMD doesn't have data to compare against Intel's Skylake models, which are still on the cusp of release. AMD did note that it didn't design EPYC to compete with Broadwell-based Xeons. Instead, it planned for Intel's next-generation products. The Xeon E5-2640 is the high volume mover for Intel in this segment, and AMD claimed that EPYC offers 70% more performance at a similar price point.
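The size of the compiler adjustment matters a great deal to a headline number like that. The sketch below is illustrative arithmetic only. It assumes the 70% claim was computed against an Intel score that had been cut by 46%, and that "reduced by 46%" means the published score was multiplied by 0.54. AMD's endnotes may define the adjustment differently, and the function name is hypothetical.

```python
# Illustrative only: how the size of the compiler adjustment changes a claimed lead.
# Assumptions: the 70% claim was computed against an Intel score reduced by 46%,
# and "reduced by 46%" means the published score was multiplied by 0.54.


def lead_after_adjustment(claimed_lead: float, applied_cut: float, alt_cut: float) -> float:
    """Re-express a claimed lead using a different reduction of the rival's score."""
    # Claimed ratio = amd / (intel * (1 - applied_cut)); solve for amd / intel.
    raw_ratio = (1 + claimed_lead) * (1 - applied_cut)
    # Re-apply the alternative reduction to the rival's score.
    return raw_ratio / (1 - alt_cut) - 1


for cut in (0.46, 0.20, 0.0):
    lead = lead_after_adjustment(0.70, 0.46, cut)
    print(f"Intel score cut by {cut:.0%}: AMD lead {lead:+.1%}")
```

Under those assumptions, the same claim shrinks to a lead of roughly 15% if the compiler advantage is closer to the commonly cited 20%, which is why third-party data will be important.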

AMD has identified the single-socket server as a potential high-growth market. Roughly 25% of two-socket servers have only one socket populated, so offering a capable single-socket platform reduces unnecessary redundancies (such as sockets, networking components, and power supplies), thus saving on up-front cost, power, space, and cooling expenses. AMD feels it has an advantage in this burgeoning space due to EPYC's hefty allotment of I/O, memory bandwidth, and memory capacity. As such, the company created three single-socket SKUs that feature the same connectivity options and a TDP range of 155W-180W. We also noticed that the highest frequency of the lineup was at 2.4GHz on the 7351P.

Again, these value comparisons are derived from a data set that AMD adjusted to remove what it considers an unfair advantage.

Each single socket server will provide a capacious 128 lanes of PCIe and full P2P (peer-to-peer) support, which is crucial for GPU-based AI-centric architectures. AMD envisions data center operators loading up these servers with up to six GPUs, preferably AMD's Vega Frontier Edition, to create powerful machine learning platforms. AMD provided a similar set of benchmark data for the respective price points.
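A quick lane budget shows why 128 lanes matter for this kind of build. This is a rough sketch that assumes each GPU gets a full x16 link and each NVMe SSD a x4 link, and it ignores any lanes a real motherboard would set aside for networking or other devices. The constants other than the 128-lane total are our assumptions.

```python
# Rough PCIe lane budget for a single-socket EPYC server.
# Assumptions: each GPU uses a x16 link and each NVMe SSD a x4 link;
# lanes reserved for networking or other devices are ignored.

TOTAL_LANES = 128      # from the article
LANES_PER_GPU = 16     # assumption
LANES_PER_NVME = 4     # assumption


def remaining_nvme_slots(gpus: int) -> int:
    """Return how many x4 NVMe SSDs fit in the lanes left after the GPUs."""
    used = gpus * LANES_PER_GPU
    if used > TOTAL_LANES:
        raise ValueError("GPU links exceed the available lanes")
    return (TOTAL_LANES - used) // LANES_PER_NVME


gpus = 6
print(f"{gpus} GPUs use {gpus * LANES_PER_GPU} lanes, "
      f"leaving room for {remaining_nvme_slots(gpus)} x4 NVMe SSDs")
```

Six x16 GPUs consume 96 lanes, so on paper there is still headroom for direct-attached storage without resorting to PCIe switches.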

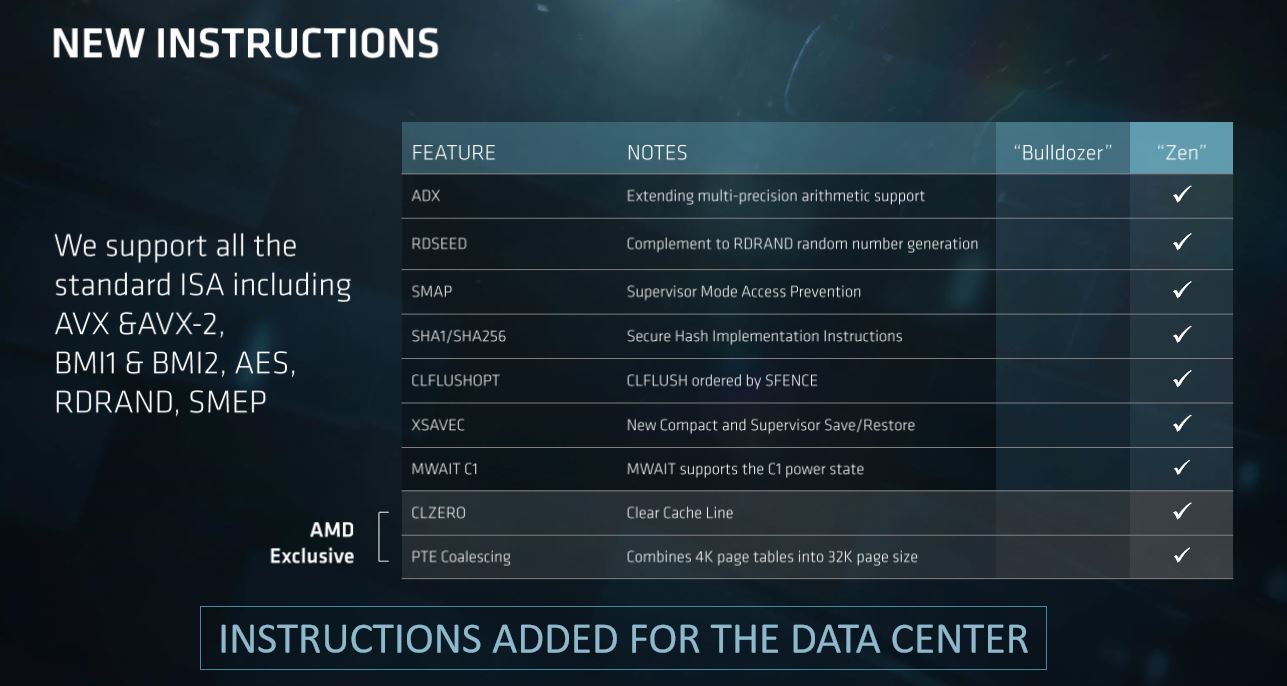

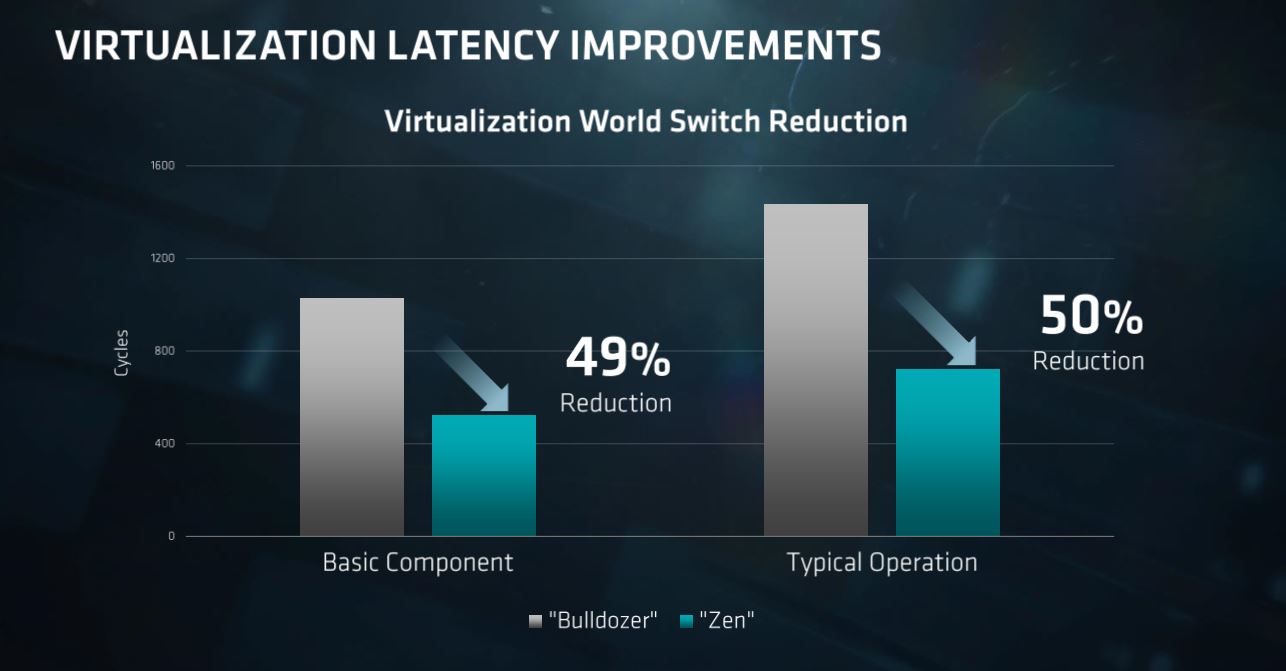

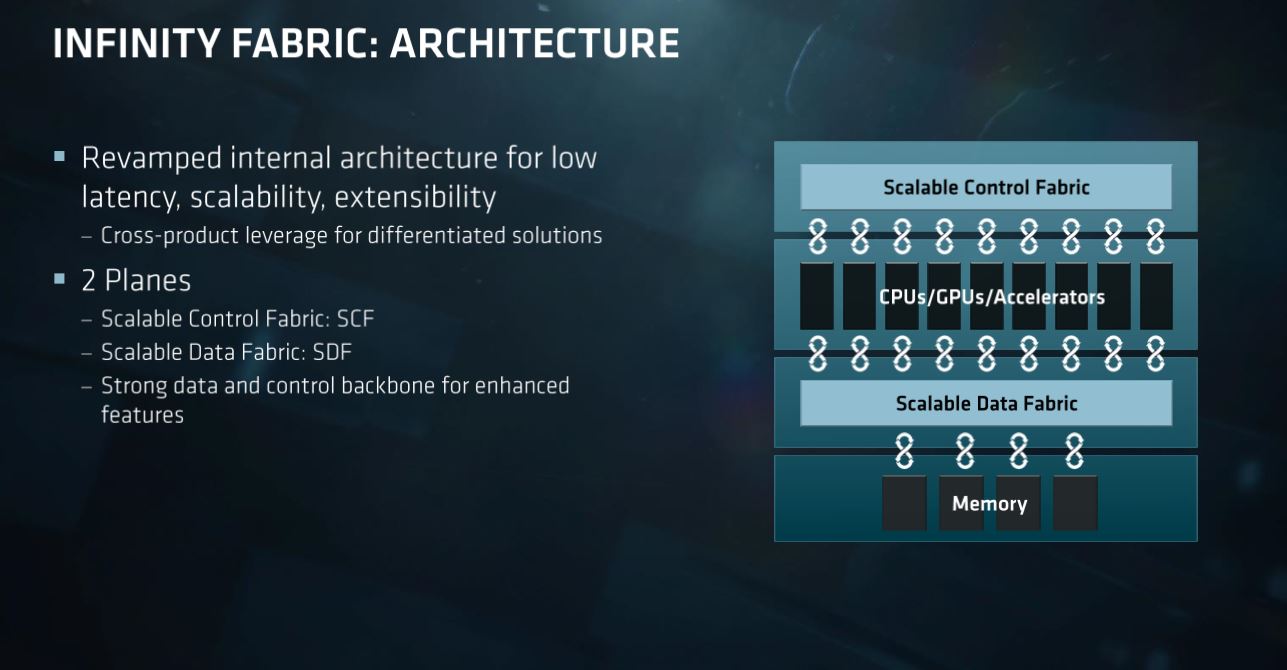

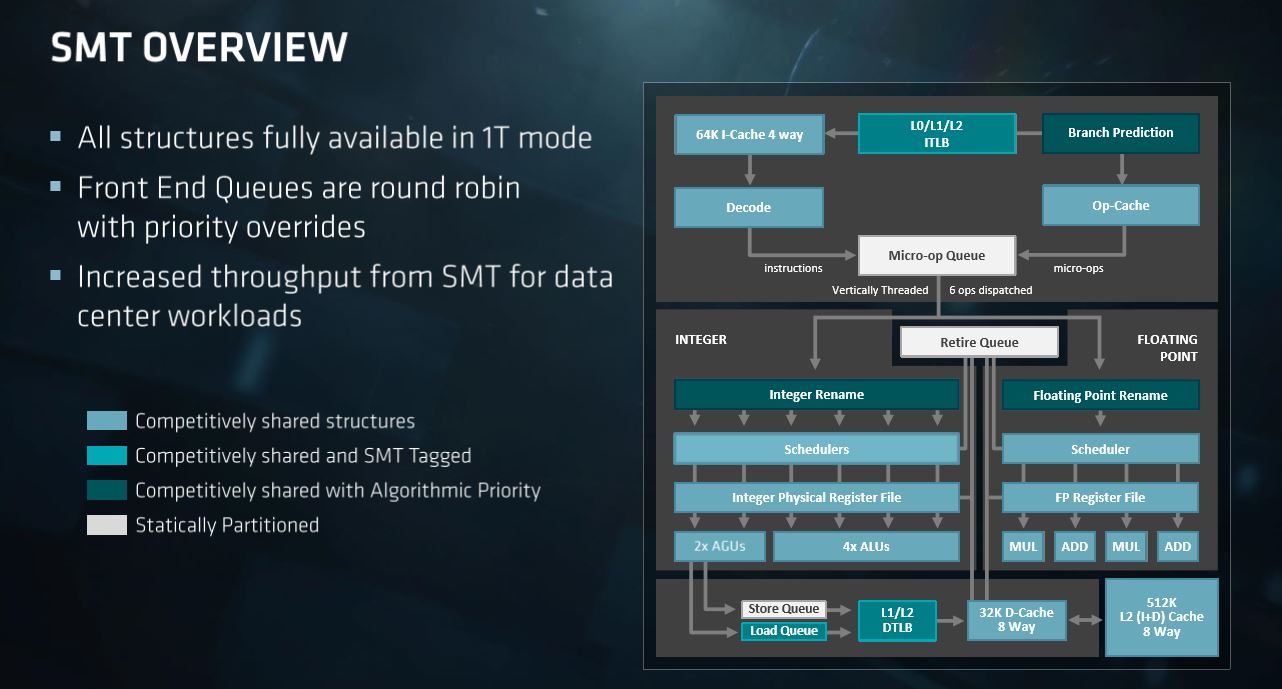

AMD highlighted several of its new instructions and also indicated that its design is optimized for virtualized applications. The company also provided a comparison of world switch reduction relative to its previous-generation Bulldozer-based products. The Infinity Fabric may pose a challenge in this regard.

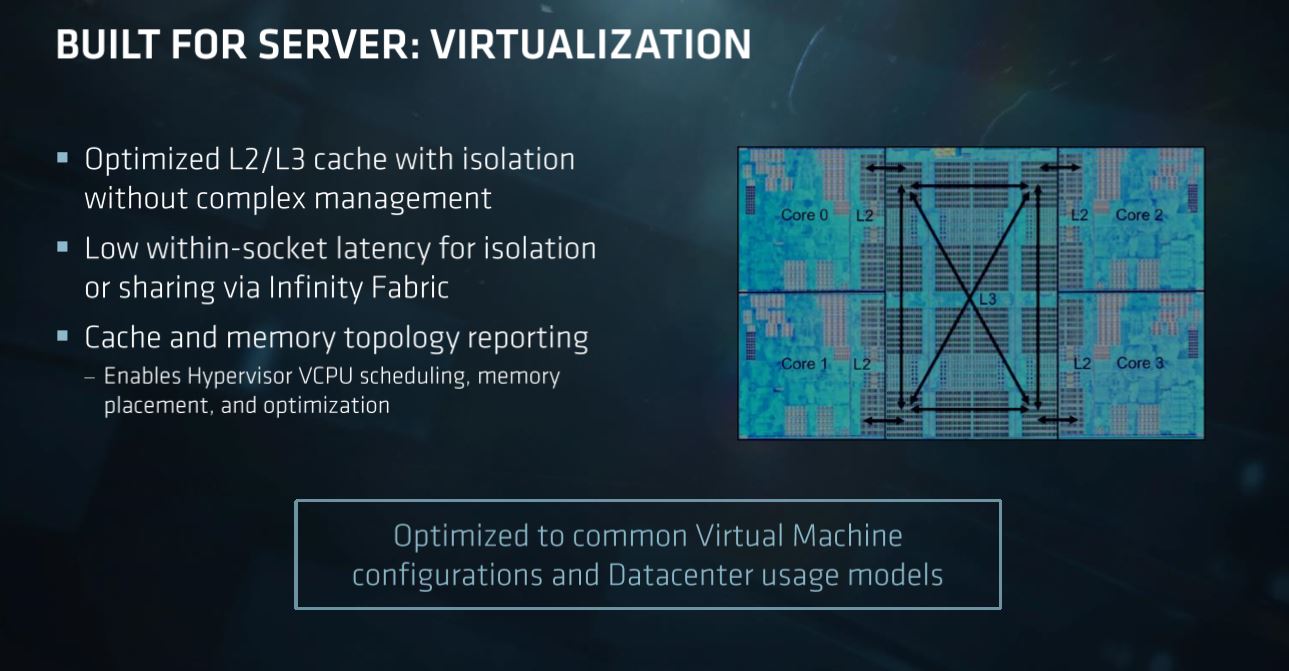

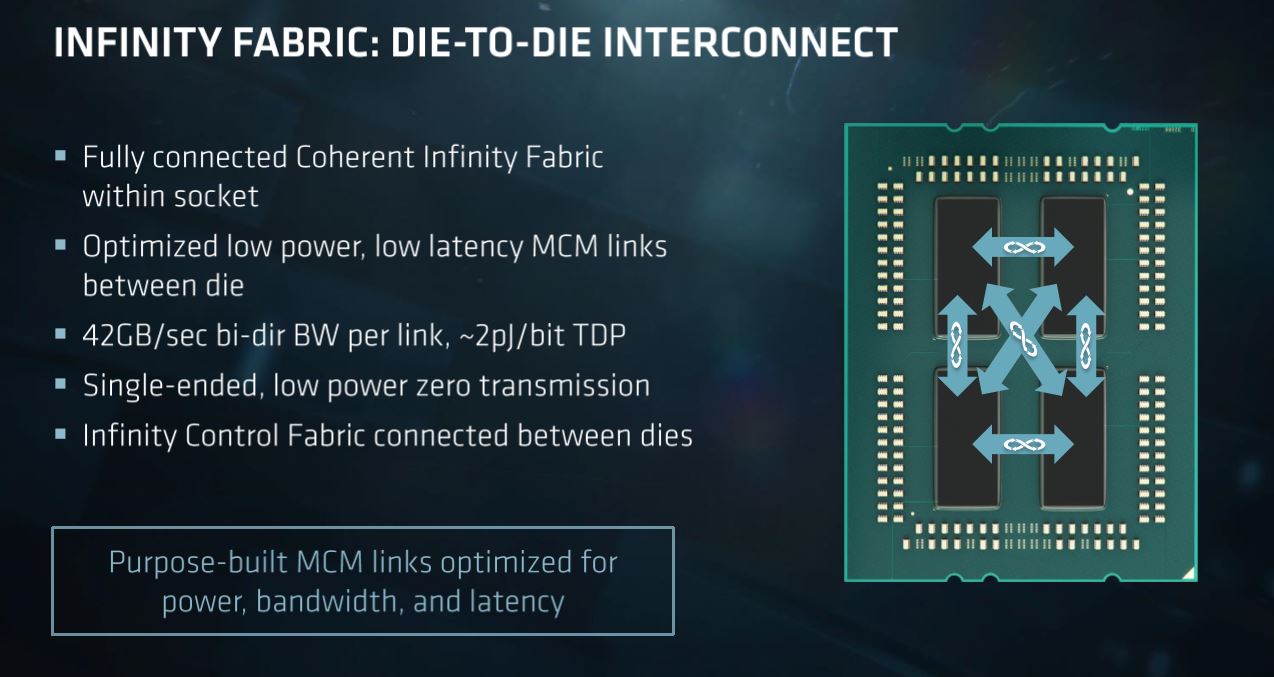

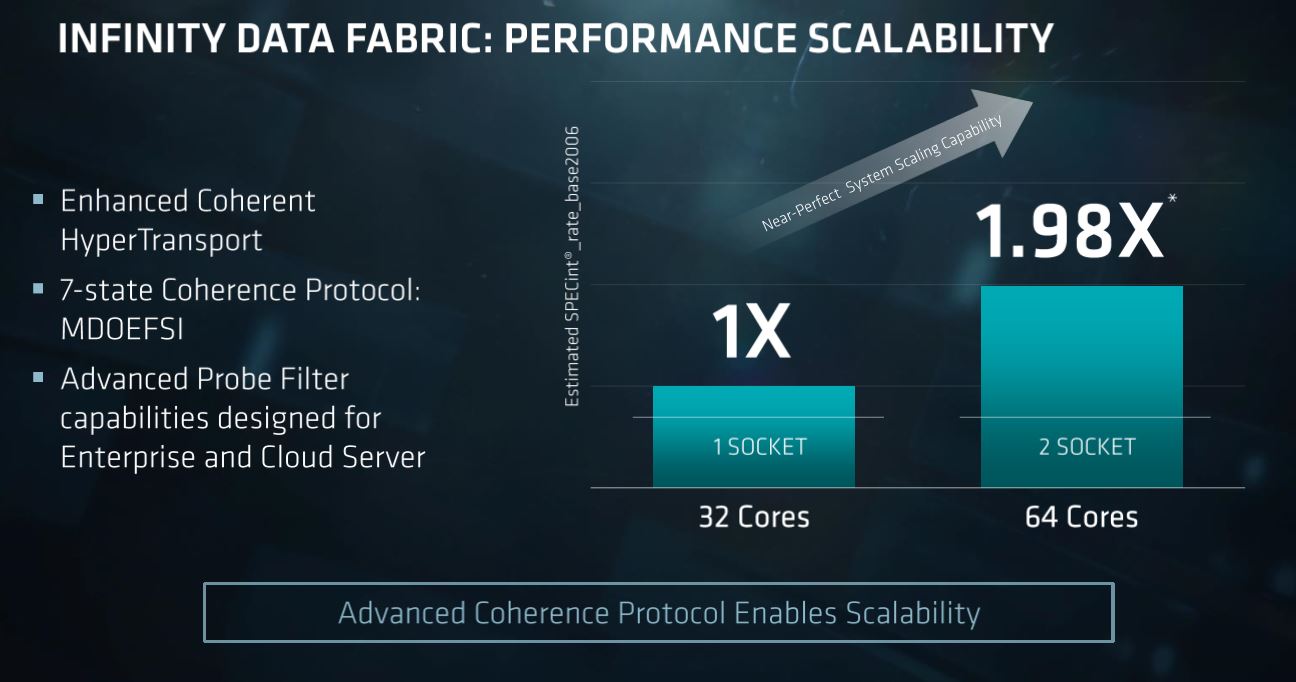

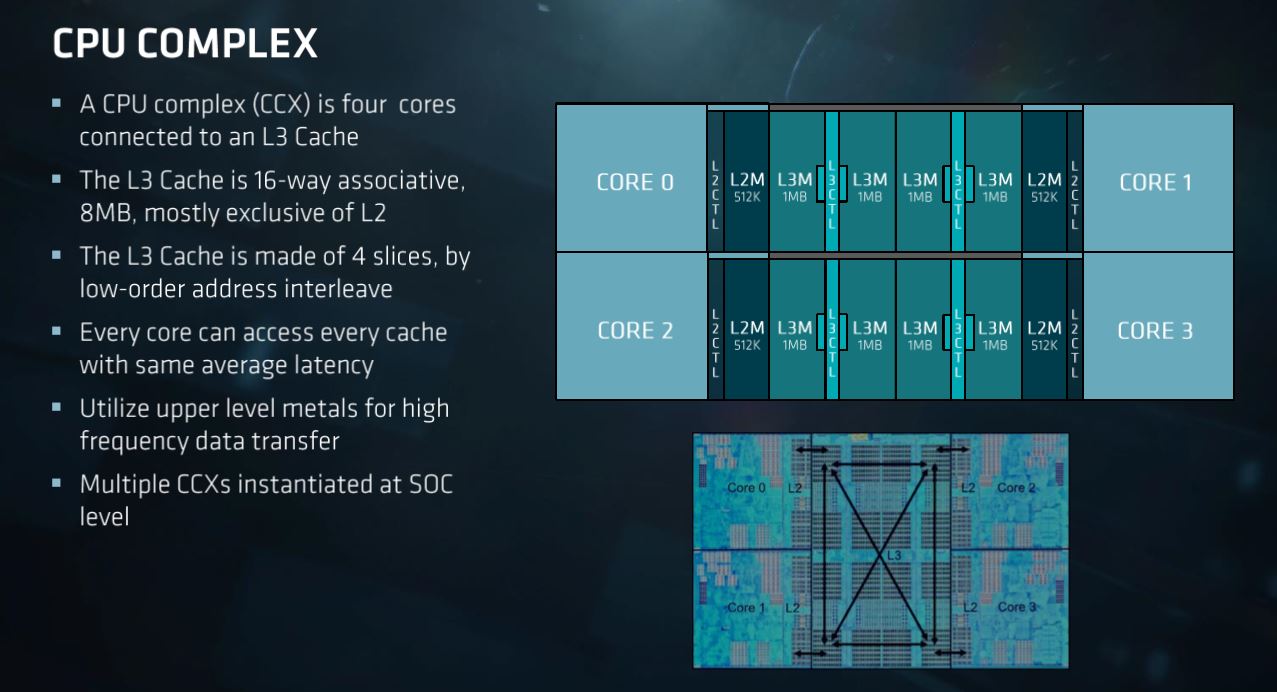

AMD disclosed more fine-grained bandwidth specifications for its Infinity Fabric, likely to quell concerns about the higher-latency interconnect between the die. AMD creates four-core Core Complexes (CCX) and then ties them together with the Infinity Fabric. This is very similar to multiple quad-core processors communicating over a standard PCIe bus. This tactic incurs latency when data has to travel between the CCX, which some have opined could lead to inconsistent VM performance.

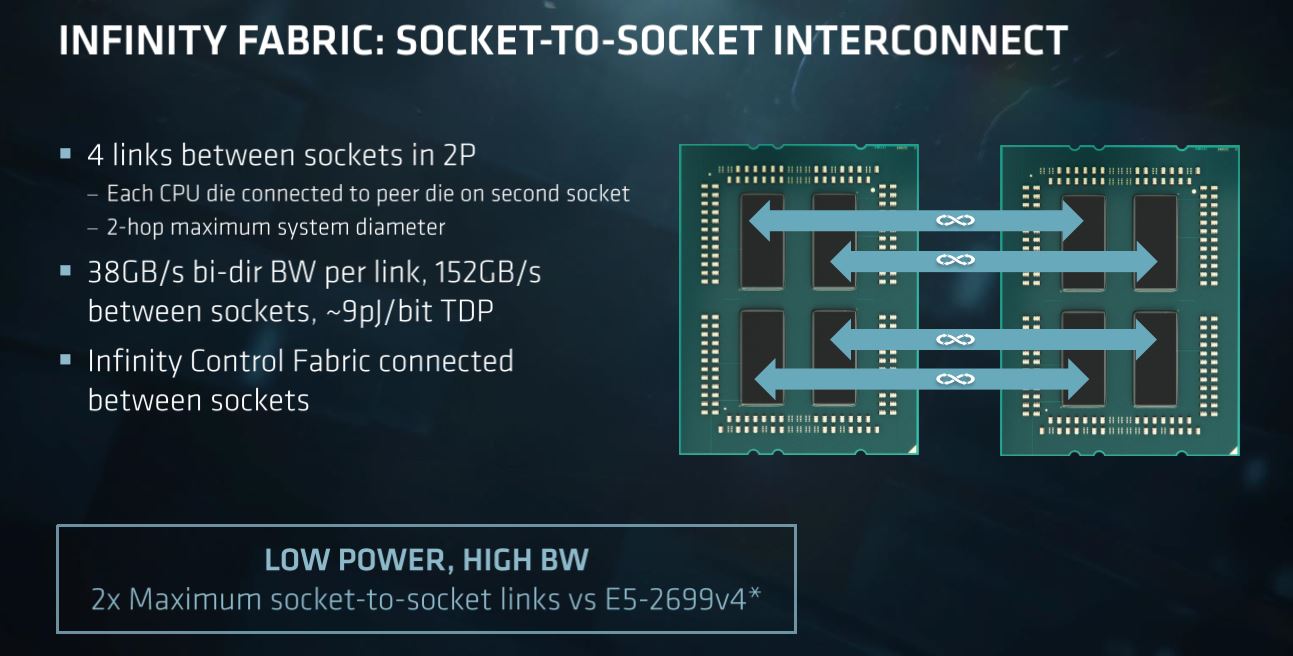

Administrators define strict SLAs (Service Level Agreements) for virtual machines, so inconsistent performance is undesirable. VMs that are larger than four cores will spread across multiple CCX, thus regularly encountering the increased latency of the Infinity Fabric. AMD disclosed bandwidth specifications for the various connections, including the CCX-to-CCX and socket-to-socket interfaces. The design includes three connections from each die to neighboring die, thus providing a direct one-hop connection between all four of the die. The layout is intended to reduce bus contention and latency problems that would arise from multiple hops across the MCM (Multi Chip Module). Of course, the only real way to quantify performance viability is to test performance, but AMD hasn't revealed specifics yet.
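The topology described above can be sketched in a few lines. This is a simplified model, not AMD's routing logic: it assumes a hypervisor packs a VM's physical cores as tightly as possible, and it treats the die-to-die links as a plain full mesh. The helper names are ours.

```python
from itertools import combinations
from math import ceil

CORES_PER_CCX = 4     # from the article
DIES_PER_PACKAGE = 4  # from the article


def ccx_spanned(vm_cores: int) -> int:
    """Minimum number of CCX a VM must span if its cores are packed tightly."""
    return ceil(vm_cores / CORES_PER_CCX)


def full_mesh_links(dies: int) -> list[tuple[int, int]]:
    """Every die pair gets its own link, so any die reaches any other in one hop."""
    return list(combinations(range(dies), 2))


links = full_mesh_links(DIES_PER_PACKAGE)
links_per_die = sum(1 for a, b in links if 0 in (a, b))
print(f"{len(links)} links in total, {links_per_die} per die")
for cores in (2, 4, 8, 16):
    print(f"{cores}-core VM spans at least {ccx_spanned(cores)} CCX")
```

Three links per die is exactly what a four-node full mesh requires, which matches the one-hop claim. Any VM above four physical cores has to cross at least one CCX boundary in this model.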

Intel has a COD (Cluster on Die) feature it employs in high-core count Xeons to bifurcate the processor's disparate ring buses into two separate NUMA domains. It's a novel approach that reduces latency and improves performance, and a similar technology would significantly benefit AMD. Interestingly, the graphic indicates the Infinity Fabric has a direct link between each respective CCX in separate sockets (third slide). Direct CCX-to-CCX communication should drastically reduce resource contention, thus simplifying scheduling and routing. It also leads us to believe that the Infinity Fabric is more scalable than previously thought, which is a positive development.

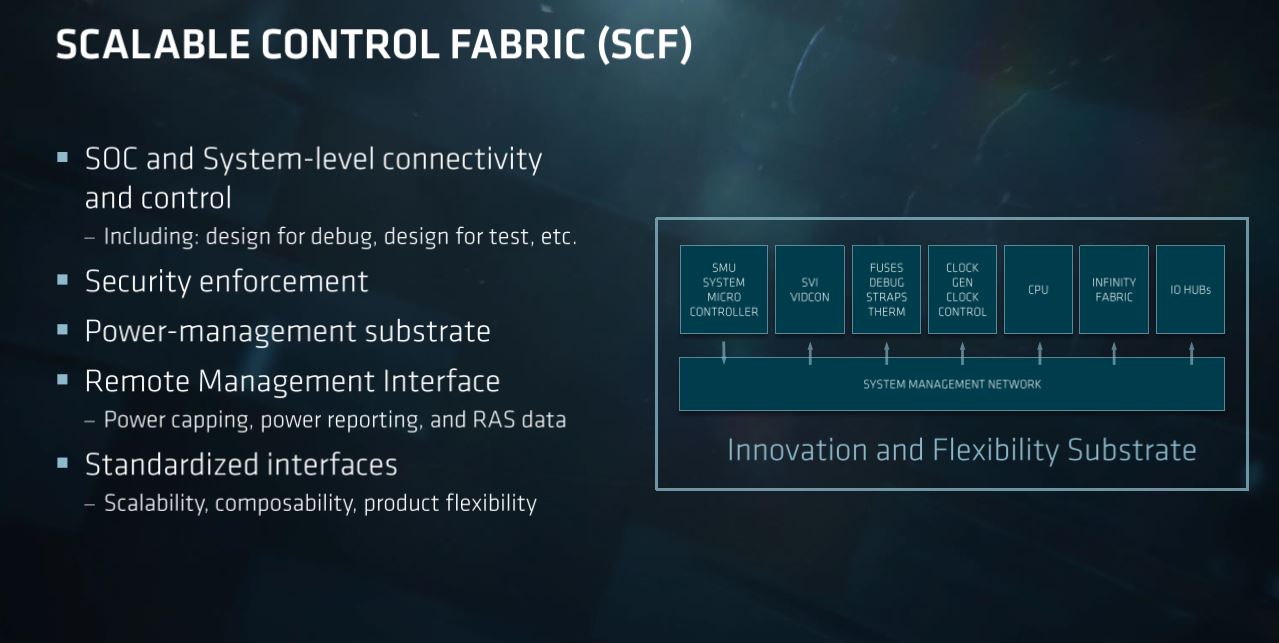

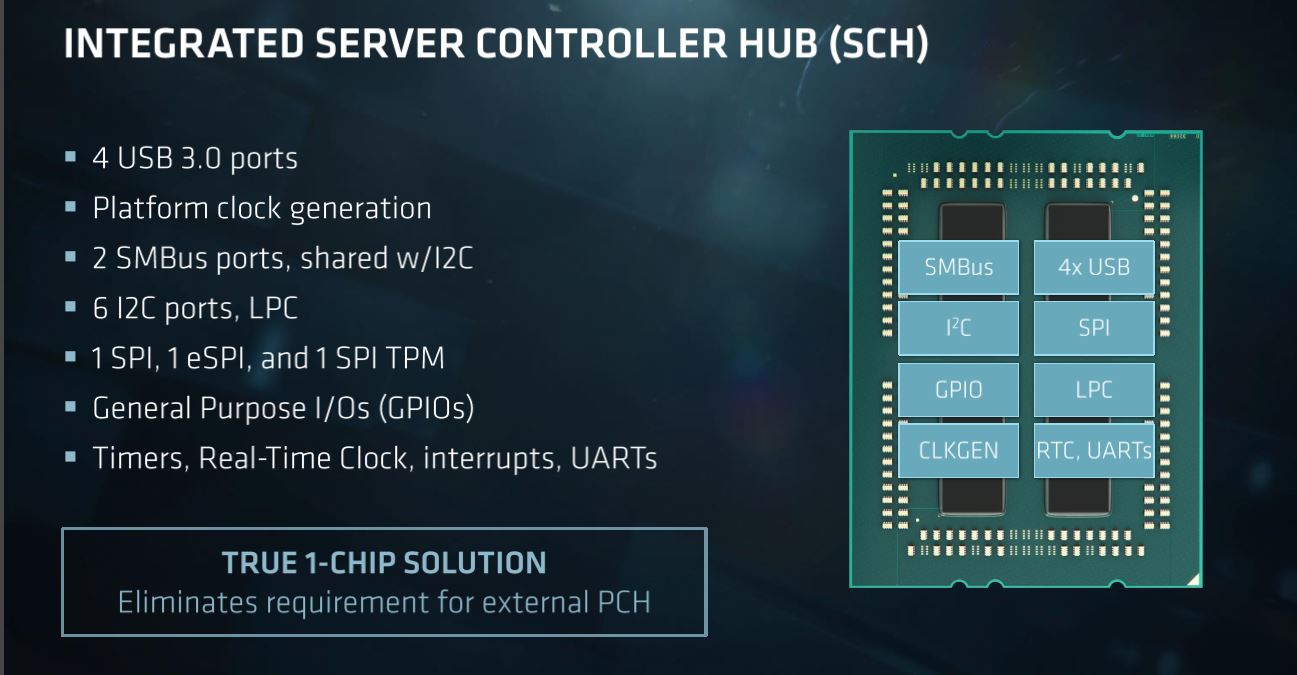

AMD also touted the advantages of its Server Controller Hub that eliminates the need for a traditional PCH (Platform Controller Hub). This reduces motherboard complexity, power consumption, and cost, and it also serves as an important addition for the single socket platforms. The I/O Subsystem slide provides a breakout of the PCIe lane bifurcation support.

We'll dive in deeper on the new Infinity Fabric data as time permits.

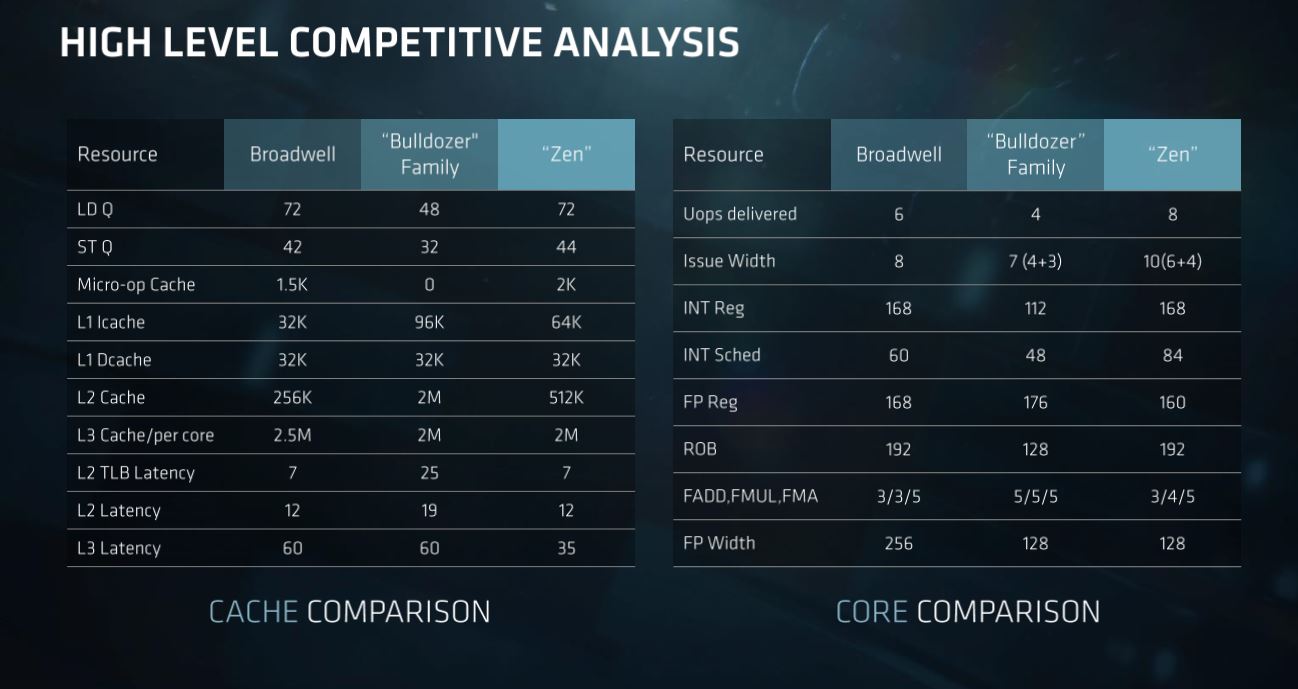

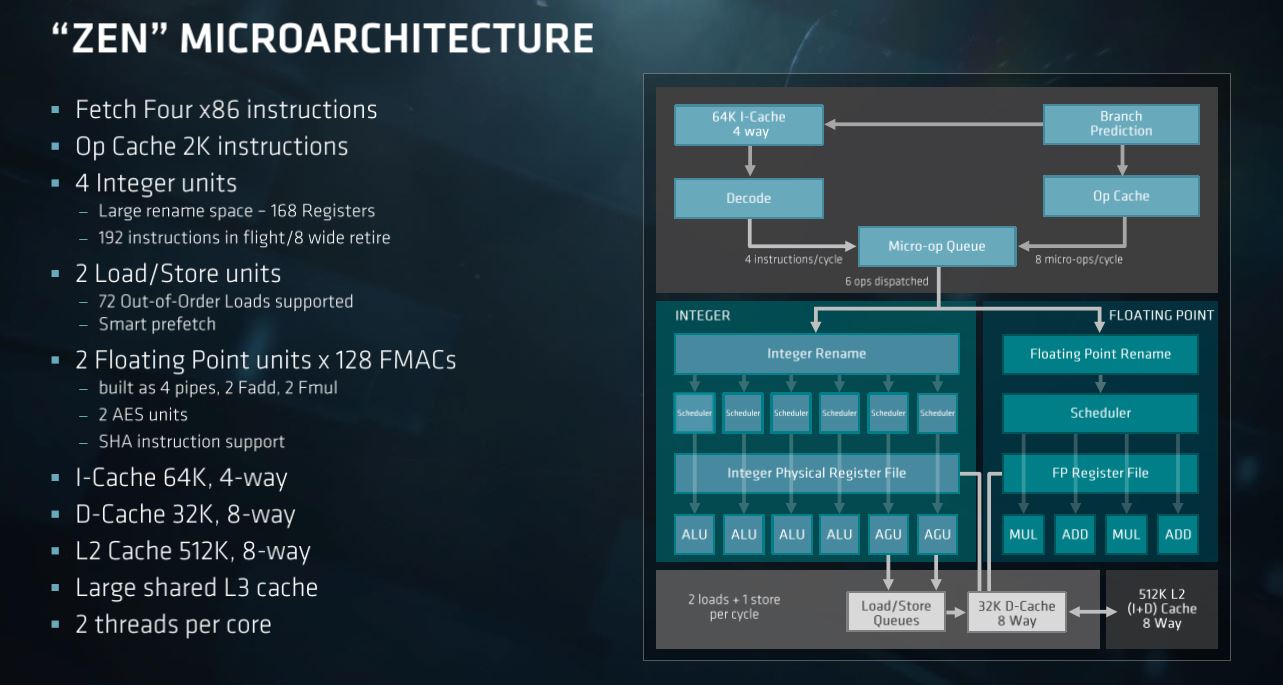

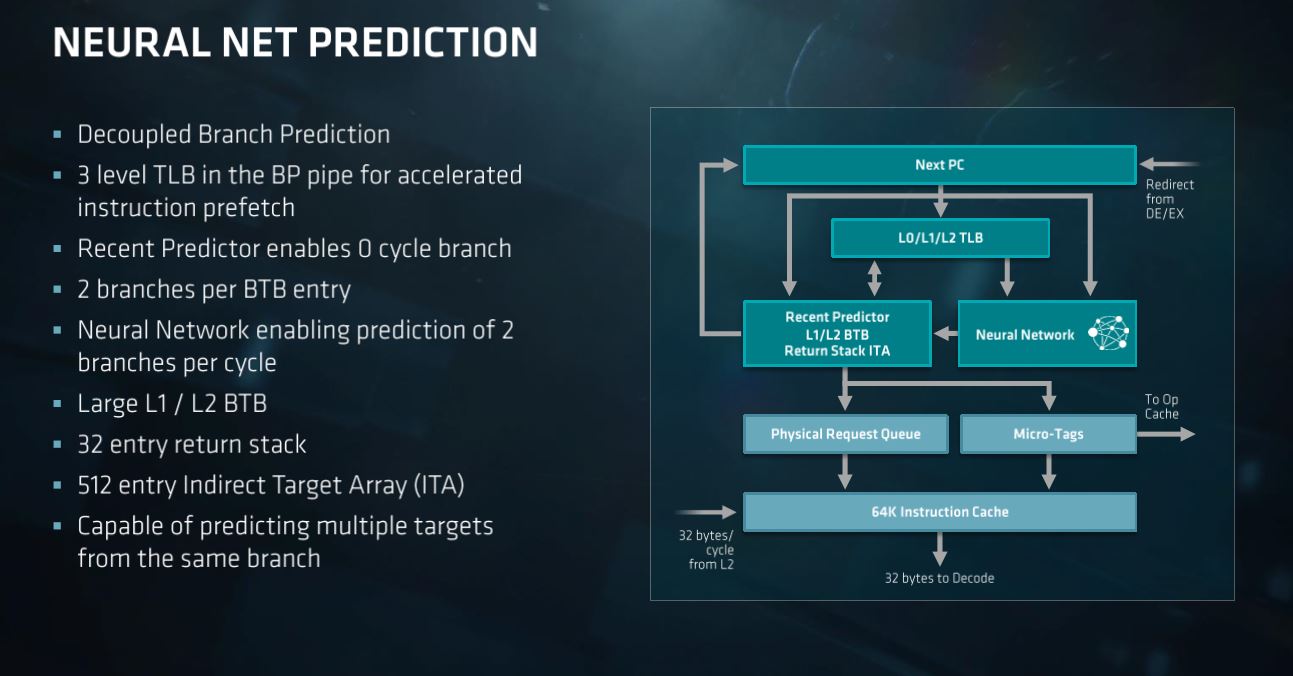

AMD also provided an in-depth briefing on the Zen microarchitecture, but there were very few new details compared to the initial announcement we covered at HotChips. Head over to that article for more detail.

The second slide is an exception--that direct comparison between Intel and AMD's products contains some new data.

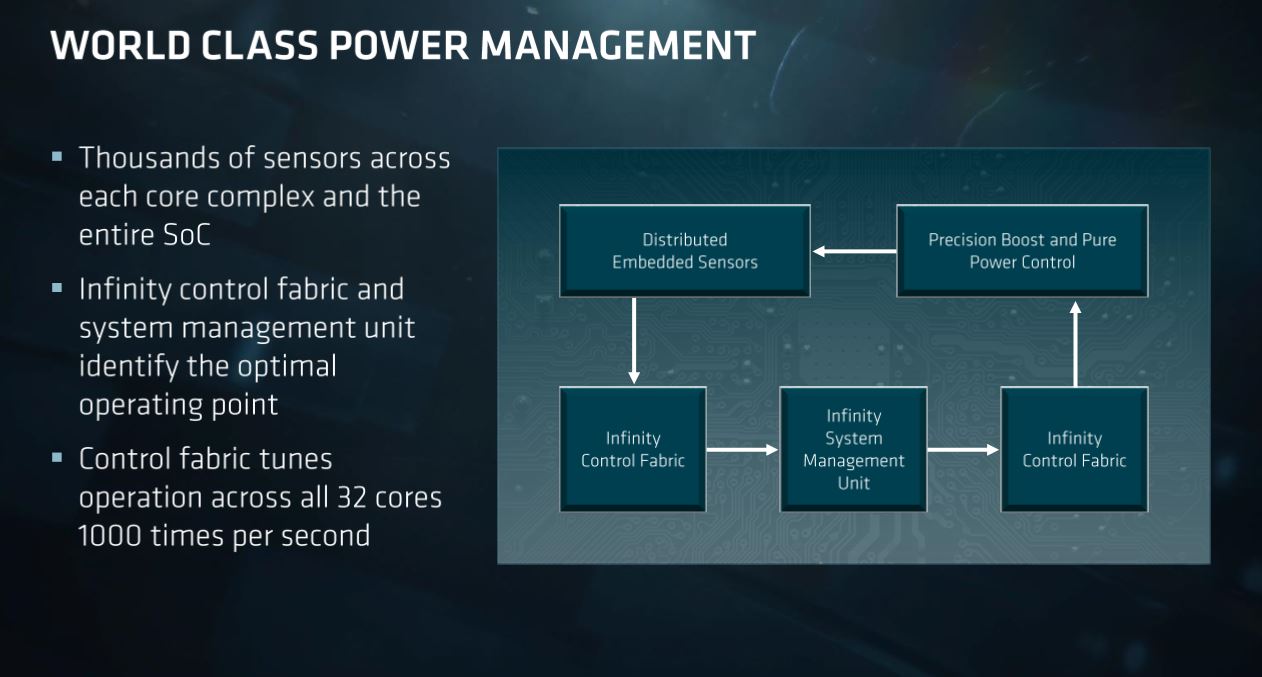

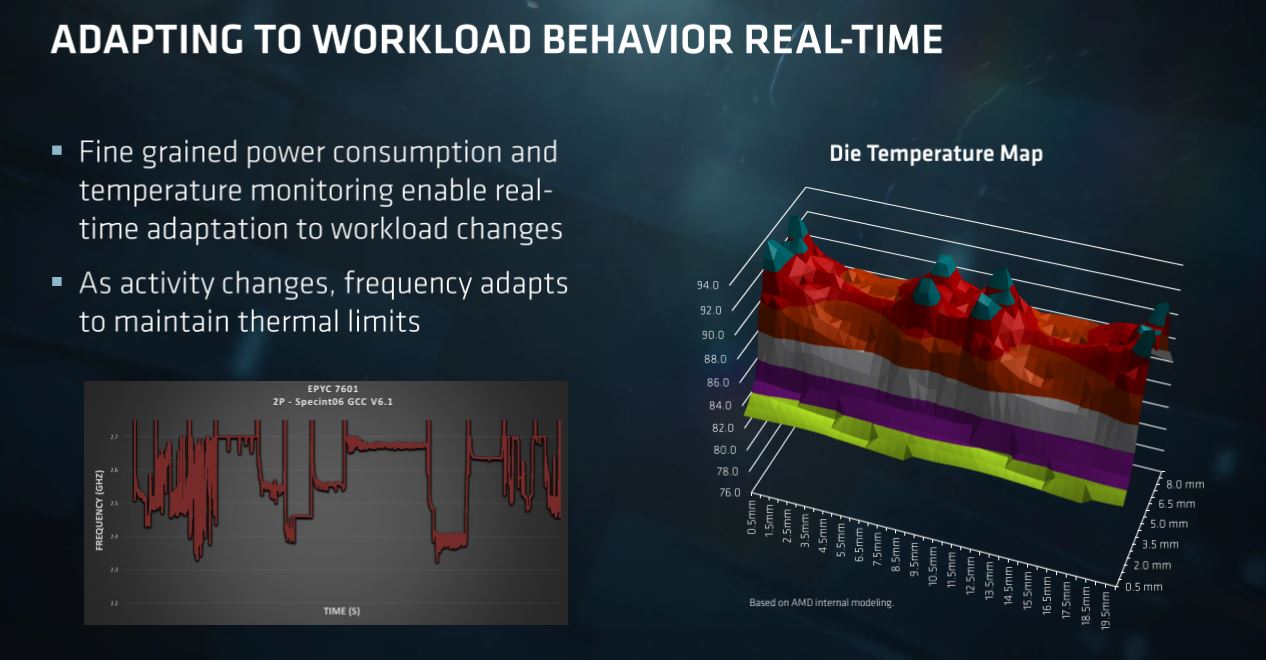

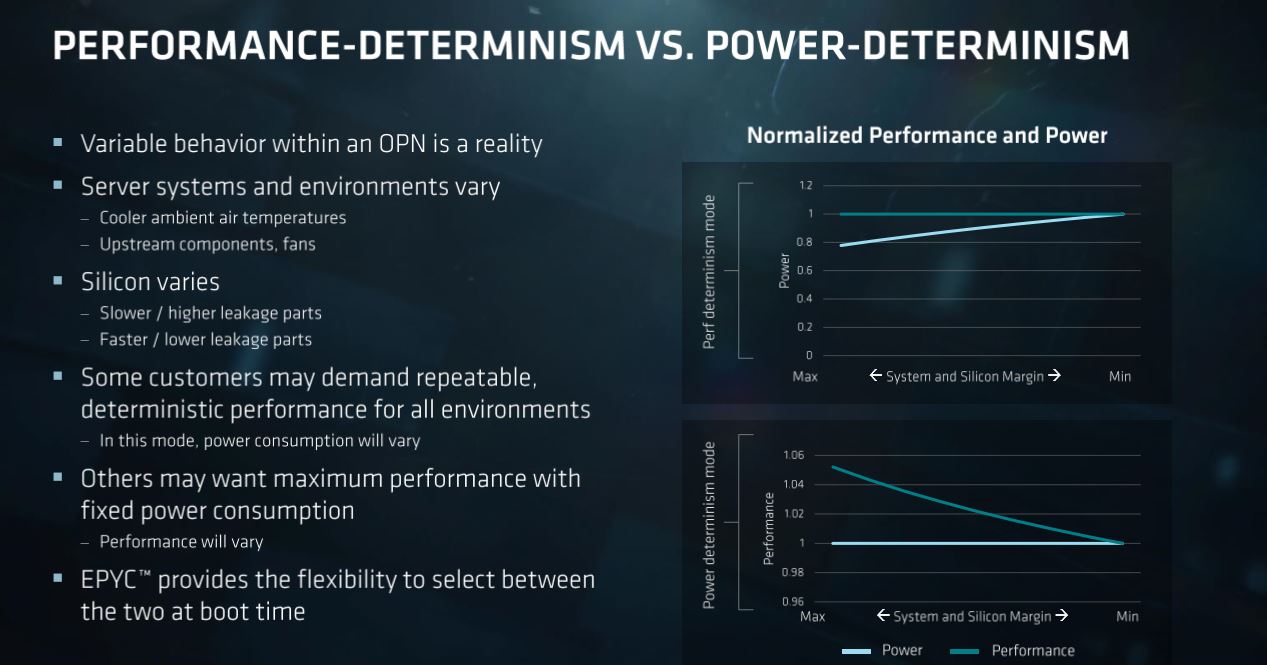

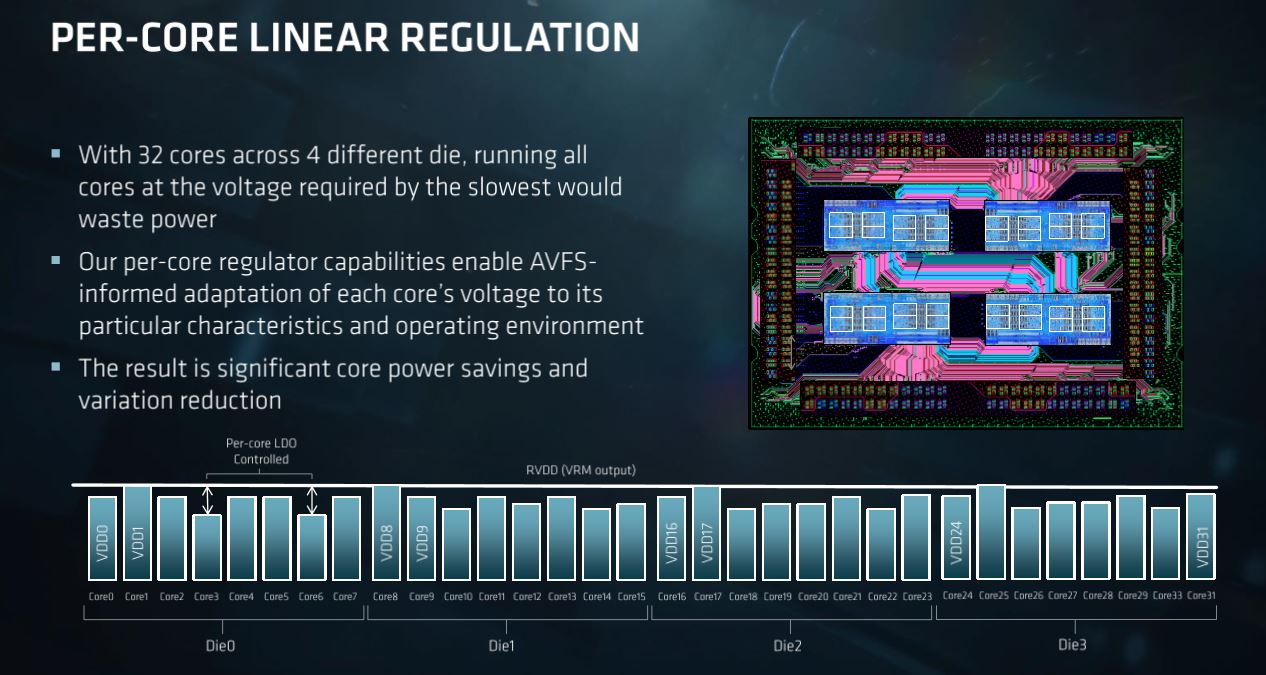

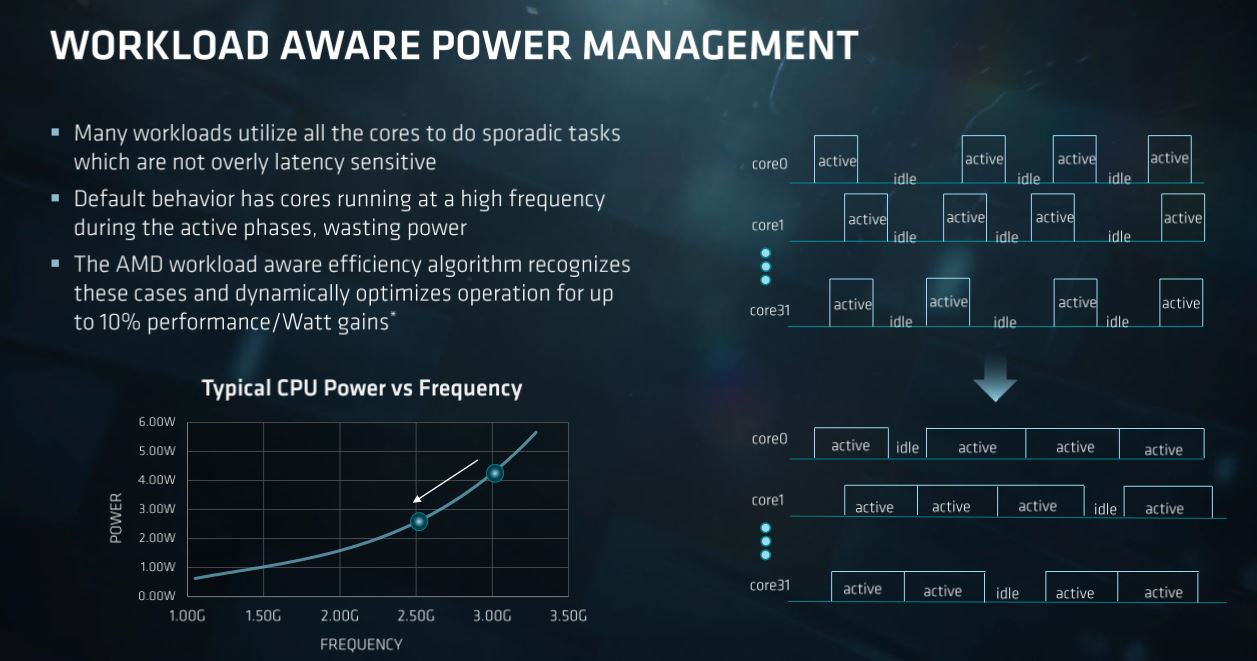

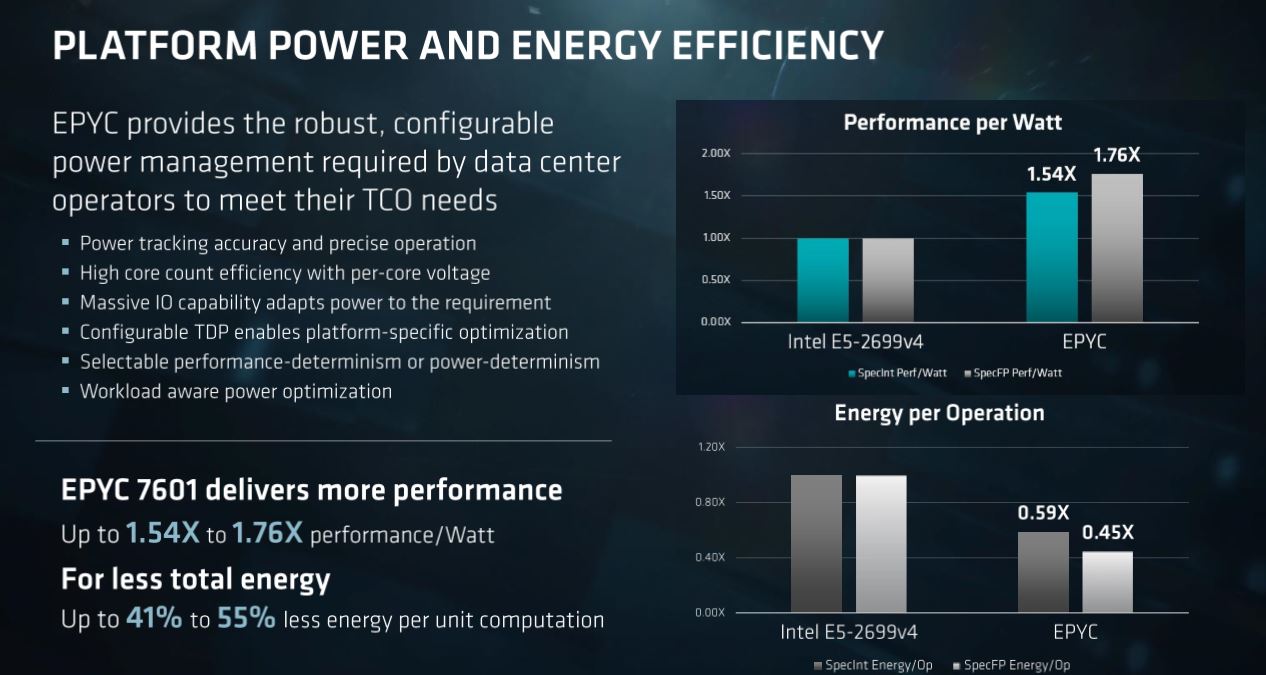

Performance is nice, but the power-to-performance ratio is king in the data center. Optimized platforms reduce long-term TCO by reducing long-term power consumption and cooling requirements. AMD has a novel set of features to manage EPYC's power consumption, including many of the same fine-grained technologies found in the SenseMI suite. AMD provided a few interesting comparisons to existing Broadwell-EP platforms, but as with all vendor-provided data, we have to take it with a grain of salt.

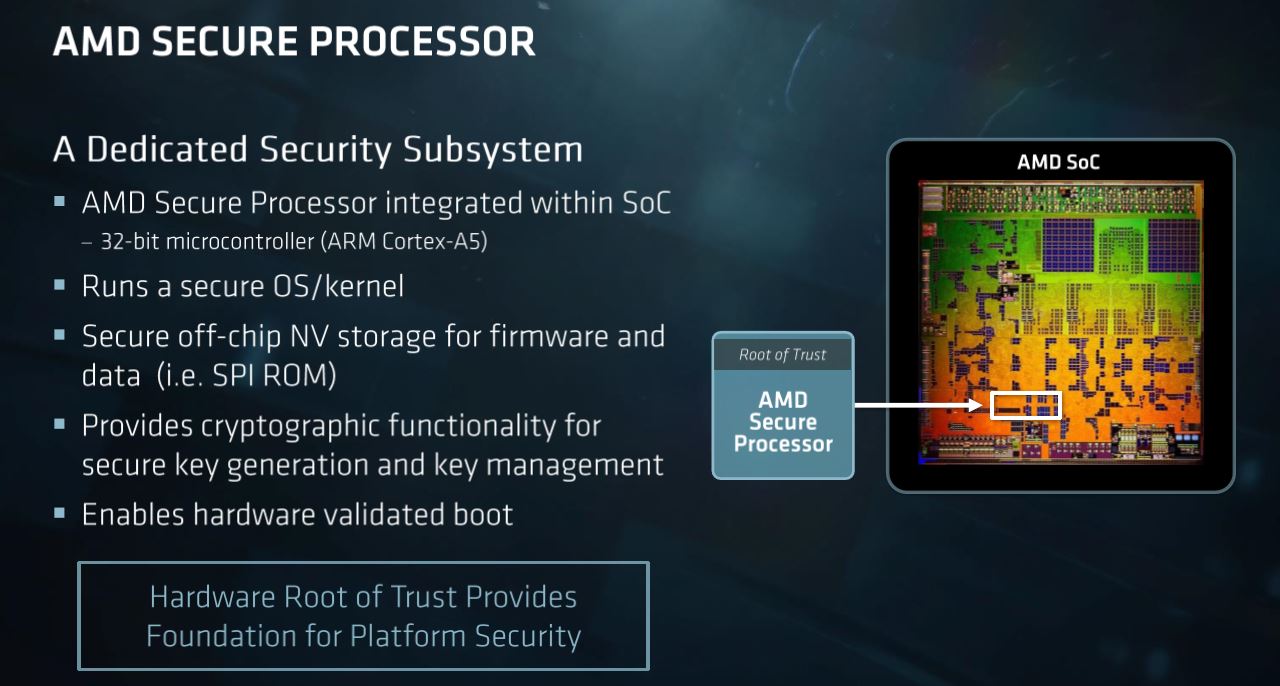

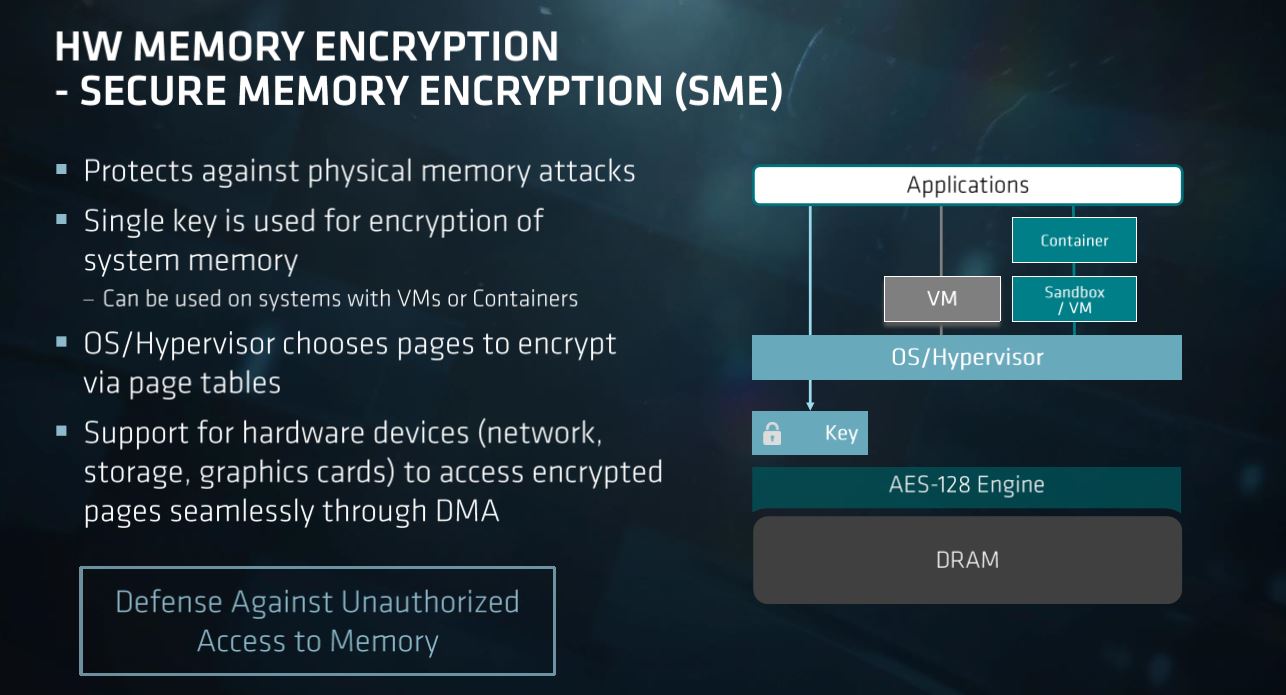

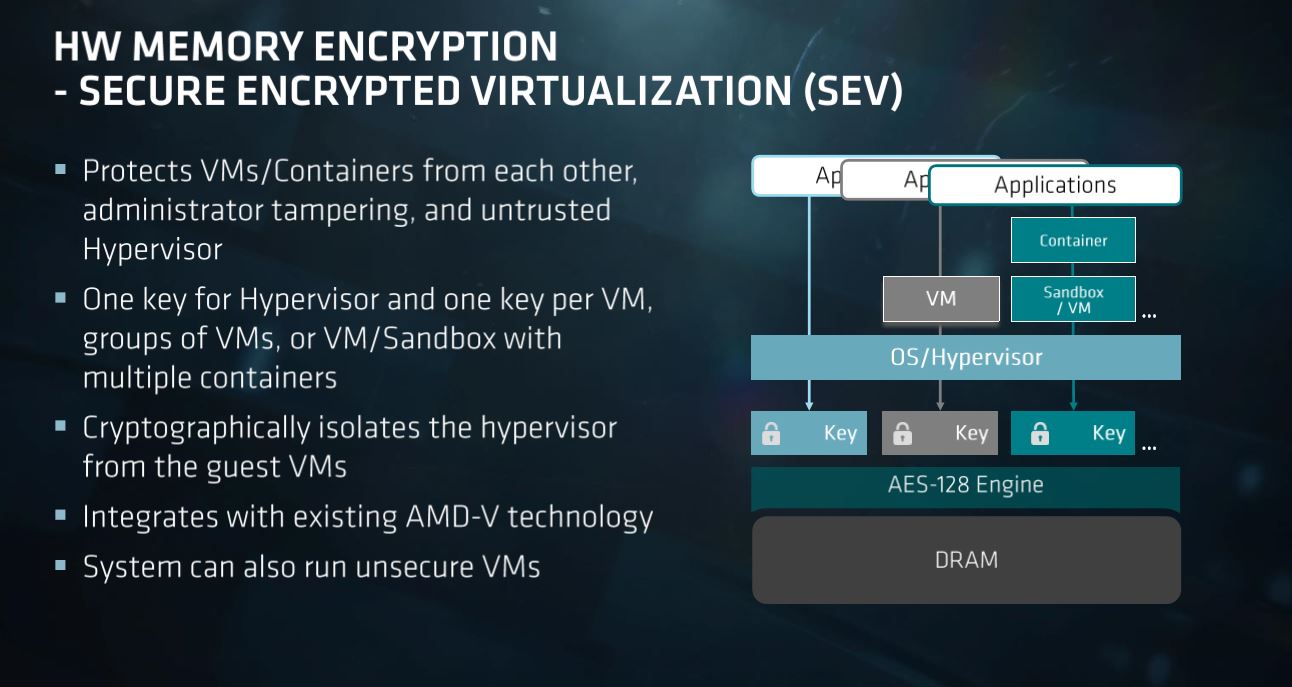

What use is all of the fancy technology if it isn't secure? Well...none. To that end, AMD has a robust set of security features that are all controlled by a sandboxed ARM processor on the SoC package. This separates the security apparatus from the host operating system/hypervisor and provides hardware-based memory encryption, which is useful in multi-tenancy environments, among other features.

AMD's re-entrance into the server market brings about understandable concerns about the ecosystem. At the end of the day, most will purchase systems from OEM providers, and administrators expect rock-solid support with enterprise-class applications. AMD's been hard at work on the enablement front and has amassed a solid set of launch partners for both hardware and software.

AMD has also developed a robust set of features that should further its objectives in the data center. The company noted that it designed the architecture from the ground up for data center workloads, which isn't a surprising admission. AMD's re-entrance into the server market will be a long process, which company representatives have repeatedly acknowledged, but it does look promising.

Four of the high-end SKUs, along with several OEM systems, are available today. The remainder of the product stack, and further expansion of OEM servers, comes in July.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

ClusT3R Just Amazing they double every single performance, lets wait for some benchmarks but it looks very promise on paper. -

Blas Right below the first slide that details the cores/threads, base clocks and boost frequencies, it reads:

"EPYC's base processor frequencies range from 2.1GHz to 2.2GHz, which is a relatively small frequency range compared to Intel's lineup. We see a slightly higher delta between the 2.9-3.2GHz maximum boost frequencies"

But the slide shows base clocks ranging from 2.0 to 2.4 GHz, and boost clocks ranging from 2.7 to 3.2 GHz. -

bit_user Nice article, Paul (and your Intel i9-7900X, as well... which I'm still reading)!

I think this:19842555 said:Good eye, fixed!

VMs that are larger than four cores will spread across multiple CCX, thus regularly encountering the increased latency of the Infinity Fabric. AMD disclosed bandwidth specifications for the various connections, including the CCX-to-CCX and socket-to-socket interfaces. The design includes three connections from each CCX to neighboring CCX, thus providing a direct one-hop connection between all four of the core complexes.

should be:

VMs that are larger than eight cores will spread across multiple dies, thus regularly encountering the increased latency of the Infinity Fabric. AMD disclosed bandwidth specifications for the various connections, including the die-to-die and socket-to-socket interfaces. The design includes three connections from each die to neighboring die, thus providing a direct one-hop connection between all four of the dies.

My understanding is that communication between the two CCX's on the same die traverses a crossbar that's not considered part of the Infinity Fabric. -

bit_user Intel has a COD (Cluster on Die) feature it employs in high-core count Xeons to bifurcate the processor's disparate ring buses into two separate NUMA domains. It's a novel approach that reduces latency and improves performance, and a similar technology would significantly benefit AMD.

It seems like it should be easy for AMD to enable each die to have its own address space and run independently of the others. You could certainly do this with a VM, but there'd have to be some hardware support to gain the full benefits from it. -

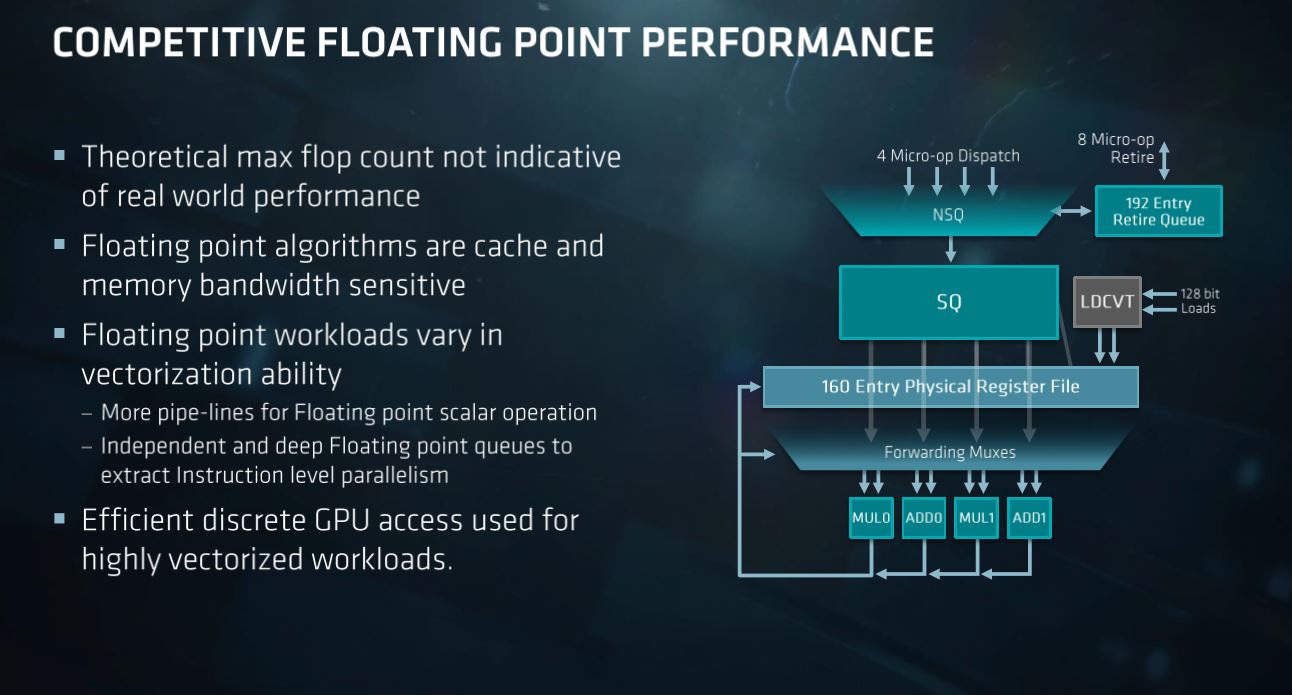

bit_user Looks like a lot of good stuff in there.

The 128-bit FPU sticks out as one of the biggest chinks in their armor. I take their point about using GPUs to fill the gap, but this comes across weak by comparison with Intel's inclusion of some AVX-512 instructions in Skylake-X.

If they' could've at least matched Broadwell's 3-cycle FMUL latency, that would've helped.

-

Paul Alcorn 19842843 said: Nice article, Paul (and your Intel i9-7900X, as well... which I'm still reading)!

I think this:19842555 said:Good eye, fixed!

VMs that are larger than four cores will spread across multiple CCX, thus regularly encountering the increased latency of the Infinity Fabric. AMD disclosed bandwidth specifications for the various connections, including the CCX-to-CCX and socket-to-socket interfaces. The design includes three connections from each CCX to neighboring CCX, thus providing a direct one-hop connection between all four of the core complexes.

should be:

VMs that are larger than eight cores will spread across multiple dies, thus regularly encountering the increased latency of the Infinity Fabric. AMD disclosed bandwidth specifications for the various connections, including the die-to-die and socket-to-socket interfaces. The design includes three connections from each die to neighboring die, thus providing a direct one-hop connection between all four of the dies.

My understanding is that communication between the two CCX's on the same die traverses a crossbar that's not considered part of the Infinity Fabric.

VMs that occupy more than four physical cores will spread across multiple CCX. The CCX are connected by the Infinity Fabric, which is a crossbar. AMD has indicated that the CCX-to-CCX connection is via Infinity Fabric. In fact, they claim the Infinity Fabric is end-to-end, even allowing next-gen protocols, such as CCIX and Gen-Z, to run right down to the CCX's. I did make a mistake on the last sentence, it is 'die,' thanks for catching that. It's been a long week :P

-

Puiucs

The reality is that GPUs are the ones that will provide that kind of raw power for servers. Not having AVX-512 is not that important (at least not yet). Server applications don't generally employ bleeding edge technology. AVX2 support should be more than enough for the majority of cases.19843215 said:Looks like a lot of good stuff in there.

The 128-bit FPU sticks out as one of the biggest chinks in their armor. I take their point about using GPUs to fill the gap, but this comes across weak by comparison with Intel's inclusion of some AVX-512 instructions in Skylake-X.

If they' could've at least matched Broadwell's 3-cycle FMUL latency, that would've helped.

At the moment, it's not price or performance that will keep AMD from being used in servers, but the fact that it's considered bleeding edge technology. It will have to prove that it's a stable platform with no bugs or other issues in the long run. Server admins should not have to worry about bios and driver updates. -

bit_user

Then it would be in the last two sentences.19844085 said:I did make a mistake on the last sentence, it is 'die,' thanks for catching that. It's been a long week :P