3D XPoint: A Guide To The Future Of Storage-Class Memory

Memory and storage collide with Intel and Micron's new, much-anticipated 3D XPoint technology, but the road has been long and winding. This is a comprehensive guide to its history, its performance, its promise and hype, its future, and its competition.

Is 3D XPoint Stagnating?

When Intel and Micron made the historic joint 3D XPoint announcement in July 2015, enthusiasm was high. The interim has been filled with speculation, largely because the companies still haven't revealed exactly what 3D XPoint is (although the IMFT co-CEO gave an indication in a public forum). Recently, the companies have also walked back many of the initial, aggressive performance and delivery claims.

On the delivery side, it does appear that we will see a robust ecosystem of 3D XPoint SSDs from third parties, much like the current SSD market, and we gather that Seagate and IBM will serve as Micron's leading partners. On the technology side, we've investigated every facet of the emerging 3D XPoint technology, shedding light on both the hidden hardware and the controller structure, and covering the timeline of its pending release.

We have also learned more during briefings and a recent conference marathon, specifically about performance (we can even compare Optane and QuantX performance), the inner workings of 3D XPoint, and the software ecosystem, so it’s time to revisit the topic with a broader view.

The Original Claims

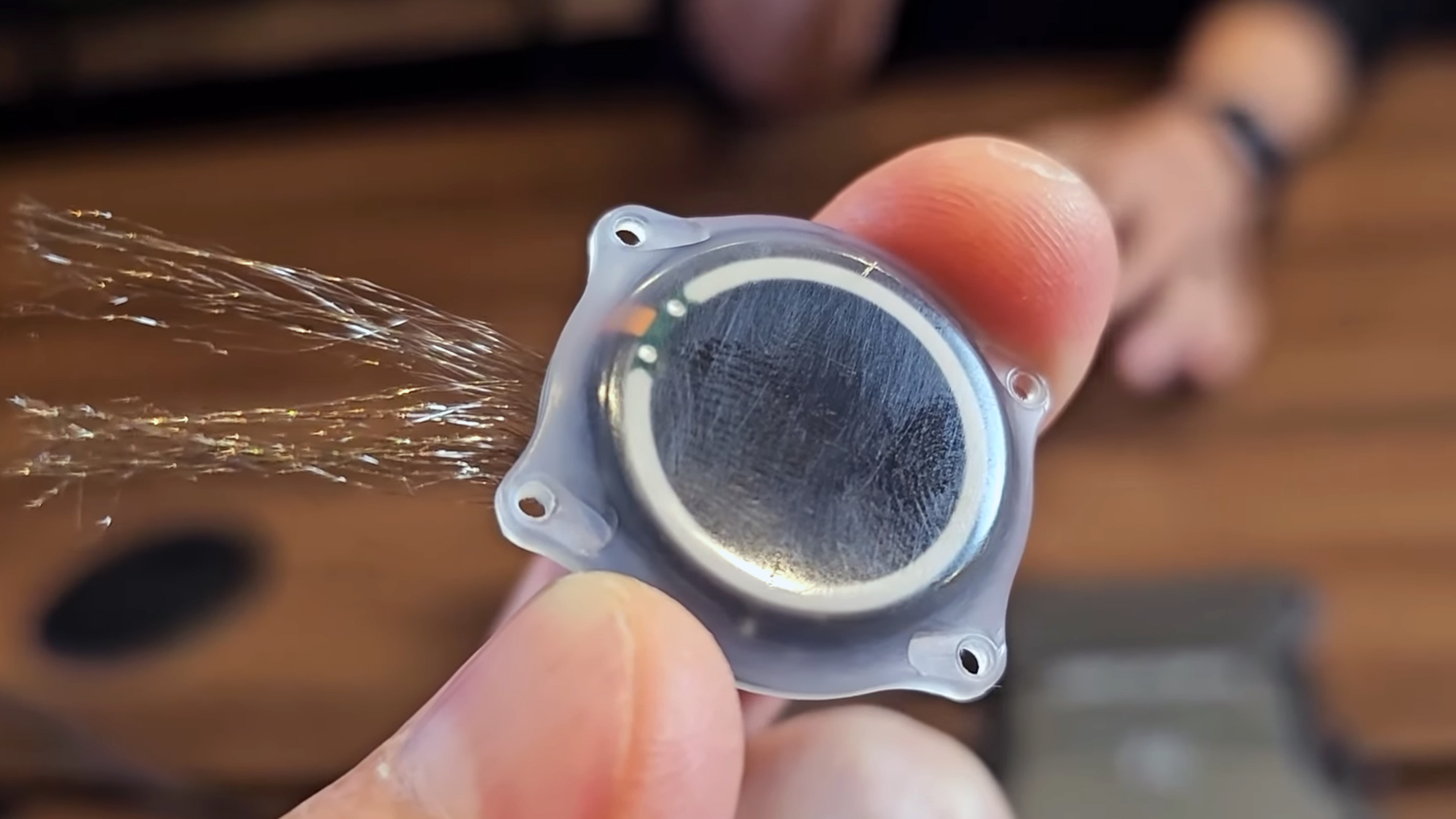

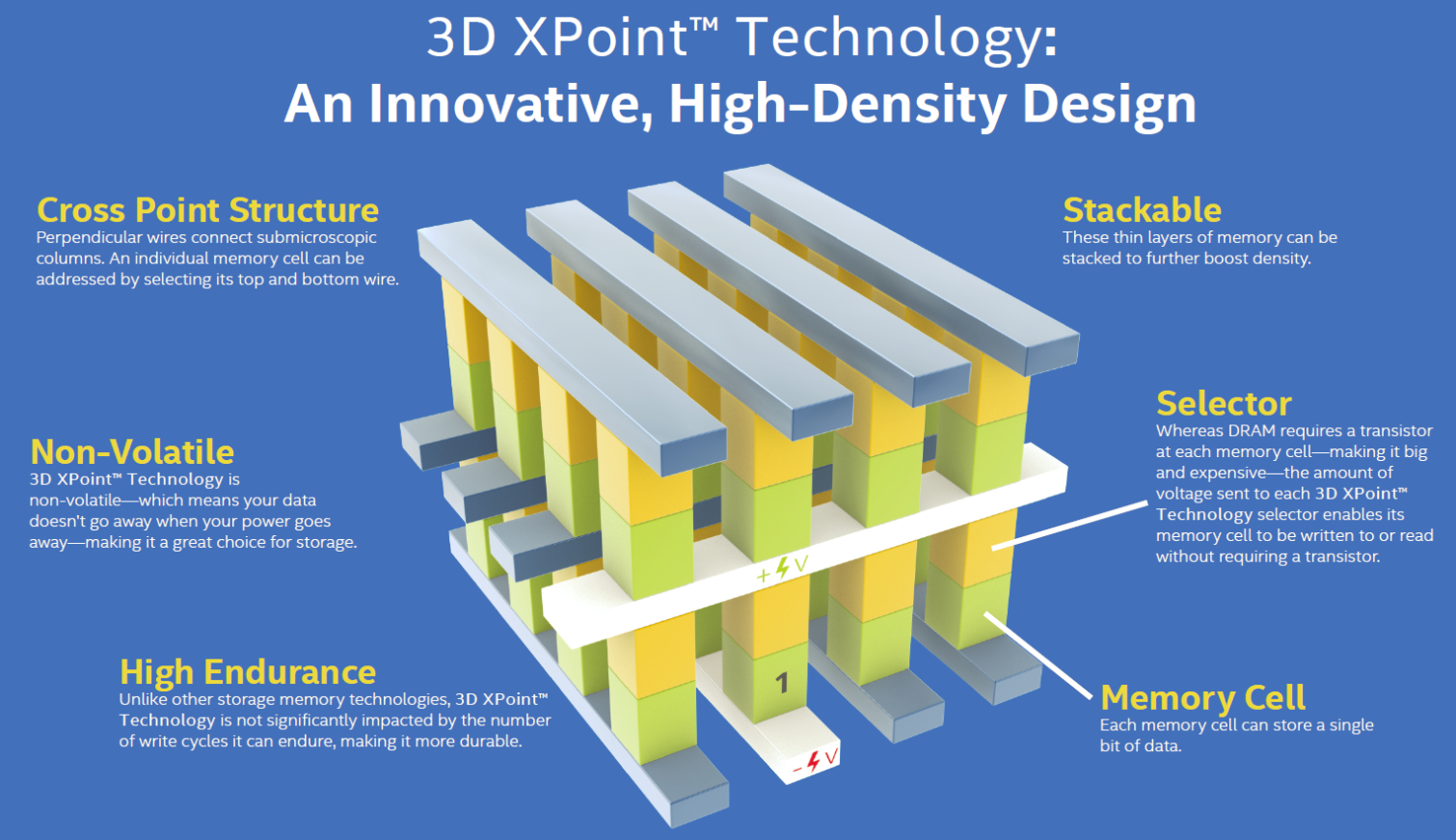

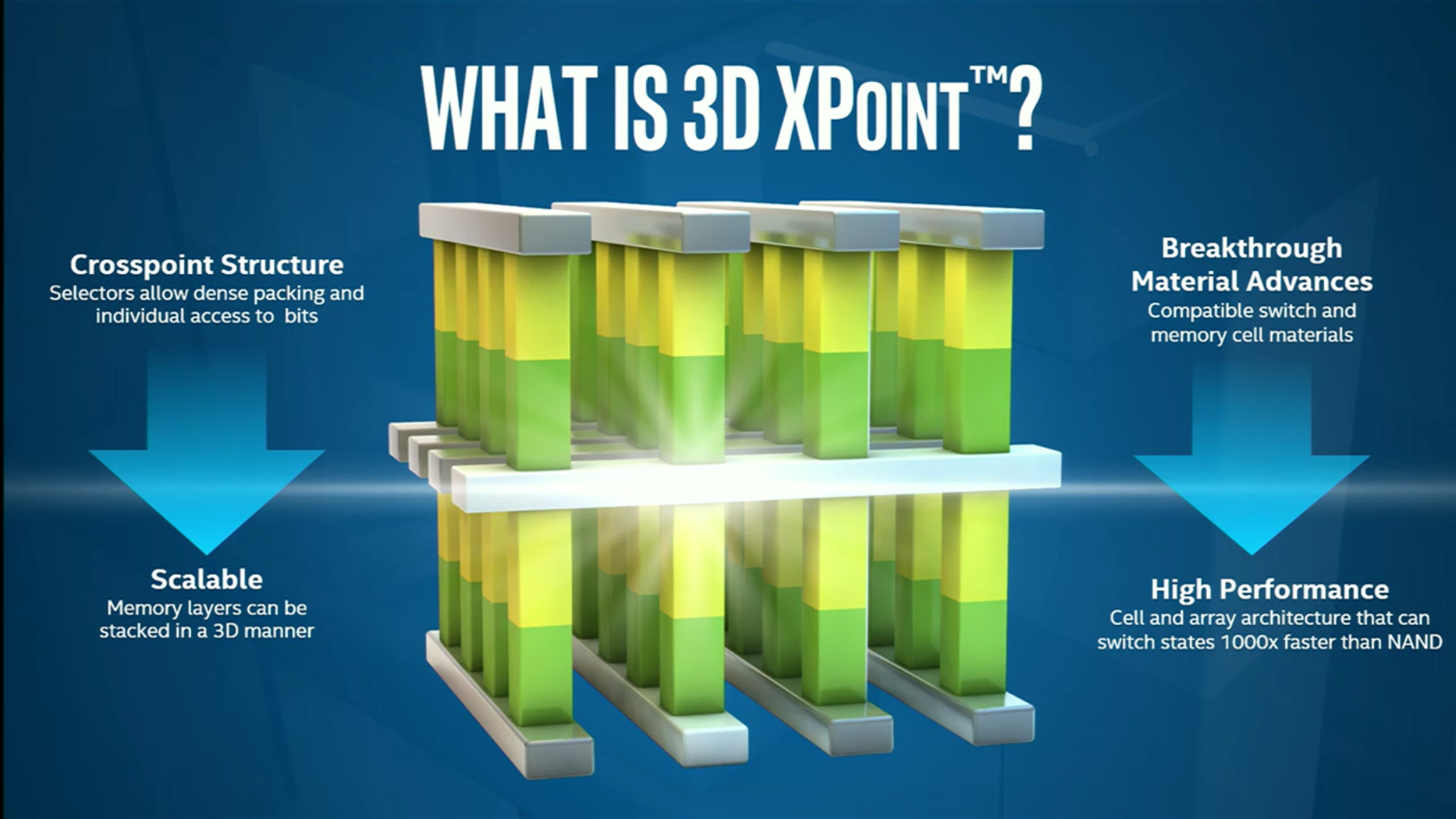

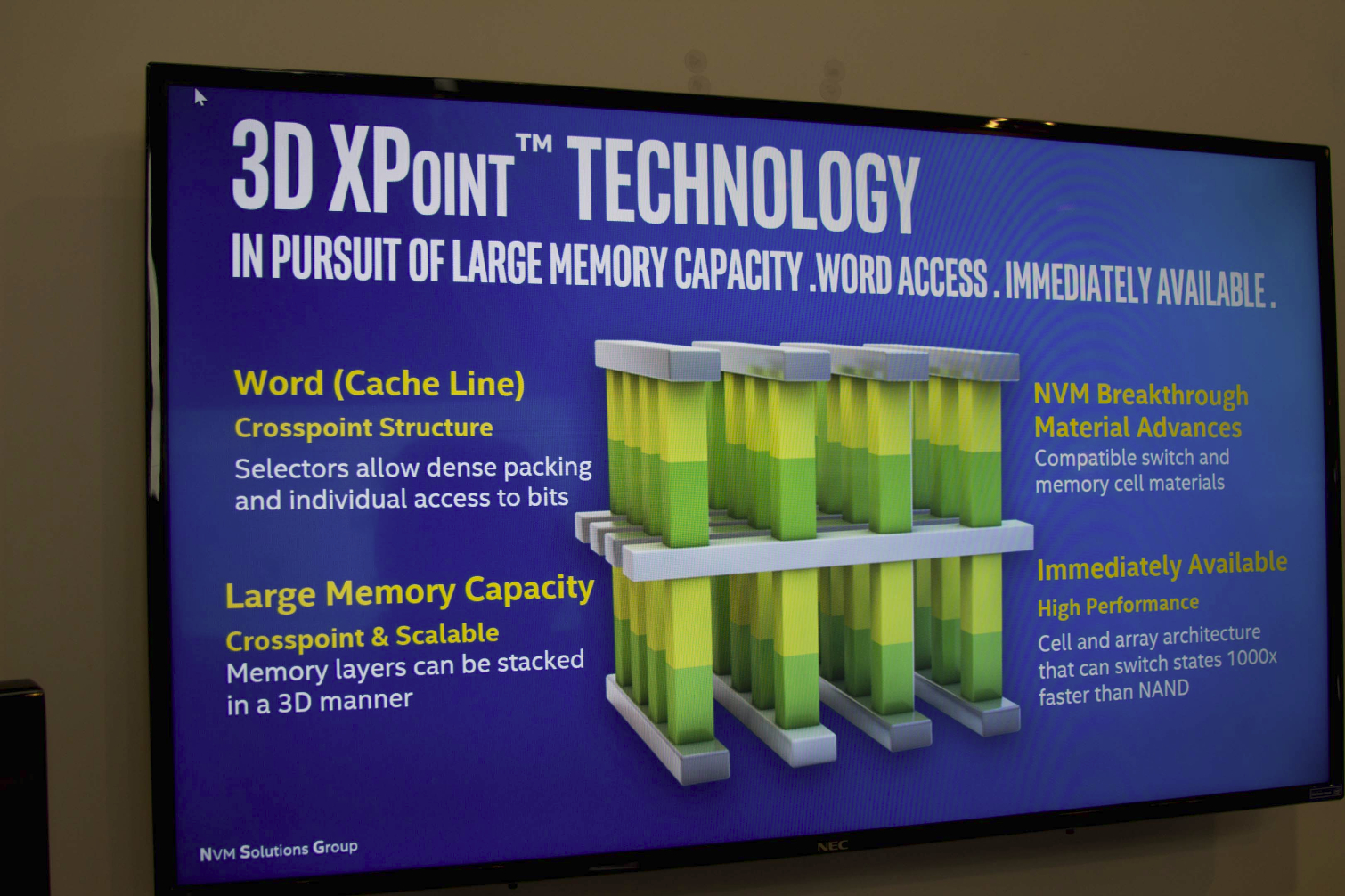

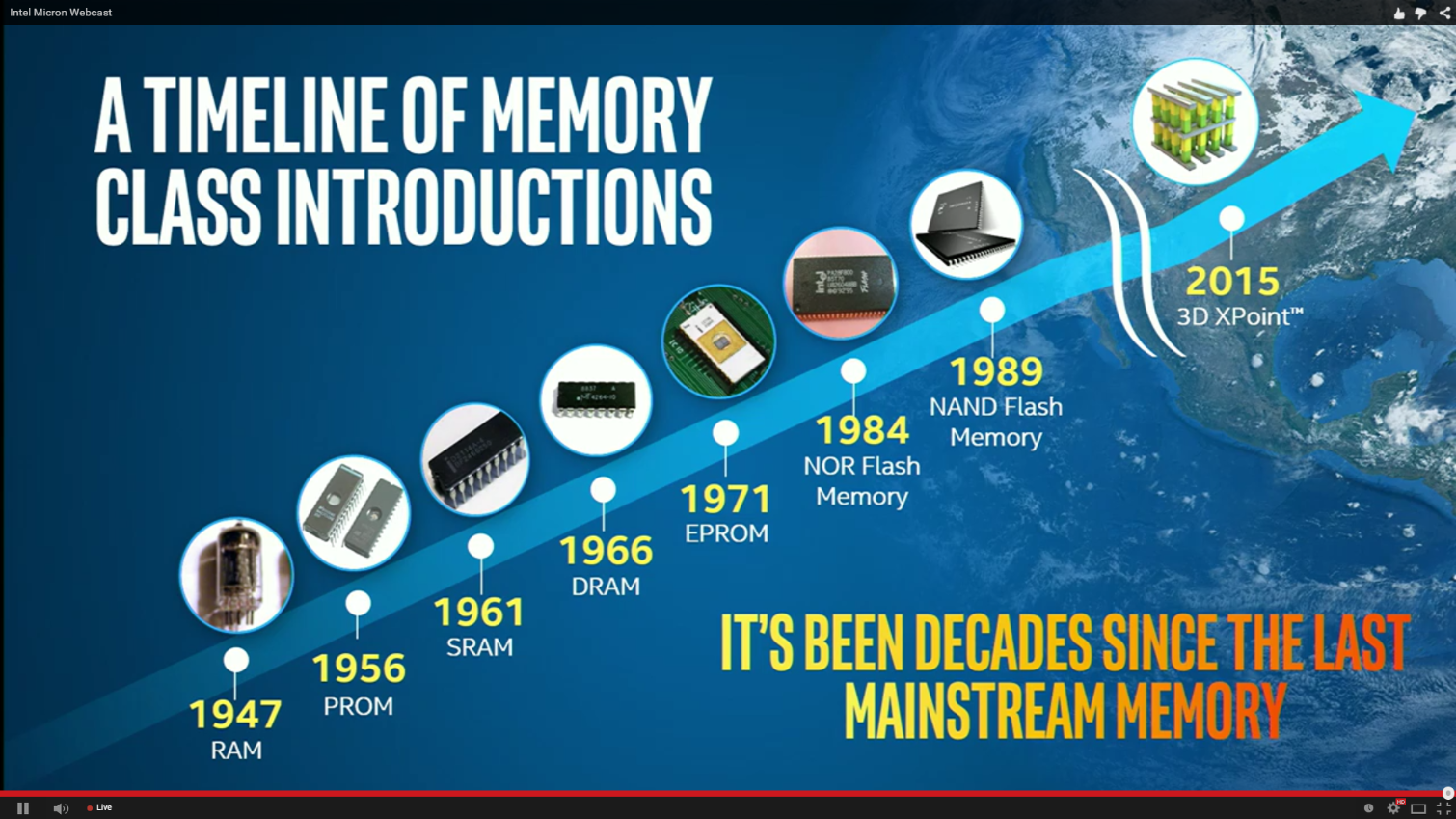

Intel and Micron developed 3D XPoint for more than a decade in a skunkworks-class project, and it spawned the world's first new memory since 1966. Because of 3D XPoint's persistence (it retains data after power is removed), it can serve in both memory and storage roles and is designed to bridge the gap between the two. As such, it also promises to be the first new productized storage medium since the introduction of NAND 25 years ago. In some ways, 3D XPoint highlights just how stagnant the computer industry has become.

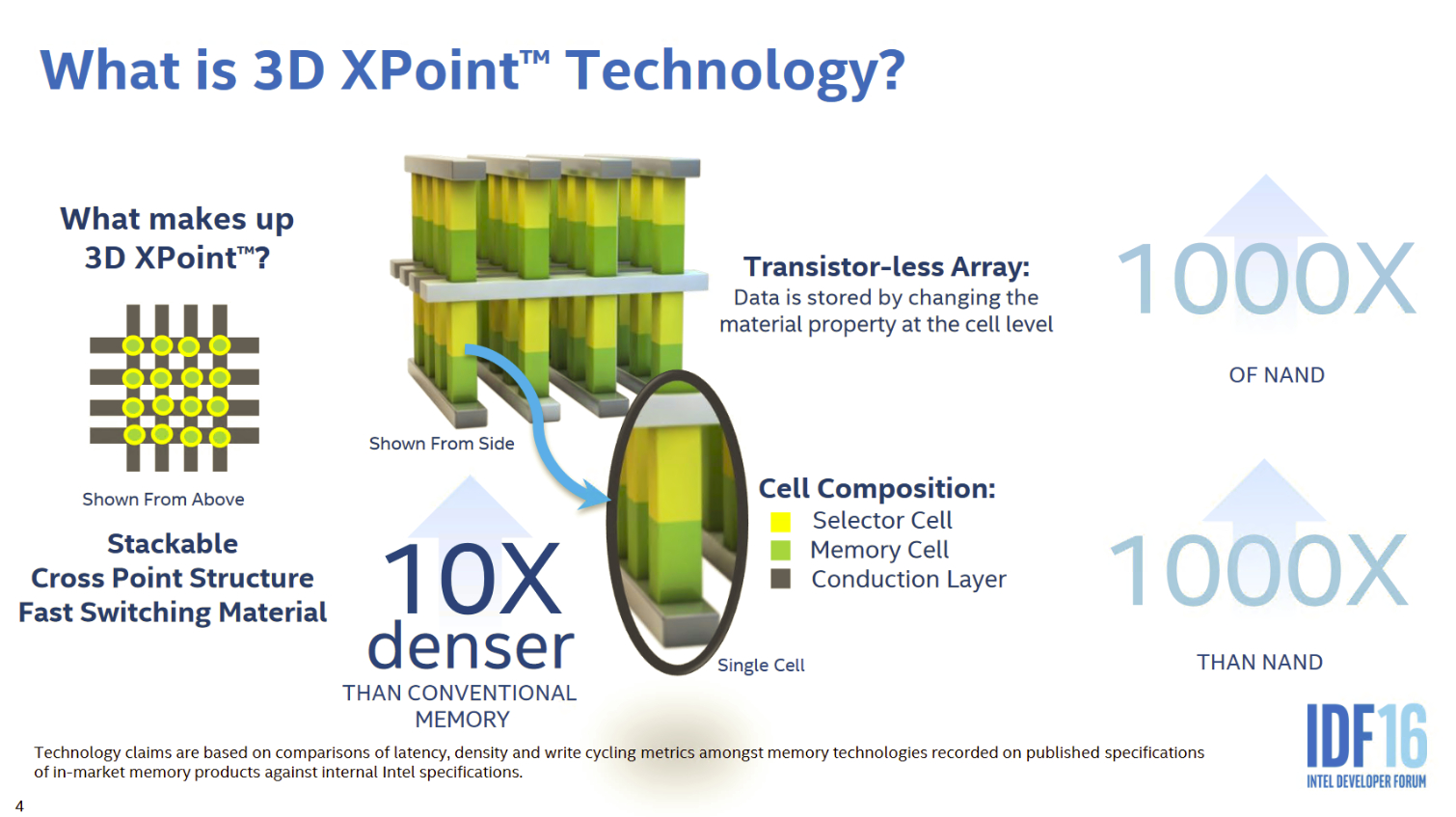

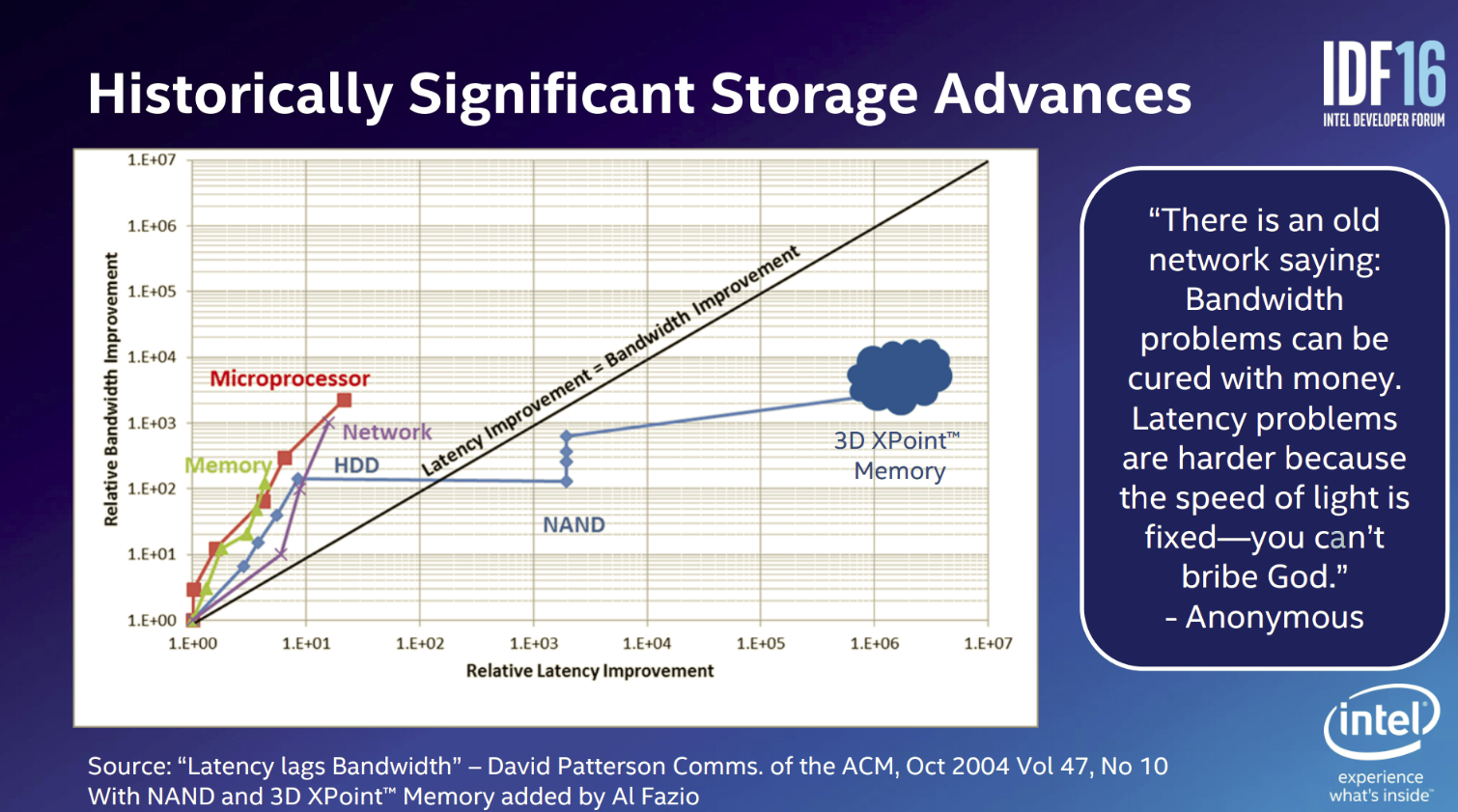

The IMFT (Intel/Micron Flash Technologies) venture, which began in 2006 and includes joint NAND production, laid out pretty bold performance claims during the original 3D XPoint launch. The companies repeated the "1000X faster and 1000X more endurance than NAND, 10X the density of DRAM" mantra relentlessly. As with all marketing wizardry, we have to take these projections with a grain of salt; we don't know what generation of NAND the companies are comparing 3D XPoint to, which blurs our ability to assess the true performance advantages.

Intel embarked on a traveling roadshow of ambiguous demonstrations with enough marketing hype to induce mental vertigo. The hits included a demonstration of 3D XPoint as a backup device, which is an unlikely use case due to its high price; the demo also placed the medium behind the Thunderbolt 3 interface, masking its true performance. Many speculated that perhaps 3D XPoint wasn't far enough along to provide a more impressive demonstration.

Intel answered with a Blender rendering benchmark during Computex 2016, but those impressive performance gains were accompanied by little technical detail. Again, skeptics speculated that the company was struggling to find real-world use cases that could benefit from the increased performance. This may be true, in part because of the restrictions imposed by existing interfaces, but strategic maneuvering is the more likely reason for the unenlightening demos: Intel simply may not want to show its hand to its competitors. Still, the company revealed far more information on the Blender benchmark, among others, at IDF 2016, and those details go a long way toward highlighting the actual advantages, which we will cover in the performance section.


Walking It Back

It appears the display of marketing prowess has bitten IMFT; unfortunately, the two companies recently began walking the performance claims back.

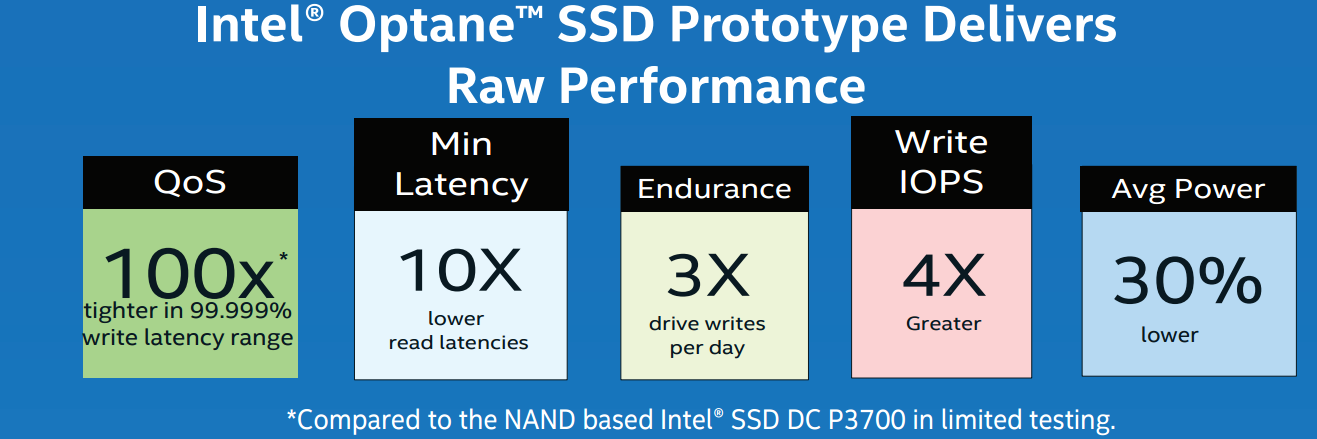

Although both companies share the same underlying 3D XPoint material, they will have unique designs and market the end devices under different names. Intel's products fall under the Optane branding, and Micron wields its QuantX (quantum leap) products. Intel began the long walk back to reality with revised specifications that indicate a mere 4X gain in random write performance and a mere 3X increase in endurance, both orders of magnitude lower than the initial projections.

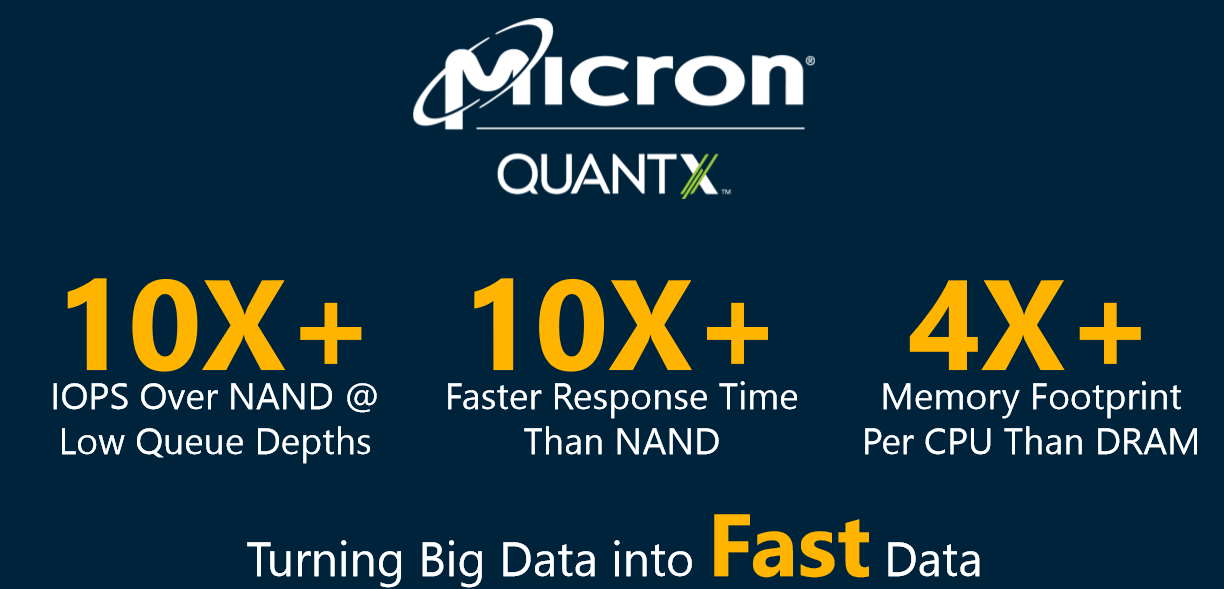

Micron also doused the 1000X fire with its QuantX claims of 10X IOPS (vs NAND), which includes the specific qualifier that the advantage only occurs at low queue depths (QD). Performance during light-QD workloads is, by far, the most important metric, but it’s clear that 3D XPoint has lost some of its zing as IMFT hammers it into products that need to conform to existing infrastructure limitations. The 10X+ improved response time stands out as a key improvement, and it hints at the radically lower latency that is possible with the new devices.

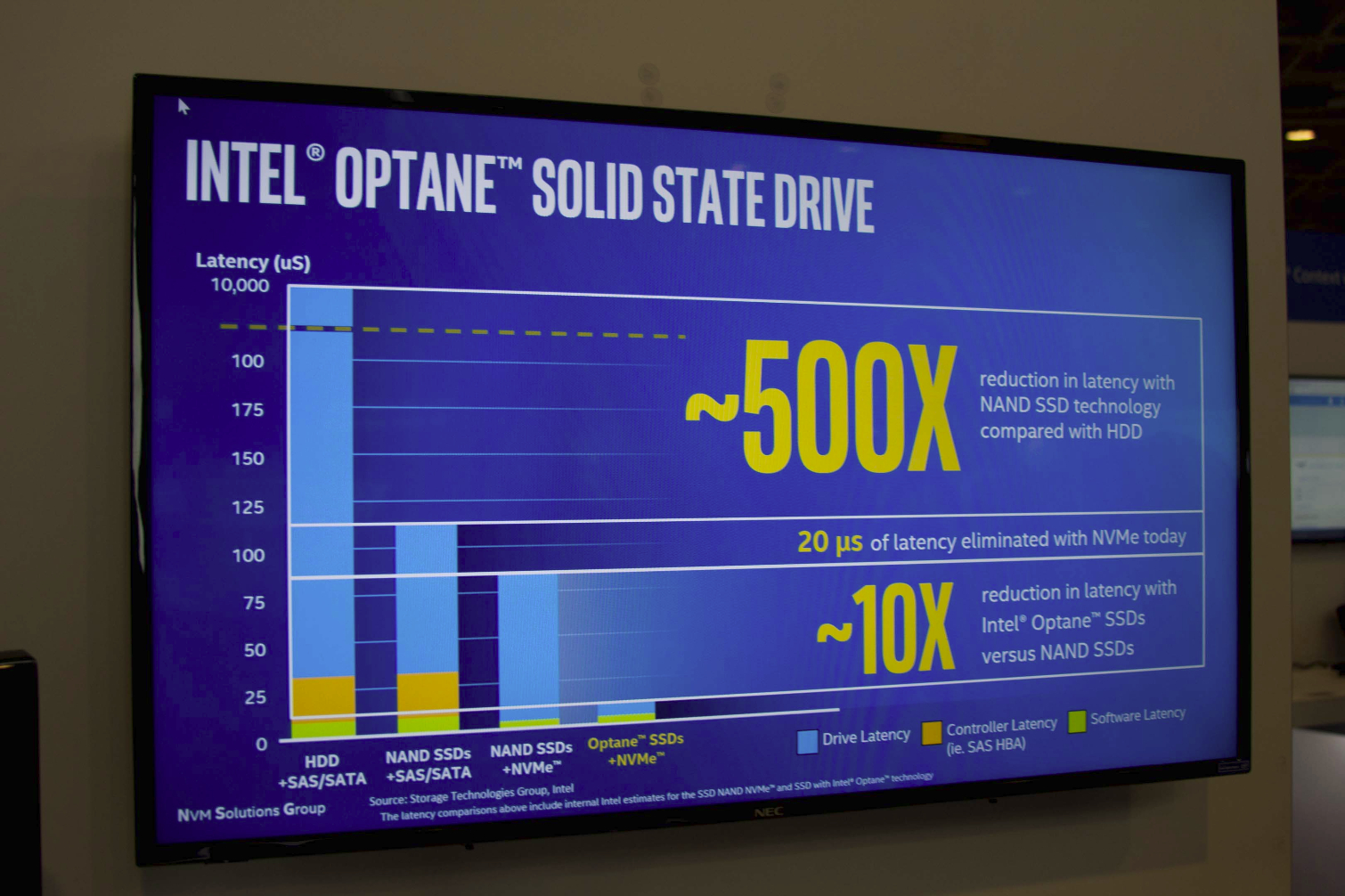

As expected, the press roundly roasted both companies for the large disparities in performance and endurance projections. IMFT responded by saying that it based the original projections on the speed of the raw media, and not the speed of the end products. This does make technical sense, because the system incurs a crushing overhead on anything related to storage. We will cover this on the following pages. The revised specifications extinguished plenty of the enthusiasm, but 3D XPoint delivers many tangible advantages beyond just top-end performance numbers. We will dive into this as well.
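A back-of-the-envelope model shows why a raw-media advantage shrinks once the media sits inside a finished product. The sketch below is only an illustration: every latency in it is an assumed, round number chosen for the arithmetic, not a figure from Intel or Micron, and the function name is hypothetical.

```python
def end_to_end_speedup(old_media_us: float, new_media_us: float, overhead_us: float) -> float:
    """Host-visible speedup when both devices pay the same fixed overhead per I/O."""
    return (old_media_us + overhead_us) / (new_media_us + overhead_us)


# All three latencies are assumptions picked for illustration only.
NAND_MEDIA_US = 100.0    # hypothetical raw NAND access time
XPOINT_MEDIA_US = 0.1    # hypothetical raw 3D XPoint access time (1000X faster)
OVERHEAD_US = 10.0       # hypothetical controller + interface + software cost

raw_speedup = NAND_MEDIA_US / XPOINT_MEDIA_US
observed_speedup = end_to_end_speedup(NAND_MEDIA_US, XPOINT_MEDIA_US, OVERHEAD_US)

print(f"raw media speedup:   {raw_speedup:.0f}X")
print(f"end-product speedup: {observed_speedup:.1f}X")
```

With these assumed inputs, a 1000X raw advantage collapses to roughly 11X at the host, because the fixed overhead dominates once the media itself becomes fast. The real ratio depends on the actual media and overhead latencies, which the companies have not published.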

IMFT explained the relatively disappointing performance specifications coherently and factually, but the endurance specifications are another matter entirely. The performance takes a hit due to system overhead, but the companies have yet to explain, beyond ECC tuning, why the endurance specifications have taken such a drastic plunge. The disparity appears to be larger than just ECC concerns.

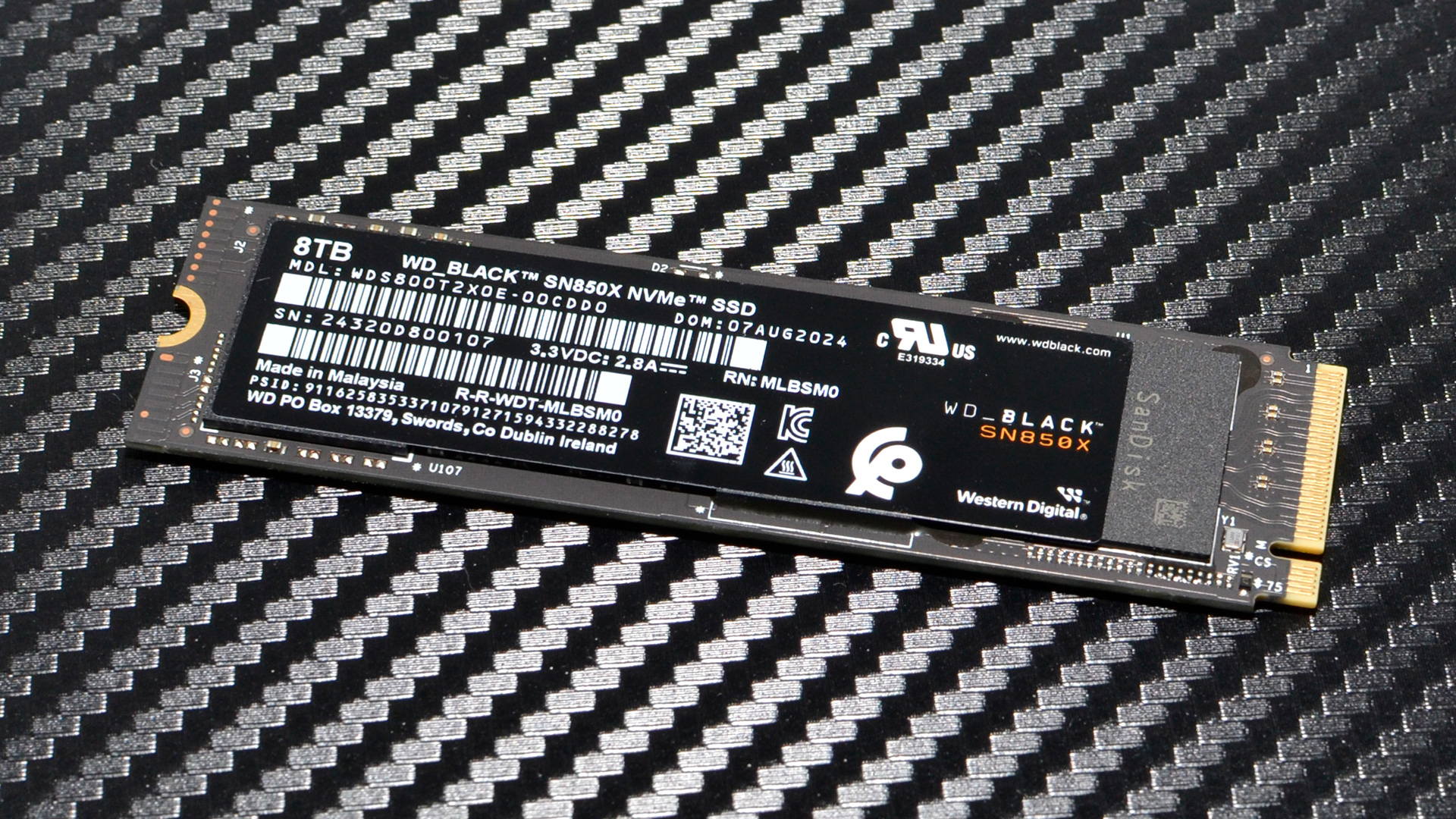

The key ingredient to the 3D XPoint recipe is persistence, so if the technology eventually supplants or complements volatile DRAM, it has the capability to alter computing at all levels of the stack. However, like NAND, 3D XPoint doesn't retain data forever, and IMFT has not revealed just how long it can retain data without power. Data retention is an important indicator of the overall endurance of any persistent medium; for instance, high-endurance SSDs designed for intense data center workloads only have a retention of three months, while light-endurance client SSDs can retain data for one year.

3D XPoint's endurance is a key factor in determining the target use cases. Micron indicated that QuantX offers 25 DWPD (Drive Writes Per Day) for storage applications, which triples the rating of most existing mid-range enterprise SSDs, but there are high-endurance NAND-based SSDs that provide similar endurance. Also, if the retention is only specified for three months, that implies an even lower endurance threshold for client-focused products.

However, the vendors are also pouring 3D XPoint into the DIMM mold for memory usage, which will require orders of magnitude more endurance than storage does. The drastically reduced endurance specification presents serious concerns, but we'll dive into that a bit later.
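To put the 25 DWPD storage rating in perspective, a DWPD figure converts to total lifetime writes once a capacity and a rating period are fixed. The sketch below uses the 25 DWPD figure quoted above; the 1TB capacity and five-year period are assumptions for illustration (neither has been announced), and the mid-range value is simply the one-third implied by "triples."

```python
def lifetime_writes_tb(dwpd: float, capacity_tb: float, years: float) -> float:
    """Total data written (in TB) if the drive is filled `dwpd` times a day for `years`."""
    return dwpd * capacity_tb * 365 * years


QUANTX_DWPD = 25.0                 # Micron's stated QuantX rating
MIDRANGE_DWPD = QUANTX_DWPD / 3    # implied by "triples the rating"; not a quoted spec
CAPACITY_TB = 1.0                  # assumed capacity, for illustration only
RATING_YEARS = 5.0                 # assumed rating period, for illustration only

quantx_tb = lifetime_writes_tb(QUANTX_DWPD, CAPACITY_TB, RATING_YEARS)
midrange_tb = lifetime_writes_tb(MIDRANGE_DWPD, CAPACITY_TB, RATING_YEARS)

print(f"QuantX at 25 DWPD:  {quantx_tb / 1000:.1f} PB written")
print(f"Mid-range NAND SSD: {midrange_tb / 1000:.1f} PB written")
```

Under those assumptions, 25 DWPD works out to about 45.6PB over the rating period. That is a healthy number for a storage device, but a DIMM sitting on the memory bus can absorb writes at a far higher rate than any drive workload, which is why the memory role demands so much more endurance.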

The Timeline

The initial joint 3D XPoint announcement pegged mass production in "12 to 18 months," with samples shipping in 2015. The effort, then, is nearly a year behind that schedule. We do know that Intel and Facebook are working together closely, but Facebook is the only publicly acknowledged company to have Optane samples. Micron has since pushed back its QuantX release until 2Q2017. Intel remains committed to shipping Optane samples this year.

At IDF 2016, Intel announced that it is providing cloud-based access to service providers, which implies there will not be broad sample availability this year. The cloud-based access to 3D XPoint likely indicates that Intel is keeping a tight rein on samples to prevent its competitors from examining the technology. Intel's CEO later announced that the company is qualifying samples this quarter and shipping thousands of samples, so it may achieve its revised 2016 goals.

Intel's CEO also noted that 3D XPoint would come to the second-generation Xeon Purley platform, which also signifies a delay, because Intel originally slated the technology for release in the first-generation Purley product time frame. However, the statement almost certainly refers to on-package or on-die implementations, because the CEO mentioned its inclusion along with other on-package technologies. Contrary to widespread speculation, Optane SSDs and/or DIMMs could still debut with the first-gen Purley platform, which fits within Intel's proposed timeline.

Recent document leaks indicate that Intel plans to ship both SSDs and memory products in late 4Q2016. Leaked benchmarks of the Stony Beach Intel Optane Memory product emerged, but the press has largely misinterpreted the test results. Many have ignored the distinction between the Stony Beach Memory and Mansion Beach Optane SSDs, which are two separate products on Intel's roadmap, and mistakenly compared the specifications of the memory products to existing SSDs.

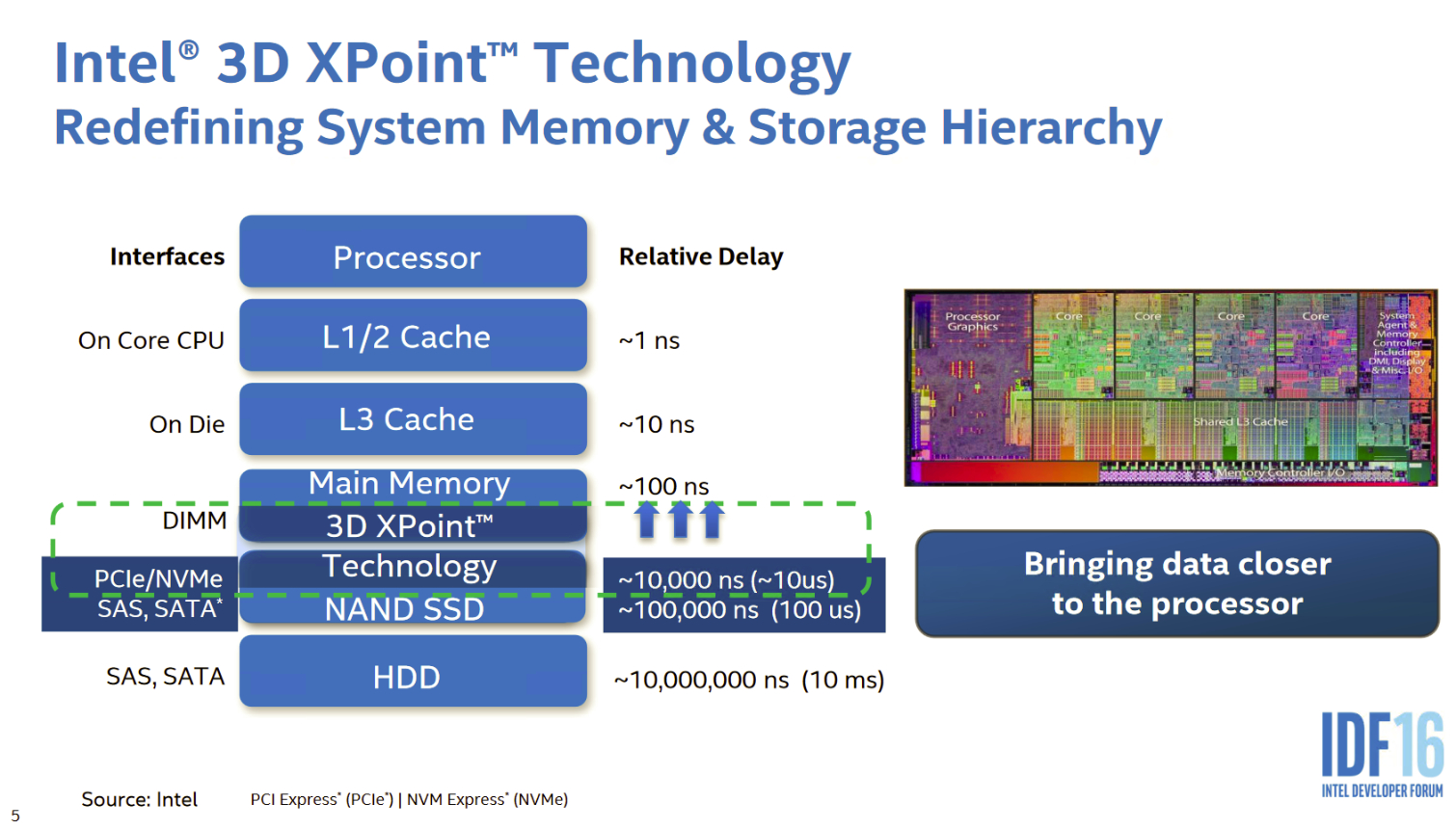

3D XPoint provides near-DDR2 speeds, which moves storage from the millisecond range into the hundreds-of-nanoseconds realm and exposes significant hardware and software bottlenecks on existing platforms. Using 3D XPoint as memory will also require significant modifications to the existing DDR4 spec, but other industry forces are aligning against the use of proprietary interconnects.

IMFT will have to jump numerous hurdles, like the economics associated with production and sales, along with hardware, firmware, controller, and software support for a totally new type of memory. Let's take a closer look at how Intel and Micron are bringing the products to market, and how they plan to address the other key requirements.


Paul Alcorn is the Managing Editor: News and Emerging Tech for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

coolitic the 3 months for data center and 1 year for consumer nand is an old statistic, and even then it's supposed to apply to drives that have surpassed their endurance rating.

PaulAlcorn 18916331 said: the 3 months for data center and 1 year for consumer nand is an old statistic, and even then it's supposed to apply to drives that have surpassed their endurance rating.

Yes, that is data retention after the endurance rating is expired, and it is also contingent upon the temperature that the SSD was used at, and the temp during the power-off storage window (40C enterprise, 30C Client). These are the basic rules by which retention is measured (the definition of SSD data retention, as it were), but admittedly, most readers will not know the nitty gritty details.

However, I was unaware that the JEDEC specification for data retention has changed. Do you have a source for the new JEDEC specification?

-

stairmand Replacing RAM with a permanent storage would simply revolutionise computing. No more loading an OS, no more booting, no loading data, instant searches of your entire PC for any type of data, no paging. Could easily be the biggest advance in 30 years.

InvalidError 18917236 said: Replacing RAM with a permanent storage would simply revolutionise computing. No more loading an OS, no more booting, no loading data

You don't need X-point to do that: since Windows 95 and ATX, you can simply put your PC in Standby. I haven't had to reboot my PC more often than every couple of months for updates in ~20 years.

-

Kewlx25 18918642 said: You don't need X-point to do that: since Windows 95 and ATX, you can simply put your PC in Standby. I haven't had to reboot my PC more often than every couple of months for updates in ~20 years.

Remove your harddrive and let me know how that goes. The notion of "loading" is a concept of reading from your HD into your memory and initializing a program. So goodbye to all forms of "loading".

hannibal The main thing with this technology is that we cannot afford it until many years have passed from the time it comes to market. But, yes, interesting product that can change many things.

TerryLaze 18922543 said: 10 years later... still unavailable/costs 10k

Sure you won't be able to afford a 3TB+ drive in even 10 years, but a 128/256GB one just for Windows and a few games will be affordable, if expensive, even in a couple of years.

-

zodiacfml I don't understand the need to make it work as a DRAM replacement. It doesn't have to. A system might only need a small amount of RAM, then a large 3D XPoint pool.

The bottleneck is the interface. There is no faster interface available except DIMM. We use the DIMM interface but make it appear as storage to the OS. Simple.

It will require a new chipset and board though, where Intel has the control. We should see two DIMM groups next to each other; they differ mechanically but have the same pin count.