3D XPoint: A Guide To The Future Of Storage-Class Memory

Memory and storage collide with Intel and Micron's new, much-anticipated 3D XPoint technology, but the road has been long and winding. This is a comprehensive guide to its history, its performance, its promise and hype, its future, and its competition.

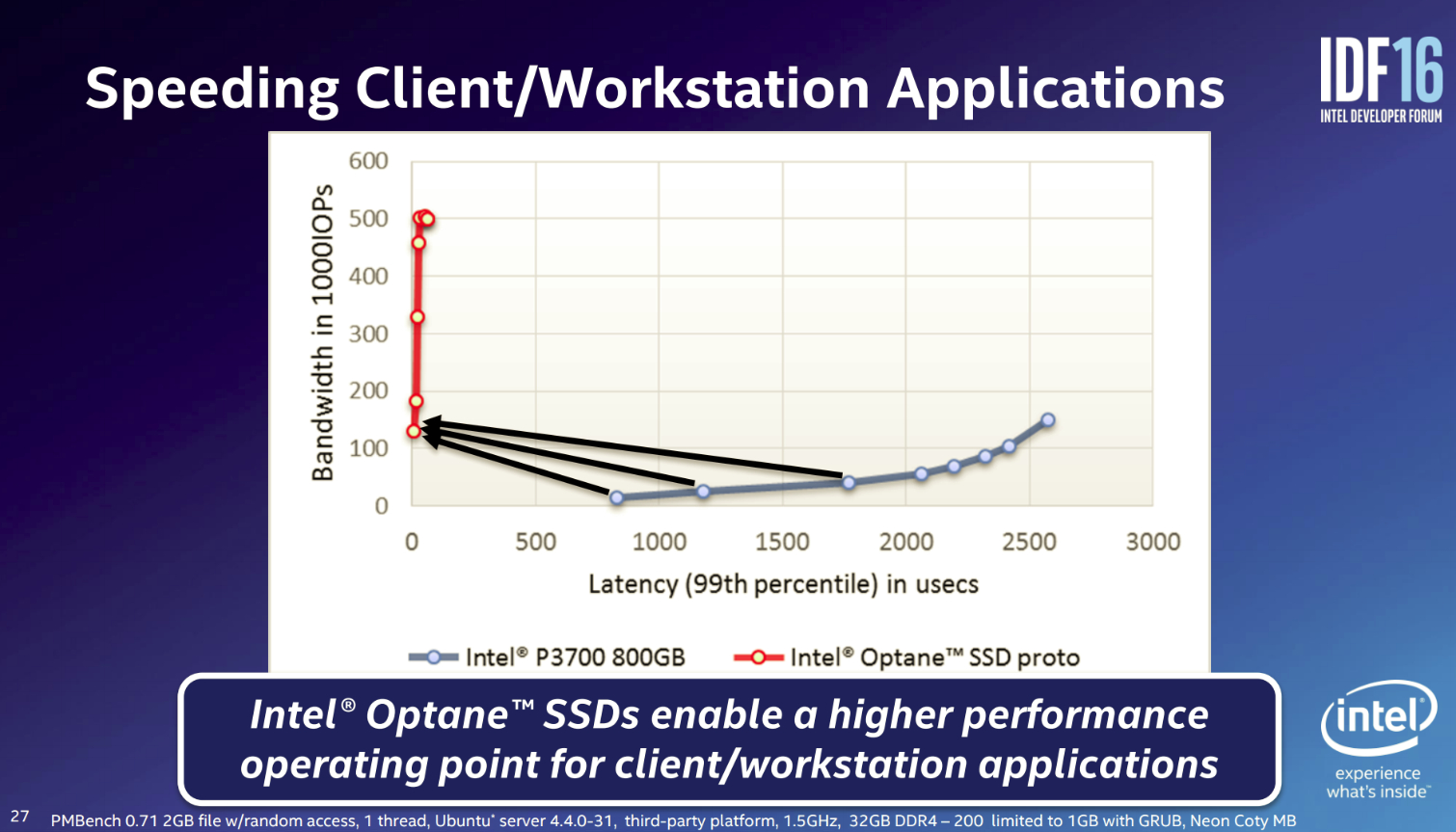

Enthusiast, Workstation, Data Center Performance

Optane Consumer Workloads

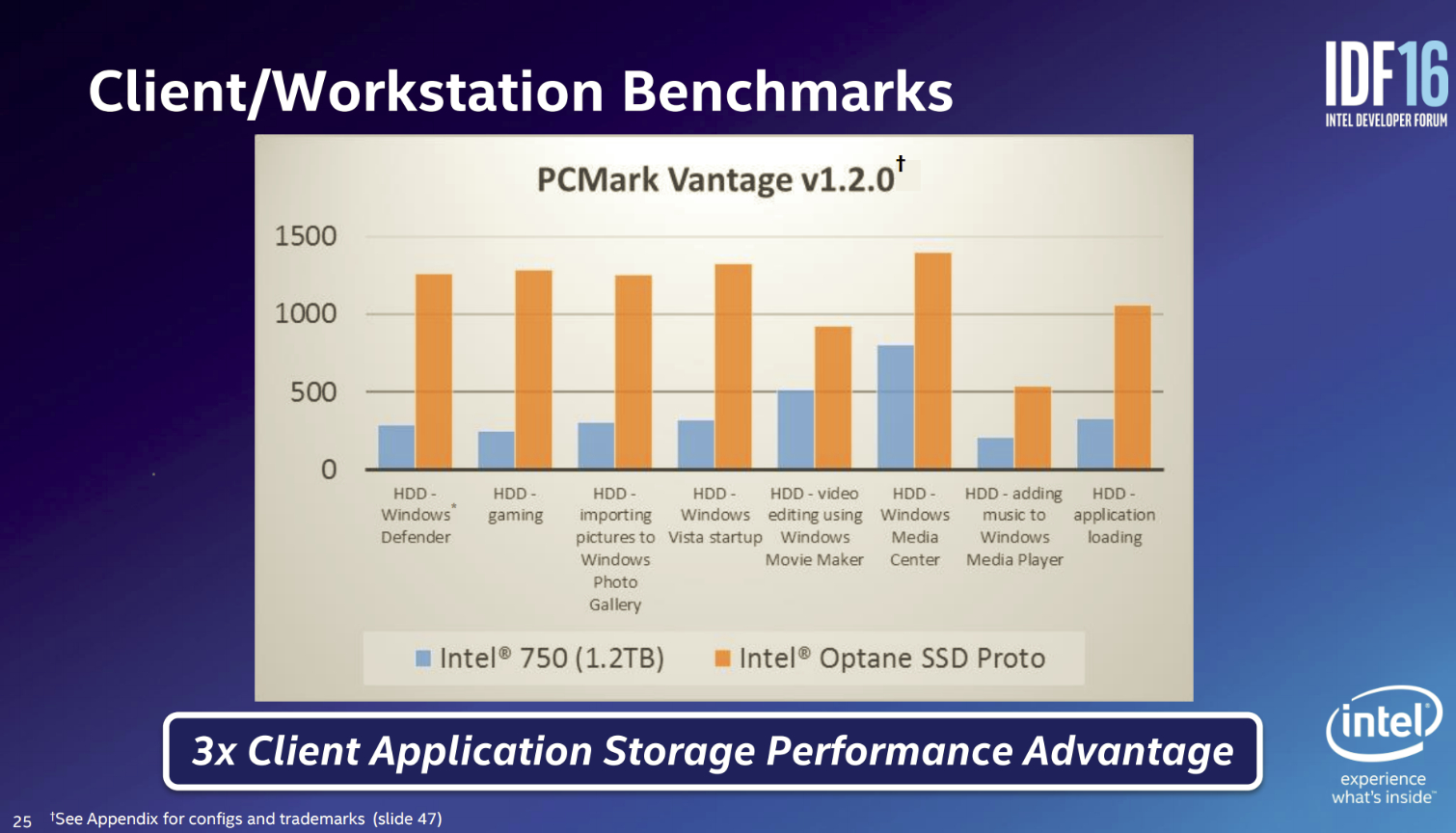

Intel broke out the PCMark Vantage benchmark to highlight the difference between the enthusiast-oriented Intel 750 and the Intel Optane SSD prototype. Optane provides a large improvement in theoretical bandwidth during the tests, particularly in the gaming and Vista startup tests. Of course, we would prefer to see a newer and more accurate version of the benchmark, such as PCMark 8. Also, developers haven't optimized today's games and applications for flash storage, let alone for something as exotic as 3D XPoint.

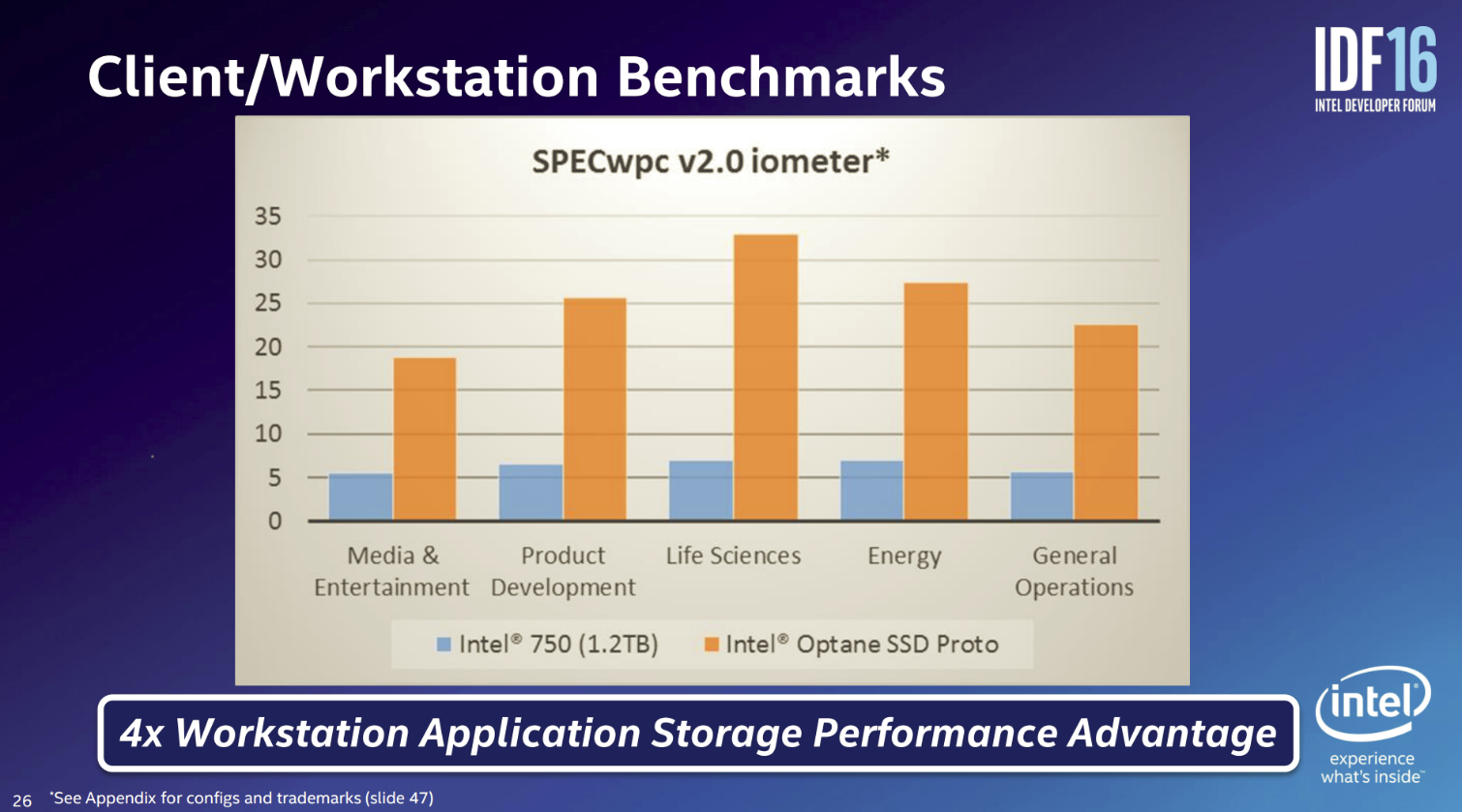

Intel prefers to use SPECwpc v2.0 to characterize performance improvements for workstation use-cases, and again we notice a big jump in the professional-class workloads. We expect much higher performance in both enthusiast and workstation use-cases, but performance-per-dollar, and whether the technology delivers a measurable impact on our everyday computing experience, will weigh heavily.

Optane Rendering

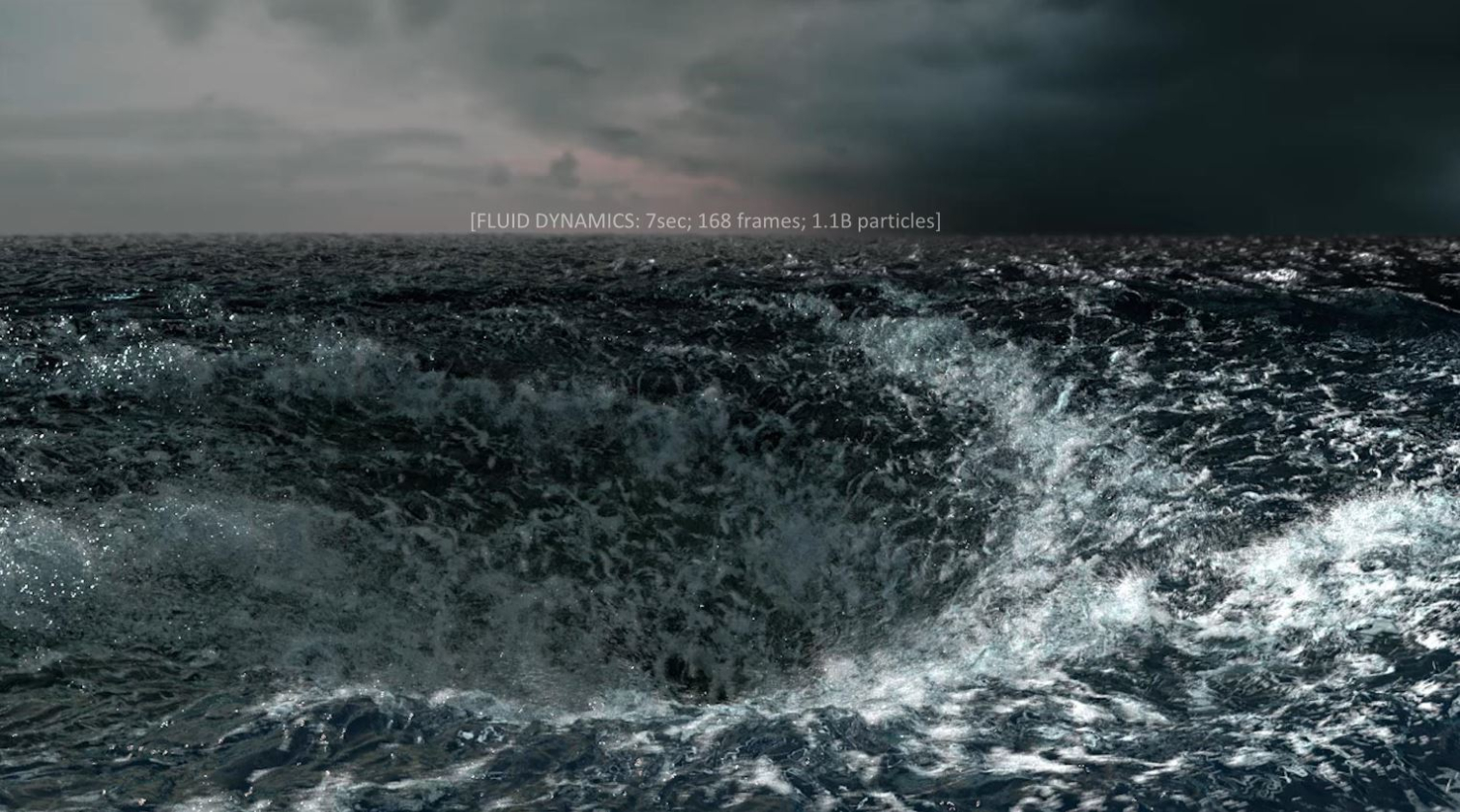

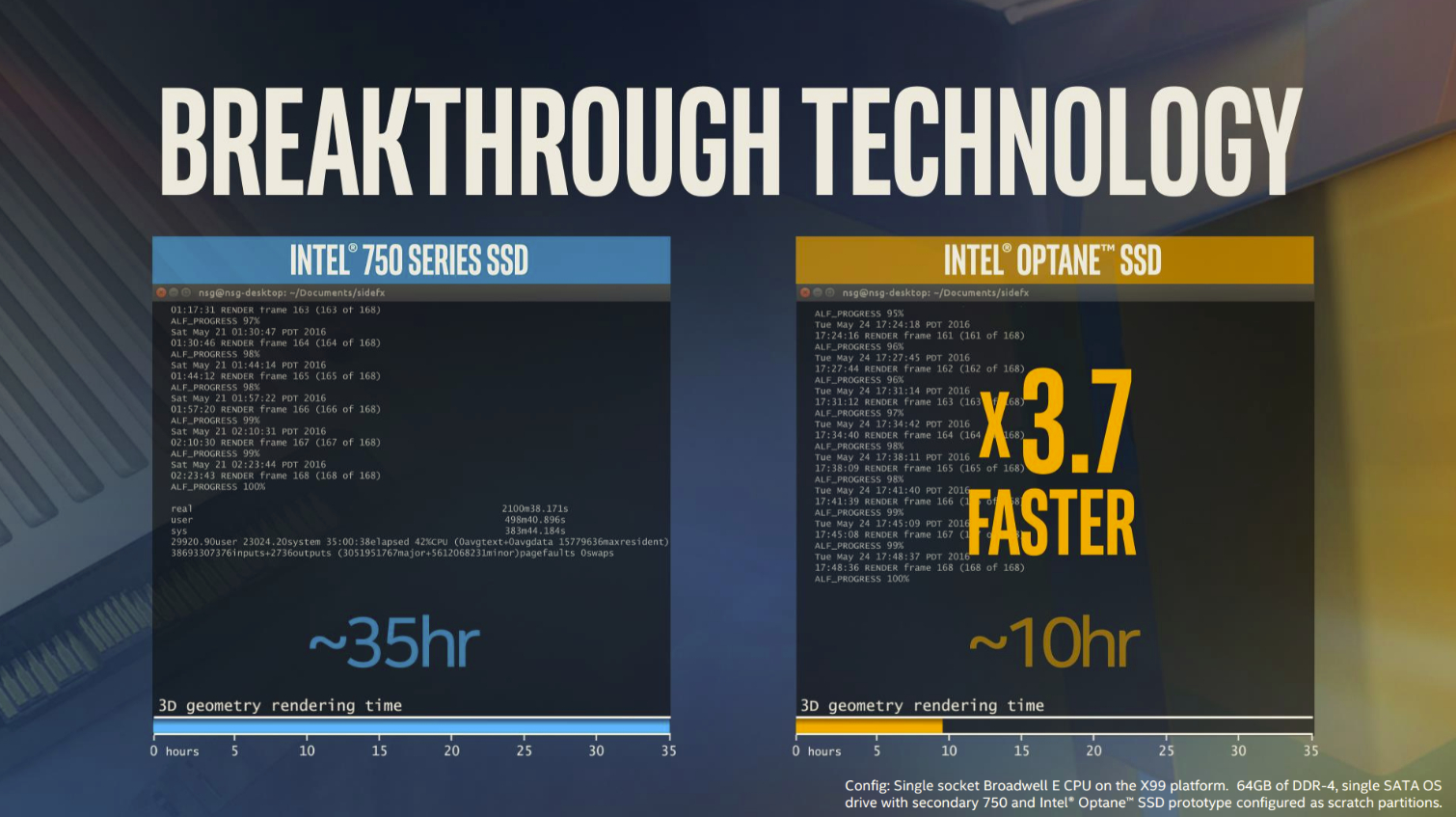

Intel positions its SideFX Houdini test as a client-class benchmark, but it’s more of a workstation use-case. We covered the benchmark results at Computex, but Intel provided more data on the benchmark at IDF. From a high level, the test consists of rendering a seven-second clip of 1.1 billion water particles. The task requires 35 hours with Intel's fastest SSD (presumably the DC P3608), but only nine hours with the Optane SSD.

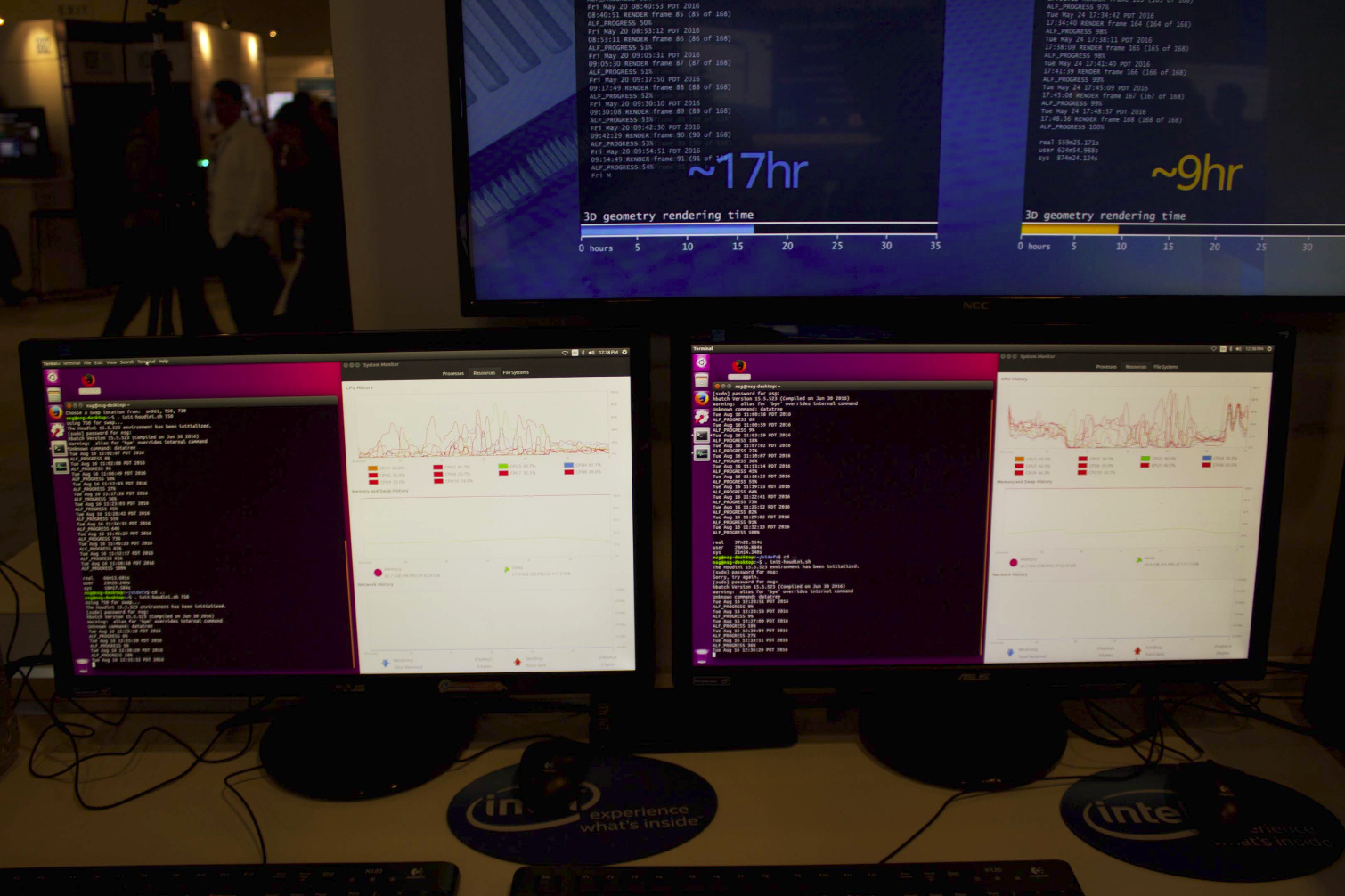

The IDF 2016 demo included a recording of the CPU usage for both test platforms during the test. The 3D XPoint-powered system, on the right, features a much higher level of CPU utilization during the benchmark. CPU usage is an important measurement that highlights the true meaning of faster I/O, particularly for data center scenarios. With 3D XPoint, the system spends less time waiting on I/O, and thus unlocks the expensive CPU resource to perform more work. In a normal deployment, this can equate to more VMs per server, or more available application resources.
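Intel's CPU traces split time into useful work and time spent waiting on storage. On Linux, that waiting time is usually surfaced as iowait. The sketch below is an illustration only, not Intel's tooling: it assumes a Linux host and the `/proc/stat` field order documented in proc(5) (user, nice, system, idle, iowait, irq, softirq, steal), and diffs two snapshots of the aggregate `cpu` line to estimate the share of CPU ticks spent in iowait. The function names are hypothetical.

```python
import time

# Field order of the aggregate "cpu" line in /proc/stat, per proc(5).
# Values are cumulative clock ticks since boot.
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(line: str) -> list[int]:
    """Return the tick counters from the aggregate 'cpu' line."""
    parts = line.split()
    if not parts or parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line of /proc/stat")
    return [int(v) for v in parts[1:]]


def iowait_share(before: str, after: str) -> float:
    """Fraction of CPU ticks spent in iowait between two snapshots."""
    # Use only the first eight fields; guest time is already counted in user/nice.
    n = len(FIELDS)
    deltas = [a - b for a, b in zip(parse_cpu_line(after)[:n], parse_cpu_line(before)[:n])]
    total = sum(deltas)
    return deltas[FIELDS.index("iowait")] / total if total else 0.0


def sample_iowait(interval_s: float = 1.0, path: str = "/proc/stat") -> float:
    """Snapshot /proc/stat twice, interval_s apart (Linux only)."""
    def first_line() -> str:
        with open(path) as f:
            return f.readline()  # the aggregate "cpu" line comes first

    before = first_line()
    time.sleep(interval_s)
    return iowait_share(before, first_line())
```

With two hypothetical snapshots, `"cpu 100 0 50 800 50 0 0 0 0 0"` and `"cpu 130 0 60 820 190 0 0 0 0 0"`, 140 of the 200 elapsed ticks are iowait, so `iowait_share` returns 0.7. Calling `sample_iowait()` while a storage-heavy job runs gives a rough analogue of the wait time in Intel's traces, but proc(5) warns that iowait is not a reliable value (a task waiting on I/O is not running on any particular CPU), so treat it as a trend indicator rather than a precise measurement.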

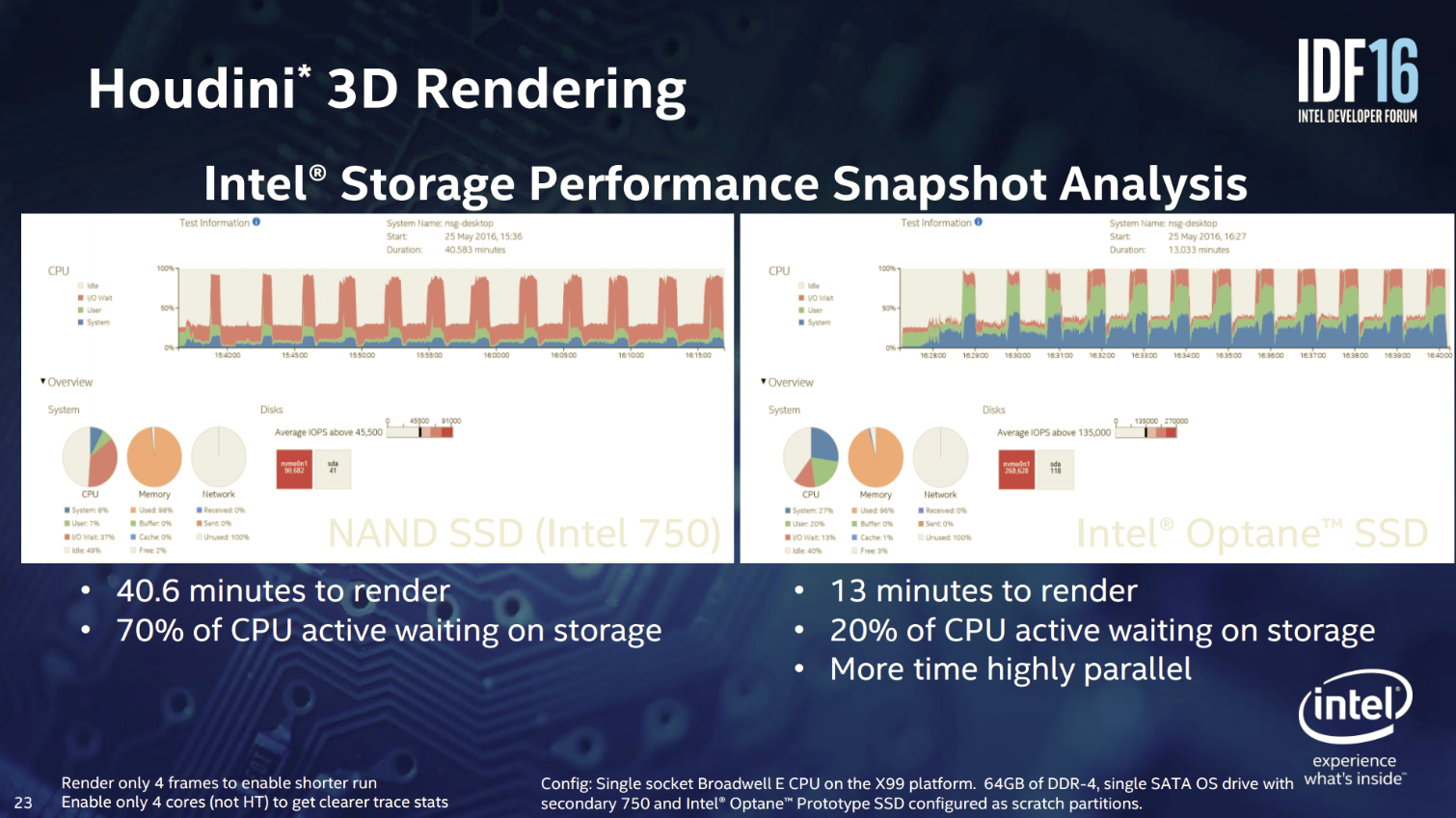

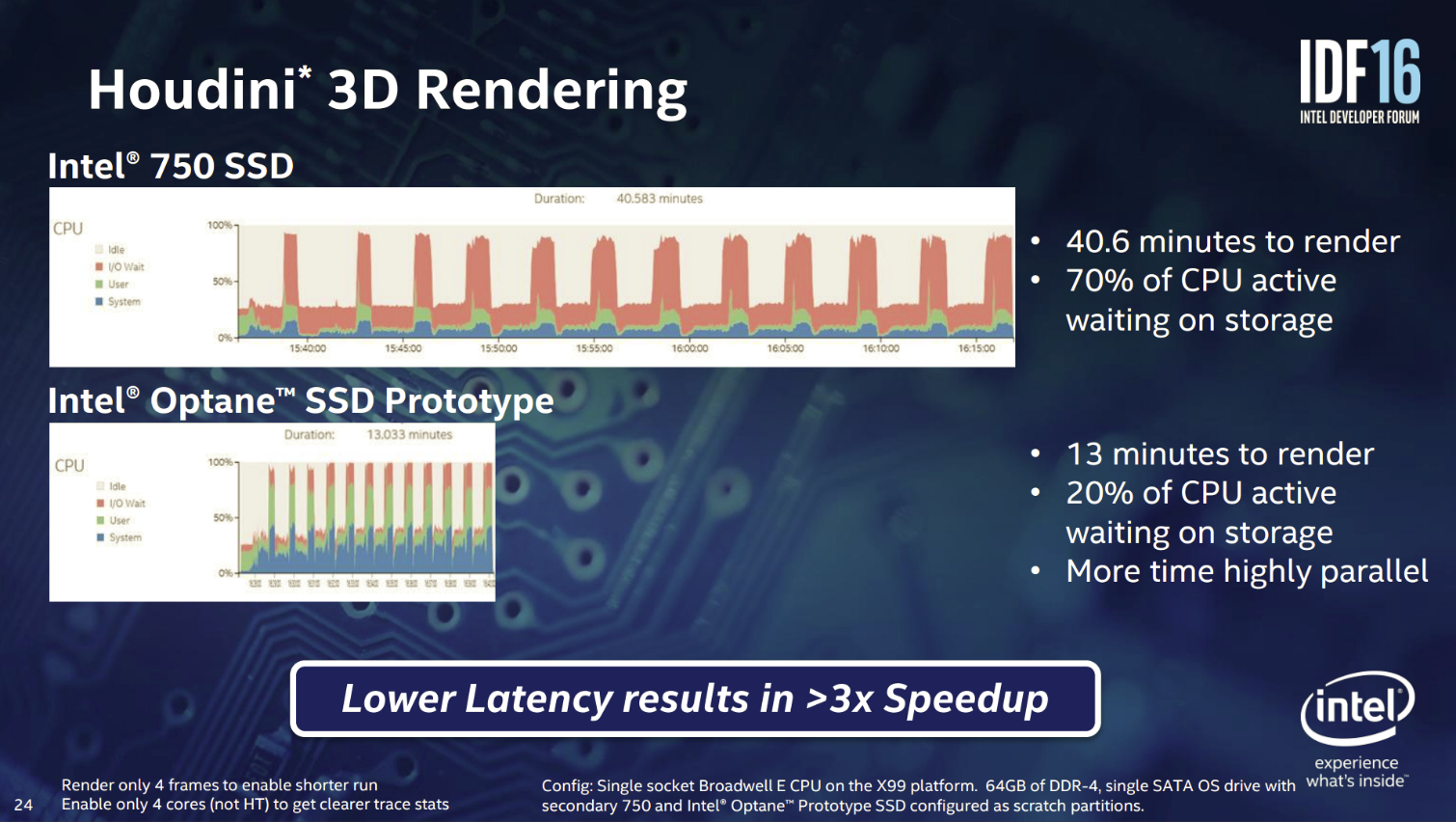

During a separate presentation, Intel ran the same benchmark but rendered only four frames for an easier comparison. The Intel 750 required 40.6 minutes, while the Optane SSD needed only 13 minutes to complete the test. The orange portion of the bar trace at the top (Houdini 3D Rendering slide) indicates the amount of time the system spends waiting for incoming I/O.

As expected, the I/O wait time for the Intel 750 is much more prevalent at 70% of the CPU's active time. The Optane-powered demo rig only waited 20% of the time for I/O. Interestingly, the slide lists the Optane NVMe0n1 device (a Linux designation for an NVMe device) as 268GB, which is much higher than Intel's disclosed 140GB prototype capacity. The Intel 750 averaged 45,500 IOPS during the test, while the Optane provided 135,000. As we can see in the final slide with the two traces presented atop each other, the vast reduction in wait times leads to a 3x performance increase.
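The quoted Houdini numbers can be cross-checked with simple arithmetic. This sketch takes the figures straight from the text and makes one labeled assumption: it treats the 70% and 20% I/O-wait shares as shares of the whole four-frame run's wall-clock time, whereas the slide describes them relative to the CPU's active time, so the split is only an approximation.

```python
"""Back-of-the-envelope check of the Houdini figures quoted in the text.

Assumption: the I/O-wait share (70% vs. 20%) is applied to the whole
four-frame run's wall-clock time.
"""

# Numbers as quoted in the article.
FULL_RENDER_HOURS = (35.0, 9.0)    # fastest Intel SSD vs. Optane prototype
FOUR_FRAME_MINUTES = (40.6, 13.0)  # Intel 750 vs. Optane prototype
AVG_IOPS = (45_500, 135_000)       # Intel 750 vs. Optane prototype
IOWAIT_SHARE = (0.70, 0.20)        # Intel 750 vs. Optane prototype


def speedup(baseline: float, improved: float) -> float:
    """How many times faster the improved run is than the baseline."""
    return baseline / improved


def split_time(total: float, iowait_share: float) -> tuple[float, float]:
    """Split a run into (time waiting on I/O, all other time)."""
    waiting = total * iowait_share
    return waiting, total - waiting


if __name__ == "__main__":
    print(f"Full render speedup: {speedup(*FULL_RENDER_HOURS):.2f}x")
    print(f"Four-frame speedup:  {speedup(*FOUR_FRAME_MINUTES):.2f}x")
    print(f"IOPS ratio:          {AVG_IOPS[1] / AVG_IOPS[0]:.2f}x")
    for name, total, share in zip(("Intel 750", "Optane"), FOUR_FRAME_MINUTES, IOWAIT_SHARE):
        waiting, other = split_time(total, share)
        print(f"{name}: {waiting:.1f} min waiting on I/O, {other:.1f} min other")
```

Run as a script, it prints speedups of about 3.89x (full render) and 3.12x (four frames) and an IOPS ratio of about 2.97x. Under the stated assumption, the Intel 750 spends roughly 28.4 minutes waiting on I/O versus 2.6 minutes for Optane, while the remaining time stays in the 10 to 12 minute range, so almost all of the gain comes from eliminated waiting.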

Optane Data Center Workloads

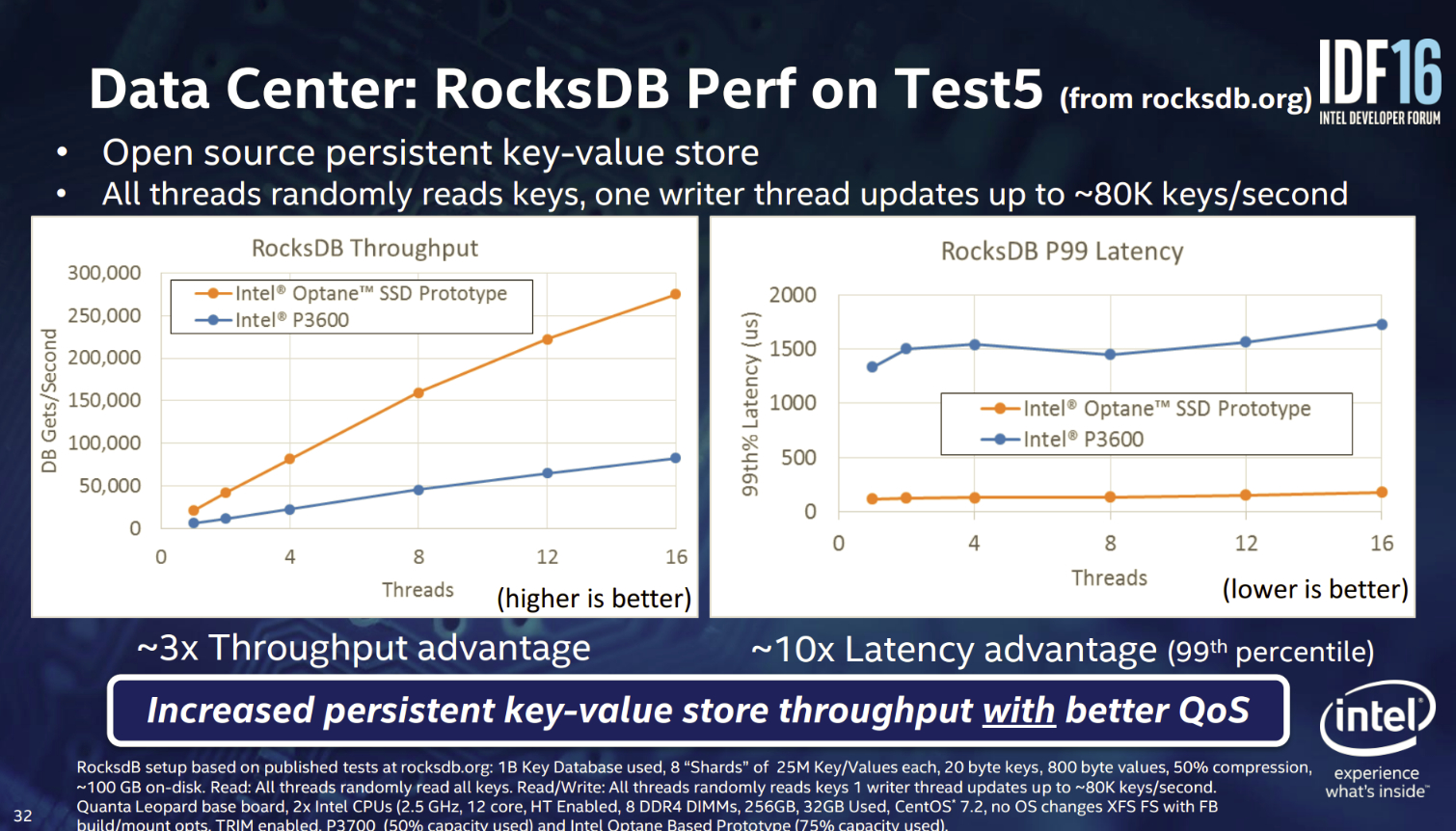

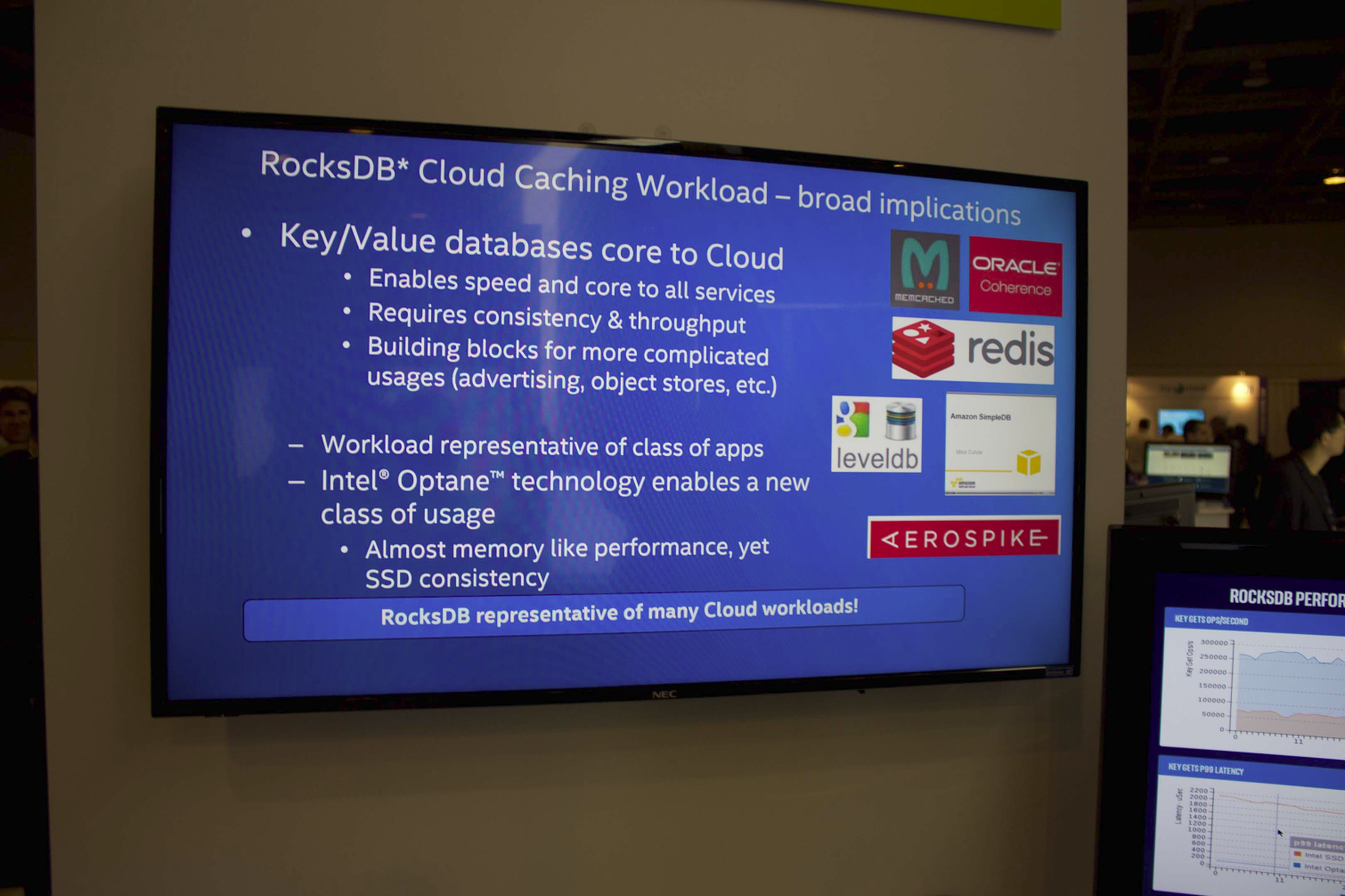

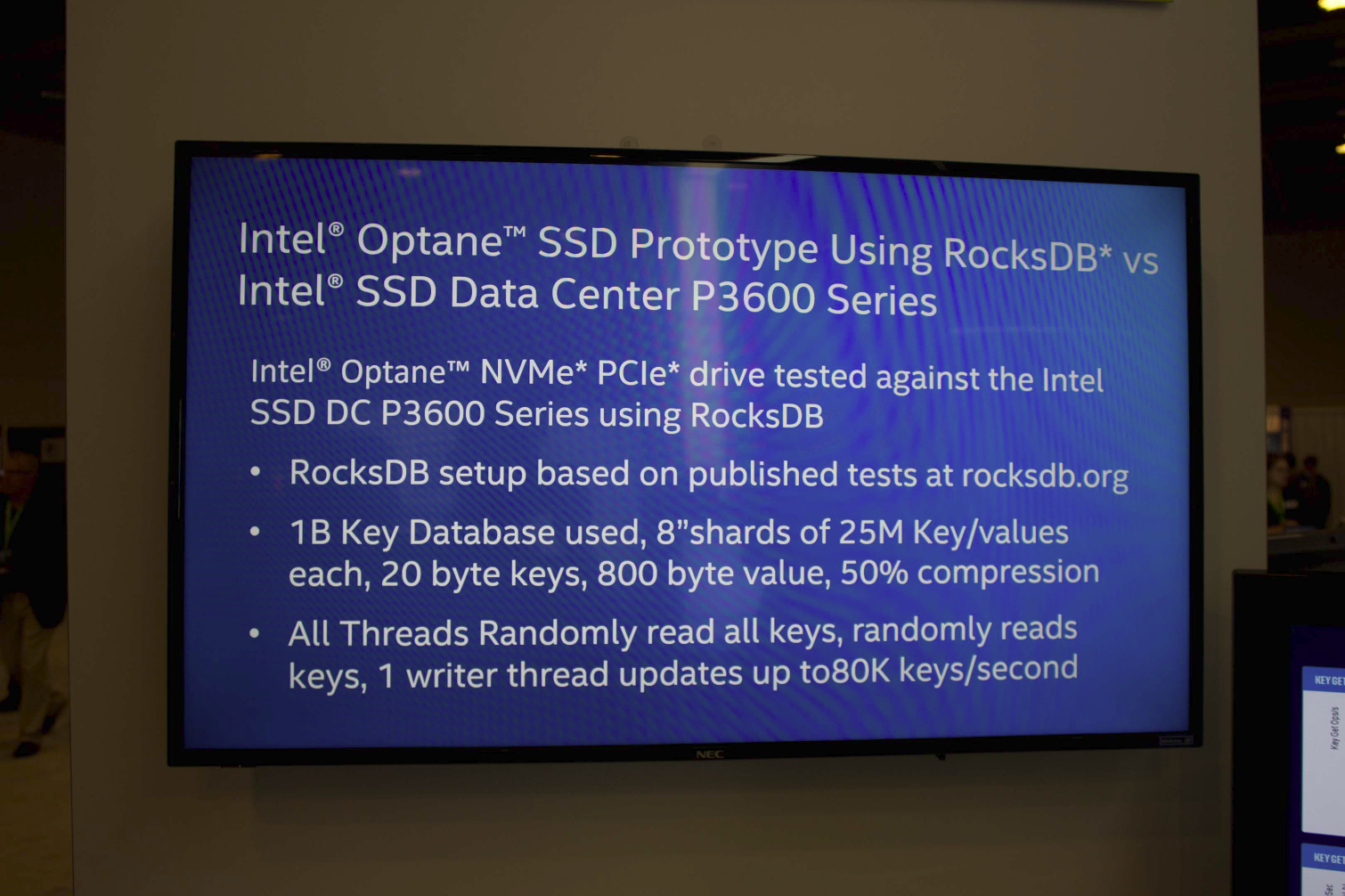

Synthetic tests are great because they highlight the amount of performance available to the application, but unfortunately, many applications aren't tuned to fully exploit 3D XPoint. RocksDB is one of the few programs that can unlock the performance of speedy non-volatile media. RocksDB is an open-source key-value database that offers increased performance and scalability compared to traditional databases. Other databases, most notably Redis and Aerospike, can also unlock the performance of bleeding-edge non-volatile media.


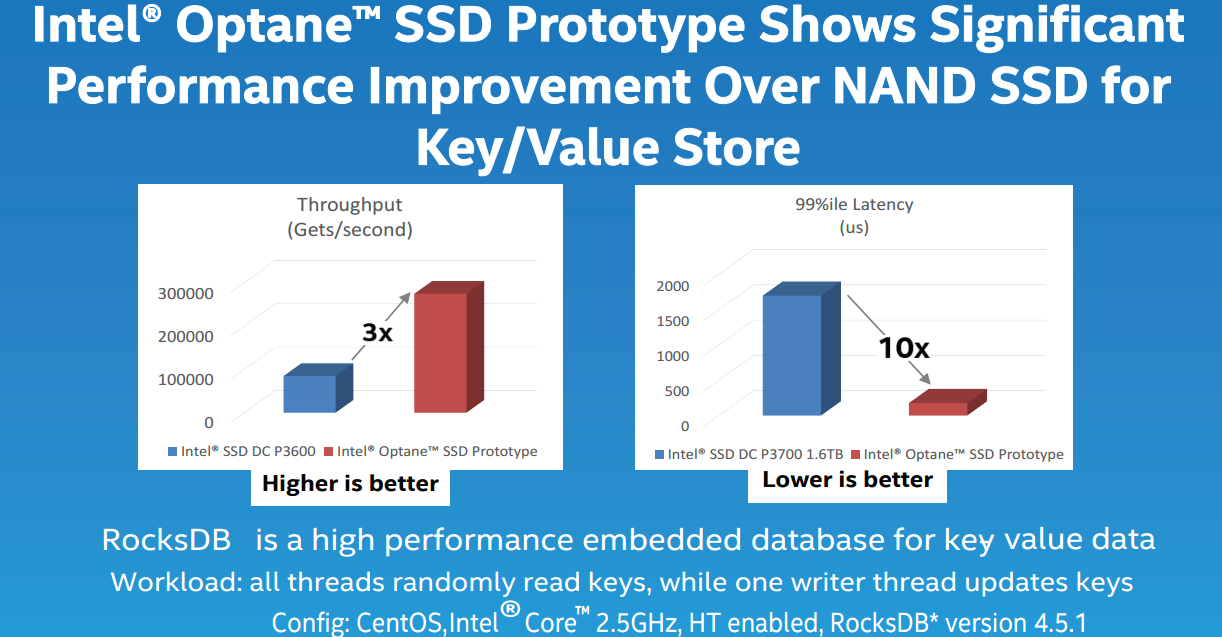

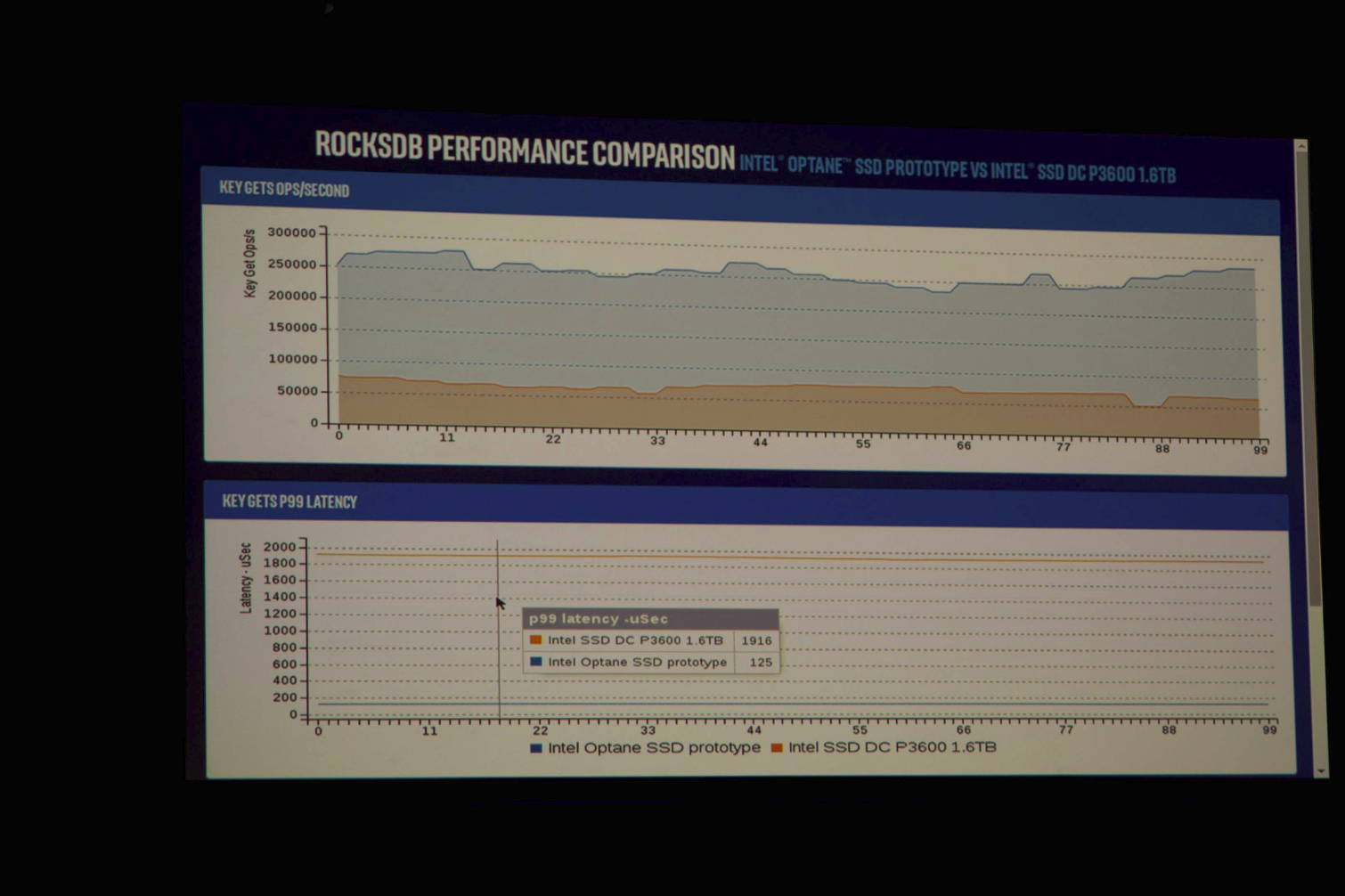

Facebook and Intel are working together closely, and during an FMS keynote, Facebook outlined how it is redesigning its stack for 3D XPoint. Facebook is implementing the technology because of its radical performance improvements. During the RocksDB test, the Optane prototype provided 3X more throughput and a 10X latency advantage for 99th percentile measurements. It is somewhat interesting that Intel and Facebook chose the lower-endurance DC P3600 for the demo, because the DC P3700 is the stouter alternative for this workload.

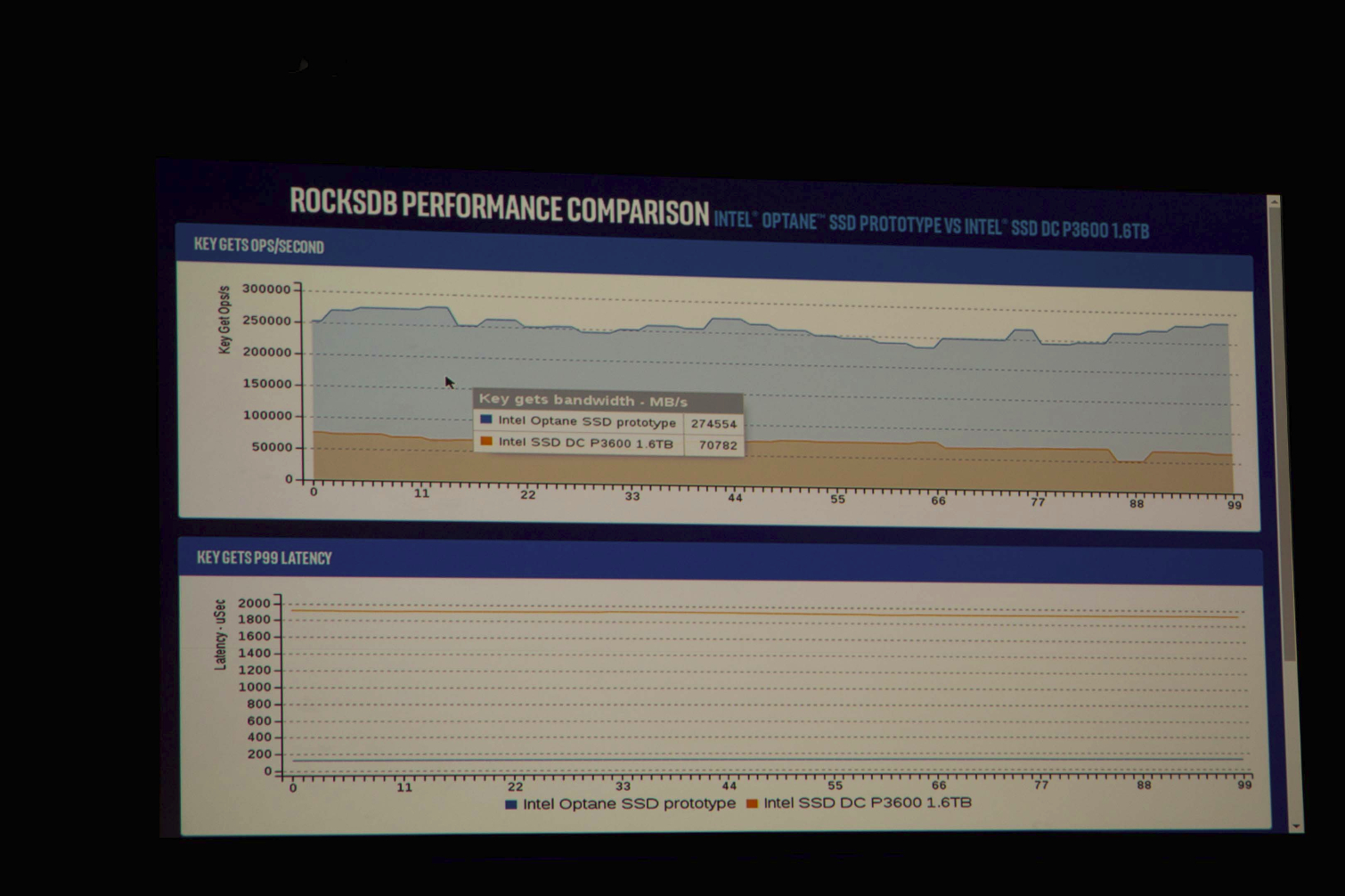

The Optane prototype delivered 274,554 read-centric key "gets" per second, compared to 70,782 for the DC P3600. Shifting gears to 99th percentile latency, the DC P3600 weighs in at 1.9ms, while the Optane provides an impressive 0.125ms. Interestingly, Facebook and Intel did not provide performance information for "puts," which are the write-centric portion of the workload.
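Two things in these results are easy to reproduce. First, dividing the quoted figures gives about 3.88x more gets per second and about 15.2x lower 99th percentile latency, somewhat more than the 3X and 10X headline figures. Second, a 99th percentile latency means 99% of requests completed at or below that value. The sketch below uses the nearest-rank definition, which is one of several common percentile methods; the source does not say which method Facebook's tooling used, and the demo latencies are hypothetical.

```python
import math

# Figures quoted in the text (RocksDB "get" test, DC P3600 vs. Optane prototype).
GETS_PER_SEC = {"DC P3600": 70_782, "Optane prototype": 274_554}
P99_LATENCY_MS = {"DC P3600": 1.9, "Optane prototype": 0.125}


def percentile_nearest_rank(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    throughput_gain = GETS_PER_SEC["Optane prototype"] / GETS_PER_SEC["DC P3600"]
    latency_gain = P99_LATENCY_MS["DC P3600"] / P99_LATENCY_MS["Optane prototype"]
    print(f"get throughput: {throughput_gain:.2f}x, p99 latency: {latency_gain:.1f}x lower")

    # Hypothetical latencies (ms) for 1,000 gets, only to exercise the function:
    # 980 fast requests and 20 slow outliers.
    demo = [0.1] * 980 + [2.0] * 20
    print(f"demo p99: {percentile_nearest_rank(demo, 99)} ms")
```

With the hypothetical demo data, 20 of 1,000 requests (2%) are slow, so the 990th-ranked sample is one of them and the p99 is 2.0 ms. If fewer than 1% of requests were slow, the p99 would not show them at all, which is why tail-latency reports often add p99.9 as well.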

Of course, faster performance means the system uses its cores more efficiently to maximize the CPU investment, but it also equates to big savings in application licensing. Some application licenses run into the tens of thousands of dollars per core (some Oracle licenses can be $50K per core), so squeezing more work out of each licensed core can easily pay for the higher price of the storage solution.
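The licensing argument is simple arithmetic. The sketch below is a deliberately crude model with hypothetical inputs: it assumes per-core licensing, a fixed throughput target, linear scaling across cores, and that per-core throughput improves by roughly the 3x seen in the RocksDB demo, which only holds for workloads that are genuinely I/O-bound. The $50K per-core price is the upper-end figure cited above; the target and per-core throughput are made up for illustration.

```python
import math

PRICE_PER_CORE = 50_000  # upper-end per-core license figure cited in the text (USD)


def cores_needed(target_ops: float, ops_per_core: float) -> int:
    """Cores required to sustain target_ops, assuming linear scaling per core."""
    return math.ceil(target_ops / ops_per_core)


def license_cost(target_ops: float, ops_per_core: float,
                 price_per_core: float = PRICE_PER_CORE) -> float:
    """Total license cost for the cores needed to hit target_ops."""
    return cores_needed(target_ops, ops_per_core) * price_per_core


if __name__ == "__main__":
    target = 1_000_000     # hypothetical required ops/s
    baseline = 10_000      # hypothetical ops/s per core on flash
    faster = baseline * 3  # assumed ~3x per-core gain with 3D XPoint
    before = license_cost(target, baseline)
    after = license_cost(target, faster)
    print(f"{cores_needed(target, baseline)} cores -> ${before:,.0f}")
    print(f"{cores_needed(target, faster)} cores -> ${after:,.0f}")
    print(f"licensing saved: ${before - after:,.0f}")
```

Run as a script, it prints 100 cores at $5,000,000 versus 34 cores at $1,700,000, a $3,300,000 difference that would then be weighed against the storage premium.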


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


coolitic: The 3 months for data center and 1 year for consumer NAND is an old statistic, and even then, it's supposed to apply to drives that have surpassed their endurance rating.

Paul Alcorn, quoting coolitic: "the 3 months for data center and 1 year for consumer nand is an old statistic, and even then it's supposed to apply to drives that have surpassed their endurance rating."

Yes, that is data retention after the endurance rating has expired, and it is also contingent upon the temperature at which the SSD was used and the temperature during the power-off storage window (40°C enterprise, 30°C client). These are the basic rules by which retention is measured (the definition of SSD data retention, as it were), but admittedly, most readers will not know the nitty-gritty details.

However, I was unaware that the JEDEC specification for data retention has changed. Do you have a source for the new JEDEC specification?


stairmand: Replacing RAM with permanent storage would simply revolutionise computing. No more loading an OS, no more booting, no loading data, instant searches of your entire PC for any type of data, no paging. Could easily be the biggest advance in 30 years.

InvalidError, quoting stairmand: "Replacing RAM with permanent storage would simply revolutionise computing. No more loading an OS, no more booting, no loading data"

You don't need X-point to do that: since Windows 95 and ATX, you can simply put your PC in Standby. I haven't had to reboot my PC more often than every couple of months for updates in ~20 years.


Kewlx25, quoting InvalidError: "You don't need X-point to do that: since Windows 95 and ATX, you can simply put your PC in Standby. I haven't had to reboot my PC more often than every couple of months for updates in ~20 years."

Remove your hard drive and let me know how that goes. The notion of "loading" is a concept of reading from your HD into your memory and initializing a program. So goodbye to all forms of "loading".

hannibal: The main thing with this technology is that we cannot afford it until many years have passed from the time it comes to market. But, yes, it's an interesting product that can change many things.

TerryLaze, quoting another reader: "10 years later... still unavailable/costs 10k"

Sure, you won't be able to afford a 3TB+ drive even in 10 years, but a 128/256GB one just for Windows and a few games will be affordable, if expensive, even in a couple of years.


zodiacfml: I don't understand the need to make it work as a DRAM replacement. It doesn't have to. A system might only need a small amount of RAM and then a large 3D XPoint pool.

The bottleneck is the interface. There is no faster interface available except DIMM. We use the DIMM interface but make it appear as storage to the OS. Simple.

It will require a new chipset and board, though, where Intel has the control. We should see two DIMM groups next to each other that differ mechanically but have the same pin count.