Samsung's revived Z-NAND targets 15x performance increase over traditional NAND — once a competitor to Intel's Optane, Z-NAND makes a play for AI datacenters

Samsung's Optane competitor is coming back, stronger and faster than ever before.

Samsung is bringing its Z-NAND memory technology back from the dead with ambitious performance targets. DigiTimes reports that Samsung is targeting up to a 15x performance increase with its next generation of Z-NAND, and is pairing it with a technology that lets GPUs access Z-NAND-powered storage devices directly (similar in spirit to Microsoft's DirectStorage API).

The executive VP of Samsung's memory business reportedly stated that Samsung is targeting up to a 15x performance jump with Z-NAND, while cutting power consumption by as much as 80% compared to conventional NAND flash memory.
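Taken at face value, the two reported targets can be combined into a rough performance-per-watt figure. The sketch below assumes both targets apply at the same time and against the same conventional-NAND baseline, which the report does not confirm. `perf_per_watt_gain` is a hypothetical helper for illustration, not anything Samsung has published.

```python
def perf_per_watt_gain(speedup: float, power_reduction: float) -> float:
    """Performance-per-watt multiple relative to a baseline device.

    speedup: throughput multiple over the baseline (15.0 means "15x").
    power_reduction: fraction of baseline power saved (0.80 means "80% less").
    Assumes (unconfirmed) that both figures hold simultaneously.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    if not 0.0 <= power_reduction < 1.0:
        raise ValueError("power_reduction must be in [0, 1)")
    remaining_power = 1.0 - power_reduction  # e.g. 0.2 of baseline power
    return speedup / remaining_power


if __name__ == "__main__":
    # Upper-bound reading of the reported "up to" targets.
    print(f"{perf_per_watt_gain(15.0, 0.80):.0f}x performance per watt")
```

This prints `75x performance per watt`. Because both numbers are "up to" targets that may not coincide on a single workload, the result is best read as a ceiling rather than a forecast.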

This new version of Z-NAND is reportedly being adapted for AI applications, specifically AI GPUs, with a key focus on cutting latency compared to its predecessor. To help with this, Samsung is adopting a new technology dubbed GPU-Initiated Direct Storage Access (GIDS), which will be integrated into its next-generation Z-NAND flash. GIDS lets GPUs access Z-NAND storage devices directly, bypassing the CPU and system DRAM, which significantly reduces access times and system latency.
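A toy model helps show what "bypassing the CPU and DRAM" changes. The sketch below is a simplified, hypothetical representation of two read paths: the `Hop` type and the path names are made up for illustration, and it does not model GIDS's actual implementation, queueing, or timing. It only counts transfers, staging copies in host DRAM, and whether the CPU issues any step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    """One data transfer in a read path (hypothetical, for illustration)."""
    src: str
    dst: str
    initiator: str  # component that requests this transfer


# Conventional path: the CPU issues the read, the data is staged in a
# host-DRAM buffer, and the CPU then arranges a second copy to the GPU.
CPU_MEDIATED = [
    Hop("SSD", "host DRAM", initiator="CPU"),
    Hop("host DRAM", "GPU memory", initiator="CPU"),
]

# GPU-initiated path, as GIDS is described in the report: the GPU requests
# the data and it moves from the SSD to GPU memory without a host-DRAM stop.
GPU_INITIATED = [
    Hop("SSD", "GPU memory", initiator="GPU"),
]


def summarize(path: list[Hop]) -> dict:
    """Count transfers and host-DRAM staging, and flag CPU involvement."""
    return {
        "transfers": len(path),
        "host_dram_staging": sum(1 for hop in path if hop.dst == "host DRAM"),
        "cpu_involved": any(hop.initiator == "CPU" for hop in path),
    }


if __name__ == "__main__":
    for name, path in (("CPU-mediated", CPU_MEDIATED), ("GPU-initiated", GPU_INITIATED)):
        print(name, summarize(path))
```

Each hop removed is one fewer copy and one fewer step that waits on the CPU, which is plausibly where the latency savings described in the report come from. The model says nothing about how large those savings are.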

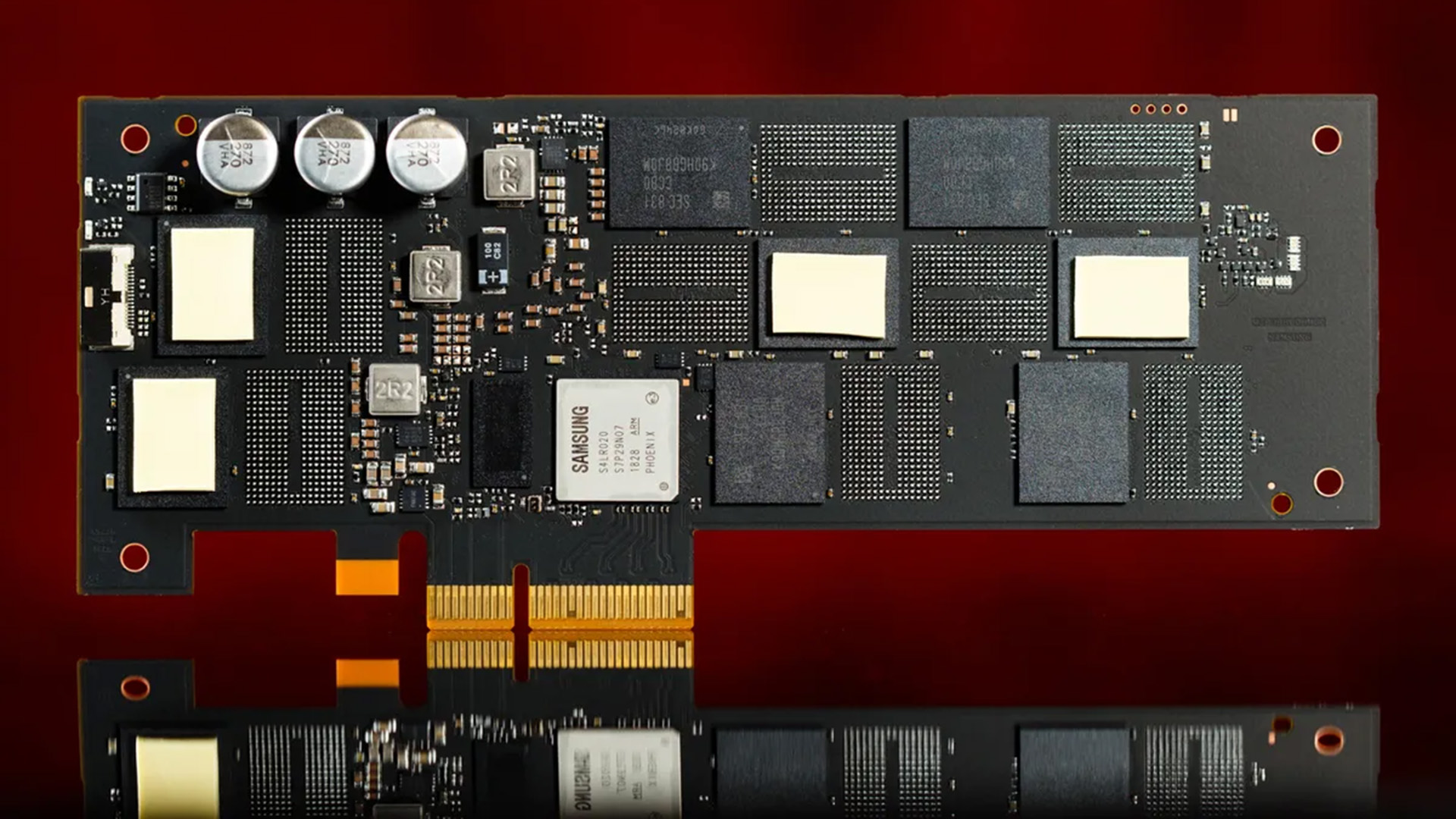

Z-NAND first came out in the mid-to-late 2010s as Samsung's alternative to Intel's 3D XPoint-based Optane memory. Both technologies introduced a new tier of solid-state storage that behaved like a traditional SSD but offered far lower latency and higher performance, edging closer to DRAM.

While Intel's 3D XPoint Optane worked entirely differently from traditional NAND flash, Samsung's first-generation Z-NAND was essentially a turbocharged form of SLC NAND, based on a modified 48-layer V-NAND design operating in SLC mode. One key upgrade in first-gen Z-NAND was a smaller page size of 2 to 4 KB, which let the SSD read and write data in smaller chunks, making it faster and reducing latency. Regular SSDs, by contrast, typically use page sizes in the 8 to 16 KB range.
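To see why smaller pages help with small random reads, assume (as a simplification) that the flash must sense at least one full page for every read. The sketch below computes read amplification, meaning bytes sensed divided by bytes requested, for a 2 KB request at the page sizes mentioned above, treating 1 KB as 1,024 bytes. The function names are hypothetical, and real controllers add caching and other effects that are not modeled here.

```python
KIB = 1024


def pages_touched(offset: int, length: int, page_size: int) -> int:
    """Number of flash pages spanned by a read of `length` bytes at `offset`."""
    if offset < 0 or length <= 0 or page_size <= 0:
        raise ValueError("offset must be >= 0; length and page_size must be > 0")
    first_page = offset // page_size
    last_page = (offset + length - 1) // page_size
    return last_page - first_page + 1


def read_amplification(offset: int, length: int, page_size: int) -> float:
    """Bytes sensed from the flash array divided by bytes the host asked for."""
    return pages_touched(offset, length, page_size) * page_size / length


if __name__ == "__main__":
    # A page-aligned 2 KiB random read against different page sizes.
    for page_size in (2 * KIB, 4 * KIB, 8 * KIB, 16 * KIB):
        amp = read_amplification(offset=0, length=2 * KIB, page_size=page_size)
        print(f"{page_size // KIB:>2} KiB page: {amp:.0f}x read amplification")
```

Under this assumption, a 16 KiB page senses eight times more data than a 2 KiB request needs, while a 2 KiB page matches it exactly. Alignment matters too: a request that straddles a page boundary touches two pages, so `pages_touched(4000, 200, 4096)` returns 2.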

At the time, drives powered by Intel's Optane and Samsung's competing Z-NAND were roughly six to 10 times faster than traditional SSDs of that era. If Samsung's targets hold true, its next-generation Z-NAND technology will be 15x more performant than today's NVMe SSDs. Traditional SSDs are also getting faster, with PCIe 6 SSDs on the horizon, so future versions of Z-NAND will have to justify their higher cost. But as long as the AI hardware boom continues, Samsung likely won't have much trouble finding customers.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


Li Ken-un

> Regular SSDs, by contrast, typically use page sizes in the 8 to 16 KB range.

8 KiB is if you are lucky. You tend to get that with TLC run in pSLC mode. 16 KiB is the norm nowadays. Some pSLC SSDs made from QLC NAND will do 4 KiB pages.

> If Samsung's targets hold true, its next-generation Z-NAND technology will be 15x more performant than today's NVMe SSDs.

What we’d really like to know is what differentiates Z-NAND from SLC. Because SLC and pSLC are far more accessible now, why would anyone want Z-NAND?

Notton

Question I have is, what purpose does Z-NAND and Optane serve now or in the near future?

Because if there is demand, Intel could sell off their Optane IP and let someone else make it.

DS426

Moving SSD's directly onto GPU's, CPU and RAM being bypassed... this makes me think that the System-on-GPU concept is potentially getting closer to reality.

bit_user

> Notton said: Question I have is, what purpose does Z-NAND and Optane serve now or in the near future?

Nvidia has said they want vastly higher-performing SSDs, which probably caught Samsung's interest.

https://www.tomshardware.com/pc-components/ssds/smi-ceo-claims-nvidia-wants-ssds-with-100m-iops-up-to-33x-performance-uplift-could-eliminate-ai-gpu-bottlenecks

Also, Sandisk is proposing to use flash in a HBM-like fashion for direct streaming of AI model weights. Perhaps Samsung is also heading in that direction, with Z-NAND?

https://www.tomshardware.com/pc-components/dram/sandisks-new-hbf-memory-enables-up-to-4tb-of-vram-on-gpus-matches-hbm-bandwidth-at-higher-capacity

> Notton said: Because if there is demand, Intel could sell off their Optane IP and let someone else make it.

I doubt it. Optane fell short of expectations on multiple levels. Perhaps the final nail in its coffin was its failure to effectively scale in 3 dimensions, as Intel had initially hyped. IIRC, the first gen Optane dies were only 2 layers and the second gen were only 4? Meanwhile, NAND is doing like 300 layers, these days.

From what I've heard, Optane also has an efficiency problem on writing, which sounds like another potential scaling bottleneck.

Regardless, if this technology had legs, I'm sure Intel would've sold it. I think the issue must've been that Intel never turned a profit on Optane, the trends pointed in the wrong direction, and there was probably nobody interested in buying it. The fact that XL-Flash and Z-NAND are competitive on performance, while certainly being much higher density, shows that Optane was probably a dead-end technology.

bit_user

> DS426 said: Moving SSD's directly onto GPU's, CPU and RAM being bypassed... this makes me think that the System-on-GPU concept is potentially getting closer to reality.

That's not what they said. It's still going to be decoupled and accessed via PCIe or CXL. They're just talking about the GPU going straight to the SSD, without having to involve the CPU. This is one of the things CXL is designed to do, so I hope that's what they have in mind.

usertests

> bit_user said: That's not what they said. It's still going to be decoupled and accessed via PCIe or CXL. They're just talking about the GPU going straight to the SSD, without having to involve the CPU. This is one of the things CXL is designed to do, so I hope that's what they have in mind.

I think it would be interesting to see the "SSG" concept revived though. It probably would only be done for AI, and looks like they're already getting started.

bit_user

> usertests said: I think it would be interesting to see the "SSG" concept revived though. It probably would only be done for AI, and looks like they're already getting started.

It only makes sense for that sort of "HBF" concept, because you cannot fit that much bandwidth over normal I/O interfaces.

Otherwise, it's a bad idea to put NAND on a GPU. NAND doesn't like high temperatures and is higher-latency than DRAM. That means it's bad to put it next to a 1 kW chip and the latency-impact of going over the interconnect fabric to reach it should be negligible. Furthermore, moving it off the card lets you more easily scale in capacity and bandwidth, while also giving multiple accelerators more symmetrical access to it.

It's like two great flavors that are worse, together.

DS426

> bit_user said: That's not what they said. It's still going to be decoupled and accessed via PCIe or CXL. They're just talking about the GPU going straight to the SSD, without having to involve the CPU. This is one of the things CXL is designed to do, so I hope that's what they have in mind.

Oh I know. Still, it seems like one step closer and something that will have to happen eventually to meet the insatiable demand of AI compute. It's still many steps away for sure.

CXL is a great standard in the datacenter.

bit_user

> DS426 said: Oh I know. Still, it seems like one step closer and something that will have to happen eventually to meet the insatiable demand of AI compute. It's still many steps away for sure.

As mentioned, the "HBF" is the way that must be integrated tightly with the GPUs.

As for everything else, there'd be tons of headroom merely by somehow incorporating the SSDs into the NVLink fabric.