OpenCL In Action: Post-Processing Apps, Accelerated

We've been bugging AMD for years now, literally: show us what GPU-accelerated software can do. Finally, the company is ready to put us in touch with ISVs in nine different segments to demonstrate how its hardware can benefit optimized applications.

Tom's Hardware Q&A With ArcSoft

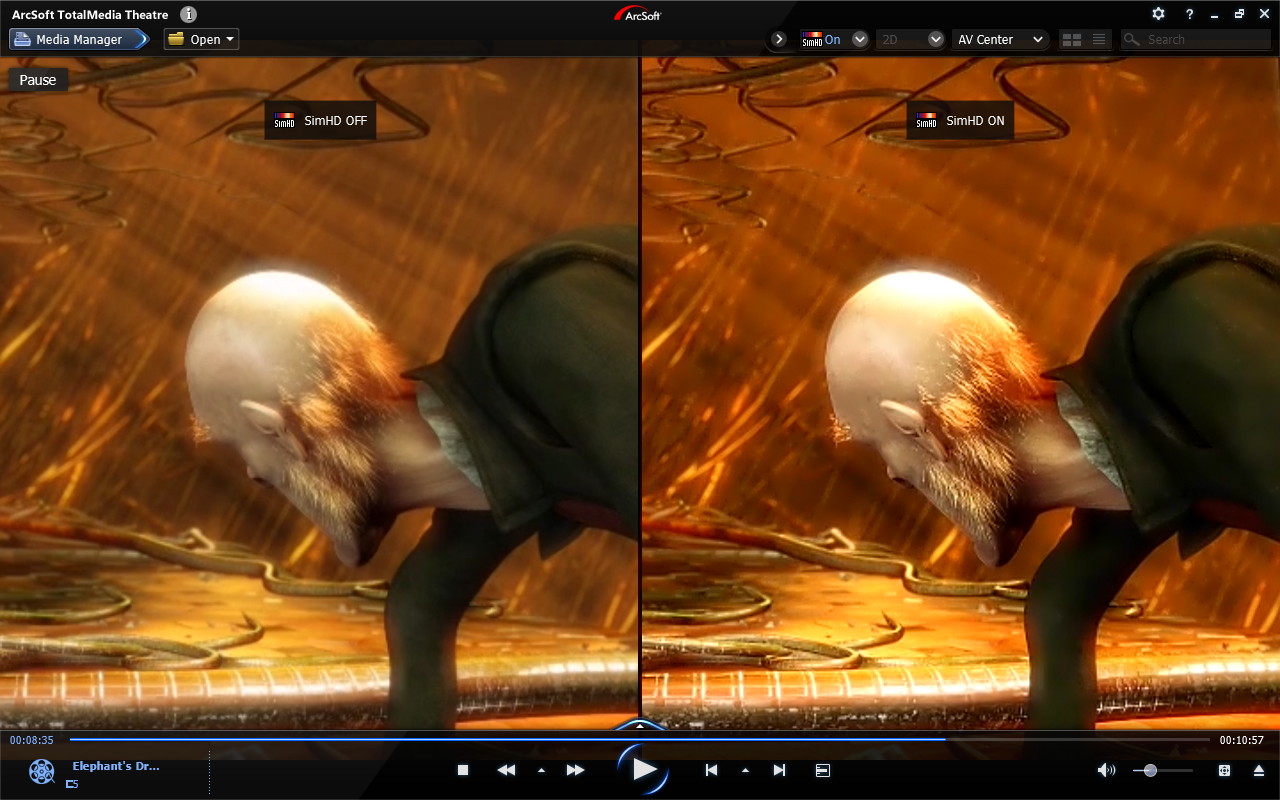

ArcSoft’s Total Media Theater is one of the top names in PC-based movie playback, and the SimHD functionality within the application, which enhances video with resolution upscaling and other features, was an early beneficiary of GPU-based hardware acceleration. Kam Shek, director of technical marketing for ArcSoft, sat down with Tom’s Hardware to provide an inside glimpse of his company’s process in making the switch to OpenCL-based acceleration.

Tom's Hardware: Many people fail to appreciate the expense and hard work required of hardware vendors in order to enable next-generation software. Obviously, the graphics vendors have a vested interest in popularizing OpenCL. How did that interest translate into action with ArcSoft?

Kam Shek: There are a couple of things we’ve done in optimizing our software. For AMD specifically, we added support for the fixed-function UVD 3 decode logic that includes hardware acceleration for the MPEG-4 ASP [Advanced Simple] profile. We also have our SimHD technology, which is our in-house upscaler. We recently ported that to the OpenCL language. In the past, we used ATI Stream, but about six months ago, we started the port to the new industry standard, working very closely with the company on that.

TH: Why did you choose to support OpenCL?

KS: Because OpenCL provides a heterogeneous environment for us, meaning we can actually program on OpenCL regardless of what part of the algorithm might be running on the CPU or GPU. As a result, we can have a much better balancing of the system resources when we schedule our algorithm between those two.

TH: In moving from proprietary approaches like Stream and CUDA to OpenCL, what advantages do you see in the new technology over the old?

KS: Well, one of the most important is that OpenCL is an open standard. ATI Stream was proprietary. Second, OpenCL allows us to scale better between the CPU and GPU. Let me explain a little more what we do with SimHD. SimHD is about more than upscaling. It has multiple processing units in it. One is for scaling, but then there’s a denoise unit, deblocking, sharpening, dynamic lighting, etc. So some of the algorithms by nature run better on CPU. For instance, if there’s case switching, which happens a lot in dynamic lighting, that’s more efficient on the CPU. But for floating-point, high-precision calculations, those actually run better on the GPU. So we schedule our algorithm between which function runs better on which processing core, then we balance accordingly.
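The rule of thumb Shek describes (branch-heavy work stays on the CPU, floating-point-heavy work goes to the GPU) can be sketched in a few lines of Python. This is only an illustration, not ArcSoft's scheduler: the `Stage` class, the `pick_device` and `schedule` functions, and the per-stage flags are hypothetical. The stage names come from the interview, and only the dynamic lighting stage's branch-heavy nature is stated there; the other flags are guesses made for the sake of the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    branch_heavy: bool  # lots of data-dependent case switching
    float_heavy: bool   # dominated by high-precision floating-point math


def pick_device(stage: Stage) -> str:
    """Return "cpu" or "gpu" for one stage, following the interview's rule of thumb."""
    if stage.branch_heavy:
        return "cpu"  # case switching is described as more efficient on the CPU
    if stage.float_heavy:
        return "gpu"  # high-precision floating-point work is described as better on the GPU
    return "cpu"      # assumption: fall back to the CPU when neither trait dominates


# Stage names are from the interview. Only dynamic lighting's branch_heavy=True
# is stated there; every other flag is an illustrative assumption.
PIPELINE = [
    Stage("scaling", branch_heavy=False, float_heavy=True),
    Stage("denoise", branch_heavy=False, float_heavy=True),
    Stage("deblocking", branch_heavy=False, float_heavy=True),
    Stage("sharpening", branch_heavy=False, float_heavy=True),
    Stage("dynamic lighting", branch_heavy=True, float_heavy=False),
]


def schedule(stages):
    """Map each stage name to the device chosen for it."""
    return {stage.name: pick_device(stage) for stage in stages}


if __name__ == "__main__":
    for name, device in schedule(PIPELINE).items():
        print(f"{name:>16} -> {device}")
```

A real scheduler would presumably also weigh data-transfer cost and current load on each device, which this sketch ignores.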


TH: Do you have a sense of the optimization ratio, CPU versus GPU?

KS: I think we’re seeing, on our AMD system here, CPU usage drop by about 10% and then the GPU utilization increases about 20%.

TH: So increasing GPU usage by 20% saves 10% off your CPU utilization?

KS: I’d say 15% to 20%, yes, but I’d probably need to look up the exact number.

TH: Are there any considerations that must be made when coding for an APU compared to a discrete GPU?

KS: No, from an OpenCL perspective, it’s all the same. Now, before we run the algorithm, we do query the capability of the graphics. If the graphics have more Stream cores available, then we’ll run more algorithms on them. But in general, it’s the same. We don’t need a different binary for discrete graphics versus an APU. However, I should add something. As I said, the GPU is better for many floating-point, high-precision tasks. The CPU versions of these algorithms are somewhat stripped down, so the precision is not as good as running on the GPU. The algorithm for the GPU is more complex than the one for the CPU, so it provides better precision.
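The capability check Shek mentions might look something like the following Python sketch. In OpenCL, the closest standard figure is the device's compute-unit count, reported by `clGetDeviceInfo` with `CL_DEVICE_MAX_COMPUTE_UNITS`; here that number is simply passed in as an integer. Everything else is an assumption for illustration: the function names, the `units_per_stage` threshold, and the idea that each offloaded stage "costs" a fixed number of compute units are not from ArcSoft.

```python
def gpu_stage_budget(compute_units: int, units_per_stage: int = 4) -> int:
    """Return how many stages to offload for a device with this many compute units.

    units_per_stage is a hypothetical tuning knob, not a real OpenCL concept.
    """
    if compute_units < 0 or units_per_stage <= 0:
        raise ValueError("compute_units must be >= 0 and units_per_stage > 0")
    return compute_units // units_per_stage


def split_by_capability(gpu_candidates, compute_units, units_per_stage=4):
    """Split candidate stages into (run on GPU, keep on CPU) lists.

    Candidates are taken in order, so list the stages that benefit most first.
    """
    budget = gpu_stage_budget(compute_units, units_per_stage)
    on_gpu = list(gpu_candidates[:budget])
    on_cpu = list(gpu_candidates[budget:])
    return on_gpu, on_cpu


if __name__ == "__main__":
    candidates = ["scaling", "denoise", "deblocking", "sharpening"]
    # The same code path handles a small and a large device; only the count differs.
    for units in (4, 20):
        gpu, cpu = split_by_capability(candidates, units)
        print(f"{units:>2} compute units: GPU={gpu} CPU={cpu}")
```

The point of the sketch is the one made in the interview: the binary and the logic stay the same for an APU and a discrete card, and only the queried capability changes how much work lands on the GPU.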



bit_user (quoting amuffin): "Will there be an OpenCL vs. CUDA article coming out anytime soon?" At the core, they are very similar. I'm sure that Nvidia's toolchains for CUDA and OpenCL share a common backend, at least. Any differences between versions of an app coded for CUDA vs. OpenCL will have a lot more to do with the amount of effort its developers spent optimizing it.


bit_user: Fun fact: the president of Khronos (the industry consortium behind OpenCL, OpenGL, etc.) and chair of its OpenCL working group is an Nvidia VP.

Here's a document paralleling the similarities between CUDA and OpenCL (it's an OpenCL Jump Start Guide for existing CUDA developers):

NVIDIA OpenCL JumpStart Guide

I think they tried to make sure that OpenCL would fit their existing technologies, in order to give them an edge on delivering better support, sooner.

deanjo (quoting bit_user): "I think they tried to make sure that OpenCL would fit their existing technologies, in order to give them an edge on delivering better support, sooner."

Well, Nvidia did work very closely with Apple during the development of OpenCL.

nevertell: At last, an article to point to for people who love shoving a GTX 580 in the same box with a Celeron.

JPForums: In regard to testing the APU without a discrete GPU, you wrote:

However, the performance chart tells the second half of the story. Pushing CPU usage down is great at 480p, where host processing and graphics working together manage real-time rendering of six effects. But at 1080p, the two subsystems are collaboratively stuck at 29% of real-time. That's less than half of what the Radeon HD 5870 was able to do matched up to AMD's APU. For serious compute workloads, the sheer complexity of a discrete GPU is undeniably superior.

While the discrete GPU is superior, the architecture isn't all that different. I suspect the larger performance issue was stated in the interview earlier:

TH: Specifically, what aspects of your software wouldn’t be possible without GPU-based acceleration?

NB: ...you are also solving a bandwidth bottleneck problem. ... It’s a very memory- or bandwidth-intensive problem to even a larger degree than it is a compute-bound problem. ... It’s almost an order of magnitude difference between the memory bandwidth on these two devices.

APUs may be bottlenecked simply because they have to share CPU level memory bandwidth.

While the APU's memory bandwidth will never approach a discrete card's, I am curious to see whether overclocking an APU's memory will make a noticeable difference in performance. Intuition says that it will never approach a discrete card, and given the low-end compute performance, it may not make a difference at all. However, it would help to characterize the APU's performance balance a little better, i.e., does it make sense to push more GPU muscle on an APU, or is the GPU portion constrained by the memory bandwidth?

In any case, this is a great article. I look forward to the rest of the series.

What about power consumption? It's fine if we can lower CPU load, but not that much if the total power consumption increases.