Workstation Storage: Modeling, CAD, Programming, And Virtualization

Visual Studio (Programming): Compiling Code

| Overall Statistics | Value |
|---|---|
| Elapsed Time | 49:54 |
| Read Operations | 1071 |
| Write Operations | 61 070 |
| Data Read | 64.31 MB |
| Data Written | 2.08 GB |
| Disk Busy Time | 10.966 s |
| Average Data Rate | 199.62 MB/s |
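As a rough sanity check on the table, the average data rate appears to be the total data transferred divided by disk busy time, not by elapsed time. The sketch below assumes that interpretation and assumes 1 GB = 1024 MB; both are our assumptions, and the variable names are purely illustrative.

```python
# Figures copied from the Overall Statistics table.
elapsed_s = 49 * 60 + 54        # 49:54 elapsed time, in seconds
data_read_mb = 64.31
data_written_mb = 2.08 * 1024   # assumption: 1 GB = 1024 MB
disk_busy_s = 10.966

total_mb = data_read_mb + data_written_mb

# Assumption: average data rate = total data / disk busy time.
avg_rate_mb_s = total_mb / disk_busy_s

# Share of the whole build during which the disk was actually busy.
busy_share = disk_busy_s / elapsed_s

print(f"Average data rate: {avg_rate_mb_s:.1f} MB/s")
print(f"Disk busy share of elapsed time: {busy_share:.2%}")
```

Under these assumptions the rate comes out at roughly 200 MB/s, close to the 199.62 MB/s in the table (the small gap is plausibly rounding in the 2.08 GB figure), and the drive is busy for well under 1% of the build.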

Even though programmers spend much of their time editing code, that task doesn't require a fast CPU or SSD. Really, performance is most needed for compiling.

There are a variety of examples we could have used to demonstrate a compiling workload, but we wanted something common and familiar. That's why we downloaded the source code for Firefox and used Visual Studio 2010's compiler. The Mozilla Foundation largely prepares the source code, which makes it easy to compile.

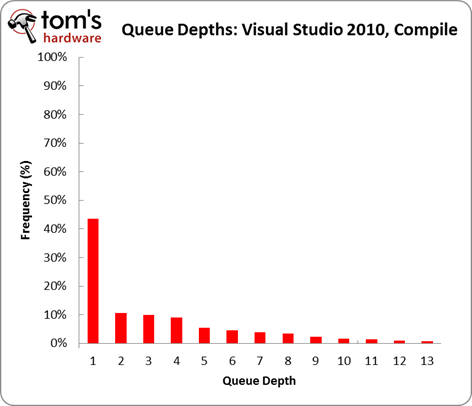

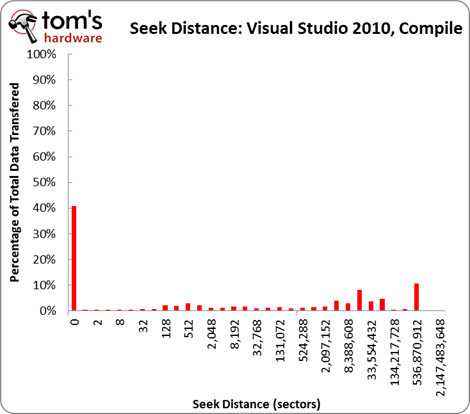

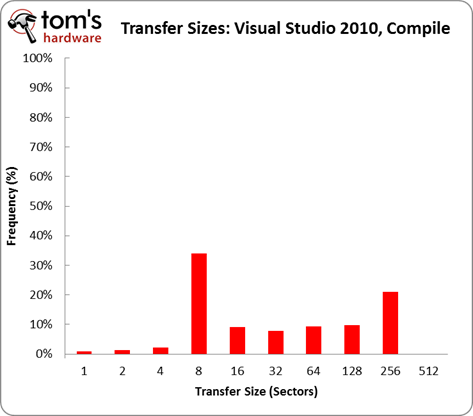

According to the trace, a majority of operations occur at a queue depth higher than one. Because the compiler accesses multiple files in quick succession, operations quickly stack up. Interestingly, there's also a fairly even balance of 4 KB random and 128 KB sequential transfers. This is a little unexpected, because most of the source code files are less than 10 KB in size.

Consequently, compiling code isn't a usage scenario where SSDs provide a clear lead. Remember, reading and writing random data is where hard drives fall behind SSD performance most dramatically. This difference (or lack thereof) is demonstrated when we compare our single Vertex 3 to a pair of Caviar Green 1 TB drives in RAID 0. The SSD-based system takes 49 minutes to compile the whole code base, whereas the hard drive-based system finishes the job in 55 minutes.
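To put the two compile times in perspective, here is a minimal sketch of the arithmetic. It uses only the 49- and 55-minute figures quoted above; the variable names are our own.

```python
ssd_minutes = 49   # single Vertex 3
hdd_minutes = 55   # two Caviar Green 1 TB drives in RAID 0

saved = hdd_minutes - ssd_minutes    # minutes saved by the SSD
reduction = saved / hdd_minutes      # fraction of build time removed
speedup = hdd_minutes / ssd_minutes  # hard drive time relative to SSD time

print(f"Time saved: {saved} min")
print(f"Build time reduction: {reduction:.1%}")
print(f"Speedup: {speedup:.2f}x")
```

That works out to six minutes saved, or a build that is about 11% shorter on the SSD.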

I/O Trends:

- 43% of all operations occur at a queue depth of one

- 30% of all operations occur at queue depth between two and four

- 41% of all data transferred is sequential

- 29% of all operations are 4 KB in transfer size

- 21% of all operations are 128 KB in transfer size
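The percentages above leave some shares unstated. The sketch below derives them by simple subtraction, assuming each group of figures partitions 100% of its own base (operations for queue depth and transfer size, data volume for sequential access). That partitioning is our assumption, not something the trace output states.

```python
# Queue depth shares, as a percentage of all operations.
qd_share = {"QD 1": 43, "QD 2-4": 30}
qd_share["QD > 4"] = 100 - sum(qd_share.values())   # remainder, by assumption
above_one = 100 - qd_share["QD 1"]                  # everything beyond QD 1

# Transfer size shares, as a percentage of all operations.
size_share = {"4 KB": 29, "128 KB": 21}
other_sizes = 100 - sum(size_share.values())        # every other transfer size

# Sequential share, as a percentage of all data transferred.
sequential_data = 41
non_sequential_data = 100 - sequential_data

print(f"Operations above QD 1: {above_one}%")
print(f"Operations above QD 4: {qd_share['QD > 4']}%")
print(f"Operations at other transfer sizes: {other_sizes}%")
print(f"Non-sequential data: {non_sequential_data}%")
```

The 57% of operations above a queue depth of one matches the earlier observation that a majority of operations stack up, and half of all operations fall outside the two headline transfer sizes.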


clownbaby Thanks for the workstation analysis. I'd really like to see some tests comparing performance while utilizing multiple programs and lots of disk caching, i.e. having many complementary programs open (Photoshop, Illustrator, After Effects, and Premiere Pro), with many gigs' worth of projects opened and cached and multiple background renders. Something like this would be a worst case scenario for me, and finding the balance between SSDs, RAIDed disks, and memory properly configured would be interesting.

I currently run my OS and production software from an SSD, have 24gb of system memory, page file set to write to ssd, and user files on striped 1tb drives. I'd be interested to see the benefits of installing a separate small ssd only to handle a large page-file, and different configurations with swap drives. Basically, there are a lot of different drive configuration options with all of the hardware available atm, and it would be nice to know the most streamlined/cost effective setup. -

acku (replying to clownbaby)

We'll look into that!

Cheers,

Andrew Ku

TomsHardware.com -

cknobman As an applications developer working on a brand new dell m4600 mobile workstation with a slow 250 mechanical hard drive it is very interesting to see tests like this and makes me wonder how much improvement I would see if my machine was equipped with an SSD.

I would really like to see more multitasking as well including application startup and shutdowns. Throughout the day I am constantly opening and closing applications like remote desktop, sql management studio, 1-4 instances at a time of Visual Studio 2010, word, excel, outlook, visio, windows xp virtual machine, etc.......

-

teddymines Is having to wait for a task really that much of a deal-breaker? I tend to use that time to hit the restroom, get a coffee, discuss with co-workers, or work on another task. Besides, if computers get to be too fast, then we'll be expected to get more done. ;^) -

willard "Consequently, compiling code isn't a usage scenario where SSDs provide a clear lead."

I disagree. Try the test again with a distributed build system.

I work on a project with around 3M lines of code, which is actually smaller than Firefox. To get compile times down, we use a distributed build system across about a dozen computers (all the developers and testers pool their resources for builds). Even though we all use 10k RPM drives in RAID 0 and put our OS on a separate drive, disk I/O is still the limiting factor in build speed.

I'll agree that building on a single computer, an SSD has little benefit. But I'd imagine that most groups working on very large projects will probably try to leverage the power of more than one computer to save developer resources. Time spent building is time lost, so hour long builds are very, very expensive. -

jgutz2006 (replying to acku)

On top of the SSD Cache, i would like to know where this performance gains plateau off (like if a 16gb SSD cache performs the same as a 32 or 64+ etc etc)

I'd like to see these put up against some SAS drives in RAID 0, RAID 1 and RAID10 @ 10k and 15k RPMs. I'm currently running a dual socket xeon board with 48gb RAM on a 120GB Vertex2 SSD and a 4 pack of 300GB 10K SAS Disks in RAID10.

I think i'd LOVE to see Something along the lines of the Momentus XT in a commercial 10k/15k RPM SAS disk with 32gb SSD which could be the sweet spot for extremely large CAD/3dModeling Files out there. -

It's nice that you test "workstation software", however you do not test any compositing software such as Eyeon Fusion or Adobe After Effects. Testing 3D rendering seems pretty silly. Compositing and video editing is a LOT more demanding on storage.

-

jaquith Very nice Article & Thanks! Pictures, in this case a Video is all you needed to make the point ;)

Andrew - the reference to the 'Xeon E5-2600 Workstation' completely screwed me up, the benchmarks made no sense until I looked at the 'Test Hardware' and then noticed an i5-2500K??!! Please, swap-out the image, it's misleading at best.

Try doing this on a RAM Drive and better on the DP E5-2600 with 64GB~128GB; 128GB might be a hard one. I've been 'trying' to experiment with SQL on a RAM Drive (my X79 is out for an RMA visit). However, the few times with smaller databases it's remarkable. Like you feel about going from HDD to SSD's, it's the same and then some going from a RAM Drive. Also, playing with RAM Cache on SSD's, stuck until RMA is done.