AMD 4800S Xbox Chip Shows Perils of Pairing CPUs with GDDR6

Turns out GDDR6 is much worse than DDR4 and DDR5 in the latency department.

Digital Foundry tested AMD's 4800S desktop kit, which is built around the Xbox Series X chip, to see how it fares against some of AMD's standard desktop Ryzen processors in gaming. The kit is unique because it pairs an Xbox Series X SoC with GDDR6 memory. Despite its gaming pedigree, the console CPU did not fare well and was consistently outperformed by a Ryzen 5 3600, even though GDDR6 offers more memory bandwidth and the Xbox chip has two extra cores.

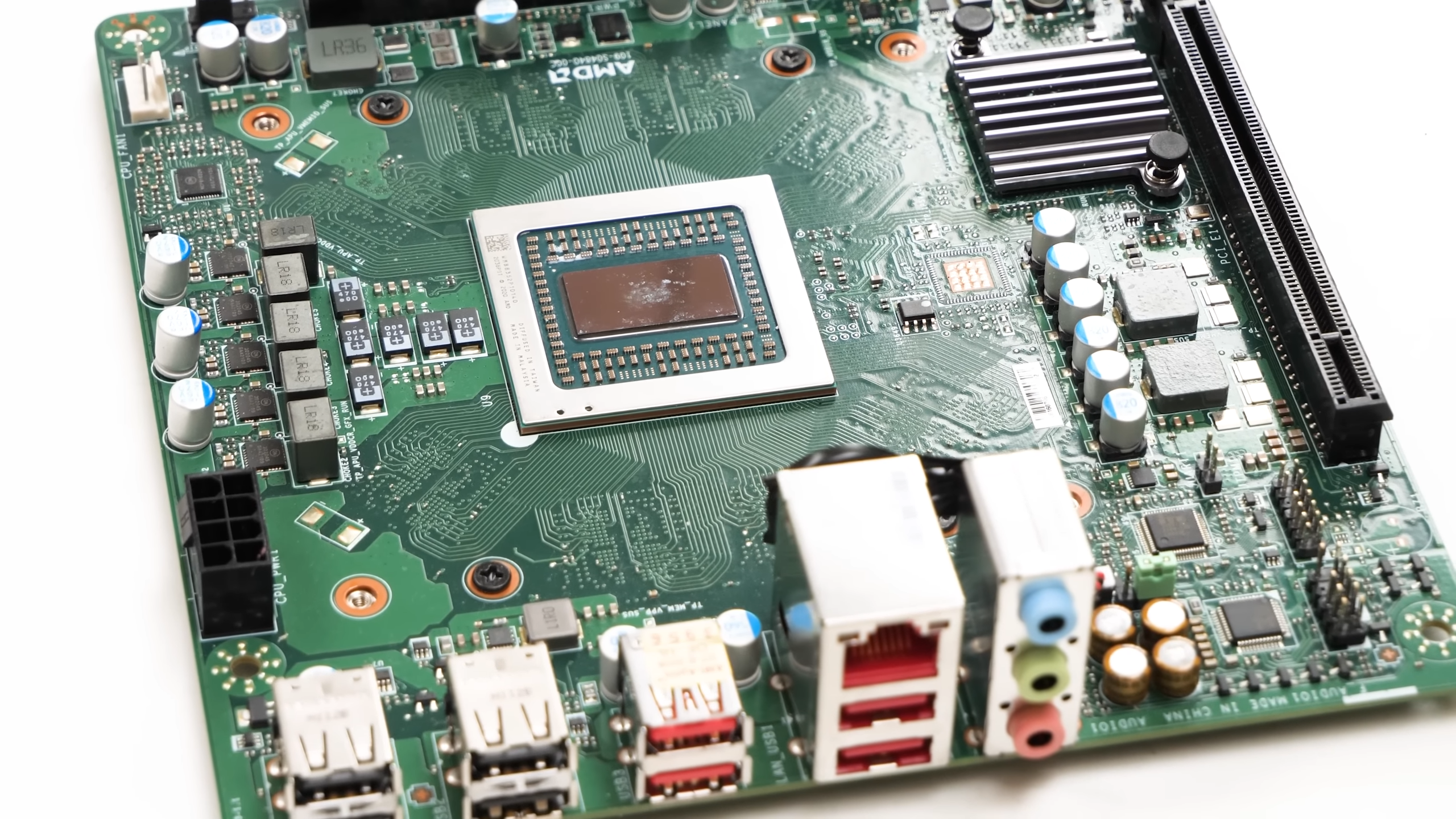

AMD developed the 4800S desktop kit to sell off Xbox Series X SoCs that lacked a functioning GPU. As a result, these kits feature no integrated graphics, leaving the CPU as the only working part of the chip. The kit comes in a micro-ATX form factor, featuring a standard AM4 Wraith cooler and enough USB ports and PCIe slots to set up an average gaming PC.

This makes the 4800S an interesting test subject, since it shows how AMD's latest console hardware performs against current PC hardware in PC games in a like-for-like comparison. The chip itself is a typical Zen 2 design with 8 cores and 16 threads. What sets the platform apart from AMD's standard desktop solutions is that it uses GDDR6 for the entire memory system and has less cache than AMD's Zen 2 desktop CPUs. Digital Foundry did not say how much cache it has, but its capacity is apparently similar to that of AMD's Ryzen 7 4750G, which has 8MB of L3 cache.

The 4800S differs slightly from the Xbox Series X hardware. Its CPU boosts higher, up to 4GHz versus 3.6GHz on Microsoft's console, and it has full access to all eight cores, unlike the Series consoles, which reserve one core for OS functions. Additionally, the 4800S kit has less than half as many GDDR6 ICs as the Series X: just four compared to ten. With no integrated GPU active, however, there's no need for all ten just to serve the CPU, and the four ICs still provide 16GB of capacity. Unfortunately, Digital Foundry does not say whether the reduced IC count affected memory bandwidth; we suspect it might be the same as on the Series X.
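Peak memory bandwidth is simple arithmetic: bus width times per-pin data rate, divided by eight. The minimal sketch below uses a hypothetical helper of our own. It assumes each GDDR6 IC contributes the standard 32-bit interface. It also reuses the Series X's 14Gbps data rate for the four-IC case, and that rate was not confirmed for the 4800S, so the second figure is purely hypothetical.

```python
# Hypothetical helper: theoretical peak bandwidth of a GDDR6 memory system,
# assuming every IC exposes the standard 32-bit GDDR6 interface and all ICs
# run at the same per-pin data rate.
GDDR6_BITS_PER_IC = 32


def gddr6_peak_bandwidth_gbs(num_ics: int, data_rate_gbps: float) -> float:
    """Bus width (bits) x per-pin data rate (Gb/s) / 8 bits per byte = GB/s."""
    bus_width_bits = num_ics * GDDR6_BITS_PER_IC
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # Xbox Series X: ten ICs (a 320-bit bus) at 14 Gb/s.
    print(gddr6_peak_bandwidth_gbs(10, 14.0))
    # Hypothetical 4800S case: four ICs, assuming the same 14 Gb/s data rate.
    print(gddr6_peak_bandwidth_gbs(4, 14.0))
```

This prints 560.0 and 224.0 (GB/s). For comparison, dual-channel DDR4-3200 peaks at 51.2GB/s (two 64-bit channels at 3.2GT/s, divided by eight).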

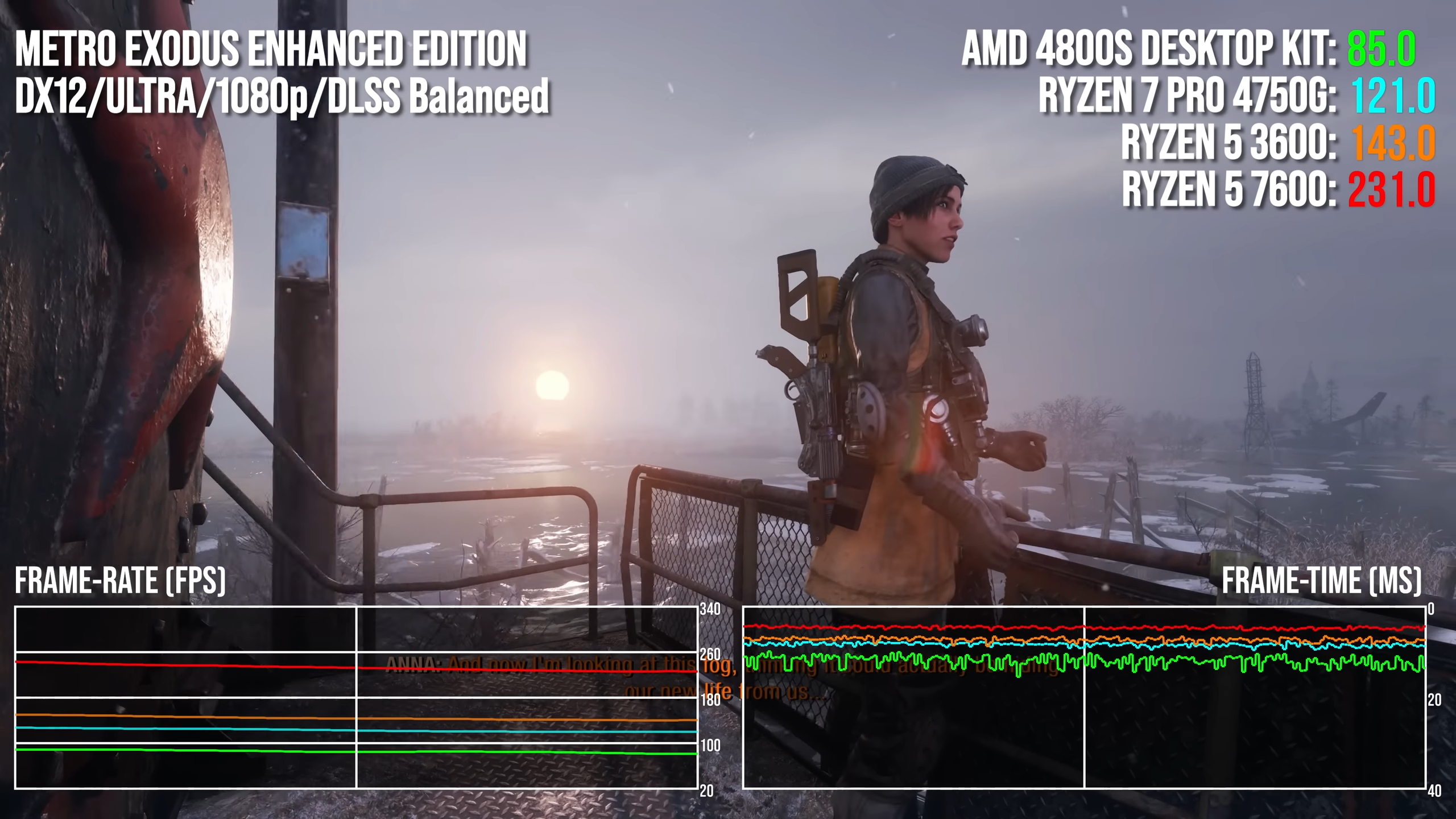

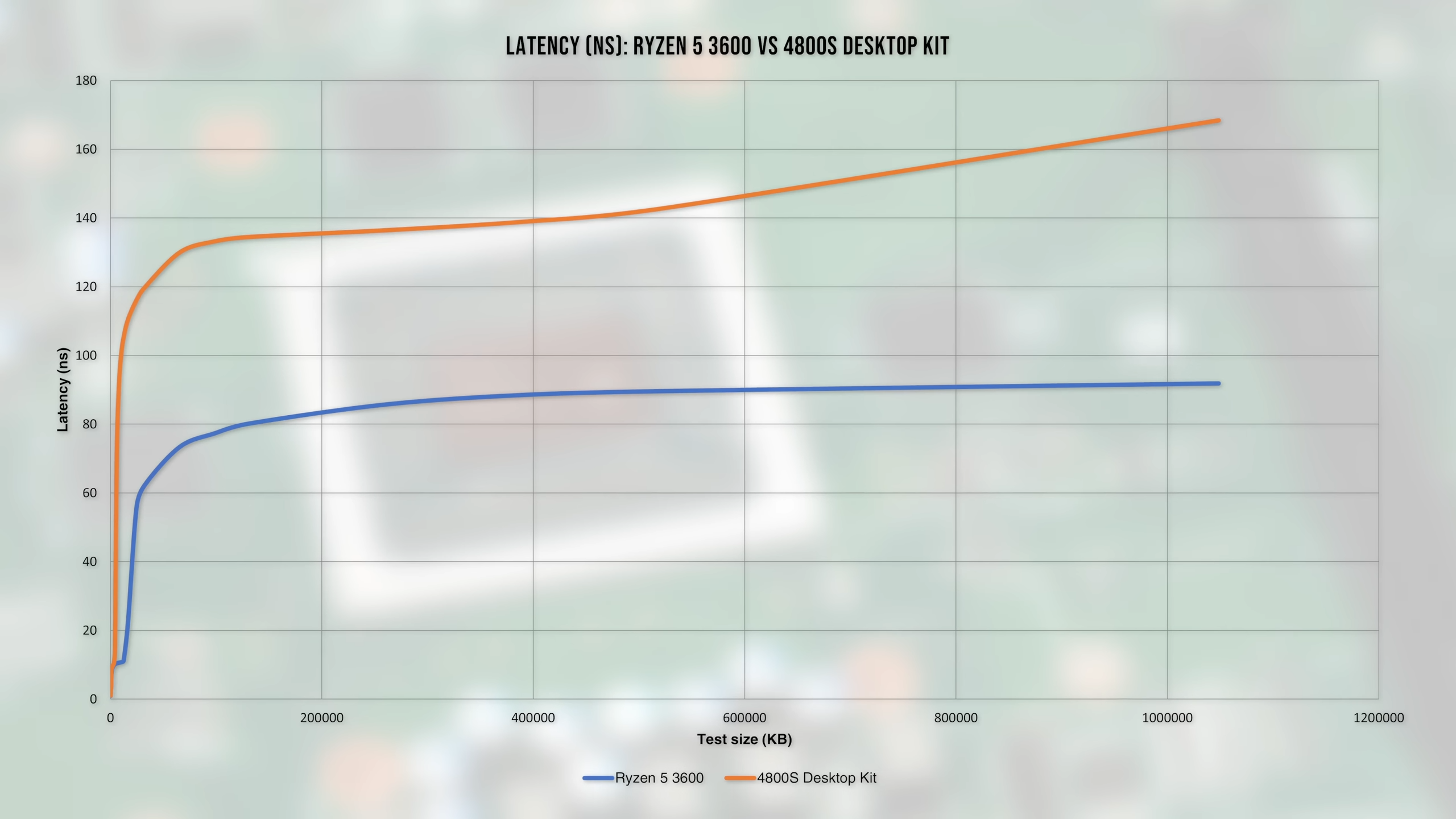

Ironically, Digital Foundry's testing revealed that the Xbox Series chip inside the 4800S desktop kit is not as good a gaming processor as one might think. The CPU failed to outperform comparable Zen 2 CPUs, including the Ryzen 5 3600 and Ryzen 7 Pro 4750G. The culprit was not the CPU cores themselves but the GDDR6 memory attached to them, which provides substantially more bandwidth than DDR4 but much higher latency.

Digital Foundry found the latency penalty severe enough that the 4800S could not outperform any of its Zen 2 counterparts in its test suite. The suite included Call of Duty: Black Ops Cold War, Cyberpunk 2077, Crysis 3 Remastered, Ashes of the Singularity, Metro Exodus: Enhanced Edition, CS:GO, Far Cry 6, Hitman 3, and Microsoft Flight Simulator, along with a few synthetic benchmarks, including memory tests.
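The article does not say which memory test Digital Foundry ran. Memory latency tests are commonly built around pointer chasing, in which every load's address comes from the previous load. That dependency stops the CPU from overlapping or prefetching the accesses, so time per step approximates raw memory latency. The minimal sketch below illustrates only the general technique, and the function names are hypothetical. It uses Sattolo's algorithm to build a random permutation that forms a single cycle, so the chase visits every slot before repeating. Python is far too slow to time nanosecond-scale loads, so this sketch only builds and walks the chain. A real test would run the same loop in C over a buffer much larger than the L3 cache, then divide elapsed time by the step count.

```python
import random


def build_chase_chain(n: int, seed: int = 0) -> list[int]:
    """Hypothetical helper: next-index array forming one random cycle over n slots."""
    rng = random.Random(seed)
    chain = list(range(n))
    # Sattolo's algorithm: like Fisher-Yates, but j is strictly less than i,
    # which yields a uniformly random cyclic permutation (a single n-cycle).
    for i in range(n - 1, 0, -1):
        j = rng.randrange(i)  # 0 <= j < i
        chain[i], chain[j] = chain[j], chain[i]
    return chain


def chase(chain: list[int], steps: int, start: int = 0) -> int:
    """Follow the chain; each index depends on the value loaded before it."""
    idx = start
    for _ in range(steps):
        idx = chain[idx]
    return idx


def cycle_length(chain: list[int], start: int = 0) -> int:
    """Count steps until the chase returns to its starting slot."""
    idx, length = chain[start], 1
    while idx != start:
        idx = chain[idx]
        length += 1
    return length


if __name__ == "__main__":
    chain = build_chase_chain(1000, seed=42)
    print(cycle_length(chain))  # a single cycle covers all 1000 slots
```

Because the next address is unknown until the current load completes, the loop cannot be hidden by prefetching. This is the property that exposes GDDR6's latency.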

Despite a two-core advantage over the 3600 and a respectable 4GHz clock speed, the 8-core console chip could not outperform the 3600 or the 4750G in most games tested. At best, it matched the 4750G, which has less L3 cache than the 3600, and even that happened only in best-case scenarios, not consistently.


This shows how crucial cache and memory latency are to CPU-side gaming performance, and why GDDR6 isn't paired with modern desktop CPUs. GDDR6 offers excellent bandwidth, but as Digital Foundry showed, it comes at a considerable cost in memory latency, which matters more than bandwidth for gaming CPUs.
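One standard way to see why cache capacity and memory latency compound is the average memory access time (AMAT) model: AMAT = hit time + miss rate × miss penalty. The minimal sketch below applies it to a single last-level cache backed by DRAM. The helper name is our own. The hit time, miss rates and latencies are made-up round numbers chosen for easy arithmetic, not measurements of the 4800S, 3600 or 4750G.

```python
def amat_ns(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Hypothetical helper: average access time for one cache level backed by DRAM."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Illustrative numbers only; miss_rate is the fraction of L3 lookups that go to DRAM.
L3_HIT_NS = 10.0
SCENARIOS = [
    ("bigger L3, low-latency DRAM", 0.125, 80.0),
    ("smaller L3, low-latency DRAM", 0.25, 80.0),
    ("smaller L3, high-latency DRAM", 0.25, 140.0),
]

if __name__ == "__main__":
    for label, miss_rate, dram_ns in SCENARIOS:
        print(f"{label}: {amat_ns(L3_HIT_NS, miss_rate, dram_ns):.1f} ns")
```

With these assumed inputs, the three cases come out to 20, 30 and 45 ns. A worse hit rate and a longer miss penalty multiply, so a chip that has both less cache and slower memory loses on two fronts at once.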

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

bit_user

Thanks for posting, but I already saw benchmarks of this (including latency) months ago. I forget where.

What's interesting is that it did very nicely on memory bandwidth, matching or possibly even beating the bandwidth of anything out there on DDR4. And keep in mind that the CPU portion of the SoC doesn't get access to the entire GDDR6 data rate.

MooseMuffin

This write-up makes it sound like the choice of GDDR6 is just a bad decision, but it's there for the graphics, and this chip doesn't have a working GPU.

bit_user

Kamen Rider Blade said: "Low Latency matters for Real Time Gaming & Responsiveness in Applications"

Memory latency is on the order of 100 nanoseconds (10^-7 s), while humans can only perceive latency on the order of tens of milliseconds (10^-2 s). So, that's a difference of 5 orders of magnitude, or a ratio of about 100k.

Therefore, the only way you would perceive higher-latency memory is if you're running CPU-bound software that makes heavy use of main memory and gets a comparatively poor L3 cache hit rate. That covers some games, but not all.

Furthermore, it's probably not much different than if you just had low-latency memory that was also a lot lower-bandwidth. In other words, it's just one of many performance parameters that can potentially affect system performance if it's worsened. It's not that special, particularly when you consider that CPUs can use techniques like caches, prefetching, and SMT to partially hide it.

bit_user

MooseMuffin said: "This write-up makes it sound like the choice of GDDR6 is just a bad decision, but it's there for the graphics, and this chip doesn't have a working GPU."

Exactly. Sony and MS knew what they were doing when they opted for a unified pool of GDDR6 memory. The goal was to build the fastest machine possible at a specific price point.

I wonder what the fastest new machine you could have specced out with mid-2020-era hardware at mid-2020 MSRPs would have been, for the console's price. Or, if you tried to build an identically performing machine at launch (again, assuming non-pandemic prices), how much would it have cost?

tamalero

bit_user said: "Memory latency is on the order of 100 nanoseconds (10^-7), while humans can only perceive latency on the order of 10s of milliseconds (10^-2). ..."

I'd say human perception is irrelevant here, as a single delay could cause a cascade of events in computers that slows the chain down even further, until we see what we call "low fps".

bit_user

tamalero said: "I'd say human perception is irrelevant here, as a single delay could cause a cascade of events in computers that slows the chain down even further, until we see what we call 'low fps'."

Although they have limits, modern computers are designed with queues, buffering, prefetching, speculation, threading, etc. to maximize system throughput, even in the face of heavy memory accesses.

It's pretty rare for one of these mechanisms to backfire, but it's certainly possible. Cache thrashing is a classic example, where a problematic access pattern can cause performance an order of magnitude worse than raw memory bandwidth should be able to support. Something similar (but hopefully not nearly as bad) could happen with prefetching and speculative execution.

If games are well-optimized, they should mostly avoid those pitfalls. If not, then something like cache thrashing could be compounded by longer memory latency, but the effect should still be linear (i.e. only proportional to however much worse the latency is). Nonlinearities are where things get interesting: your working set grows by just a little, but it's enough to blow out of L3 cache, and suddenly you're hit with that huge GDDR6 latency.
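The working-set cliff described above is easy to reproduce in a toy model. The minimal sketch below uses a hypothetical helper to simulate a fully associative LRU cache and loop repeatedly over a working set. Real L3 caches are set-associative and don't use pure LRU, so this is a simplification. While the working set fits, every access after the first pass hits. With one line more than the cache holds, the cyclic pattern evicts each line just before it is needed again, and the hit rate collapses to zero.

```python
from collections import OrderedDict


def lru_hit_rate(cache_lines: int, working_set: int, passes: int = 10) -> float:
    """Hypothetical helper: hit rate of a fully associative LRU cache
    for repeated cyclic scans over `working_set` distinct lines."""
    cache = OrderedDict()  # keys ordered from least to most recently used
    hits = accesses = 0
    for _ in range(passes):
        for line in range(working_set):
            accesses += 1
            if line in cache:
                hits += 1
                cache.move_to_end(line)  # mark as most recently used
            else:
                cache[line] = None
                if len(cache) > cache_lines:
                    cache.popitem(last=False)  # evict the least recently used line
    return hits / accesses


if __name__ == "__main__":
    for ws in (1000, 1024, 1025, 1100):
        print(ws, round(lru_hit_rate(1024, ws), 3))
```

With a 1,024-line cache, working sets of 1,000 and 1,024 lines reach a 0.9 hit rate (only the first pass misses). At 1,025 and 1,100 lines the rate drops to 0.0, and every access pays full memory latency.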

Thunder64

bit_user said: "Thanks for posting, but I already saw benchmarks of this (including latency) months ago. I forget where. ..."

Probably here. It is well known that GDDR trades latency for bandwidth. GPUs like bandwidth, as they are very parallel. CPUs want low latency.
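The GPU-versus-CPU point can be made concrete with Little's law: sustained throughput equals the amount of data in flight divided by latency. A core with only a handful of outstanding cache misses is capped by latency, while a GPU that keeps thousands of requests in flight can saturate even high-latency GDDR6. The minimal sketch below uses a helper name of our own. The request counts and latencies are hypothetical, picked only to show the shape of the relationship. The 64-byte line size matches Zen 2's cache lines.

```python
LINE_BYTES = 64  # cache-line size on Zen 2 (and x86 CPUs generally)


def achievable_bandwidth_gbs(requests_in_flight: int, latency_ns: float,
                             peak_gbs: float, line_bytes: int = LINE_BYTES) -> float:
    """Hypothetical helper: Little's law bandwidth, capped at the bus peak.

    Bytes per nanosecond is numerically equal to GB/s (10^9 bytes per second).
    """
    littles_law_gbs = requests_in_flight * line_bytes / latency_ns
    return min(littles_law_gbs, peak_gbs)


if __name__ == "__main__":
    # Assumed: a CPU core with 16 misses in flight on DDR4-3200 (51.2 GB/s peak).
    print(achievable_bandwidth_gbs(16, 80.0, 51.2))
    # The same core on higher-latency GDDR6 with a 560 GB/s peak.
    print(achievable_bandwidth_gbs(16, 128.0, 560.0))
    # Assumed: a GPU with 4096 requests in flight saturates the GDDR6 bus.
    print(achievable_bandwidth_gbs(4096, 256.0, 560.0))
```

Under these made-up numbers, the single core gets 12.8GB/s from DDR4 but only 8GB/s from GDDR6, despite a roughly tenfold higher peak. The GPU, by contrast, is limited only by the bus at 560GB/s.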

InvalidError

bit_user said: "Memory latency is on the order of 100 nanoseconds (10^-7), while humans can only perceive latency on the order of 10s of milliseconds (10^-2). So, that's 5 orders of magnitude difference, or a ratio of about 100k."

Memory latency penalty hits are cumulative. While individual events may be imperceptible at the human scale, their cumulative effect when they occur by the millions per second does become macroscopically evident, and that is how stutters go away when you do things like upgrade from 2400-18 memory to 3200-16.

bit_user said: "Although they have limits, modern computers are designed with queues, buffering, prefetching, speculation, threading, etc. to maximize system throughput, even in the face of heavy memory accesses."

You can throw all of the cache, buffering, prefetching, etc. you want at a CPU; there will always be a subset of accesses that cannot be predicted or mitigated, with some algorithms being worse offenders than others. Good luck optimizing memory accesses for things that use hashes to index into a sparse array, such as a key-value store, to name one common example. Parsing is chock-full of input-dependent conditional branches that the CPU cannot do anything about until the inputs are known, and the outcomes will likely either get stuffed into a key-value store or require looking something up in one.

GPUs don't mind GDDRx's increased latency because most conventional graphics workloads are embarrassingly parallel and predictable.
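The memory upgrade InvalidError mentions is easy to quantify. First-word CAS latency in nanoseconds is the CL count times the clock period. Because DDR transfers twice per clock, that works out to CL × 2000 / data rate in MT/s. This covers only the CAS component. Full main-memory latency (on the order of 100 ns, per bit_user) also includes other DRAM timings and time spent in the memory controller and interconnect. The cumulative figure in the minimal sketch below assumes a hypothetical 10 million DRAM accesses per second that the CPU cannot overlap, which is a worst case. The helper name is our own.

```python
# Hypothetical helper; the CL and data-rate values come from the "2400-18" and
# "3200-16" kits mentioned above, and everything else is assumed.


def cas_latency_ns(cl: int, data_rate_mts: int) -> float:
    """First-word CAS latency: CL cycles of an I/O clock running at data_rate / 2 MHz."""
    return cl * 2000 / data_rate_mts


old_ns = cas_latency_ns(18, 2400)  # 15.0 ns
new_ns = cas_latency_ns(16, 3200)  # 10.0 ns

# Worst-case assumption: 10 million DRAM accesses per second that the CPU
# cannot overlap, so every nanosecond saved removes a nanosecond of stall.
MISSES_PER_SECOND = 10_000_000
saved_ms_per_second = MISSES_PER_SECOND * (old_ns - new_ns) / 1e6  # ns -> ms

print(f"{old_ns} ns -> {new_ns} ns, saving {saved_ms_per_second} ms of stall per second")
```

Five nanoseconds is imperceptible once, but in this serialized worst case it adds up to 50 ms of stall every second. Real CPUs overlap many misses, so actual gains would be smaller.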

JamesJones44

I'm not sure I would call this a like-for-like comparison. From a pure CPU point of view, I get it and agree. However, without being able to test the integrated GPU against an equivalent dGPU, it's hard to say whether there are any benefits over a straight-up Zen 2 build. This is especially true for the frame rate tests.

I also wonder how optimized the drivers are for the 4800S and its features. My guess is not very, but for the sake of argument I'll assume they are optimized enough.