AMD Unveils 7nm EPYC Rome Processors, up to 64 Cores and 128 Threads for $6,950

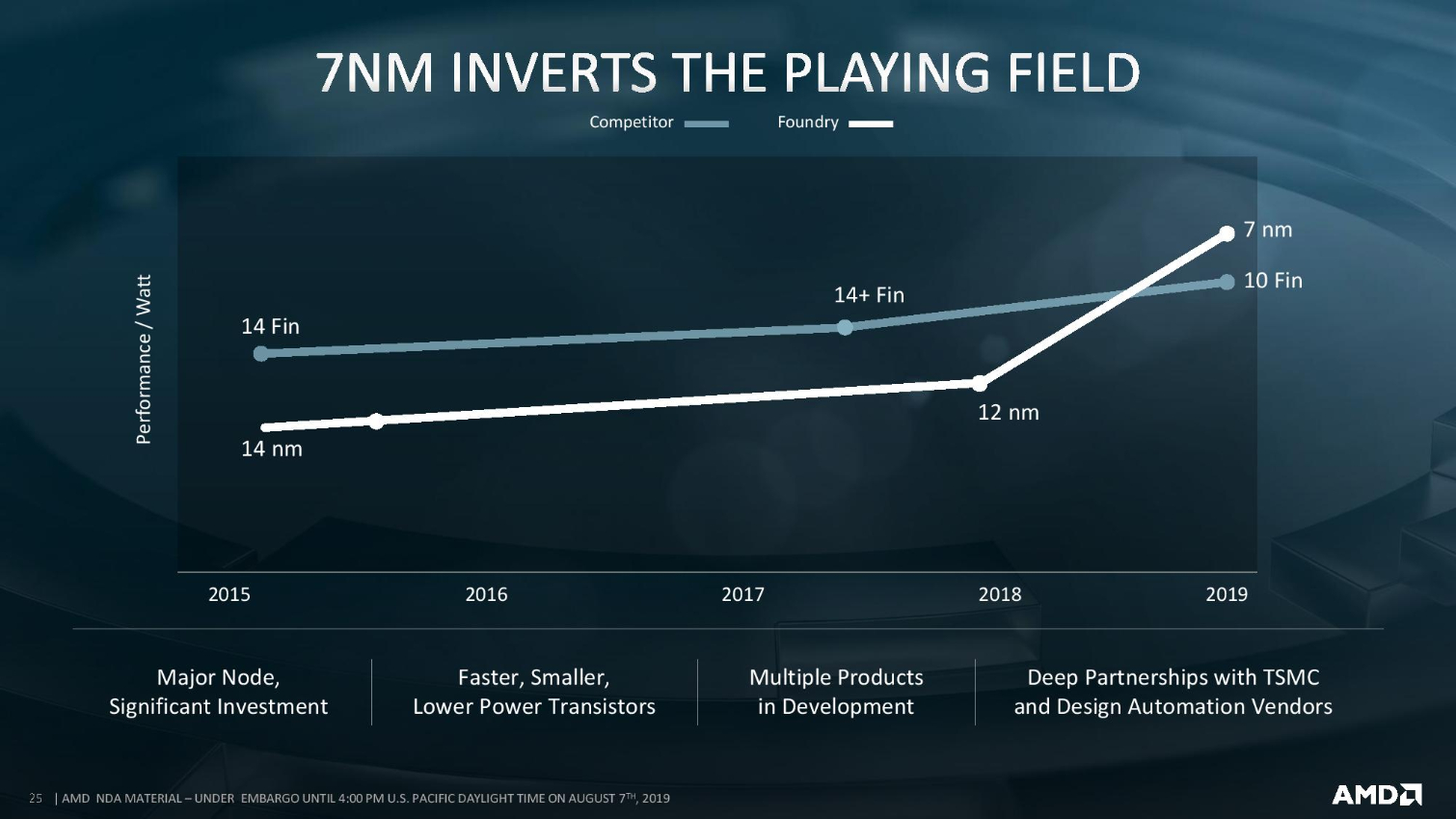

AMD's launch of its 7nm processors marked the first time in the company's history that it had wrested the process lead from Intel, an advantage that can't be overstated. With TSMC's 7nm process paired with AMD's chiplet-based Zen 2 microarchitecture under the hood, AMD's EPYC Rome processors are widely thought to be the turning point that will allow the company to take a serious chomp out of Intel's ~95% data center market share. Even stealing 20% of the server market would have a truly transformative impact on the perennial underdog AMD: Consider that Intel generates more profit in a single day than AMD generates in an entire quarter, and you can get a sense of the seemingly insurmountable odds that AMD has overcome to get to this moment.

The debut of AMD's EPYC Rome processors marks not only the culmination of the company's big bets made years in advance, shrewd go-to-market strategies, and clever engineering, but it could also mark the beginning of the biggest upset in semiconductor history.

As always, it all starts with the silicon, but the data center also requires an extended period of development on multiple fronts, like operating system and software optimizations, OEM relationships, and a robust hardware ecosystem. That applies doubly so for a totally new and unique architecture like Zen.

AMD's first-gen EPYC Naples processors allowed the industry to familiarize itself with the new Zen microarchitecture. The processors offered some advantages over Intel's Xeon chips, but big shifts take time, and Naples lacked a killer feature that would spur the industry to switch to AMD silicon en masse. That matters in an industry that is notoriously conservative about adopting new architectures.

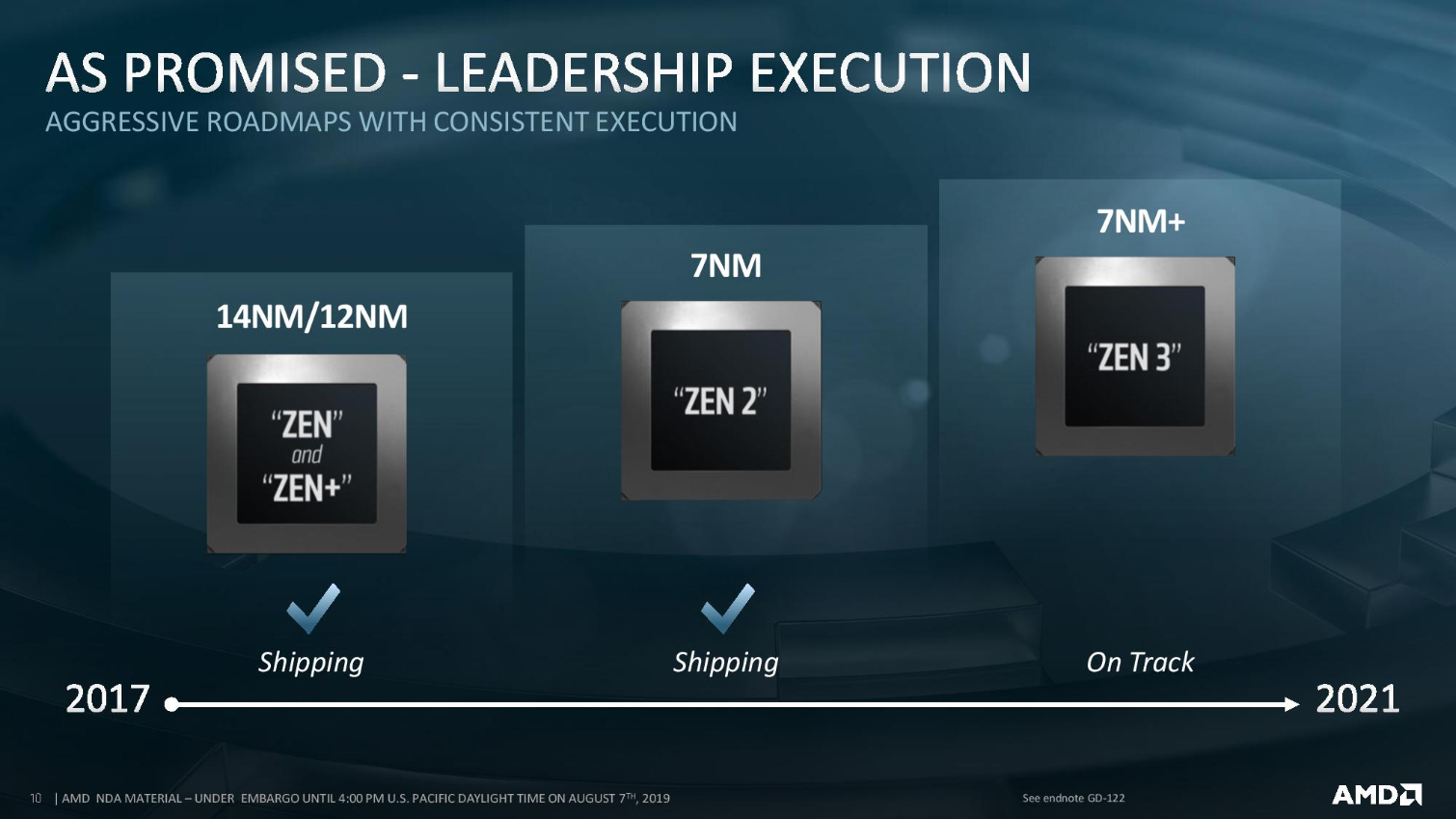

With the Naples silicon on the market (it debuted in 2017), AMD had to make yet another big decision with profound implications: The company could move EPYC to an incrementally faster and more efficient 12nm process like it did with its desktop chips, or it could focus on moving directly to the 7nm process.

AMD chose to go for the jugular, as it were, and plowed ahead to the 7nm process, giving it a killer feature that sets the stage for radical improvements in density and power consumption.

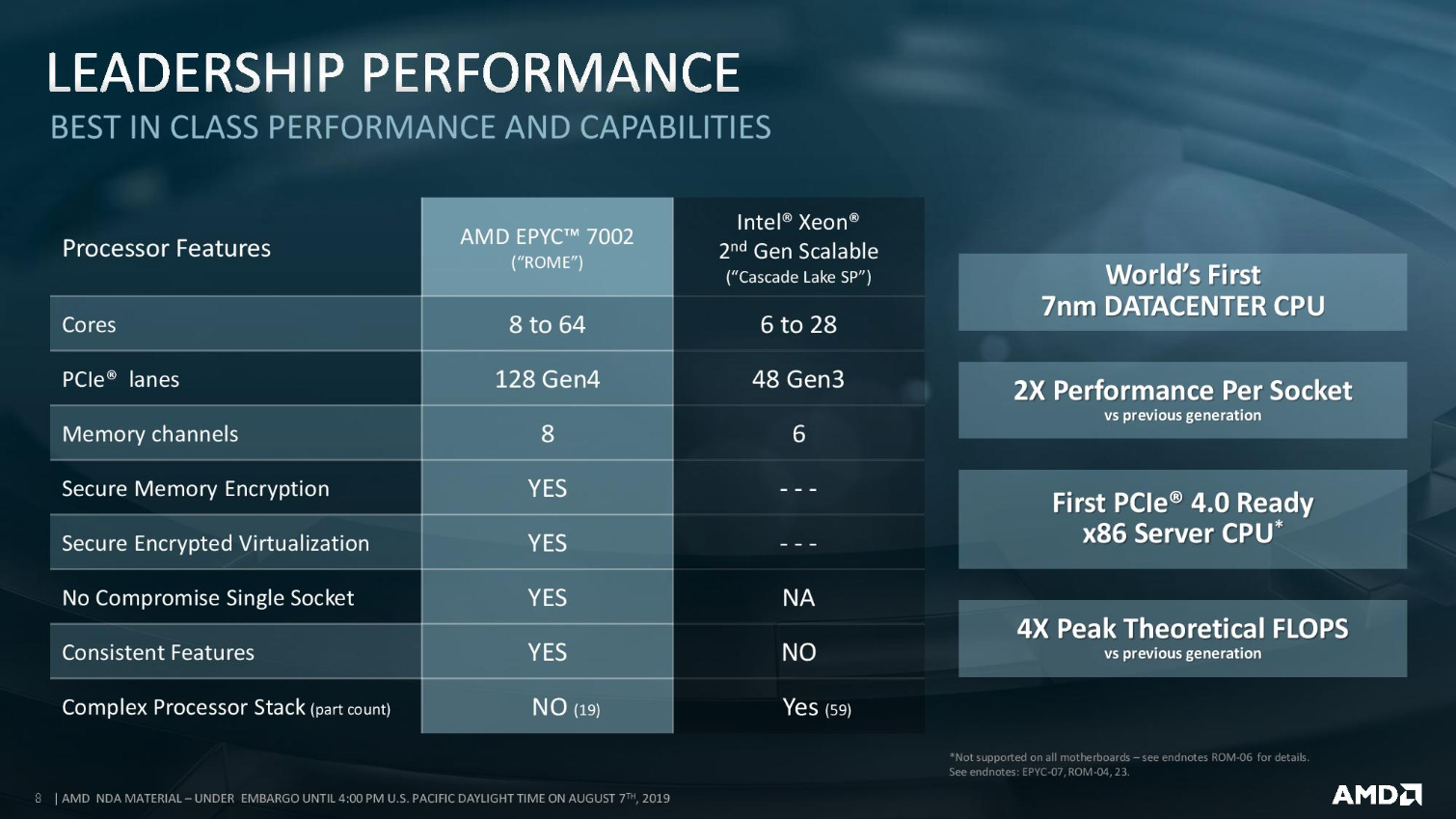

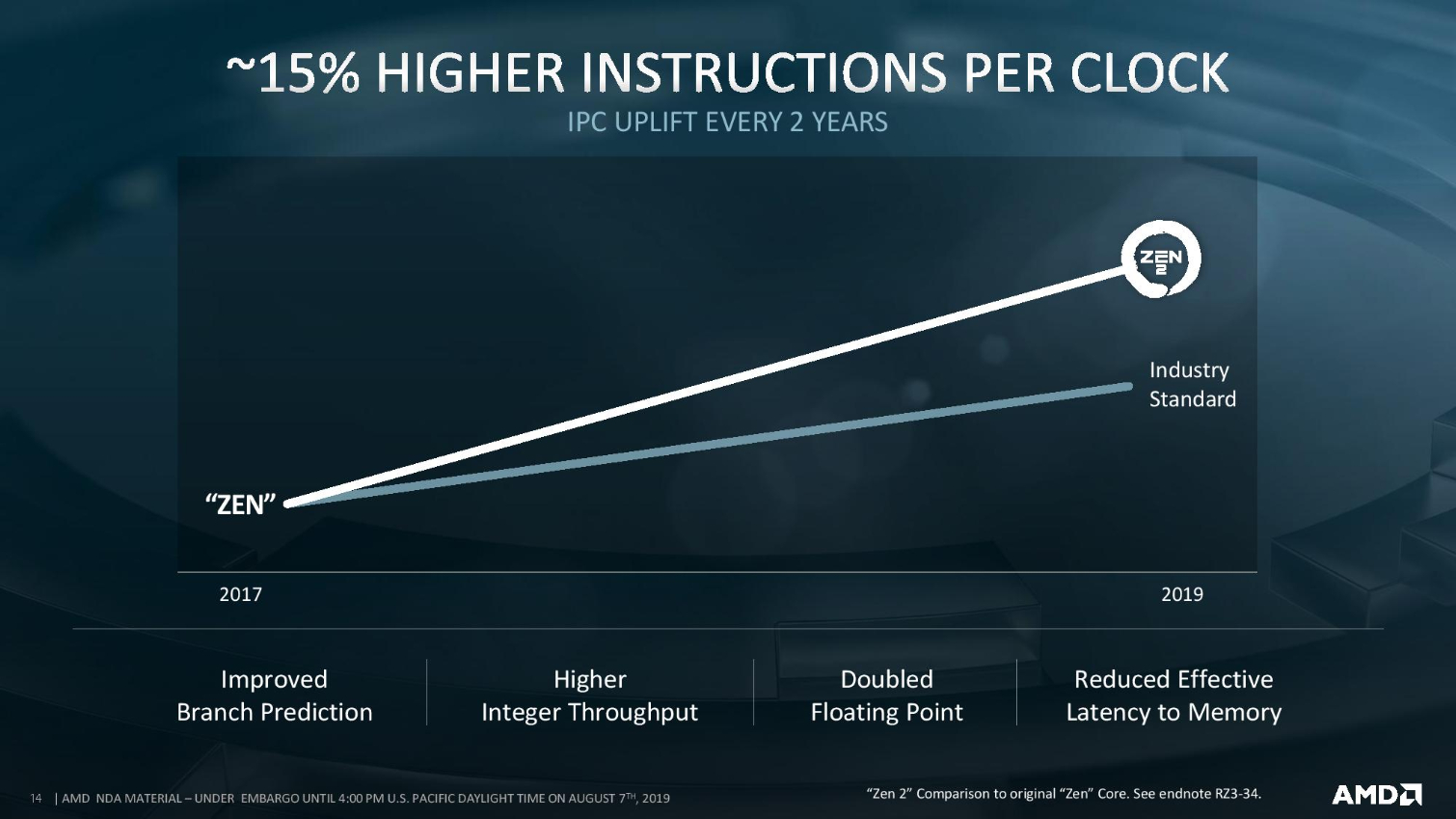

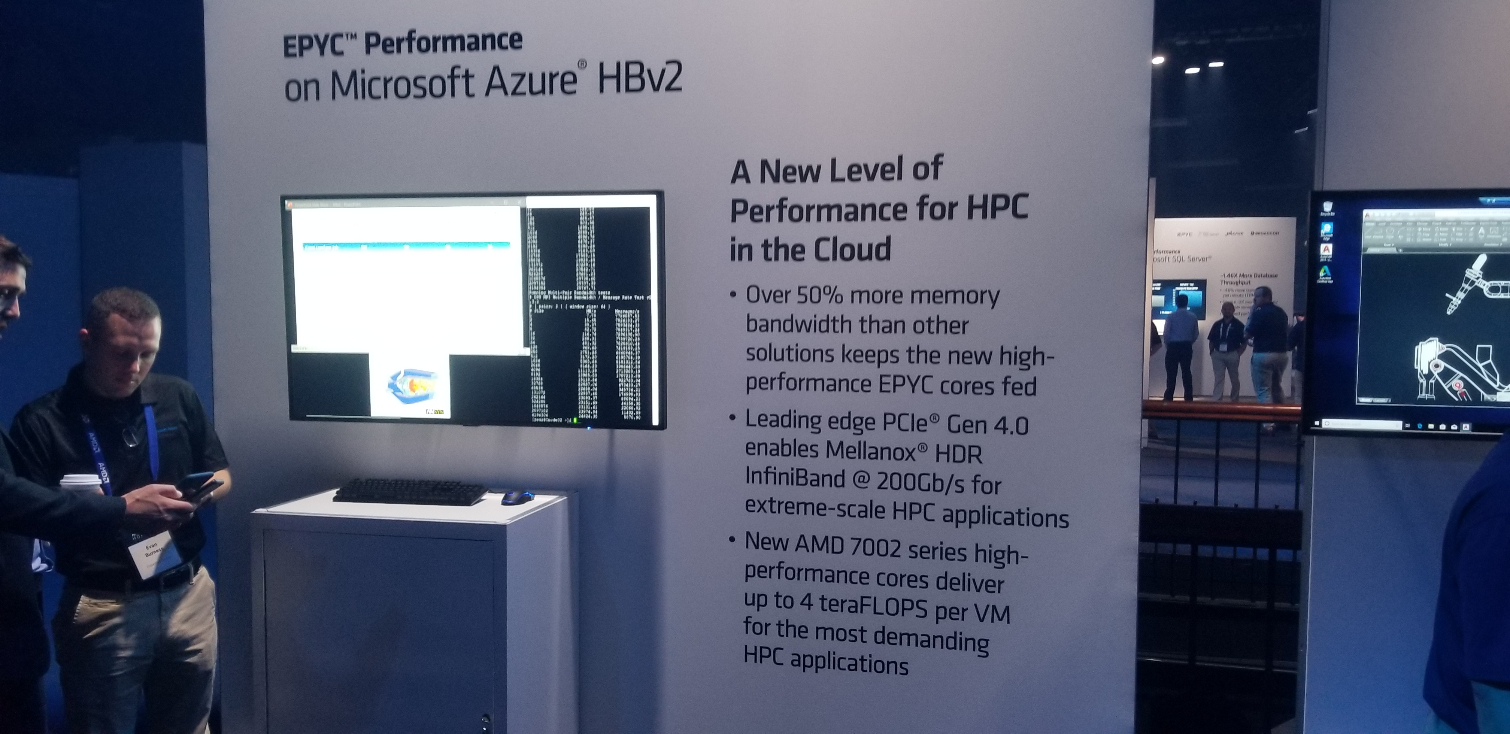

The 7nm process brings density advantages over Intel's 14nm, which equates to higher core counts. It also brings power reductions (albeit with a 12nm I/O die caveat) that lead to more work done per watt (a critical consideration in the data center), higher clock speeds, more cache, and ultra-competitive pricing. Pair that with inherent cost and yield advantages of a chiplet-based design, a revamped Zen 2 architecture that brings an ~15% uplift to instructions per cycle (IPC) throughput, a fast move to PCIe 4.0, and an industry-leading serving of memory channels and throughput for x86 processors, and EPYC is no longer seen as the Intel "alternative." Now it's perceived as the leader in terms of leading-edge features that attract the heavyweights of the industry, as evidenced by the explosive uptake of Rome in the HPC and supercomputing space.


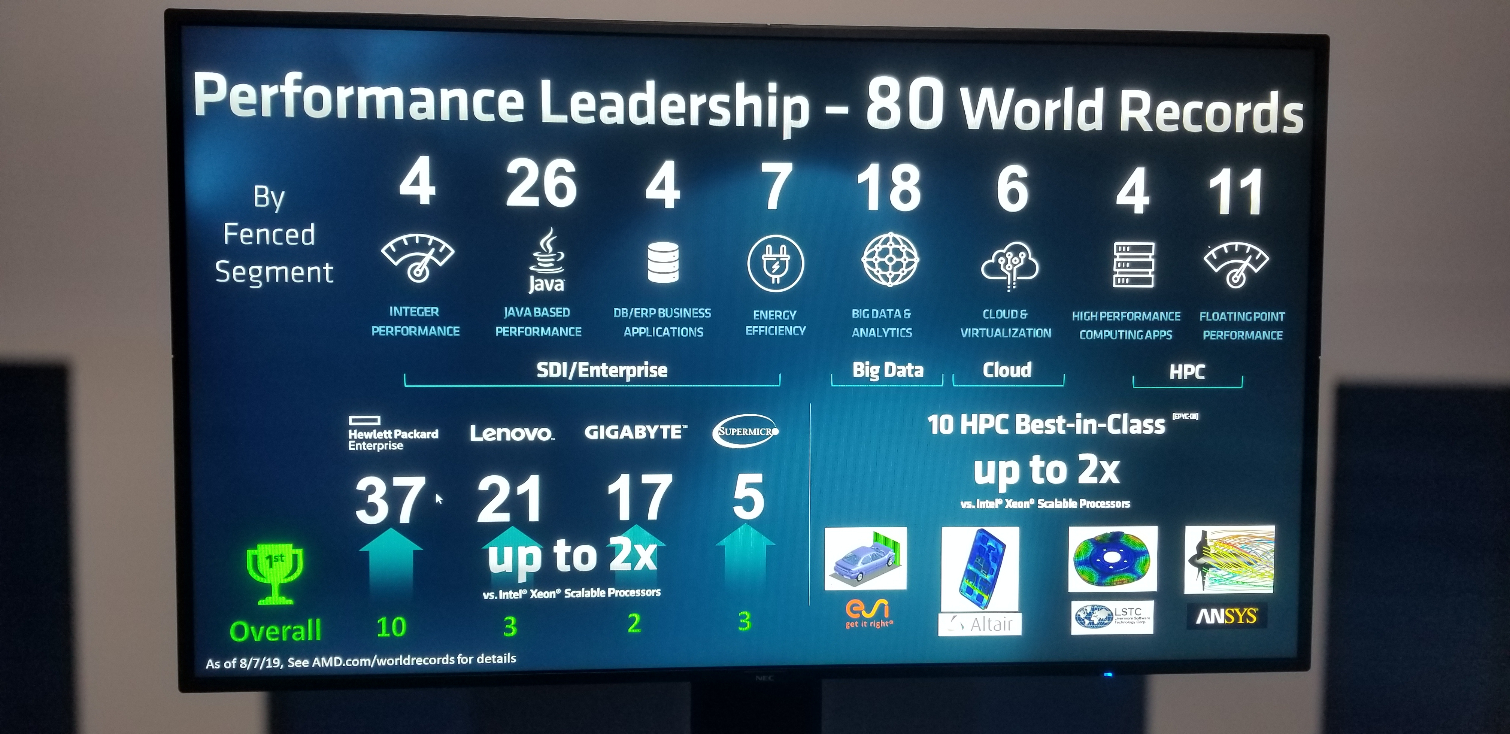

Today the rationale behind those early moves becomes clear: AMD's partners will post 80 world records, the highest number of world records AMD has had for its data center silicon. Impressively, these records come as big upsets, with margins that range from 40-50% up to 80% in a wide range of real-world workloads. That performance uplift comes from quadrupled peak floating-point performance and larger L3 caches that also help in AI/ML workloads, as well as industry-leading I/O capabilities that double throughput to GPU accelerators (not to mention supporting more accelerators per server). The increased PCIe 4.0 throughput also benefits storage devices, particularly with bursts from main memory.

The desktop PC market grabs the flashbulbs (just look at the excitement that surrounded the Ryzen 3000 launch), but make no mistake: the data center is the land of big margins paired with high volumes. The data center is the king-maker; just look to Intel for validation of that fact.

If AMD is going to win the larger war with Intel, it must win the data center battle. But Intel isn't just sitting idly by. Let's see what the battle for the data center looks like over the coming years.

The AMD EPYC Rome Processors

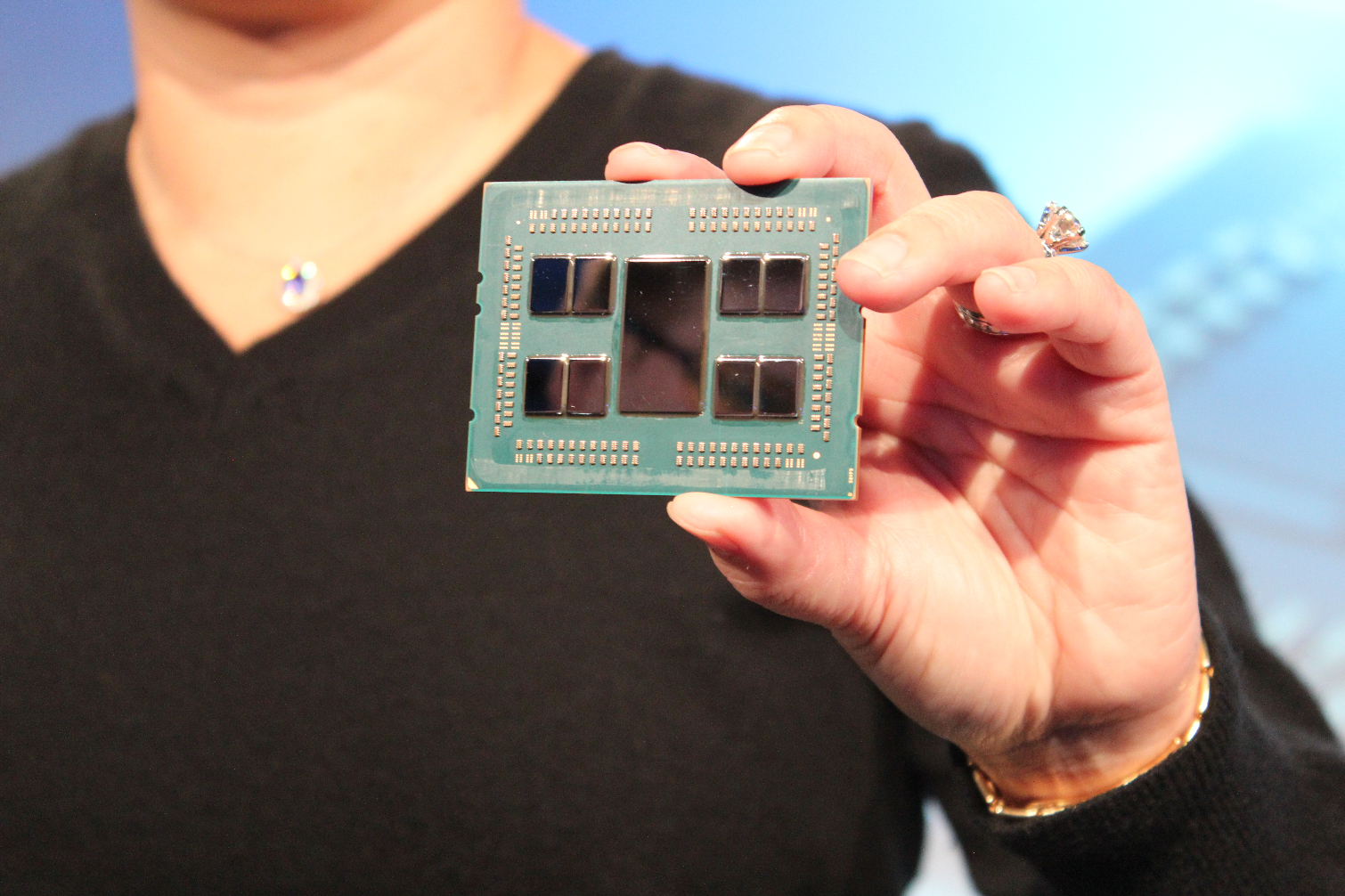

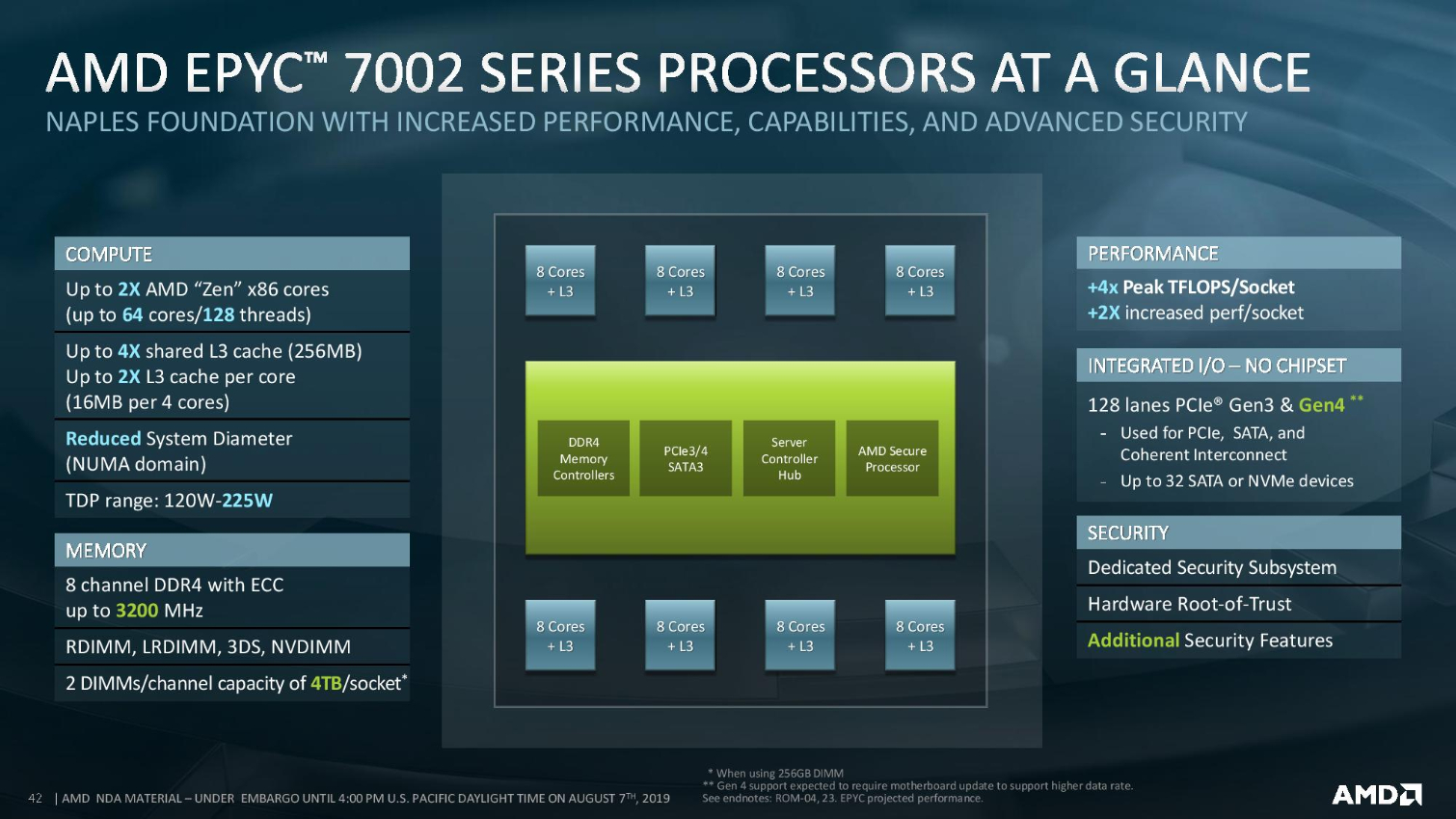

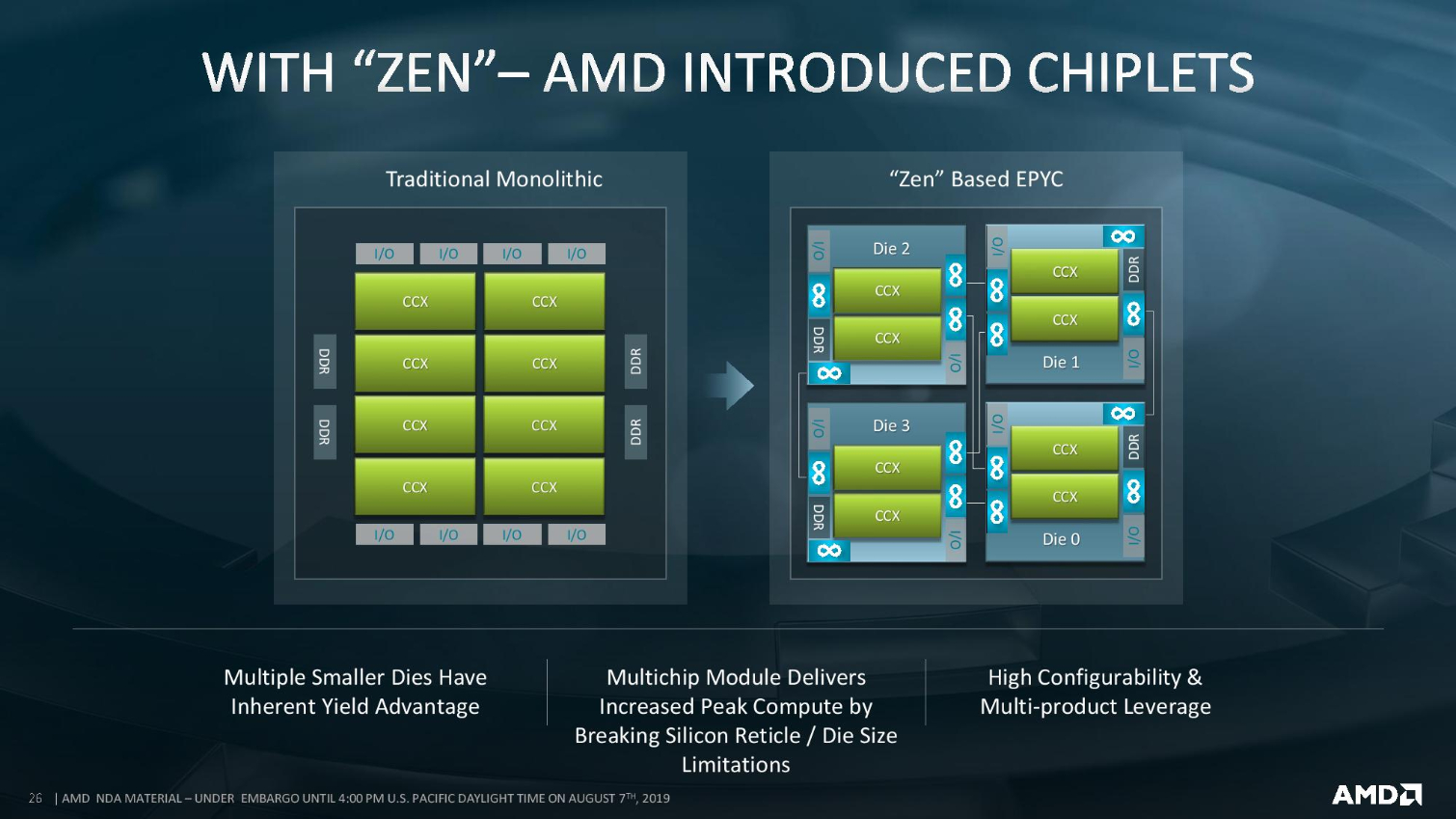

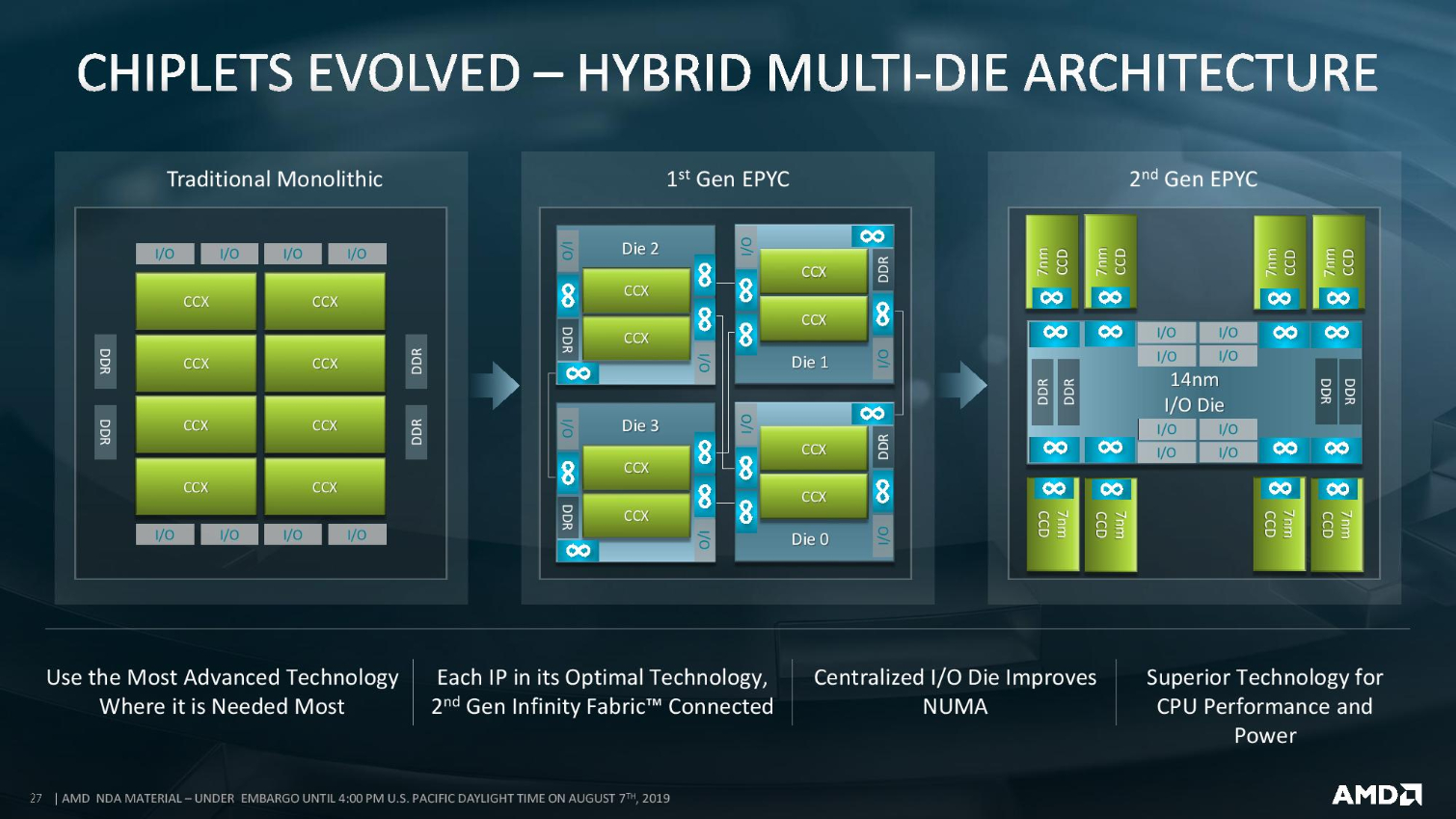

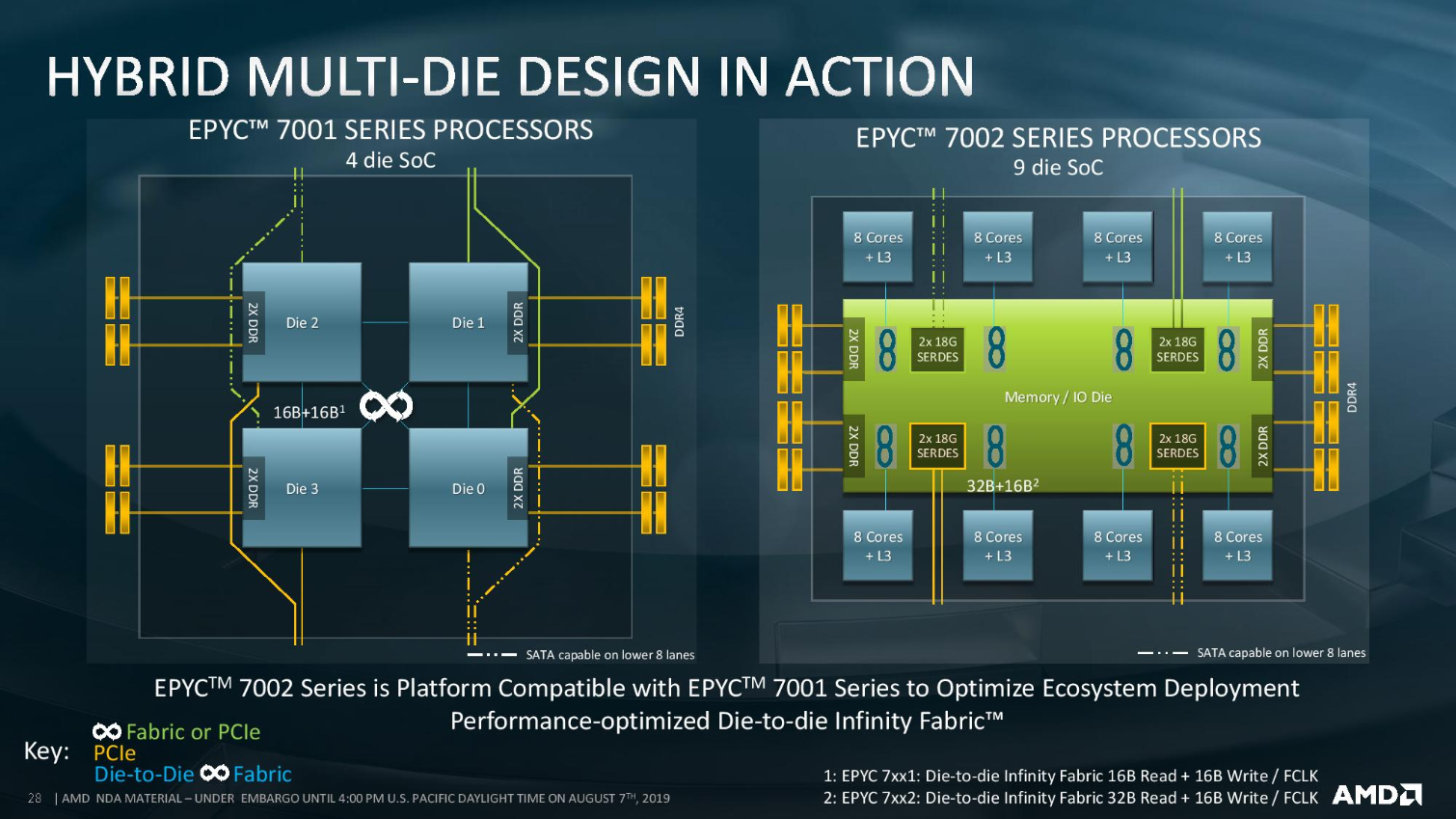

The EPYC Rome SoCs come with a unique architecture that consists of up to eight 7nm compute die with eight cores apiece, connected via the Infinity Fabric to a central 12nm I/O die that houses the memory and PCIe controllers. AMD tailors the number of compute chiplets, and the number of active cores, for each specific model.

The processors drop into the Socket SP3 (FCLGA 4094) interface, which is backward compatible with Naples platforms (albeit without PCIe 4.0 connectivity) and forward compatible with next-gen EPYC Milan models. Custom-built platforms can expose up to 162 lanes of PCIe 4.0 to the user through clever provisioning tricks, while most standard implementations will wield 128 lanes.

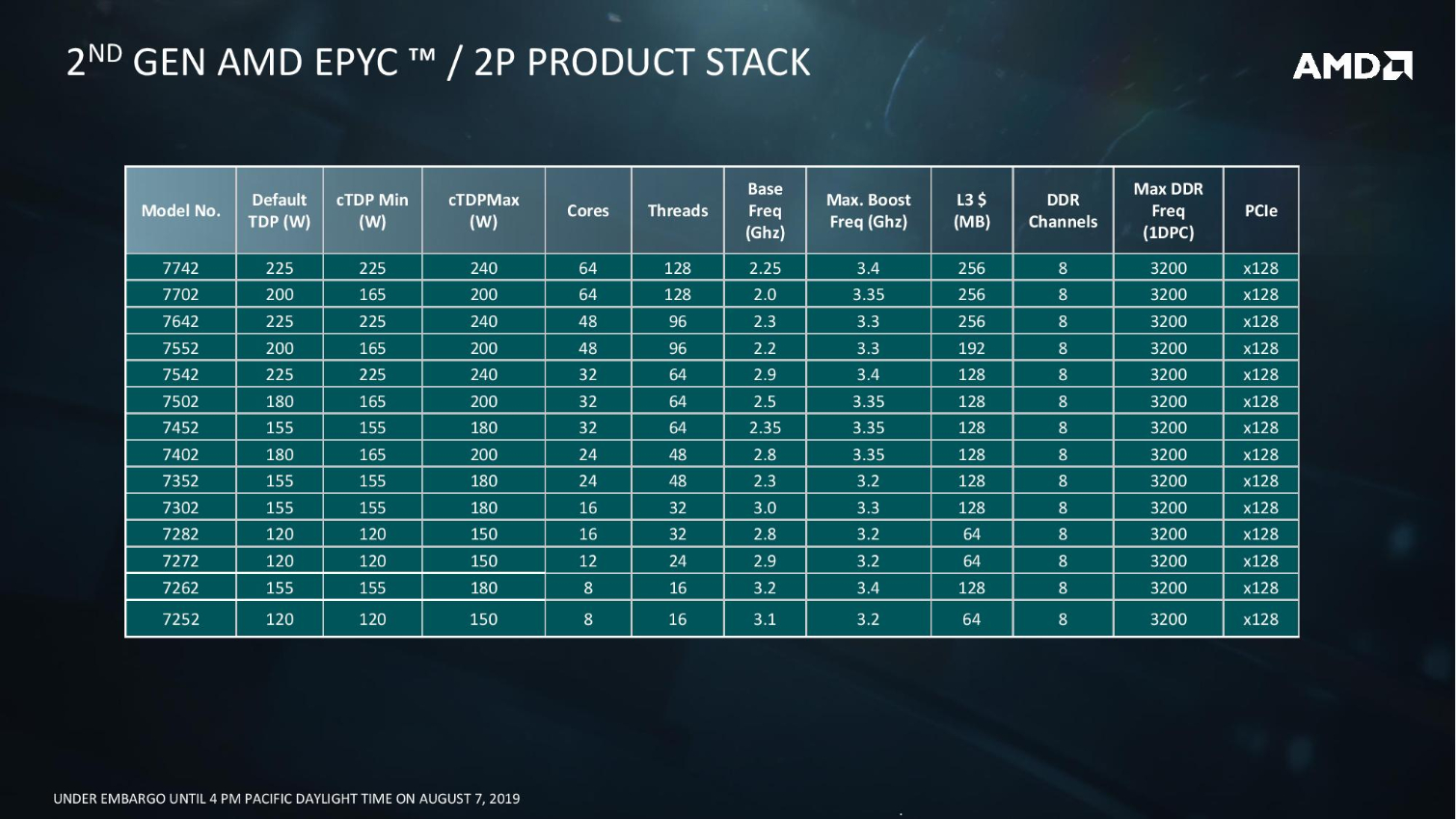

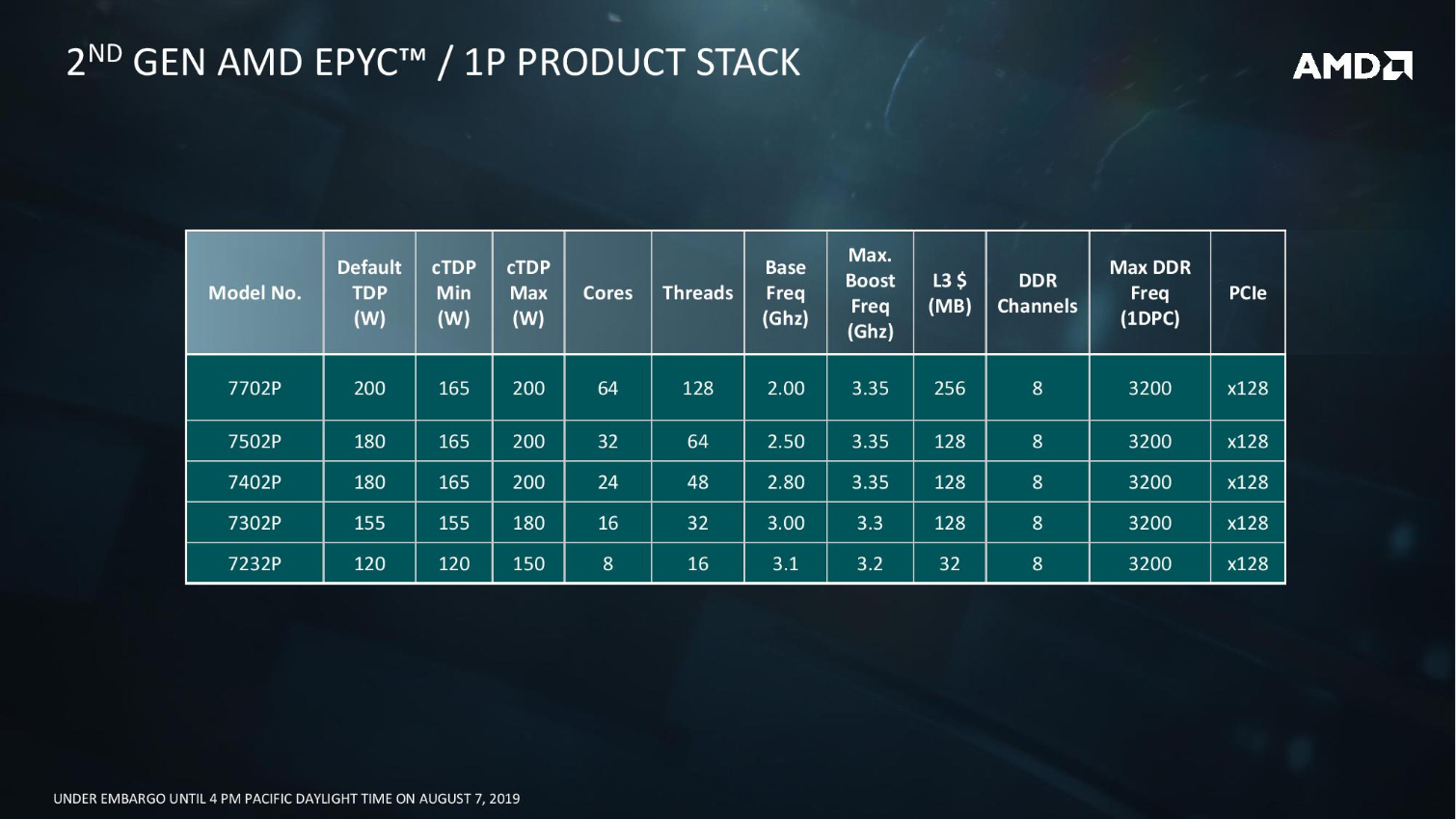

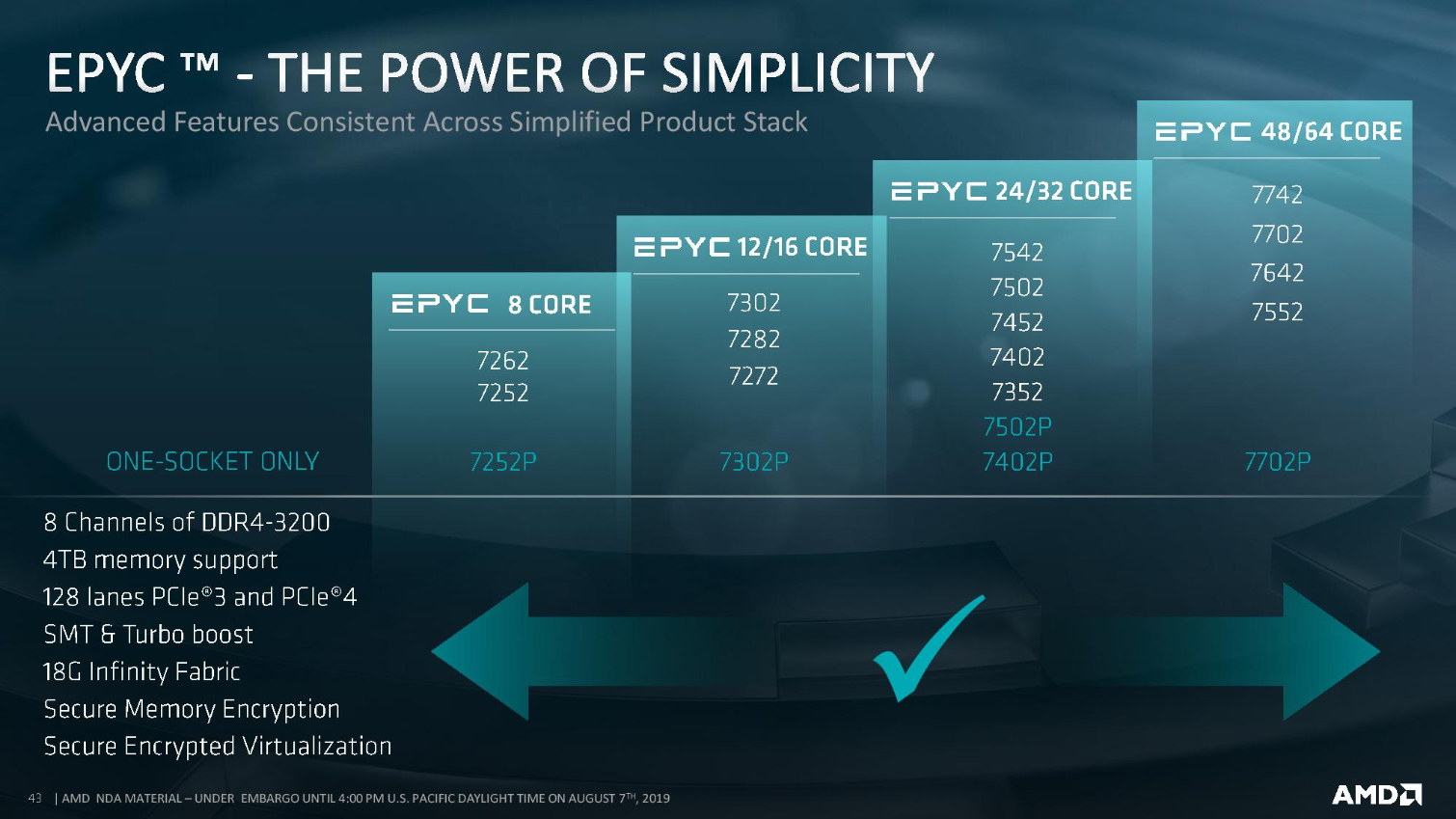

AMD continues its practice of offering specific models for two-socket servers (2P), and models for single-socket servers (denoted by a "P" suffix).

Rome's core counts range from eight cores and 16 threads up to an x86-industry-leading 64 cores and 128 threads. We typically expect turbo frequencies to decline as core counts and TDPs rise, just as Rome's base clocks do, but AMD bucks that trend. In fact, its highest core-count models come with the highest boost frequencies.

Base clock speeds range from 2.0 GHz to 3.2 GHz, while boost clocks range from 3.0 GHz to 3.4 GHz, a notable across-the-board improvement in peak frequencies over the Naples predecessors. That's impressive given that some models come with twice the number of cores, and AMD says the heightened base frequencies should offset some of Intel's per-core performance advantages.

AMD's power-aware boost algorithms also enable high multi-core frequencies, with the EPYC 7742 able to sustain a beastly 3.2 GHz when all cores are loaded. Meanwhile, Intel's biggest general-purpose Cascade Lake Xeon weighs in at 28 cores and 56 threads (no, we aren't counting the exotic Cascade Lake-AP as a viable general-purpose competitor), and that won't change until sometime in the first half of 2020 when Intel fields its new 56-core Cooper Lake models.

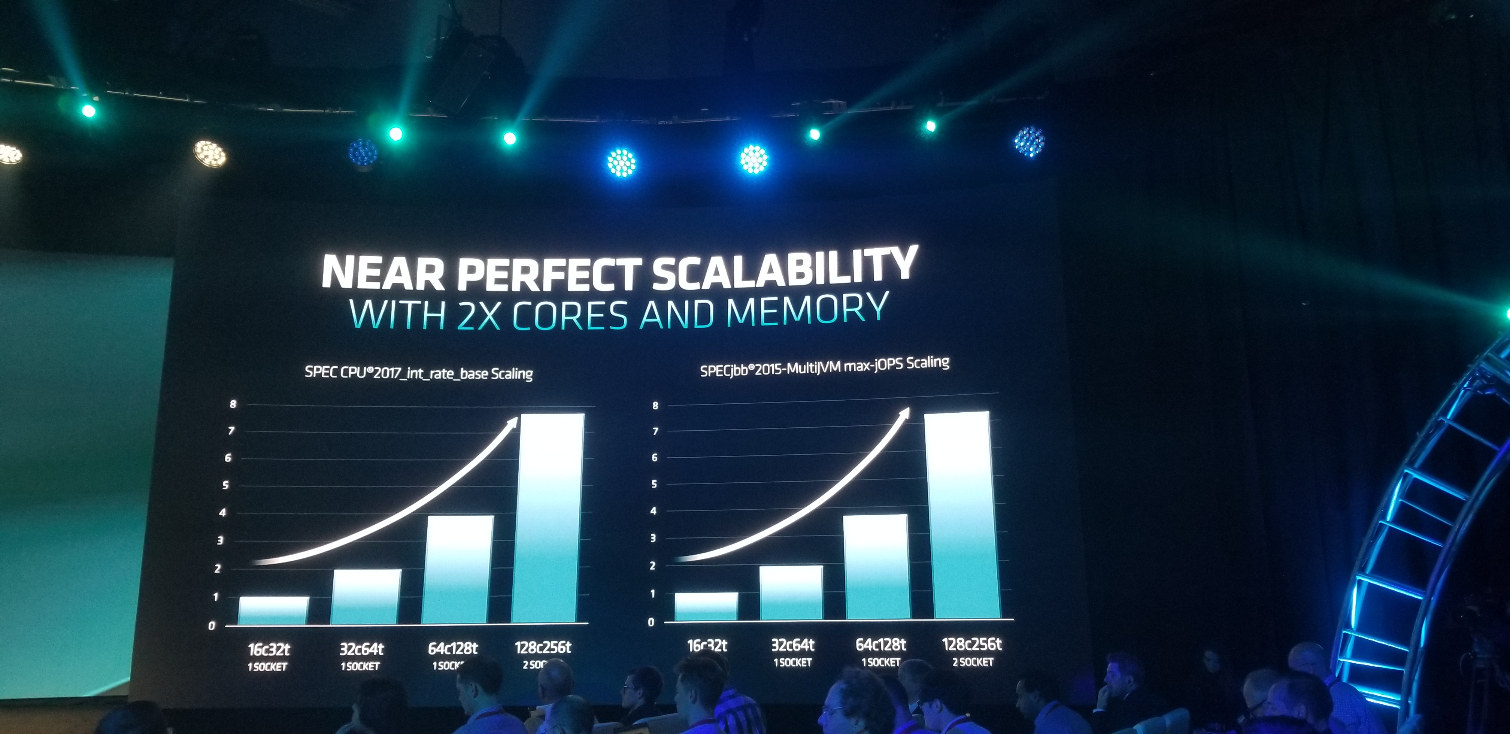

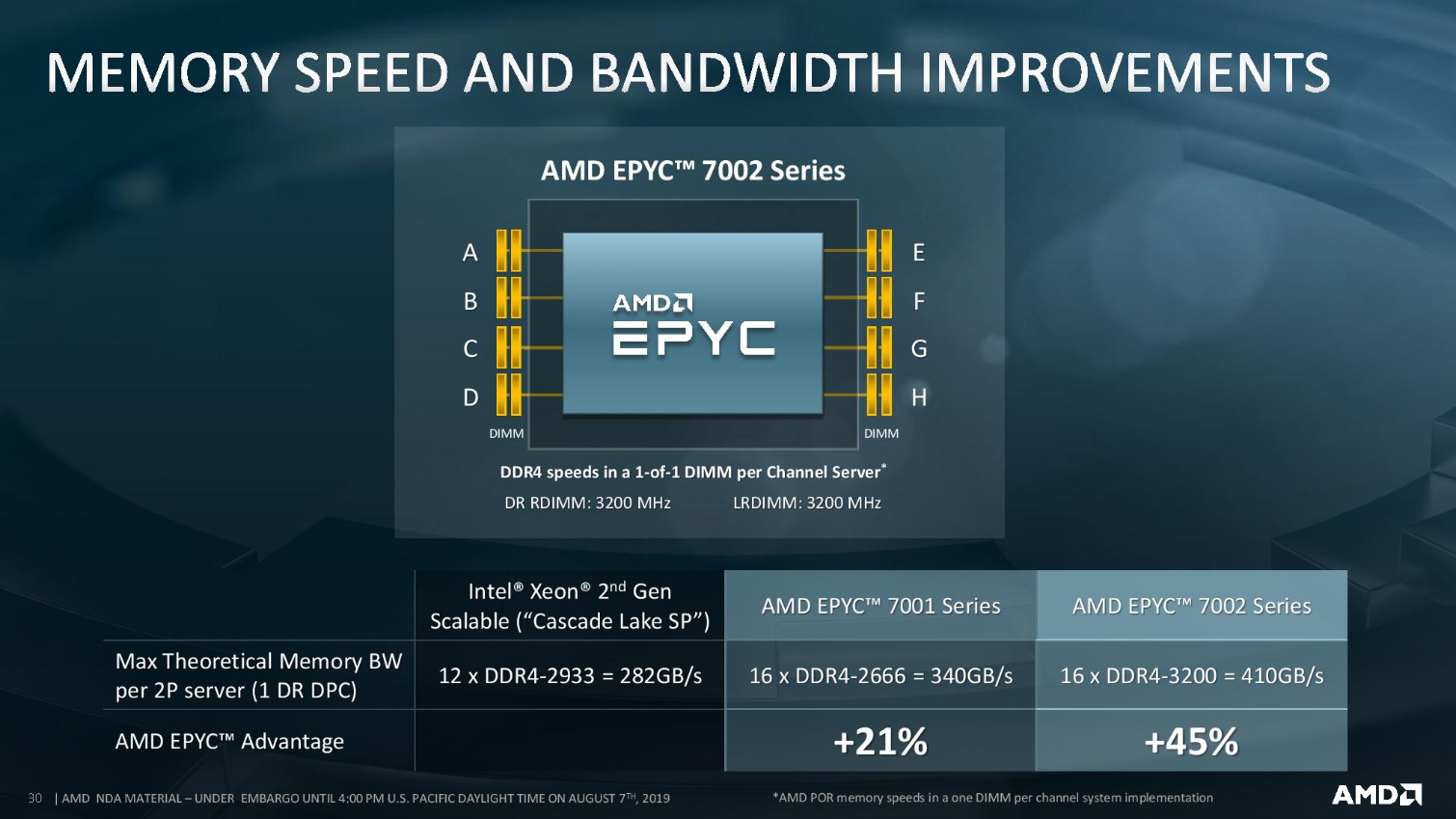

All of the Rome processors support 2TB of memory apiece, up to 4TB per server, spread across eight channels of DDR4-3200, a notable improvement over Xeon's six channels of DDR4-2933. Spreading eight memory channels across as many as 64 cores has raised concerns about memory throughput per core, but AMD claims performance scales well with increased core counts, even out to two sockets. Intel is expected to jump to eight channels of DDR4, albeit at unspecified speeds, when 14nm Cooper Lake chips debut next year.

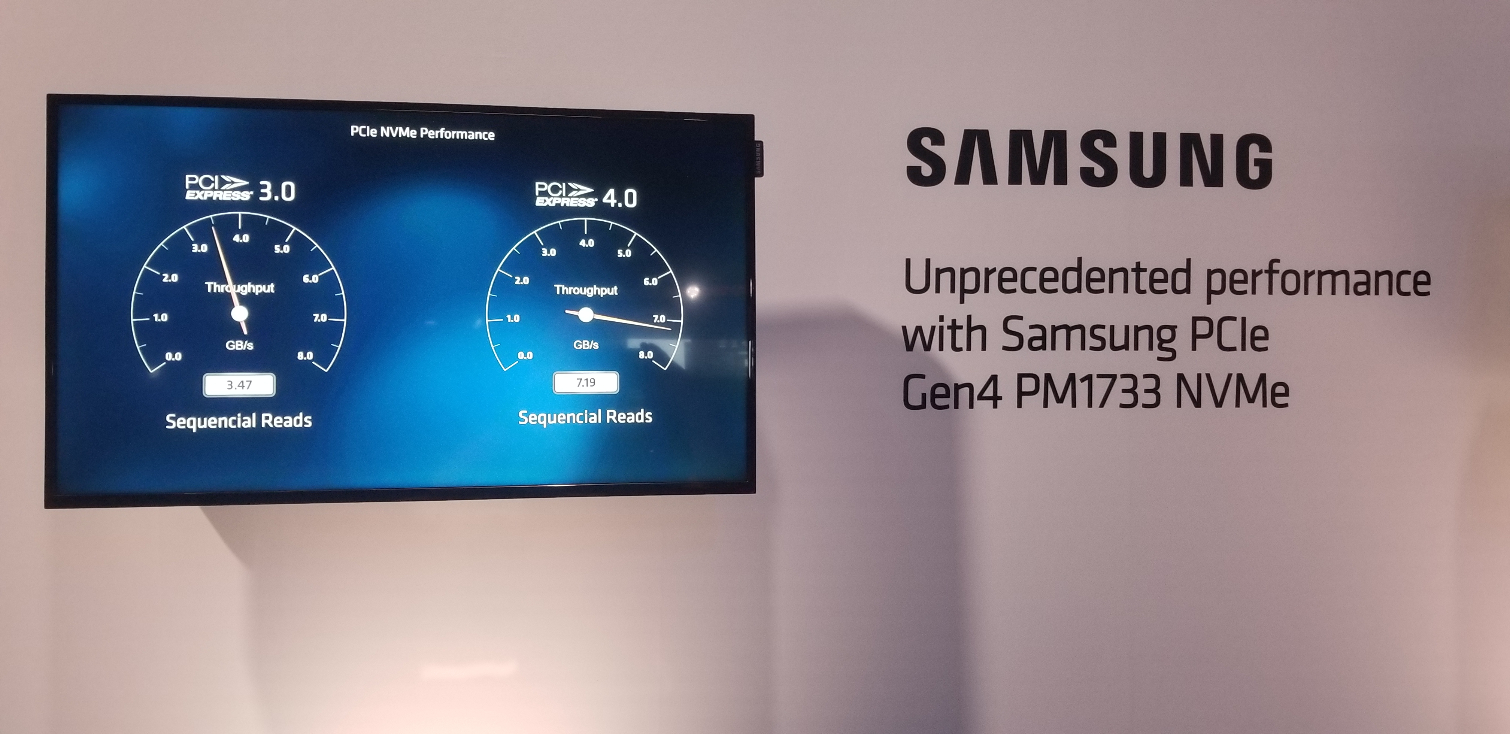

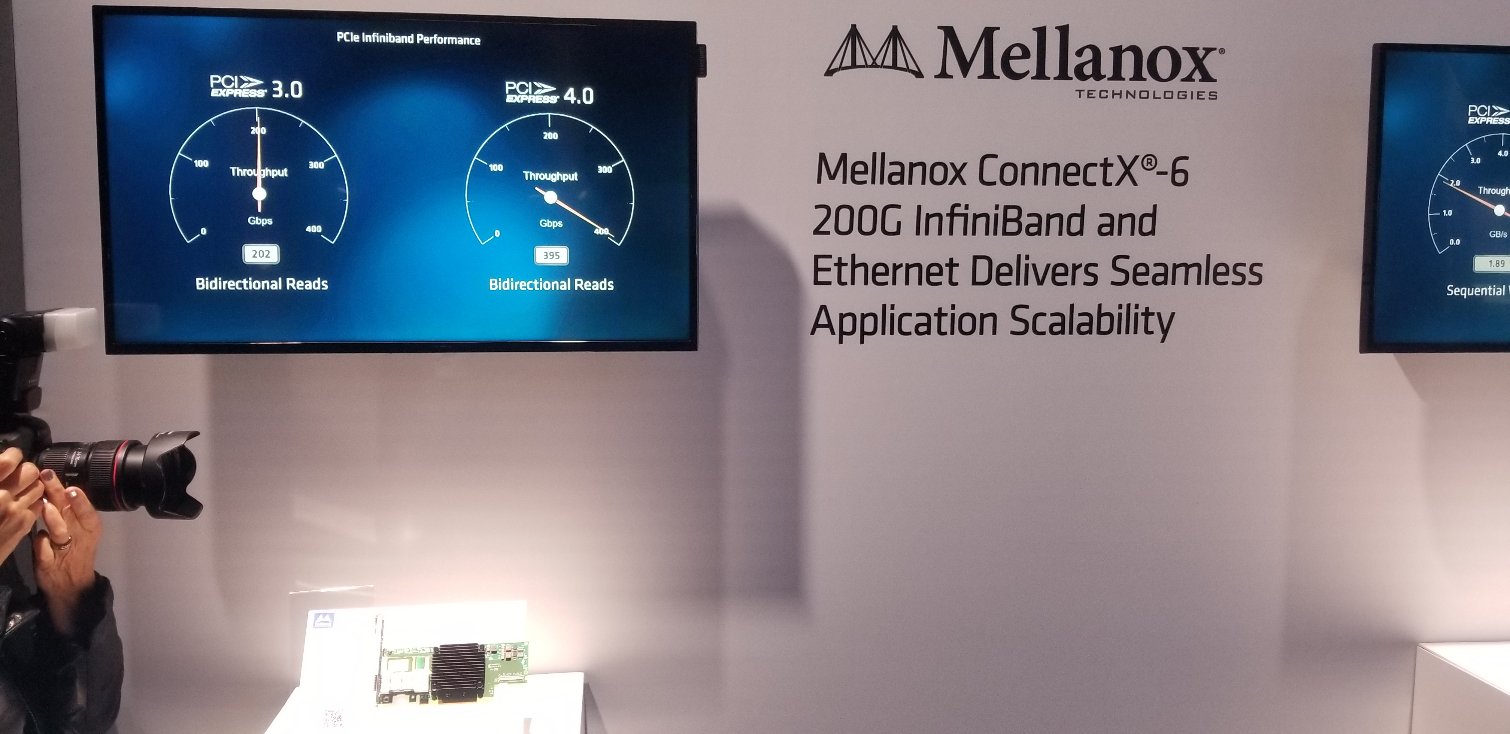

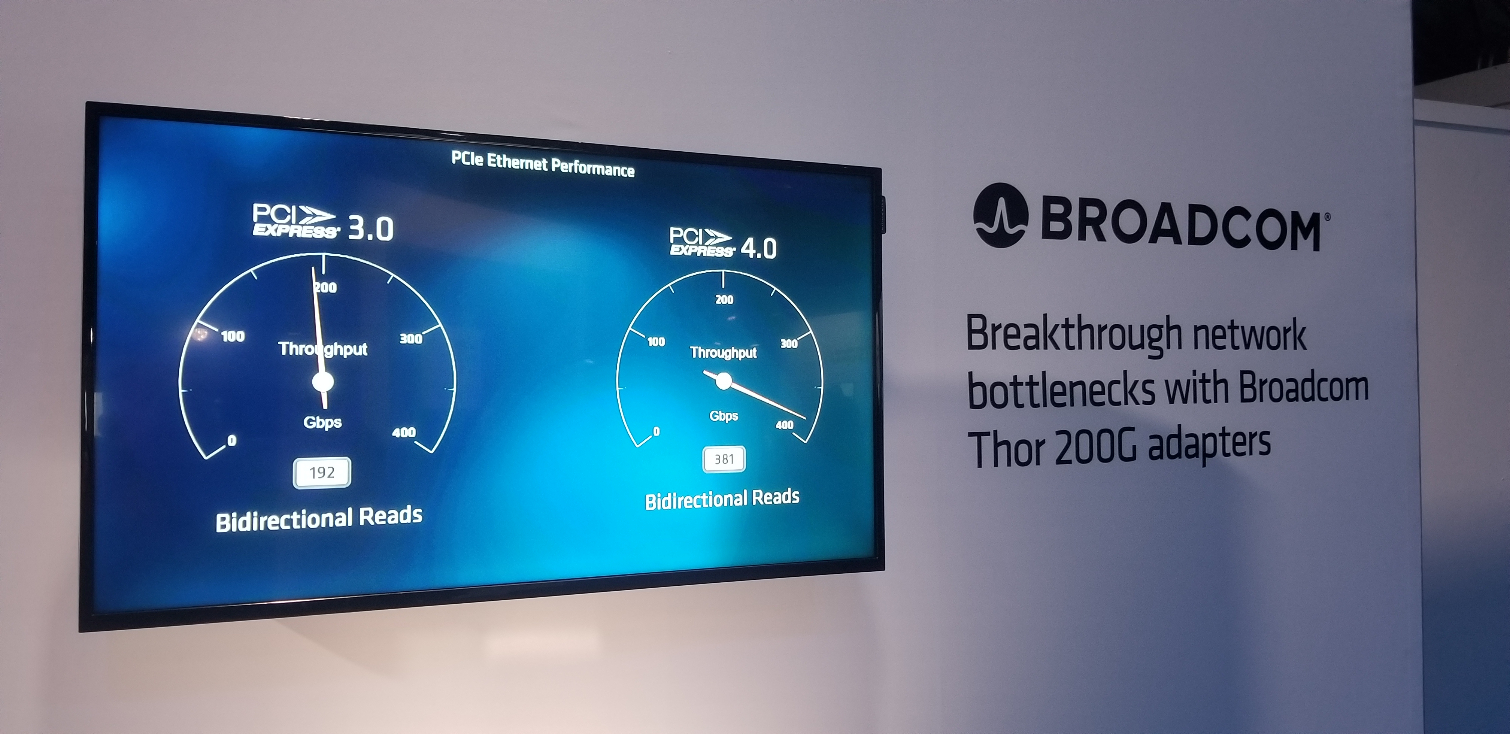

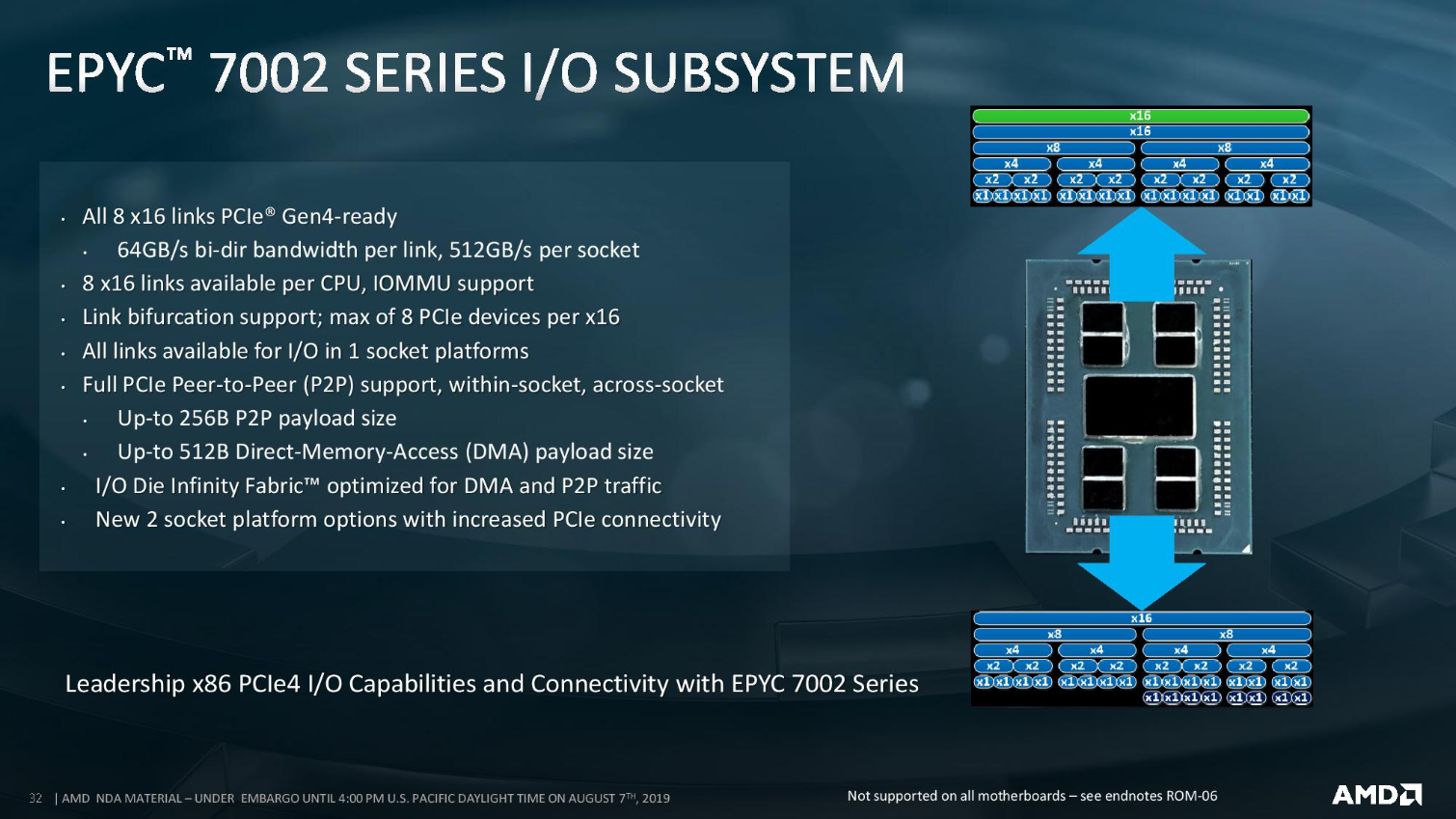

Every Rome model, including the single-socket variants, offers a base level of 128 lanes of PCIe 4.0. Single-socket servers expose 128 lanes to the user, and dual-socket servers expose 128 lanes by default or up to 162 lanes on specialized platforms. PCIe 4.0 offers twice the throughput of PCIe 3.0, a feature that Intel simply cannot match with its current-gen products. Intel is rumored to add PCIe 4.0 support with its Ice Lake processors, but those should arrive in Q2 2020, leaving a gap in Intel's stack for high-speed I/O devices like new PCIe 4.0-equipped GPUs, networking, and storage devices.
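To put the doubled throughput in numbers, here's a back-of-the-envelope sketch. It assumes the published signaling rates (8 GT/s per lane for PCIe 3.0, 16 GT/s for PCIe 4.0) and 128b/130b encoding for both generations, and it ignores packet and protocol overhead, so real devices will see less. The function name `pcie_gbytes_per_s` is purely illustrative.

```python
GT_PER_S = {3: 8.0, 4: 16.0}  # per-lane signaling rate in GT/s
ENCODING = 128 / 130          # 128b/130b line encoding (PCIe 3.0 and 4.0)


def pcie_gbytes_per_s(gen: int, lanes: int, bidirectional: bool = False) -> float:
    """Approximate link bandwidth in GB/s, ignoring protocol overhead."""
    per_lane = GT_PER_S[gen] * ENCODING / 8  # bits per second -> bytes per second
    total = per_lane * lanes
    return total * 2 if bidirectional else total


x16_gen3 = pcie_gbytes_per_s(3, 16)
x16_gen4 = pcie_gbytes_per_s(4, 16)
print(f"x16 PCIe 3.0: {x16_gen3:.2f} GB/s, x16 PCIe 4.0: {x16_gen4:.2f} GB/s")

# 128 Gen4 lanes in both directions: roughly 504 GB/s
all_lanes = pcie_gbytes_per_s(4, 128, bidirectional=True)
print(f"128 lanes, both directions: {all_lanes:.0f} GB/s")
```

The result lands a little under AMD's 512 GB/s peak figure, which matches the raw signaling rate before encoding overhead (128 lanes x 16 Gb/s x 2 directions / 8 bits = 512 GB/s).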

Rome's L3 cache varies but tops out at a beastly 256MB for the 64-core models. AMD also offers 48-core models with either 192MB or 256MB of L3 cache and 32-core models with either 64MB or 128MB of L3, indicating the company has higher-performance models tailored for certain workloads. The beefiest Rome models offer half a gigabyte (512MB) of L3 cache in a two-socket server.

AMD splits its Rome lineup into five distinct TDP ranges that extend from 120W to 225W. Each SKU's TDP can be adjusted within its range, letting users extract more performance from each model, up to a configurable TDP (cTDP) maximum of 240W. The higher TDPs often require custom-built platforms, so not all previous-gen servers can support 240W. The new peak TDPs extend beyond those of the previous-gen models, but that's expected because Rome comes with up to twice the number of cores at its disposal.

AMD EPYC Rome Pricing

AMD has not released official pricing for the EPYC Rome lineup, but our sources have provided the numbers below. AMD aims to offer performance leadership, more cores, more memory bandwidth and more I/O at every price point, which the company says equates to a better total cost of ownership play than Intel.

| Model | Cores / Threads | Base / Boost (GHz) | L3 Cache (MB) | TDP (W) | 1K Unit Price |
|---|---|---|---|---|---|
| 7742 | 64 / 128 | 2.25 / 3.4 | 256 | 225 | $6,950 |
| 7702 | 64 / 128 | 2.0 / 3.35 | 256 | 200 | $6,450 |
| 7642 | 48 / 96 | 2.3 / 3.2 | 256 | 225 | $4,775 |
| 7552 | 48 / 96 | 2.2 / 3.3 | 192 | 200 | $4,025 |
| 7542 | 32 / 64 | 2.9 / 3.4 | 128 | 225 | $3,400 |
| 7502 | 32 / 64 | 2.5 / 3.35 | 128 | 180 | $2,600 |
| 7452 | 32 / 64 | 2.35 / 3.35 | 128 | 155 | $2,025 |
| Intel Xeon 8280 | 28 / 56 | 2.7 / 4.0 | 38.5 | 205 | $10,009 |
| Intel Xeon 8276 | 28 / 56 | 2.2 / 4.0 | 38.5 | 165 | $8,719 |
| Intel Xeon 8270 | 26 / 52 | 2.7 / 4.0 | 35.75 | 205 | $7,405 |
| 7402 | 24 / 48 | 2.8 / 3.35 | 128 | 180 | $1,783 |
| Intel Xeon 8268 | 24 / 48 | 2.9 / 3.9 | 35.75 | 205 | $6,302 |
| 7352 | 24 / 48 | 2.3 / 3.2 | 128 | 155 | $1,350 |
| Intel Xeon 8256 | 24 / 48 | 3.8 / 3.9 | 16.5 | 105 | $7,007 |
| Intel Xeon 8260 | 24 / 48 | 2.4 / 3.9 | 35.7 | 165 | $4,702 |
| Intel Xeon 6252 | 24 / 48 | 2.1 / 3.7 | 35.75 | 150 | $3,665 |
| 7302 | 16 / 32 | 3.0 / 3.3 | 128 | 155 | $978 |
| 7282 | 16 / 32 | 2.8 / 3.2 | 64 | 120 | $650 |
| Intel Xeon 8253 | 16 / 32 | 2.2 / 3.0 | 35.7 | 165 | $3,115 |
| Intel Xeon 5218 | 16 / 32 | 2.3 / 3.9 | 22 | 125 | $1,273 |
| 7272 | 12 / 24 | 2.6 / 3.2 | 64 | 120 | $625 |
| Intel Xeon 4214 | 12 / 24 | 2.2 / 3.2 | 16.5 | 100 | $1,002 |
| Intel Xeon 6226 | 12 / 24 | 2.8 / 3.7 | 19.25 | 125 | $1,776 |
| 7262 | 8 / 16 | 3.2 / 3.4 | 128 | 155 | $575 |
| 7252 | 8 / 16 | 3.1 / 3.2 | 64 | 120 | $475 |
| Single-Socket SKUs | | | | | |
| 7702P | 64 / 128 | 2.0 / 3.35 | 256 | 200 | $4,425 |
| 7502P | 32 / 64 | 2.5 / 3.35 | 128 | 180 | $2,300 |
| 7402P | 24 / 48 | 2.8 / 3.35 | 128 | 180 | $1,250 |
| 7302P | 16 / 32 | 3.0 / 3.3 | 128 | 155 | $825 |
| 7232P | 8 / 16 | 2.8 / 3.2 | 32 | 120 | $450 |
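The sketch below turns a subset of the table into price-per-core figures, using the 1K-unit prices exactly as listed. It only divides price by core count, so it ignores clocks, cache, TDP, and platform costs, and the dictionaries are hand-copied from the table rather than pulled from any AMD or Intel source.

```python
# (cores, 1K-unit price in USD) for a subset of the models in the table above
ROME_2P = {
    "7742": (64, 6950), "7702": (64, 6450), "7642": (48, 4775), "7552": (48, 4025),
    "7542": (32, 3400), "7502": (32, 2600), "7452": (32, 2025), "7402": (24, 1783),
    "7352": (24, 1350), "7302": (16, 978), "7282": (16, 650), "7272": (12, 625),
    "7262": (8, 575), "7252": (8, 475),
}
ROME_1P = {
    "7702P": (64, 4425), "7502P": (32, 2300), "7402P": (24, 1250),
    "7302P": (16, 825), "7232P": (8, 450),
}
XEON = {
    "8280": (28, 10009), "8276": (28, 8719), "8270": (26, 7405), "8268": (24, 6302),
    "8260": (24, 4702), "6252": (24, 3665), "8253": (16, 3115), "5218": (16, 1273),
    "4214": (12, 1002), "6226": (12, 1776),
}


def price_per_core(skus):
    """Map each model to its 1K-unit price divided by its core count."""
    return {model: price / cores for model, (cores, price) in skus.items()}


for family, skus in (("Rome 2P", ROME_2P), ("Rome 1P", ROME_1P), ("Xeon", XEON)):
    ppc = price_per_core(skus)
    print(f"{family}: ${min(ppc.values()):,.0f} to ${max(ppc.values()):,.0f} per core")

print("Rome SKUs listed:", len(ROME_2P) + len(ROME_1P))
```

By that crude measure, the Xeon 8280 works out to roughly $357 per core versus roughly $109 for the EPYC 7742, and the count confirms the table's 19 Rome SKUs.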

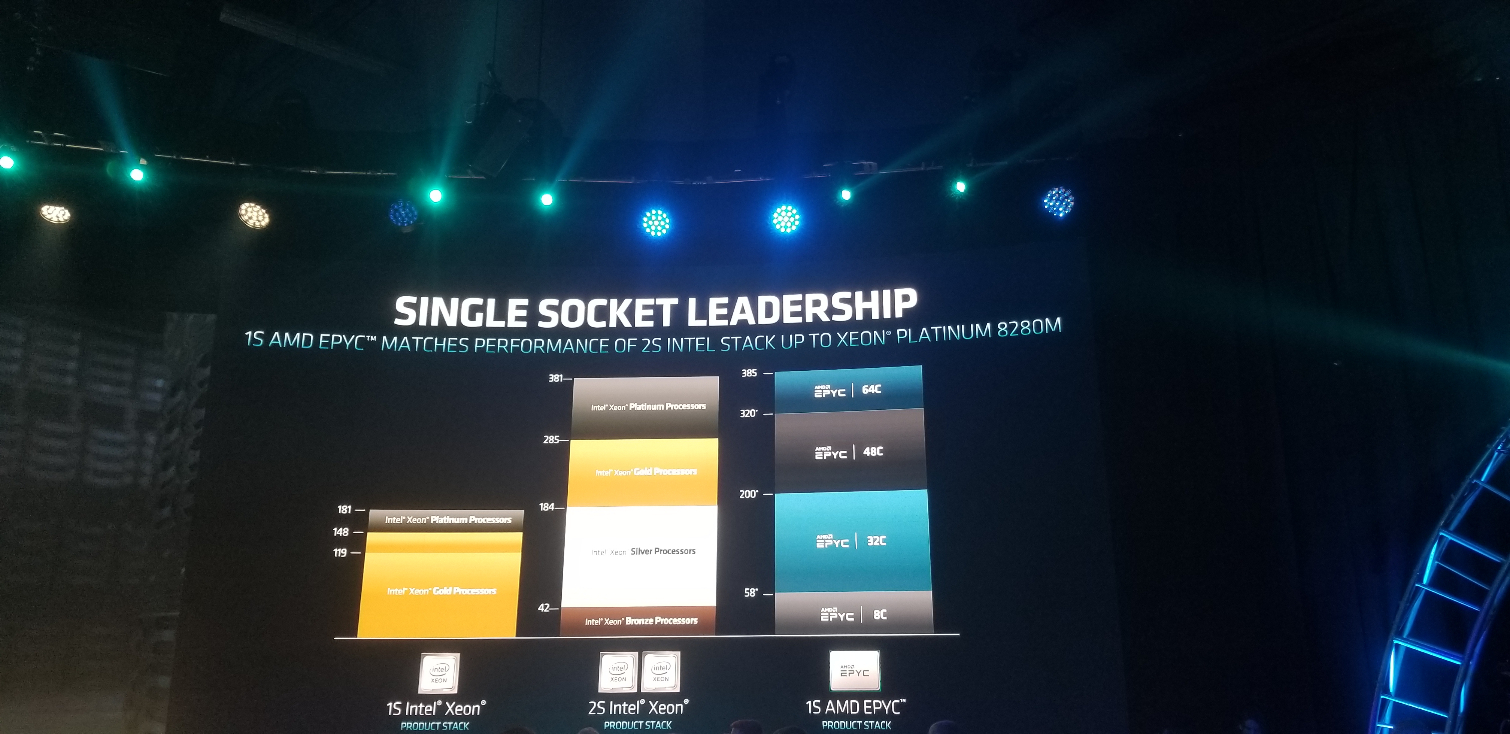

While this isn't a full accounting of Intel's competing Xeon Scalable product stack, and Intel doesn't have products that compete over the 28-core watermark, the basic story rings true: AMD offers more cores and threads in every segment and up to three times more L3 cache at drastically lower pricing. In fact, Intel's 28-core models are more expensive than AMD's beefiest 64-core 128-thread behemoths.

AMD has a lower TDP range than Intel's high core-count models, but both companies offer similar TDP ranges for the lesser-equipped chips. Notably, although AMD has a power advantage with its 7nm chiplets, the large 12nm I/O die adds some power consumption of its own. As always, TDP isn't a measure of power consumption, so we'll have to wait for third-party analysis to gauge the relative power efficiency of the two stacks.

AMD's SoCs also don't require a chipset on the host motherboard, largely due to the expansive serving of PCIe 4.0 lanes native to the processor. That reduces both cost and platform power consumption.

AMD EPYC Rome Performance

The Zen 2 microarchitecture, fabbed on the 7nm process, adds new features that significantly improve performance over the original Zen, and the company is already moving forward with its Zen 3 microarchitecture on the 7nm+ process by 2021.

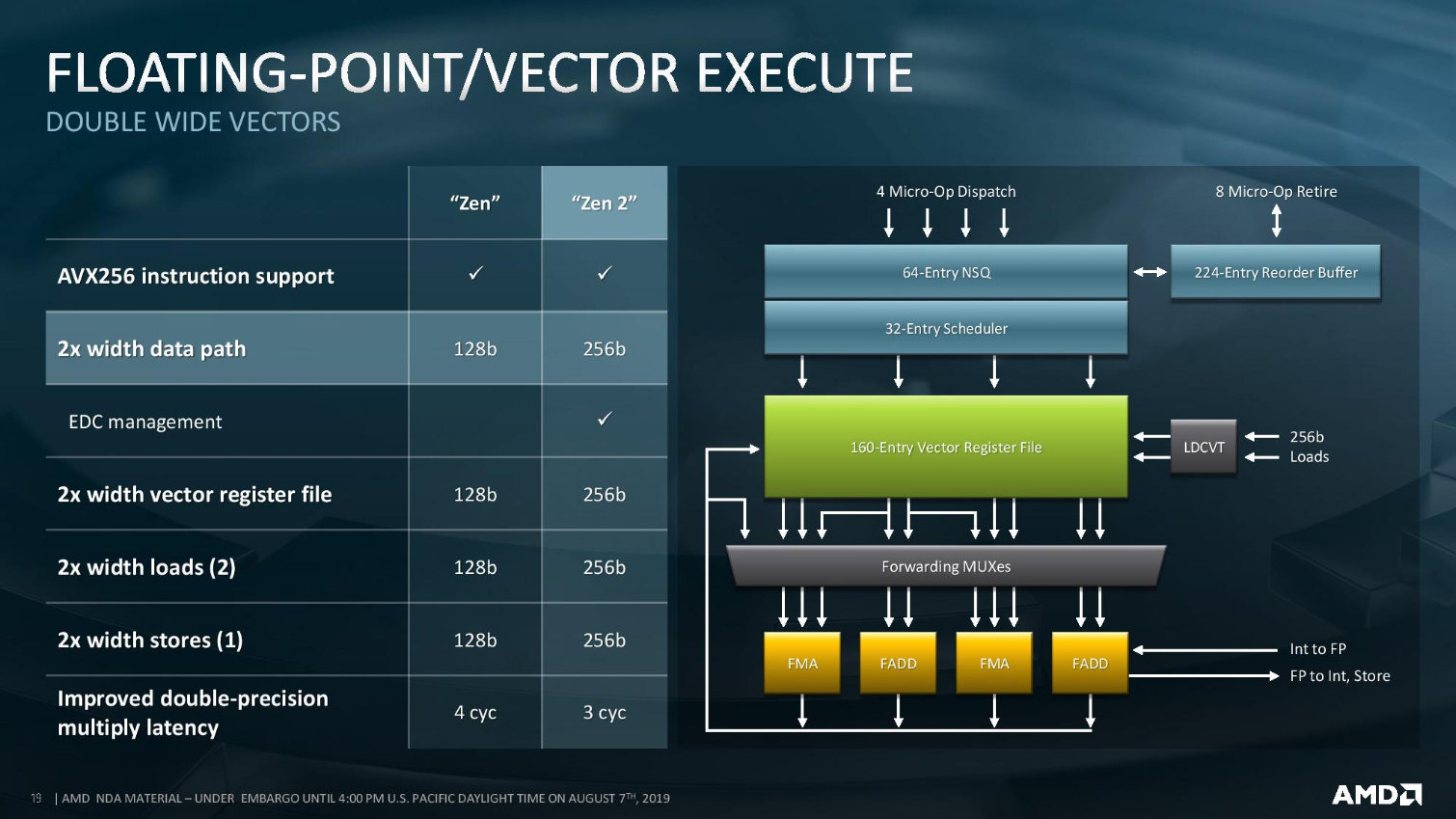

AMD claims it has doubled per-socket performance relative to the Naples chips, and quadrupled peak theoretical FLOPS by doubling both the core count and the per-core 256-bit AVX throughput. Rome offers up to 204GB/s of memory throughput per socket and supports up to 4TB of RAM per socket. Its PCIe 4.0 lanes provide a peak I/O throughput of 512 GB/s. Rome is the first x86 server chip to support PCIe 4.0, though IBM's POWER architecture already supports the faster standard.
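The "quadrupled" figure falls out of simple arithmetic. This sketch assumes the commonly cited double-precision rates of 8 FLOPs per cycle for a first-gen Zen core (two 128-bit FMA pipes) and 16 for a Zen 2 core (two 256-bit FMA pipes), and it holds the clock fixed so that only core count and vector width change.

```python
def peak_gflops(cores: int, ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak = cores x clock (GHz) x FLOPs per core per cycle."""
    return cores * ghz * flops_per_cycle


# Assumed double-precision FLOPs per cycle per core:
# Zen 1: 2 FMA pipes x 2 doubles (128-bit) x 2 ops (multiply + add) = 8
# Zen 2: 2 FMA pipes x 4 doubles (256-bit) x 2 ops (multiply + add) = 16
ZEN1_DP, ZEN2_DP = 8, 16

clock = 2.25  # GHz, identical for both so only cores and vector width differ
naples = peak_gflops(32, clock, ZEN1_DP)
rome = peak_gflops(64, clock, ZEN2_DP)
print(f"32-core Zen: {naples:.0f} GFLOPS, 64-core Zen 2: {rome:.0f} GFLOPS ({rome / naples:.1f}x)")
```

At the 7742's 2.25 GHz base clock, that works out to about 2.3 TFLOPS of peak double-precision throughput per socket; actual clocks under sustained heavy vector loads will differ.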

Unlike Intel's Xeon stack, which has nearly 100 different SKUs, AMD has optimized its stack into four swim lanes with 8, 12/16, 24/32 and 48/64-core segments, for a total of 19 SKUs with very limited segmentation. Unlike Intel, AMD doesn't curtail features, like PCIe lanes or memory speeds/channels, to differentiate its stack.

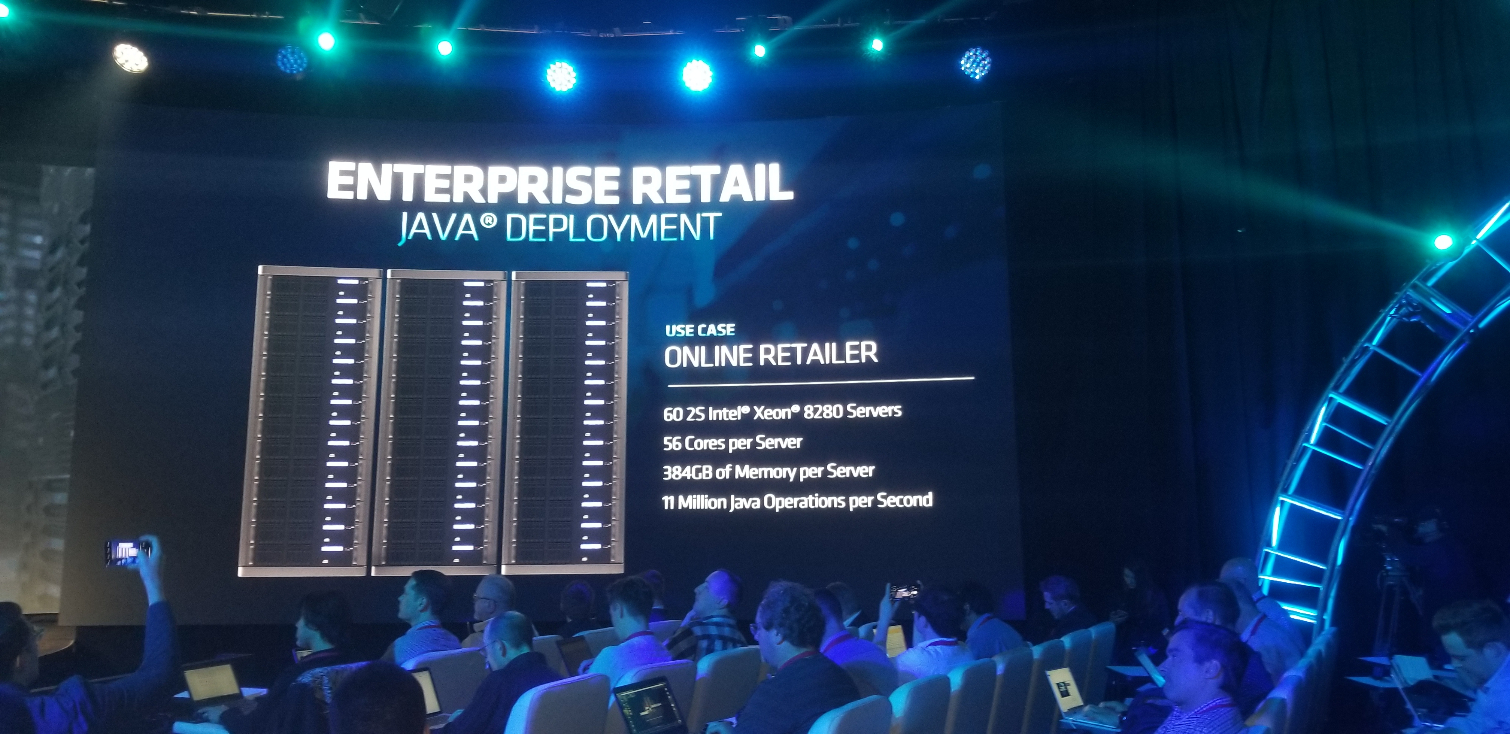

AMD claims that a single-socket server armed with one of its 64-core models can outperform Intel's dual-socket servers, up to and including those equipped with the Xeon 8280M.

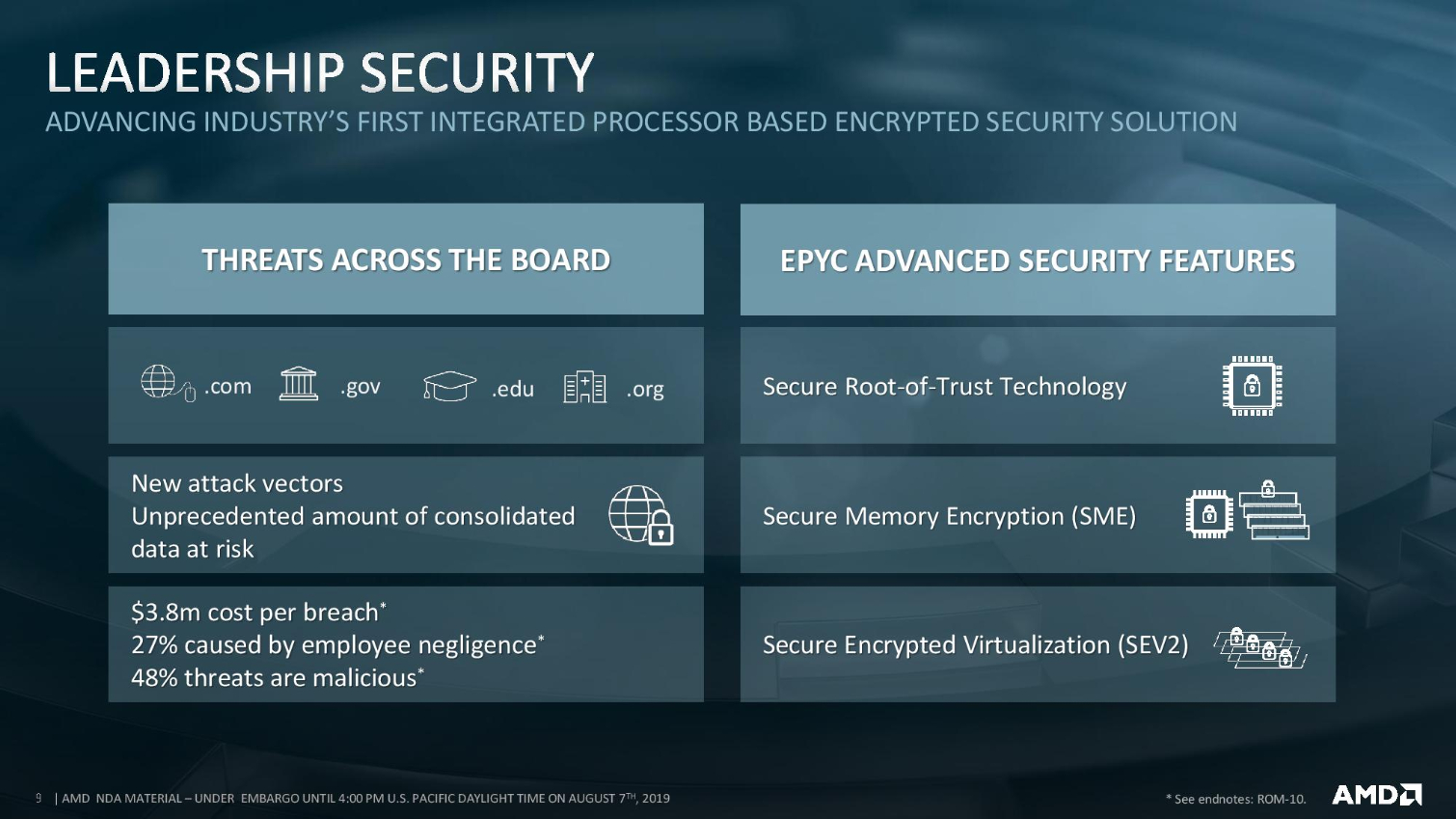

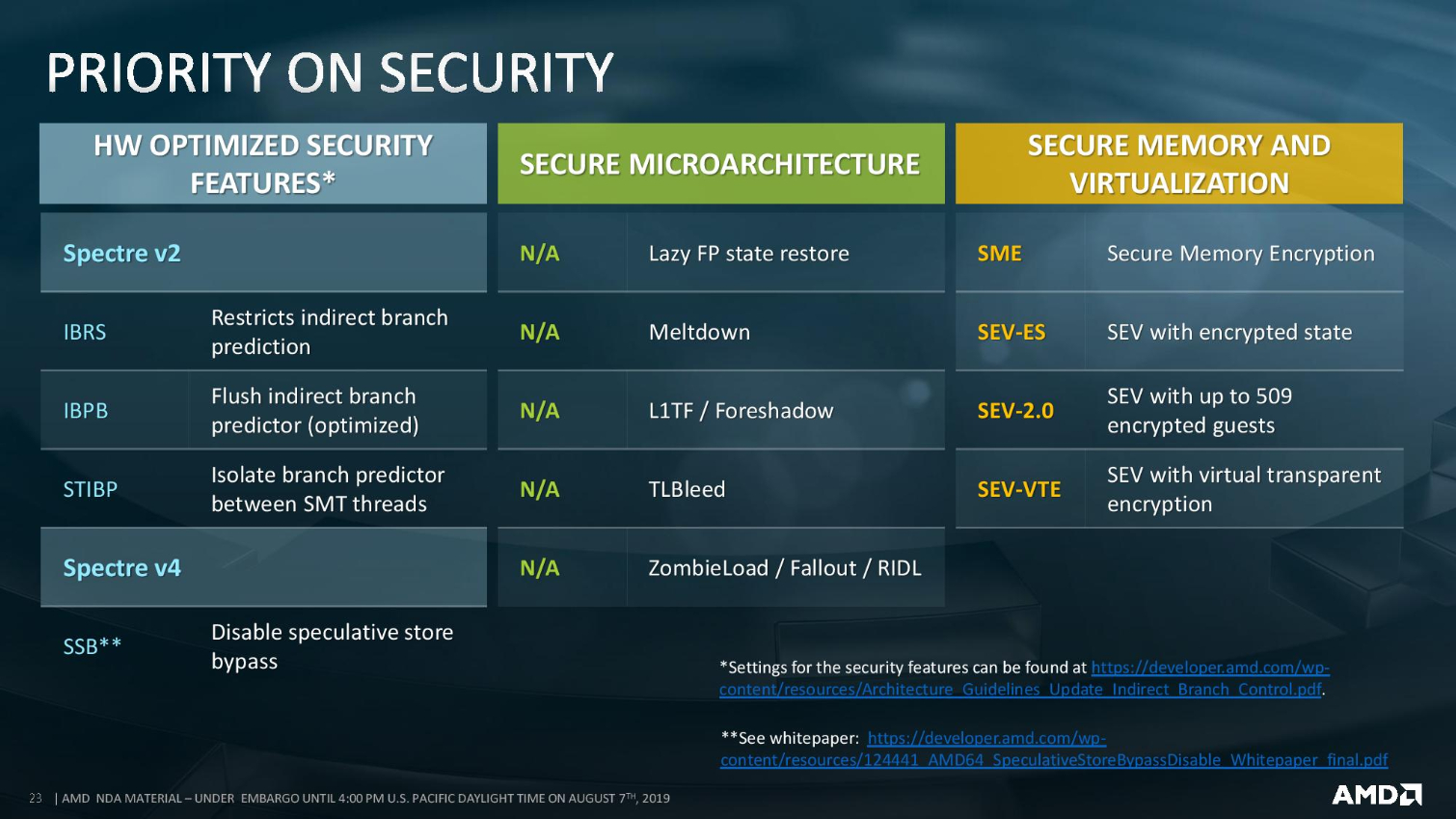

AMD EPYC Rome Security

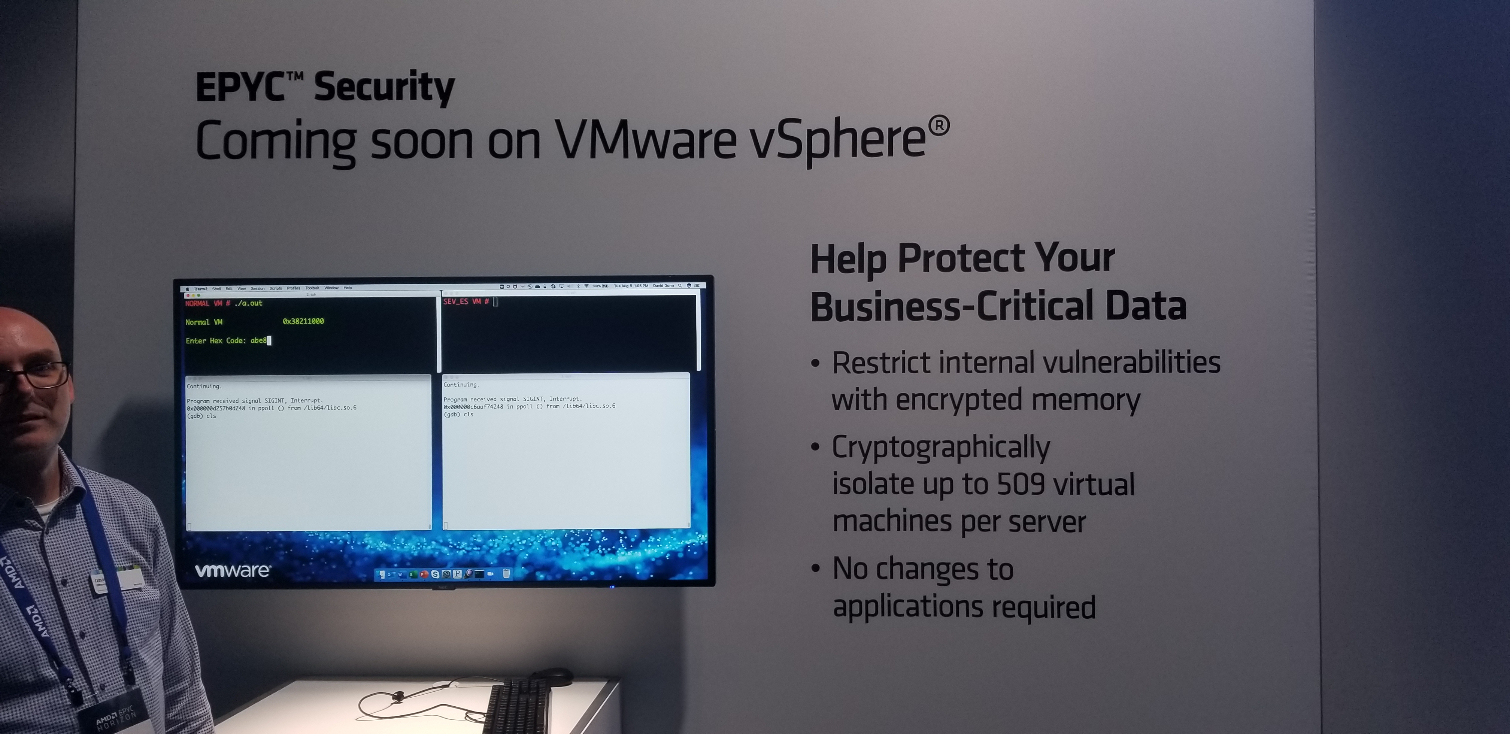

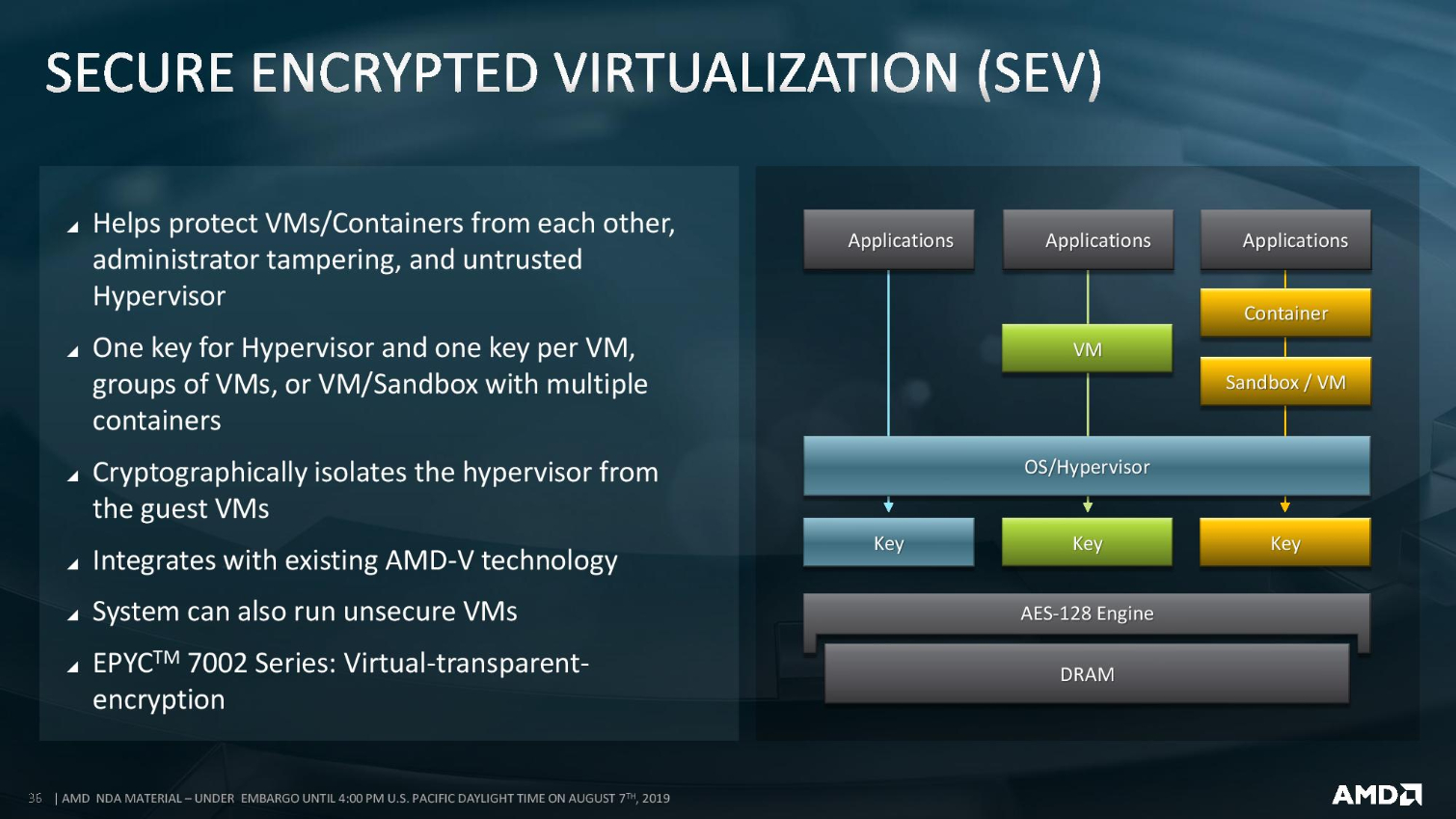

AMD has built Spectre v2 mitigations into the silicon, which reduces their performance impact. AMD also supports the IBRS and IBPB mitigations and has addressed Spectre v4. Rome is also vulnerable to fewer of the speculative execution flaws that have emerged over the last year than Intel's chips are. Rome also supports secure memory encryption features.

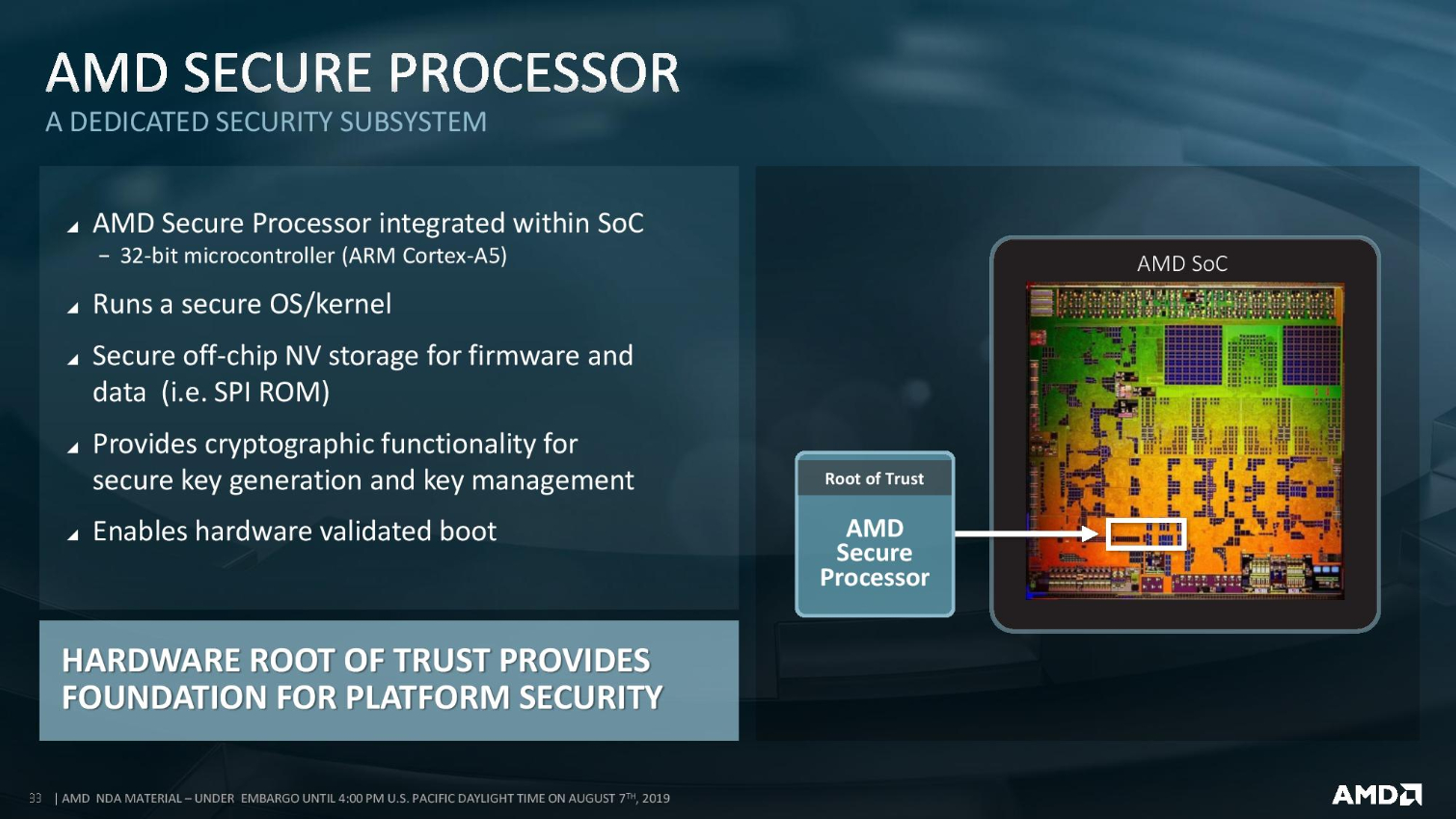

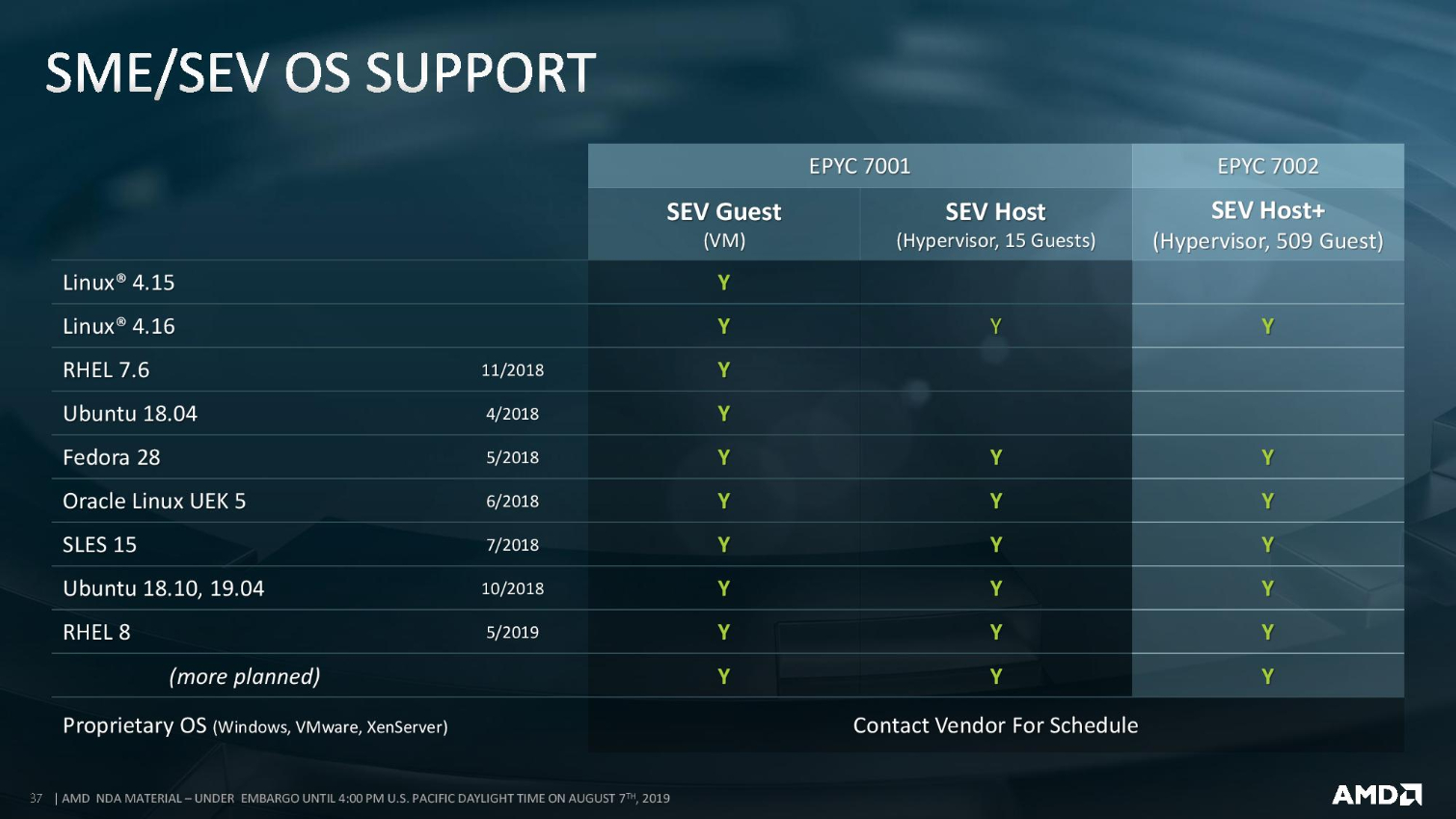

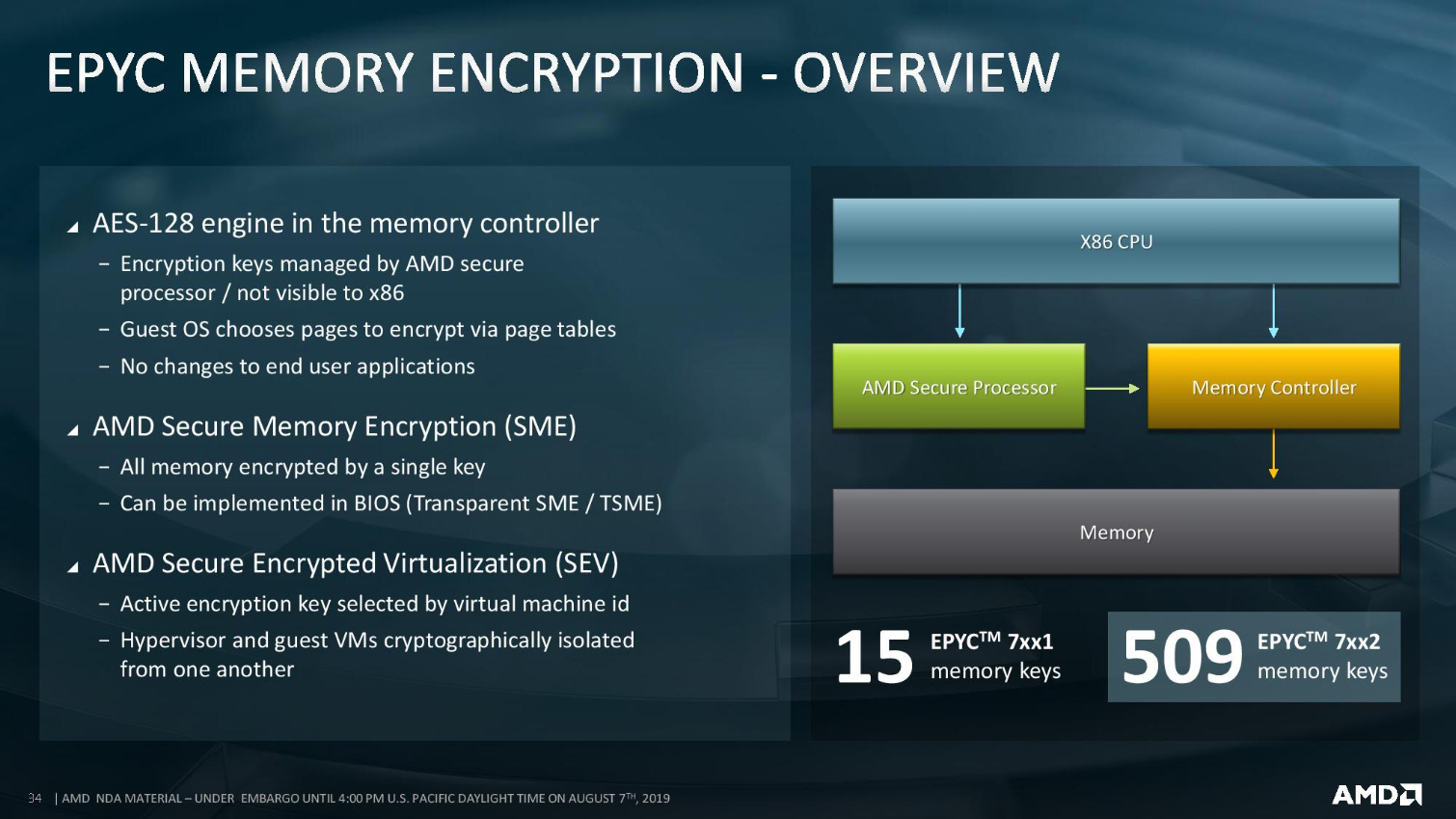

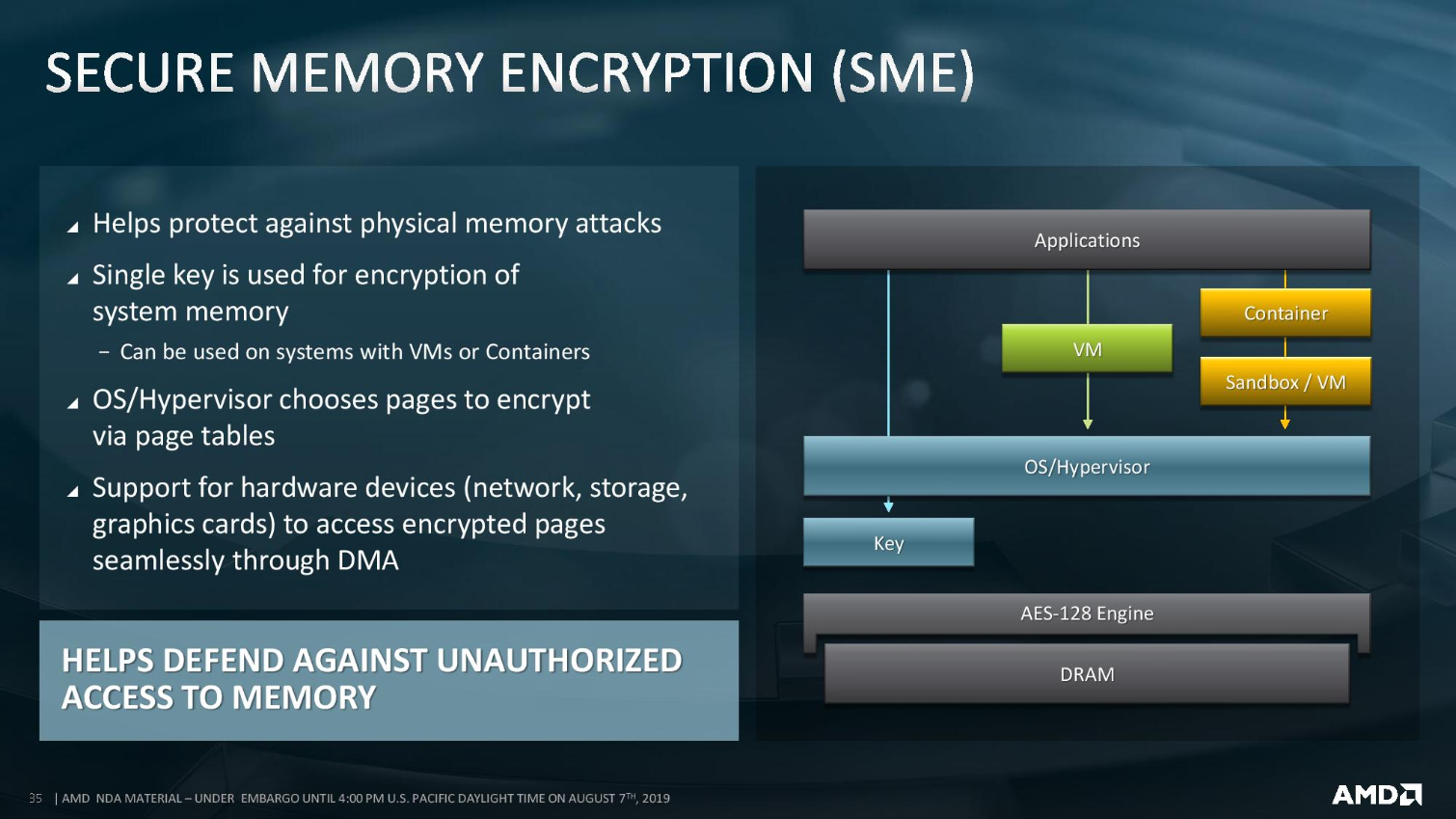

AMD's root of trust comes from a dedicated secure processor that runs its own code on a separate, isolated ISA. The memory controllers also contain an AES-128 engine whose keys are managed by the secure processor, so the keys are walled off from the x86 domain. The chip supports up to 509 encrypted hardware guests (keys). Secure Memory Encryption (SME) protects against physical memory attacks and can be enabled at either the hardware or hypervisor level. Secure Encrypted Virtualization (SEV) is built on top of SME and gives each guest its own key, managed only by the secure processor, which isolates the guests from each other and from the hypervisor.
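The key-isolation idea can be illustrated with a toy model. This is purely conceptual: `ToySecureProcessor`, its methods, and its slot numbering are hypothetical and do not correspond to AMD's SEV firmware interface, and no actual encryption happens here. It shows only the two properties described above: each guest gets its own key that never leaves the secure processor, and the number of keys is capped at 509.

```python
import secrets


class ToySecureProcessor:
    """Toy model of SEV-style per-guest key isolation (NOT AMD's firmware API).

    Keys never leave this object; callers (standing in for the hypervisor)
    only receive an opaque slot number, loosely analogous to an ASID.
    """

    MAX_GUEST_KEYS = 509  # Rome's encrypted-guest limit, per the text above

    def __init__(self) -> None:
        self._keys = {}  # slot number -> 128-bit key, never returned to callers

    def launch_guest(self) -> int:
        """Generate a fresh key for a new guest and return its slot number."""
        free = [s for s in range(1, self.MAX_GUEST_KEYS + 1) if s not in self._keys]
        if not free:
            raise RuntimeError("no free encryption key slots")
        slot = free[0]
        self._keys[slot] = secrets.token_bytes(16)  # 16 bytes = AES-128 key size
        return slot

    def teardown_guest(self, slot: int) -> None:
        """Destroy a guest's key so its slot can be reused."""
        del self._keys[slot]

    def active_guests(self) -> int:
        return len(self._keys)


sp = ToySecureProcessor()
guests = [sp.launch_guest() for _ in range(509)]

rejected = False
try:
    sp.launch_guest()  # a 510th guest has no key slot left
except RuntimeError:
    rejected = True

sp.teardown_guest(guests[0])
reused = sp.launch_guest()  # the freed slot becomes available again
print(f"510th guest rejected: {rejected}, reused slot: {reused}")
```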

AMD EPYC Rome Zen 2 Microarchitecture

EPYC Rome uses the same underlying microarchitecture as the Ryzen 3000 series processors, so the gen-on-gen improvements, like a 15% uplift in instructions per cycle (IPC) throughput, are identical.

The 7nm process serves as the cornerstone, offering twice the density and either up to 1.25X higher frequency at any given power point or half the power consumption at the same level of performance as the previous-gen process.

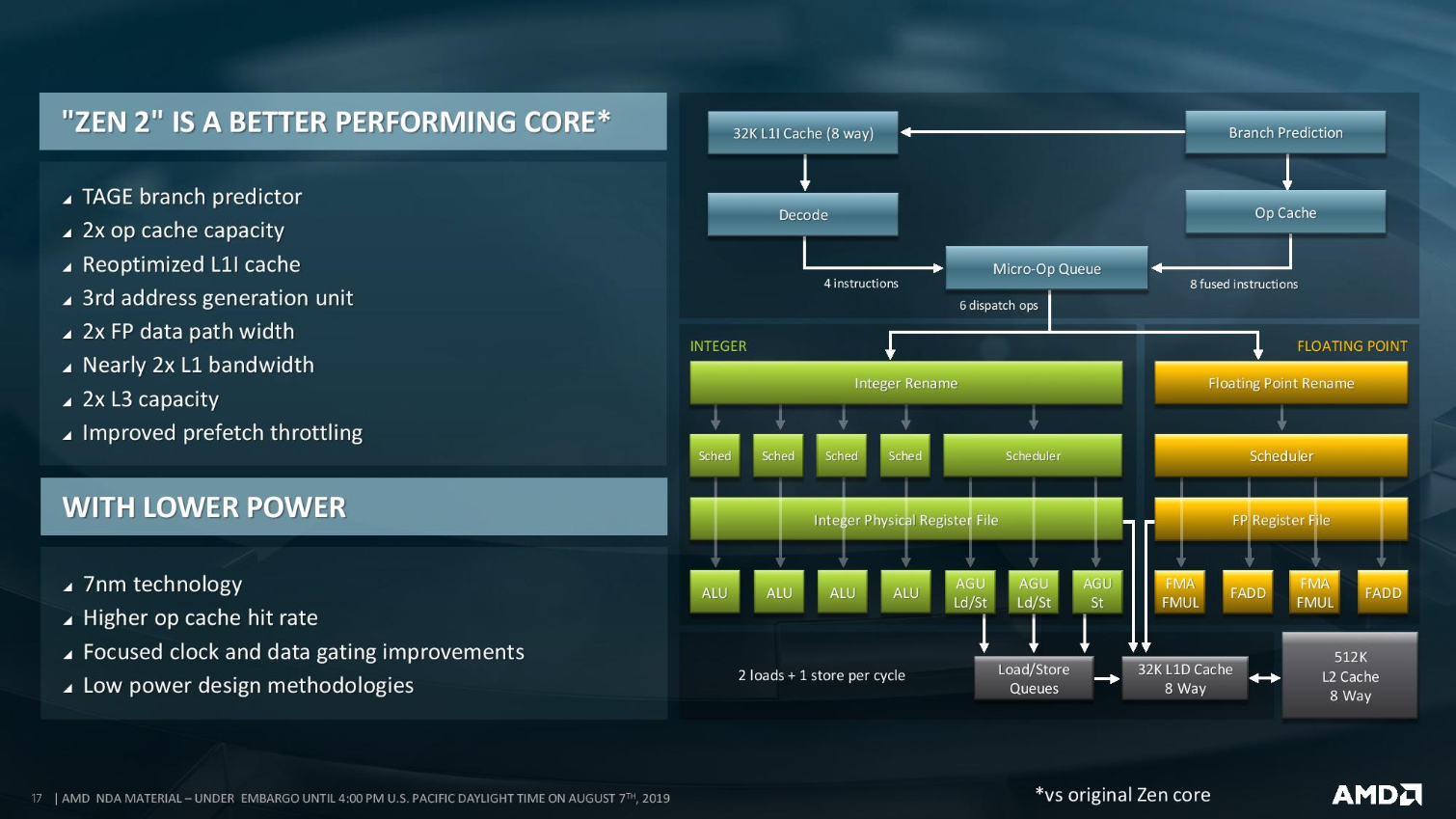

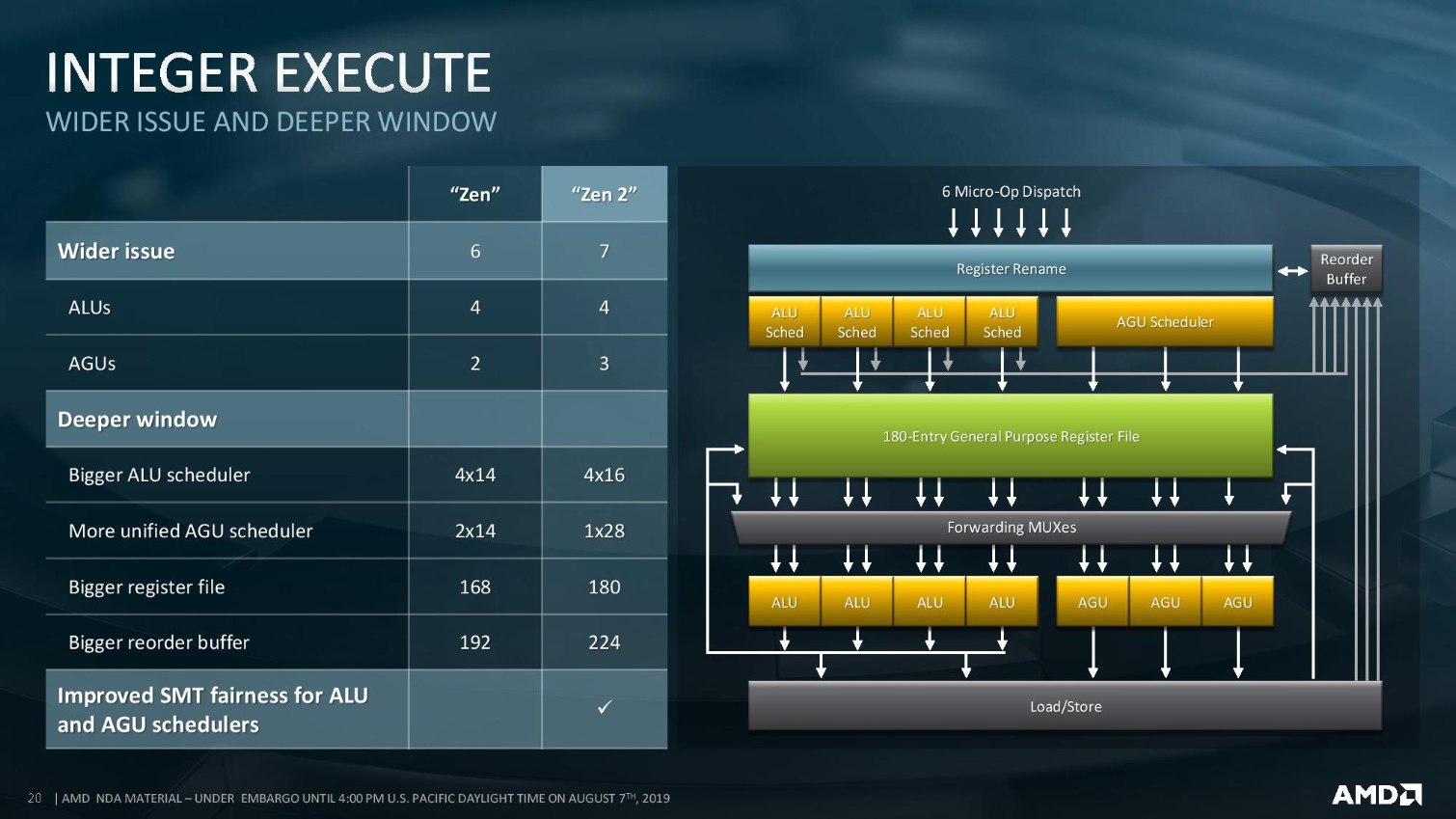

The Zen 2 microarchitecture is a well-traveled topic, but the high-level improvements include a new TAGE branch predictor that serves as a second stage to complement the perceptron-based prediction unit. The company also doubled L3 cache capacity and moved the L1 instruction cache to 8-way associativity, allowing it to shrink the L1 instruction cache from 64KB to 32KB and double the op cache.
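For readers unfamiliar with perceptron branch prediction, here's a textbook version of the technique (after Jiménez and Lin). AMD hasn't published Zen 2's predictor internals, so the table size, history length, and training threshold below are illustrative choices, not AMD's. Each branch address selects a small weight vector; the prediction is the sign of the dot product between those weights and the recent global branch history, and the weights are nudged after a misprediction or a low-confidence prediction.

```python
class PerceptronPredictor:
    """Textbook perceptron branch predictor; a sketch, not Zen 2's design."""

    def __init__(self, history_len: int = 16, table_size: int = 256):
        self.table_size = table_size
        # One weight vector per table entry; index 0 is the bias weight.
        # (Hardware would use small saturating integers; Python ints are unbounded.)
        self.weights = [[0] * (history_len + 1) for _ in range(table_size)]
        # Global branch history encoded as +1 (taken) / -1 (not taken).
        self.history = [1] * history_len
        # Training threshold from the original paper: floor(1.93 * h + 14).
        self.threshold = int(1.93 * history_len + 14)

    def _output(self, pc: int):
        w = self.weights[pc % self.table_size]
        y = w[0] + sum(wi * hi for wi, hi in zip(w[1:], self.history))
        return w, y

    def predict(self, pc: int) -> bool:
        return self._output(pc)[1] >= 0  # non-negative output means "taken"

    def update(self, pc: int, taken: bool) -> None:
        w, y = self._output(pc)
        t = 1 if taken else -1
        # Train on a misprediction, or when the output's magnitude is below the threshold.
        if (y >= 0) != taken or abs(y) <= self.threshold:
            w[0] += t
            for i, h in enumerate(self.history):
                w[i + 1] += t * h
        self.history = self.history[1:] + [t]


bp = PerceptronPredictor()
outcomes = [True, False] * 500  # one branch at a single address alternating T, N, T, N...
correct = 0
for i, taken in enumerate(outcomes):
    if i >= 100 and bp.predict(0x400) == taken:  # score only after a warm-up period
        correct += 1
    bp.update(0x400, taken)
accuracy = correct / (len(outcomes) - 100)
print(f"accuracy after warm-up: {accuracy:.1%}")
```

Real predictors also hash the branch address with history bits and keep weights in narrow saturating counters; TAGE-style predictors take a different approach, choosing among tables indexed with progressively longer history lengths.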

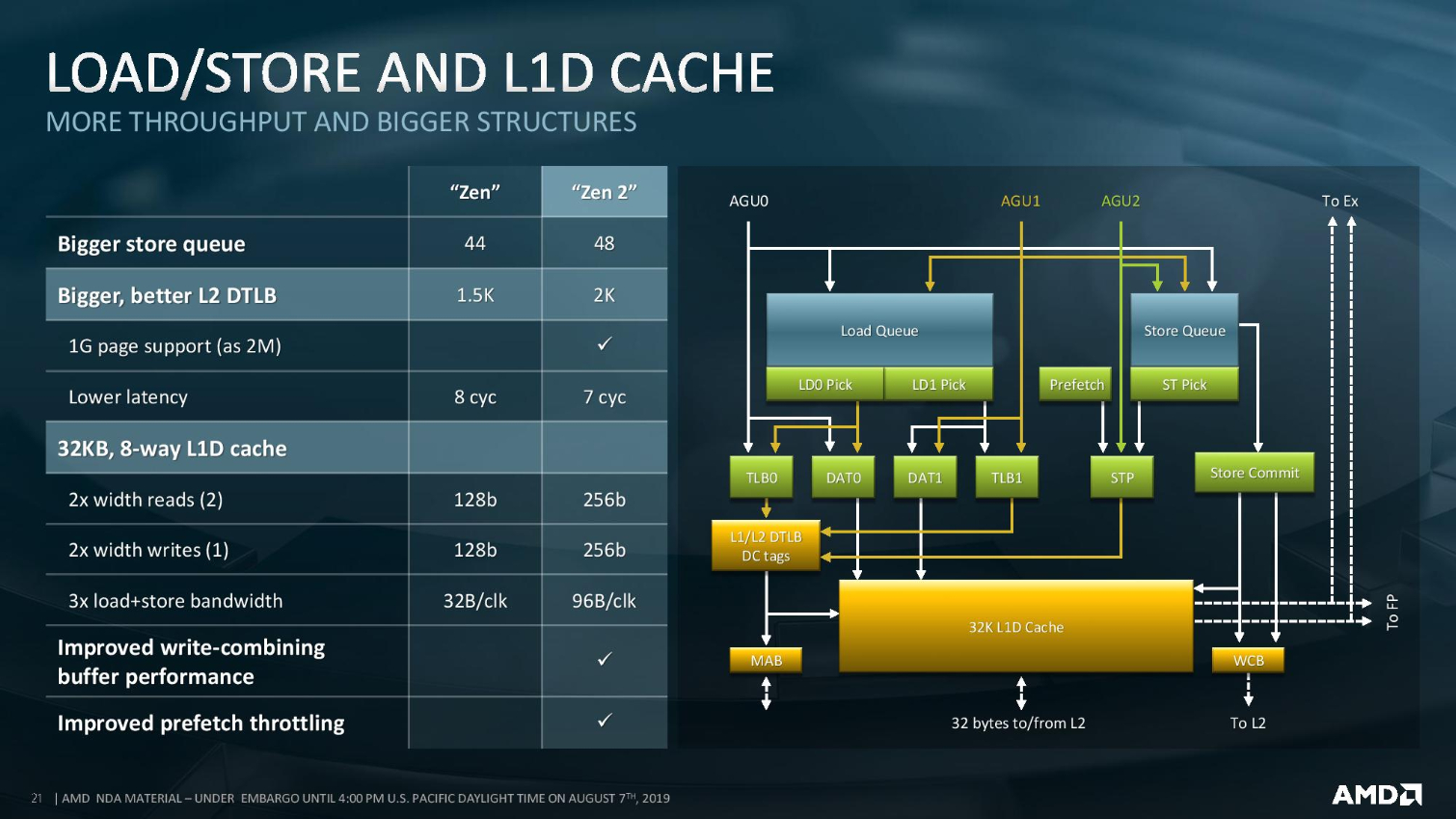

AMD has always supported 256-bit AVX, but previous Zen cores split each 256-bit instruction into two 128-bit operations. For Zen 2, AMD doubled the data path width and the width of the vector register files. Changes to the load/store unit include a bigger store queue and a larger L2 DTLB. AMD also widened the read and write paths to 256 bits and tripled the load+store bandwidth.

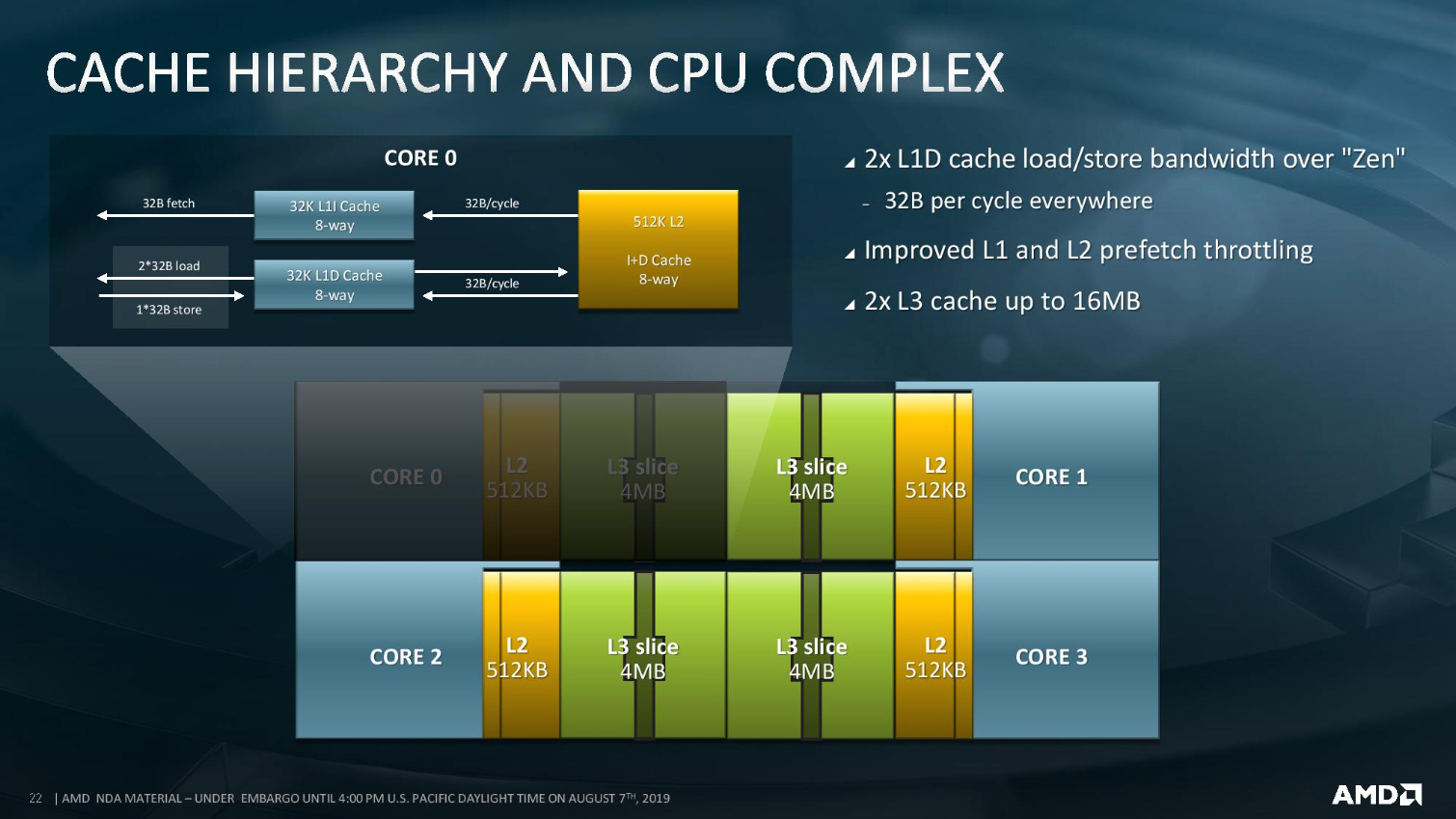

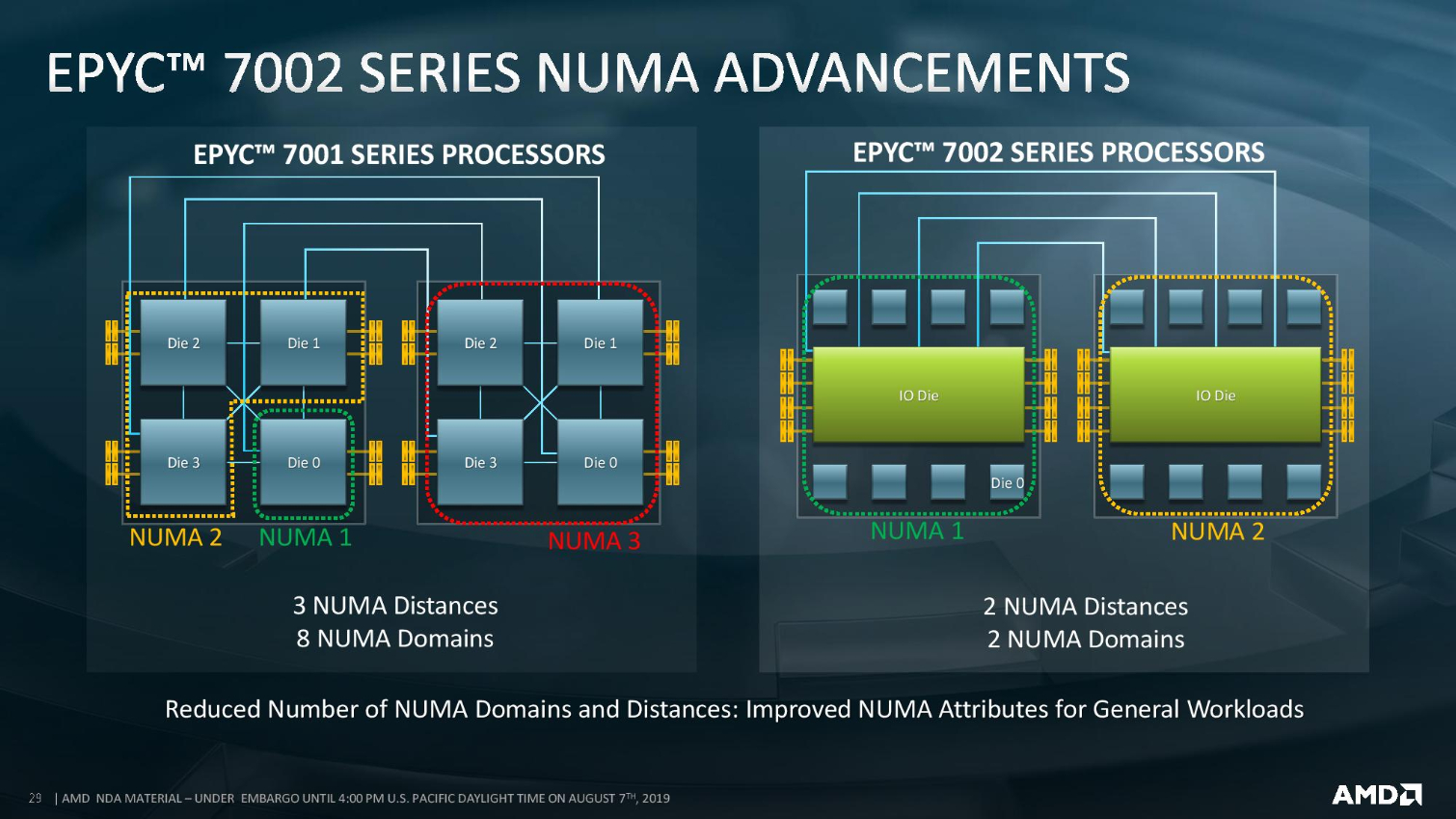

Each compute chiplet (CCD) consists of two standard four-core CCXes, except now they come armed with twice the amount of L3 cache (16MB per CCX), which helps reduce the number of accesses to main memory. AMD also reduced the effective memory latency through its new NUMA arrangement, which we'll cover below.
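The 256MB headline figure follows from the chiplet arithmetic, assuming Zen 2's 16MB of L3 per CCX (double first-gen Zen's 8MB) and a fully populated eight-chiplet package:

```python
L3_PER_CCX_MB = 16  # Zen 2 doubled this from first-gen Zen's 8MB
CCX_PER_CCD = 2     # two four-core CCXes per compute chiplet
MAX_CCDS = 8        # up to eight compute chiplets per package

per_socket = MAX_CCDS * CCX_PER_CCD * L3_PER_CCX_MB
two_socket = 2 * per_socket
print(f"{per_socket} MB per socket, {two_socket} MB in a two-socket server")
```

This is an aggregate: each core directly accesses only the 16MB slice in its own CCX rather than a single 256MB pool.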

AMD EPYC Rome Multi-Chip Hybrid Chiplet Architecture

As before, Rome is based on an SoC design, but the company moved to a central 12nm I/O die to tie together the eight-core compute chiplets. The compute chiplet design is shared with the consumer Ryzen 3000 parts. The chiplet-based architecture provides cost advantages due to the inherently higher yields of smaller dies. It also allows AMD to put more silicon in the socket, because the reticle limit, which caps the size of any single die, no longer constrains the package when the compute cores are spread across multiple die. As such, AMD can pack up to ~1,000mm² of silicon, amounting to 32 billion transistors, into a single package.
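The yield argument is easiest to see with the simple Poisson yield model, Y = exp(-A × D0), where A is die area and D0 is defect density. The defect density below is a hypothetical value rather than a TSMC figure, the ~74mm² chiplet size is approximate, and the "monolithic" die is a hypothetical die with the same total compute area. The model also ignores the I/O die, packaging yield, and the ability to salvage partially defective dies by disabling cores.

```python
import math


def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of defect-free dies under a simple Poisson model: Y = exp(-A * D0)."""
    return math.exp(-(area_mm2 / 100.0) * defects_per_cm2)  # mm^2 -> cm^2


D0 = 0.1                          # hypothetical defect density, defects per cm^2
CHIPLET_MM2 = 74                  # approximate Zen 2 compute chiplet area
MONOLITHIC_MM2 = 8 * CHIPLET_MM2  # hypothetical single die with the same compute area

chiplet_y = poisson_yield(CHIPLET_MM2, D0)
mono_y = poisson_yield(MONOLITHIC_MM2, D0)
print(f"chiplet yield: {chiplet_y:.1%}, monolithic yield: {mono_y:.1%}")
```

Under this model, the odds that eight chiplets are all defect-free equal the monolithic yield; the advantage comes from testing chiplets individually before packaging, so a defect scraps roughly 74mm² of silicon instead of the entire die.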

The 12nm I/O die ties the chiplets together with eight Infinity Fabric links. The DDR4 and PCIe 4.0 controllers reside on the I/O die, which gives the processor more uniform memory access latencies, as opposed to the three-layer latency profile of the previous-gen chips. That also improves NUMA performance: Rome has only two NUMA domains, whereas the Naples chips had three. The two domains have latencies of 104ns and 201ns, reductions of 19% and 14%, respectively. The chips can also be configured into three NUMA domains, which enables a 94ns latency for the extra domain.
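Working backwards from AMD's percentages gives a rough idea of the previous-gen latencies being compared. This assumes each reduction is measured against the corresponding Naples figure (the comparison points aren't specified) and that the percentages are rounded, so the results are only approximate. The domain labels are our own.

```python
# Rome latencies and the stated reductions for its two NUMA domains
rome_ns = {"first domain": 104, "second domain": 201}
reduction = {"first domain": 0.19, "second domain": 0.14}

# new = old * (1 - reduction), so old = new / (1 - reduction)
implied_naples_ns = {d: rome_ns[d] / (1 - reduction[d]) for d in rome_ns}
for d, old in implied_naples_ns.items():
    print(f"{d}: Rome {rome_ns[d]} ns, implied predecessor ~{old:.0f} ns")
```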

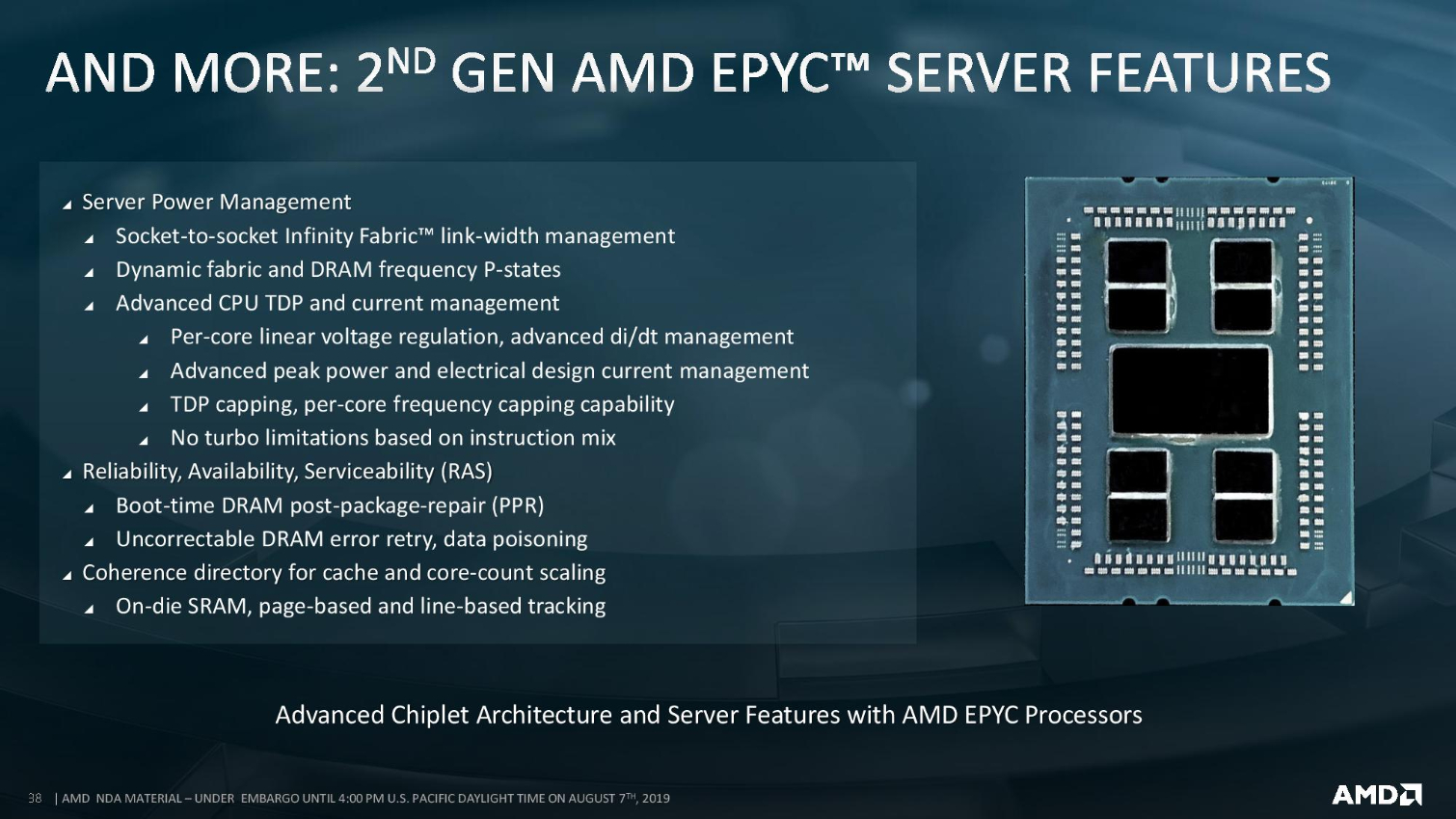

AMD added a dynamic uncore DVFS (dynamic voltage and frequency scaling) system that conserves power when the uncore isn't needed or fully utilized, or redirects the saved power to the compute cores. Unlike Intel, AMD doesn't drop to lower frequencies based on the type of instruction being processed; its boost algorithm is instead power-aware, which helps Rome maintain higher maximum turbos for high core-count models. That helps particularly with heavy multi-core turbo ratios, as shown in the 7742's maximum frequency boost chart in the album above.

Along with the doubling of cores per socket, AMD also roughly doubled the bandwidth of the Infinity Fabric, with first-gen platforms supporting 10.7 GT/s of throughput between two processors in a two-socket system, while platforms optimized for Rome can hit up to 18 GT/s. AMD doubled the Infinity Fabric read width per clock to 32B, but retained the 16B write width. The Infinity Fabric also has a link-width management system to conserve power during periods of low utilization, and the same technique applies to the memory subsystem.

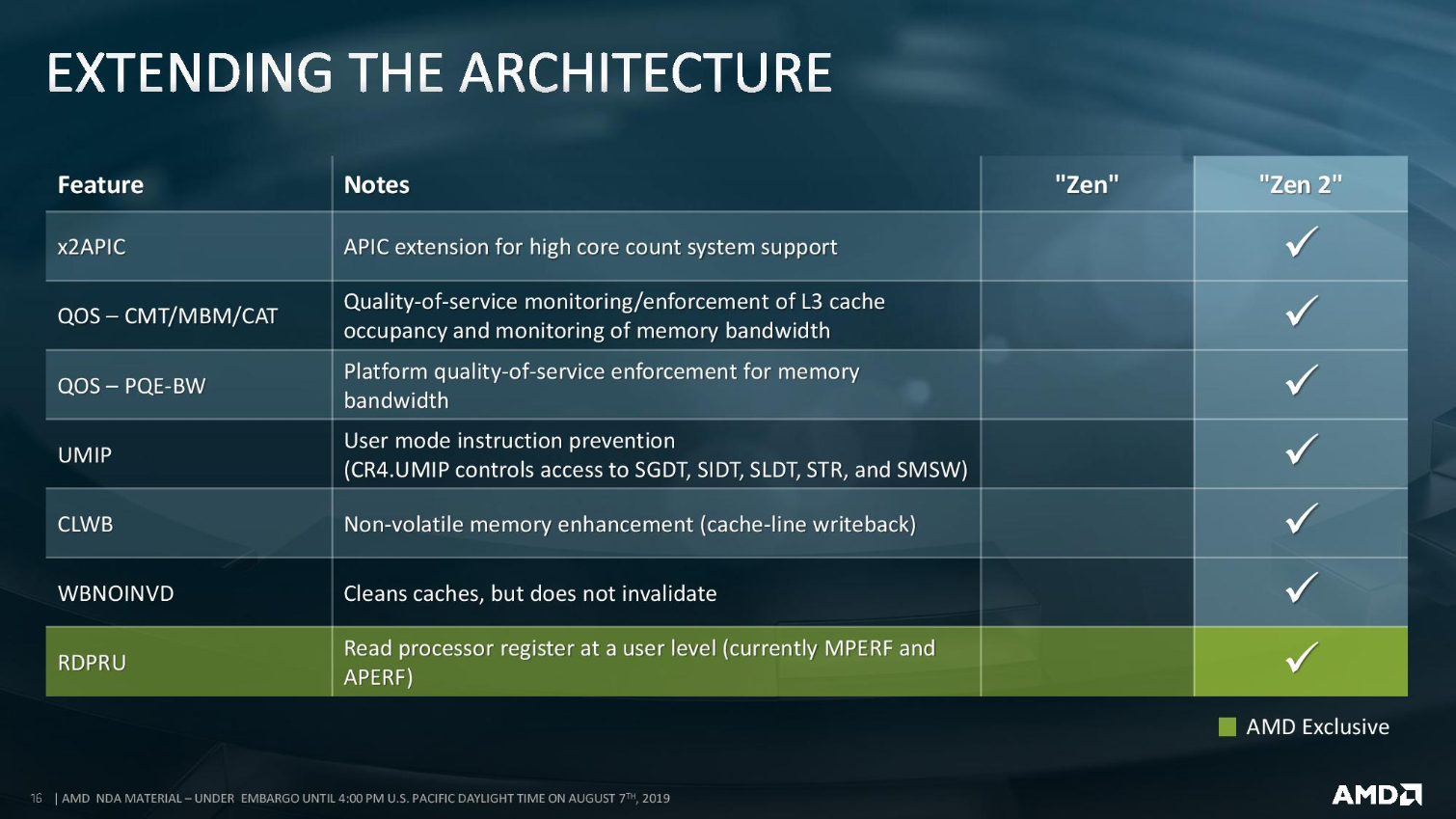

In a two-socket server, Rome offers up to 410 GB/s of memory throughput, which easily outweighs Intel's peak throughput of 282 GB/s. AMD added the x2APIC extension to improve support for high core counts, bolstered its Quality of Service mechanisms for memory bandwidth and L3 cache access, and now supports new commands for non-volatile memories.
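Both throughput figures match the standard peak-bandwidth formula for a two-socket server: channels × transfer rate × 8 bytes per 64-bit transfer × sockets. The helper name `ddr4_peak_gbs` is illustrative.

```python
def ddr4_peak_gbs(channels: int, mt_per_s: int, sockets: int = 1) -> float:
    """Peak theoretical DRAM bandwidth in GB/s (8 bytes per 64-bit transfer)."""
    return channels * mt_per_s * 8 * sockets / 1000  # MB/s -> GB/s


rome_2p = ddr4_peak_gbs(8, 3200, sockets=2)  # eight channels of DDR4-3200 per socket
xeon_2p = ddr4_peak_gbs(6, 2933, sockets=2)  # six channels of DDR4-2933 per socket
print(f"Rome 2P: {rome_2p:.1f} GB/s, Cascade Lake 2P: {xeon_2p:.1f} GB/s")

# Per-core share of a single socket's bandwidth
print(f"64-core Rome: {ddr4_peak_gbs(8, 3200) / 64:.1f} GB/s per core")
print(f"28-core Xeon: {ddr4_peak_gbs(6, 2933) / 28:.1f} GB/s per core")
```

The per-core lines show where the bandwidth-per-core concern comes from: 204.8 GB/s spread across 64 cores is 3.2 GB/s per core, versus roughly 5.0 GB/s per core for a 28-core Xeon's 140.8 GB/s.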

Rome's I/O links can be configured to several different uses, with links capable of being dedicated to socket-to-socket connections, or just used as standard PCIe links. That allows the company to support 128 lanes on single-socket systems. The PCIe subsystem also supports bifurcation, which allows up to eight devices per x16 link.

In a smart move given the company's Radeon Instinct GPUs, some 2P systems can get more lanes for I/O by disabling socket-to-socket links, with up to 162 lanes of PCIe 4.0 being exposed to the user in a two-socket server. These techniques require specialized platforms that aren't compatible with first-gen Naples systems.

All Rome processors can work in a single-socket server, but AMD retains models specifically for single-socket systems to drive that specific ecosystem.

Thoughts

On paper, the AMD EPYC Rome processors look to be capable performers that marry innovative and often unrivaled features with unprecedented core counts. We'll have to wait for third-party verification in labs like our own, but if the chips meet expectations, Rome could be AMD's inflection point in the data center.

Intel is busy promoting its platform-level advantages, such as tight integration with accelerators and Optane DC Persistent Memory, but what could be viewed as complementary products that boost the value proposition could also be viewed as vendor lock-in. It all depends on your perspective.

Intel is also making sure its partners and customers are aware that it does have high core-count models of its own coming in the form of 14nm 56-core Cooper Lake models, but those chips won't arrive until next year and do not have PCIe 4.0 connectivity. It's clear Intel is attempting to dissuade customers from investing in optimizing for EPYC Rome processors when competing Intel systems are on the near horizon.

And it does take quite a bit of qualification for data centers and enterprise customers to validate software stacks and hardware configurations, particularly for mission-critical applications. Given the amount of time and money needed to spin up new systems that support new hardware, AMD will have its hands full convincing customers to make the switch. But that's why the company messages its continuing roadmap and solid execution: It wants potential customers to know those investments will pay off over the long run.

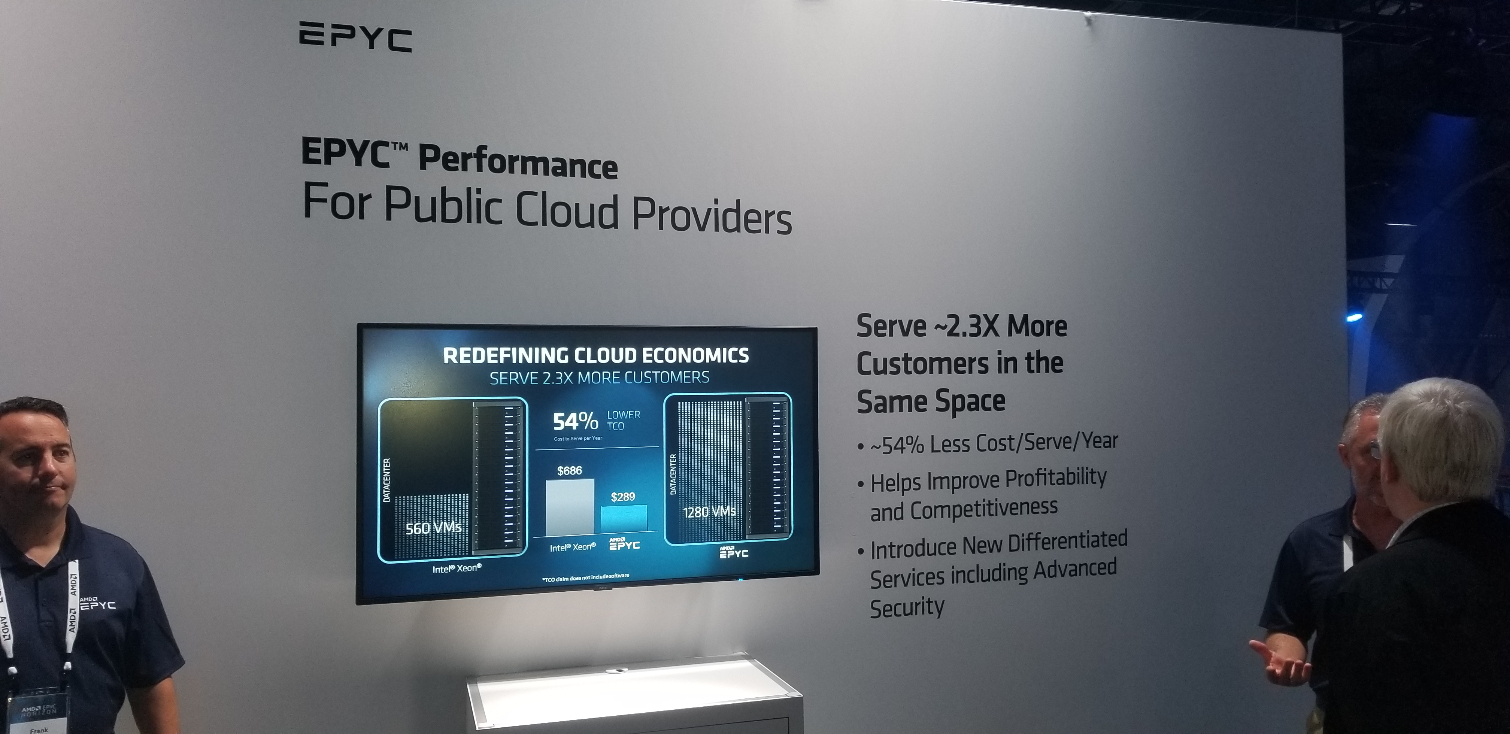

As AMD wisely did with its first-gen Naples processors, it is focusing its efforts on larger hyperscale and cloud service providers, which eases the overhead on the company's limited resources. Courting CSPs also fosters an ecosystem of cloud-based instances that prospective customers can use to test out the new hardware, but without the burden of upfront investments.

If AMD's Rome can deliver on its promises, Intel's primary advantage may be its incumbency. Intel has built its data center dominance on strong relationships with the big OEMs and ODMs, and the company has taken pains over the last few weeks to remind us of those relationships, but AMD is busy building its own relationships with those same companies. The industry has long wished for real, substantive competition to keep prices in check. There's no doubt that Rome delivers on that front, and if the chips enjoy the broad uptake expected by the majority of analysts, they could change the entire paradigm of the data center.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

NightHawkRMX: Wow! Only 7 grand? What a steal!

I could buy a used Corvette or I could buy a lump of silicon. Hmm, I wonder whats a better deal.

I'm aware I'm not the target audience lol.

mdd1963: With Microsoft's current Windows licensing scheme, it will cost about $3,000 dollars (per socket) to run Windows Server 2019 Standard, or, ~$25-$27K to run DataCenter....

Tends to drive folks to CentOS, Debian, etc., I'd think.... :)

LiviuTM: Actually, the Rome I/O die is manufactured on GlobalFoundries 14nm process. Only consumer I/O die is on 12nm.

svan71:

> remixislandmusic said: Wow! Only 7 grand? What a steal!

If you had a job that requires this cpu you could afford a new corvette. Oh and Intels closest yet inferior competitor is 10K.

bourgeoisdude:

> remixislandmusic said: Wow! Only 7 grand? What a steal!
>
> I could buy a used Corvette or I could buy a lump of silicon. Hmm, I wonder whats a better deal.
>
> I'm aware I'm not the target audience lol.

Datacenters and businesses that run VMs. We are a small business but our biggest server currently has two older gen Xeon CPUs at 10 cores each. We easily run 6 server VMs with those and likely could run 8-10. The CPUs cost over $2000 each at the time. So our next server may very well use AMD EPYC for cost savings alone.

jrazor247: I am having trouble understanding the performance without a gaming benchmark. Will it warp Crysis?

Sent from my iPad using Tapatalk Pro

NightHawkRMX:

> jrazor247 said: I am having trouble understanding the performance without a gaming benchmark. Will it warp Crysis?
>
> Sent from my iPad using Tapatalk Pro

Im sure it would, but a 12 year old game wont use all cores.

jrazor247:

> remixislandmusic said: Im sure it would, but a 12 year old game wont use all cores.

If you are a 12 year old, Google Microsoft WARP & DirectX.

You can use CPU to render Counter Strike, no waiting for the bus.

bit_user:

> remixislandmusic said: I could buy a used Corvette or I could buy a lump of silicon. Hmm, I wonder whats a better deal.
>
> I'm aware I'm not the target audience lol.

I just think it's funny that you choose to look at the CPU in terms of its raw materials, but not the Corvette.

The real value in either is what you use it for.