AMD Posts EPYC Benchmarks, Teases Vega Frontier Edition

AMD is well on its way to snatching more share in the mainstream desktop PC market, and the company also has plans for the HEDT and value ends of the totem pole with its Threadripper and Ryzen 3 processors on the horizon. That leaves the margin-rich data center as AMD's final target. Intel and Nvidia are both titans in the data center, with Intel holding 95%+ of the global server CPU sockets and Nvidia holding the lion's share of the burgeoning AI segment with its lineup of GPUs. AMD, however, is the only company that has both GPUs and CPUs under the same roof, which could lend it a tactical advantage against its competitors.

Of course, first, the company has to deliver products that are competitive enough to gain share in the data center, especially in light of the conservative nature of risk-averse data center operators who are slow to adopt new architectures. This isn't as much of a concern with GPUs as CPUs, and AMD has acknowledged that stealing market share in the data center isn't going to be an overnight endeavor.

During its Computex 2017 presentation, the company presented a few benchmarks that might give us an indication of its potential in both segments. Bear in mind, these are AMD-provided benchmarks.

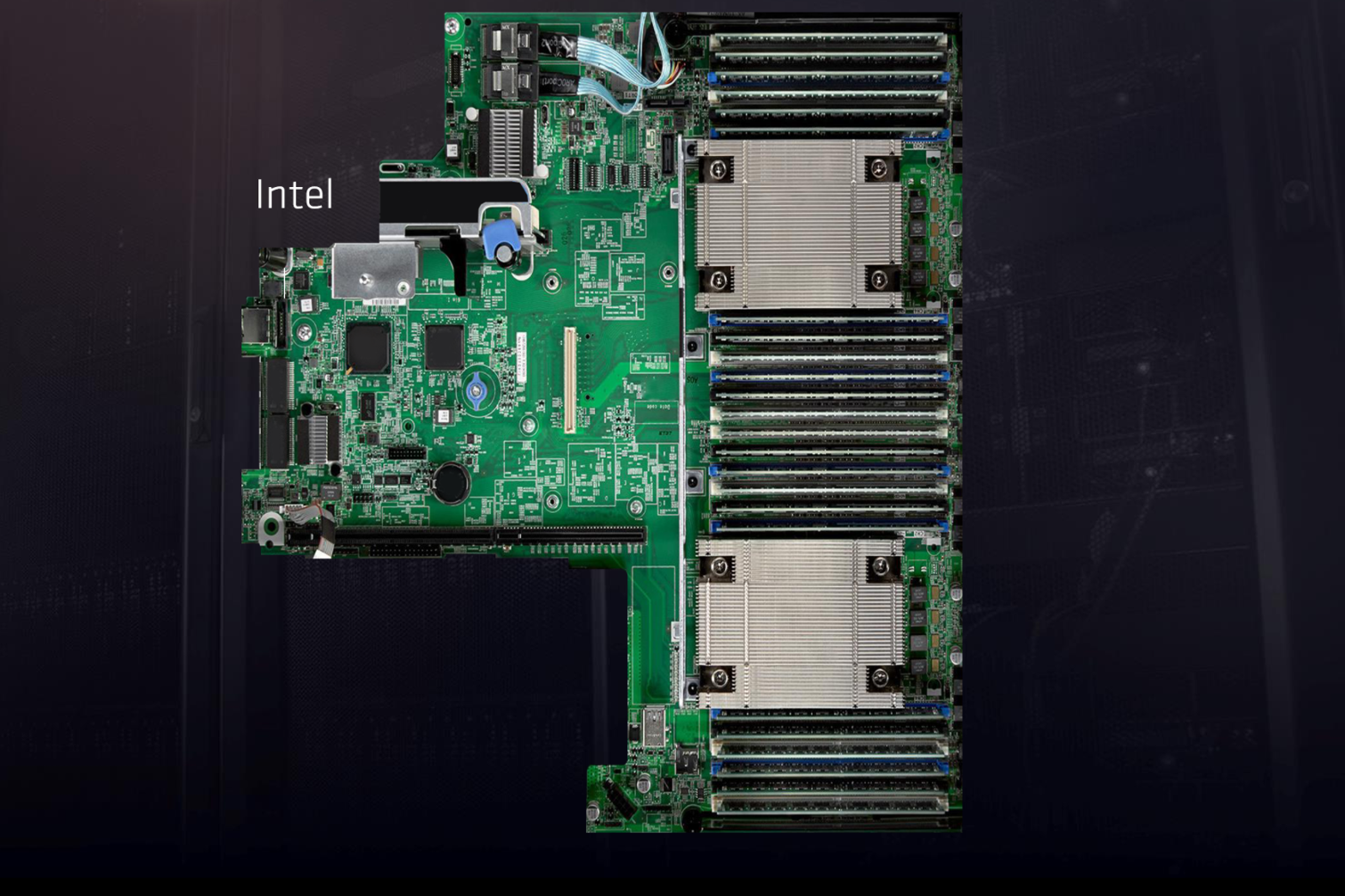

Dual Socket EPYC Servers

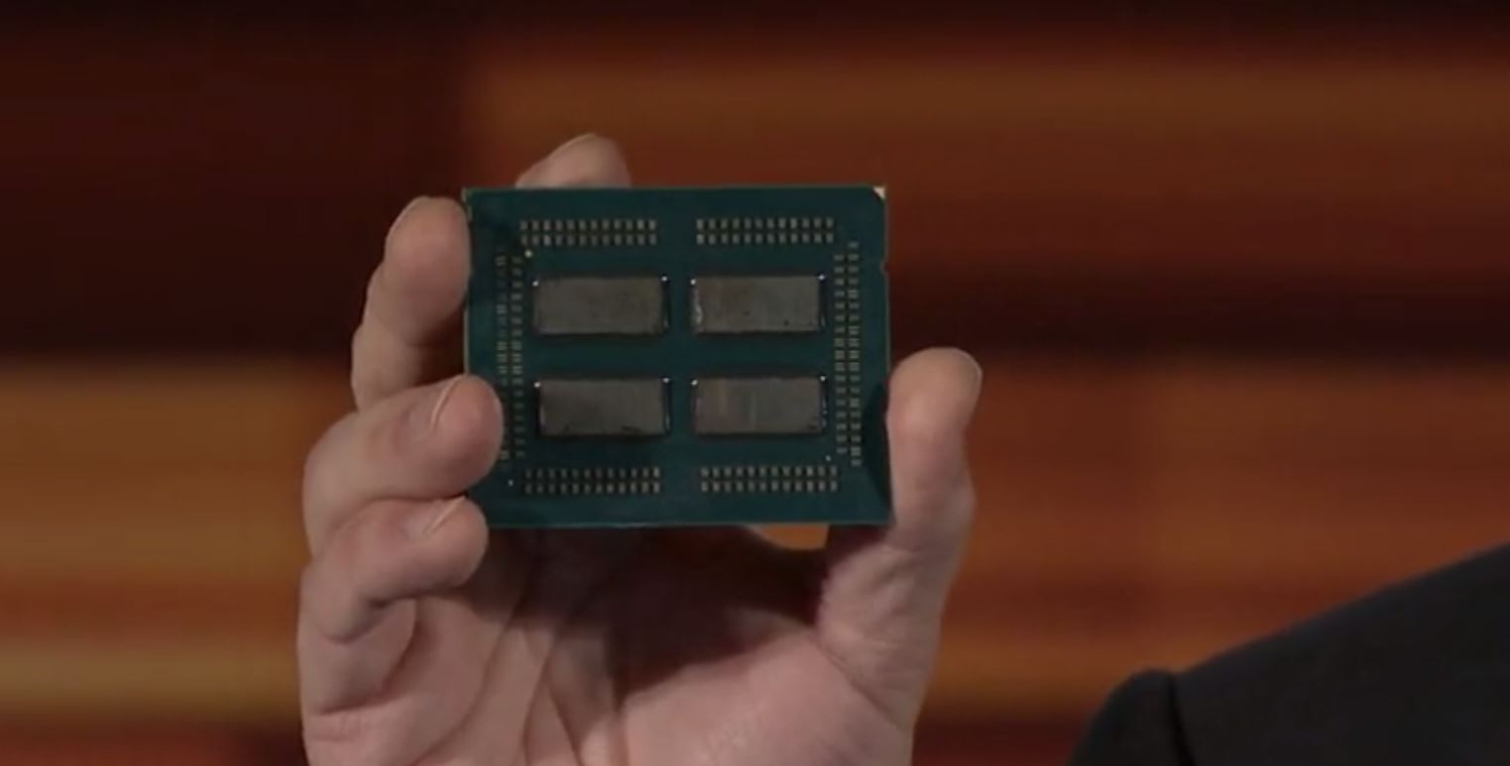

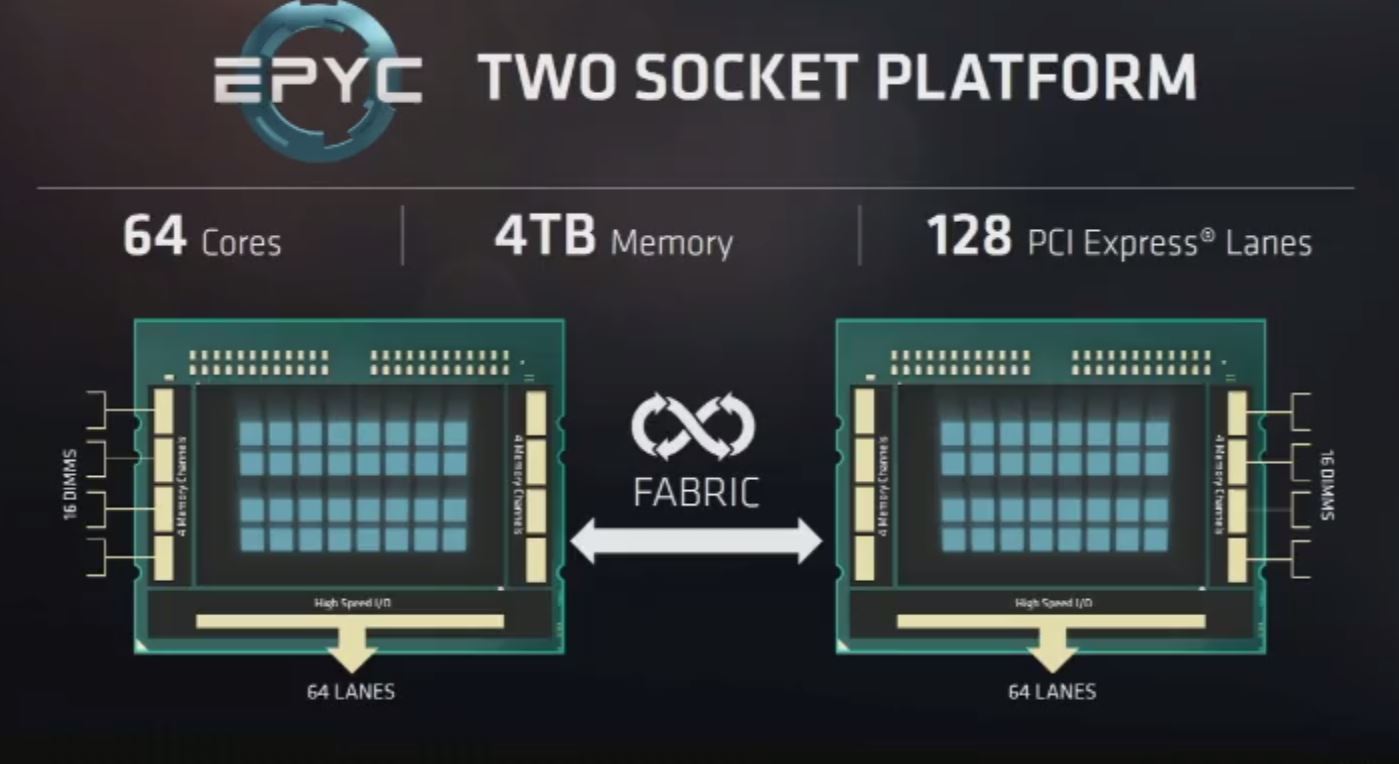

The processor side of AMD's data center equation begins with EPYC, a 32C/64T behemoth that sports 128 lanes of PCIe 3.0 and eight memory channels. The robust memory capabilities provide tremendous potential for in-memory compute workloads, and the copious PCIe connectivity opens the door for GPU-heavy architectures for machine learning training and inference workloads. EPYC-powered systems don't require an I/O hub or southbridge, which simplifies motherboard design.

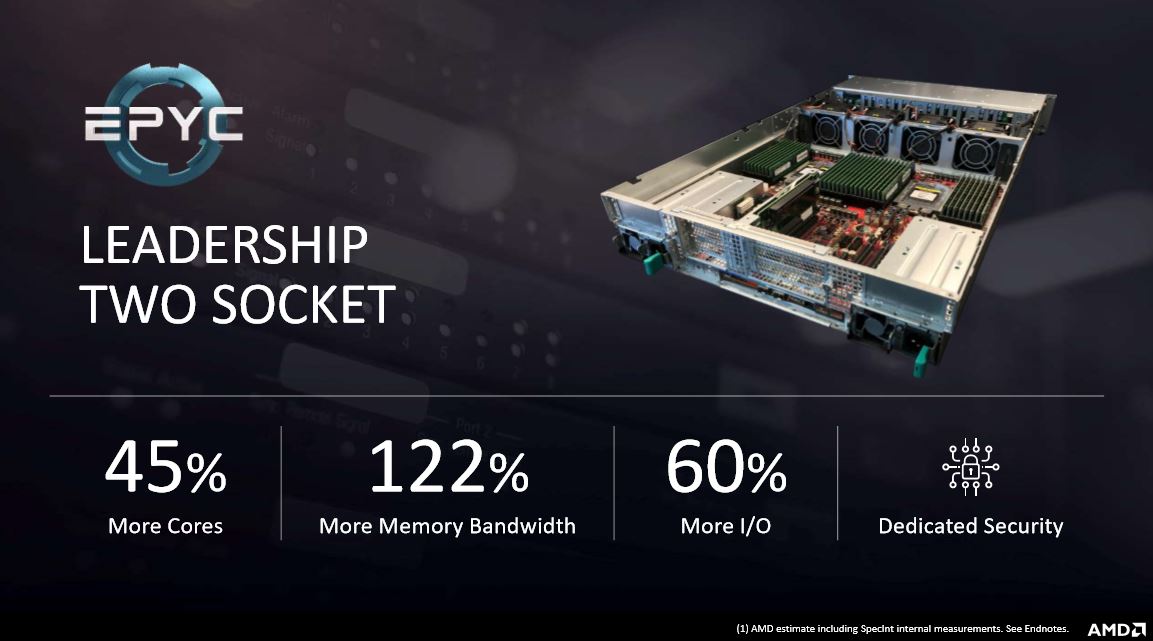

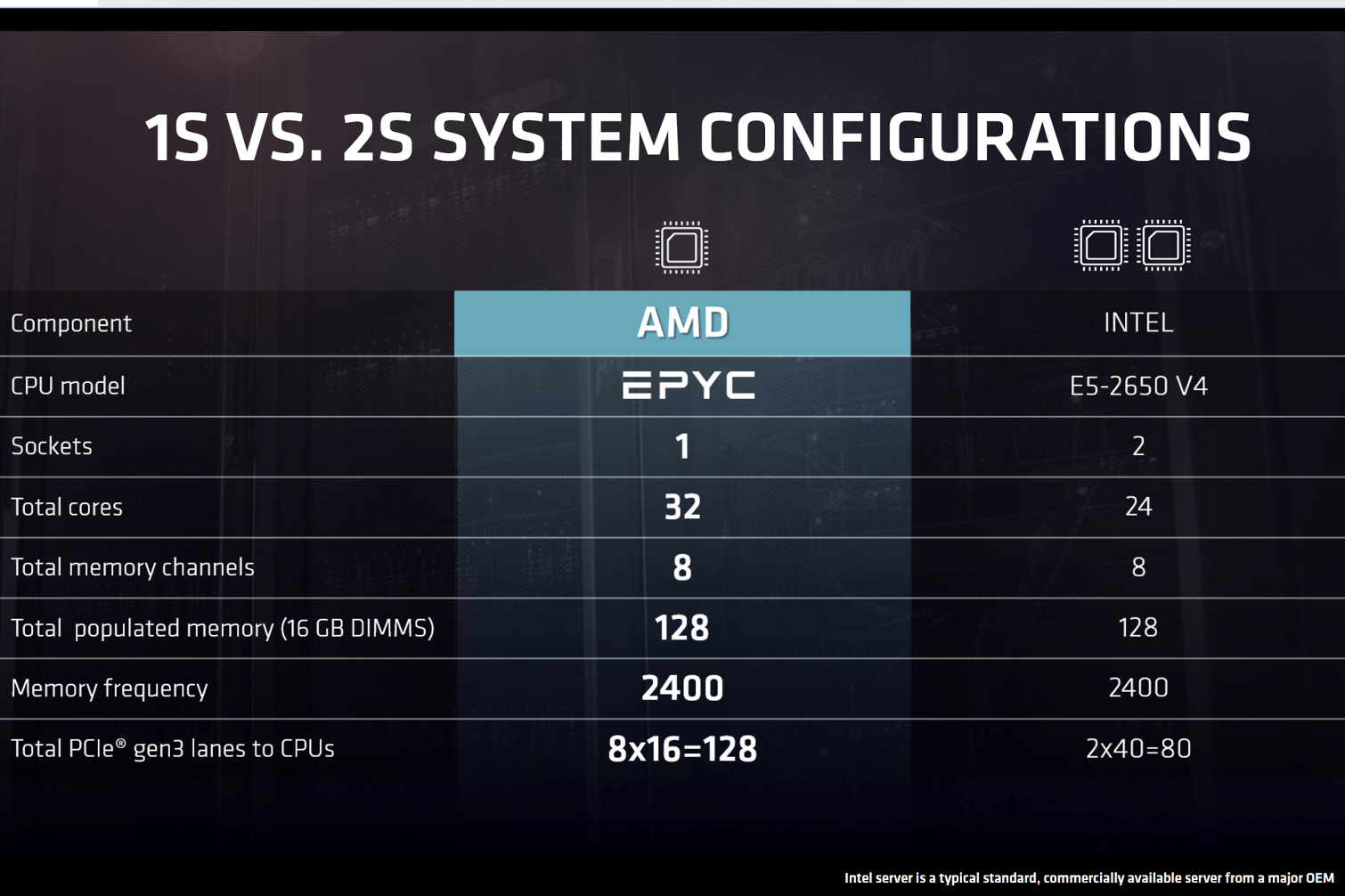

The EPYC package consists of four Zeppelin dies glued together with the Infinity Fabric. Combining two of the processors into a two-socket server yields 64C/128T that supports up to 4TB of memory and exposes 128 PCIe lanes to the host. Each processor provides 128 lanes for a total of 256 lanes, but 128 of those are used for the Infinity Fabric connection between the two processors. AMD claims this setup provides 45% more cores, 122% more memory bandwidth, and 60% more I/O capabilities than competing two-socket Intel servers.
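The lane and core figures above reduce to simple arithmetic. The sketch below is only an illustration: the function names are made up for this example, and the 44-core Intel baseline is an assumption (two 22-core E5-2699A v4 processors), since AMD's slide does not say which Intel server the 45% figure refers to.

```python
def host_pcie_lanes(lanes_per_socket: int, sockets: int, fabric_lanes: int) -> int:
    """Lanes left for devices once the socket-to-socket link takes its share."""
    return lanes_per_socket * sockets - fabric_lanes


def percent_more(ours: float, theirs: float) -> float:
    """How much larger `ours` is than `theirs`, as a percentage."""
    return (ours / theirs - 1.0) * 100.0


# Figures quoted in the article: 128 lanes per socket, two sockets,
# 128 lanes in total repurposed for the Infinity Fabric link.
lanes = host_pcie_lanes(lanes_per_socket=128, sockets=2, fabric_lanes=128)

# Assumption: the Intel baseline is a dual 22-core server (44 cores).
core_advantage = percent_more(64, 44)

print(lanes)                  # 128
print(round(core_advantage))  # 45
```

Under that assumption the 64-core EPYC pair works out to roughly 45% more cores, which matches AMD's claim.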

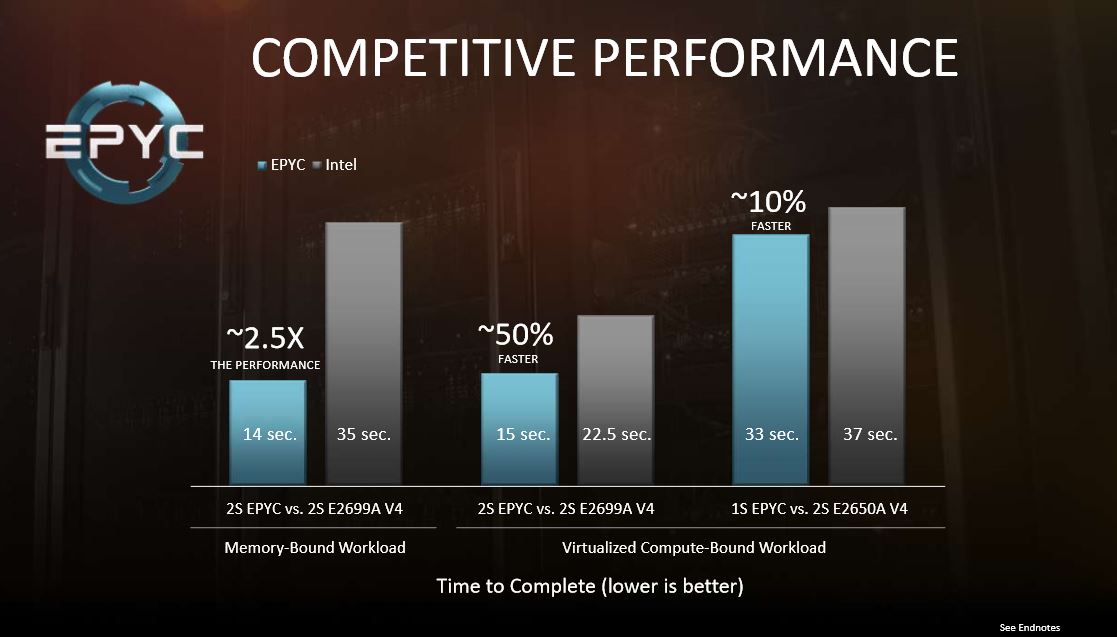

AMD's benchmarks compared a dual-socket EPYC server to dual Intel Xeon E5-2650A v4 (two 24C/48T processors) and E5-2699A v4 (22C/44T) platforms. The results show the company beating Intel by 2.5X in a memory-bound 3D Laplace computation, which simulates a seismic analysis workload (991.232 million cells), on Ubuntu 16.10. Both servers employed DDR4-2400, though the AMD setup utilized 512GB of memory compared to Intel's limit of 384GB. The AMD platform completed 712.85 computations per second compared to 286.53 for the Intel E5-2699A v4 platform.
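As a quick sanity check, the 2.5X headline can be reproduced from the two throughput numbers AMD supplied. This is a minimal sketch using only the figures quoted above; the variable names are illustrative.

```python
# AMD-provided throughput for the 3D Laplace test (computations per second).
epyc_rate = 712.85
xeon_rate = 286.53  # dual E5-2699A v4 platform

# Installed memory in each test system (GB), as reported by AMD.
epyc_memory_gb = 512
xeon_memory_gb = 384

speedup = epyc_rate / xeon_rate
memory_ratio = epyc_memory_gb / xeon_memory_gb

print(f"Throughput speedup: {speedup:.2f}x")
print(f"Memory capacity ratio: {memory_ratio:.2f}x")
```

The ratio lands just under 2.5, so the slide rounds slightly in AMD's favor. The EPYC system also had a third more memory installed, which is worth keeping in mind for a memory-bound test.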

According to AMD, the EPYC processors also beat the Intel servers in a compute-bound virtualization workload by 50% and 10%, respectively. The workloads consisted of gcc compiles of a bare-bones Linux kernel on a 2P test platform with 8 VMs per server (details below).


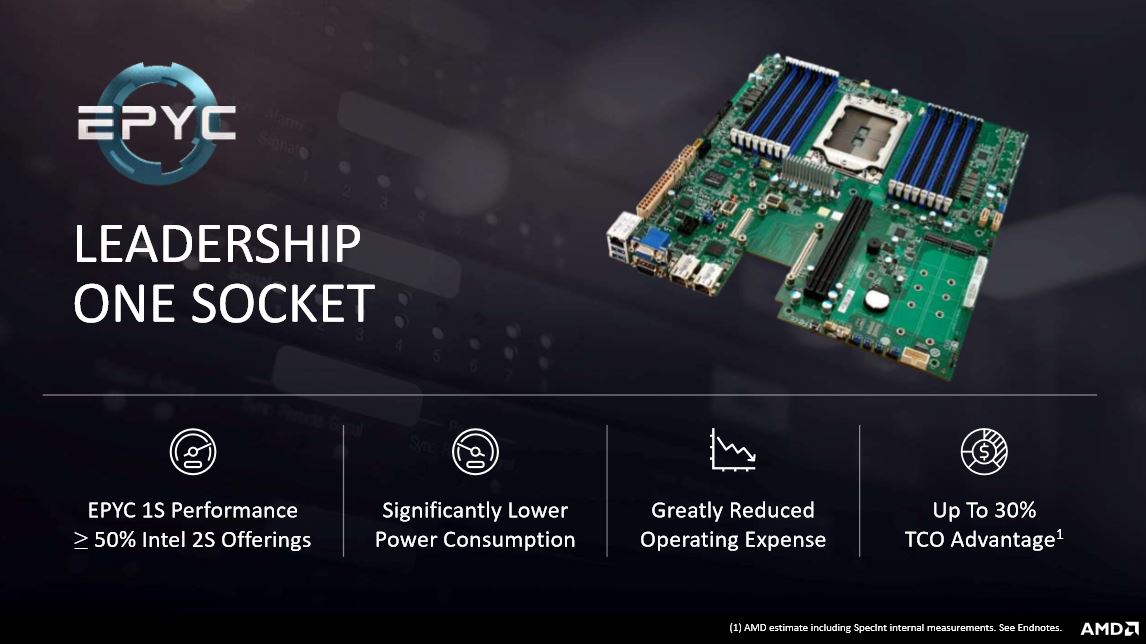

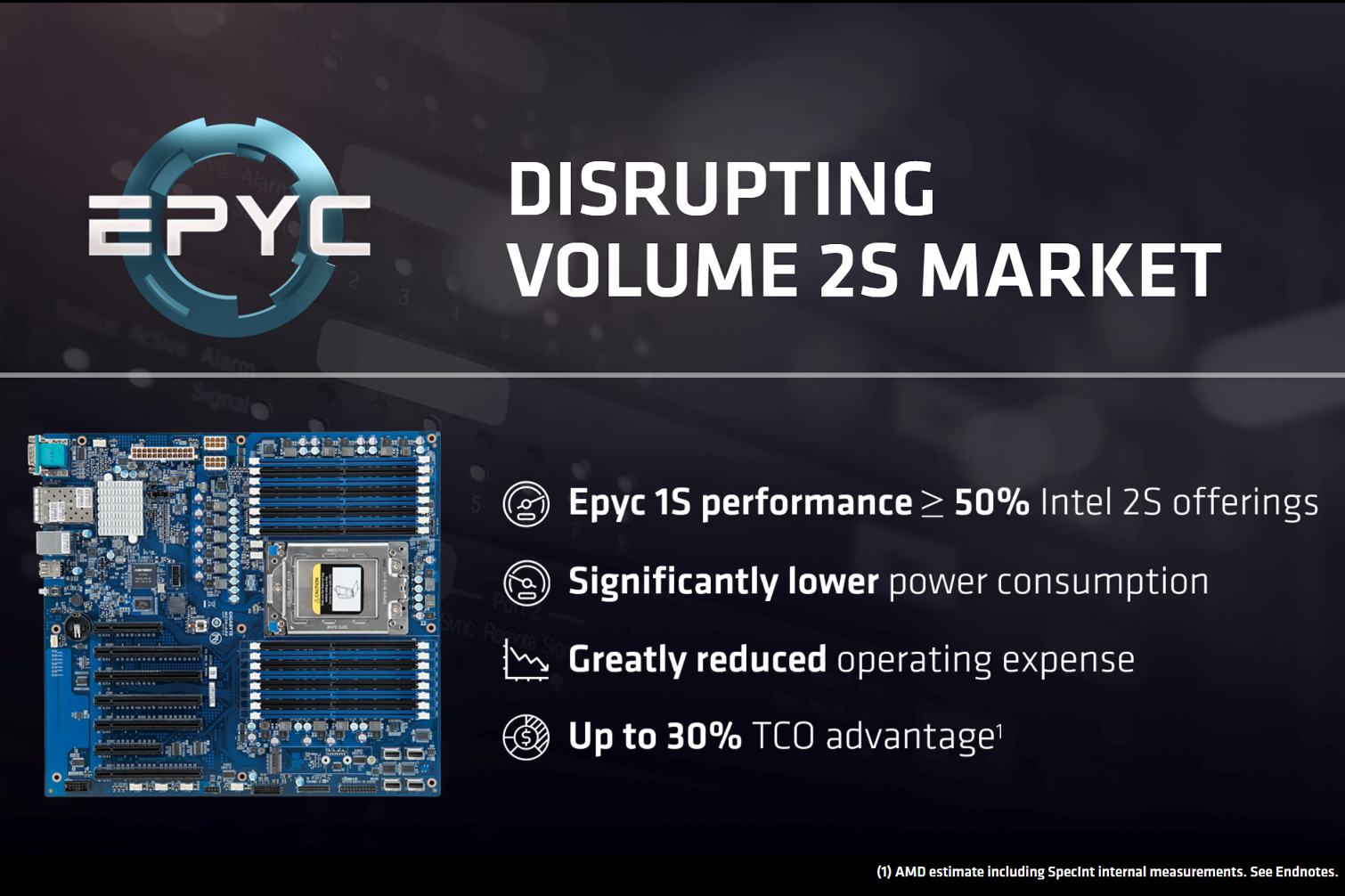

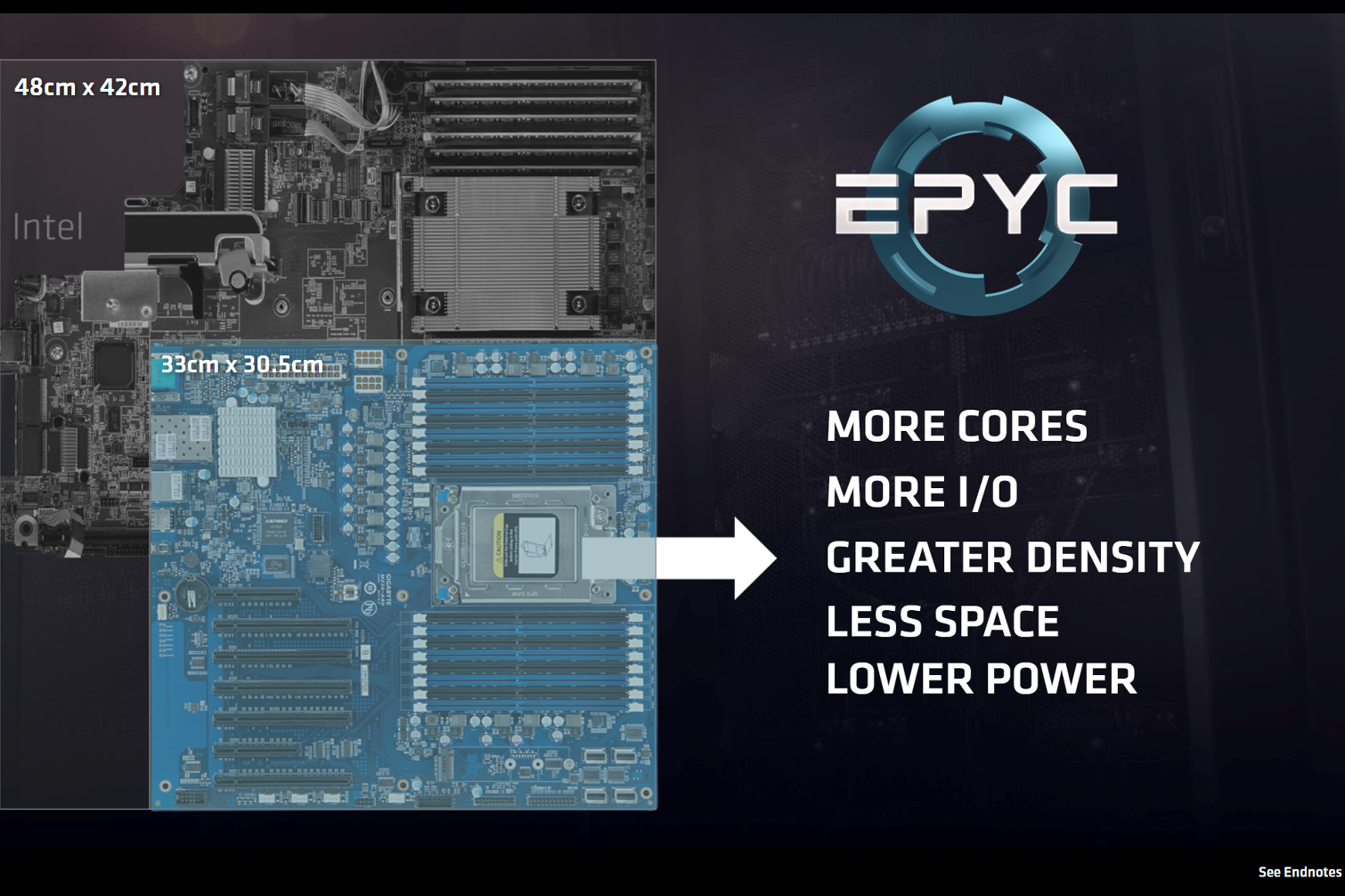

Single Socket EPYC Servers

AMD also sees growth opportunity in the single-socket server market. The EPYC processors provide more cores and twice the memory channels and memory capacity of competing Intel processors. Adding in the PCIe lane advantage makes for a powerful single-socket server that AMD feels can take on Intel's finest. Of course, floor space, power, cooling, and heat are all critical concerns in the data center, so offering more capabilities in a smaller package has long-term total cost of ownership advantages. The company hasn't provided any performance claims for its single-socket servers, but we can expect those to emerge soon--the EPYC processors launch on June 20, 2017.

Radeon Vega Frontier Edition

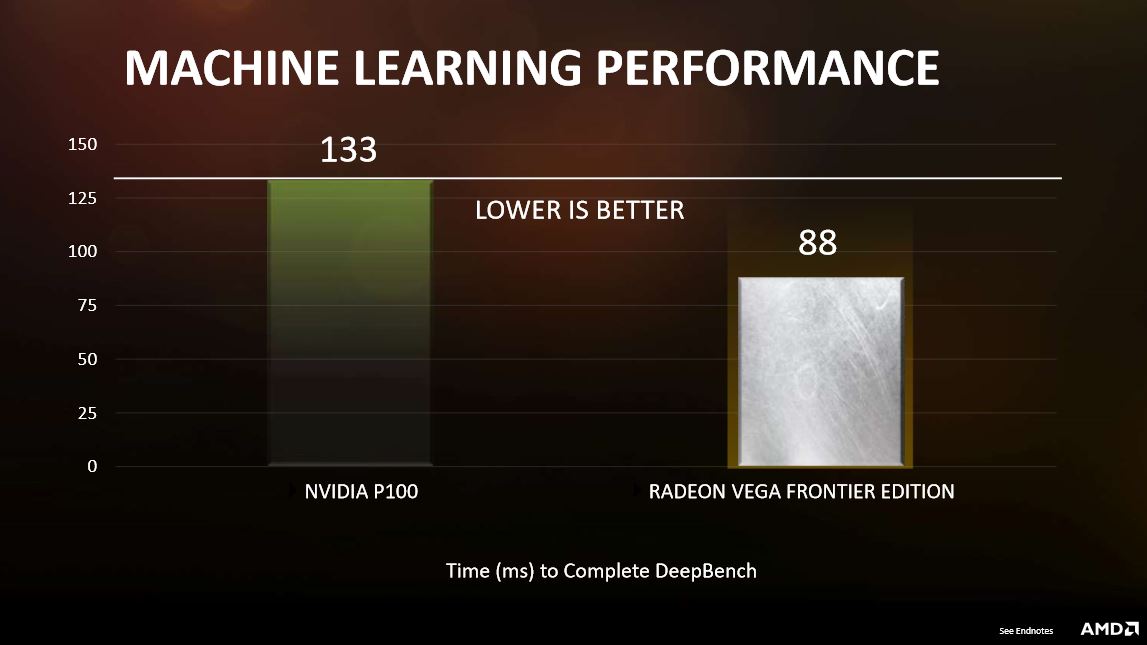

AMD also released a single benchmark for its Vega Frontier Edition GPU, which targets artificial intelligence workloads. The Frontier Edition sports 64 Next-Generation Compute Units (which AMD has given the "nCU" moniker due to their support for new data types) that together feature 4,096 stream processors. AMD has optimized the nCUs for higher clocks and has also integrated larger instruction buffers to feed them. AMD provided estimated performance specifications of 12.5 TFLOPS of peak FP32 single-precision compute and 25 TFLOPS of peak FP16 compute. The card features 16GB of HBC (High Bandwidth Cache), which is AMD's new term for HBM (High Bandwidth Memory). The new card also features support for 8K displays, and it can access up to 256TB of virtual memory.
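AMD did not state a clock speed alongside these numbers, but one can be backed out from the peak-throughput figure. The sketch below assumes the usual convention that each stream processor retires one fused multiply-add (counted as two FLOPs) per clock, and that FP16 runs at twice the FP32 rate, as the 12.5 and 25 TFLOPS figures suggest. The function names are illustrative, and the resulting clock is an inference, not an AMD specification.

```python
FLOPS_PER_CLOCK = 2  # assumption: one fused multiply-add per stream processor per clock


def peak_tflops(stream_processors: int, clock_ghz: float,
                flops_per_clock: int = FLOPS_PER_CLOCK) -> float:
    """Theoretical peak throughput in TFLOPS."""
    return stream_processors * clock_ghz * flops_per_clock / 1000.0


def implied_clock_ghz(tflops: float, stream_processors: int,
                      flops_per_clock: int = FLOPS_PER_CLOCK) -> float:
    """Clock speed needed to reach a quoted peak-TFLOPS figure."""
    return tflops * 1000.0 / (stream_processors * flops_per_clock)


clock = implied_clock_ghz(12.5, 4096)
fp16 = peak_tflops(4096, clock, flops_per_clock=2 * FLOPS_PER_CLOCK)

print(f"Implied clock: {clock:.3f} GHz")
print(f"FP16 peak at double rate: {fp16:.1f} TFLOPS")
```

Under these assumptions the quoted 12.5 TFLOPS implies a clock of a little over 1.5 GHz.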

AMD claims the extra resources equate to a run time of only 88ms for the DeepBench AI benchmark, whereas the Nvidia P100 required 133ms. Of course, it would be interesting to see Nvidia's Volta-based GV100 pressed into service in this test, but we'll have to wait for that. In either case, we can expect to see the Vega Frontier Edition come to market on June 27th.

We've included the test endnotes below.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

redgarl Well, their benchmark for Rysen were true...

Trolls (BUT LOOK AT THE PERFORMANCE AT 1080P WITH A 1080 GTX ROLFCOPTER)... sure, a totally unpractical and unprobable bench...

1. You don't bundle a 500$ CPU with a 1080 GTX to play at 1080p

2. You don't bundle a 1600x to play at 1080p with a 1080 GTX

Thanks Toms for generating people like XFRGTR. -

msroadkill612 You say "That leaves the margin-rich data center as AMD's final target. Intel and Nvidia are both titans in the data center, with Intel holding 95%+ of the global server CPU sockets and Nvidia holding the lion's share of the burgeoning AI segment with its lineup of GPUs."

and then:

"...gain share in the data center, especially in light of the conservative nature of risk-averse data center operators who are slow to adopt new architectures."

I would think yes and no. Are google and amazon really such pussys? Datacenters have the skillsets for workarounds. Cheaper means more affordable redundancy, & hence, safer....

Whatever, objective comparisons with epyc are very embarassing for intel atm.

But the MCM/fabric story has just started.

True to form of lucrative pro models first, i expect vega GPU inclusive variants of the epyc MCM before raven ridge APUs release (they are variants of the same theme), and all processors inter connected via the 128 lane fabric (? I asume, as thats the interconnect for the 2 socket epyc servers) , with absurd speed and efficiency, independent of the legacy beholden system board, and its busy shared bus.

an exciting image IMO:

http://semiaccurate.com/assets/uploads/2017/05/AMD-FAD-Infinity-Fabric-Reach.png

it grapically shows, as we know from vega specs, a path for gpuS to be linked w/ cpuS on the same fabric.

The fabric can even mount its own ssd raid 0 arrays for onboard storage or virtual memory, and on fabric ~500GBps hbm2 ram, as per vega specs.

Clearly, using storage as virtual memory, aint what it used to be, given the above scenario, and amd make much of their advanced memory pooling, prioritising and layering technology, and tho inevitably slower than vram, it allows ~unlimited (512TB) virtual ram and vram. NB also, its concurrently adding a chunk of radically fast vram to the hierarchical memory resource pool

Its a wet dream for cgpu server guys.

The big picture is that an inactive player in the ~server market, has come from nowhere and trounced the ~100% share incumbents metrics. The minnow cant digest that 100% share anyway, but they can sure prosper on over 30% of intels river of gold, and that seems very doable. -

John Wittenberg "1. You don't bundle a 500$ CPU with a 1080 GTX to play at 1080p"

Except when the best video card on the market can't play that shiny new game at 4K with the eyecandy turned up and not dip below 40FPS (I can't stand less than 55, and my TV doesn't have Gsync ya know). Cough, Ghost Recon Woodlands, cough.

So yeah, my $700 (used) E5-1660 V3 is paired with my $700 1080 Ti to play some games at 1080P.

Totally realistic scenario, champ.