AMD's FSR 2.0 Even Worked With Intel Integrated Graphics

We tested FSR 1.0 as well, for science!

When AMD officially debuted its FidelityFX Super Resolution 2.0 technology last week in Deathloop, we were rather impressed. It's more demanding than FSR 1.0, but image quality was worlds better, even comparable to DLSS, at least in this one game. We also tested it on some older graphics cards and found it could still boost performance by 20–25 percent. That's potentially enough to make a game "playable" on hardware that would otherwise come up short. But what about laptops running Intel integrated graphics?

Officially, AMD provides loose recommendations for the sort of GPU you should have to get the best results from FSR 2.0. For 4K upscaling, AMD recommends a Radeon RX 6000-series GPU. For 1440p, RX 5000-series and RX Vega cards should suffice. Finally, for 1080p upscaling, AMD suggests at least a Radeon RX 590 or similar. Again, we've already tested with lower-tier GPUs and found that FSR 2.0 still worked and provided some fps gains. Now we're dropping to bare-minimum hardware to see how it goes.

We pulled out a couple of laptops with Intel integrated graphics to find out. The first is a Tiger Lake laptop from 2020: not exactly old, but not brand new either. It has a Core i7-1165G7 processor with Iris Xe Graphics, 96 EUs to be precise. Short of Intel's new Arc Alchemist GPUs, this is basically as fast as Intel graphics get for the time being. It also has 16GB of LPDDR4x-4267 memory, which matters too, since integrated graphics share system memory and depend on its bandwidth.

The second laptop steps back one more generation, to a Core i7-1065G7 with Gen11 graphics. It still has 16GB of memory, but this time it's LPDDR4x-3200. Not that the slower memory matters much with Ice Lake, whose Gen11 graphics are in theory only about half as fast as Xe-LP. With just 64 EUs, Gen11 isn't going to do much with Deathloop.

First, some disclaimers are in order. Deathloop itself warns that Intel graphics solutions are currently unsupported and may not work properly. We pressed onward, heedless of such trivial matters. Science must have answers!

Loading the game took several minutes just to reach the main menu. Loading into a level felt like it took forever, even at very low settings and 1280x720 resolution. (It was actually about 4.5 minutes.) At one point I thought the game had simply crashed, but then I was greeted with actual working graphics. Yes, the game was working!

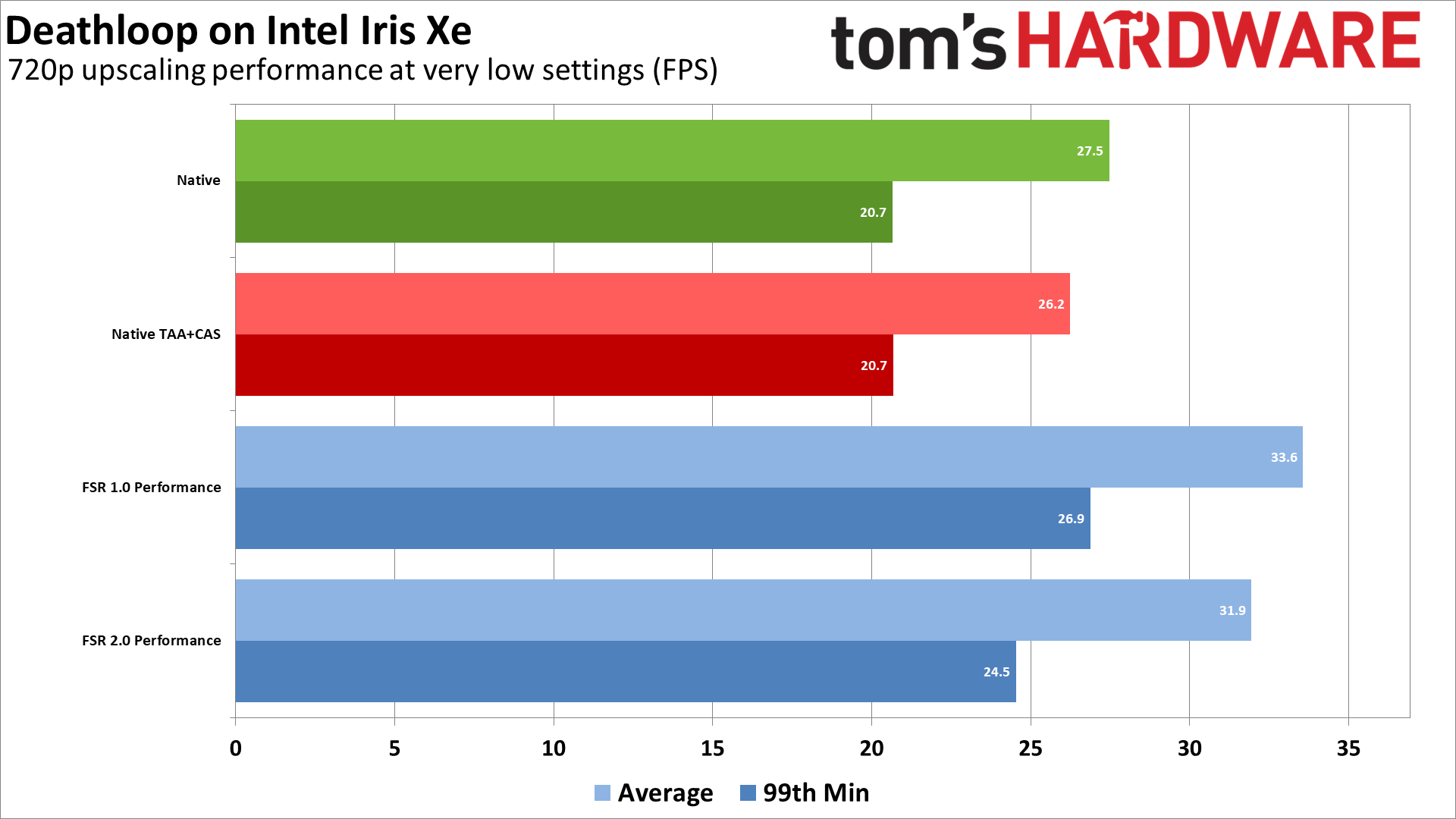

I proceeded to run some benchmarks: first at native 720p and very low settings, then at native resolution with temporal anti-aliasing (TAA) and FidelityFX CAS (Contrast Adaptive Sharpening) enabled, and finally with FidelityFX Super Resolution 1.0 and FSR 2.0, both using the "Performance" upscaling mode to maximize framerates. Screenshots are below. FSR 1.0 looked quite awful, with a very blurry appearance plus a sort of "static" interference artifact that kept showing up, possibly from the falling snow. FSR 2.0, on the other hand, was still quite serviceable.

Now granted, rendering at 640x360 and upscaling 2x in each dimension to reach 720p isn't going to be ideal, but was it workable? Almost! I'd even go so far as to say FSR 2.0 looked better than native, at least compared with the default very low settings, which disable temporal AA. But how did Deathloop perform?
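To put the "Performance" mode in perspective, the short Python sketch below computes the internal render resolution for a given output resolution. It assumes AMD's published FSR 2.0 per-axis scale factors (1.5x Quality, 1.7x Balanced, 2.0x Performance, 3.0x Ultra Performance); the function names and the round-to-nearest behavior are illustrative choices, not taken from Deathloop or AMD's SDK.

```python
# Per-axis scale factors for FSR 2.0 quality modes, as published by AMD.
# FSR 1.0's Performance mode uses the same 2.0x factor.
FSR2_SCALE_FACTORS = {
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
    "ultra_performance": 3.0,
}


def render_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Internal render resolution for a given output resolution and mode.

    Rounding to the nearest integer is an assumption made for this sketch;
    a real integration may round differently.
    """
    scale = FSR2_SCALE_FACTORS[mode]
    return round(out_w / scale), round(out_h / scale)


def shaded_pixel_fraction(out_w: int, out_h: int, mode: str) -> float:
    """Fraction of output pixels that are actually rendered each frame."""
    in_w, in_h = render_resolution(out_w, out_h, mode)
    return (in_w * in_h) / (out_w * out_h)


if __name__ == "__main__":
    for mode in FSR2_SCALE_FACTORS:
        w, h = render_resolution(1280, 720, mode)
        frac = shaded_pixel_fraction(1280, 720, mode)
        print(f"{mode:>17}: {w}x{h} ({frac:.0%} of 720p pixels)")
```

At a 720p output, Performance mode works from a 640x360 frame, so only a quarter of the final pixels are actually rendered, which is why the upscaler has so little detail to work with on these laptops.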

So yeah, that's actually not too bad! The starting point of 28 fps for Iris Xe was almost high enough to be playable, though with TAA+CAS that dropped to 26 fps. Compared with native (no TAA), FSR 1.0 gave 22% more performance, averaging 34 fps, at the cost of image quality. FSR 2.0 helped framerates a bit as well, to the tune of 16%, just clearing 30 fps. That's the minimum we shoot for in order to deem a game "playable."

Note that if we use TAA plus FidelityFX CAS as the baseline, as we did in our dedicated GPU testing, FSR 2.0 provided a 22% bump in performance while FSR 1.0 provided a 28% improvement. Considering FSR 2.0 handles anti-aliasing as well as sharpening, that's perhaps a better comparison point.

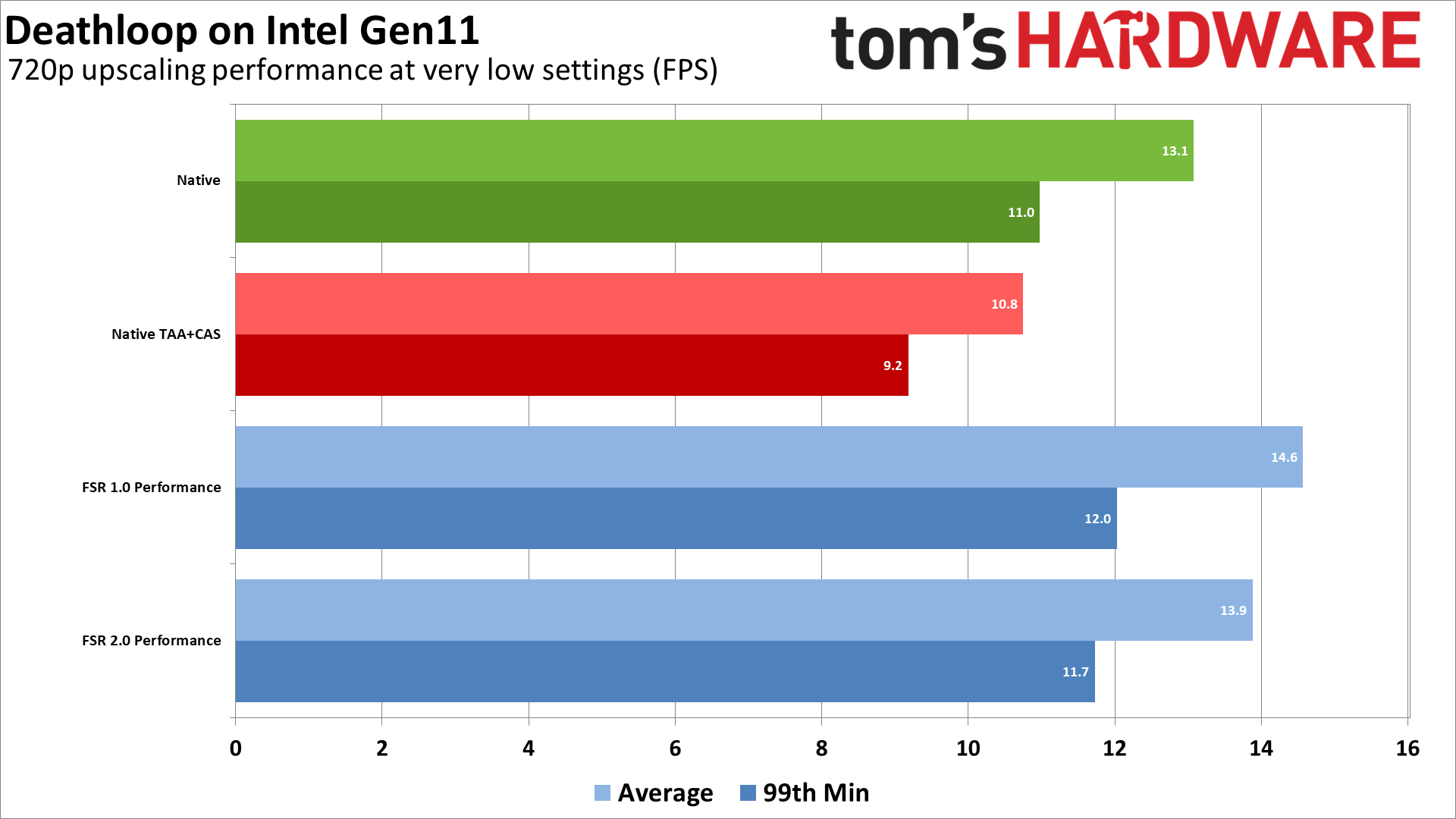

On the older Gen11 graphics, things weren't quite so rosy. Baseline performance at 720p and very low settings was only 13 fps, and that was without TAA. With TAA+CAS, performance dropped to just 11 fps. FSR 1.0 Performance mode bumped that to nearly 15 fps, while FSR 2.0 Performance mode managed 14 fps. Relative to the TAA+CAS result, that's a 28% improvement for FSR 2.0 (and 35% for FSR 1.0), though it's a pretty meaningless gain, as Intel's Gen11 GPU is still nowhere near playable.
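Because the quoted gains depend heavily on which result is treated as the baseline, here is a minimal Python sketch of the percentage math using the rounded Gen11 figures above. The helper name is hypothetical, and "nearly 15 fps" is entered as 15, so this is an illustration of the calculation rather than a reproduction of the exact measurements.

```python
def uplift_pct(new_fps: float, baseline_fps: float) -> float:
    """Percentage change of new_fps relative to baseline_fps."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return (new_fps / baseline_fps - 1.0) * 100.0


# Rounded Gen11 results quoted above (720p, very low preset, fps).
# "Nearly 15 fps" for FSR 1.0 is entered as 15 (an approximation).
gen11 = {"native": 13, "taa_cas": 11, "fsr1_perf": 15, "fsr2_perf": 14}

if __name__ == "__main__":
    for mode in ("fsr1_perf", "fsr2_perf"):
        fps = gen11[mode]
        vs_native = uplift_pct(fps, gen11["native"])
        vs_taa_cas = uplift_pct(fps, gen11["taa_cas"])
        print(f"{mode}: {vs_native:+.1f}% vs native, {vs_taa_cas:+.1f}% vs TAA+CAS")
```

With these rounded inputs the script reports roughly 27% (FSR 2.0) and 36% (FSR 1.0) over TAA+CAS, close to the 28% and 35% quoted, with the small gap coming from rounding. Measured against native without TAA, the same FSR 2.0 result is under 8%, which shows how much the choice of baseline shapes the headline number.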

Slight purple sparkles visible in this scene

More purple sparkles here.

Darker areas tended to show even more purple sparkles.

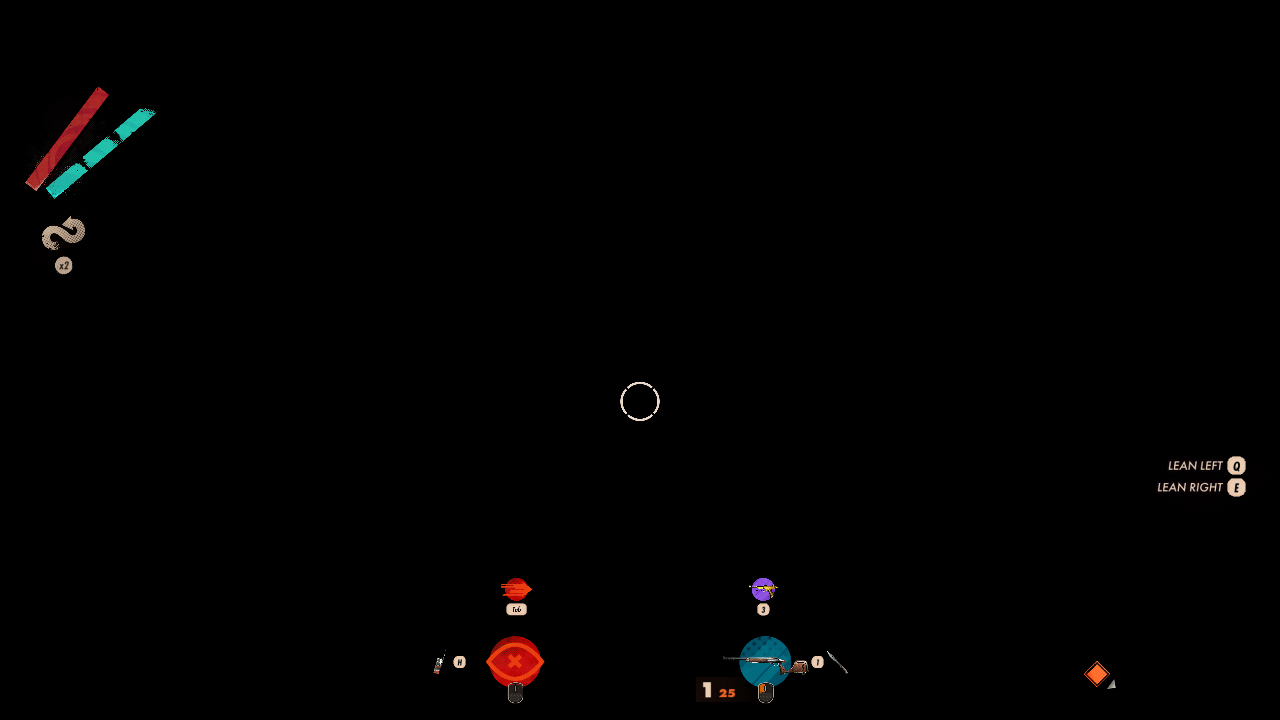

Often, all the textures and scenery would simply fail to render altogether.

The good news is that FSR 2.0 at least worked. Sort of. The first time I ran Deathloop on each laptop, everything rendered basically okay: very sluggish in some cases, but working. Most of my subsequent attempts to run the game resulted in a variety of rendering errors. Sometimes a few textures would look corrupted, sometimes everything except the GUI overlay would be missing, and once in a blue moon the level would load and render more or less properly.

To be clear, none of these errors have anything to do with FSR — 1.0 or 2.0. In fact, FSR will happily try to upscale the rendering errors, giving larger purple speckles in some cases. The problem stems from Intel's GPUs as far as I can tell.

These are probably driver issues that could be fixed, as we've seen issues like this with Intel GPUs before. I also found D3D12 error messages in the Intel log files. Repeatedly exiting to the main menu and reloading the last save would sometimes improve the situation, but outside of the first run on each laptop, I only managed to get "clean" rendering again one time, out of literally dozens of attempts. Exiting the level and proceeding to a new area "resets" things, meaning what was fine could end up broken or vice versa. Thankfully, load times are only horrific on the first load — reloads when I didn't fully exit the game only took around 20 seconds.

Native 720p very low preset on Intel Graphics

Native 720p very low preset with TAA and CAS on Intel Graphics

FSR 1.0 Performance 720p very low preset on Intel Graphics

FSR 2.0 Performance 720p very low preset on Intel Graphics

Native 720p very low preset with TAA and CAS

FSR 1.0 Performance 720p very low preset on Intel Graphics

FSR 2.0 Performance 720p very low preset on Intel Graphics

The above gallery shows how the game looked when things were rendering properly. Aliasing is quite prevalent at native without TAA, but TAA on its own looks pretty blurry. Combine TAA and FidelityFX CAS and you get better image quality, but performance dropped almost 20% on Gen11 graphics, while it caused a more typical 5% drop on Gen12 (Tiger Lake Iris Xe).

With FSR 1.0 in Performance mode, things looked quite bad, and they're even worse in motion. FSR 2.0, on the other hand, looked pretty decent. Native 720p with TAA and CAS looks a bit better, but FSR 2.0 delivered 20–30% higher performance.

It's a shame the game wouldn't consistently "work" properly, but that was a separate issue from FSR 2.0 support. When the game did load a level and render properly, Tiger Lake's Iris Xe GPU actually managed a pretty tolerable experience. Getting a "free" 20% more performance on a low-end graphics solution was better than nothing, and hopefully FSR 2.0 will prove more beneficial on Intel's Arc GPUs, once the drivers are up to snuff.

Now we just need a lot more games to support FSR 2.0 upscaling.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


-Fran-

For science indeed. Nice findings for sure.

Now to wait for Devs to tell us, with a straight face, they can only implement DLSS due to technical reasons XD

Regards.

JarredWaltonGPU

> -Fran- said: Now to wait for Devs to tell us, with a straight face, they can only implement DLSS due to technical reasons XD

Seriously, at this point, if a game supports DLSS, it really should be trivial to add FSR 2.0 support as well. And it goes the other way, too: if something supports FSR 2.0, adding in DLSS support should be pretty simple.

Now, the real question I have is how much effort beyond the base integration is required to get good results. I've seen games that just don't seem to benefit much from DLSS; Red Dead Redemption 2 is a good example. It doesn't get a big boost to performance. Deathloop is a good example of an FSR 1.0 game where the integration also didn't work well for whatever reason. So, getting DLSS or FSR working shouldn't be too difficult. Getting it working well is another story.

evdjj3j

> "Most of my subsequent attempts to run the game resulted in a variety of rendering errors."

I imagine if Intel ever releases the rest of the desktop Xe GPUs, this will be a common experience for people who buy them.

tommo1982

That's some unexpected benefit for Intel.

I'm surprised the Iris iGPU can't properly render a game. I use an AMD APU and haven't encountered any problems, even playing old games. It doesn't bode well for Arc. Intel being unable to get its drivers right is not something I'd expect from such a big company.

JarredWaltonGPU

> evdjj3j said: "Most of my subsequent attempts to run the game resulted in a variety of rendering errors."
>
> I imagine if Intel ever releases the rest of the desktop Xe GPUs, this will be a common experience for people who buy them.

I'm sure this is part of the reason for the Arc delays. Intel is probably finding minor bugs in the drivers that need fixing for a bunch of games to work properly. In theory, at some point the drivers will address nearly all of the critical issues and things should just work, but it might take a while to get there. Look at my Intel DG1 story from nearly a year ago:

> Which is not to say the DG1 had a strong showing at all. Besides generally weak performance relative to even budget GPUs, we did encounter some bugs and rendering issues in a few games. Assassin's Creed Valhalla would frequently fade to white and show blockiness and pixelization. Here's a video of the 720p Valhalla benchmark, and the game failed to run at all at 1080p medium on the DG1. Another major problem we encountered was with Horizon Zero Dawn, where fullscreen rendering failed but the game could run okay in windowed mode (but not borderless window). Not surprisingly, both of those games are DX12-only, and we encountered other DX12 issues. Fortnite would automatically revert to DX11 mode when we tried to switch, and Metro Exodus and Shadow of the Tomb Raider both crashed when we tried to run them in DX12 mode. Dirt 5 also gave low VRAM warnings, even at 720p low, but otherwise ran okay.
>
> DirectX 12 on Intel GPUs has another problem potential users should be aware of: sometimes excessively long shader compilation times. The first time you run a DX12 game on a specific GPU, and each time after swapping GPUs, many games will precompile the DX12 shaders for that specific GPU. Horizon Zero Dawn, for example, takes about three to five minutes to compile the shaders on most graphics cards, but thankfully that's only on the first run. (You can skip it, but then load times will just get longer, as the shaders need to be compiled then rather than up front.) The DG1 for whatever reason takes a virtual eternity to compile shaders in some games: close to 30 minutes for Horizon Zero Dawn. Hopefully that's just another driver bug that needs to be squashed, but we've seen this behavior with other Intel Graphics solutions in the past, and HZD likely isn't the sole culprit.

In Deathloop, a DX12-only game, all of the above issues were once again in play. Ironically, when you install Intel's latest drivers, the installer even has the audacity to show a little blurb saying, "Did you know Intel was the first company to have a fully DirectX 12 compliant GPU?" I'm not sure what world Intel is living in, but that's absolute bollocks. Even if Intel was first with DX12 drivers, I can pretty much guarantee the hardware and drivers didn't work properly in quite a few cases.

-Fran-

> JarredWaltonGPU said: Seriously, at this point, if a game supports DLSS, it really should be trivial to add FSR 2.0 support as well. And it goes the other way, too: if something supports FSR 2.0, adding in DLSS support should be pretty simple.
>
> Now, the real question I have is how much effort beyond the base integration is required to get good results. I've seen games that just don't seem to benefit much from DLSS; Red Dead Redemption 2 is a good example. It doesn't get a big boost to performance. Deathloop is a good example of an FSR 1.0 game where the integration also didn't work well for whatever reason. So, getting DLSS or FSR working shouldn't be too difficult. Getting it working well is another story.

Good point. It does go both ways there. FSR 2.0 and DLSS should be equivalent going forward from a developer's perspective (effort-wise, in theory).

As for why it's better implemented in some games than others, I'd imagine it has to do with how the engine handles the rendering pipeline, plus how it applies texture filtering. From a very high level, DLSS and FSR need to be put in the right place in the pipeline for the libraries to do their work correctly. That place is still decided by the developer and tied to their engine's rendering pipeline. The devil is always in the details, but I don't think I'm that far off the mark, especially with the AA and texture filtering techniques used.

Regards.

artk2219

> tommo1982 said: That's some unexpected benefit for Intel.
>
> I'm surprised the Iris iGPU can't properly render a game. I use an AMD APU and haven't encountered any problems, even playing old games. It doesn't bode well for Arc. Intel being unable to get its drivers right is not something I'd expect from such a big company.

Unfortunately, it's Intel's biggest problem. I have no doubt that they're able to build some decent GPUs on a hardware level, but on a driver level they've never had to keep up. They've had trouble patching their existing crummy products for general compatibility for as long as those products have existed, and they figured that as long as their GPUs displayed a mostly stable image, it was "good enough." Now they'll actually have to work to deliver a driver that does more than "display an image mostly correctly," and keeping GPU drivers up to date is something they've never done a good job of before.

jkflipflop98

> JarredWaltonGPU said: Seriously, at this point, if a game supports DLSS, it really should be trivial to add FSR 2.0 support as well. And it goes the other way, too: if something supports FSR 2.0, adding in DLSS support should be pretty simple.

You know enough to see where this is going. Microsoft will implement "DirectScaling" or something in DirectX, and that will be the end of it. Just like every other graphics tech in the last 20 years.

blppt

> JarredWaltonGPU said: Now, the real question I have is how much effort beyond the base integration is required to get good results. I've seen games that just don't seem to benefit much from DLSS; Red Dead Redemption 2 is a good example.

I get the feeling that RDR2's limitations sometimes involve the CPU rather than the GPU. This Rockstar engine (GTAV/RDR2) has always scaled well with more powerful CPUs.

JarredWaltonGPU

> blppt said: I get the feeling that RDR2's limitations sometimes involve the CPU rather than the GPU. This Rockstar engine (GTAV/RDR2) has always scaled well with more powerful CPUs.

The problem with RDR2 is that even at higher resolutions, where the GPU should be the bottleneck, and running a slower GPU (e.g., RTX 3070 at 4K), DLSS Quality mode only gives you maybe 15%. In that same scenario, other games like Horizon Zero Dawn get around 35% more performance. It's also not CPU-limited, as a faster GPU will still get much higher performance. So something odd is going on, but I'm not precisely sure what it is. Then again, Rockstar is one of the few companies still supporting MSAA, which makes me think it had to do some extra work to make sure DLSS didn't screw anything up. But RDR2 has had TAA support since the beginning, which usually has the same basic requirements as DLSS/FSR 2 (depth buffer, frame buffer, and even motion vector buffer IIRC).