AMD Aims to Increase Chip Efficiency by 30x by 2025 (Updated)

Bold intentions, but AMD has been executing to perfection - mostly

Update 8/30/21 7am PT: AMD shared the formula used for calculating the efficiency metric, which we've included below.

Update 8/29/21 1:00pm PT: We've added more details about AMD's measurement criteria below.

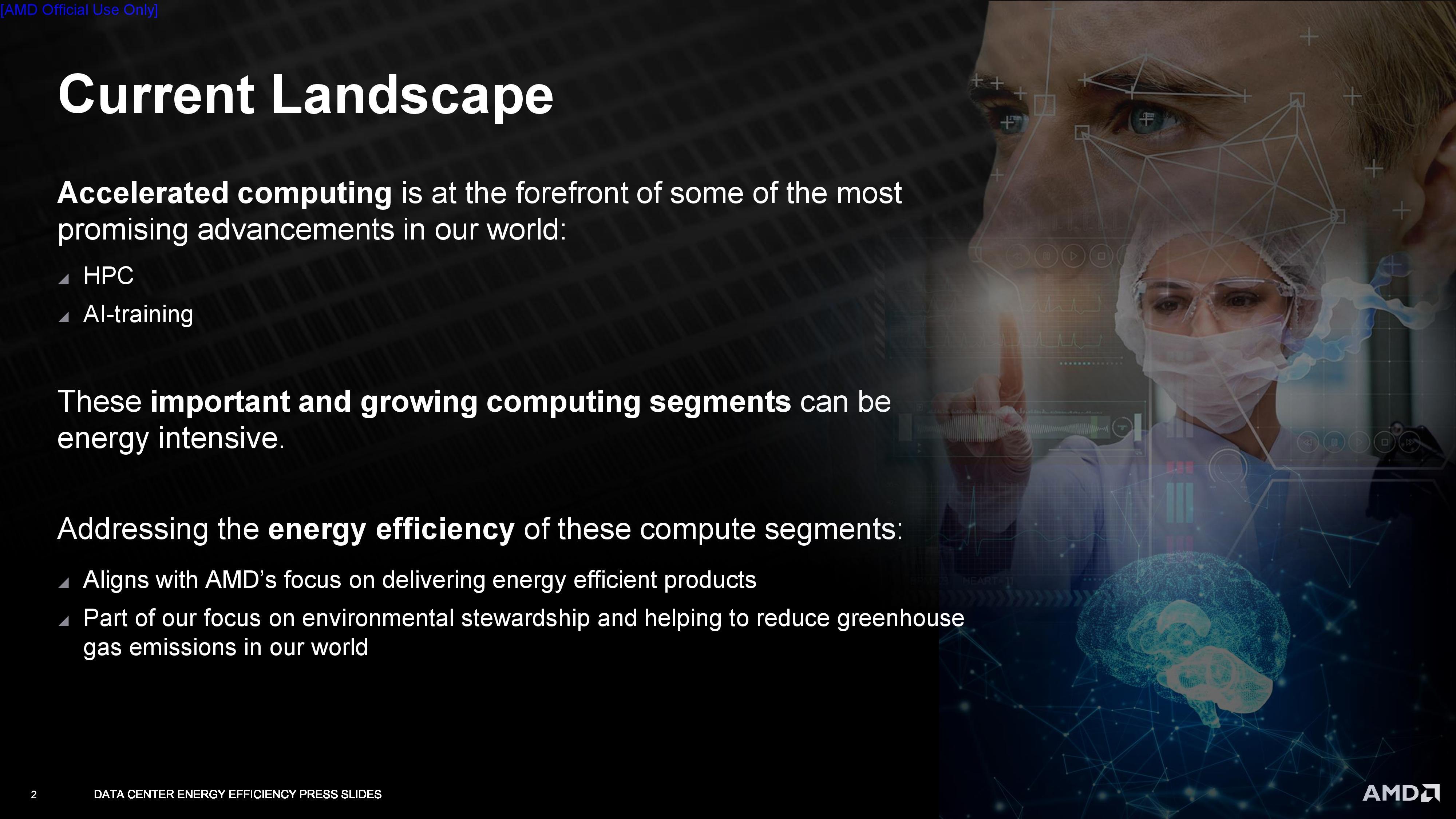

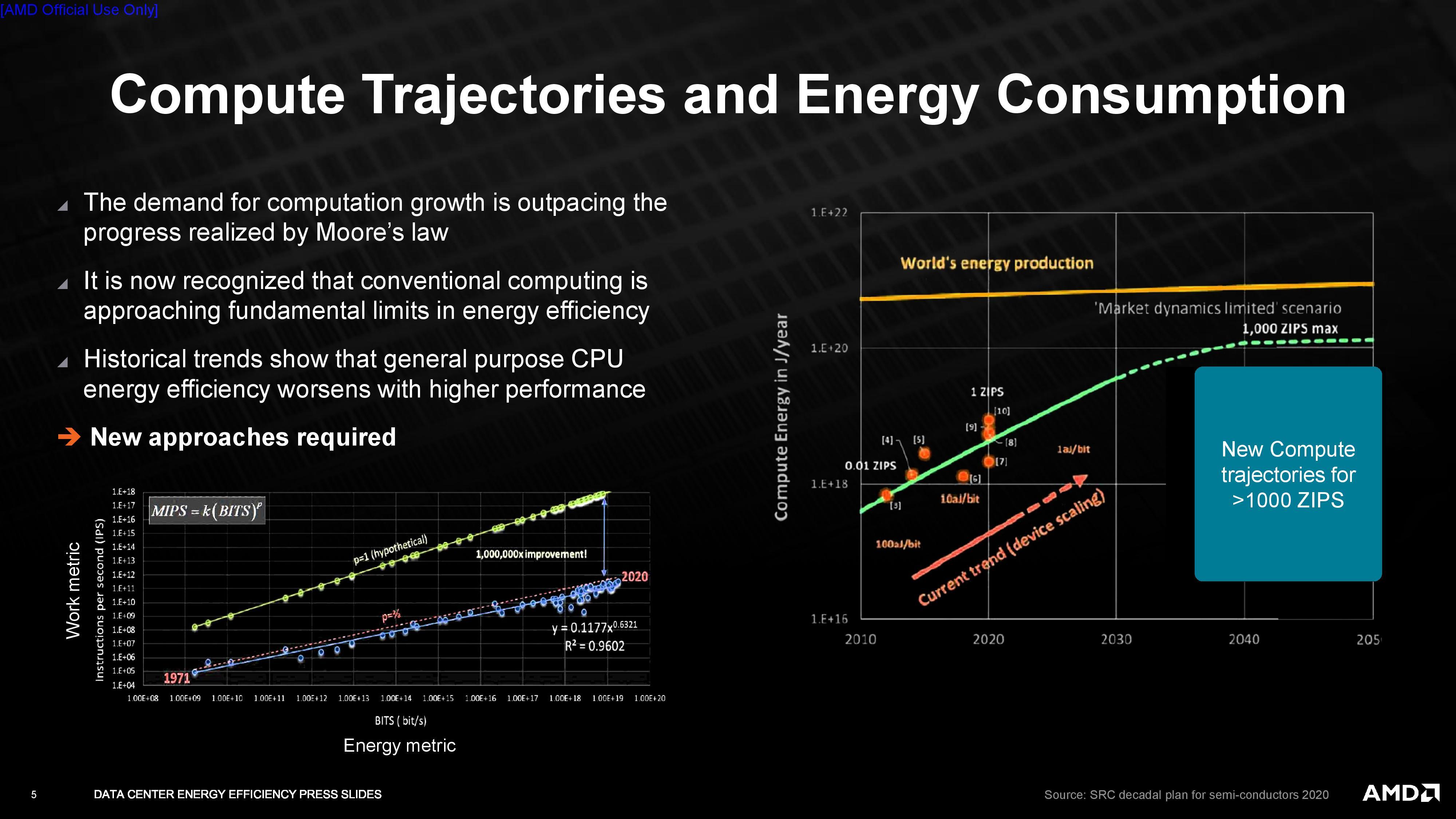

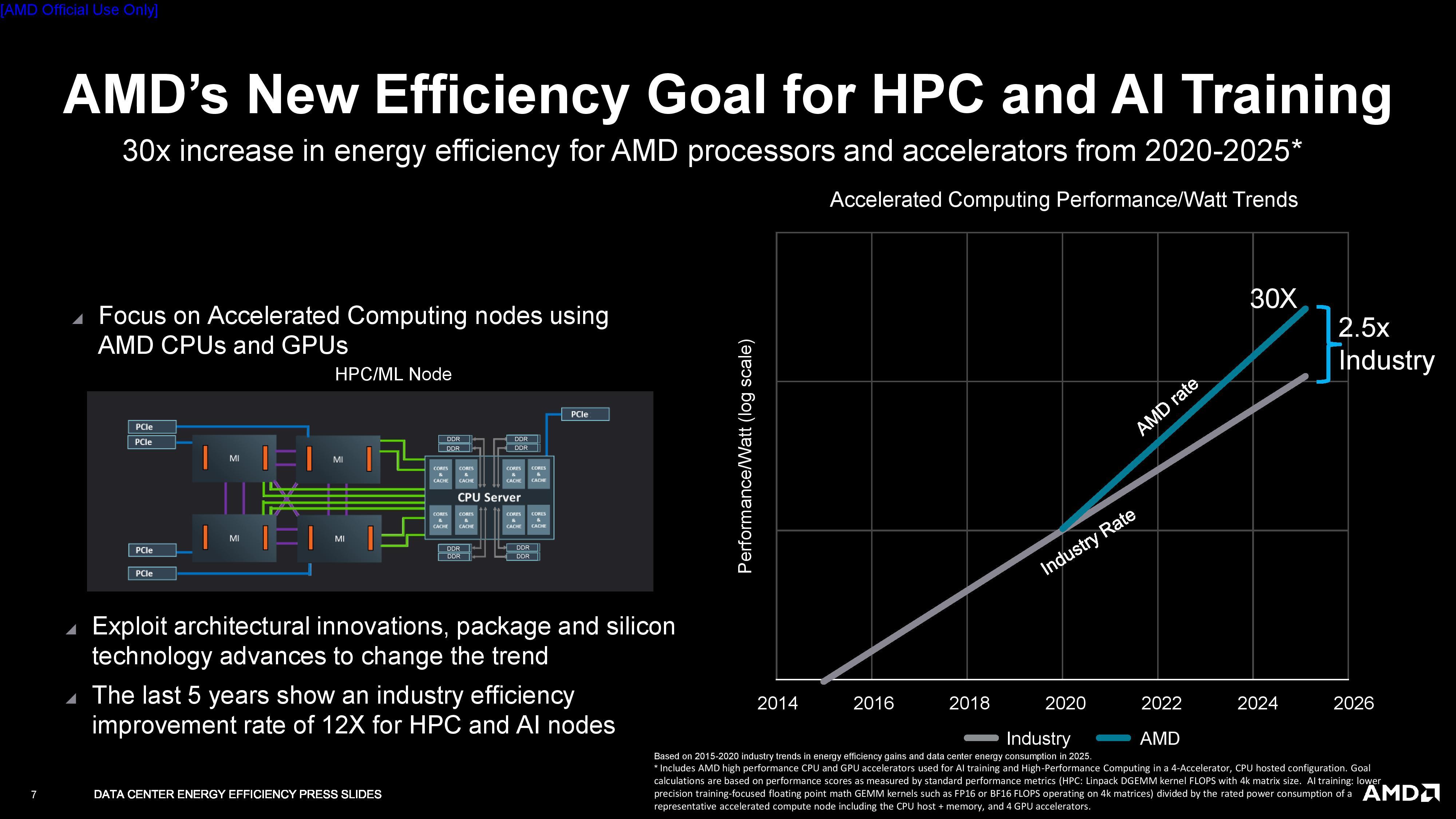

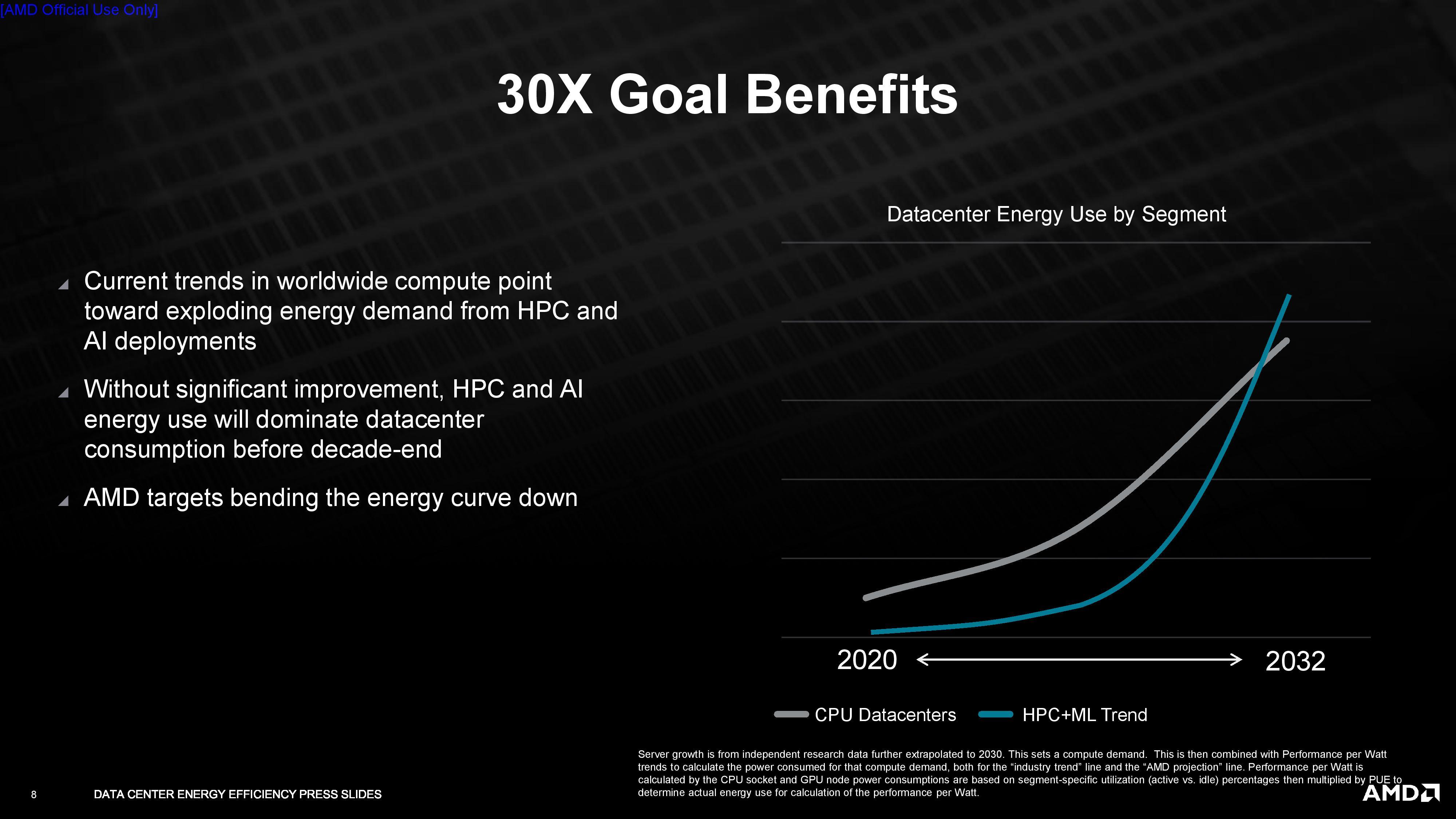

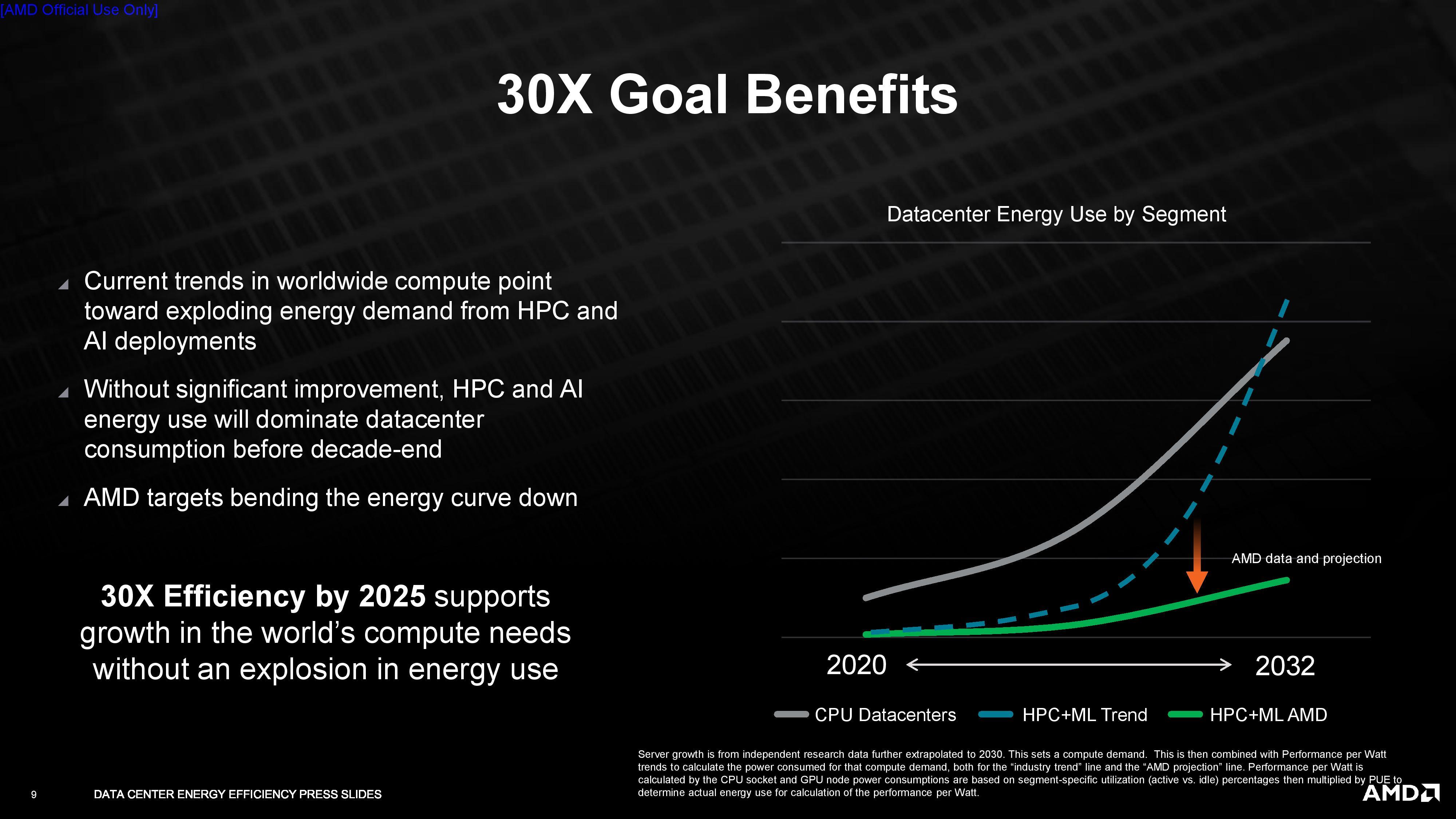

AMD today announced an extremely ambitious goal: to make its EPYC CPUs and Instinct GPU accelerators thirty times more energy efficient by 2025. AMD itself knows how lofty a goal this is: the company says the aim outpaces industry-wide efficiency improvements over the previous five-year period by 150%.

AMD's new effort comes on the heels of its 25x20 initiative, which ran from 2014 to 2020 and yielded a 25x energy efficiency improvement for the company's notebook chips (notably, that metric covered efficiency both when the processor was at idle and under load).

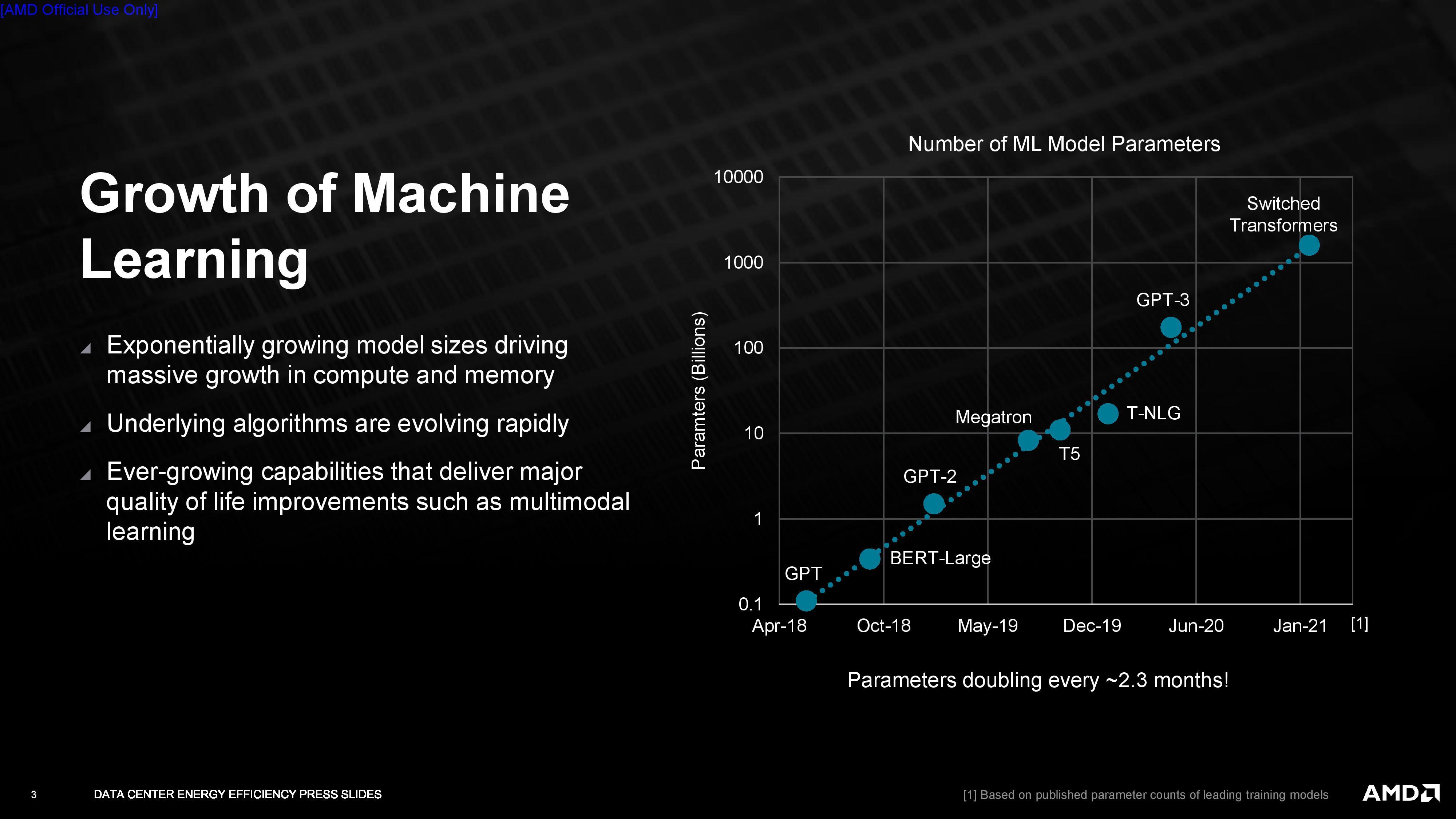

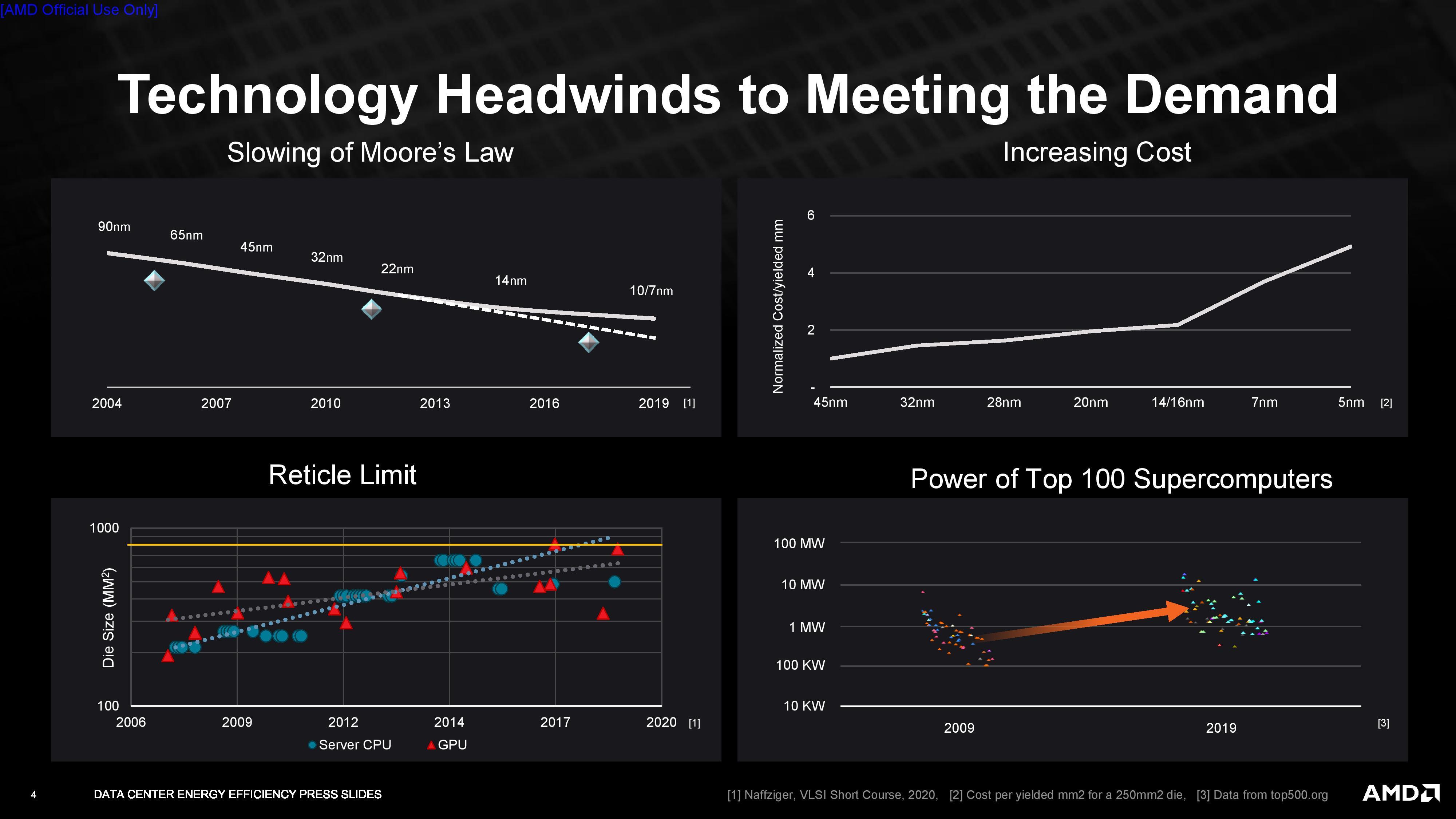

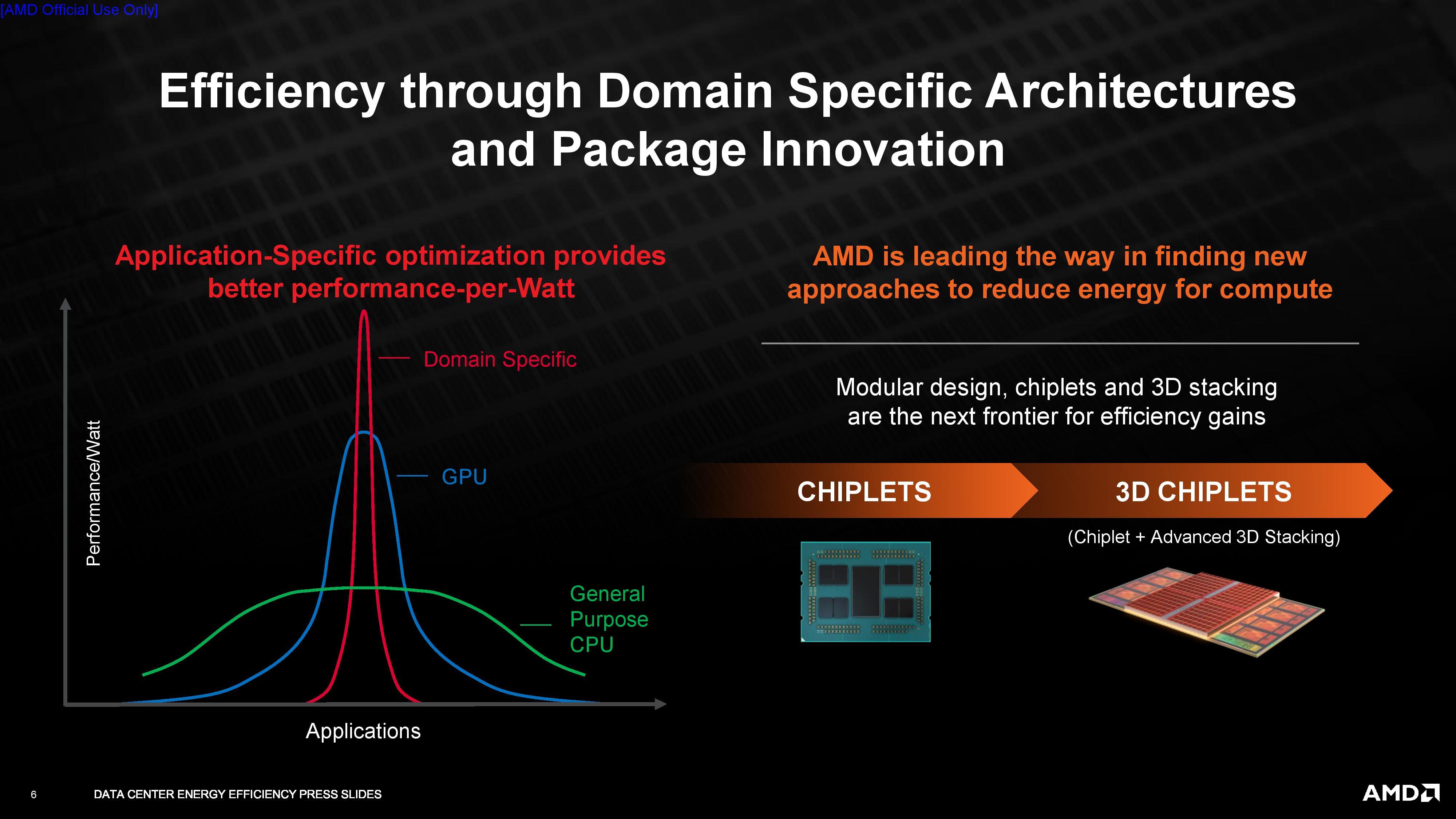

AMD's new initiative focuses specifically on AI and HPC workloads, and the company's goals may hint at its future hardware design plans. For example, AMD plans to improve performance as it works towards its new power consumption goals, but it doesn't simply want to throw more die area (i.e., larger chips) at the performance problem. Instead, the idea is to improve performance and performance-per-watt in lockstep to deliver both performance and efficiency gains.

To track its progress, AMD needs a yardstick. Because the company is focused on AI and HPC workloads, it measures HPC performance with Linpack DGEMM kernel FLOPS at a 4k matrix size, and AI training performance with FP16 or BF16 FLOPS, data types commonly used for AI training workloads.

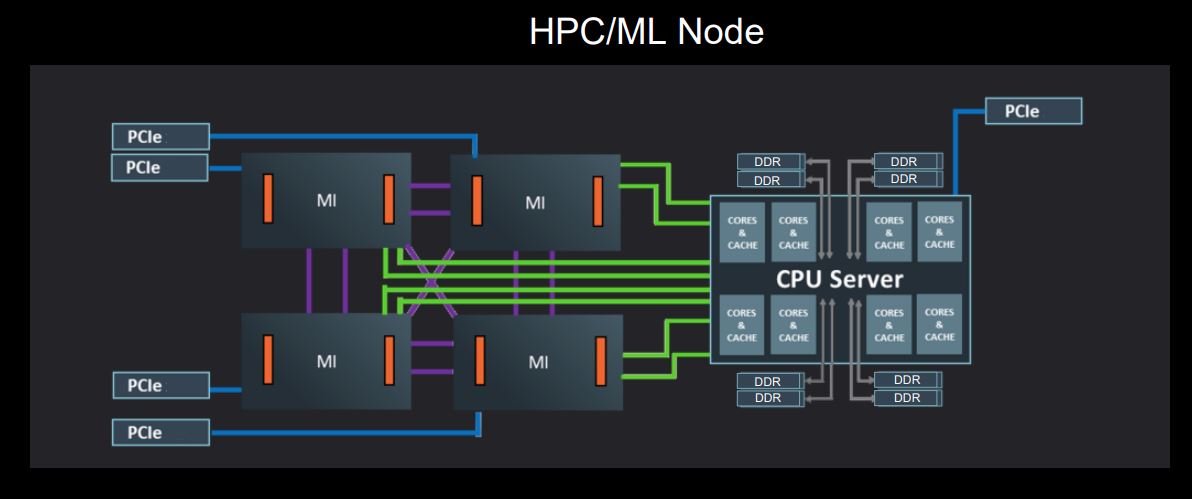

AMD has set a baseline performance measurement with the aggregate performance of an existing system (compute node) with four MI50 GPUs and one EPYC Rome 7742 CPU. This has been defined as the baseline "2020 system." AMD will measure milestones with new generations of server nodes with the same number of GPUs and CPUs.

It's important to understand that AMD can make a big jump towards its goal simply by adding fixed-function (hardware-level) acceleration for BF16 and FP16 data types, thus gaining relatively 'easy' and large performance and efficiency gains. For instance, the MI60 supports FP16, but not BF16.

AMD tells us that it will rely upon both hardware and software optimizations to reach its goals, but was noncommittal on the types of hardware acceleration we can expect along the way: the company wouldn't confirm that it will add fixed-function BF16 acceleration to its CPUs and GPUs. This addition alone could yield an impressive performance gain in the target workloads. Additionally, software optimizations regularly lead to massive improvements with existing hardware, meaning AMD has several options to reach its goals.

Unlike AMD's previous goal for notebook efficiency improvements, the company isn't folding measurements of idle power consumption into its test methodology. Instead, the company will use typical utilization rates for these workloads (around 90%) multiplied by the data center PUE (Power Usage Effectiveness, a measure of data center efficiency). AMD says this yields a value that tracks performance-per-watt metrics closely. Here is the company's additional information, and the formula:

"We used internal AMD lab measurements of MI50 paired with an AMD EPYC 7742 CPU which produced 5.26TF per MI50 on 4k matrix DGEMM with trigonometric data initialization, and 21.6TF of FP16 on 4k matrices. Summing up the rated power for 4 MI50s (300W TBP), the 225W TDP for EPYC and include 100W for DRAM, plus power conversion losses and overheads to get 1582W for the compute node.

HPC perf/W baseline = 4*5.26TF/1582W

AI training perf/W baseline = 4*21.56TF/1582W

Average these two metrics for the aggregate baseline."
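As a rough illustration, the quoted formula can be worked through in a few lines of Python. This is only a sketch using the figures AMD supplied above; the variable and function names are our own, and applying the 30x multiplier directly to the averaged baseline is an assumption about how AMD will score its progress.

```python
# Figures quoted by AMD for the baseline "2020 system" compute node.
GPUS_PER_NODE = 4
DGEMM_TF_PER_GPU = 5.26   # TFLOPS per MI50, 4k matrix DGEMM (HPC)
FP16_TF_PER_GPU = 21.56   # TFLOPS per MI50, FP16 on 4k matrices (AI training)
NODE_POWER_W = 1582       # watts, including conversion losses and overheads


def perf_per_watt(tf_per_gpu, gpus=GPUS_PER_NODE, power_w=NODE_POWER_W):
    """Return node-level GFLOPS per watt (hypothetical helper)."""
    return gpus * tf_per_gpu * 1000 / power_w


hpc = perf_per_watt(DGEMM_TF_PER_GPU)
ai = perf_per_watt(FP16_TF_PER_GPU)
baseline = (hpc + ai) / 2   # "Average these two metrics"
target = 30 * baseline      # assumption: 30x applied to the averaged baseline

# Rated component power: 4 x 300 W GPUs, 225 W CPU, 100 W DRAM.
rated_w = GPUS_PER_NODE * 300 + 225 + 100
overhead_w = NODE_POWER_W - rated_w   # what AMD attributes to losses and overheads

print(f"HPC baseline:  {hpc:.2f} GFLOPS/W")
print(f"AI baseline:   {ai:.2f} GFLOPS/W")
print(f"Aggregate:     {baseline:.2f} GFLOPS/W")
print(f"30x target:    {target:.0f} GFLOPS/W")
print(f"Overhead:      {overhead_w} W on top of {rated_w} W rated")
```

On these numbers the aggregate baseline works out to roughly 34 GFLOPS per watt, with the FP16 figure contributing about four times as much as the DGEMM figure.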

AMD's energy efficiency goal comes in the wake of vastly increased processing requirements for accelerated compute nodes, which carry out functions such as AI training, climate prediction, genomics, and large-scale supercomputer simulations. If AMD reaches its goal, the company says the overall energy consumption of these systems would be reduced by a staggering 97% over a five-year period.
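The 97% figure follows from the 30x target: doing the same work at thirty times the performance per watt takes one thirtieth of the energy. The short sketch below checks that arithmetic and also derives the year-over-year improvement the goal implies. It assumes a constant workload and evenly compounded gains over five years, neither of which AMD has stated; the function names are illustrative.

```python
GAIN = 30    # AMD's stated efficiency target
YEARS = 5    # 2020 through 2025


def energy_reduction(gain):
    """Fraction of energy saved for the same work at `gain` times the perf/W."""
    return 1 - 1 / gain


def annual_multiplier(gain, years):
    """Per-year efficiency multiplier if the gain compounds evenly."""
    return gain ** (1 / years)


reduction = energy_reduction(GAIN)
yearly = annual_multiplier(GAIN, YEARS)

print(f"Energy reduction for the same work: {reduction:.1%}")
print(f"Implied yearly efficiency multiplier: {yearly:.2f}x")
```

Under those assumptions, AMD would need to nearly double efficiency every year to hit the target.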

"Achieving gains in processor energy efficiency is a long-term design priority for AMD and we are now setting a new goal for modern compute nodes using our high-performance CPUs and accelerators when applied to AI training and high-performance computing deployments," said Mark Papermaster, AMD's Executive Vice President and CTO. "Focused on these very important segments and the value proposition for leading companies to enhance their environmental stewardship, AMD's 30x goal outpaces industry energy efficiency performance in these areas by 150% compared to the previous five-year period."

AMD has already been exploring a wealth of power-efficiency improvements on its CPU and GPU designs - so much so that AMD's Zen CPUs actually beat Intel's best in the performance/watt ratio. The company also made massive improvements to power consumption on its RDNA 2 GPUs, claiming the energy efficiency crown from Nvidia. Part of these improvements can be attributed to manufacturing node jumps, at least on the GPU side. However, as costs for denser manufacturing processes balloon and research and development times increase, AMD clearly isn't banking solely on these.

Rather, technologies such as 3D cache stacking and large on-die caches (Infinity Cache, as applied to RDNA 2's chips, reduces power consumption substantially), along with an increasingly efficiency-first engineering approach, will be required. Fixed-function acceleration and software improvements will also play a big role. It remains to be seen which techniques AMD will turn to in order to reach this goal, but it is encouraging that the company clearly believes it can deliver this type of improvement over the next four years.

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.

-

digitalgriffin

More power efficient = More Mining purchases.

Lets face it AMD got fat on the mining boom. How many of you want to bet: If you extrapolate mining difficulty with profitability up to 2025, it will require 30x more efficient GPUs

AMD and NVIDIA at this point should just create a sub company dedicated to mining solutions. And when the market falls out, they can write it off as a loss.

-

King_V

And . . Nvidia didn't? Intel didn't?

Also, isn't Ethereum supposed to move to proof of stake by early next year?

Bitcoin is easier with ASICs. Chia requires storage mostly.

I'm not sure why you're assuming that cryptocurrency will completely stagnate rather than move forward. (or get banned, if I had my preference)

-

JarredWaltonGPU

> digitalgriffin said: More power efficient = More Mining purchases.
>
> Lets face it AMD got fat on the mining boom. How many of you want to bet: If you extrapolate mining difficulty with profitability up to 2025, it will require 30x more efficient GPUs
>
> AMD and NVIDIA at this point should just create a sub company dedicated to mining solutions. And when the market falls out, they can write it off as a loss.

Honestly, I think this has nothing to do with mining. I think AMD will end up referring to some specific hardware feature that it doesn't currently support, meaning it has to do the work via software. Then it will implement it in hardware and get a 30X increase in efficiency over the next five years. It will almost certainly be something AI / machine learning related, as that's the big area of growth right now in datacenter stuff.

-

eklipz330

> digitalgriffin said: More power efficient = More Mining purchases.
>
> Lets face it AMD got fat on the mining boom. How many of you want to bet: If you extrapolate mining difficulty with profitability up to 2025, it will require 30x more efficient GPUs
>
> AMD and NVIDIA at this point should just create a sub company dedicated to mining solutions. And when the market falls out, they can write it off as a loss.

ah yes, the grand conspiracy! they should stop becoming more efficient altogether! they should actually go in the OTHER direction and create LESS efficient products! BRILLIANT!

-

hotaru.hino

> JarredWaltonGPU said: Honestly, I think this has nothing to do with mining. I think AMD will end up referring to some specific hardware feature that it doesn't currently support, meaning it has to do the work via software. Then it will implement it in hardware and get a 30X increase in efficiency over the next five years. It will almost certainly be something AI / machine learning related, as that's the big area of growth right now in datacenter stuff.

Darn, I was hoping for a 10W GPU with the same performance chops as a 6800XT :p

EDIT: Out of curiosity I wanted to see how far the lower TDP GPUs can perform and the best that I'd bother to investigate is <=25W GPUs can now get the same performance as maybe a higher-end Radeon HD 5000 or GTX 500 GPU.

It only took about ten years but hey, it's something!

-

plateLunch

> JarredWaltonGPU said: Honestly, I think this has nothing to do with mining.

I agree. I believe there was a press release in the past month or two where AMD won a large data center or supercomputer order because their power consumption was much lower than Intel. The customer had evaluated the total cost of their installation and power was a deciding factor.

This was the first time I've seen power mentioned as a deciding factor so it surprised me. But it's a cost factor so not unexpected. Power consumption is a selling point.

-

2Be_or_Not2Be

> hotaru.hino said: Darn, I was hoping for a 10W GPU with the same performance chops as a 6800XT :p
>
> EDIT: Out of curiosity I wanted to see how far the lower TDP GPUs can perform and the best that I'd bother to investigate is <=25W GPUs can now get the same performance as maybe a higher-end Radeon HD 5000 or GTX 500 GPU.
>
> It only took about ten years but hey, it's something!

Hey, the PCIe slot can supply 75W, so why not go for a 6800XT that needs no additional power connectors! That would 30x more efficient (to my wallet)! :)

-

digitalgriffin

> JarredWaltonGPU said: Honestly, I think this has nothing to do with mining. I think AMD will end up referring to some specific hardware feature that it doesn't currently support, meaning it has to do the work via software. Then it will implement it in hardware and get a 30X increase in efficiency over the next five years. It will almost certainly be something AI / machine learning related, as that's the big area of growth right now in datacenter stuff.

I know you are likely right. AI & ML are huge profit makers.

But I have a lot of cynicism about motivations lately. The very community that kept them afloat, they are turning their back on. (Value oriented builder community)

-

mattkiss

> JarredWaltonGPU said: Honestly, I think this has nothing to do with mining. I think AMD will end up referring to some specific hardware feature that it doesn't currently support, meaning it has to do the work via software. Then it will implement it in hardware and get a 30X increase in efficiency over the next five years. It will almost certainly be something AI / machine learning related, as that's the big area of growth right now in datacenter stuff.

It's not over the next five years. It's from the beginning of 2020 to the beginning of 2025.

-

TheOtherOne

> 2Be_or_Not2Be said: Hey, the PCIe slot can supply 75W, so why not go for a 6800XT that needs no additional power connectors! That would 30x more efficient (to my wallet)! :)

I will take the bait ... Even tho I think it's sarcasm.

No additional power connectors doesn't mean no power consumption. If PCIe slot can supply enough power, that still means the same power is being consumed and it comes from the same source (wall socket), it just gets to GPU via different route, so to speak. It still won't be 30x (or any xxs) more efficient (to your wallet)! :p