AMD's Third-Gen Infinity Architecture Enables Coherent CPU-GPU Communication

The connective tissue for Multi-Chip Modules and EPYC HPC

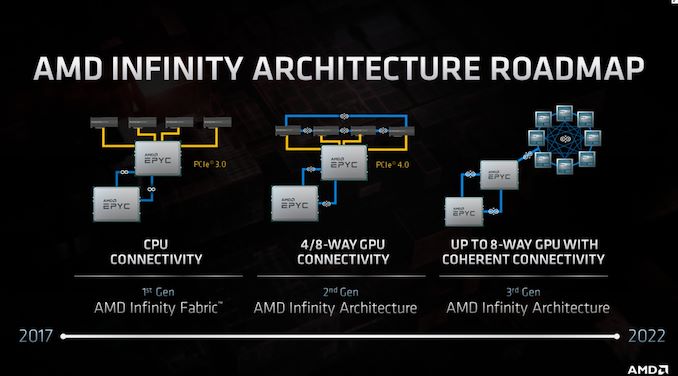

There's a lot of technological ground to cover following today's announcements from AMD. Genoa and Bergamo, the 3D V-Cache-powered Milan-X, and the Instinct MI200 MCM (Multi-Chip Module) GPUs aside, one element stands at the crossroads of all of these technologies: AMD's Infinity Fabric. Version 3.0, introduced today, continues the dramatic evolution the connectivity scheme has seen since AMD first introduced it in March 2020.

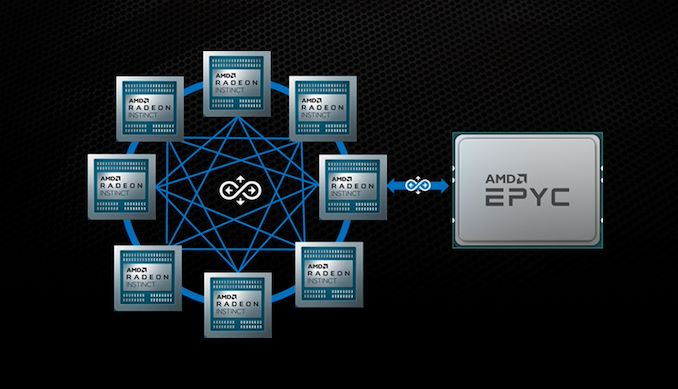

In many ways, AMD's Infinity Fabric is an extension of AMD's dreams of Heterogeneous System Architecture (HSA) systems; it now powers intra- and inter-chip communication on AMD's CPU and GPU solutions. No single technology can be described as the Infinity Architecture; the name aggregates several interconnect technologies employed on AMD's latest products, culminating in a coherent CPU + GPU technology that aims to improve system performance (and especially HPC performance) by leaps and bounds.

Infinity Fabric 3.0 delivers on the future AMD envisioned with its "The Future is Fusion" marketing campaign from way back in 2008. It brings a coherent communications bus for interconnectivity and resource sharing between the company's CPU and GPU solutions, allowing for increased performance, lower latencies, and lower power consumption.

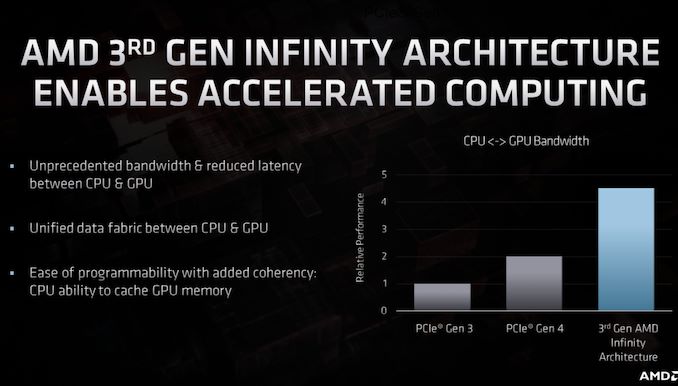

The idea is simple: moving data is computationally expensive. Hence the Infinity Architecture is designed to reduce data movement between storage banks (whether it be VRAM, system RAM, or CPU cache) as much as possible. If every piece of the hardware puzzle is aware of what information lies where and can access it on an "as-needed" basis, big performance gains can be realized.

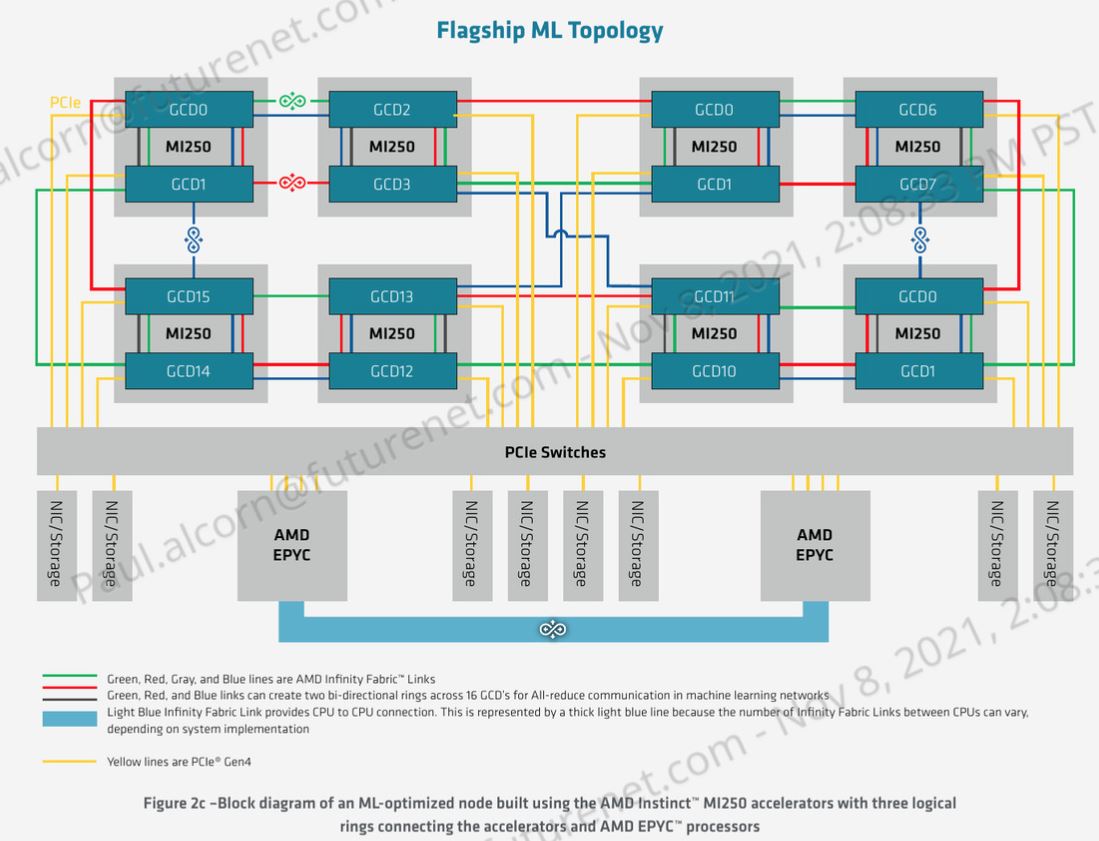

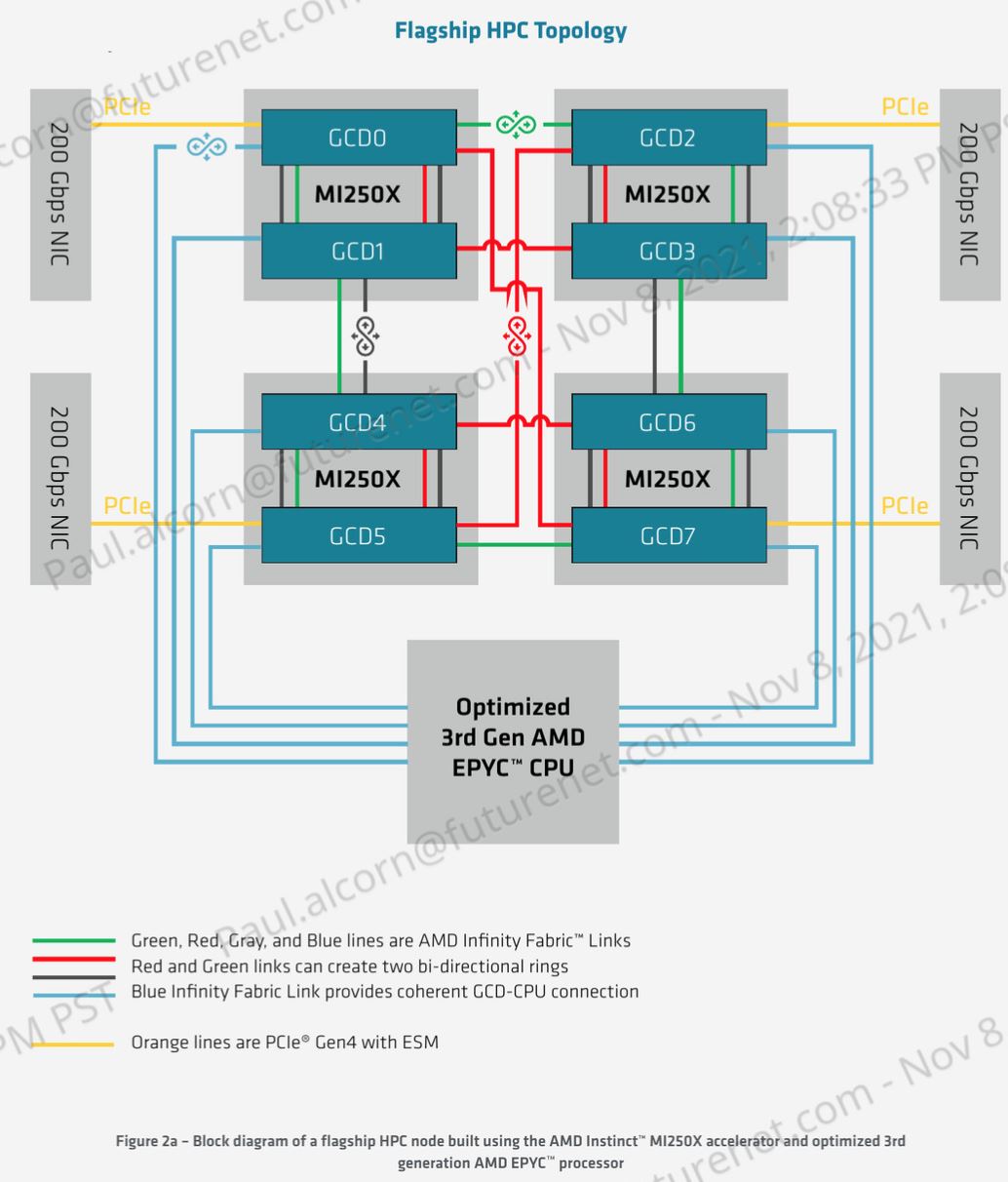

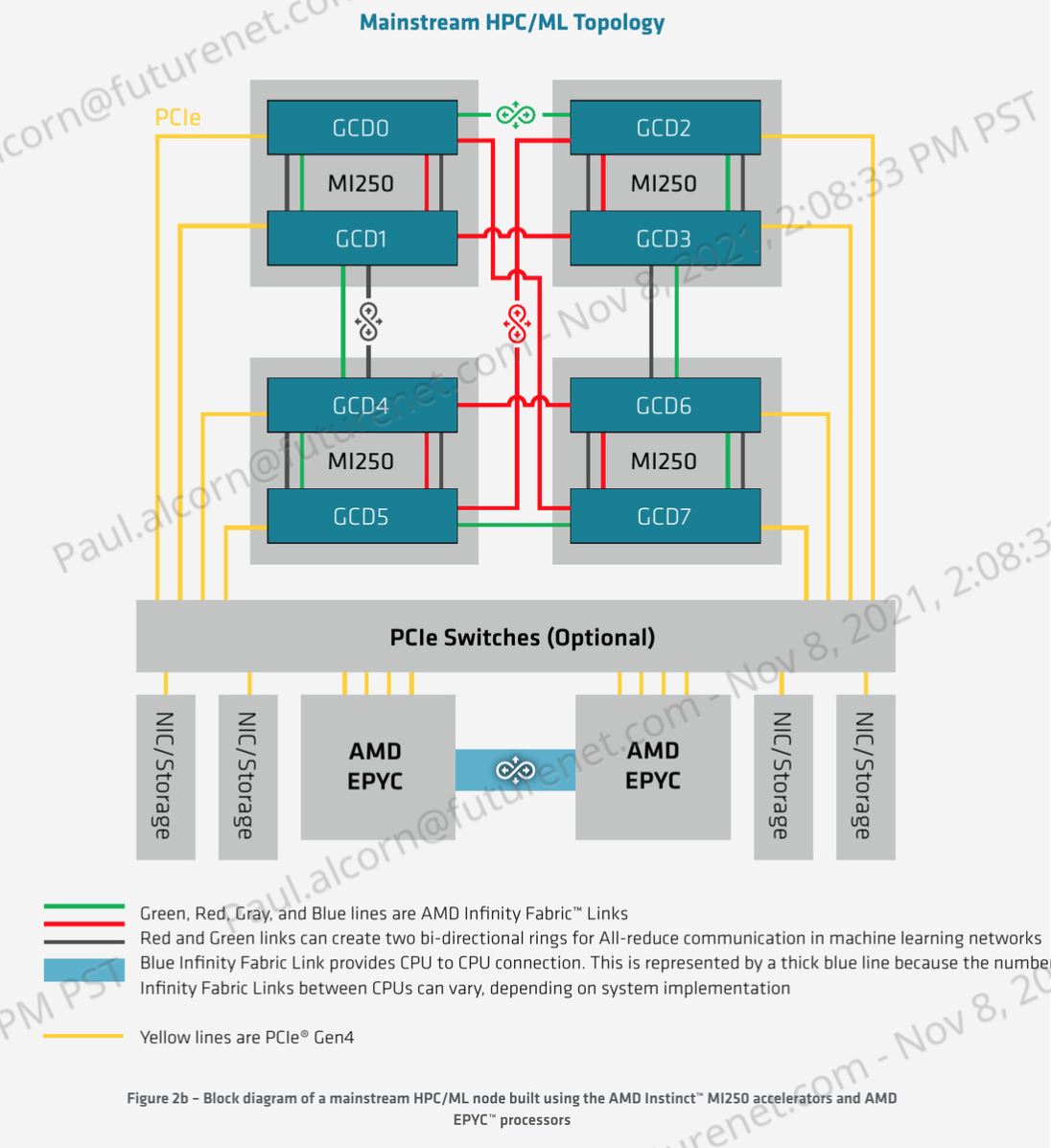

AMD's Infinity Architecture 3.0 builds upon its Infinity Fabric technology in almost every conceivable way. The previous-generation Infinity Fabric architecture forced CPU-GPU communication to travel non-coherently over the PCIe bus, which capped peak theoretical bandwidth at that link's limit (16 GT/s per lane for PCIe 4.0). It also limited the maximum number of PCIe-interconnected GPUs in a dual-socket system to four graphics cards. The new Infinity Architecture, however, lets all of that communication happen over the Infinity Fabric 3.0 link, so no traffic has to take the non-coherent PCIe path, though the link can still fall back to PCIe if needed.
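To put the PCIe ceiling in more familiar units, here is a rough back-of-the-envelope sketch that converts the 16 GT/s per-lane rate into GB/s. The x16 link width and the 128b/130b line encoding are assumptions taken from the PCIe 4.0 standard rather than from AMD's announcement, and the function name is purely illustrative.

```python
def pcie_bandwidth_gb_per_s(gt_per_s: float, lanes: int,
                            encoding_efficiency: float = 128 / 130) -> float:
    """Approximate usable bandwidth in one direction, in GB/s.

    gt_per_s: transfer rate per lane in gigatransfers per second
    lanes: link width (assumed x16 for a graphics card slot)
    encoding_efficiency: payload share of the line code
                         (128b/130b for PCIe 3.0 and later)
    """
    gigabits_per_s = gt_per_s * lanes * encoding_efficiency
    return gigabits_per_s / 8  # 8 bits per byte


# PCIe 4.0: 16 GT/s per lane, assumed x16 link
pcie4_x16 = pcie_bandwidth_gb_per_s(16, 16)
print(f"PCIe 4.0 x16: about {pcie4_x16:.1f} GB/s per direction")
```

Under these assumptions the result is about 31.5 GB/s in each direction, before any protocol overhead.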

Additionally, the Infinity Fabric is now used to enable a 400 GB/s bi-directional link between the two GPU dies found in the MI250X, enabling the first productized multi-chip GPU.

The new, improved Infinity Architecture not only provides a coherent communication channel across a dual-socket EPYC CPU system, it also increases the maximum number of simultaneous GPU connections from four to eight. The speed at which graphics cards talk to one another has also been greatly improved - the Infinity Architecture now allows for 100 GB/s of bandwidth on each of its Infinity Fabric links, providing enough throughput to feed entire systems that can include up to two EPYC CPUs and eight GPU accelerators.
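As a rough illustration of what the per-link figure could mean in practice, the sketch below estimates how long it would take to move a buffer over a single 100 GB/s Infinity Fabric link versus a PCIe 4.0 x16 link. The 10 GB buffer size is hypothetical, the PCIe figure assumes an x16 link with 128b/130b encoding, and the model ignores latency, protocol overhead, and whether the 100 GB/s figure is per direction, so treat the output as an idealized comparison only.

```python
def transfer_time_ms(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Idealized transfer time in milliseconds (bandwidth-bound only)."""
    return size_gb / bandwidth_gb_per_s * 1000.0


BUFFER_GB = 10.0                 # hypothetical working-set size
IF_LINK_GB_PER_S = 100.0         # per-link figure quoted in the article
# Assumed PCIe 4.0 x16: 16 GT/s * 16 lanes * 128b/130b encoding / 8 bits
PCIE4_X16_GB_PER_S = 16 * 16 * (128 / 130) / 8

if_ms = transfer_time_ms(BUFFER_GB, IF_LINK_GB_PER_S)
pcie_ms = transfer_time_ms(BUFFER_GB, PCIE4_X16_GB_PER_S)

print(f"Infinity Fabric link: {if_ms:.0f} ms")
print(f"PCIe 4.0 x16:         {pcie_ms:.0f} ms")
print(f"Ratio:                {pcie_ms / if_ms:.1f}x")
```

With these assumed numbers the link-level gap works out to a little over 3x; real-world gains would depend on topology and workload.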


Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.


TheOtherOne Maybe my next GPU upgrade will end up going AMD too. Or the one after that will be the whole PC upgrade, rather than just the GPU. Unless of course nVidia comes up with something better and interesting and maybe even "team-up" with Intel for something similar.

Blacksad999 Nvidia has had something similar for awhile now that they've been working on.

NVIDIA GPUDirect® is a family of technologies, part of Magnum IO, that enhances data movement and access for NVIDIA data center GPUs. Using GPUDirect, network adapters and storage drives can directly read and write to/from GPU memory, eliminating unnecessary memory copies, decreasing CPU overheads and reducing latency, resulting in significant performance improvements. These technologies - including GPUDirect Storage, GPUDirect Remote Direct Memory Access (RDMA), GPUDirect Peer to Peer (P2P) and GPUDirect Video - are presented through a comprehensive set of APIs.

GPUDirect for Video: Offers an optimized pipeline for frame-based devices such as frame grabbers, video switchers, HD-SDI capture, and CameraLink devices to efficiently transfer video frames in and out of NVIDIA GPU memory, on Windows only.

VforV

TheOtherOne said: Maybe my next GPU upgrade will end up going AMD too. Or the one after that will be the whole PC upgrade, rather than just the GPU. Unless of course nVidia comes up with something better and interesting and maybe even "team-up" with Intel for something similar.

No way intel will team-up with nvidia. Intel already said they are directly competing with nvidia in GPU space with intel ARC and their XeSS.

This is the only battle where I am behind intel, my popcorn is ready. Of course I support AMD, before both, until they screw up, if it ever happens.

Both AMD and intel will have CPU+GPU combos and nvidia is in the worst position from now on. Let's see how and what they do next in this situation (Arm CPU?) to fight back... it's not looking that good for them.

renz496

VforV said: Both AMD and intel will have CPU+GPU combos and nvidia is in the worst position from now on. Let's see how and what they do next in this situation (Arm CPU?) to fight back... it's not looking that good for them

actually nvidia are the most flexible. they can use ARM, PowerPC and x86. and when it comes to x86 nvidia can choose both intel or AMD. in the past some people think that AMD will be the only one have the tech to combine their CPU & GPU properly (same case with intel). but to avoid this from becoming an issue nvidia end up acquiring mellanox which is extremely popular for it's interconnect tech. also the past few years nvidia has been building tons of ecosystem. this is where nvidia major strength is. if anything those that against nvidia acquisition of ARM are not really afraid nvidia to withheld ARM best tech to themselves only. with nvidia even if they still have access to all ARM latest tech going forward they won't be able to compete with the complete ecosystem that being provided by nvidia. so to them the moment nvidia allowed to own ARM is the moment they will be dead.

VforV

renz496 said: actually nvidia are the most flexible. they can use ARM, PowerPC and x86. and when it comes to x86 nvidia can choose both intel or AMD. in the past some people think that AMD will be the only one have the tech to combine their CPU & GPU properly (same case with intel). but to avoid this from becoming an issue nvidia end up acquiring mellanox which is extremely popular for it's interconnect tech. also the past few years nvidia has been building tons of ecosystem. this is where nvidia major strength is. if anything those that against nvidia acquisition of ARM are not really afraid nvidia to withheld ARM best tech to themselves only. with nvidia even if they still have access to all ARM latest tech going forward they won't be able to compete with the complete ecosystem that being provided by nvidia. so to them the moment nvidia allowed to own ARM is the moment they will be dead.

:LOL::ROFLMAO: Love those 2 parts, especially. Must be nice up there, with your head in the clouds...

husker If I recall correctly, the gaming benchmarks showed maybe a 5% boost with Infinity enabled using an RX 6900XT. I'd love to see some updated numbers.

TJ Hooker Contrary to the comments above, I don't think this article or the technology discussed therein have anything to do with consumer PCs or gaming. As far as I can tell, that would require replacing the PCIe x16 slot(s) on consumer motherboards with a proprietary IF connection, which I can't see happening.

This is for HPC/supercomputers.